Abstract

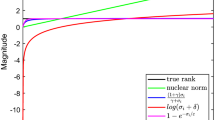

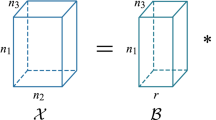

Linear transform-based tensor nuclear norm (TNN) methods have recently achieved promising results for tensor completion. Their main idea is to exploit the low-rank structure of the frontal slices of the target tensor after a linear transform along the third mode. However, the low-rankness of the frontal slices is not significant under the family of linear transforms. To better pursue a low-rank approximation, we propose a nonlinear transform-based TNN (NTTNN). More concretely, the proposed nonlinear transform is a composite of two parts: a linear semi-orthogonal transform along the third mode, followed by an element-wise nonlinear transform applied to the frontal slices of the linearly transformed tensor. The two transforms are indispensable and complementary for fully exploiting the underlying low-rankness. Based on the proposed low-rankness metric, NTTNN, we formulate a low-rank tensor completion (LRTC) model. To tackle the resulting nonlinear and nonconvex optimization problem, we design a proximal alternating minimization algorithm and establish a theoretical convergence guarantee. Extensive experiments on hyperspectral images, multispectral images, and videos show that our method outperforms state-of-the-art linear transform-based LRTC methods in terms of PSNR, SSIM, and SAM values and visual quality.
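The composite transform described in the abstract can be illustrated with a small NumPy sketch. It is not the authors' implementation, only a minimal example under stated assumptions: a fixed random matrix `T` with orthonormal columns stands in for the semi-orthogonal transform (its shape convention is assumed), `tanh` is an arbitrary choice of element-wise nonlinearity, and the function names `semi_orthogonal`, `mode3_transform`, and `nttnn` are hypothetical. The quantity computed is the sum of the nuclear norms of the frontal slices of the transformed tensor.

```python
import numpy as np


def semi_orthogonal(n3, r, seed=0):
    """Return a random (r, n3) matrix T with T.T @ T = I, for r >= n3.

    Assumption: a random stand-in for the semi-orthogonal transform.
    """
    rng = np.random.default_rng(seed)
    # Reduced QR of an (r, n3) matrix gives Q with orthonormal columns.
    q, _ = np.linalg.qr(rng.standard_normal((r, n3)))
    return q


def mode3_transform(X, T):
    """Apply T along the third mode: Z[:, :, k] = sum_j T[k, j] * X[:, :, j]."""
    return np.einsum("kj,abj->abk", T, X)


def nttnn(X, T, phi=np.tanh):
    """Sum of nuclear norms of the frontal slices of phi(X x_3 T).

    Assumption: phi is any element-wise function; tanh is illustrative only.
    """
    Z = phi(mode3_transform(X, T))
    return sum(np.linalg.norm(Z[:, :, k], ord="nuc") for k in range(Z.shape[2]))


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.standard_normal((6, 5, 4))   # toy third-order tensor
    T = semi_orthogonal(n3=4, r=8)       # maps 4 frontal slices to 8
    print("NTTNN-style value:", nttnn(X, T))
```

Setting `phi` to the identity function reduces this to a linear transform-based TNN, which makes it easy to compare the two on the same data.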

Data Availability

All datasets are publicly available.

Funding

This research is supported by NSFC (Nos. 61876203, 12171072, 12001432, 12171189, and 11801206), the Applied Basic Research Project of Sichuan Province (No. 2021YJ0107), the Key Project of Applied Basic Research in Sichuan Province (No. 2020YJ0216), and the National Key Research and Development Program of China (No. 2020YFA0714001).

Ethics declarations

Conflict of interest

The authors have not disclosed any competing interests.

Cite this article

Li, BZ., Zhao, XL., Ji, TY. et al. Nonlinear Transform Induced Tensor Nuclear Norm for Tensor Completion. J Sci Comput 92, 83 (2022). https://doi.org/10.1007/s10915-022-01937-1

DOI: https://doi.org/10.1007/s10915-022-01937-1