Abstract

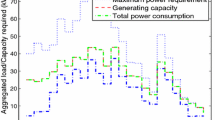

Nonconvex minimax problems have attracted significant attention in machine learning, wireless communication and many other fields. In this paper, we propose an efficient approximation proximal gradient algorithm for solving a class of nonsmooth nonconvex-linear minimax problems with a nonconvex nonsmooth term, and the number of iteration to find an \(\varepsilon \)-stationary point is upper bounded by \({\mathcal {O}}(\varepsilon ^{-3})\). Some numerical results on one-bit precoding problem in massive MIMO system and a distributed non-convex optimization problem demonstrate the effectiveness of the proposed algorithm.

Similar content being viewed by others

Data Availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

Giannakis, G.B., Ling, Q., Mateos, G., Schizas, I.D., Zhu, H.: Decentralized learning for wireless communications and networking. Splitting methods in communication, imaging, science, and engineering, pp. 461–497. Springer, Cham (2017)

Hajinezhad, D., Hong, M.: Perturbed proximal primal-dual algorithm for nonconvex nonsmooth optimization. Math. Program. 176(1–2), 207–245 (2019)

Lu, S., Tsaknakis, I., Hong, M.: Block alternating optimization for non-convex min-max problems: algorithms and applications in signal processing and communications. In: ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 4754–4758 (2019)

Li, A., Masouros, C., Liu, F., Swindlehurst, A.L.: Massive MIMO 1-bit DAC transmission: a low-complexity symbol scaling approach. IEEE Trans. Wirel. Commun. 17(11), 7559–7575 (2018)

Wu, Z., Jiang, B., Liu, Y.F., Dai, Y.H.: CI-based one-bit precoding for multiuser downlink massive MIMO systems with PSK modulation: a negative \(\ell _1 \) penalty approach (2021). arXiv preprint arXiv:2110.11628

Mohri, M., Sivek, G., Suresh, A.T.: Agnostic federated learning. In: International Conference on Machine Learning, pp. 4615–4625 (2019)

Qian, Q., Zhu, S., Tang, J., Jin, R., Sun, B., Li, H.: Robust optimization over multiple domains. Proc. AAAI Confer. Artif. Intell. 33(01), 4739–4746 (2019)

Kong, W., Monteiro, R.D.C.: An accelerated inexact proximal point method for solving nonconvex-concave min-max problems. SIAM J. Optim. 31(4), 2558–2585 (2021)

Lin, T., Jin, C., Jordan, M.I.: Near-optimal algorithms for minimax optimization. Proc. Mach. Learn. Res. 125, 1–42 (2020)

Nouiehed, M., Sanjabi, M., Huang, T., Lee, J.D., Razaviyayn, M.: Solving a class of non-convex min-max games using iterative first order methods. In: Advances in Neural Information Processing Systems (2019)

Ostrovskii, D.M., Lowy, A., Razaviyayn, M.: Efficient search of first-order Nash equilibria in nonconvex-concave smooth min-max problems. SIAM J. Optim. 31(4), 2508–2538 (2021)

Rafique, H., Liu, M., Lin, Q., Yang, T.: Weakly-convex-concave min-max optimization: provable algorithms and applications in machine learning. Optim. Methods Softw. 37(3), 1087–1121 (2022)

Thekumparampil, K.K., Jain, P., Netrapalli, P., Oh, S.: Efficient algorithms for smooth minimax optimization. Adv. Neural Inf. Process. Syst. 32, 12659–12670 (2019)

Yang, J., Zhang, S., Kiyavash, N., He, N.: A catalyst framework for minimax optimization. Adv. Neural. Inf. Process. Syst. 33, 5667–5678 (2020)

Daskalakis, C., Ilyas, A., Syrgkanis, V., Zeng, H.: Training GANs with optimism. In: International Conference on Learning Representations (2018)

Daskalakis, C., Panageas, I.: The limit points of (optimistic) gradient descent in min-max optimization. Adv. Neural Inf. Process. Syst. 31, 9256–9266 (2018)

Chambolle, A., Pock, T.: On the ergodic convergence rates of a first-order primal-dual algorithm. Math. Program. 159(1–2), 253–287 (2016)

Gidel, G., Berard, H., Vignoud, G., Vincent, P., Lacoste-Julien, S.: A variational inequality perspective on generative adversarial networks. In: International Conference on Learning Representations (2019)

Ho, J., Ermon, S.: Generative adversarial imitation learning. Adv. Neural Inf. Process. Syst. 29, 4565–4573 (2016)

Letcher, A., Balduzzi, D., Racaniere, S., Martens, J., Foerster, J., Tuyls, K., Graepel, T.: Differentiable game mechanics. J. Mach. Learn. Res. 20(1), 3032–3071 (2019)

Lin, T., Jin, C., Jordan, M.: On gradient descent ascent for nonconvex-concave minimax problems. Int. Confer. Mach. Learn. 119, 6083–6093 (2020)

Xu, Z., Shen, J., Wang, Z., Dai, Y.H.: Derivative-free Alternating Projection Algorithms for General Nonconvex-Concave Minimax Problems. SIAM Journal on Optimization, accepted, arXiv preprint arXiv:2108.00473

Xu, Z., Wang, Z.Q., Wang, J.L., Dai, Y.H.: Zeroth-order alternating gradient descent ascent algorithms for a class of nonconvex-nonconcave minimax problems. J. Mach. Learn. Res 24(313), 1–25 (2023)

Jin, C., Netrapalli, P., Jordan, M.: What is local optimality in nonconvex-nonconcave minimax optimization? Int. Confer. Mach. Learn. 119, 4880–4889 (2020)

Lu, S., Tsaknakis, I., Hong, M., Chen, Y.: Hybrid block successive approximation for one-sided non-convex min-max problems: algorithms and applications. IEEE Trans. Signal Process. 68, 3676–3691 (2020)

Xu, Z., Zhang, H., Xu, Y., Lan, G.: A unified single-loop alternating gradient projection algorithm for nonconvex-concave and convex-nonconcave minimax problems. Math. Progr., Ser. A (2023). https://doi.org/10.1007/s10107-022-01919-z

Zhang, J., Xiao, P., Sun, R., Luo, Z.: A single-loop smoothed gradient descent-ascent algorithm for nonconvex-concave min-max problems. Adv. Neural. Inf. Process. Syst. 33, 7377–7389 (2020)

Pan, W., Shen, J., Xu, Z.: An efficient algorithm for nonconvex-linear minimax optimization problem and its application in solving weighted maximin dispersion problem. Comput. Optim. Appl. 78, 287–306 (2021)

Shen, J., Wang, Z., Xu, Z.: Zeroth-order single-loop algorithms for nonconvex-linear minimax problems. J. Glob. Optim. 87(2), 551–580 (2023)

Asteris, M., Papailiopoulos, D., Dimakis, A.: Nonnegative sparse PCA with provable guarantees. Int. Confer. Mach. Learn. 32(2), 1728–1736 (2014)

Yildiz, M.E., Scaglione, A.: Coding with side information for rate-constrained consensus. IEEE Trans. Signal Process. 56(8), 3753–3764 (2008)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Z. Xu was supported by National Natural Science Foundation of China under the Grant 12071279.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

He, J., Zhang, H. & Xu, Z. An approximation proximal gradient algorithm for nonconvex-linear minimax problems with nonconvex nonsmooth terms. J Glob Optim (2024). https://doi.org/10.1007/s10898-024-01383-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10898-024-01383-3

Keywords

- Nonconvex-linear minimax problem

- Nonsmooth problem

- Iteration complexity

- Approximation proximal gradient algorithm