Abstract

We present several solution techniques for the noisy single source localization problem, i.e. the Euclidean distance matrix completion problem with a single missing node to locate under noisy data. For the case that the sensor locations are fixed, we show that this problem is implicitly convex, and we provide a purification algorithm along with the SDP relaxation to solve it efficiently and accurately. For the case that the sensor locations are relaxed, we study a model based on facial reduction. We present several approaches to solve this problem efficiently, and we compare their performance with existing techniques in the literature. Our tools are semidefinite programming, Euclidean distance matrices, facial reduction, and the generalized trust region subproblem. We include extensive numerical tests.


1 Introduction

In this paper we consider the noisy single source localization problem. The objective is to locate the source of a signal that is detected by a set of sensors with exactly known locations. The distances between the sensors and the source are given, but they are contaminated with noise. For instance, in an application to cellular networks, the source of the signal is a cellular phone and the cellular towers are the sensors. Our data are the possibly noisy distance measurements from each sensor to the source.

The single source localization problem has applications in e.g. navigation, structural engineering, and emergency response [3, 4, 8, 24, 26, 38]. In general, it is related to distance geometry problems where the input consists of Euclidean distance measurements and a set of points in Euclidean space. The sensor network localization problem is a generalization of our single source problem, where there are multiple sources and only some of the distance estimates are known. The general Euclidean distance matrix completion problem is a further generalization, where sensors do not have specified locations and only partial, possibly noisy, distance information is available, e.g. [2, 13, 15]. We refer the reader to the books [1, 5, 9, 10] and the survey article [27] for background and applications, and to the paper [18] for algorithmic comparisons. For the related nearest Euclidean distance matrix (NEDM) problem, we refer the reader to the papers [30, 31], where a semismooth Newton approach and a rank majorization approach are presented. The more general weighted NEDM problem is much harder. For theory that relates NEDM to semidefinite programming, see e.g. [12, 25].

A common approach to solving an instance of the single source localization problem is a modification of the least squares problem, referred to as the squared least squares (SLS) problem. We consider two equivalent formulations of SLS: the generalized trust region subproblem (GTRS) formulation; and the nearest Euclidean distance matrix with fixed sensors (NEDMF) formulation. We show that every extreme point of the semidefinite relaxation of GTRS may be easily transformed into a solution of GTRS and thus a solution of the SLS problem.

We also introduce and analyze several relaxations of the NEDMF formulation. These utilize semidefinite programming, facial reduction, and parametric optimization. We provide theoretical evidence that, generally, the solutions to these relaxations may be easily transformed into solutions of SLS. We also provide empirical evidence that the solutions to these relaxations may give better predictions of the location of the source.

1.1 Outline

In Sect. 1.2 we establish our notation and introduce background concepts. In Sect. 2.1 we prove strong duality for the GTRS formulation of SLS and in Sect. 2.2 we derive the semidefinite relaxation (SDR), and prove that it is tight. We also show that the extreme points of the optimal set of SDR correspond exactly to the optimizers of SLS. A purification algorithm for obtaining the extreme points is presented in Sect. 2.2.1. In Sect. 3 we introduce the NEDM formulation as well as several relaxations. We analyze the theoretical properties of the relaxations and present algorithms for solving them. The results of numerical comparisons of the algorithms are presented in Sect. 4.

1.2 Preliminaries

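We now present some preliminaries and background on SDP and facial geometry; see e.g. [17]. We denote by \({{\mathcal {S}}^n}\) the space of \(n\times n\) real symmetric matrices endowed with the trace inner product and corresponding Frobenius norm,

$$\begin{aligned} \langle X, Y\rangle := {{\,\mathrm{trace}\,}}(XY), \qquad \Vert X\Vert _F := \sqrt{\langle X, X\rangle }. \end{aligned}$$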

Unless otherwise specified, the norm of a matrix is the Frobenius norm, and we may drop the subscript F. For a convex set C, a convex subset \(f\subseteq C\) is a face of C if for all \(x,y\in C\) and \(z\in (x,y)\) (the open line segment between x and y), \(z\in f\) implies \(x,y\in f\).

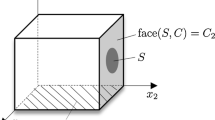

The cone of positive semidefinite matrices is denoted by \({{\mathcal {S}}^n_+\,}\) and its interior is the cone of positive definite matrices, \({{\mathcal {S}}^n_{++}\,}\). The positive semidefinite cone is pointed, closed and convex. Moreover, the cone \({{\mathcal {S}}^n_+\,}\) induces a partial order on \({{\mathcal {S}}^n}\): \(Y\succeq X\) if \(Y - X \in {{\mathcal {S}}^n_+\,}\), and \(Y\succ X\) if \(Y-X \in {{\mathcal {S}}^n_{++}\,}\). Every face of \({{\mathcal {S}}^n_+\,}\) is characterized by the range or nullspace of any matrix in its relative interior, equivalently, of any matrix of maximum rank in the face. For \(S\subseteq {{\mathcal {S}}^n_+\,}\), the minimal face of S, denoted \({{\,\mathrm{face}\,}}(S)\), is the smallest face of \({{\mathcal {S}}^n_+\,}\) that contains S. Let \(X\in {{\mathcal {S}}^n_+\,}\) have rank r with orthogonal spectral decomposition

$$\begin{aligned} X = \begin{bmatrix} P&Q\end{bmatrix} \begin{bmatrix} D&0\\ 0&0\end{bmatrix} \begin{bmatrix} P&Q\end{bmatrix}^T, \qquad D\in {{\mathcal {S}}}^r_{++}, \quad \begin{bmatrix} P&Q\end{bmatrix} \text { orthogonal}. \end{aligned}$$

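Then the range and nullspace characterizations of \({{\,\mathrm{face}\,}}(X)\) are

$$\begin{aligned} {{\,\mathrm{face}\,}}(X) = P\,{{\mathcal {S}}}^r_+ P^T = {{\mathcal {S}}^n_+\,}\cap (QQ^T)^{\perp }. \end{aligned}$$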

We say that the matrix \(QQ^T\) is an exposing vector for \({{\,\mathrm{face}\,}}(X)\).
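To make the range and nullspace characterizations concrete, the following minimal NumPy sketch splits the eigenvectors of a positive semidefinite X into a range basis P and a nullspace basis Q, and returns the exposing vector \(QQ^T\). The helper name `face_exposing_vector` and the relative eigenvalue threshold `tol` are our own illustrative choices, not part of the paper.

```python
import numpy as np

def face_exposing_vector(X, tol=1e-9):
    """Illustrative helper (not from the paper).

    For X in S^n_+, return P (orthonormal basis of range(X)) and W = Q Q^T,
    where Q is an orthonormal basis of null(X). Eigenvalues at most
    tol * max(1, ||X||_2) are treated as zero.
    """
    w, V = np.linalg.eigh(X)                 # ascending eigenvalues, orthonormal V
    pos = w > tol * max(1.0, np.abs(w).max())
    P = V[:, pos]                            # face(X) = P S^r_+ P^T
    Q = V[:, ~pos]
    return P, Q @ Q.T                        # W is PSD and <X, W> = 0

# Example: a random rank-2 matrix in S^4_+
rng = np.random.default_rng(0)
B = rng.standard_normal((4, 2))
X = B @ B.T
P, W = face_exposing_vector(X)
print(P.shape, np.trace(X @ W))              # second value is numerically zero
```

Any \(Y = PSP^T\) with \(S\succeq 0\) also satisfies \(\langle Y, W\rangle = 0\), which is what it means for W to expose the whole face rather than just X.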

Sometimes it is helpful to vectorize a symmetric matrix. Let \({{\,\mathrm{{svec}}\,}}: {{\mathcal {S}}^n}\rightarrow \mathbb {R}^{n(n+1)/2}\) map the upper triangular elements of a symmetric matrix to a vector, and let \({{\,\mathrm{{sMat}}\,}}= {{\,\mathrm{{svec}}\,}}^{-1}\).
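A minimal sketch of svec and sMat as described above, assuming the plain row-by-row ordering of the upper triangle with no \(\sqrt{2}\) scaling of the off-diagonal entries (some authors use that scaling so that svec is an isometry; that variant is not shown here).

```python
import numpy as np

def svec(X):
    """Stack the upper-triangular entries (row by row) of a symmetric X."""
    return X[np.triu_indices(X.shape[0])]

def sMat(v):
    """Inverse of svec: rebuild the symmetric matrix from its upper triangle."""
    # len(v) = n(n+1)/2  =>  n = (sqrt(8 len(v) + 1) - 1) / 2
    n = int(round((np.sqrt(8 * len(v) + 1) - 1) / 2))
    X = np.zeros((n, n))
    X[np.triu_indices(n)] = v
    return X + np.triu(X, 1).T               # mirror the strict upper triangle

X = np.array([[1.0, 2.0, 3.0],
              [2.0, 4.0, 5.0],
              [3.0, 5.0, 6.0]])
v = svec(X)                                  # -> [1, 2, 3, 4, 5, 6]
print(np.allclose(sMat(v), X))               # True
```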

The centered subspace of \({{\mathcal {S}}^n}\), denoted \({{\mathcal {S}}}_C \), is defined as

$$\begin{aligned} {{\mathcal {S}}}_C := \{ X\in {{\mathcal {S}}^n}: Xe = 0\}, \end{aligned}$$

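where e is the vector of all ones. The hollow subspace of \({{\mathcal {S}}^n}\), denoted \({{\mathcal {S}}}_H\), is

$$\begin{aligned} {{\mathcal {S}}}_H := \{ X\in {{\mathcal {S}}^n}: {{\,\mathrm{{diag}}\,}}(X) = 0\}, \end{aligned}$$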

where \({{\,\mathrm{{diag}}\,}}: {{\mathcal {S}}^n}\rightarrow \mathbb {R}^n\), \({{\,\mathrm{{diag}}\,}}(X) :=(X_{11}, \cdots , X_{nn})^T\). A matrix \(D\in {{\mathcal {S}}}_H\) is said to be a Euclidean distance matrix (EDM) if there exist an integer r and points \(x^1,\cdots ,x^n \in \mathbb {R}^r\) such that

$$\begin{aligned} D_{ij} = \Vert x^i - x^j\Vert _2^2, \qquad i,j = 1,\cdots ,n, \end{aligned}$$

where \(||\cdot ||_2\) denotes the Euclidean norm. As for the Frobenius norm, we assume the norm of a vector to be the Euclidean norm when the subscript is omitted. The set of all \(n\times n\) EDMs, denoted \({{{\mathcal {E}}}^n} \), forms a closed, convex cone with \({{{\mathcal {E}}}^n} \subset {{\mathcal {S}}}_H\).

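The classical result of Schoenberg [32] states that EDMs are characterized by a face of the positive semidefinite cone. We state the result in terms of the Lindenstrauss mapping, \({{\,\mathrm{{{\mathcal {K}}} }\,}}: {{\mathcal {S}}^n}\rightarrow {{\mathcal {S}}^n}\),

$$\begin{aligned} {{\,\mathrm{{{\mathcal {K}}} }\,}}(X) := {{\,\mathrm{{diag}}\,}}(X)e^T + e\,{{\,\mathrm{{diag}}\,}}(X)^T - 2X, \end{aligned}$$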

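with adjoint and Moore–Penrose pseudoinverse

$$\begin{aligned} {{\,\mathrm{{{\mathcal {K}}} }\,}}^*(D) = 2\big ({{\,\mathrm{{Diag}}\,}}(De) - D\big ), \qquad {{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dag }(D) = -\tfrac{1}{2} J_n\, {{\,\mathrm{{offDiag}}\,}}(D)\, J_n, \end{aligned}$$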

respectively. Here \({{\,\mathrm{{Diag}}\,}}\) is the adjoint of \({{\,\mathrm{{diag}}\,}}\), the matrix \(J_n := I - \frac{1}{n}ee^T\) is the orthogonal projection onto \({{\mathcal {S}}}_C \), and \({{\,\mathrm{{offDiag}}\,}}(D)\) refers to zeroing out the diagonal of D, i.e. the orthogonal projection onto \({{\mathcal {S}}}_H\). The range of \({{\,\mathrm{{{\mathcal {K}}} }\,}}\) is exactly \({{\mathcal {S}}}_H\) and the range of \({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dag }\) is the subspace \({{\mathcal {S}}}_C \). Moreover, \({{\,\mathrm{{{\mathcal {K}}} }\,}}({{\mathcal {S}}^n_+\,}) = {{{\mathcal {E}}}^n} \) and \({{\,\mathrm{{{\mathcal {K}}} }\,}}\) is an isomorphism between \({{\mathcal {S}}}_C \) and \({{\mathcal {S}}}_H\).
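The following NumPy sketch implements the Lindenstrauss map \({{\,\mathrm{{{\mathcal {K}}} }\,}}(X) = {{\,\mathrm{{diag}}\,}}(X)e^T + e\,{{\,\mathrm{{diag}}\,}}(X)^T - 2X\), its adjoint \(2({{\,\mathrm{{Diag}}\,}}(De) - D)\), and its pseudoinverse \(-\frac{1}{2}J_n{{\,\mathrm{{offDiag}}\,}}(D)J_n\) (the standard formulas for this map), and checks on a centered configuration that \({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dag }\) inverts \({{\,\mathrm{{{\mathcal {K}}} }\,}}\) on \({{\mathcal {S}}}_C \). The function names are illustrative.

```python
import numpy as np

def K(X):
    """Lindenstrauss map K(X) = diag(X) e^T + e diag(X)^T - 2X."""
    g = np.diag(X)
    return g[:, None] + g[None, :] - 2 * X

def K_adj(D):
    """Adjoint K*(D) = 2 (Diag(D e) - D)."""
    return 2 * (np.diag(D.sum(axis=1)) - D)

def K_pinv(D):
    """Pseudoinverse K^dag(D) = -1/2 J offDiag(D) J, with J = I - ee^T/n."""
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n
    return -0.5 * J @ (D - np.diag(np.diag(D))) @ J

# A centered configuration: its Gram matrix lies in S^n_+ intersected with S_C
rng = np.random.default_rng(0)
pts = rng.standard_normal((5, 2))
pts -= pts.mean(axis=0)
G = pts @ pts.T
D = K(G)                                   # an EDM (hollow, nonnegative)
print(np.allclose(K_pinv(D), G))           # True
```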

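The Schoenberg characterization states that \({{\,\mathrm{{{\mathcal {K}}} }\,}}\) is an isomorphism between \({{\mathcal {S}}^n_+\,}\cap {{\mathcal {S}}}_C \) and \({{{\mathcal {E}}}^n} \), see [2] for instance. Specifically,

$$\begin{aligned} {{\,\mathrm{{{\mathcal {K}}} }\,}}({{\mathcal {S}}^n_+\,}\cap {{\mathcal {S}}}_C ) = {{{\mathcal {E}}}^n}, \qquad {{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dag }({{{\mathcal {E}}}^n}) = {{\mathcal {S}}^n_+\,}\cap {{\mathcal {S}}}_C . \end{aligned}$$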

Moreover, if \(D\in {{{\mathcal {E}}}^n} \) and \({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dag }(D)\) has rank r with full column rank factorization \({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dag }(D)=PP^T\), \(P\in \mathbb {R}^{n\times r}\), then the rows of P are points in \(\mathbb {R}^r\) whose pairwise squared distances are the entries of D. For more details, see e.g. [2, 11, 12, 21, 22].
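Recovering points from an EDM through the factorization \({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dag }(D)=PP^T\) is classical multidimensional scaling. A minimal sketch, assuming the numerical rank is decided by a relative eigenvalue threshold `tol` (our choice); the recovered points are centered and unique only up to an orthogonal transformation.

```python
import numpy as np

def points_from_edm(D, tol=1e-9):
    """Illustrative helper: rows of P are centered points with K^dag(D) = P P^T."""
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n
    G = -0.5 * J @ (D - np.diag(np.diag(D))) @ J   # K^dag(D), PSD when D is an EDM
    w, V = np.linalg.eigh(G)
    keep = w > tol * max(1.0, w.max())             # numerical rank r
    return V[:, keep] * np.sqrt(w[keep])           # full column rank factor P

rng = np.random.default_rng(1)
Z = rng.standard_normal((6, 2))
sq = np.sum(Z**2, axis=1)
D = sq[:, None] + sq[None, :] - 2 * Z @ Z.T        # squared distances of rows of Z
P = points_from_edm(D)
print(P.shape)                                     # (6, 2): embedding dimension 2
```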

2 SDP Formulation

We begin this section by formulating the SLS problem using the model and notation of [3]. We let n denote the number of sensors, \(p^1,\cdots ,p^n \in \mathbb {R}^r\) their locations, and r the embedding dimension.

Assumption 2.1

The following holds throughout:

- 1.

\(n \ge r+1\);

- 2.

\(\mathrm{int\,}{{\,\mathrm{{conv}}\,}}(p^1,\cdots , p^n) \ne \emptyset \);

- 3.

\(\sum _{i=1}^n p^i = 0\).

The first two items in Assumption 2.1 ensure that a signal can be uniquely recovered if we have accurate distance measurements. If the towers are positioned in a proper affine subspace of \(\mathbb {R}^r\), and the signal is not contained within this affine subspace, then there are multiple possible locations for the signal with the given distance measurements. We assume that such poor designs are avoided in our applications. The third assumption is made so that the sensors are centered about the origin. This property leads to a cleaner exposition in the NEDM relaxations of Sect. 3.
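Assumption 2.1 can be checked numerically. The sketch below relies on the fact that the convex hull of the sensors has nonempty interior exactly when the sensors affinely span \(\mathbb {R}^r\), i.e. when \({{\,\mathrm{{rank}}\,}}[P\ e] = r+1\), where P is the \(n\times r\) matrix with rows \(p^i\). The helper name is hypothetical.

```python
import numpy as np

def check_assumption_2_1(P):
    """Illustrative check of Assumption 2.1 for an n x r matrix P with rows p^i."""
    n, r = P.shape
    enough = n >= r + 1
    # int conv{p^i} is nonempty iff the p^i affinely span R^r iff rank [P, e] = r + 1
    full_dim = np.linalg.matrix_rank(np.hstack([P, np.ones((n, 1))])) == r + 1
    centered = np.allclose(P.sum(axis=0), 0.0)
    return bool(enough), bool(full_dim), bool(centered)

rng = np.random.default_rng(2)
P = rng.standard_normal((5, 2))
P -= P.mean(axis=0)                          # enforce item 3 by a translation
print(check_assumption_2_1(P))               # (True, True, True)
collinear = np.outer(np.linspace(-1, 1, 4), [1.0, 2.0])
print(check_assumption_2_1(collinear))       # (True, False, True)
```

Item 3 is never restrictive in practice: translating all sensors and the source by the same vector changes no distance.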

We let \(d=\bar{d} + \varepsilon \in \mathbb {R}^n\) denote the vector of noisy distances from the source to the sensors, with \(d_i\) corresponding to the ith sensor,

where \(\bar{d}_i\) is the true distance and \(\varepsilon _i\) is a perturbation, or noise. When the noise \(\varepsilon _1,\cdots ,\varepsilon _n\) is not too large, a satisfactory approximation of the location of the source can be obtained by finding the point whose distances to the sensors best match the measurements. Using the Euclidean norm as a metric, we obtain the least squares problem

$$\begin{aligned} \min _{x\in \mathbb {R}^r} \sum _{i=1}^n \big (\Vert x - p^i\Vert - d_i\big )^2. \end{aligned}$$

This problem has the desirable property that its solution is the maximum likelihood estimator when the noise is normally distributed with a covariance matrix that is a multiple of the identity, e.g. [8]. However, it is a non-convex problem whose objective function is not differentiable at the sensor locations. Motivated by the success in [3], the main problem we consider instead is the optimization problem with squared distances

$$\begin{aligned} (\mathbf{SLS }) \qquad p_{\mathbf{SLS }}^* := \min _{x\in \mathbb {R}^r} \sum _{i=1}^n \big (\Vert x - p^i\Vert ^2 - d_i^2\big )^2. \end{aligned}\tag{2.2}$$

Though still a non-convex problem, in the subsequent sections we show that a solution of \(\mathbf{SLS }\,\) can be obtained by solving at most \(k \le r+1\) convex problems, see Theorem 2.7 below.

2.1 GTRS

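The GTRS is an optimization problem in which the objective is quadratic and there is a single two-sided quadratic constraint [29, 33]. Note that this class of problems also includes equality constraints. If we expand the squared norm term in SLS and substitute \(\Vert x\Vert ^2 = \alpha \) as in [3], we get the equivalent problem

$$\begin{aligned} p_{\mathbf{SLS }}^* = \min _{x\in \mathbb {R}^r,\, \alpha \in \mathbb {R}} \Big \{ \sum _{i=1}^n \big (\alpha - 2(p^i)^Tx + \Vert p^i\Vert ^2 - d_i^2\big )^2 : \Vert x\Vert ^2 = \alpha \Big \}. \end{aligned}\tag{2.3}$$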

In this formulation, a convex quadratic objective is minimized over a level set of a convex quadratic function. It follows that (2.3) is an instance of the generalized trust region subproblem. Strong duality for this class is proved in [29, 33]. For the sake of completeness, we include a proof of strong duality for our particular class of GTRS.

Theorem 2.2

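Let

$$\begin{aligned} P_T := \begin{bmatrix} (p^1)^T\\ \vdots \\ (p^n)^T\end{bmatrix}\in \mathbb {R}^{n\times r}, \quad A := \begin{bmatrix} -2P_T&e\end{bmatrix}, \quad b := \begin{pmatrix} d_1^2 - \Vert p^1\Vert ^2\\ \vdots \\ d_n^2 - \Vert p^n\Vert ^2\end{pmatrix}, \quad {\tilde{I}} := \begin{bmatrix} I_r&0\\ 0&0\end{bmatrix}, \quad {\tilde{b}} := \begin{pmatrix} 0\\ -\frac{1}{2}\end{pmatrix}. \end{aligned}\tag{2.4}$$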

Consider SLS in (2.2) and the equivalent form given in (2.3). Then:

- 1.

The problem \(\mathbf{SLS }\,\) is equivalent to

$$\begin{aligned} (\mathbf{GTRS }) \qquad p_{\mathbf{SLS }}^*=\min \{ ||Ay - b ||^2 : y^T{\tilde{I}}y + 2{\tilde{b}}^Ty = 0, \ y\in \mathbb {R}^{r+1} \}. \end{aligned}\tag{2.5}$$

- 2.

The rank of A is \(r+1\) and the optimal value of GTRS is finite and attained.

- 3.

Strong duality holds for GTRS , i.e. GTRS and its Lagrangian dual have a zero duality gap and the dual value is attained:

$$\begin{aligned} p_{\mathbf{SLS }}^* = d_{\mathbf{SLS }}^*:= \max _\lambda \min _y \{ ||Ay - b ||^2 +\lambda ( y^T{\tilde{I}}y + 2{\tilde{b}}^Ty) \}. \end{aligned}\tag{2.6}$$

Proof

The first claim, that SLS can be rewritten as GTRS, follows immediately using the substitution \(y = (x^T,\alpha )^T\). For the second claim, note that by Assumption 2.1, Item 2, \({{\,\mathrm{{rank}}\,}}(P_T)=r\). Therefore, \((P_T)^Te=0\) implies that

$$\begin{aligned} A^TA = \begin{bmatrix} 4P_T^TP_T&0\\ 0&n\end{bmatrix}. \end{aligned}$$

Now, since A has full column rank, we conclude that \(A^TA\) is positive definite, and therefore the objective of GTRS is strictly convex and coercive. Moreover, the constraint set is closed and thus the optimal value of GTRS is finite and attained, as desired.

That we have a zero duality gap for GTRS follows from [29], since this is a generalized trust region subproblem. We now prove this for our special case. Note that

Let \(\gamma =\lambda _{\min } (4P_T^T P_T)\) be the (positive) smallest eigenvalue of \(4P_T^T P_T\) so that we have \(A^TA-\gamma {\tilde{I}}\succeq 0\), but singular. We note that the convex constraint \(y^T{\tilde{I}}y + 2{\tilde{b}}^Ty \le 0\) satisfies the Slater condition, i.e. strict feasibility. Therefore, the following holds, with justification to follow.

The first equality follows from Item 1 and the second equality holds since \(\gamma (y^T{\tilde{I}}y + 2{\tilde{b}}^Ty)\) is identically 0 for any feasible y.

For the third equality, let the objective and constraint, respectively, be denoted by

The optimal value of (2.8) is a lower bound for \(p^*_{\mathbf{SLS }}\) since the feasible set of (2.8) is a superset of the feasible set of (2.7) and the objectives are the same. Now suppose, for the sake of contradiction, that the optimal value of (2.8) is strictly less than \(p^*_{\mathbf{SLS }}\). Then there exists \(\bar{y}\) satisfying,

Let \(0\ne h \in {{\,\mathrm{Null}\,}}(\nabla ^2 f(\bar{y}))\). Then by the structure of \(A^TA\) and construction of \(\gamma \) we see that \(h = \begin{pmatrix} \bar{h}^T&0\end{pmatrix}^T\) with \(\bar{h} \ne 0\). Moreover, we have,

Now we choose \(\eta \in \{\pm 1\}\) such that,

These observations imply that there exists \(\bar{\alpha } > 0\) such that

a contradiction.

We have confirmed the third equality. Now (2.8) is a convex quadratic optimization problem where the Slater constraint qualification holds. This implies that strong duality holds, i.e. we get (2.9) with attainment for some \(\lambda \ge 0\). Now if \(\lambda < 0\) in (2.9) then the Hessian of the objective is indefinite (by construction of \(\gamma \)) and the optimal value of the inner minimization problem is \(-\infty \). Thus since (2.9) is maximized with respect to \(\lambda \) in the outer optimization problem, we may remove the non-negativity constraint and obtain (2.10). The remaining lines are due to the definition of the Lagrangian dual and weak duality. Strong duality follows immediately. \(\square \)
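The reformulation of SLS as GTRS and the matrix facts used in the proof above can be checked on a small instance. The sketch assumes SLS is \(\sum _i(\Vert x-p^i\Vert ^2-d_i^2)^2\) with data \(A = [-2P_T\ e]\), \(b_i = d_i^2 - \Vert p^i\Vert ^2\), \({\tilde{I}} = {{\,\mathrm{{Diag}}\,}}(1,\dots ,1,0)\) and \({\tilde{b}} = (0,\dots ,0,-\frac{1}{2})^T\); this is one consistent sign convention, and the helper names are hypothetical.

```python
import numpy as np

def gtrs_data(P, d):
    """Assumed construction of (A, b, I_tilde, b_tilde) for
    SLS: min_x sum_i (||x - p^i||^2 - d_i^2)^2, with y = (x, alpha).

    If alpha = ||x||^2, then ||x - p^i||^2 - d_i^2 = alpha - 2 (p^i)^T x
    + ||p^i||^2 - d_i^2, which is the i-th entry of A y - b.
    """
    n, r = P.shape
    A = np.hstack([-2 * P, np.ones((n, 1))])     # A = [-2 P_T, e]
    b = d**2 - np.sum(P**2, axis=1)
    I_tilde = np.diag(np.r_[np.ones(r), 0.0])    # y^T I_tilde y = ||x||^2
    b_tilde = np.r_[np.zeros(r), -0.5]           # 2 b_tilde^T y = -alpha
    return A, b, I_tilde, b_tilde

def sls_objective(x, P, d):
    """SLS objective sum_i (||x - p^i||^2 - d_i^2)^2."""
    return np.sum((np.sum((x - P) ** 2, axis=1) - d**2) ** 2)

# Small instance satisfying Assumption 2.1 (centered, full-dimensional sensors)
rng = np.random.default_rng(1)
n, r = 6, 2
P = rng.standard_normal((n, r))
P -= P.mean(axis=0)
d = rng.uniform(0.5, 2.0, n)
A, b, I_tilde, b_tilde = gtrs_data(P, d)

# On the constraint set y = (x, ||x||^2) the two objectives agree
x = rng.standard_normal(r)
y = np.r_[x, x @ x]
print(np.isclose(np.sum((A @ y - b) ** 2), sls_objective(x, P, d)))   # True

# A^T A is block diagonal since P^T e = 0; gamma = lambda_min(4 P^T P) > 0
AtA = A.T @ A
gamma = np.linalg.eigvalsh(4 * P.T @ P).min()
# A^T A - gamma * I_tilde is PSD and singular (smallest eigenvalue ~ 0)
print(gamma > 0, np.linalg.eigvalsh(AtA - gamma * I_tilde).min())
```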

The above Theorem 2.2 shows that even though SLS is a non-convex problem, it can be formulated as an instance of GTRS and satisfies strong duality. Therefore it can be solved efficiently using, for instance, the algorithm of [29]. Moreover, in the subsequent results we show that SLS is equivalent to its semidefinite programming (SDP) relaxation in (2.15), a convex optimization problem.

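We compare our SDP approach with the approach used by Beck et al. [3]. In their approach, one must solve the following system, obtained from the optimality conditions of GTRS:

$$\begin{aligned} (A^TA + \lambda {\tilde{I}})y = A^Tb - \lambda {\tilde{b}}, \qquad y^T{\tilde{I}}y + 2{\tilde{b}}^Ty = 0, \qquad A^TA + \lambda {\tilde{I}} \succeq 0. \end{aligned}$$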

The so-called hard case, in which \(A^T A + \lambda ^* {\tilde{I}}\) is singular for the optimal \(\lambda ^*\), can cause numerical difficulties. We note that in our SDP relaxation, we need not differentiate between the ‘hard case’ and the ‘easy case’.
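For comparison, here is a minimal sketch of a one-dimensional search in the spirit of [3], not their exact algorithm. For \(\lambda \) with \(A^TA+\lambda {\tilde{I}}\succ 0\), let \(y(\lambda )\) solve \((A^TA+\lambda {\tilde{I}})y = A^Tb-\lambda {\tilde{b}}\), and bisect on \(\phi (\lambda ) = y^T{\tilde{I}}y + 2{\tilde{b}}^Ty\). Differentiating the linear system gives \(\phi '(\lambda ) = -2\,y'^T(A^TA+\lambda {\tilde{I}})y' \le 0\), so \(\phi \) is nonincreasing on that interval. The sketch assumes the block-diagonal \(A^TA\) of Assumption 2.1 and the easy case; in the hard case it simply raises an error, which is exactly the distinction the SDP approach avoids. The function name and tolerances are hypothetical.

```python
import numpy as np

def gtrs_bisection(A, b, I_tilde, b_tilde, tol=1e-12, max_iter=200):
    """Illustrative easy-case solver for GTRS (not the algorithm of [3]).

    Assumes A^T A is block diagonal with the I_tilde block first, as it is
    under Assumption 2.1, and that the last entry of b_tilde is negative.
    """
    AtA, Atb = A.T @ A, A.T @ b

    def y_of(lam):
        return np.linalg.solve(AtA + lam * I_tilde, Atb - lam * b_tilde)

    def phi(lam):                              # constraint value at y(lam)
        y = y_of(lam)
        return y @ I_tilde @ y + 2 * b_tilde @ y

    k = int(round(np.trace(I_tilde)))          # size of the ||x||^2 block
    lam_low = -np.linalg.eigvalsh(AtA[:k, :k]).min()
    lo, hi = lam_low + 1e-8, lam_low + 1.0
    if phi(lo) <= 0:
        raise ValueError("no sign change near lam_low: possibly the hard case")
    while phi(hi) > 0:                         # here phi -> -inf as lambda -> inf
        hi = lam_low + 2 * (hi - lam_low)
    for _ in range(max_iter):                  # phi is nonincreasing on (lam_low, inf)
        mid = 0.5 * (lo + hi)
        if phi(mid) > 0:
            lo = mid
        else:
            hi = mid
        if hi - lo < tol * max(1.0, abs(hi)):
            break
    return y_of(0.5 * (lo + hi))

# Noise-free instance: the GTRS minimizer should reproduce the true source x0
rng = np.random.default_rng(2)
n, r = 7, 2
P = rng.standard_normal((n, r))
P -= P.mean(axis=0)
x0 = np.array([0.3, -0.4])
d = np.linalg.norm(x0 - P, axis=1)
A = np.hstack([-2 * P, np.ones((n, 1))])
b = d**2 - np.sum(P**2, axis=1)
I_tilde = np.diag([1.0, 1.0, 0.0])
b_tilde = np.array([0.0, 0.0, -0.5])
y = gtrs_bisection(A, b, I_tilde, b_tilde)
print(y[:r])                                   # close to x0
```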

2.2 The semidefinite relaxation, SDR

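We now study the convex equivalent of SLS. We analyze the dual and the SDP relaxation of GTRS. By homogenizing the quadratic objective and constraint and using the fact that strong duality holds for the standard trust region subproblem [34], we obtain an equivalent formulation of the Lagrangian dual of GTRS as an SDP. We first define

$$\begin{aligned} \bar{A} := \begin{bmatrix} A^TA&-A^Tb\\ -b^TA&b^Tb\end{bmatrix}, \qquad \bar{B} := \begin{bmatrix} {\tilde{I}}&{\tilde{b}}\\ {\tilde{b}}^T&0\end{bmatrix}. \end{aligned}$$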

The Lagrangian dual of GTRS may be obtained as follows:

Here the first equality follows from the definition of the dual. The second and third equalities are equivalent reformulations of the first one. For the last equality, let \({\tilde{y}} = [y^T, y_0]^T\) and \(M = \bar{A} - \lambda \bar{B} - s e_{r+2}e_{r+2}^T \), and suppose \({\tilde{y}}^T M {\tilde{y}} <0\) for some \({\tilde{y}}\). If \(y_0\) is nonzero, we obtain a contradiction by scaling \({\tilde{y}}\) so that \(y_0 = 1\). If \(y_0\) is zero, then by the continuity of \({\tilde{y}} \mapsto {\tilde{y}}^T M {\tilde{y}}\), we can perturb \({\tilde{y}}\) by a small enough amount so that its last element is nonzero while \({\tilde{y}}^T M {\tilde{y}} <0\) still holds. This yields a contradiction as in the previous case.

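We observe that (2.14) is a dual-form SDP corresponding to the primal SDP problem, e.g. [39],

$$\begin{aligned} (\mathbf{SDR }) \qquad p_{\mathbf{SDR }}^* := \min \{ \langle \bar{A}, X\rangle : \langle \bar{B}, X\rangle = 0,\ \langle e_{r+2}e_{r+2}^T, X\rangle = 1,\ X\in {{\mathcal {S}}}^{r+2}_+\}. \end{aligned}\tag{2.15}$$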

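Now let \(\mathcal {F}\) and \(\Omega \), respectively, denote the feasible and optimal sets of SDR. We define the map \(\rho : \mathbb {R}^{r+1} \rightarrow {{\mathcal {S}}} ^{r+2}\) as

$$\begin{aligned} \rho (y) := \begin{pmatrix} y\\ 1\end{pmatrix}\begin{pmatrix} y\\ 1\end{pmatrix}^T. \end{aligned}$$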

Note that \(\rho \) is a bijection between \(\mathbb {R}^{r+1}\) and the rank-one matrices in \({{\mathcal {S}}} ^{r+2}_+\) whose \((r+2,r+2)\) element is 1.

Lemma 2.3

The map \(\rho \) is a bijection between the feasible set of GTRS and the set of rank-one matrices in the feasible set of SDR. Moreover, the objective value is preserved under \(\rho \), i.e. \(||Ay-b||^2 = \langle \bar{A}, \rho (y) \rangle \).
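Lemma 2.3 can be checked numerically. The sketch builds \(\rho (y)\) and the homogenized matrices, assuming the block forms \(\bar{A} = \begin{bmatrix} A^TA&-A^Tb\\ -b^TA&b^Tb\end{bmatrix}\) and \(\bar{B} = \begin{bmatrix} {\tilde{I}}&{\tilde{b}}\\ {\tilde{b}}^T&0\end{bmatrix}\) (an assumption about the exact definitions, which may differ in sign), and confirms that \(\langle \bar{A}, \rho (y)\rangle = \Vert Ay-b\Vert ^2\) and that \(\langle \bar{B}, \rho (y)\rangle \) equals the GTRS constraint value. Helper names are hypothetical.

```python
import numpy as np

def rho(y):
    """rho(y) = [y; 1][y; 1]^T: rank one, with (r+2, r+2) entry equal to 1."""
    v = np.r_[y, 1.0]
    return np.outer(v, v)

def lifted_data(A, b, I_tilde, b_tilde):
    """Assumed homogenized objective and constraint matrices (A_bar, B_bar)."""
    c = A.T @ b
    A_bar = np.block([[A.T @ A, -c[:, None]],
                      [-c[None, :], np.array([[b @ b]])]])
    B_bar = np.block([[I_tilde, b_tilde[:, None]],
                      [b_tilde[None, :], np.zeros((1, 1))]])
    return A_bar, B_bar

rng = np.random.default_rng(5)
A = rng.standard_normal((5, 3))
b = rng.standard_normal(5)
I_tilde = np.diag([1.0, 1.0, 0.0])
b_tilde = np.array([0.0, 0.0, -0.5])
A_bar, B_bar = lifted_data(A, b, I_tilde, b_tilde)
y = rng.standard_normal(3)
X = rho(y)
# <A_bar, rho(y)> equals the GTRS objective ||Ay - b||^2
print(np.isclose(np.sum(A_bar * X), np.sum((A @ y - b) ** 2)))   # True
```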

Theorem 2.4

The following holds:

- 1.

The optimal values of GTRS, SDR, and (2.14) are all equal, finite, and attained.

- 2.

The matrix \(X^*\) is an extreme point of \(\Omega \) if, and only if, \(X^* = \rho (y^*)\) for some minimizer, \(y^*\), of GTRS.

Proof

From Theorem 2.2 and weak duality, we have that

Moreover, since SDR is a relaxation of GTRS we get,

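Furthermore, from Theorem 2.2 the above values are all finite and the optimal values of GTRS and (2.14) are attained. To see that the optimal value of SDR is attained it suffices to show that (2.14) has a Slater point. Indeed, the feasible set of (2.14) consists of all \(\mu , s \in \mathbb {R}\) such that

$$\begin{aligned} \bar{A} - \mu \bar{B} - s\, e_{r+2}e_{r+2}^T \succeq 0. \end{aligned}$$

Setting \(\mu =0\) and applying the Schur complement condition, we have

$$\begin{aligned} \begin{bmatrix} A^TA&-A^Tb\\ -b^TA&b^Tb - s\end{bmatrix} \succ 0 \iff A^TA \succ 0 \ \text { and } \ b^Tb - s - b^TA(A^TA)^{-1}A^Tb > 0. \end{aligned}$$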

By Theorem 2.2, \(A^TA\) is positive definite and a Slater point may be obtained by choosing s so that \(b^Tb - s\) is sufficiently large.

Now we consider Item 2. By the existence of a Slater point for (2.14) we know that \(\Omega \) is nonempty, compact, and convex. Next we show that \(\Omega \) is actually a face of \(\mathcal {F}\). To see this, let \(\theta \in (0,1)\) and let \(Z = \theta X + (1-\theta )Y \in \Omega \) for some \(X, Y\in \mathcal {F}\). Since Z is optimal for SDR and X and Y are feasible for SDR, we have

Now equality holds throughout and we have \(\langle \bar{A}, X\rangle = \langle \bar{A}, Y\rangle = \langle \bar{A}, Z\rangle \). Therefore \(X,Y \in \Omega \) and by the definition of face, we conclude that \(\Omega \) is a face of \(\mathcal {F}\).

Since \(\Omega \) is a nonempty compact convex set, it has an extreme point, say \(X^*\). Now \(X^*\) is also an extreme point of \(\mathcal {F}\), since a face of a face is a face. Moreover, since there are exactly two equality constraints in SDR, by Theorem 2.1 of [28] we have \({{\,\mathrm{{rank}}\,}}(X^*)(1 + {{\,\mathrm{{rank}}\,}}(X^*))/2 \le 2\). Since \(X^*_{r+2,r+2} = 1\), we have \(X^*\ne 0\), and hence this inequality holds if, and only if, \({{\,\mathrm{{rank}}\,}}(X^*) = 1\). Equivalently, \(X^* = \rho (y^*)\) for some \(y^* \in \mathbb {R}^{r+1}\). Now, by Lemma 2.3 and the first part of this proof, \(y^*\) is a minimizer of GTRS.

For the converse in Item 2, let \(y^*\) be a minimizer of GTRS. Then by Lemma 2.3, \(X^*:= \rho (y^*)\) is optimal for SDR. To see that \(X^*\) is an extreme point of \(\Omega \), let \(Y,Z \in \Omega \) be such that

Since \(X^*\) has rank 1 and \(Y,Z \succeq 0\), it follows that Y and Z are non-negative multiples of \(X^*\). But by feasibility, \(X^*_{r+2,r+2} = Y_{r+2,r+2} = Z_{r+2,r+2}\) and thus \(Y=Z=X^*\). So, by definition, \(X^*\) is an extreme point of \(\Omega \), as desired. \(\square \)

We have shown that the optimal value of SLS may be obtained by solving the convex problem SDR. Moreover, every extreme point of the optimal face of SDR can easily be transformed into an optimal solution of SLS. However, SDR is usually solved using an interior point method, which typically returns a solution in the relative interior of \(\Omega \). In general, such a solution need not have rank 1. In the following corollary of Theorem 2.4 we identify those instances for which the solution of SDR is readily transformed into a solution of SLS. For other instances, we present an algorithmic approach in Sect. 2.2.1.

Corollary 2.5

The following hold.

- 1.

If GTRS has a unique minimizer, say \(y^*\), then the optimal set of SDR is the singleton \(\{\rho (y^*)\}\).

- 2.

If the optimal set of SDR is a singleton, say \(X^*\), then \({{\,\mathrm{{rank}}\,}}(X^*) = 1\) and \(\rho ^{-1}(X^*)\) is the unique minimizer of GTRS.

Proof

Let \(y^*\) be the unique minimizer of GTRS. By Theorem 2.4 we know that \(\rho (y^*)\) is an extreme point of \(\Omega \). Now suppose, for the sake of contradiction, that there exists \(X \ne \rho (y^*)\) in \(\Omega \). Since \(\Omega \) is a compact convex set it is the convex hull of its extreme points. Thus there exists an extreme point of \(\Omega \), say Y, that is distinct from \(\rho (y^*)\). By Theorem 2.4, we know that \(\rho ^{-1}(Y)\) is a minimizer of GTRS and by Lemma 2.3, \(\rho ^{-1}(Y) \ne y^*\), contradicting the uniqueness of \(y^*\).

For the converse, let \(X^*\) be the unique minimizer of SDR. Then \(X^*\) is the only extreme point of \(\Omega \) and consequently \(\rho ^{-1}(X^*)\) is the unique minimizer of GTRS, as desired. \(\square \)

2.2.1 A purification algorithm

Suppose an optimal solution of (2.15) is \(\bar{X}\) with optimal value \(p_{\mathbf{SDR }\,\,}^*= \langle \bar{A},\bar{X}\rangle \) and \({{\,\mathrm{{rank}}\,}}(\bar{X}) = \bar{r}\), where \(\bar{r}>1\). Note that we cannot obtain an optimal solution of GTRS directly from \(\bar{X}\), since its rank is too large. However, in this section we construct an algorithm that returns an extreme point of \(\Omega \), which, by Theorem 2.4, is easily transformed into an optimal solution of GTRS. We note that this does not require the extreme point to be exposed.

Let the compact spectral decomposition of \(\bar{X}\) be \(\bar{X}:= U D U^T \) with \(D \in {{\mathcal {S}}} _{++}^{\bar{r}}\). We use the substitution \(X = U S U^T\) and solve the problem (2.20), below, to obtain an optimal solution with lower rank. Note that \(D\succ 0\) is a strictly feasible solution for (2.20). We choose the objective matrix \(C\in {{\mathcal {S}}} ^{\bar{r}}_{++}\) to be random and positive definite. To simplify the subsequent exposition, by abuse of notation, we redefine

where \(\bar{E}:= e_{r+2}e_{r+2}^T\). We define the linear map \({{\mathcal {A}}}: {{\mathcal {S}}}^{\bar{r}} \rightarrow \mathbb {R}^3\) and the vector \(b \in \mathbb {R}^3\) as,

respectively. The rank reducing program is

In Algorithm 2.1 we extend the idea of the rank reducing program and in the subsequent results we prove that the output of the algorithm is a rank 1 optimal solution of SDR.

Lemma 2.6

Let \(k \ge 1\) be an integer and suppose that \(C^k\), \({{\mathcal {A}}}_S^k\), and \(b^k_S\) are as in Algorithm 2.1. Then

Proof

By construction, \(D^k\in \mathcal {F}^k\). Therefore,

For the forward direction, assume that \(S^{k+1} \succ 0\) and, for the sake of contradiction, suppose that, \(S^{k+1}\) is not the only element of \(\mathcal {F}^k\). Then \(S^{k+1} \in {{\,\mathrm{{relint}}\,}}(\mathcal {F}^k)\) and for any \(T \in {{\,\mathrm{Null}\,}}({{\mathcal {A}}}^k_S)\) there exists \(\varepsilon > 0\) such that,

By the choice of \(C^k\), there exists \(T \in {{\,\mathrm{Null}\,}}({{\mathcal {A}}}^k_S)\) such that \(\langle C^{k}, T \rangle \ne 0\) and we may assume, without loss of generality, that this inner product is in fact negative. Then,

contradicting the optimality of \(S^{k+1}\). \(\square \)

Theorem 2.7

Let \(\bar{X} \in {{\mathcal {S}}} ^{r+2}_+\) be an optimal solution to SDR. If \(\bar{X}\) is an input to Algorithm 2.1, then the algorithm terminates with at most \({{\,\mathrm{{rank}}\,}}(\bar{X}) - 1\le r+1\) calls to the while loop and the output, \(X^*\), is a rank 1 optimal solution of SDR.

Proof

We first consider the trivial case, \({{\,\mathrm{{rank}}\,}}(\bar{X}) = 1\). Clearly \(X^* = \bar{X}\) in this case, and we have the desired result. Thus we may assume that the while loop is called at least once. We show that for every \(S^k\) generated by Algorithm 2.1 with \(k \ge 1\), we have,

To this end, let us consider the constraint \(\langle \bar{B}, S^k \rangle = 0\). By the update formula (2.18), we have,

Similarly the other two constraints comprising \({{\mathcal {A}}}^{k-1}_S\) are satisfied by \(X^k\) and therefore \(X^k \in \Omega \).

Now we show that the sequence of ranks, \(r_1,r_2,\cdots \), generated by Algorithm 2.1 is strictly decreasing. It immediately follows that the algorithm terminates in at most \({{\,\mathrm{{rank}}\,}}(\bar{X}) -1\) calls to the while loop and that the output matrix \(X^*\) has rank 1. Suppose, to the contrary, that there exists an integer \(k \ge 2\) such that \(r_k = r_{k-1}\). Then by construction, we have that \({{\,\mathrm{{rank}}\,}}(S^k) = r_k = r_{k-1}\) and \(S^k\) is a Slater point of the optimization problem,

Therefore, by Lemma 2.6 we have that \(S^k\) is the only feasible solution of (2.22). Now we claim that \(X^k\) as defined above is an extreme point of \(\Omega \). To see this, let \(Y^k,Z^k \in \Omega \) such that \(X^k = \frac{1}{2} Y^k + \frac{1}{2} Z^k\). Since \(Y^k\) and \(Z^k\) are both positive semidefinite we have that

Thus there exist \(V^k, W^k \in {{\mathcal {S}}} ^{r_{k-1}}_+\) such that,

and it follows that \(V^k\) and \(W^k\) are feasible for (2.22). By uniqueness of \(S^k\) we have that \(Y^k = Z^k = X^k\) and \(X^k\) is an extreme point of \(\Omega \). Then by Theorem 2.4, \({{\,\mathrm{{rank}}\,}}(S^k) = 1\) and Algorithm 2.1 terminates before generating \(r_k,\) a contradiction. \(\square \)

We remark that in many of our numerical tests the rank of \(\bar{X}\) was 2 or 3. Consequently, the purification process did not require many iterations.
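As a complement to Algorithm 2.1, which solves a sequence of SDPs with random objectives, the following sketch swaps in a different, purely linear-algebraic purification (a Pataki-style null-space rank reduction); it is not the paper's algorithm. Writing \(X = USU^T\), it moves along directions \(UTU^T\) with \(\langle U^TMU, T\rangle = 0\) for every matrix M in a list `mats`, so every \(\langle M, X\rangle \) is preserved, until an eigenvalue of S reaches zero. For SDR, `mats` would hold \(\bar{A}\), \(\bar{B}\) and \(e_{r+2}e_{r+2}^T\); since by Theorem 2.4 a rank-two optimal point is not extreme, the reduction should be able to continue to rank 1 in exact arithmetic, though numerically this depends on `tol`. The function name and tolerances are hypothetical, and X is assumed nonzero.

```python
import numpy as np

def purify(X, mats, tol=1e-9, max_iter=100):
    """Null-space rank reduction (Pataki-style); not Algorithm 2.1.

    Preserves <M, X> for every M in mats and keeps X PSD, lowering the rank
    until no nonzero admissible direction exists (up to tol). Assumes X != 0.
    """
    for _ in range(max_iter):
        w, V = np.linalg.eigh(X)
        keep = w > tol * max(1.0, w.max())
        U, s = V[:, keep], w[keep]              # X = U diag(s) U^T with s > 0
        k = U.shape[1]
        iu = np.triu_indices(k)
        # Row j encodes T -> <U^T M_j U, T> on the upper triangle of T
        # (off-diagonal coefficients doubled because T is symmetric).
        rows = []
        for M in mats:
            C = U.T @ M @ U
            rows.append((2 * C - np.diag(np.diag(C)))[iu])
        _, sing, Vt = np.linalg.svd(np.array(rows))
        rank = int(np.sum(sing > tol * max(1.0, sing[0])))
        if rank >= Vt.shape[0]:
            break                               # trivial null space: stop
        T = np.zeros((k, k))
        T[iu] = Vt[-1]                          # a unit null-space direction
        T = T + np.triu(T, 1).T
        # diag(s) + t T stays PSD iff 1 + t * mu >= 0 for the eigenvalues mu
        # of S^{-1/2} T S^{-1/2}
        Sih = np.diag(1.0 / np.sqrt(s))
        mu = np.linalg.eigvalsh(Sih @ T @ Sih)
        if mu.max() > -mu.min():                # pick the sign with the most negative mu
            T, mu = -T, -mu[::-1]
        t = -1.0 / mu.min()                     # largest step that keeps PSD
        X = U @ (np.diag(s) + t * T) @ U.T
        X = 0.5 * (X + X.T)
    return X

# Generic test: rank-3 PSD matrix, three random symmetric "constraint" matrices
rng = np.random.default_rng(3)
B = rng.standard_normal((5, 3))
X0 = B @ B.T
mats = []
for _ in range(3):
    G = rng.standard_normal((5, 5))
    mats.append(G + G.T)
X1 = purify(X0, mats)
print(np.linalg.matrix_rank(X1, tol=1e-8))      # expected to be at most 2 here
```

With three generic constraints the reduction stops at the largest k with \(k(k+1)/2 \le 3\), i.e. k = 2; for SDR the extra structure described in Theorem 2.4 is what allows rank 1.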

3 EDM Formulation

In this section we use the Lindenstrauss operator, \({{\,\mathrm{{{\mathcal {K}}} }\,}}\), and the Schoenberg characterization to formulate SLS as an EDM completion problem. Recall that the exact locations of the sensors (towers) are known, and that the tower-source distances are noisy. The corresponding EDM restricted to the towers is denoted \(D_T\) and is defined by

$$\begin{aligned} D_T := {{\,\mathrm{{{\mathcal {K}}} }\,}}(P_TP_T^T), \quad \text {i.e.}\quad (D_T)_{ij} = \Vert p^i - p^j\Vert ^2, \quad i,j = 1,\cdots ,n. \end{aligned}$$

Then the approximate EDM for the sensors and the source is

Recall that

From Assumption 2.1 the towers are centered, i.e. \(e^TP_T = 0\). This property is desirable due to the Schoenberg characterization which states that \({{\,\mathrm{{{\mathcal {K}}} }\,}}\) is an isomorphism between \({{\mathcal {S}}^n_+\,}\cap {{\mathcal {S}}}_C \) and \({{{\mathcal {E}}}^n} \). Moreover, it allows for easy recovery of the towers in the last step of our algorithm by solving a Procrustes problem.
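A sketch of assembling the tower-source matrix, assuming its tower block is \(D_T = {{\,\mathrm{{{\mathcal {K}}} }\,}}(P_TP_T^T)\) and its last row and column hold squared tower-source distance estimates, passed as a hypothetical vector `delta` (how these estimates are formed from d is not fixed here). With exact data the result is the full EDM of the sensors and the source.

```python
import numpy as np

def tower_source_matrix(P, delta):
    """Illustrative helper: [[D_T, delta], [delta^T, 0]] with D_T = K(P P^T).

    P     : n x r sensor positions (rows p^i), assumed centered.
    delta : hypothetical length-n vector of squared tower-source distance estimates.
    """
    G_T = P @ P.T                                 # sensor Gram matrix
    g = np.diag(G_T)
    D_T = g[:, None] + g[None, :] - 2 * G_T       # D_T = K(G_T)
    top = np.hstack([D_T, delta[:, None]])
    bottom = np.r_[delta, 0.0][None, :]
    return np.vstack([top, bottom])

rng = np.random.default_rng(3)
P = rng.standard_normal((5, 2))
P -= P.mean(axis=0)
x0 = np.array([0.2, 0.1])
delta = np.sum((P - x0) ** 2, axis=1)             # exact squared distances
D = tower_source_matrix(P, delta)
print(D.shape)                                    # (6, 6)
```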

Now let \(G_T:= P_TP_T^T\) be the Gram matrix restricted to the towers, and note that

The nearest EDM problem with fixed sensors is

For any \(x \in \mathbb {R}^n\) let

By simplifying the objective, we see that the NEDMP problem in (3.1) is indeed equivalent to \(\mathbf{SLS }\,\), i.e.

The approach of [13] for the related sensor network localization problem is to replace the matrix \(\begin{bmatrix} P_T \\ x^T \end{bmatrix}\begin{bmatrix} P_T \\ x^T \end{bmatrix}^T\) in (3.1) with the positive semidefinite matrix variable \(X \in {{\mathcal {S}}^{n+1}\,}\), and then introduce a constraint on the block of X corresponding to the sensors. Taking this approach, we obtain the nearest Euclidean distance matrix with fixed sensors (NEDMF) problem,

where

The objective of (3.2) is exactly the objective of SLS (acting on the matrix variable), and the affine constraint restricts X to those Gram matrices whose sensor block has exactly the same distances as \(P_TP_T^T\). That is, if

is feasible for (3.2), with \(X_T \in \mathbb {R}^{n\times r}\) and \(x_c \in \mathbb {R}^r\), then \(X_T\) differs from \(P_T\) only by a translation and a rotation. Since neither translation nor rotation affects the distances between the rows of \(X_T\) and \(x_c\), we translate the points in \(\mathbb {R}^r\) so that \(X_T\) is centered, consistent with the assumption that \(P_T\) is centered. Then we solve the Procrustes problem

to obtain the rotation, and thus a complete description of the transformation from \(X_T\) to \(P_T\). Applying the transformation to \(x_c\) yields a vector feasible for SLS. Thus every feasible solution of (3.2) corresponds to a feasible solution of SLS. The converse is trivially true, and we conclude that, owing to the rank constraint, (3.2) is equivalent to SLS. We show in the subsequent sections that the relaxation in which the rank and the linear constraints are dropped may be used to solve the problem accurately in a large number of instances.
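The Procrustes step has a closed-form solution: if \(X_T^TP_T = U\Sigma V^T\) is a singular value decomposition, then \(Q = UV^T\) minimizes \(\Vert X_TQ - P_T\Vert _F\) over orthogonal Q. A minimal sketch of the recovery of the source, with hypothetical helper names; note that Q may include a reflection, which distance data cannot rule out.

```python
import numpy as np

def procrustes_rotation(X_T, P_T):
    """Orthogonal Q minimizing ||X_T Q - P_T||_F (orthogonal Procrustes via SVD)."""
    U, _, Vt = np.linalg.svd(X_T.T @ P_T)
    return U @ Vt

def align_source(X_T, x_c, P_T):
    """Illustrative helper: center X_T, rotate it onto P_T, map x_c the same way."""
    shift = X_T.mean(axis=0)
    Q = procrustes_rotation(X_T - shift, P_T)
    return (x_c - shift) @ Q

rng = np.random.default_rng(4)
P_T = rng.standard_normal((6, 2))
P_T -= P_T.mean(axis=0)                           # sensors centered (Assumption 2.1)
R, _ = np.linalg.qr(rng.standard_normal((2, 2)))  # unknown orthogonal transformation
t = np.array([3.0, -1.0])                         # unknown translation
x0 = np.array([0.5, 0.25])
X_T, x_c = P_T @ R + t, x0 @ R + t                # what a solver might return
print(align_source(X_T, x_c, P_T))                # recovers x0 up to rounding
```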

3.1 The relaxed NEDM problem

3.1.1 Nearest Euclidean distance matrix formulation

One relaxation of (3.2) is obtained by removing the affine constraint and modifying the objective as follows:

Due to the semidefinite characterization of \({{{\mathcal {E}}}^{n+1}} \), this problem is the projection of \(D_{T_c}\) onto the set of EDMs with embedding dimension at most r. The motivation behind this relaxation is the assumption that the distance measurements corresponding to the sensors are very accurate. Therefore, any minimizer of NEDM will likely have the first n points very near the sensors. As we show in the subsequent sections, by introducing weights we can obtain a solution arbitrarily close to that of (3.2).

The challenge in problem NEDM is the rank constraint. A simpler problem is to first solve the unconstrained least squares problem and then to project the solution onto the set of positive semidefinite matrices with rank at most r. This is equivalent to solving the inverse nearest EDM problem:

Note that if the positive semidefinite constraint is removed, this problem is just the projection onto the matrices with rank at most r. By the Eckart–Young theorem, this projection is obtained by setting to zero the \(n+1-r\) eigenvalues of smallest magnitude of \({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dag }(D_{T_c})\). In the following theorem we show that \({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dag }(D_{T_c})\) has at most one negative eigenvalue, whose magnitude is small when the noise is small; hence the Eckart–Young rank r projection is then positive semidefinite. We denote by \(\overline{D} \in {{\mathcal {S}}} ^{n+1}\) the true EDM of the sensors and the source, that is

It is easy to see from the definitions of \(\bar{d}\) and \(\varepsilon \) that,

Theorem 3.1

The rank of \({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dagger }(D_{T_c})\) is at most \(r + 2\). Moreover, \({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dagger }(D_{T_c})\) has at most 1 negative eigenvalue with magnitude bounded above by \(\frac{\sqrt{2}}{2} ||J_{n+1} ||^2 ||\varepsilon ||\).

Proof

First we note that the norm of \(e_{n+1} \xi ^T + \xi e_{n+1}^T\) is bounded above by the magnitude of the noise:

Next we observe that the matrix \(e_{n+1} \xi ^T + \xi e_{n+1}^T\) has trace 0 and rank 2. Thus \(e_{n+1} \xi ^T + \xi e_{n+1}^T\) has exactly one negative and one positive eigenvalue. By the Moreau decomposition theorem, e.g. [23], \(e_{n+1} \xi ^T + \xi e_{n+1}^T\) may be expressed as the sum of two rank one matrices, say \(P \succeq 0\) and \(Q \preceq 0\), that are the projections of \(e_{n+1} \xi ^T + \xi e_{n+1}^T\) onto \({{\mathcal {S}}^{n+1}_+\,}\) and \(-{{\mathcal {S}}^{n+1}_+\,}\), respectively. Now we have,

where the first term in the last line is positive semidefinite with at least r and at most \(r + 1\) positive eigenvalues (\(-J_{n+1}\overline{D}J_{n+1}\) is a positive semidefinite matrix with rank r and \(-J_{n+1}QJ_{n+1}\) is positive semidefinite with rank at most 1); and the second term is negative semidefinite with at most one negative eigenvalue. Using the Cauchy–Schwarz inequality it can be shown that for \(X,Y \in {{\mathcal {S}}^n}\),

By (3.7) and the fact that P is the projection of \(e_{n+1}\xi ^T + \xi e_{n+1}^T\) onto \({{\mathcal {S}}^{n+1}_+\,}\), we have

It follows that \({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dag }(D_{T_c})\) has rank at most \(r+2\) and by the Courant–Fischer–Weyl theorem, e.g. [37], it has at most one negative eigenvalue whose magnitude is bounded above by \(\frac{\sqrt{2}}{2} ||J_{n+1} ||^2 ||\varepsilon ||\), as desired. \(\square \)
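As a numerical sanity check of Theorem 3.1, the following sketch builds a random true EDM \(\overline{D}\), perturbs only the sensor-to-source entries through the rank-two matrix \(e_{n+1}\xi ^T + \xi e_{n+1}^T\) with \(\xi _{n+1} = 0\), forms its Moreau decomposition into P and Q, and counts the eigenvalues of \({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dag }(D_{T_c})\). It assumes the standard convention \({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dag }(D) = -\frac{1}{2}J_{n+1} D J_{n+1}\) for hollow D and the additive noise model \(D_{T_c} = \overline{D} + e_{n+1}\xi ^T + \xi e_{n+1}^T\); the sizes, seed, and noise level are arbitrary illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(1)
n, r = 12, 2                                      # n sensors in R^r, one source
X = rng.standard_normal((n + 1, r))               # rows 0..n-1: sensors, row n: source
sq = np.sum(X**2, axis=1)
D_bar = sq[:, None] + sq[None, :] - 2 * X @ X.T   # true EDM (squared distances)
np.fill_diagonal(D_bar, 0.0)

# Noise vector xi with xi_{n+1} = 0, placed in the last row and column.
xi = np.zeros(n + 1)
xi[:n] = 1e-3 * rng.standard_normal(n)
e_last = np.zeros(n + 1)
e_last[n] = 1.0
E = np.outer(e_last, xi) + np.outer(xi, e_last)   # trace 0, rank 2

# Moreau decomposition: P = projection onto S_+, Q = projection onto -S_+.
mu, U = np.linalg.eigh(E)
P = (U * np.maximum(mu, 0.0)) @ U.T
Q = (U * np.minimum(mu, 0.0)) @ U.T

# K^dagger(D_Tc) = -1/2 J (D_bar + E) J under the assumed convention.
J = np.eye(n + 1) - np.ones((n + 1, n + 1)) / (n + 1)
G = -0.5 * J @ (D_bar + E) @ J
lam = np.linalg.eigvalsh(G)
tol = 1e-10
num_neg = int(np.sum(lam < -tol))                 # Theorem 3.1: at most 1
num_nonzero = int(np.sum(np.abs(lam) > tol))      # Theorem 3.1: at most r + 2
print(num_neg, num_nonzero)
```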

The following corollary follows immediately.

Corollary 3.2

If \(||\varepsilon ||\) is sufficiently small, the optimal solution of NEDMinv is the rank r Eckart–Young projection of \({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dag }(D_{T_c})\).
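Corollary 3.2 can be illustrated in the same setting. The sketch below again assumes \({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dag }(D) = -\frac{1}{2}J D J\); the helper names `k_dagger` and `eckart_young` are ours. It keeps the r eigenvalues of largest magnitude of \({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dag }(D_{T_c})\), i.e. the Eckart–Young projection, and checks that for small noise the kept eigenvalues are all positive, so the projection is positive semidefinite. With noiseless data the same projection returns the true Gram matrix \(J_{n+1}XX^TJ_{n+1}\).

```python
import numpy as np

def k_dagger(D):
    """K^dagger(D) = -1/2 J D J for a hollow symmetric D (assumed convention)."""
    m = D.shape[0]
    J = np.eye(m) - np.ones((m, m)) / m
    return -0.5 * J @ D @ J

def eckart_young(A, r):
    """Best rank-r approximation of a symmetric A in the Frobenius norm:
    keep the r eigenvalues of largest magnitude and zero the others."""
    lam, U = np.linalg.eigh((A + A.T) / 2.0)
    keep = np.argsort(np.abs(lam))[::-1][:r]
    return (U[:, keep] * lam[keep]) @ U[:, keep].T, lam[keep]

rng = np.random.default_rng(0)
n, r = 12, 2
X = rng.standard_normal((n + 1, r))               # sensors (rows 0..n-1) and source
sq = np.sum(X**2, axis=1)
D_bar = sq[:, None] + sq[None, :] - 2 * X @ X.T   # true EDM
np.fill_diagonal(D_bar, 0.0)

D_Tc = D_bar.copy()
noise = 1e-3 * rng.standard_normal(n)             # perturb sensor-source entries only
D_Tc[:n, n] += noise
D_Tc[n, :n] += noise

G_hat, kept = eckart_young(k_dagger(D_Tc), r)     # rank-r Eckart-Young projection
min_eig = np.linalg.eigvalsh(G_hat).min()

# Noiseless check: the projection reproduces the true Gram matrix J X X^T J.
J = np.eye(n + 1) - np.ones((n + 1, n + 1)) / (n + 1)
G_true = J @ X @ X.T @ J
G0, _ = eckart_young(k_dagger(D_bar), r)
```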

3.1.2 Weighted, facially reduced NEDM

While we have discarded the information pertaining to the locations of the sensors in relaxing problem (3.2) to problem NEDM, we still make use of the distances between the sensors. Thus, to some extent, the locations of the sensors have an implicit effect on the optimal solution of NEDM and on the approximation NEDMinv from the previous section. In this section we take greater advantage of the known distances between the sensors by restricting NEDM to a face of \({{\mathcal {S}}^{n+1}_+\,}\) via facial reduction.

The true Gram matrix, \({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dagger }(\overline{D})\), belongs to the set,

Now the constraint \(X\succeq 0\) in NEDM may be refined to \(X \in {{\,\mathrm{face}\,}}(F_T,{{\mathcal {S}}} ^{n+1}_+)\), which is the following:

Moreover, we may obtain a closed form expression for \({{\,\mathrm{face}\,}}(F_T,{{\mathcal {S}}} ^{n+1}_+)\) in the form of an exposing vector. To see this, consider the spectral decomposition of the sensor Gram matrix,

Note that \(W_TW_T^T\) is an exposing vector for \({{\,\mathrm{face}\,}}(G_T,{{\mathcal {S}}} _{c,+}^n)\) since the following two conditions hold:

We now extend \(W_TW_T^T\) to an exposing vector for \({{\,\mathrm{face}\,}}(F_T,{{\mathcal {S}}} ^{n+1}_+)\).

Lemma 3.3

Let \({\overline{W}}_T:= [ W_T^T \quad 0]^T\) and let \(W := \overline{W}_T\overline{W}_T^T + ee^T\). Then,

- 1.

\(\overline{W}_T\overline{W}_T^T\) exposes \({{\,\mathrm{face}\,}}(F_T,{{\mathcal {S}}} _{c,+}^{n+1})\),

- 2.

W exposes \({{\,\mathrm{face}\,}}(F_T,{{\mathcal {S}}} ^{n+1}_+)\).

Proof

This statement is a special case of Theorem 4.13 of [16]. \(\square \)
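The construction in Lemma 3.3 is easy to reproduce numerically. The sketch below rests on our own assumptions: the sensors \(P_T\) are random and centered, \(G_T = P_TP_T^T\), and \(W_T\) is taken as an orthonormal basis of \({{\,\mathrm{null}\,}}(G_T) \cap \{e\}^{\perp }\), computed as the eigenvectors of \(G_T + ee^T\) with zero eigenvalue. It builds W, checks that W is orthogonal to the true Gram matrix of the sensors and the source (so that matrix lies in the exposed face), and extracts an orthonormal basis V of \({{\,\mathrm{null}\,}}(W)\), which has \(r+1\) columns.

```python
import numpy as np

rng = np.random.default_rng(1)
n, r = 8, 2
P_T = rng.standard_normal((n, r))
P_T -= P_T.mean(axis=0)                  # centered sensors, J_n P_T = P_T
c = rng.standard_normal(r)               # source location

e_n = np.ones(n)
G_T = P_T @ P_T.T                        # sensor Gram matrix, G_T e = 0
# Zero-eigenvalue eigenvectors of G_T + e e^T span null(G_T) intersected with {e}^perp.
lam, U = np.linalg.eigh(G_T + np.outer(e_n, e_n))
W_T = U[:, lam < 1e-9 * lam.max()]       # n x (n - r - 1)

# Exposing vector W = W_bar W_bar^T + e e^T from Lemma 3.3.
W_bar = np.vstack([W_T, np.zeros((1, W_T.shape[1]))])
e = np.ones(n + 1)
W = W_bar @ W_bar.T + np.outer(e, e)

# Range-space description: orthonormal basis V of null(W).
mu, Z = np.linalg.eigh(W)
V = Z[:, mu < 1e-9 * mu.max()]

# True Gram matrix of sensors and source, and its coordinates in the face.
X = np.vstack([P_T, c])
J = np.eye(n + 1) - np.outer(e, e) / (n + 1)
G_true = J @ X @ X.T @ J
R_true = V.T @ G_true @ V
```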

Note that \({{\,\mathrm{face}\,}}({{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dagger }(\overline{D}),{{\mathcal {S}}} ^{n+1}_+) \subsetneq {{\,\mathrm{face}\,}}(F_T, {{\mathcal {S}}} ^{n+1}_+)\) since,

Through W we have a ‘nullspace’ characterization of \({{\,\mathrm{face}\,}}(F_T,{{\mathcal {S}}} ^{n+1}_+)\). However, the ‘range space’ characterization is more useful in the context of semidefinite optimization, as it leads to dimension reduction, numerical stability, and strong duality. To this end, we consider any \((n+1)\times (r+1)\) matrix whose columns form a basis for \({{\,\mathrm{null}\,}}(W)\). One such choice is,

To verify that the columns of V indeed form a basis for \({{\,\mathrm{null}\,}}(W)\), we first observe that \({{\,\mathrm{{rank}}\,}}(V) = r + 1\) and secondly we have,

It follows that

Thus we may replace the variable X in NEDMP by \(VRV^T\) for \(R\in {{\mathcal {S}}} ^{r+1}_+\). To simplify the notation, we define the composite map \({{\,\mathrm{{{\mathcal {K}}} }\,}}_V := {{\,\mathrm{{{\mathcal {K}}} }\,}}(V\cdot V^T)\). Moreover, we introduce a weight matrix to the objective and obtain the weighted facially reduced problem, FNEDM,

Here \(H_{\alpha } := \alpha H_T +H_c\) and \(\alpha \) is positive. Let us make a few comments regarding this problem. When \(\alpha = 1\) the weight matrix has no effect and FNEDM reduces to NEDMP. On the other hand, when \(\alpha \) is very large, the solution has to satisfy the distance constraints for the sensors more accurately, and in this case FNEDM approximates (3.2). In fact, in Theorem 3.9 we prove that the solution to FNEDM approaches that of (3.2) as \(\alpha \) increases.

We begin our analysis by proving that \(V_{\alpha }\) is attained.

Lemma 3.4

Let \(\alpha >0\). Then

- 1.

\({{\,\mathrm{null}\,}}(H_{\alpha } \circ {{\,\mathrm{{{\mathcal {K}}} }\,}}_V) = \{0\}\),

- 2.

\(f(R,\alpha )\) is strictly convex and coercive,

- 3.

the problem FNEDM admits a minimizer.

Proof

For Item 1, under the assumption that \(\alpha >0\), we have \(H_{\alpha } \circ {{\,\mathrm{{{\mathcal {K}}} }\,}}_V(R) = 0\) if, and only if, \({{\,\mathrm{{{\mathcal {K}}} }\,}}_V(R) = 0\). Recall that \({{\,\mathrm{{{\mathcal {K}}} }\,}}\) is one-to-one between the centered and hollow subspaces and \({{\,\mathrm{{{\mathcal {K}}} }\,}}(0) = 0\). By construction, \({{\,\mathrm{range}\,}}(V\cdot V^T)\) is a subset of the centered matrices. Hence \(H_{\alpha } \circ {{\,\mathrm{{{\mathcal {K}}} }\,}}_V(R)=0\) if, and only if, \(VRV^T = 0\). Since V is full column rank, \(VRV^T = 0\) if, and only if, \(R=0\), as desired.

Now we turn to Item 2. The function \(f(R,\alpha )\) is quadratic with a positive semidefinite second derivative. Moreover, by Item 1, the second derivative is positive definite. Therefore \(f(R,\alpha )\) is strictly convex and coercive.

Finally, the feasible set of FNEDM is closed. Combining this observation with coercivity of the objective, from Item 2, we obtain Item 3. \(\square \)

We conclude this subsection by deriving the optimality conditions for the convex relaxation of FNEDM, which is obtained by dropping the rank constraint.

Lemma 3.5

The matrix \(R\in {{{\mathcal {S}}} ^{r+1}_+\,}\) is optimal for the relaxation of (3.12) obtained by ignoring the rank constraint if, and only if,

In addition, R is optimal for (3.12) if \({{\,\mathrm{{rank}}\,}}R \le r\).

Proof

From the Pshenichnyi–Rockafellar conditions, R is optimal if, and only if, \(\nabla f(R) \in ({{{\mathcal {S}}} ^{r+1}_+\,}- R)^+\), the nonnegative polar cone. This condition holds if, and only if, for all \(X\in {{{\mathcal {S}}} ^{r+1}_+\,}\) and \(\alpha >0\), we have

which implies that \(\alpha \langle \nabla f(R), X\rangle \ge \langle \nabla f(R), R\rangle \) for every \(\alpha > 0\). Since \(\alpha \) may be arbitrarily large we get that \(\langle \nabla f(R), X\rangle \ge 0\) for all \(X \in {{{\mathcal {S}}} ^{r+1}_+\,}\). Therefore, we conclude that \(\nabla f(R) \in ({{{\mathcal {S}}} ^{r+1}_+\,})^+ = {{{\mathcal {S}}} ^{r+1}_+\,}\). Moreover, setting \(X=0\), we get,

hence orthogonality holds. \(\square \)
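The conditions of Lemma 3.5, \(\nabla f(R) \succeq 0\) and \(\langle \nabla f(R), R\rangle = 0\) at an optimal \(R \succeq 0\), can be checked on a toy objective whose constrained minimizer is known in closed form. As an assumption chosen only for illustration, the sketch uses \(f(R) = \Vert R - C\Vert _F^2\) for a symmetric indefinite C; its minimizer over the positive semidefinite cone is obtained by clipping the negative eigenvalues of C.

```python
import numpy as np

rng = np.random.default_rng(2)
k = 4
M = rng.standard_normal((k, k))
C = (M + M.T) / 2.0                    # symmetric, generally indefinite

# Toy objective f(R) = ||R - C||_F^2; its minimizer over S^k_+ is the
# projection of C onto the PSD cone (negative eigenvalues set to zero).
lam, U = np.linalg.eigh(C)
R = (U * np.maximum(lam, 0.0)) @ U.T
grad = 2.0 * (R - C)                   # gradient of f at R

grad_eigs = np.linalg.eigvalsh(grad)   # should all be >= 0
complementarity = np.sum(grad * R)     # <grad f(R), R>, should be 0
```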

3.1.3 Analysis of FNEDM

In this section we show that the optimal value of FNEDM is a lower bound for the optimal value of SLS. Moreover, this lower bound becomes exact as \(\alpha \) is increased to \(+\infty \).

In the \(\mathbf{SLS }\) model, the distances between the towers are fixed, while in the \(\mathbf{NEDM }\,\) model (3.4), the distances between towers are free. The facial reduction model allows the distances between the towers to change but the towers can still be transformed back to their original positions by a square matrix \(Q \in \mathbb {R}^{r \times r}\). Note that Q does not have to be orthonormal, so it is possible that \(QQ^T \ne I\).

Theorem 3.6

Let \(P_T\) be as above, V as in (3.10), and let P be a centered matrix with,

Then there exists a matrix \(Q \in \mathbb {R}^{r\times r}\) such that \(P_TQ = J_nT\) if, and only if,

Proof

Since P is centered,

Substituting into the equation \(P_TQ = J_nT\) we get,

which yields the following expression for P,

Now by (3.11) we have,

Applying (3.13) we verify that the last statement in the equivalence holds,

as desired.

For the other direction, let

and recall that \(V = J_{n+1}V_1\). Suppose \(P P^T \) belongs to the face \(V {{\mathcal {S}}} _+^{r+1}V^T\). Then \(P = J_{n+1}V_1 M\) for some \(M \in \mathbb {R}^{(r+1)\times r}\). We show that if \(Q \in \mathbb {R}^{r\times r}\) denotes the first r rows of M, then \(P_TQ = J_nT\). To this end, let \(\bar{J} = [J_n \quad 0]\) and observe that \(\bar{J} P = J_n T\). Moreover, since \(\bar{J}\) is centered, \(\bar{J} J_{n+1} = \bar{J}\). Then,

as desired. \(\square \)

Theorem 3.6 indicates that, when using the facial reduction model FNEDM, we can use a least squares approach to recover the original positions of the sensors exactly. This approach will be discussed in Sect. 3.2 along with the Procrustes approach.

In the following, we show that the optimal value of the problem in (3.12) is not greater than the optimal value of the \(\mathbf{SLS }\,\) estimates (2.2) or (3.2). We also prove that the solution to FNEDM approaches that of (3.2) as \(\alpha \) increases.

Lemma 3.7

Consider the problem,

Then \(V_T\) is finite and satisfies \(V_T=V_S\).

Proof

That \(V_T\) is finite follows from arguments analogous to those used in Lemma 3.4.

For the equality claim, it is clear that \(V_S \le V_T\). To show that \(V_S \ge V_T\), consider X that is feasible for (3.2). First we show that X may be assumed to be centered. To see this, consider \(\hat{X} = J_nXJ_n\). Note that \(\hat{X}\) is the orthogonal projection of X onto \({{\mathcal {S}}} _c\) and it can be verified that \(\hat{X} = {{\,\mathrm{{{\mathcal {K}}} }\,}}^{\dagger } {{\,\mathrm{{{\mathcal {K}}} }\,}}(X)\). Now it is clear that \(\hat{X} \succeq 0\) and that \({{\,\mathrm{{{\mathcal {K}}} }\,}}(\hat{X}) = {{\,\mathrm{{{\mathcal {K}}} }\,}}(X)\). Moreover, since the rank of a product of matrices does not exceed the rank of any of its factors, we have \({{\,\mathrm{{rank}}\,}}(\hat{X}) \le {{\,\mathrm{{rank}}\,}}(X)\). Therefore, \(\hat{X}\) is also feasible for (3.2) and provides the same objective value as X.

Now there exist \(T \in \mathbb {R}^{n\times r}\) and \(c \in \mathbb {R}^r\) such that,

Then, from the tower constraint of (3.2) we get the implications,

Thus, there exists an orthogonal Q such that \(J_nT = P_TQ\). By Theorem 3.6 we have \(X \in V {{\mathcal {S}}} _+^{r+1} V^T\) and it follows that \(V_S \ge V_T\). \(\square \)

Lemma 3.8

Let \(0<\alpha _1<\alpha _2\). Then,

Moreover, the first inequality is strict if \(D_{T_c}\) is not an EDM with embedding dimension r.

Proof

For the first inequality, let \(R \succeq 0 \) be such that \({{\,\mathrm{{rank}}\,}}(R) \le r\). Then,

Note that the inequality is strict if, and only if, \(\Vert H_T \circ ({{\,\mathrm{{{\mathcal {K}}} }\,}}_V(R) - D_{T_c} )\Vert \) is positive. This holds for all R if \(D_{T_c}\) is not an EDM with embedding dimension r.

For the second inequality, we first observe that,

Now \(V_T\) and \(V_{\alpha _2}\) are both optimal values of \(f(R,\alpha _2)\) over their respective domains, but the domain for \(V_T\) is smaller than that of \(V_{\alpha _2}\). Hence, the second inequality holds. \(\square \)

Theorem 3.9

For any \(\alpha > 0\), let \(R_{\alpha }\) denote the minimizer of FNEDM. Let \(\{\alpha _{\ell }\}_{\ell \in \mathbb {N}} \subset \mathbb {R}_{++}\) be an increasing sequence with \(\alpha _{\ell } \rightarrow +\infty \) such that \(R_{\alpha _{\ell }} \rightarrow \bar{R}\) for some \(\bar{R} \in {{\mathcal {S}}} ^{r+1}\). Then \(V_{\alpha } \uparrow V_T\) and \(\bar{R}\) is a minimizer of (3.14).

Proof

First we note that \(R_{\alpha }\) is well defined by Lemma 3.4. Now, from Lemma 3.8, we have that \(V_{\alpha }\) is monotonically increasing and bounded above by \(V_T\). Hence there exists \(V^*\) such that,

Next, we show that \(\bar{R}\) is feasible for (3.14). Since \({{\mathcal {S}}} ^{r+1}_+\) is closed and the rank function is lower semicontinuous, we have \({{\,\mathrm{{rank}}\,}}(\bar{R}) \le r\) and \(\bar{R} \succeq 0\). Moreover, for every \(\ell \in \mathbb {N}\),

Rearranging and taking the limit we get,

The last equality follows from the continuity of h. Since the limit in (3.16) exists we get,

by continuity. Thus \(\bar{R}\) is feasible for (3.14) and we have \(h(\bar{R}) \ge V_T\). On the other hand, from (3.16) we have \(h(\bar{R}) \le V^*\). Combining these observations with (3.15) we get,

Now equality holds throughout (3.18) and the desired results are immediate. \(\square \)

3.1.4 Solving FNEDM

The solution set of the unconstrained version of (3.12) can be stated in terms of the Moore–Penrose generalized inverse of \(H_{\alpha } \circ {{\,\mathrm{{{\mathcal {K}}} }\,}}_V\), denoted by \((H_{\alpha } \circ {{\,\mathrm{{{\mathcal {K}}} }\,}}_V)^{\dagger }\). Indeed, the solution to the least squares problem is,

In this subsection we explore the relationship between the optimal solution of FNEDM and the eigenvalues of \(R_{LS}\). In general the Moore–Penrose inverse may be difficult to obtain; however, the following result implies that \(R_{LS}\) may be derived efficiently and that it is the unique minimizer of f.

Lemma 3.10

Let \(R_{LS}\) be as in (3.19). Then, \(R_{LS}\) is the unique minimizer of f and

Proof

That \(R_{LS}\) is the unique minimizer of f follows from strict convexity as in Item 2 of Lemma 3.4. Moreover, by Item 1 of Lemma 3.4, we have \({{\,\mathrm{null}\,}}(H_{\alpha } \circ {{\,\mathrm{{{\mathcal {K}}} }\,}}_V) = \{0\}\) which implies that \((H_{\alpha } \circ {{\,\mathrm{{{\mathcal {K}}} }\,}}_V)^{\dagger }\) is the left inverse. The desired expression for \(R_{LS}\) is obtained by substituting the left inverse into (3.19). \(\square \)

Note that \((H_{\alpha } \circ {{\,\mathrm{{{\mathcal {K}}} }\,}}_V)^*(H_{\alpha } \circ {{\,\mathrm{{{\mathcal {K}}} }\,}}_V)\) is a linear operator on \({{\mathcal {S}}} ^{r+1}\), a space of dimension \((r+1)(r+2)/2\), and therefore admits a \((r+1)(r+2)/2 \times (r+1)(r+2)/2\) matrix representation. Thus if r is small, as in many applications, the inverse of \((H_{\alpha } \circ {{\,\mathrm{{{\mathcal {K}}} }\,}}_V)^*(H_{\alpha } \circ {{\,\mathrm{{{\mathcal {K}}} }\,}}_V)\), and consequently \(R_{LS}\), may be obtained efficiently.
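The following sketch computes \(R_{LS}\) for a small noiseless instance by assembling the matrix representation of \(H_{\alpha }\circ {{\,\mathrm{{{\mathcal {K}}} }\,}}_V\) column by column over a basis of \({{\mathcal {S}}} ^{r+1}\) and solving the least squares problem with `numpy.linalg.lstsq`. Several choices are ours and are assumptions: \({{\,\mathrm{{{\mathcal {K}}} }\,}}(G) = {{\,\mathrm{{diag}}\,}}(G)e^T + e\,{{\,\mathrm{{diag}}\,}}(G)^T - 2G\); \(H_T\) and \(H_c\) are the 0/1 indicators of the sensor-sensor and sensor-source entries; and V is an orthonormal basis of the range of \(J_{n+1}\begin{bmatrix} P_T & 0 \\ 0 & 1 \end{bmatrix}\), which spans \({{\,\mathrm{null}\,}}(W)\) when \(P_T\) is centered but is not claimed to coincide with the choice (3.10). The system has only \((r+1)(r+2)/2\) unknowns, and with exact data \(R_{LS}\) equals the coordinates \(V^TGV\) of the true Gram matrix G.

```python
import numpy as np

def K(G):
    """K(G) = diag(G) e^T + e diag(G)^T - 2G (assumed convention)."""
    d = np.diag(G)
    return d[:, None] + d[None, :] - 2.0 * G

rng = np.random.default_rng(3)
n, r, alpha = 8, 2, 10.0
P_T = rng.standard_normal((n, r))
P_T -= P_T.mean(axis=0)                      # centered sensors
c = rng.standard_normal(r)                   # source
X = np.vstack([P_T, c])
D = K(X @ X.T)                               # noiseless D_{T_c}

N1 = n + 1
J = np.eye(N1) - np.ones((N1, N1)) / N1
V1 = np.zeros((N1, r + 1))
V1[:n, :r] = P_T
V1[n, r] = 1.0
V, _ = np.linalg.qr(J @ V1)                  # orthonormal (n+1) x (r+1) basis

H = np.zeros((N1, N1))
H[:n, :n] = alpha                            # alpha * H_T (sensor-sensor block)
H[:n, n] = 1.0                               # H_c (sensor-source entries)
H[n, :n] = 1.0

# Basis of S^{r+1}: symmetric matrices with ones at (i, j) and (j, i), i <= j.
basis = []
for i in range(r + 1):
    for j in range(i, r + 1):
        B = np.zeros((r + 1, r + 1))
        B[i, j] = B[j, i] = 1.0
        basis.append(B)

# Columns of A are vec(H_alpha o K_V(B)) for each basis element B.
A = np.column_stack([(H * K(V @ B @ V.T)).ravel() for B in basis])
b = (H * D).ravel()
coef, *_ = np.linalg.lstsq(A, b, rcond=None)
R_LS = sum(ck * B for ck, B in zip(coef, basis))

G_true = J @ X @ X.T @ J
R_true = V.T @ G_true @ V                    # coordinates of the true Gram matrix
```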

We consider three cases regarding the eigenvalues of \(R_{LS}\), each of which corresponds to a different approach to solving FNEDM.

Case I: \(R_{LS} \succeq 0 \) and \({{\,\mathrm{{rank}}\,}}(R_{LS}) \le r\).

Case II: \(R_{LS} \notin {{\mathcal {S}}} ^{r+1}_+\).

Case III: \(R_{LS} \succ 0\).
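A small helper that decides which of the three cases applies to a computed \(R_{LS}\) could look as follows. The function name `fnedm_case` is hypothetical, and because rank and sign tests need a tolerance in floating point, the default `tol` is an arbitrary choice.

```python
import numpy as np

def fnedm_case(R_LS, r, tol=1e-10):
    """Return 'I', 'II' or 'III' for a symmetric (r+1) x (r+1) matrix R_LS."""
    lam = np.linalg.eigvalsh((R_LS + R_LS.T) / 2.0)
    if lam.min() < -tol:
        return "II"                  # not positive semidefinite
    if np.sum(lam > tol) <= r:
        return "I"                   # PSD with rank at most r
    return "III"                     # PSD with rank r + 1, i.e. positive definite

print(fnedm_case(np.diag([3.0, 1.0, 0.0]), r=2))   # -> I
```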

In the best scenario, Case I, we have that \(R_{LS}\) is the unique minimizer of FNEDM. In this case FNEDM reduces to an unconstrained convex optimization problem. Moreover, we have a closed form solution for the minimizer, \(R_{LS}\). In Case II, the minimizer of FNEDM may also be obtained through a convex relaxation, as indicated by the following result.

Theorem 3.11

Let \(R^{\star }\) denote the minimizer of the relaxation of FNEDM where the rank constraint is removed. If \(R_{LS} \notin {{\mathcal {S}}} ^{r+1}_+\), then \(R^{\star }\) is a minimizer of FNEDM.

Proof

Let \(R^{\star }\) denote the optimal solution of \(\mathbf{FNEDM }\,\) without the rank constraint. Note that \(R^{\star }\) exists by arguments analogous to those in Lemma 3.4. If \({{\,\mathrm{{rank}}\,}}(R^{\star }) \le r\), then clearly \(R^{\star }\) is a minimizer of FNEDM. Thus we may assume that \({{\,\mathrm{{rank}}\,}}(R^{\star }) = r+1\), i.e. \(R^{\star } \succ 0\).

Since \(R_{LS}\) is the unique minimizer of f, we have \(f(R_{LS}) < f(R^{\star })\). Moreover, by strict convexity of f, every matrix R in the relative interior of the line segment \([R_{LS},R^{\star }]\) satisfies \(f(R) < f(R^{\star })\). Now since \(R^{\star } \succ 0\) there exists \(\bar{R} \in {{\,\mathrm{{relint}}\,}}[R_{LS},R^{\star }] \cap {{\mathcal {S}}} ^{r+1}_+\). Then, \(\bar{R}\) is feasible for the relaxation of FNEDM where the rank constraint is removed. However, \(f(\bar{R}) < f(R^{\star })\), contradicting the optimality of \(R^{\star }\). \(\square \)

In Case III we are motivated by the primal-dual approach of [30, 31] and the penalty approach of [19, 30, 31]. Let \(h = [1,\cdots , \alpha ]^T\). We notice that \(H_{\alpha }\circ Y = h h^T \circ Y = {{\,\mathrm{{Diag}}\,}}(h) Y {{\,\mathrm{{Diag}}\,}}(h)\) if \({{\,\mathrm{{diag}}\,}}(Y) = 0\). Letting \(T = {{\,\mathrm{{Diag}}\,}}(h)\), it is easy to see that (3.12) is equivalent to the problem:

As in [30, 31], we define \(\mathcal {K}_+^{n+1}(r) := \{Y \in {{\mathcal {S}}} ^{n+1} | -J_{n+1} Y J_{n+1} \succeq 0,\; {{\,\mathrm{{rank}}\,}}(J_{n+1} Y J_{n+1} ) \le r \}\), and let \({{\mathcal {B}}\,}(Y) = 0\) represent the linear constraints \({{\,\mathrm{{diag}}\,}}(Y) = 0\) and \(\langle J_{n+1} Y J_{n+1}, W\rangle = 0\). Then (3.20) may be written as,

If \({{\mathcal {B}}\,}\) consists only of the diagonal constraint and \(T = I\), then (3.21) is exactly the problem considered in [30, 31], where a sufficient condition for strong duality was presented. In the subsequent results, we present an analogous dual problem for the general constraint \({{\mathcal {B}}\,}(Y) = 0\).

Lemma 3.12

The Lagrangian dual of (3.21) is

where \(\mathcal {K}_T^{n+1}(r) = \{Y \in {{\mathcal {S}}} ^{n+1} | -Y \succeq 0 \;\text{ on } \; \{Te\}^{\perp },\; {{\,\mathrm{{rank}}\,}}(J_{n+1} T^{-1} Y T^{-1} J_{n+1} ) \le r \}\)

Proof

The Lagrangian function \(L: {{\mathcal {S}}} ^{n+1} \times \mathbb {R}^{n+2} \rightarrow \mathbb {R}\) of (3.21) is,

We then write the dual objective function \(\theta : \mathbb {R}^{n+2} \rightarrow \mathbb {R}\) as,

To pass from (3.26) to (3.27), we need to prove the following identity:

To this end, consider any matrix \(X \in {{\mathcal {S}}} ^{n+1}\) and let \(\Pi (X)\) be a nearest point in \(\mathcal {K}_T^{n+1}(r)\) to X. Since \(\mathcal {K}_T^{n+1}(r)\) is a cone, the ray \(\theta \Pi (X)\), \(\theta \ge 0\), is contained in the set \(\mathcal {K}_T^{n+1}(r)\). Moreover, this ray is convex and \(\Pi (X)\) is the nearest point to X on this ray. Now we can use orthogonality: \(\Pi (X) - X\) is orthogonal to \(\Pi (X)-0\). The desired identity then follows:

The Lagrangian dual problem is then defined by,

as desired. \(\square \)
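The orthogonality step in the proof holds for a nearest point in any closed cone. As a concrete check, the sketch below uses the simpler closed cone \(\{Y \succeq 0 : {{\,\mathrm{{rank}}\,}}(Y) \le r\}\) in place of \(\mathcal {K}_T^{n+1}(r)\) itself (our substitution, for illustration only); its nearest point keeps the r largest eigenvalues, clipped at zero. The code verifies that \(\Pi (X) - X\) is orthogonal to \(\Pi (X)\), which yields \(\Vert X\Vert ^2 = \Vert \Pi (X)\Vert ^2 + \Vert X - \Pi (X)\Vert ^2\).

```python
import numpy as np

def project_psd_rank(X, r):
    """Nearest point to symmetric X in the closed cone {Y >= 0, rank(Y) <= r}:
    keep the r largest eigenvalues, clipped at zero."""
    lam, U = np.linalg.eigh((X + X.T) / 2.0)     # eigenvalues in ascending order
    lam_top = np.maximum(lam[-r:], 0.0)
    U_top = U[:, -r:]
    return (U_top * lam_top) @ U_top.T

rng = np.random.default_rng(4)
M = rng.standard_normal((6, 6))
X = (M + M.T) / 2.0
PX = project_psd_rank(X, 2)
inner = np.sum((PX - X) * PX)                     # <Pi(X) - X, Pi(X)>
pyth = np.sum(X**2) - np.sum(PX**2) - np.sum((X - PX)**2)
```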

In [30, 31] it is shown that the Lagrangian dual has compact level sets and therefore the optimal value is finite and attained. The dual problem (3.28) can be solved by the semi-smooth Newton approach proposed in [30].

In [30, 31], the authors proposed a rank majorization approach where strong duality is guaranteed if the penalty function goes to zero. The approach can be readily modified to replace the diagonal constraint by the linear constraint \({{\mathcal {B}}\,}\) and to include the diagonal weight matrix T. The strong duality result and the global optimality condition can also be carried over to our problem (3.21). The drawback of this approach is its slow convergence when n is large. Therefore, in our facial reduction model we prefer to stay in \({{\mathcal {S}}} ^{r+1}\) rather than \({{\mathcal {S}}} ^{n+1}\), since the dimension is lower. Hence we develop a rank majorization approach in \({{\mathcal {S}}} ^{r+1}\) as follows:

To penalize rank, we consider the concave penalty function,

Note that p is non-negative over the positive semidefinite matrices and

Hence, p is an appropriate penalty function for the rank constraint of FNEDM. Now we consider the penalized version of FNEDM,

where \(\gamma \) is a positive constant. The objective is a difference of convex functions and the feasible set is convex. The literature on this type of optimization problem is extensive and the theory well established. In particular, the well-known majorization approach guarantees convergence to a matrix satisfying the first order necessary conditions for PNEDM, i.e. a stationary point. See for instance [35, 36].

The majorization approach is outlined below in Algorithm 3.1. Central to the approach is the observation that p is majorized by its linear approximation, since it is concave. In the algorithm, \(\partial p(R)\) denotes the subdifferential of p at R. Thus at every iterate, the convex subproblem (3.31) is solved to obtain the next iterate.
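Since the penalty function is not reproduced here, the sketch below assumes a concave penalty of the form used in the majorized penalty approach of [19], \(p(R) = {{\,\mathrm{trace}\,}}(R) - \sum _{i=1}^{r}\lambda _i(R)\), which is nonnegative on the positive semidefinite cone and vanishes there exactly when \({{\,\mathrm{{rank}}\,}}(R)\le r\). To keep the convex subproblem in closed form it also replaces f by the toy objective \(\Vert R - C\Vert _F^2\). At each iterate, p is replaced by its linear majorant built from the supergradient \(I - U_rU_r^T\), and the subproblem reduces to a projection onto the positive semidefinite cone. This illustrates the majorization idea; it is not Algorithm 3.1 itself, and all function names are ours.

```python
import numpy as np

def penalty(R, r):
    """Assumed penalty p(R) = trace(R) - sum of the r largest eigenvalues."""
    lam = np.linalg.eigvalsh(R)                  # ascending order
    return lam.sum() - lam[-r:].sum()

def penalty_supergradient(R, r):
    """I - U_r U_r^T, where U_r holds eigenvectors of the r largest eigenvalues."""
    _, U = np.linalg.eigh(R)
    U_r = U[:, -r:]
    return np.eye(R.shape[0]) - U_r @ U_r.T

def proj_psd(S):
    """Projection onto the positive semidefinite cone (clip eigenvalues)."""
    lam, U = np.linalg.eigh((S + S.T) / 2.0)
    return (U * np.maximum(lam, 0.0)) @ U.T

def majorize(C, r, gamma, iters=30):
    """Majorization for min ||R - C||_F^2 + gamma * p(R) subject to R >= 0."""
    def objective(R):
        return np.sum((R - C) ** 2) + gamma * penalty(R, r)

    R = proj_psd(C)
    history = [objective(R)]
    for _ in range(iters):
        G = penalty_supergradient(R, r)
        # Convex subproblem: min ||R - C||^2 + gamma * <G, R> over R >= 0,
        # i.e. the PSD projection of C - (gamma / 2) * G.
        R = proj_psd(C - 0.5 * gamma * G)
        history.append(objective(R))
    return R, history

rng = np.random.default_rng(5)
M = rng.standard_normal((5, 5))
C = M @ M.T
r = 2
gamma = 2.0 * np.linalg.eigvalsh(C).max() + 1.0  # large enough to force rank <= r
R, history = majorize(C, r, gamma)
```

Each step cannot increase the penalized objective, since the new iterate minimizes a majorant that agrees with the objective at the current iterate.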

Theorem 3.13

Suppose Algorithm 3.1 converges to a stationary point \(\bar{R}\), and that \({{\,\mathrm{{rank}}\,}}(\bar{R}) = r\). Then \(\bar{R}\) is a global minimizer of FNEDM restricted to \({{\,\mathrm{face}\,}}(\bar{R})\).

Proof

By [35, 36], the stationary point \(\bar{R}\) satisfies the following condition:

Under the assumption \({{\,\mathrm{{rank}}\,}}(\bar{R}) = r\), we have \(\bar{R} = V \begin{bmatrix} \Lambda&0 \\ 0&0 \end{bmatrix} V^T\) where \(\Lambda = {{\,\mathrm{{Diag}}\,}}(\lambda _1, \ldots ,\lambda _r)\) with \(\lambda _1 \ge \ldots \ge \lambda _r > 0\) being the positive eigenvalues of \(\bar{R}\) and \(V^T V = I\). Let \(V = [V_1, V_2]\) with the columns of \(V_1\) being the eigenvectors corresponding to \(\lambda _1,\ldots ,\lambda _r\). We have

and

Therefore we have \(\nabla f(\bar{R}, \alpha ) = V \begin{bmatrix} 0&0 \\ 0&\gamma - t \end{bmatrix} V^T\).

Due to the convexity of \(f(R, \alpha )\), for any \(\hat{R} \in {{\,\mathrm{face}\,}}(\bar{R})\), we have

Hence our claim is proved. \(\square \)

3.1.5 Identifying outliers using \(l_1\) minimization and facial reduction

In this section, we address the issue of unequal noise, where a few distance measurements are outliers, i.e. much more inaccurate than the others. We use \(l_1\) norm minimization to try to identify the outliers, and remove them to obtain a more stable problem. We assume that many more towers are available than are necessary, so that after the removal of a few outliers the remaining towers still satisfy Assumption 2.1.

Problem (3.12) is equivalent to minimizing the residual of an overdetermined linear system in the domain of an SDP cone. Let \(z := {{\,\mathrm{{svec}}\,}}(R)\) for \(R \in {{\mathcal {S}}} ^{r+1}\). Abusing our previous notation, let \(b := {{\,\mathrm{{svec}}\,}}(H_{\alpha } \circ D_{T_c})\) and let A denote the matrix representation of \(H_{\alpha }\circ {{\,\mathrm{{{\mathcal {K}}} }\,}}_V\). Then \(z \in \mathbb {R}^{(r+1)(r+2)/2}\) and \(b \in \mathbb {R}^{n(n+1)/2}\). In practice, n is much larger than \(r + 1\), so A will have more rows than columns. In other words, we have an overdetermined system. Under this new notation, problem (3.12) is equivalent to,

To motivate the compressed sensing approach, suppose that only the outlier measurements are noisy and that the remaining measurements are accurate. If \(\bar{z}\) denotes the true solution, then \(A\bar{z} - b\) is sparse and we consider the popular \(l_1\) norm minimization problem,

Aside from the positive semidefinite constraint, (3.34) is a compressed sensing problem. To see this, note that there exists z with \(\delta + b = Az\) if, and only if, \(\delta + b \in {{\,\mathrm{range}\,}}(A)\). Let N be a matrix such that \({{\,\mathrm{range}\,}}(A) = {{\,\mathrm{null}\,}}(N)\). Then \(\delta + b \in {{\,\mathrm{range}\,}}(A)\) if, and only if, \(\delta + b \in {{\,\mathrm{null}\,}}(N)\). Therefore the constraint \(Az - b = \delta \) is equivalent to \(N \delta = -\,Nb\), which is exactly the compressed sensing constraint.
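The reduction above needs a matrix N with \({{\,\mathrm{null}\,}}(N) = {{\,\mathrm{range}\,}}(A)\). One way to obtain it, sketched here for a random full-column-rank A standing in for the matrix representation of \(H_{\alpha }\circ {{\,\mathrm{{{\mathcal {K}}} }\,}}_V\), is to take the rows of N as an orthonormal basis of \({{\,\mathrm{range}\,}}(A)^{\perp }\), read off from a full singular value decomposition.

```python
import numpy as np

rng = np.random.default_rng(6)
m, k = 20, 6                              # more rows than columns
A = rng.standard_normal((m, k))           # full column rank with probability 1
U, s, Vt = np.linalg.svd(A)               # full SVD: U is m x m
N = U[:, k:].T                            # (m - k) x m, null(N) = range(A)

b = rng.standard_normal(m)
z = rng.standard_normal(k)
delta = A @ z - b                         # residual of some z satisfies N delta = -N b
# Conversely, delta + b lies in range(A), so it is reproduced by a least squares fit.
w, *_ = np.linalg.lstsq(A, delta + b, rcond=None)
```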

The problem (3.34) differs from the classical compressed sensing model in the positive semidefinite constraint. However, in our numerical tests, we have found that adding the positive semidefinite constraint greatly increases the success rate in identifying outliers. In compressed sensing, if the matrix N satisfies the so-called restricted isometry property, then the sparse signal can be recovered exactly [7, Theorem 1.1]. However, there are currently no practical algorithms for checking whether a given matrix satisfies the restricted isometry property. If \(\delta _0\) is the solution to (3.34) and most of the elements of \(\delta _0\) are 0, then the nonzero elements indicate the outlier measurements.

Thus far, we have assumed that most of the measurements in b are exact and a few have large errors. Now let us revert to the original assumption of this section: that most elements of b are slightly inaccurate and a few elements are very inaccurate. If the positive semidefinite constraint is ignored, then the identification of outliers is guaranteed to be accurate, provided that N satisfies the restricted isometry property. To be specific, if \(\delta ^{\#}\) represents the optimal solution of (3.34) without the positive semidefinite constraint, then \(||\delta ^{\#} - \delta _0||_{l_2} \le C_S \cdot \epsilon \), where \(\epsilon \) bounds the size of the small errors and \(C_S\) is a modest constant [6, 7]. The specifics for our outlier-detection algorithm are stated in Algorithm 3.2.
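Without the positive semidefinite constraint, the \(l_1\) problem is a linear program. The sketch below solves \(\min _z \Vert Az - b\Vert _1\) with `scipy.optimize.linprog` (HiGHS backend) for a random overdetermined A standing in for the EDM operator, with exact data except for three gross errors, and flags as outliers the entries of the residual \(\delta = Az - b\) that are clearly nonzero. The problem sizes, outlier magnitudes, detection threshold, and the function name `l1_residual_fit` are illustrative assumptions; this is not Algorithm 3.2.

```python
import numpy as np
from scipy.optimize import linprog

def l1_residual_fit(A, b):
    """Solve min_z ||A z - b||_1 as an LP in the variables (z, t):
    minimize sum(t) subject to -t <= A z - b <= t, t >= 0."""
    m, k = A.shape
    cost = np.concatenate([np.zeros(k), np.ones(m)])
    I = np.eye(m)
    A_ub = np.block([[A, -I], [-A, -I]])     # A z - t <= b and -A z - t <= -b
    b_ub = np.concatenate([b, -b])
    bounds = [(None, None)] * k + [(0, None)] * m
    res = linprog(cost, A_ub=A_ub, b_ub=b_ub, bounds=bounds, method="highs")
    if res.status != 0:
        raise RuntimeError(res.message)
    z = res.x[:k]
    return z, A @ z - b

rng = np.random.default_rng(7)
m, k = 60, 5
A = rng.standard_normal((m, k))
z_true = rng.standard_normal(k)
b = A @ z_true
outliers = np.array([3, 17, 42])
b[outliers] += np.array([8.0, -6.0, 10.0])   # a few gross errors
z_hat, delta = l1_residual_fit(A, b)
detected = np.flatnonzero(np.abs(delta) > 1e-3)
```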

3.2 Recovering source position from Gram matrix

After finding the EDM from our data, we need to rotate the sensors back to their original positions in order to recover the position of the source. This is done by solving a Procrustes problem. That is, suppose that the final EDM, the corresponding Gram matrix, and the points, appropriately partitioned, are,

Assuming \(\bar{P}_f\) and the original data \(P_T\) are both centered, we now have two approaches.

The first approach solves the following Procrustes problem using [20, Algorithm 12.4.1]:

The optimal solution can be found explicitly from the singular value decomposition of \(\bar{P}_f^TP_T\). If \(\bar{P}_f^T P_T=:U_f\Sigma _f V_f^T\), then the optimal solution to (3.36) is \(Q^*:=U_fV_f^T\). The recovered position of the source is then \(\boxed {p_c^T = p_f^T Q^*}\).
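A minimal sketch of the Procrustes step follows. It assumes synthetic data in which the sensor block \(\bar{P}_f\) of the computed points differs from the centered sensors \(P_T\) by an unknown orthogonal matrix; the function name `procrustes` is ours. In the original (uncentered) frame, the sensor centroid would also have to be added back to the recovered source.

```python
import numpy as np

def procrustes(P_f, P_T):
    """Orthogonal Q minimizing ||P_f Q - P_T||_F, from the SVD of P_f^T P_T."""
    U, _, Vt = np.linalg.svd(P_f.T @ P_T)
    return U @ Vt

rng = np.random.default_rng(8)
n, r = 10, 2
P_T = rng.standard_normal((n, r))
P_T -= P_T.mean(axis=0)                          # centered sensor positions
c = rng.standard_normal(r)                       # source, in the same frame
Q_true, _ = np.linalg.qr(rng.standard_normal((r, r)))   # unknown orthogonal map
P_f = P_T @ Q_true.T                             # sensor block of computed points
p_f = Q_true @ c                                 # computed source: p_f^T = c^T Q_true^T

Q_star = procrustes(P_f, P_T)
p_c = p_f @ Q_star                               # p_c^T = p_f^T Q*
```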

The second approach is to solve the least squares problem

The least squares solution is \(\bar{Q} = \bar{P}_f^{\dagger } P_T\), where \(\bar{P}_f^{\dagger }\) is the Moore–Penrose generalized inverse of \(\bar{P}_f\). The recovered position of the source is then \(\boxed {p_c^T = p_f^T \bar{Q}}\).
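The least squares variant can be sketched in the same synthetic setting. In line with Theorem 3.6, the matrix relating \(\bar{P}_f\) to \(P_T\) is here an arbitrary invertible matrix M rather than an orthogonal one (our assumption for the test data), and \(\bar{Q} = \bar{P}_f^{\dagger }P_T\) recovers \(M^{-1}\) exactly when \(P_T\) has full column rank.

```python
import numpy as np

rng = np.random.default_rng(9)
n, r = 10, 2
P_T = rng.standard_normal((n, r))
P_T -= P_T.mean(axis=0)                          # centered sensor positions
c = rng.standard_normal(r)                       # source, in the same frame
M = rng.standard_normal((r, r)) + 3.0 * np.eye(r)   # invertible, not orthogonal
P_f = P_T @ M                                    # sensor block of computed points
p_f = M.T @ c                                    # computed source: p_f^T = c^T M

Q_bar = np.linalg.pinv(P_f) @ P_T                # least squares solution
p_c = p_f @ Q_bar                                # p_c^T = p_f^T Q_bar
```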

4 Numerical results

To compare the different methods, we used randomly generated data with an error proportional to the distance to each tower. The proportionality is given by \(\eta \). This gives

where D is the generated EDM and \(\varepsilon \sim U(-\eta ,\eta )\). The outliers are obtained by multiplying (4.1) by another factor \(\theta \) for a small subset of the indices.

We let \({{\mathcal {M}}}\) denote the set of optimization methods to be tested. Then for \(M \in {{\mathcal {M}}}\), the relative error, \(c^M_{re}\), between the true location of the source, c, and the location obtained using method M, denoted \(c_M\), is given by

The results are then obtained by calculating this error for all the methods while varying the noise factor \(\eta \) in Eq. (4.1) and the number of sensors n. For each pair \((n,\eta )\), one hundred instances are solved.

The methods in the tables are labelled according to the models, with additional prefixes. To be specific, the L and P prefixes represent the different ways used to obtain the position of the source, c: L denotes the least squares approach of (3.37) and P denotes the Procrustes approach in (3.36). We choose \(\alpha = 1\) in FNEDM, and the constant \(\gamma \) for PNEDM in (3.30) is chosen to be 1000.

We report some results in Tables 1 and 2.

From Tables 1 and 2 we see that P-FNEDM generally has the smallest error, although L-FNEDM is occasionally better. We also see that the relative error \(c^M_{re}\) decreases as the number of towers n increases. This is expected: with more sensors, the location of the source should be more accurate.

To compare the overall performance of all the methods, we use the well-known performance profiles [14]. The approach is outlined below.

For each pair \((n,\eta )\) and one hundred solved instances, we calculate the mean of the relative error \(c^M_{re}\) for method M. We denote this

We then compute the performance ratio,

and the function,

The performance profile is a plot of \(\psi _M(\tau )\) for \(\tau \in (1, +\infty )\) and all choices of \(M \in {{\mathcal {M}}}\). Note that \(r_{n,\eta ,M} \ge 1\) and equality holds if, and only if, the solution obtained by M is best for the pair \((n,\eta )\). In general, smaller values of \(r_{n,\eta ,M}\) indicate better performance. The function \(\psi _M(\tau )\) measures how many pairs \((n,\eta )\) were solved with a performance ratio of \(\tau \) or better. The function is monotonically non-decreasing and larger values are better.
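Because the displayed definitions are not reproduced here, the following sketch assumes the standard Dolan–Moré definitions [14]: \(r_{n,\eta ,M}\) is the mean relative error of method M on the pair \((n,\eta )\) divided by the smallest mean relative error over all methods on that pair, and \(\psi _M(\tau )\) is the fraction of pairs with \(r_{n,\eta ,M}\le \tau \). The error table is a made-up example and the function name is ours.

```python
import numpy as np

def performance_profile(errors, taus):
    """errors[p, m] > 0: mean relative error of method m on pair p.
    Returns psi with psi[m, j] = fraction of pairs p with ratio <= taus[j]."""
    errors = np.asarray(errors, dtype=float)
    ratios = errors / errors.min(axis=1, keepdims=True)   # each ratio >= 1
    taus = np.asarray(taus, dtype=float)
    return (ratios[:, :, None] <= taus[None, None, :]).mean(axis=0)

errors = np.array([[1.0, 2.0],    # pair 1: method 0 best
                   [3.0, 1.5],    # pair 2: method 1 best
                   [2.0, 3.0]])   # pair 3: method 0 best
psi = performance_profile(errors, taus=[1.0, 1.5, 2.0])
```

Each row of `psi` is one curve of the profile; a curve that rises faster toward 1 indicates a method that is closer to the best method on more pairs.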

The performance profiles are shown in Fig. 1a and b. The P-FNEDM approach has the best performance among all five methods. Also, using the Procrustes approach (3.36) is better than using the least squares approach (3.37). For recovering the location of the source, allowing the sensors to move in the FNEDM model is better than fixing the sensors in SDR or leaving the sensors completely free in NEDM.

We also generate data with outliers. In FNEDM, the outliers are detected and removed using the \(\ell _1\) norm approach described in Sect. 3.1.5. We report the results with outliers added in Tables 3 and 4.

From Tables 3 and 4 we see clearly that, when outliers are added, \(\mathbf{FNEDM }\,\) substantially outperforms both SDR and NEDM, since the outliers can be removed. This is also consistent with our previous conclusion that using the Procrustes approach (3.36) is better than using the least squares approach (3.37).

5 Conclusion

We showed that the SLS formulation of the single source localization problem is inherently convex by considering the semidefinite relaxation, SDR, of the GTRS formulation. The extreme points of the optimal set of SDR correspond exactly to the optimal solutions of the SLS formulation, and these extreme points can be obtained by solving no more than \(r+1\) convex optimization problems.

We also analyzed several EDM based relaxations of the SLS formulation and introduced the weighted facial reduction model FNEDM. The optimal value of FNEDM was shown to converge to the optimal value of SLS as \(\alpha \) increases. In our numerical tests, we showed that our newly proposed model FNEDM performs the best for recovering the location of the source. Without any outliers present, the performance of each method improves as the number of towers increases. This is expected since more information is available. All the methods tend to perform similarly as the number of towers increases, but the facial reduction model, FNEDM, using the Procrustes approach performs the best.

Finally, we used the \(\ell _1\) norm approach in Algorithm 3.2 to remove outlier measurements. In Tables 3 and 4 we demonstrate the effectiveness of this approach.

References

Alfakih, A.Y.: Euclidean Distance Matrices and Their Applications in Rigidity Theory. Springer, Cham (2018)

Alfakih, A.Y., Khandani, A., Wolkowicz, H.: Solving Euclidean distance matrix completion problems via semidefinite programming. Comput. Optim. Appl. 12(1–3), 13–30 (1999). A tribute to Olvi Mangasarian

Beck, A., Stoica, P., Li, J.: Exact and approximate solutions of source localization problems. IEEE Trans. Signal Process. 56(5), 1770–1778 (2008)

Beck, A., Teboulle, M., Chikishev, Z.: Iterative minimization schemes for solving the single source localization problem. SIAM J. Optim. 19(3), 1397–1416 (2008)

Borg, I., Groenen, P.: Modern multidimensional scaling: theory and applications. J. Educ. Meas. 40(3), 277–280 (2003)

Candes, E., Rudelson, M., Tao, T., Vershynin, R.: Error correction via linear programming. In: Proceedings of the 46th Annual IEEE Symposium on Foundations of Computer Science (FOCS’05), pp. 1–14. IEEE, New York (2005)

Candès, E.J., Romberg, J.K., Tao, T.: Stable signal recovery from incomplete and inaccurate measurements. Commun. Pure Appl. Math. 59(8), 1207–1223 (2006)

Cheung, K.W., So, H.-C., Ma, W.-K., Chan, Y.-T.: Least squares algorithms for time-of-arrival-based mobile location. IEEE Trans. Signal Process. 52(4), 1121–1130 (2004)

Cox, T.F., Cox, M.A.: Multidimensional Scaling. Chapman and Hall/CRC, Boca Raton (2000)

Crippen, G.M., Havel, T.F.: Distance Geometry and Molecular Conformation, vol. 74. Research Studies Press, Taunton (1988)

Critchley, F.: Dimensionality theorems in multidimensional scaling and hierarchical cluster analysis. In: Data Analysis and Informatics (Versailles, 1985), pp. 45–70. North-Holland, Amsterdam (1986)

Dattorro, J.: Convex optimization & Euclidean distance geometry. Lulu. com (2010)

Ding, Y., Krislock, N., Qian, J., Wolkowicz, H.: Sensor network localization, Euclidean distance matrix completions, and graph realization. Optim. Eng. 11(1), 45–66 (2010)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2, Ser. A), 201–213 (2002)

Drusvyatskiy, D., Krislock, N., Cheung Voronin, Y.-L., Wolkowicz, H.: Noisy Euclidean distance realization: robust facial reduction and the Pareto frontier. SIAM J. Optim. 27(4), 2301–2331 (2017)

Drusvyatskiy, D., Pataki, G., Wolkowicz, H.: Coordinate shadows of semidefinite and Euclidean distance matrices. SIAM J. Optim. 25(2), 1160–1178 (2015)

Drusvyatskiy, D., Wolkowicz, H.: The many faces of degeneracy in conic optimization. Found. Trends® Optim. 3(2), 77–170 (2017)

Fang, H., O’Leary, D.P.: Euclidean distance matrix completion problems. Optim. Methods Softw. 27(4–5), 695–717 (2012)

Gao, Y., Sun, D.: A majorized penalty approach for calibrating rank constrained correlation matrix problems. Technical Report (2010)

Golub, G.H., Van Loan, C.F.: Matrix Computations, 3rd edn. Johns Hopkins University Press, Baltimore (1996)

Gower, J.C.: Properties of Euclidean and non-Euclidean distance matrices. Linear Algebra Appl. 67, 81–97 (1985)

Hayden, T.L., Wells, J., Liu, W.M., Tarazaga, P.: The cone of distance matrices. Linear Algebra Appl. 144, 153–169 (1991)

Hiriart-Urruty, J.-B., Lemaréchal, C.: Fundamentals of Convex Analysis, Grundlehren Text Editions. Springer, Berlin (2001). Abridged version of it Convex analysis and minimization algorithms. I [Springer, Berlin, 1993; MR1261420 (95m:90001)] and it II [ibid.; MR1295240 (95m:90002)]

Koshima, H., Hoshen, J.: Personal locator services emerge. IEEE Spectr. 37(2), 41–48 (2000)

Krislock, N., Wolkowicz, H.: Euclidean distance matrices and applications. In: Handbook on Semidefinite, Cone and Polynomial Optimization, Number 2009-06 in International Series in Operations Research & Management Science, pp. 879–914. Springer, Berlin (2011)

Kundu, T.: Acoustic source localization. Ultrasonics 54(1), 25–38 (2014)

Liberti, L., Lavor, C., Maculan, N., Mucherino, A.: Euclidean distance geometry and applications. SIAM Rev. 56(1), 3–69 (2014)

Pataki, G.: On the rank of extreme matrices in semidefinite programs and the multiplicity of optimal eigenvalues. Math. Oper. Res. 23(2), 339–358 (1998)

Pong, T.K., Wolkowicz, H.: The generalized trust region subproblem. Comput. Optim. Appl. 58(2), 273–322 (2014)

Qi, H.-D.: A semismooth Newton method for the nearest Euclidean distance matrix problem. SIAM J. Matrix Anal. Appl. 34(1), 67–93 (2013)

Qi, H.-D., Yuan, X.: Computing the nearest Euclidean distance matrix with low embedding dimensions. Math. Program. 147(1), 351–389 (2014)

Schoenberg, I.J.: Metric spaces and positive definite functions. Trans. Am. Math. Soc. 44(3), 522–536 (1938)

Stern, R., Wolkowicz, H.: Trust region problems and nonsymmetric eigenvalue perturbations. SIAM J. Matrix Anal. Appl. 15(3), 755–778 (1994)

Stern, R., Wolkowicz, H.: Indefinite trust region subproblems and nonsymmetric eigenvalue perturbations. SIAM J. Optim. 5(2), 286–313 (1995)

Tao, P.D., An, L.T.H.: Convex analysis approach to dc programming: theory, algorithms and applications. Acta Math. Vietnam. 22(1), 289–355 (1997)

Tao, P.D., An, L.T.H.: The dc (difference of convex functions) programming and dca revisited with dc models of real world nonconvex optimization problems. Ann. Oper. Res. 133(1–4), 23–46 (2005)

Tunçel, L.: Polyhedral and Semidefinite Programming Methods in Combinatorial Optimization. Fields Institute Monographs, vol. 27. American Mathematical Society, Providence, RI (2010)

Warrior, J., McHenry, E., McGee, K.: They know where you are [location detection]. IEEE Spectr. 40(7), 20–25 (2003)

Wolkowicz, H., Saigal, R., Vandenberghe, L. (eds.): Handbook of Semidefinite Programming. International Series in Operations Research & Management Science, vol. 27. Kluwer Academic Publishers, Boston, MA (2000)

Acknowledgements

Open access funding provided by Royal Institute of Technology.


Stefan Sremac: Research supported by the Natural Sciences and Engineering Research Council of Canada. Lucas Pettersson: Research supported by an Undergraduate Student Research Awards Program. Henry Wolkowicz: Research supported by The Natural Sciences and Engineering Research Council of Canada.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sremac, S., Wang, F., Wolkowicz, H. et al. Noisy Euclidean distance matrix completion with a single missing node. J Glob Optim 75, 973–1002 (2019). https://doi.org/10.1007/s10898-019-00825-7


Keywords

- Single source localization

- Noise

- Euclidean distance matrix completion

- Semidefinite programming

- Wireless communication

- Facial reduction

- Generalized trust region subproblem