Abstract

Purpose

Insufficient effort responding (IER), which occurs due to a lack of motivation to comply with survey instructions and to correctly interpret item content, represents a serious problem for researchers and practitioners who employ survey methodology (Huang et al. 2012). Extending prior research, we examine the validity of the infrequency approach to detecting IER and assess participant reactions to such an approach.

Design/Methodology/Approach

Two online surveys (Studies 1 and 2) completed by employed undergraduates were used to assess the validity of the infrequency approach. An online survey of paid participants (Study 3) and a paper-and-pencil survey in an organization (Study 4) were conducted to evaluate participant reactions, using random assignment into survey conditions that either did or did not contain infrequency items.

Findings

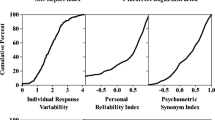

Studies 1 and 2 provided evidence for the reliability, unidimensionality, and criterion-related validity of the infrequency scales. Study 3 and Study 4 showed that surveys that contained infrequency items did not lead to more negative reactions than did surveys that did not contain such items.

Implications

The current findings provide evidence of the effectiveness and feasibility of the infrequency approach for detecting IER, supporting its application in low-stakes organizational survey contexts.

Originality/Value

The current studies provide a more in-depth examination of the infrequency approach to IER detection than had been done in prior research. In particular, the evaluation of participant reactions to infrequency scales represents a novel contribution to the IER literature.

Notes

We thank three anonymous reviewers and Action Editor Scott Tonidandel for noting this potential concern that led us to conduct Study 3 and Study 4.

In a pilot study based on 59 part-time and full-time working undergraduates, the eight-item infrequency scale was not significantly correlated with Paulhus’s (1991) impression management scale (r = −0.20, ns) or self-deception scale (r = −0.20, ns). If the infrequency items assessed faking (cf. Pannone 1984; Paulhus et al. 2003), the scale should be positively related to impression management and self-deception. Thus, these nonsignificant negative correlations indicate that the infrequency items measured response behavior distinct from impression management and self-deception. As suggested by an anonymous reviewer, we further examined each infrequency item’s correlations with impression management and self-deception. Five items (items #1, #2, #3, #5, and #7) had negative correlations (rs ranging from −0.23 to −0.36, ps < 0.10), while two items (items #4 and #8) had near-zero correlations (rs ranging from −0.05 to 0.06, all ns) with the two social desirability measures. Item #6 stood out for having weak positive associations with both self-deception and impression management (rs = 0.21 and 0.11, both ns). Overall, however, none of the infrequency IER items was saturated with social desirability, thus alleviating the concern that these items measured socially desirable responding.
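As a rough check on how correlations of this size behave in a sample of 59, the following minimal sketch approximates a two-tailed significance test for a Pearson correlation using Fisher's z transformation. The function name `fisher_z_p_value` is hypothetical, the normal approximation is an assumption made for illustration, and nothing here is claimed to be the test actually used in the pilot study. It also assumes that every correlation is based on the full sample of 59.

```python
import math


def fisher_z_p_value(r: float, n: int) -> tuple[float, float]:
    """Approximate two-tailed test of H0: rho = 0 via Fisher's z transformation.

    Returns (z, p). This is a large-sample normal approximation, not the
    exact t test, and not necessarily the test used in the pilot study.
    """
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    if n <= 3:
        raise ValueError("n must be greater than 3")
    # atanh(r) is approximately normal with standard error 1 / sqrt(n - 3).
    z = math.atanh(r) * math.sqrt(n - 3)
    # Standard normal CDF evaluated at |z| via the error function.
    phi = 0.5 * (1.0 + math.erf(abs(z) / math.sqrt(2.0)))
    p = 2.0 * (1.0 - phi)
    return z, p


if __name__ == "__main__":
    n = 59  # pilot sample size reported in the note; assumed for every r
    for r in (-0.20, -0.23, -0.36, 0.21):
        z, p = fisher_z_p_value(r, n)
        print(f"r = {r:+.2f}, n = {n}: z = {z:+.2f}, p ~ {p:.3f}")
```

Under this approximation, r = −0.20 with n = 59 yields a p value of roughly 0.13, in line with the nonsignificant scale-level result, whereas the item-level correlations of −0.23 to −0.36 fall below 0.10.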

We thank Scott Tonidandel for bringing these issues to our attention.

We conjecture that the infrequency items might have grabbed the attention of some participants. That is, some participants might have found the infrequency items funny, which piqued their interest in the questionnaire. This is consistent with the finding that the presence of infrequency items resulted in a marginal increase in enjoyment. As a result, attentive participants might have become even more attentive after reading the infrequency items and subsequently tended to perceive the data quality as higher.

References

Baer, R. A., Ballenger, J., Berry, D. R., & Wetter, M. W. (1997). Detection of random responding on the MMPI-A. Journal of Personality Assessment, 68, 139–151.

Beach, D. A. (1989). Identifying the random responder. Journal of Psychology: Interdisciplinary and Applied, 123, 101–103.

Behrend, T. S., Sharek, D. J., Meade, A. W., & Wiebe, E. N. (2011). The viability of crowdsourcing for survey research. Behavior Research Methods, 43, 800–813.

Buhrmester, M., Kwang, T., & Gosling, S. D. (2011). Amazon’s Mechanical Turk: A new source of inexpensive, yet high-quality, data? Perspectives on Psychological Science, 6, 3–5.

Chiaburu, D. S., Huang, J. L., Hutchins, H. M., & Gardner, R. G. (2014). Trainees’ perceived knowledge gain unrelated to the training domain: The joint action of impression management and motives. International Journal of Training and Development, 18, 37–52.

Colquitt, J. A., Conlon, D. E., Wesson, M. J., Porter, C. O., & Ng, K. Y. (2001). Justice at the millennium: A meta-analytic review of 25 years of organizational justice research. Journal of Applied Psychology, 86, 425–445.

Costa, P. T., Jr., & McCrae, R. R. (2008). The Revised NEO Personality Inventory (NEO-PI-R). In G. J. Boyle, G. Matthews, & D. H. Saklofske (Eds.), The Sage handbook of personality theory and assessment: Personality measurement and testing (pp. 179–198). London: Sage.

Croteau, A.-M., Dyer, L., & Miguel, M. (2010). Employee reactions to paper and electronic surveys: An experimental comparison. IEEE Transactions on Professional Communication, 53, 249–259.

DiLalla, D. L., & Dollinger, S. J. (2006). Cleaning up data and running preliminary analyses. In F. T. L. Leong & J. T. Austin (Eds.), The psychology research handbook: A guide for graduate students and research assistants (pp. 241–253). Thousand Oaks, CA: Sage.

Furr, R. M., & Bacharach, V. R. (2014). Psychometrics: An introduction. Thousand Oaks, CA: Sage.

Goodman, J. K., Cryder, C. E., & Cheema, A. (2013). Data collection in a flat world: The strengths and weaknesses of Mechanical Turk samples. Journal of Behavioral Decision Making, 26, 213–224.

Gorsuch, R. L. (1997). Exploratory factor analysis: Its role in item analysis. Journal of Personality Assessment, 68, 532–560.

Green, S. B., & Stutzman, T. M. (1986). An evaluation of methods to select respondents to structured job-analysis questionnaires. Personnel Psychology, 39, 543–564.

Green, S. B., & Veres, J. G. (1990). Evaluation of an index to detect inaccurate respondents to a task analysis inventory. Journal of Business and Psychology, 5, 47–61.

Hackman, J. R., & Oldham, G. R. (1975). Development of the job diagnostic survey. Journal of Applied Psychology, 60, 159–170.

Hogan, R., & Hogan, J. (2007). Hogan Personality Inventory manual (3rd ed.). Tulsa, OK: Hogan Assessment Systems.

Hough, L. M., Eaton, N. K., Dunnette, M. D., Kamp, J. D., & McCloy, R. A. (1990). Criterion-related validities of personality constructs and the effect of response distortion on those validities. Journal of Applied Psychology, 75, 581–595.

Huang, J. L., Bowling, N. A., & Liu, M. (2014). The effects of insufficient effort responding on the convergent and discriminant validity of substantive measures. Unpublished manuscript.

Huang, J. L., Curran, P. G., Keeney, J., Poposki, E. M., & DeShon, R. P. (2012). Detecting and deterring insufficient effort responding to surveys. Journal of Business and Psychology, 27, 99–114.

Huang, J. L., Liu, M., & Bowling, N. A. (2014, May). Insufficient effort responding: Uncovering an insidious threat to data quality. In J. H. Huang & M. Liu (Co-chairs), Insufficient effort responding to surveys: From impact to solutions. Symposium to be presented at the Annual Conference of the Society for Industrial and Organizational Psychology, Honolulu, HI.

Jackson, D. N. (1974). Personality Research Form manual. Goshen, NY: Research Psychologists Press.

Johnson, J. A. (2005). Ascertaining the validity of individual protocols from web-based personality inventories. Journal of Research in Personality, 39, 103–129.

Liu, M., Bowling, N. A., Huang, J. L., & Kent, T. A. (2013). Insufficient effort responding to surveys as a threat to validity: The perceptions and practices of SIOP members. The Industrial-Organizational Psychologist, 51(1), 32–38.

Meade, A. W., & Craig, S. B. (2012). Identifying careless responses in survey data. Psychological Methods, 17, 437–455.

Muthén, B., du Toit, S. H. C., & Spisic, D. (1997). Robust inference using weighted least squares and quadratic estimating equations in latent variable modeling with categorical and continuous outcomes. Unpublished manuscript.

Muthén, L. K., & Muthén, B. O. (2011). Mplus User’s Guide. Los Angeles, CA: Muthén & Muthén.

Pannone, R. D. (1984). Predicting test performance: A content valid approach to screening applicants. Personnel Psychology, 37, 507–514.

Paulhus, D. L. (1991). Measurement and control of response bias. In J. P. Robinson, P. R. Shaver, & L. S. Wrightsman (Eds.), Measures of personality and social psychological attitudes (pp. 17–59). San Diego, CA: Academic Press.

Paulhus, D. L., Harms, P. D., Bruce, M. N., & Lysy, D. C. (2003). The over-claiming technique: Measuring self-enhancement independent of ability. Journal of Personality and Social Psychology, 84, 890–904.

Scandell, D. J. (2000). Development and initial validation of validity scales for the NEO-Five Factor Inventory. Personality and Individual Differences, 29, 1153–1162.

Schmitt, N., & Stults, D. M. (1985). Factors defined by negatively keyed items: The result of careless respondents? Applied Psychological Measurement, 9, 367–373.

Acknowledgments

We would like to thank Neal Schmitt, Fred Oswald, and Adam Meade for comments on earlier drafts of this paper, and Jessica Keeney and Paul Curran for suggestions during the early stages of this research. We also thank Travis Walker for assisting with data collection.

About this article

Cite this article

Huang, J.L., Bowling, N.A., Liu, M. et al. Detecting Insufficient Effort Responding with an Infrequency Scale: Evaluating Validity and Participant Reactions. J Bus Psychol 30, 299–311 (2015). https://doi.org/10.1007/s10869-014-9357-6

DOI: https://doi.org/10.1007/s10869-014-9357-6