Abstract

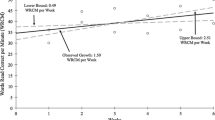

Despite the growing number of quantitative effect sizes developed for single-case experimental designs (SCEDs), visual analysis remains the gold standard for evaluating the methodological rigor of SCEDs and for determining whether a functional relation exists between the treatment and the outcome. The physical length of the x- and y-axes and the range of values plotted on them can influence how data appear on a graph and, in turn, how treatment effects are interpreted. We explored the extent to which the geometric slope (the angle of inclination) of treatment-phase data corresponded to three within-case effect sizes (percent exceeding baseline trend, Tau-U, and generalized least squares) in a dataset of published multiple-baseline designs that targeted fluency in academic outcomes (oral reading fluency, written production, and math computation). A secondary purpose was to explore the distributional properties of these effect sizes within a specific class of outcomes. Results suggest very little correspondence between visual depictions of treatment trend and the effect sizes. Noticeable ceiling effects were observed for the overlap-based effect sizes, and extremely broad ranges were observed for the regression-based effect sizes. We discuss the need to standardize graphing procedures for SCEDs using ordinal axes.
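The abstract's central contrast can be illustrated with a short Python sketch. The same treatment trend can be drawn steep or flat depending on axis lengths and ranges, while an effect size computed from the numbers stays fixed. This is not the authors' procedure. The function names (`ols_fit`, `geometric_angle`, `pebt`), the axis dimensions, and the session data are all hypothetical. Percent exceeding baseline trend is operationalized here, as an assumption, as the share of treatment points lying strictly above an ordinary least squares trend line fit to baseline and extended into treatment, with higher scores treated as improvement.

```python
import math
from typing import Sequence, Tuple


def ols_fit(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (intercept, slope) of the ordinary least squares line y = a + b*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def geometric_angle(slope: float, x_span: float, y_span: float,
                    width: float, height: float) -> float:
    """Angle of inclination (degrees) of a data slope as drawn on a graph.

    slope: data units of y gained per session
    x_span: number of sessions covered by the x-axis
    y_span: range of y values covered by the y-axis
    width, height: physical axis lengths in a common unit (e.g., inches)
    """
    # Convert "y units per session" into physical rise over run on the page.
    physical_slope = slope * (height / y_span) / (width / x_span)
    return math.degrees(math.atan(physical_slope))


def pebt(baseline: Sequence[float], treatment: Sequence[float]) -> float:
    """Percent of treatment points strictly above the extended baseline OLS trend.

    Hypothetical operationalization: sessions are numbered 1, 2, ... across
    phases, and an increase is assumed to indicate improvement.
    """
    xb = list(range(1, len(baseline) + 1))
    intercept, slope = ols_fit(xb, baseline)
    first_treatment_session = len(baseline) + 1
    above = sum(
        1 for i, y in enumerate(treatment)
        if y > intercept + slope * (first_treatment_session + i)
    )
    return 100.0 * above / len(treatment)


if __name__ == "__main__":
    baseline = [42, 45, 43, 46, 44]        # sessions 1-5 (hypothetical data)
    treatment = [50, 53, 55, 58, 60, 63]   # sessions 6-11 (hypothetical data)
    _, b = ols_fit(list(range(6, 12)), treatment)

    # Two hypothetical graphs of the same data; both x-axes span 20 sessions.
    steep = geometric_angle(b, x_span=20, y_span=100, width=6, height=4)
    flat = geometric_angle(b, x_span=20, y_span=200, width=9, height=3)

    print(f"treatment slope: {b:.2f} units per session")
    print(f"angle on 6 x 4 in graph, y-axis range 100: {steep:.1f} degrees")
    print(f"angle on 9 x 3 in graph, y-axis range 200: {flat:.1f} degrees")
    print(f"PEBT: {pebt(baseline, treatment):.1f}%")
```

With these made-up numbers, a treatment trend of about 2.54 units per session is drawn at roughly 18.7 degrees on the first graph and roughly 4.8 degrees on the second, while PEBT is 100% for both because it never looks at axis scaling. The 100% value on such a modest series also hints at the ceiling effects the abstract reports for overlap-based indices.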

Funding

This research was funded by a grant from the U.S. Department of Education, Institute of Education Sciences (#R305D190023). The opinions expressed here do not reflect those of the Department of Education or the Institute of Education Sciences.

Ethics declarations

Conflict of interest

We have no known conflicts of interest to disclose.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Van Norman, E.R., Boorse, J. & Klingbeil, D.A. The Relationship Between Visual Depictions of Rate of Improvement and Quantitative Effect Sizes in Academic Single-Case Experimental Design Studies. J Behav Educ (2022). https://doi.org/10.1007/s10864-022-09500-6

DOI: https://doi.org/10.1007/s10864-022-09500-6