Abstract

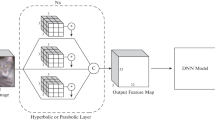

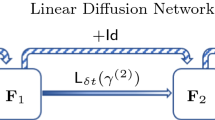

Partial differential equations (PDEs) are indispensable for modeling many physical phenomena and are also commonly used to solve image processing tasks. In the latter area, PDE-based approaches interpret image data as discretizations of multivariate functions and the output of image processing algorithms as solutions to certain PDEs. Posing image processing problems in the infinite-dimensional setting provides powerful tools for their analysis and solution. Over the last few decades, reinterpreting classical image processing problems through the PDE lens has produced several celebrated approaches that benefit a wide range of tasks, including image segmentation, denoising, registration, and reconstruction. In this paper, we establish a new PDE interpretation of a class of deep convolutional neural networks (CNNs) that are commonly used to learn from speech, image, and video data. Our interpretation includes convolutional residual neural networks (ResNets), which are among the most promising approaches for tasks such as image classification and have improved state-of-the-art performance in prestigious benchmark challenges. Despite their recent successes, deep ResNets still face critical challenges associated with their design, their immense computational cost and memory requirements, and the lack of understanding of their reasoning. Guided by well-established PDE theory, we derive three new ResNet architectures that fall into two new classes: parabolic and hyperbolic CNNs. We show how PDE theory can provide new insights and algorithms for deep learning, and we demonstrate the competitiveness of the three new CNN architectures in numerical experiments.
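The central idea of the abstract, reading a residual network as a time discretization of a differential equation, can be illustrated with a toy sketch. This is not the authors' implementation. It assumes dense matrices in place of convolution operators, a ReLU activation, no normalization layer, and hypothetical function names (`resnet_step`, `parabolic_step`, `hyperbolic_step`). `resnet_step` is one forward Euler step Y_{j+1} = Y_j + h K_2 σ(K_1 Y_j); `parabolic_step` is a forward Euler step of dY/dt = -Kᵀσ(KY), an assumed symmetric form in the spirit of the parabolic CNNs; `hyperbolic_step` is a leapfrog step of d²Y/dt² = -Kᵀσ(KY), an assumed form in the spirit of the hyperbolic CNNs.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # assumption: dense stand-in for a convolution operator K


def matvec(K: Matrix, y: Vector) -> Vector:
    """Return K y for a matrix stored as a list of rows."""
    return [sum(k * v for k, v in zip(row, y)) for row in K]


def matvec_t(K: Matrix, z: Vector) -> Vector:
    """Return K^T z without forming the transpose explicitly."""
    out = [0.0] * len(K[0])
    for row, zi in zip(K, z):
        for j, k in enumerate(row):
            out[j] += k * zi
    return out


def relu(x: Vector) -> Vector:
    """Elementwise ReLU, used here as the activation sigma (assumed choice)."""
    return [max(0.0, v) for v in x]


def resnet_step(y: Vector, K1: Matrix, K2: Matrix, h: float) -> Vector:
    """Hypothetical residual block read as one forward Euler step:
    Y_{j+1} = Y_j + h * K2 sigma(K1 Y_j)."""
    f = matvec(K2, relu(matvec(K1, y)))
    return [yi + h * fi for yi, fi in zip(y, f)]


def parabolic_step(y: Vector, K: Matrix, h: float) -> Vector:
    """Hypothetical forward Euler step of dY/dt = -K^T sigma(K Y)."""
    f = matvec_t(K, relu(matvec(K, y)))
    return [yi - h * fi for yi, fi in zip(y, f)]


def hyperbolic_step(y_prev: Vector, y: Vector, K: Matrix, h: float) -> Vector:
    """Hypothetical leapfrog step of d^2Y/dt^2 = -K^T sigma(K Y):
    Y_{j+1} = 2 Y_j - Y_{j-1} - h^2 K^T sigma(K Y_j)."""
    f = matvec_t(K, relu(matvec(K, y)))
    return [2.0 * yi - ypi - h * h * fi for yi, ypi, fi in zip(y, y_prev, f)]


if __name__ == "__main__":
    K = [[1.0, -1.0], [0.5, 2.0]]
    y0 = [1.0, 0.5]
    h = 0.1
    y1 = parabolic_step(y0, K, h)
    y2 = hyperbolic_step(y0, y1, K, h)
    # Swapping the outer arguments runs the leapfrog scheme backwards.
    back = hyperbolic_step(y2, y1, K, h)
    print(y1, y2, back)
```

Because the leapfrog update is symmetric in Y_{j-1} and Y_{j+1}, calling `hyperbolic_step(y_next, y, K, h)` recovers the previous state up to rounding, so activations could in principle be recomputed during backpropagation instead of stored. For the ReLU choice made here, expanding ‖Y - h Kᵀσ(KY)‖² shows that the parabolic step cannot increase the Euclidean norm when h ≤ 2/‖K‖₂², a small illustration of the kind of stability property PDE theory makes available.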



Acknowledgements

L.R. is supported by the U.S. National Science Foundation (NSF) through awards DMS 1522599 and DMS 1751636 and by the NVIDIA Corporation’s GPU grant program. We thank Martin Burger for outlining how to show stability using monotone operator theory, and we thank Eran Treister and the other contributors to the Meganet package. We also thank the Isaac Newton Institute for Mathematical Sciences (INI) for support and hospitality during the program on Generative Models, Parameter Learning and Sparsity (VMVW02), when work on this paper was undertaken. INI was supported by EPSRC Grant Number: LNAG/036, RG91310.


About this article

Cite this article

Ruthotto, L., Haber, E. Deep Neural Networks Motivated by Partial Differential Equations. J Math Imaging Vis 62, 352–364 (2020). https://doi.org/10.1007/s10851-019-00903-1
