Abstract

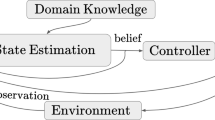

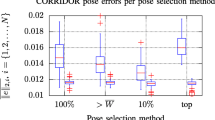

Localization is a key issue for a mobile robot, particularly in environments where a globally accurate positioning system, such as GPS, is not available. In these environments, accurate and efficient robot localization is not a trivial task, as an increase in accuracy usually leads to a loss of efficiency and vice versa. Active perception is an appealing way to improve the localization process by increasing the richness of the information acquired from the environment. In this paper, we present an active perception strategy for a mobile robot equipped with a visual sensor mounted on a pan-tilt mechanism. The visual sensor has a limited field of view, so the goal of the active perception strategy is to use the pan-tilt unit to direct the sensor to informative parts of the environment. To achieve this goal, we use a topological map of the environment and a Bayesian non-parametric estimation of the robot position based on a particle filter. We slightly modify the regular implementation of this filter by including an additional step that selects the best perceptual action using Monte Carlo estimations. We define the best perceptual action as the one that produces the greatest reduction in uncertainty about the robot position. Our optimization function also includes a cost term that favors efficient perceptual actions. Previous work has proposed active perception strategies for robot localization, but mainly in the context of range sensors, grid representations of the environment, and parametric techniques, such as the extended Kalman filter. Accordingly, the main contributions of this work are: i) development of a sound strategy for active selection of perceptual actions in the context of a visual sensor and a topological map; ii) real-time operation using a modified version of the particle filter and Monte Carlo-based estimations; iii) implementation and testing of these ideas using simulations and a real case scenario.
Our results indicate that, in terms of accuracy of robot localization, the proposed approach decreases mean average error and standard deviation with respect to a passive perception scheme. Furthermore, in terms of efficiency, the active scheme is able to operate in real time without adding significant overhead to the regular robot operation.
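
The action-selection step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the discrete observation model, the `landmarks` table, the per-action `costs`, the `trade_off` weight, and all function names are hypothetical, chosen only to show how a Monte Carlo estimate of the expected reduction in entropy, minus a cost term, might rank perceptual actions over a particle set defined on topological nodes.

```python
import math
import random


def belief_over_nodes(particles, weights, n_nodes):
    """Collapse weighted particles into a normalized belief over map nodes."""
    belief = [0.0] * n_nodes
    for node, w in zip(particles, weights):
        belief[node] += w
    total = sum(belief)
    return [b / total for b in belief]


def entropy(belief):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(p * math.log(p) for p in belief if p > 0.0)


def likelihood(z, node, action, landmarks, n_landmarks, p_hit=0.8):
    """Hypothetical sensor model: the expected landmark is reported with
    probability p_hit, any other landmark uniformly otherwise."""
    if z == landmarks[node][action]:
        return p_hit
    return (1.0 - p_hit) / (n_landmarks - 1)


def sample_observation(node, action, landmarks, n_landmarks, rng, p_hit=0.8):
    """Draw an observation from the same hypothetical sensor model."""
    expected = landmarks[node][action]
    if rng.random() < p_hit:
        return expected
    return rng.choice([l for l in range(n_landmarks) if l != expected])


def expected_posterior_entropy(particles, weights, action, landmarks,
                               n_nodes, n_landmarks, rng, n_samples=200):
    """Monte Carlo estimate of the entropy expected after taking `action`."""
    total = 0.0
    for _ in range(n_samples):
        # Sample a plausible robot position, then a plausible observation.
        node = rng.choices(particles, weights=weights, k=1)[0]
        z = sample_observation(node, action, landmarks, n_landmarks, rng)
        # Reweight every particle by how well it explains that observation.
        new_w = [w * likelihood(z, x, action, landmarks, n_landmarks)
                 for x, w in zip(particles, weights)]
        total += entropy(belief_over_nodes(particles, new_w, n_nodes))
    return total / n_samples


def select_action(particles, weights, actions, costs, landmarks,
                  n_nodes, n_landmarks, rng, trade_off=1.0):
    """Pick the action with the best (expected entropy reduction - cost)."""
    prior_h = entropy(belief_over_nodes(particles, weights, n_nodes))
    best_action, best_utility = None, -math.inf
    for a in actions:
        gain = prior_h - expected_posterior_entropy(
            particles, weights, a, landmarks, n_nodes, n_landmarks, rng)
        utility = gain - trade_off * costs[a]
        if utility > best_utility:
            best_action, best_utility = a, utility
    return best_action
```

In this sketch, an action that shows the same landmark from every node yields no expected reduction in entropy, so it is preferred only when the more informative actions are penalized heavily by the cost term.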

Cite this article

Correa, J., Soto, A. Active Visual Perception for Mobile Robot Localization. J Intell Robot Syst 58, 339–354 (2010). https://doi.org/10.1007/s10846-009-9348-4

DOI: https://doi.org/10.1007/s10846-009-9348-4