Abstract

The parallel machine scheduling problem (PMSP) involves the optimized assignment of a set of jobs to a collection of parallel machines, which is a proper formulation for the modern manufacturing environment. Deep reinforcement learning (DRL) has been widely employed to solve PMSP. However, the majority of existing DRL-based frameworks still suffer from limited generalizability and scalability. More specifically, the state and action design still relies heavily on human effort. To bridge these gaps, we propose a practical reinforcement learning-based framework to tackle a PMSP with new job arrivals and family setup constraints. We design a variable-length state matrix containing full job and machine information. This enables the DRL agent to autonomously extract features from raw data and make decisions with a global perspective. To efficiently process this novel state matrix, we modify a Transformer model to represent the DRL agent, so that the novel state representation can be effectively leveraged. This DRL framework offers a high-quality and robust solution that significantly reduces the reliance on manual effort traditionally required in scheduling tasks. In the numerical experiment, the stability of the proposed agent during training is first demonstrated. Then we compare this trained agent on 192 instances with several existing approaches, namely a DRL-based approach, a metaheuristic algorithm, and a dispatching rule. The extensive experimental results demonstrate the scalability of our approach and its effectiveness across a variety of scheduling scenarios. Our approach can thus solve the scheduling problems with high efficiency and flexibility, paving the way for the application of DRL in solving complex and dynamic scheduling problems.

Introduction

Modern international supply chains have become increasingly sophisticated, which poses a great challenge for the scheduling of manufacturing processes. For example, the unforeseen arrival of new jobs can disturb planned static schedules and dramatically decrease the production efficiency (Ouelhadj & Petrovic, 2009). Additionally, the diversified demands of the global market require grouping jobs into distinct families based on their processing characteristics, with each shift from one family to another incurring additional setup time. An appropriate solution should therefore adaptively optimize the processing sequence of jobs across various families under the dynamic arrival of jobs.

The scheduling problem in the manufacturing process has been commonly formulated as a parallel machine scheduling problem (PMSP), which offers a practical mathematical framework for optimization and development across diverse domains, for example, semiconductor manufacturing (Zhang & Chen, 2022), thin-film-transistor liquid crystal display manufacturing (Shin & Leon, 2004), and the offshore oil and gas industry (Abu-Marrul et al., 2021).

In this work, we focus on a particular variant of the PMSP characterized by constraints related to new job arrivals and family setups. This formulation effectively abstracts the scheduling process pertinent to the modern manufacturing environment described previously. The objective is to minimize the total tardiness. A detailed mathematical formulation is given in Sect. “Problem formulation”. It is worth noting that this problem is non-trivial, since the problem without these two constraints has already been proven to be NP-hard (Biskup et al., 2008).
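For orientation, total tardiness is commonly written as shown below. The symbols \(C_j\) (completion time of job \(j\)), \(d_j\) (due date of job \(j\)), and \(n\) (number of jobs) are notation assumed here for illustration only; the formal model, including arrivals and family setups, is the one defined in Sect. “Problem formulation”.

\[
T_j = \max\{0,\; C_j - d_j\}, \qquad \min \; \sum_{j=1}^{n} T_j
\]

A job that finishes before its due date contributes zero, so the objective penalizes only late completions.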

Under this formulation, the majority of conventional approaches for tackling these problems can be broadly classified into two categories: rule-based methods and metaheuristic algorithms (Ɖurasević & Jakobović, 2023). However, rule-based methods often fail to deliver high-quality results, since they rely on predefined rules that do not account for the unique characteristics of each specific scheduling problem. Meanwhile, metaheuristic algorithms require numerous iterations for yielding an appropriate solution for one instance, which is computationally expensive. Furthermore, both conventional approaches struggle to respond to dynamic environments.

To obtain a proper solution in a time-efficient manner, several studies have employed reinforcement learning (RL), including deep reinforcement learning (DRL) based approaches, to solve the PMSP (Kayhan & Yildiz, 2023). RL is a promising branch in the field of machine learning and has achieved remarkable development in many challenging decision-making tasks, such as controlling the tokamak plasma for nuclear fusion (Degrave et al., 2022) and discovering faster matrix multiplication algorithms (Fawzi et al., 2022). In the RL-based approach, an agent is employed to learn an optimal policy through interaction with the environment. This mechanism aligns well with the process of most scheduling problems, making RL a promising alternative solution for the PMSP.

However, even though RL-based approaches show superiority over rule-based methods and metaheuristic algorithms in solving PMSPs, they still have limitations. First, most of them do not tackle the scheduling problem in an end-to-end manner, which means they cannot select a job directly based on the raw information of the manufacturing environment. Instead, they rely on hand-crafted state features and pre-defined dispatching rules (Guo et al., 2020; Luo, 2020; Luo et al., 2022), which require extensive domain knowledge and lead to tedious work. Moreover, with the integration of hand-crafted state features and pre-defined rules, human bias might be introduced, and the potential of the data-driven method, i.e., RL, cannot be fully leveraged. Second, the majority of the existing RL-based approaches need to be re-designed and re-trained when being applied to larger instances (Lang et al., 2020; Liu et al., 2020), which is highly time-consuming and hence restricts their practical applicability in diverse and evolving manufacturing environments.

To the best of our knowledge, the only work that overcomes these two limitations is proposed by Li et al. (2024). They represent the PMSP instance with a variable-length matrix and then employ a recurrent neural network (RNN) based DRL agent to process the matrix, enabling an end-to-end scheduling process with high scalability. However, this approach also suffers from significant drawbacks. First, RNN models process the independent jobs in a sequential manner, so that the index of a job can affect the performance, which is a highly undesirable attribute in PMSP scenarios. Additionally, the sequential processing of the state matrix could also impact the performance when managing a large number of independent jobs, as capturing relationships between distantly positioned jobs in the matrix becomes increasingly complex. More crucially, the state matrix solely contains the information of the current idle machine, neglecting the overall state of all machines at a given decision time point. This restricted perspective limits the agent’s ability to make globally optimal decisions, as it only considers the immediate circumstances of a single machine. Furthermore, purely expanding the matrix to encompass information regarding all machines brings new challenges, particularly the need to consider the relationships between all jobs and all machines, in addition to solely comparing jobs with each other. Handling these two distinct dependencies simultaneously could also be problematic for RNN models.

Inspired by the current advantages of the Transformer model (Vaswani et al., 2017) over RNN models in the field of natural language processing (NLP), we propose a Transformer-based DRL framework for solving PMSP. To do so, we tailor the Transformer model to the scheduling problem, since it was originally designed specifically for NLP. This enables us to overcome the aforementioned limitations and the drawbacks of the RNN-based DRL approach. The tailored Transformer model utilizes the multi-head attention mechanism to process the available jobs in a parallel manner, avoiding the impact of job index on performance. Meanwhile, this mechanism is capable of effectively processing diverse relationships in one state matrix, allowing the state matrix to be extended to include all the global information.
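The role of job order can be illustrated with a toy example. The following minimal sketch is not the model proposed in this work: it applies single-head scaled dot-product self-attention with identity projections and no positional encoding to hypothetical job features, and contrasts it with a toy linear recurrence standing in for an RNN. All function names and feature values are assumptions made for illustration.

```python
import math


def self_attention(rows):
    """Single-head scaled dot-product self-attention (toy version).

    Queries, keys and values are the raw rows (identity projections),
    and no positional encoding is added. Each row is one job.
    """
    d = len(rows[0])
    out = []
    for q in rows:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in rows]
        top = max(scores)  # subtract the maximum for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append([sum(w / total * v[i] for w, v in zip(weights, rows))
                    for i in range(d)])
    return out


def rnn_last_state(rows, decay=0.5):
    """Toy recurrence h_t = decay * h_{t-1} + x_t, read row by row."""
    h = [0.0] * len(rows[0])
    for x in rows:
        h = [decay * hi + xi for hi, xi in zip(h, x)]
    return h


jobs = [[1.0, 0.2], [0.3, 0.9], [0.5, 0.5]]  # hypothetical job features
perm = [2, 0, 1]                             # a re-indexing of the same jobs
shuffled = [jobs[i] for i in perm]

att = self_attention(jobs)
att_shuffled = self_attention(shuffled)

# Attention: each job keeps its own output, only the row position moves.
print(all(math.isclose(a, b, abs_tol=1e-9)
          for k, i in enumerate(perm)
          for a, b in zip(att_shuffled[k], att[i])))

# Recurrence: the final summary depends on the reading order.
print(rnn_last_state(jobs), rnn_last_state(shuffled))
```

Permuting the job rows only permutes the attention outputs, whereas the recurrent summary changes with the order in which the jobs are read.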

With the motivations above, we train this Transformer-based DRL agent with the proximal policy optimization (PPO) algorithm (Schulman et al., 2017) to minimize the total tardiness of PMSPs with family setup and new job arrival constraints. An overview of the proposed approach is shown in Fig. 1, and the contribution of this work is three-fold:

1. We present a more comprehensive state representation for the PMSP that considers not only the information of all jobs and the current idle machine but also the global state of all machines at the decision time point. This expanded representation enables the agent to make more globally optimal decisions. Meanwhile, this novel state representation retains scalability, allowing the agent trained on a small-size instance to be directly applied to large-size instances, which would be time- and resource-efficient.

2. We represent the DRL agent with an elaborately modified Transformer model featuring a two-head attention mechanism. The proposed agent can efficiently handle the multiple intertwined dependencies introduced by the novel state representation, which contains diverse information about machines and jobs and thus increases its complexity. Additionally, the agent can directly output the index of the selected job as the action, providing a direct scheduling process without relying on any pre-defined rules. This end-to-end framework not only leverages the potential of the data-driven method, but also reduces tedious manual work involved in defining and selecting rules. Furthermore, the Transformer-based agent processes jobs in parallel to ensure that the performance is not affected by the sequence of jobs in the matrix, as expected in industrial applications.

3. We validate the superiority of the proposed agent by comparing it with agents employing one-head and four-head attention mechanisms. We also execute extensive numerical experiments with various parameter configurations to demonstrate the generalization capability of the proposed agent. A learning-based method, a metaheuristic method, and a classic dispatching rule are included in the comparison to illustrate the superiority of the proposed approach. Moreover, an ablation experiment is conducted to illustrate the importance of the global information, where comparative DRL agents are trained without the state of all machines.

The remainder of this article is organized as follows. Section “Literature review” introduces the related works on solving dynamic scheduling problems. Section “Background” presents the background of RL and the Transformer model. Section “Problem formulation” formulates the mathematical model of the problem addressed in this paper. Section “Proposed approach” establishes the details of the proposed approach. Section “Numerical experiments” provides the results of numerical experiments. Section “Discussion” discusses the application of the proposed approach. The conclusions are finally drawn in Sect. “Conclusion”.

Literature review

Scheduling problems, including PMSP, involve assigning jobs to machines over time to optimize given objectives, such as minimizing total tardiness or makespan. This kind of problem is central to various application domains, for instance semiconductor manufacturing (Ghaedy-Heidary et al., 2024) and vehicle planning (Zhang et al., 2021). Challenges in these problems arise from diverse constraints such as new job arrivals, sequence-dependent setup times (SDST), and machine availability, and many of these problems are inherently NP-hard (Blazewicz et al., 1991), indicating that they do not have known polynomial-time solutions and can be computationally intensive to solve. Therefore, these problems have attracted considerable attention from researchers to develop innovative and efficient methods.

Non-RL-based methods

To obtain the optimal solution of a scheduling problem, many researchers formulate the problem as a mathematical model and solve it with optimization techniques. For instance, Heydari and Aazami (2018) address a job shop scheduling problem (JSP) under an SDST constraint considering two objective functions, namely makespan minimization and maximum tardiness minimization. They first mathematically describe the problem as a mixed integer nonlinear programming model and then convert it into a mixed integer linear programming (MILP) model. A set of Pareto optimal solutions is obtained by utilizing the \(\varepsilon \)-constraint method. Hu et al. (2024) consider an unrelated PMSP with a new job arrivals constraint to minimize the total tardiness. They formulate the problem as an MILP model and then solve it by employing a commercial solver. Optimal solutions are obtained on several small-scale instances with 20 jobs. However, due to the NP-hard nature of the scheduling problems, mathematical modeling-based solutions become extremely computationally intensive when tackling large-scale instances. Therefore, Hu et al. (2024) also develop a metaheuristic method based on neighborhood search for addressing large-scale instances with up to 200 jobs, on which the metaheuristic method outperforms the mathematical modeling-based method in terms of both solution quality and computational time.
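As a reminder of how the \(\varepsilon \)-constraint method produces Pareto optimal solutions, its generic form for two objectives can be written as below. Which of the two objectives is kept in the objective function and which is moved into the constraint is an assumption made here for illustration; \(f_1\), \(f_2\), and the feasible set \(X\) are generic placeholders rather than the notation of the cited work.

\[
\min_{x \in X} \; f_1(x) \qquad \text{s.t.} \qquad f_2(x) \le \varepsilon
\]

Solving this single-objective problem repeatedly for a range of values of \(\varepsilon \) yields one candidate trade-off solution per value, from which the Pareto set is collected.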

Under this motivation, metaheuristic methods are widely utilized for solving scheduling problems (Ezugwu, 2024; Pellerin et al., 2020; Para et al., 2022). Rolf et al. (2020) propose a genetic algorithm (GA) to assign a given set of scheduling rules to several specific time points during the scheduling process. The combination of rules provided by this GA method can outperform any of the composite dispatching rules in solving the addressed hybrid flow shop scheduling problem. Although metaheuristics are less computationally intensive than the mathematical modeling-based approach, they rely on numerous iterations to yield an appropriate solution for one instance, restricting their application in scenarios requiring immediate decision-making.

To obtain a real-time solution, many rule-based approaches are developed. Xiong et al. (2017) propose four dispatching rules for a dynamic JSP with the consideration of extended technical precedence constraints and the due date tightness. The proposed rules have promising performance when the due date is relatively loose. Didden et al. (2023) take the entire manufacturing system into consideration by utilizing multiple agents, where the release of jobs, their assignment on the machines, and their sequencing within the queue are all realized by the respective agents through different rules. Meanwhile, they also propose several algorithms to learn weights for proper linear combinations of the rules. In the computational experiments, their proposed multi-agent system (MAS) outperforms other multi-agent systems and common dispatching rules in several performance measures, including minimizing mean weighted tardiness. However, due to the pre-defined nature of rule-based approaches, they get comparatively poor results on instances with different environment settings, for example different due date tightness. This inability to respond to changes limits their application in variable manufacturing environments. In addition, designing a new rule or selecting a new combination of rules for new environments requires substantial expert knowledge and leads to tedious work.
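To make the notion of a dispatching rule concrete, the following minimal sketch implements the classic earliest due date (EDD) rule on identical parallel machines and evaluates the resulting total tardiness. It is a generic textbook rule, not one of the rules proposed in the cited works; family setups and dynamic job arrivals are deliberately ignored, and the function name and job data are hypothetical.

```python
def edd_total_tardiness(jobs, num_machines):
    """Schedule jobs by earliest due date and return the total tardiness.

    jobs: list of (processing_time, due_date) tuples, all available at time 0.
    Each job is placed on the machine that becomes free first.
    """
    free_at = [0.0] * num_machines
    total_tardiness = 0.0
    for proc, due in sorted(jobs, key=lambda job: job[1]):
        machine = min(range(num_machines), key=lambda i: free_at[i])
        free_at[machine] += proc                      # completion time of this job
        total_tardiness += max(0.0, free_at[machine] - due)
    return total_tardiness


jobs = [(4, 5), (3, 4), (2, 9), (5, 6)]  # hypothetical (processing time, due date)
print(edd_total_tardiness(jobs, num_machines=2))  # 2.0
```

The rule is fixed in advance: it sorts by due date regardless of processing times or machine load, which is why its quality can vary strongly between environments.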

Therefore, approaches that can provide appropriate solutions in real time under dynamic environments are highly desired.

RL-based methods

In contrast to non-RL-based approaches, RL-based approaches learn a policy through interaction with the environment, enabling them to provide proper results in real time and to respond to environment changes immediately.

Over the last half decade, the application of RL techniques for solving scheduling problems has been the subject of many publications. The extensive research in this field is also reflected by a multitude of systematic literature reviews covering RL for scheduling in general (Kayhan & Yildiz, 2023; Shyalika et al., 2020) or with a focus on specific application domains, for instance production and smart manufacturing (Esteso et al., 2023; Li et al., 2023; Panzer & Bender, 2022; Waubert de Puiseau et al., 2022; Panzer et al., 2021; Wang et al., 2021), maintenance and repair (Ogunfowora & Najjaran, 2023), supply chain management (Rolf et al., 2023), cloud computing (Hou et al., 2024; Zhou et al., 2021), wireless networks (Hurtado Sánchez et al., 2022; Frikha et al., 2021; Erick & Folly, 2020; Yau et al., 2013) or power systems and smart grids (Yu et al., 2021; Arwa & Folly, 2020; Zhang et al., 2019, 2018).

In view of the large number of existing literature analyses, a detailed review of RL applications for scheduling problems seems redundant and would go beyond the scope of this paper. In accordance with the topic of this paper, we focus our literature review on the following two aspects: (1) solving the PMSP with RL, and (2) deploying RL-trained Transformer models for scheduling problems in general. With these two perspectives, our approach not only demonstrates significant improvements in solving PMSP with RL but also offers valuable insights into the utilization of Transformer models for addressing a wide range of scheduling challenges.

RL methods for computing the PMSP

We first review the applications of RL techniques to the PMSP based on a recent survey article by Kayhan and Yildiz (2023). Their analyses include and even go beyond those of other review articles that examine RL for the PMSP in detail, such as the work of Wang et al. (2021).

In summary, Kayhan and Yildiz (2023) investigate 13 publications describing various RL techniques for computing different variants of the PMSP. The majority of publications consider some PMSP with unrelated machines (Csáji & Monostori, 2005; Zhang et al., 2007; Iwamura et al., 2009; Palombarini & Martínez, 2009; Zhang et al., 2011; Palombarini & Martínez, 2012a, b; Zhang et al., 2012; Ábrahám et al., 2019; Zhou et al., 2020), two publications a PMSP with identical machines (Yuan et al., 2013, 2016), and only one publication a PMSP with uniform machines (Guo et al., 2020). More precisely, the problems analyzed are subject to different constraints. These include machine breakdowns (Csáji & Monostori, 2005; Palombarini & Martínez, 2012a, b; Yuan et al., 2016), precedence constraints (Csáji & Monostori, 2005; Zhang et al., 2011; Ábrahám et al., 2019) and SDST (Zhang et al., 2007, 2011; Palombarini & Martínez, 2012b). Furthermore, different objective functions are taken into account, such as makespan (Csáji & Monostori, 2005; Ábrahám et al., 2019; Zhou et al., 2020), total tardiness (Palombarini & Martínez, 2009, 2012a, b), weighted total tardiness (Zhang et al., 2007, 2012), total costs (Zhang et al., 2011) or some multi-criteria objective function (Iwamura et al., 2009; Yuan et al., 2013, 2016; Guo et al., 2020). Looking at the applied RL technique, most publications rely on some Q-Learning strategy (Csáji & Monostori, 2005; Zhang et al., 2007; Iwamura et al., 2009; Zhang et al., 2012; Ábrahám et al., 2019; Zhou et al., 2020; Yuan et al., 2013, 2016; Guo et al., 2020). Only a few publications analyze other RL algorithms, such as SARSA (Zhang et al., 2011) or relational RL strategies (Palombarini & Martínez, 2009, 2012a, b).

Another interesting aspect is how exactly the trained RL agent solves the corresponding PMSP, i.e., how the actions of an agent construct and manipulate a schedule. The vast majority of publications (Zhang et al., 2007, 2011, 2012; Zhou et al., 2020; Yuan et al., 2013, 2016; Guo et al., 2020) describe an agent that constructs a schedule indirectly by selecting a heuristic, for instance a dispatching rule, from a set of heuristics, which in turn determines the next scheduling decision (e.g., selecting a job to be processed on an idle machine). The remaining publications tend to describe individual solutions. In one reported approach, the agent assigns tasks of jobs to machines, i.e., each action is associated with a machine (Csáji & Monostori, 2005), while another publication suggests training an agent that selects in each time step a parameter from a set of parameters to compute a utility function, which in turn prioritizes the selection of job operations and machines (Iwamura et al., 2009). Three publications, which, however, stem from the same main author, describe an approach in which an RL-trained agent repairs infeasible schedules by selecting from a set of predefined repair operations (Palombarini & Martínez, 2009, 2012a, b). Only one publication presents an agent that selects the next task of a job to be processed on an idle machine (Ábrahám et al., 2019), making it comparable to the approach described in this article. However, since the authors use tabular Q-learning, the state and action space is tailored exactly to one problem instance, which makes it difficult to apply the learned strategy to problem instances of different dimensions.
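The instance dependence of tabular Q-learning can be seen from the update rule itself. The sketch below shows the standard Q-learning update on a dictionary keyed by (state, action) pairs. The state encoding (a tuple of waiting job ids), the action (the id of the job started next), and the reward value are hypothetical choices for illustration and are not taken from the cited publications.

```python
def q_update(q, state, action, reward, next_state, next_actions,
             alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning step and return the updated value.

    Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))
    Unseen (state, action) pairs are treated as having value 0.
    """
    best_next = max((q.get((next_state, a), 0.0) for a in next_actions),
                    default=0.0)
    old = q.get((state, action), 0.0)
    q[(state, action)] = old + alpha * (reward + gamma * best_next - old)
    return q[(state, action)]


q_table = {}
# Hypothetical transition: jobs 0, 1, 2 are waiting, job 1 is started,
# and a reward of -3.0 (e.g. negative tardiness) is observed.
q_update(q_table, state=(0, 1, 2), action=1, reward=-3.0,
         next_state=(0, 2), next_actions=[0, 2])
print(q_table)
```

Because every table entry is indexed by a concrete state and action, values learned for one set of jobs and machines have no counterpart in an instance with a different number of jobs or machines.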

RL-based approaches also yield promising achievements in solving real-world PMSPs. Rodríguez et al. (2022) consider the scheduling problem in the context of predictive maintenance for a set of parallel machines. To minimize the machine breakdown time and prevent failure, they propose a DRL-based approach for technician scheduling. A deep neural network, in particular a Multi-Layer Perceptron (MLP), is utilized to represent the agent, which can select a proper technician for the current maintenance task based on the availability and skills of each technician. Zhang et al. (2021) model the electric vehicle (EV) charging scheduling problem as a PMSP and employ a DRL-based approach to minimize the total charging time. The agent can assign a suitable charging station to each EV based on the availability of all stations. This approach can significantly reduce the total charging time of all EVs in comparison with two heuristic-based baselines. Paeng et al. (2021) investigate the PMSP with a family setup constraint in a semiconductor manufacturing environment. To be more specific, they train an MLP with an RL algorithm to minimize the total tardiness. The trained network computes five matrices consisting of respectively five sets of statistical information, based on which it selects a suitable family of jobs. The particular selection of jobs inside the selected family is executed by a dispatching rule. Their method exhibits significant advantages over rule-based and metaheuristic methods on eight given datasets.

However, although the aforementioned works demonstrate advantages over non-learning-based methods with respect to efficiency and quality, they still encounter limitations. First, most of them cannot perform the scheduling process directly. Instead, they rely on a set of predefined dispatching rules as actions for outputs, and on statistical information derived from the scheduling environment as states for inputs. The combination of dispatching rules cannot cover all possible solutions and is not guaranteed to cover the optimal solution, while the statistical information does not provide a complete description of the whole scheduling environment. Therefore, the potential of RL as a data-driven method is not fully leveraged in this non-end-to-end manner. Moreover, this kind of RL method offers restricted flexibility, since the set of dispatching rules may need to be re-selected when applied to a different scheduling environment, which requires extensive domain knowledge and leads to tedious human effort.

Second, despite the fact that some RL-based methods can schedule directly, they are usually not flexible enough to handle problems of arbitrary scale. These methods need to be re-designed and re-trained when being applied to larger instances with more machines or jobs, since they are implemented through pre-defined tables or neural networks with fixed dimensions, i.e., MLPs. The re-training process is highly time-consuming and hence restricts their practical applicability in diverse and evolving production environments. The only work that can directly solve PMSP instances of any scale is proposed by Li et al. (2024) based on the framework of Lang et al. (2019). However, as elaborated in Sect. “Introduction”, this work does not take the global information of the scheduling environment into consideration, and the manner in which it processes the state matrix does not align with the nature of PMSPs.

To this end, we develop a novel DRL-based framework with high scalability for solving the proposed PMSP, where the scheduling process is executed end-to-end and the complete information of the environment is considered. A Transformer model is employed to represent the DRL agent. The upper part of Table 1 summarizes all the aforementioned literature on applying RL to PMSP and provides a comparison with our method.

To further emphasize the novelty of our work and clarify the research gap, in the next subsection, we will briefly discuss whether and in which context RL-trained Transformer models have already been investigated for scheduling problems in general.

Transformer-based RL methods for computing general scheduling problems

Compared to the general literature on RL for scheduling problems, the application of Transformers for scheduling problems is still in its infancy. Against this background, we could identify only seven publications describing the application of RL-trained Transformer models for computing scheduling problems. Due to the scarcity of literature and the lack of detailed review articles, we discuss the papers on the use of RL-trained Transformers for scheduling problems in more detail.

Chen et al. (2022) present an RL-trained Transformer, which is trained on the selection of dispatching rules in a dynamic job shop scheduling problem. The input of the Transformer is a disjunctive graph describing the scheduling problem. A particularity of the implementation is that the Transformer is capable of extracting and generating higher-level features of the disjunctive graph representation to facilitate scheduling decisions. The objective is to minimize the makespan. Results show that the makespan is on average 11.67% smaller compared to single dispatching rules, metaheuristics and non-Transformer RL strategies.

Wang et al. (2022) consider a cloud manufacturing scheduling problem, in which an agent allocates manufacturing tasks to enterprises, while optimizing a multi-criteria objective function including the minimization of makespan and total cost as well as the maximization of the reliability. A state comprises the task to be allocated at the current point in time, described by several attributes, as well as attributes of all enterprises to which the task can be allocated. A notable particularity of the authors' approach is that it utilizes the multi-criteria objective function as the reward function, which in turn implies that engineering a separate time-step-based reward function is not necessary.

Xu and Zhao (2022) investigate a cloud job scheduling problem, in which jobs composed of different tasks must be allocated to computing resources. In particular, each task of each job must be allocated in three stages. First, each task must be allocated to a data center. Second, each task must be allocated to a server node within the selected data center. Third, each task must be allocated to an application container of the selected server node. The authors present a multi-agent RL approach, in which allocation decisions of each stage are conducted by a separate agent. Thus, the agent’s actions are associated with data centers, server nodes, and application containers, respectively. The objective is the minimization of the total energy costs. Each agent is not represented by a whole Transformer model, but incorporates a self-attention layer within its model architecture. The purpose of the self-attention layer is to learn key features from the raw input states. A state comprises the CPU, RAM and hard drive capacity of each application container, server node, and data center, respectively.

Zhao et al. (2022) present an RL-trained Transformer and evaluate its performance on a job shop scheduling problem, with the objective of minimizing the makespan. Here, the actions of the agent point to the available jobs that can be processed in the next time step, while a state comprises multiple attributes of all available jobs. The authors' approach performs on average better than several dispatching rules and a GA. In three problem instances, the RL-trained Transformer is even able to compute the optimal solution.

Chen et al. (2023) train a Transformer with RL to compute solutions for a job shop scheduling problem. A special aspect of their implementation is that, analogous to the work of Chen et al. (2022), the complete problem is described as a disjunctive graph and considered as a single, forward propagable state. Thus, the intention of using a Transformer is to autonomously derive significant features from the problem data to determine appropriate scheduling decisions. The output of the agent is a global sequence of tasks from which the local processing sequence of tasks on each machine can be derived. The Transformer model outperforms multiple dispatching rules and a Tabu search algorithm. For large-scale problems, the authors' approach also performs better than Google OR-Tools.

Chen et al. (2023) consider a cloud edge task scheduling problem comparable to the problem in the paper of Wang et al. (2022). The authors aim to optimize a multi-criteria objective function including the minimization of the production lead time, production costs, and the variance of utilization as well as the maximization of the work rate of scheduling resources. To do so, the agent observes a one-dimensional vector as a state, which contains, for instance, information about the locations of scheduling resources, the number of unfinished scheduling tasks, and the number of scheduling tasks currently in process. For each observed state, the agent selects a dispatching rule from a set of 9 dispatching rules. The agent comprises self-attention layers to extract additional features from a concatenated vector consisting of the current state as well as the selected action and the received reward, both from the last time step. The authors state that their approach solves the cloud edge task scheduling problem more effectively in comparison to single dispatching rules and other RL algorithms.

Song et al. (2023) investigate an RL-trained Transformer for minimizing the makespan in a dynamic job shop scheduling problem as in the work of Chen et al. (2022). In particular, the agent selects in each time step a dispatching rule from a set of 14 dispatching rules to determine scheduling decisions. As in the aforementioned papers (Chen et al., 2022, 2023), a Transformer model is used to autonomously generate states from the disjunctive graph representation of the scheduling problem. The reward function compares the utilization of machines in the current and in the previous time step, where an increase of the utilization is rewarded and a decrease is penalized. The authors' approach outperforms the use of single dispatching rules, a GA and other reinforcement learning methods by 15.93% on average.

The literature on solving scheduling problems in general utilizing Transformer-based RL methods is summarized in the lower part of Table 1. It is evident that these works still do not simultaneously break through the two limitations stated above.

The first limitation is from the perspective of direct scheduling, i.e., end-to-end scheduling. In particular, many approaches likewise execute the scheduling process in an indirect fashion by employing a set of dispatching rules as the action space. As we discussed in the previous subsection, this action representation does not leverage the data-driven nature of the learning-based approach. The selection of dispatching rules requires extensive domain knowledge, while the selected combination is not guaranteed to cover the optimal solution. Additionally, several works apply Transformer-based RL for solving JSP. Instead of utilizing the raw features of the scheduling environment, these problems are typically formulated as disjunctive graphs, which describe the complex sequences of operations of jobs across multiple machines. However, such a formulation is less applicable to PMSP, since the challenges of PMSP lie in efficient job allocation rather than operation sequencing. Moreover, the process of setting up this graph-based model requires significant domain expertise, increasing complexity in application.

The second limitation is from the perspective of scalability, namely whether the method can be applied without retraining to environments with more machines and jobs. Several works rely on a state representation whose dimension depends on the number of jobs or machines, and therefore require time-consuming re-training when being applied to significantly larger instances. In modern dynamic manufacturing environments, this representation restricts the application of the RL-based approach. It is worth noting that the work of Zhao et al. (2022) has realized a certain scalability by proposing a state matrix representation with a variable number of rows. In particular, each row corresponds to the features of an available job and the approach can therefore process instances with any number of jobs. However, to embed the machine-related information into the state representation, the dimension of the job features depends on the number of machines. When the practical scheduling environment has more machines than the predefined dimension allows, the tedious re-training process is inevitable.

Motivated by these two limitations, we first develop a state representation that can adapt to instances with arbitrary numbers of jobs and machines. The state contains the raw features of all available jobs and machines, providing a global perspective for decision-making. To process the state matrix appropriately, we modify the Transformer model to represent the RL agent. The agent automatically maps the raw information of the scheduling environment to the priority of each job, which enables end-to-end scheduling.

Background

In this section, we briefly review the basic concepts related to RL and Transformer, which form the foundation of our proposed framework.

Basic concepts in RL

RL represents a promising branch of machine learning, concentrating on learning an appropriate policy through interaction with the environment to attain predefined objectives. The environments for training the RL agent are commonly formulated as a Markov Decision Process (MDP), offering a structured mathematical framework to describe and analyze decision-making scenarios.

An MDP can be framed as a five-tuple representation \((S, A, P, R, \gamma )\), where S is a set of all states representing the different conditions that the agent can encounter in the environment, A is a set of actions that the agent can execute, P is the transition probability function describing the likelihood of state transition under a given action, R is the reward function that assigns the reward to the agent for each state transition due to an action, \(\gamma \) is the discount factor that balances immediate versus long-term rewards.
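To make the role of the discount factor \(\gamma \) concrete, the following minimal sketch computes the discounted cumulative reward of one finite episode. The function name and the example reward sequence are illustrative assumptions and do not come from the original formulation.

```python
def discounted_return(rewards, gamma):
    """Return sum_t gamma**t * rewards[t] for one finite episode."""
    total = 0.0
    # Walk backwards so that each step applies one multiplication by gamma.
    for r in reversed(rewards):
        total = r + gamma * total
    return total


# Hypothetical episode: a reward of -4 arrives only at the last step.
print(discounted_return([0.0, 0.0, -4.0], gamma=0.5))  # -1.0
```

With a smaller \(\gamma \), the late reward contributes less to the return seen from the first state, which is why sparse terminal rewards are hard to learn from.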

The RL agent interacts with the MDP-formulated environment under a particular policy \(\pi \). To evaluate the policy, the state value function V is a crucial tool (Sutton & Barto, 2018).

It is defined for each state and represents the expected cumulative reward the agent can achieve starting from that state while following policy \(\pi \). The value function for state s following policy \(\pi \) can be mathematically formulated as:

where \(\pi (s_t)\) is the action taken by the agent in state \(s_t\) and can be denoted as \(a_t\). The goal of RL is to find an optimal policy that maximizes the discounted cumulative reward \(J(\pi )\) and is given by the following expression:

Basic concept of Transformer

The Transformer model was proposed by Vaswani et al. (2017) and has achieved state-of-the-art performance in various fields, such as natural language processing (Ouyang et al., 2022), computer vision (Dosovitskiy et al., 2020), and life science (Rives et al., 2021). Unlike traditional models that rely on recurrent layers, the Transformer utilizes a mechanism called 'attention' to process variable-length sequential data, which allows for greater parallelization (Lin et al., 2022).

Figure 2 (left) depicts the attention mechanism, illustrating the transformation of input sequence X into output sequence Y. Prior to entering the attention mechanism, each element of the input sequence X is transformed into three distinct vectors (query Q, key K, and value V) by being multiplied with the respective weight matrices as follows:

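Subsequently, the attention mechanism processes these vectors to compute the output Y, which can be mathematically expressed by the following equation:

\[ Y = \mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left( \frac{QK^{T}}{\sqrt{d_K}}\right) V \]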

where \(d_K\) is the dimension of the key vector K. The term \(\frac{1}{\sqrt{d_K}}\) serves as a scaling factor, effectively normalizing the dot product to prevent excessively large values, thereby maintaining numerical stability in the attention scores.
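The scaled dot-product attention described above can be sketched in plain Python as follows. This is a minimal illustration on nested lists, assuming that Q, K, and V have already been computed; the function names are chosen here for readability and are not taken from the original implementation, which is built in PyTorch.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention for row-major lists of vectors."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # Scaled similarity of this query with every key.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Output row: attention-weighted average of the value vectors.
        out.append([sum(w * v[c] for w, v in zip(weights, V))
                    for c in range(len(V[0]))])
    return out


# Two identical keys receive equal weight, so the output is the mean of V.
print(attention([[1.0, 0.0]], [[1.0, 1.0], [1.0, 1.0]], [[1.0, 2.0], [3.0, 4.0]]))
# [[2.0, 3.0]]
```

Because the output has one row per query regardless of how many keys exist, the same code handles input sequences of any length.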

The multi-head attention mechanism is particularly beneficial for processing sequences with multiple dependencies. As demonstrated in Fig. 2 (right), the Q, K and V vectors are segmented into multiple heads, where each head independently performs the aforementioned attention function. The outputs from these heads are then concatenated to form the final result. The mathematical expression of the multi-head mechanism is given as follows:

Here, h represents the total number of heads, and the ith head, denoted as \(head_i\), is calculated using Attention(Q, K, V). This segmentation into multiple heads allows the model to capture a more diverse range of information from the input sequence, enhancing its ability to understand and represent complex relationships within the data.

Problem formulation

In this study, we address a complex variant of the PMSP that incorporates the dynamic arrival of new jobs along with family setup constraints. The problem is first described in Sect. “Problem description” and a mathematical model is given in Sect. “Mathematical model”.

Problem description

PMSP involves assigning n independent jobs to m parallel operating machines. The set of jobs and machines can be represented as \(J=\{J_1,\ldots J_j,\ldots , J_n\}\) and \(M = \{M_1, \ldots M_i,\ldots ,M_m\}\), respectively. Each job \(J_j\) has an individual processing time \(p_j\) and a due date \(d_j\), while each machine \(M_i\) possesses a specified processing speed \(v_i\). The effective processing time for job \(J_j\) on machine \(M_i\) is thus calculated as \(p_j/v_i\). If a job is completed after its due date, it incurs tardiness. The objective of this work is to optimize the assignment of jobs to machines so as to minimize the sum of tardiness incurred on all jobs, which is referred to as total tardiness and denoted as TT. For a given set of jobs, the TT can be calculated as follows:

\[ TT = \sum _{j=1}^{n} \max (0, C_j - d_j) \]

where \(C_j\) is the completion time of job \(J_j\). Figure 3 demonstrates a small instance of PMSP that contains only two machines and five jobs. The length of each block indicates the processing time of the corresponding job, and the due date of each job is marked on the horizontal axis. In the shown schedule, tardiness occurs on Job 5.
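As a small worked example of the total tardiness, the sketch below sums the positive lateness \(\max (0, C_j - d_j)\) over all jobs. The completion times and due dates are hypothetical values chosen for illustration; they are not read off Fig. 3.

```python
def total_tardiness(completion, due):
    """Sum of max(0, C_j - d_j) over all jobs."""
    return sum(max(0.0, c - d) for c, d in zip(completion, due))


# Hypothetical five-job schedule in which only the last job is late.
completion = [3.0, 5.0, 6.0, 9.0, 12.0]
due = [4.0, 6.0, 8.0, 10.0, 10.0]
print(total_tardiness(completion, due))  # 2.0
```

Jobs that finish early do not offset late jobs, since earliness is clipped to zero.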

Furthermore, to obtain a more realistic representation of modern manufacturing environments, we take the following two constraints into consideration:

1. The new job arrivals constraint. In modern dynamic manufacturing environments, new jobs can arrive unpredictably, necessitating real-time adjustments to the schedule. Under this constraint, each job \(J_j\) is associated with a release date \(r_j\), indicating that the job can only be processed after its release date.

2. The family setups. In real manufacturing environments, jobs are usually categorized into several families according to their characteristics, where sequentially processing two jobs from different families requires an additional setup time in between (Liaee & Emmons, 1997). The number of families is denoted as \(N_f\) and the family of job \(J_j\) is expressed as \(f_{J_j}\). The family of the previous job processed by machine \(M_i\) is termed its setup state and is denoted as \(f_{M_i}\). Figure 4 shows the previous instance under the constraint of family setups, where the five jobs are divided into two families. Two additional setup times are required before the processing of Job 4 and Job 5, respectively. Moreover, these two setup times lead to a new tardiness on Job 4 and an increased tardiness on Job 5.
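The combined effect of release dates, family setups, and machine speeds on a job's completion time can be sketched as follows. The setup time of 10 is the value given for \(S_{jg}\) in the mathematical model; the dictionary keys and the assumption that a setup begins only once the machine is free and the job has been released are illustrative choices, not statements from the original text.

```python
SETUP_TIME = 10.0  # setup between jobs of different families (value of S_jg)


def completion_time(machine_free_at, machine_family, speed, job):
    """Completion time of `job` if it is processed next on the machine.

    `job` is a dict with keys 'p' (processing time), 'r' (release date)
    and 'family'. Assumption: the setup starts when both the machine is
    free and the job has been released.
    """
    start = max(machine_free_at, job["r"])
    setup = 0.0 if job["family"] == machine_family else SETUP_TIME
    # Effective processing time is p_j / v_i.
    return start + setup + job["p"] / speed


job = {"p": 8.0, "r": 7.0, "family": 2}
print(completion_time(5.0, 1, 2.0, job))  # 21.0 (start 7 + setup 10 + 8/2)
print(completion_time(5.0, 2, 2.0, job))  # 11.0 (no setup needed)
```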

This problem formulation, combining the dynamic nature of job arrivals with family setups constraints, presents a realistic and challenging scenario typical in modern manufacturing and service systems, reflecting the intricacies of scheduling in a globalized, dynamic environment. To maintain focus on the core aspects of this challenge without losing the essence of the problem, we introduce the following simplifications:

1. Each machine can immediately start to process a job after the setup is finished.

2. Each machine can process only one job at a time, and each job can be processed on only one machine.

3. There is no moving time for the jobs.

Mathematical model

Then we mathematically describe the PMSP tackled in this paper based on the mixed integer formulation developed by Avalos-Rosales et al. (2015).

Although the proposed DRL approach solves the problem in a model-free manner, which does not inherently rely on a precise mathematical model (Schulman et al., 2017), we still present the mathematical model for two reasons. First, it provides an exact definition of the scope of our problem and clarifies the parameters, constraints, and objective. Second, this precise formulation aids in standardizing the problem across various studies, establishing a solid foundation for follow-up research that aims to explore alternative solutions or enhancements.

The notations required for modeling are listed below.

1. Parameters:

- n: total number of jobs
- m: total number of machines
- j, g: index of jobs, \(j,g = 1,2,\ldots ,n\)
- i, k: index of machines, \(i,k = 1,2,\ldots ,m\)
- J: the set of jobs
- M: the set of parallel machines
- \(p_j\): the processing time of job \(J_j\)
- \(r_j\): the release date of job \(J_j\)
- \(d_j\): the due date of job \(J_j\)
- \(v_i\): the processing speed of machine \(M_i\)
- \(S_{jg}\): setup time for job \(J_g\) when it immediately follows job \(J_j\) (equal to 0 if \(J_g\) and \(J_j\) come from the same family, equal to 10 otherwise)
- V: a sufficiently large constant

2. Decision variables:

- \(C_j\): the completion time of job \(J_j\)
- \(X_{ijg}\): 1 if \(J_j\) is a predecessor of \(J_g\) on machine \(M_i\), 0 otherwise
- \(Y_{ij}\): 1 if job \(J_j\) is assigned to machine \(M_i\), 0 otherwise

Moreover, to support the problem formulation, a dummy job is introduced at the start and end on each machine, and \(J^{\prime }\) denotes the set of jobs that includes J and the dummy jobs. The processing times and setup times related to the dummy jobs are considered 0. Our model can therefore be stated as:

Objective function:

Subject to:

Objective (7) minimizes the total tardiness of the solution. Constraint (8) imposes that each job is assigned to one and only one machine. Constraints (9) and (10) ensure that each job on the machine it is assigned to has one and only one predecessor and one successor, respectively. Constraint (11) establishes that at most one job is scheduled as the first job on each machine. Constraint (12) makes sure that a job can only be processed after its arrival time. Constraint (13) forbids overlapping among the jobs with respect to family setups and machine speeds. Constraint (14) sets the completion time of the dummy job at the start on each machine to 0. Constraints (15)–(17) define the domain of the variables.

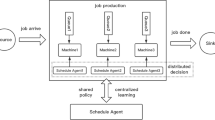

Proposed approach

In this section, the details of the proposed Transformer-based DRL approach are successively provided, including the state and action representations, the structure of the neural network that represents the DRL agent, the reward function, and the architecture of the PPO algorithm for training.

State representation

The state representation is designed based on the scheduling framework of the problem illustrated in Fig. 5. According to the framework, the state is discrete and the decision point is defined as every time a machine becomes idle. State transition occurs when a job is selected for this current idle machine.

To enable the proposed approach to conduct the scheduling process in an end-to-end fashion, the raw features of all available jobs and all machines should be contained in the state matrix. The job features and machine features are shown in Table 2, where the machine feature running time \(\tau \) is computed by adding the current time t to the remaining processing time of the job being processed on the corresponding machine. It is worth noting that \(\tau _i = t\) if \(M_i\) is idle at time point t.

Meanwhile, to allow the approach to process instances of arbitrary scale, we design the state matrix to be variable-length. Li et al. (2024) proposed a variable-length state representation, which is summarized in Fig. 6. The number of rows is equal to the number of available jobs, where each row contains the values of the features of the corresponding job and the values of the features of the machine that is idle at that decision time point.

However, this state representation only includes the idle machine at each decision time point and omits the other working machines, which can result in myopic decisions. To this end, we propose a novel variable-length state representation, which is demonstrated in Fig. 7. As shown in the figure, a new matrix containing the information of all machines is concatenated to the matrix in Fig. 6. We apply zero padding to the matrix with all machine information to make the number of columns of the two matrices equal. Since there are \(n_t\) available jobs and m machines at time t, and each job and machine can be described by 3 features, the shape of the state matrix at time t is \((n_t + m) \times (3+3)\). Additionally, the number of rows decreases with the selection of jobs and increases with the arrival of new jobs.

This expansion of the state matrix introduces an additional dependency, capturing not only the interactions among jobs but also the relationships between jobs and machines. This more comprehensive view of the scheduling environment allows for a richer and more global understanding of the decision-making landscape.
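The construction of the \((n_t + m) \times (3+3)\) state matrix can be sketched as below. This is a minimal illustration under stated assumptions: each job and machine is given as a list of three numeric features in some fixed order (the concrete features are those of Table 2), and the zero padding is placed on the right-hand side of the machine rows. The function name and the example values are hypothetical.

```python
def build_state(jobs, machines, idle_index):
    """Assemble the (n_t + m) x 6 state matrix as nested lists.

    jobs:     list of 3-feature lists, one per available job
    machines: list of 3-feature lists, one per machine
    """
    idle = machines[idle_index]
    # Upper block: job features next to the features of the idle machine.
    job_rows = [list(job) + list(idle) for job in jobs]
    # Lower block: features of every machine, zero-padded to six columns.
    machine_rows = [list(mach) + [0.0] * 3 for mach in machines]
    return job_rows + machine_rows


# Hypothetical snapshot with two available jobs and two machines.
jobs = [[5.0, 30.0, 1.0], [8.0, 25.0, 2.0]]
machines = [[1.0, 1.0, 12.0], [2.0, 2.0, 17.0]]
state = build_state(jobs, machines, idle_index=0)
print(len(state), len(state[0]))  # 4 6
```

Adding or removing a job changes only the number of rows, so the column dimension seen by the network stays fixed at six.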

Action representation

To realize end-to-end scheduling, the agent should calculate a priority for each job at each decision time point, based on which the job can be selected directly without any pre-defined rules. Therefore, we define the action representation as the priorities of all available jobs in the current environment. Assuming that the number of available jobs at time point t is \(n_t\), the action of the DRL agent is an \(n_t\)-dimensional vector. Just like the state space, the action space also decreases with the selection of jobs and increases with the arrival of new jobs.

Then we apply the Softmax function to the output of the DRL agent to convert the priorities into a probability distribution. In the training process, we conduct the job selection based on this probability distribution, where a job with a higher priority has a higher probability of being selected. During testing, the agent greedily selects the job with the highest priority.
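The difference between stochastic selection during training and greedy selection during testing can be sketched as follows. The function names and the `rng` parameter are illustrative assumptions; the original agent is implemented in PyTorch, whereas this sketch uses only the Python standard library.

```python
import math
import random


def job_probabilities(priorities):
    """Softmax that turns job priorities into selection probabilities."""
    m = max(priorities)
    exps = [math.exp(p - m) for p in priorities]
    total = sum(exps)
    return [e / total for e in exps]


def select_job(priorities, training, rng=random):
    """Return the index of the selected job."""
    probs = job_probabilities(priorities)
    if training:
        # Sampling keeps exploration alive during training.
        return rng.choices(range(len(probs)), weights=probs, k=1)[0]
    # Greedy choice at test time: the job with the highest priority.
    return max(range(len(probs)), key=probs.__getitem__)


print(select_job([0.1, 2.0, -1.0], training=False))  # 1
```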

Training algorithm and neural network structure

In this work, we adopt the PPO algorithm (Schulman et al., 2017) as the learning algorithm, which is realized by an actor-critic architecture. The actor network takes the current state as input and then executes the previously mentioned task of calculating the priority for each available job. The critic network also takes the current state as input but estimates the value of the state under the current policy, which is a scalar and is utilized for updating the actor network. Algorithm 1 illustrates the interaction mechanism between the actor network and the critic network.

We leverage the Transformer model (Vaswani et al., 2017) to construct both the actor network and the critic network, since it can process variable-length matrices in a parallel manner. To be more specific, the Transformer model utilized in this work employs solely the encoder component and discards the positional encoding. This modification ensures that the order of jobs in the state matrix does not impact performance, which is key in applying the Transformer model to the PMSP.

The architecture of the actor network is shown in Fig. 8. In the actor network, the Transformer model is composed of \(N_{Block}\) k-dimensional Transformer blocks. These Transformer blocks encode the state matrix into an encoded matrix with an unchanged number of rows and k columns. Then, to obtain the priority of each job, we utilize a Multi-Layer Perceptron (MLP) to map the encoded matrix from k-dimensional to 1-dimensional. Since only the first \(n_t\) rows of this matrix correspond to the job information, we apply a mask operation on the last m rows to filter out the redundant machine information. Note that this mask operation will not lead to myopic behavior, since the machine information has already been encoded into the whole matrix by the Transformer model and is taken into account in the jobs' priorities. Finally, we use the Softmax function to convert the priority vector into a probability distribution, where a job with a higher priority has a higher probability of being selected. The final output of the actor network is the index of the selected job that is sampled from this distribution.
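One common way to realize such a mask is to set the scores of the machine rows to negative infinity before the Softmax, so that they receive zero probability. The sketch below illustrates this idea; it is an assumption about how the mask could be implemented, not a description of the original code, and the function name is hypothetical.

```python
import math


def masked_job_distribution(scores, n_jobs):
    """Softmax over the first n_jobs scores; machine rows get probability 0.

    scores: one scalar per row of the encoded matrix (n_jobs + m entries).
    """
    masked = [s if i < n_jobs else -math.inf for i, s in enumerate(scores)]
    m = max(masked[:n_jobs])
    exps = [math.exp(s - m) for s in masked]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]


# Three job rows followed by two machine rows.
probs = masked_job_distribution([0.5, 1.5, -0.2, 3.0, 2.0], n_jobs=3)
print(probs[3:])  # [0.0, 0.0]
```

Even though the machine rows carry large raw scores in this example, they can never be sampled as an action.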

Figure 9 presents the architecture of the critic network. The Transformer model implemented in the critic network has the same architecture as in the actor network. Since the final output of the critic network is a scalar that indicates the value of the corresponding state, we straightforwardly sum the encoded matrix by columns to get a k-dimensional vector. Then the vector is mapped to the state value by an MLP.

It is crucial to note that the proposed new state matrix contains two distinct dependencies as described previously, providing global information while also presenting challenges for processing. To process this state matrix appropriately, we leverage the multi-head attention mechanism in the Transformer model.

Two perspectives on the relationships need to be considered when selecting the most suitable job for the current idle machine. The first perspective is how each job is influenced by other jobs, involving the assessment of factors like processing times, due dates and the relative urgency between jobs. The second perspective is the interactions between jobs and machines. This perspective involves considering the impact of machine allocation on overall scheduling efficiency, for instance, determining whether a job suitable for a currently idle machine might be better allocated to a machine that will be idle in the future.

The multi-head attention mechanism employs an architecture in which each attention head first processes the matrix from its own perspective separately, and then the results of all heads are synthesized through a concatenation operation. This architecture enables the model to obtain multiple perspectives on the matrix and thus handle multiple dependencies within one matrix. In our work, the number of attention heads is intuitively chosen to be two, corresponding to the two dependencies in the state matrix described previously. This two-head attention mechanism is shown to be effective in the subsequent numerical experiments.

Reward function design

Given that the objective is to minimize total tardiness, which equals the cumulative sum of all delays incurred in the scheduling process, it is intuitive in our MDP framework to define the reward as the negative of tardiness. However, this direct approach, where the reward function is solely dependent on the negative of total tardiness, can present challenges in the learning process of the agent. Since due dates are sampled from an identical distribution for a particular instance, tardiness tends to occur in the later stages of the scheduling process. Therefore, the agent will struggle to evaluate a long sequence of decisions from rewards that are given only at the end of those decisions.

To address this challenge and enhance the learning efficiency of the agent, we adopt the technique of reward shaping (Ng et al., 1999). Reward shaping involves incorporating intermediate rewards into the original reward function to provide more frequent and informative feedback to the agent, ensuring an effective and stable learning process. Algorithm 2 illustrates the procedure to calculate the reward.

The rationale behind our reward shaping strategy is rooted in the observation that frequent changes in setup state lead to increased processing time. Hence, a schedule of high quality intuitively features fewer setup changes, as each additional setup can heighten the probability of incurring tardiness for the subsequent jobs. In particular, the agent receives a positive shaping reward \(R_{shape}\) when it selects a job whose family is identical to the setup state although jobs from different families are available, thereby avoiding additional setup time; conversely, the agent is penalized with a negative shaping reward \(-R_{shape}\) if it chooses a job from a different family despite the availability of same-family jobs. Moreover, when the total tardiness remains 0, implying an optimal schedule, an additional positive reward \(R_{opt}\) is granted. In this work, we set \(R_{shape}\) and \(R_{opt}\) to constants with values of 1 and 200, respectively.
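The shaping rules described above can be sketched as a single reward function. This is only an illustration under stated assumptions: the way the terms are added together, the argument names, and the choice to grant \(R_{opt}\) at the end of an episode with zero total tardiness are assumptions made here, and the sketch is not a reproduction of Algorithm 2.

```python
R_SHAPE = 1.0   # magnitude of the shaping reward, as stated in the text
R_OPT = 200.0   # bonus for a schedule with zero total tardiness


def step_reward(job_family, setup_state, available_families,
                tardiness, episode_done, total_tardiness):
    """Reward for selecting one job (hypothetical combination of terms)."""
    reward = -tardiness  # base reward: negative tardiness of the selected job
    same_available = setup_state in available_families
    other_available = any(f != setup_state for f in available_families)
    if job_family == setup_state and other_available:
        reward += R_SHAPE   # avoided a setup although other families were offered
    elif job_family != setup_state and same_available:
        reward -= R_SHAPE   # caused a setup although a same-family job existed
    if episode_done and total_tardiness == 0:
        reward += R_OPT     # assumption: bonus granted once, at episode end
    return reward


print(step_reward(1, 1, [1, 2], 0.0, False, 0.0))  # 1.0
print(step_reward(2, 1, [1, 2], 3.0, False, 3.0))  # -4.0
```

When only jobs of other families are available, neither shaping term applies, because the setup change is unavoidable.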

Numerical experiments

In this section, we first provide the details of the training process of the agent. The training process is then compared with those of two agents with a one-head attention mechanism and a four-head attention mechanism, respectively, to show the superiority of the two-head attention mechanism. To demonstrate the generalization capacity of the proposed agent, it is further applied to larger instances without re-training. Meanwhile, the aforementioned comparative agents with different numbers of attention heads, together with an RNN-based DRL method (Li et al., 2024), a metaheuristic method (Rolf et al., 2020), and a dispatching rule, are included in the comparison.

The training process of the agent

We develop our DRL agent in PyTorch. The proposed agent is trained in an RL environment that simulates a PMSP. We construct the environment based on OpenAI Gym (Brockman et al., 2016) and a Python-based Discrete-Event Simulation (DES) library called Salabim (van der Ham, 2018). The training process and the comparison process that follows are conducted on a PC with an Intel Core i9-11900KF @ 3.50 GHz CPU, 16 GB RAM, and a single Nvidia RTX 3080 GPU. The entire training process takes 9028.87 sec on this PC, with the neural network updating process executed on the GPU and lasting for 2473.29 sec.

A production environment of a manufacturing company in Baden-Wuerttemberg, Germany, is selected as the instance for training the DRL agent. To maintain confidentiality, specific values such as processing times and due dates have been reasonably modified. Details of this environment are outlined in Table 3.

This production environment contains 12 machines with 2 different speeds and 80 initial jobs from 8 families. New jobs enter the production environment in batches of 10 at a constant time interval of 10 time units after the 20th time unit. A total of 5 such batches are added to simulate dynamic and fluctuating production demands that commonly occur in the company mentioned above.

We utilize the parameters due date tightness r and due date range R to generate the modified due date for all jobs according to the following uniform distribution, which is proposed by Potts and Van Wassenhove (1985):

where the indicator MP could be considered as the modified cumulative processing time of all jobs and is calculated as:

where \(N_s\) is the total number of setups and n is the total number of jobs, which is calculated as \(n = n_0 + N_B \cdot n_{new}\). However, the precise value of \(N_s\) cannot be known until the end of the scheduling process. Its maximum possible value and minimum possible value are equal to the total number of jobs n (each job is not from the same family as the previous one) and the number of families \(N_F\) (not changing families until all jobs from one family have been processed), respectively. Therefore, the value of \(N_s\) is determined as \(N_{s}=\frac{n+N_{F}}{2}\), i.e., the average of the maximum possible value and the minimum possible value.
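As a worked example, the training environment described above has 80 initial jobs, 5 batches of 10 new jobs, and 8 families. The short sketch below evaluates \(n\) and the estimate of \(N_s\) for these values; the function and argument names are illustrative.

```python
def estimated_setups(n_initial, n_batches, batch_size, n_families):
    """Return (n, N_s) with N_s taken as the midpoint of its bounds."""
    n_total = n_initial + n_batches * batch_size
    # Upper bound: n setups; lower bound: one setup per family.
    return n_total, (n_total + n_families) / 2


print(estimated_setups(80, 5, 10, 8))  # (130, 69.0)
```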

Since the due date of a new job cannot be earlier than its arrival time, we modify the uniform distribution in (18) to generate the due dates of the new jobs as follows:

where \(p_{max}\) is the longest possible processing time and S is the setup time. This distribution ensures that no new job incurs tardiness if it is processed immediately after it arrives in the production environment.

In the training instance, r and R are set to 0.1 and 0.5, respectively. A relatively small due date tightness and a relatively large due date range guarantee that an effective policy yields an optimal solution with 0 total tardiness, while a poor policy yields large total tardiness.

Table 4 gives the hyperparameter setting for the training process. Notably, we utilize a smaller learning rate after an optimal solution with 0 total tardiness is found in order to achieve a stable training process (Bengio, 2012).

Table 5 gives the architecture for the Transformer model and the MLP utilized in the actor and critic networks. Since the dimension in the Transformer model is 128 and the number of heads is 2, each attention head contains 64 dimensions.

To verify the effectiveness of this two-head attention mechanism, we train two comparative Transformer-based agents: one with a one-head mechanism and another with a four-head mechanism, keeping all other hyperparameters, including the model dimension, consistent. As a result, each attention head in the one-head and four-head mechanisms contains 128 and 32 dimensions, respectively. The proposed agent, the comparative agents with one-head and four-head mechanisms are denoted in this work as Two-Head Agent, One-Head Agent, and Four-Head Agent, respectively.

The complete training processes of the proposed agent and the comparative agents are illustrated in Fig. 10, in which the abscissa is the number of episodes, and the ordinate is the average total tardiness that the agent obtained in the previous 20 episodes. Meanwhile, Fig. 11 demonstrates the last 2000 episodes of these training processes.

From the results, it is evident that the agent with one attention head exhibits a swift decline in tardiness in the initial stages of the training process, which might be due to the simpler structure of the one-head mechanism and the larger number of dimensions per attention head. However, this simple structure is insufficient for capturing the two different relationships in the state matrix, i.e., the relationship among jobs and the interrelationship between jobs and machines. Therefore, this agent still fluctuates in the late stage of training. In contrast, the proposed agent utilizing the two-head attention mechanism displays a more stable process. Throughout the training process, a consistent reduction in total tardiness is observed, ultimately nearing zero in the late stage of training.

Moreover, increasing the number of attention heads does not induce further improvements within the same training time frame. As observed in Fig. 11, the total tardiness given by the agent with the four-head mechanism is still in a decreasing trend in the last 2,000 episodes, implying that a more prolonged training period might be necessary for convergence.

In conclusion, the comparison validates the superiority of the RL agent with the two-head attention mechanism in addressing this proposed problem.

Generalization capability of the trained agent

To evaluate the generalization of the proposed approach, the agent trained in the previous section is then employed on much larger instances without re-training. These large testing instances, drawn from the more extensive production environments of the company mentioned above, are similarly modified to protect confidentiality. The parameters defining these larger instances are detailed in Table 6.

The results are compared with those of the comparative agents with one-head and four-head mechanisms, respectively. Moreover, to demonstrate the superiority of the proposed approach, we include an RNN-based DRL method (Li et al., 2024), a metaheuristic method (Rolf et al., 2020), and a classic dispatching rule called earliest due date (EDD) in the comparison. The RNN-based DRL method is also trained on the training instance generated with the parameters in Table 3, while the hyperparameters and the state representation are consistent with the original work. This method is denoted as RNN Agent in this work. The metaheuristic method utilizes a GA to assign four dispatching rules during the scheduling process and therefore can handle dynamic scheduling problems, where the accurate information of all jobs cannot be known in advance. This metaheuristic method is denoted as GA-Rules in this work, and its parameters are listed in Table 7. The dispatching rule EDD always assigns the highest priority to the job with the earliest due date.

The total tardiness obtained by all the aforementioned approaches on all large instances is given in Tables 8, 9, 10, and 11, with the best result on each instance highlighted in bold. Each table corresponds to one due date configuration.

First, it can be observed from Tables 8, 9, 10, and 11 that the proposed DRL approach consistently demonstrates high performance across scenarios with various scales and due date settings. Specifically, within each of the four sets of 48 instances, our approach outperforms the comparative methods on 42, 47, 41, and 47 instances, respectively, indicating robust scalability of the proposed DRL framework to the varying conditions.

Moreover, in the instance sets from Tables 9 and 11, our approach shows a significant advantage by outperforming on 47 out of 48 instances from each set. It is worth noting that these two sets are generated with a larger value of the parameter R, providing the instances a wider range of due dates. This suggests that our DRL approach excels at adapting to wide variations in due dates compared to other comparative methods.

Furthermore, our approach yields optimal solutions on many instances from the sets of Tables 8 and 9, where the due date tightness is relatively low. In particular, the proposed approach incurs no tardiness on 30 and 31 instances from the sets in Tables 8 and 9, respectively. Since total tardiness cannot be negative, these schedules are optimal, which validates the effectiveness of our method.

In addition, Table 12 reports the computational time required by each approach to solve instances of each scale. The values in Table 12 are averaged over the four due date configurations, as the computational time is primarily influenced by the instance scale rather than the due date configuration. The table shows the computational efficiency of our approach: the agent solves the largest instance with 520 jobs (400 initial jobs and 120 new jobs) within 3 s, whereas the metaheuristic requires considerably more time. Although the rule-based method EDD uses less computational time due to its simpler procedure, the quality of the results produced by the trained DRL agent significantly surpasses that of the dispatching rule.

Given the widespread application of dispatching rules in real-world scheduling (Pinedo, 2022), we set the rule-based method EDD as our benchmark and evaluate the performance of our proposed approach together with all the other comparative approaches based on their improvement over this benchmark. We calculate the improvement using the following equation:

where \(TT_{EDD}\) represents the total tardiness obtained by EDD and \(TT_{Approach}\) is the total tardiness obtained by the approach to be evaluated. The improvements achieved by the Transformer-based agents, i.e., the Two-Head Agent, the One-Head Agent, and the Four-Head Agent, on the instances with the aforementioned four due date configurations are shown in Fig. 12. Meanwhile, Fig. 13 depicts the improvements obtained by the Two-Head Agent, the RNN Agent, and the GA-Rules on the instances with the due date configurations of Tables 8, 9, 10, and 11.
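Read as a relative reduction in total tardiness with respect to EDD, the improvement measure would take the following form. The normalization by \(TT_{EDD}\) and the percentage scaling are assumptions made here for illustration, and the measure is undefined on instances where EDD itself incurs no tardiness:

\[
\text{Improvement} = \frac{TT_{EDD} - TT_{Approach}}{TT_{EDD}} \times 100\%
\]

Under this reading, a positive value means the evaluated approach yields less total tardiness than EDD, and a negative value means it performs worse than the benchmark.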

From the figures, it can be observed that the proposed Two-Head Agent demonstrates robust and consistent performance improvement over the benchmark across all instances and due date configurations. In comparison, other methods show more variable improvements and might not consistently outperform the benchmark EDD. This indicates the superior ability of the proposed agent to learn effectively from the state matrix and adapt to different scheduling scenarios.

Another noticeable observation from the figures is that the Two-Head Agent achieves significantly larger improvements in due date configurations with a larger due date range R. A larger range brings about a considerable disparity between optimal and sub-optimal scheduling decisions, which further underscores the robustness of our proposed approach.

Ablation study

In this work, we propose a comprehensive state matrix containing information about all jobs and all machines, enabling the DRL agent to make globally informed decisions. To validate the benefit of the proposed state representation with global information, we train the proposed DRL agent with a comparative state matrix as previously shown in Fig. 6, which does not provide any information about the processing machines and contains solely the relationships among jobs. The configuration of the training instance and all other hyperparameters remain the same.

To further demonstrate the advantage of including global information, we also train the comparative One-Head Agent on the comparative state matrix, where the one-head attention architecture aligns with a state matrix containing only one category of relationship. This training process rules out the explanation that the worse performance of the proposed agent on the comparative state matrix is solely due to the mismatch between the two-head architecture and the comparative state matrix.

Figures 14 and 15 present the training process with and without the global information of the Two-Head Agent and the One-Head Agent, respectively, where the training curves without global information are additionally labeled with “no Global” and shown in yellow.

It is evident from these figures that without global information, the training processes of both agents exhibit significantly more fluctuations. In particular, the Two-Head Agent tends to diverge abruptly after initially converging, leading to a substantial deviation from the optimal performance. Additionally, the One-Head Agent trains more smoothly on the proposed comprehensive state matrix than on the state matrix without global information, even though the proposed state matrix contains two distinct dependencies and does not align with the one-head attention mechanism.

Moreover, we investigate the generalization capability of the agents trained without global information. They are employed to solve the four sets of instances from Tables 8, 9, 10, and 11. Their results are reported in Tables 13, 14, 15, and 16 in the Appendix, where the best result on each instance is again highlighted in bold. Our proposed approach maintains its superiority, achieving the best result on 42, 42, 34, and 43 instances in the four tables, respectively. The improvements over EDD are illustrated in Fig. 16. It is worth noting that the Two-Head Agent trained on the comprehensive state matrix still achieves the most stable performance, verifying the robustness of the proposed approach.

In summary, the fluctuating training processes and the worse generalization capabilities could be attributed to the lack of the comprehensive relationships that global information provides. Without the state of all machines, the agent might struggle to accurately assess the impact of an individual job selection from a broader perspective and thus can only make myopic decisions. For instance, consider two similar scenarios in which the available jobs and idle machines are identical, but the jobs currently being processed on the other machines are completely different. A proper action in the first scenario might lead to large tardiness in the second scenario, making it difficult to maintain stability during training and to finally converge on a robust policy. Therefore, considering global information allows the agent to obtain a deeper understanding of the environment, offering a significant advantage in decision-making and overall performance.

Discussion

Through numerical experiments in various scenarios, our approach demonstrates significant scalability and stable effectiveness. A further discussion on its application will be carried out in this section.

Potential applications

The approach would find promising applications in modern manufacturing. Specifically, several key components of manufacturing systems, such as product scheduling on production lines (Paeng et al., 2021), Automated Guided Vehicle scheduling in warehouses (Miao et al., 2023), and scheduling of maintenance technicians (Rodríguez et al., 2022), have been investigated as PMSPs. Our approach can provide a high-quality solution in real-time for instances of any scale, offering the manufacturer an efficient scheduling process and the consequent economic benefit.

Moreover, since the PMSP is an essential optimization problem, the utility of our method extends beyond manufacturing into multiple domains where scheduling is a critical component. For instance, in the healthcare domain, the assignment of operations to surgeons and machines can be modeled as a PMSP (Kanoun et al., 2023; Burdett & Kozan, 2018). The efficiency and scalability of our approach offer the potential to dynamically produce suitable schedules based on surgeon availability as well as the time tightness of the operations, ensuring that the healthcare system remains responsive and efficient under varying circumstances.

Similarly, in the cloud computing field, the scheduling of virtual machines for cloud data centers can also be described as a PMSP (Tian et al., 2013). The scheduling problem in this domain is characterized by fluctuating demand and a strong requirement for real-time response, where the scalability and the immediate response capability of our approach could be particularly beneficial.

Practical implementation

By formulating the real-world information in the form of our proposed state representation as shown in Fig. 7, our approach could be seamlessly integrated into an existing decision-making system and then provide effective solutions in real-time, without requiring any pre-defined rules.

For scenarios where the requirement on real-time response is less stringent, the explorative ability of the RL-based approach can be leveraged (Sutton & Barto, 2018). Instead of directly selecting the job with the highest priority, the agent can convert the job priorities into a probability distribution and then sample a job from it. The overall performance can be improved by repeating the scheduling process several times and selecting the schedule with the best result.
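This sampling strategy can be sketched as follows. The scoring function `priority`, the toy single-machine environment, and the names `rollout` and `best_of_n` are hypothetical stand-ins for the trained agent and the scheduling environment; the softmax conversion is one common choice and is assumed here for illustration only.

```python
import math
import random


def softmax(scores):
    """Turn arbitrary priorities into a probability distribution."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def priority(job, now):
    # Hypothetical stand-in for the agent's output: less slack, higher priority.
    proc, due = job
    return -(due - now - proc)


def rollout(jobs, rng=None):
    """Build one single-machine schedule; greedy if rng is None, else sampled."""
    remaining, now, tardiness, order = list(range(len(jobs))), 0.0, 0.0, []
    while remaining:
        scores = [priority(jobs[j], now) for j in remaining]
        if rng is None:
            pick = max(range(len(remaining)), key=lambda k: scores[k])
        else:
            pick = rng.choices(range(len(remaining)), weights=softmax(scores))[0]
        j = remaining.pop(pick)
        now += jobs[j][0]                          # job j finishes at time `now`
        tardiness += max(0.0, now - jobs[j][1])
        order.append(j)
    return tardiness, order


def best_of_n(jobs, n_samples, seed=0):
    """Keep the best of the greedy schedule and n_samples sampled schedules."""
    rng = random.Random(seed)
    best = rollout(jobs)
    for _ in range(n_samples):
        best = min(best, rollout(jobs, rng))       # tuples compare by tardiness first
    return best
```

Because the greedy schedule is always among the candidates, the returned schedule is never worse than the greedy one; the additional samples trade computation time for a chance of finding a better schedule.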

Furthermore, industrial software with a user-friendly interface could be developed based on the algorithm proposed in this article and would be useful for the further transfer of our method. Since our code for the algorithm is open source, the development of such software would only need to focus on the packaging process and the user interface design.

Conclusion

In this work, we develop a novel DRL approach to address the PMSP, characterized by new job arrivals and family setup constraints.

The main contributions of our approach are its high scalability and its end-to-end scheduling manner. To be more specific, our approach can directly select the most appropriate job based on the raw information from a scheduling environment of arbitrary scale, reducing laborious manual work and thus increasing the applicability in various scenarios.

To achieve this, we first propose a variable-length state matrix that captures comprehensive information about both jobs and machines. The variable-length nature of the state representation allows it to adapt to scheduling environments of any scale. Meanwhile, the detailed representation is crucial for the DRL agent to perform an informed and global decision-making process. To process the multiple dependencies introduced by the rich information in the state matrix, we then utilize an elaborately modified Transformer model with a two-head attention mechanism to represent the DRL agent. The Transformer model processes inputs in a way that enables it to dynamically handle matrices of any size. After training, the Transformer-based DRL agent can process the raw features of jobs and machines and handle the dependencies between them. The job is selected by directly outputting its index, without reliance on any pre-defined rules.
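To illustrate why an attention-based model can handle a state matrix with any number of rows, the following sketch applies a two-head self-attention layer followed by a per-row scoring layer. The weights are random rather than trained, and the feature width, head size, and all class and function names are assumptions for illustration; the modified Transformer used in this work is more elaborate.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    z = sum(exps)
    return [e / z for e in exps]


def rand_matrix(rng, rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


class TwoHeadScorer:
    """Toy two-head self-attention with fixed-size, untrained weights."""

    def __init__(self, n_features, d_head=4, seed=0):
        rng = random.Random(seed)
        self.d_head = d_head
        # One (W_q, W_k, W_v) triple per head; shapes depend only on n_features.
        self.heads = [tuple(rand_matrix(rng, n_features, d_head) for _ in range(3))
                      for _ in range(2)]
        self.w_out = rand_matrix(rng, 2 * d_head, 1)

    def scores(self, state):
        """Return one score per row of a state matrix with any number of rows."""
        outputs = []
        for w_q, w_k, w_v in self.heads:
            q, k, v = matmul(state, w_q), matmul(state, w_k), matmul(state, w_v)
            k_t = [list(col) for col in zip(*k)]          # transpose of k
            logits = matmul(q, k_t)                       # row-to-row similarities
            scale = math.sqrt(self.d_head)
            attn = [softmax([x / scale for x in row]) for row in logits]
            outputs.append(matmul(attn, v))
        concat = [h0 + h1 for h0, h1 in zip(*outputs)]    # join the heads row-wise
        return [row[0] for row in matmul(concat, self.w_out)]

    def select(self, state):
        s = self.scores(state)
        return max(range(len(s)), key=lambda i: s[i])     # index of the chosen row
```

The same `TwoHeadScorer` instance can score a state matrix with 6 rows and one with 50 rows, since none of its weight shapes depend on the number of rows; only the attention matrix grows with the input.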

The experimental results validate the scalability and effectiveness of our approach. We first train the proposed DRL agent on an instance with 80 jobs and 12 machines. The training process is analyzed through a comparison with two comparative DRL agents with one-head and four-head attention mechanisms, respectively. The analysis demonstrates the superiority of the proposed two-head agent in terms of efficiency and stability.

We then apply the well-trained agent to four sets of large-scale instances, where each set has a different due date setting and contains 48 instances with scales ranging from 200 jobs and 16 machines to 400 jobs and 20 machines. Across numerous diverse and large-sized instances, our method exhibits remarkable scalability and effectiveness. In addition, we compare our approach with an RNN-based DRL method, a metaheuristic algorithm, a standard dispatching rule, and the two previous DRL agents with different numbers of attention heads. The proposed approach outperforms all the comparative methods on a majority of instances. In particular, it yields the best solution on 42, 47, 41, and 47 instances in the four sets, respectively.

Furthermore, an ablation study demonstrates the benefit of the novel state representation with global information. The agents trained on the state without the global information exhibit more fluctuating training processes and generalize worse.

Looking ahead, there are several promising avenues for future research building on the foundation of this work. Given that the PMSP is a specific instance of the broader job shop scheduling problem, an immediate extension of our approach could be its application to this wider context. This adaptation would test the versatility and robustness of our model in even more complex scheduling scenarios. Additionally, incorporating more dynamic constraints into the model, such as machine breakdowns and maintenance schedules, could significantly enhance its applicability in real-world manufacturing environments.

Data availability

The code for realizing the proposed approach and training the agent can be found at https://github.com/ASnowbow/Transformer4PMSP. The data of the test instances is also available and can be found in the “Validation” folder. All instances are divided into four folders according to their due date settings, corresponding to Tables 8, 9, 10, and 11. Each instance is named according to its scale, e.g., an instance with 20 machines, 400 initial jobs, 8 batches of new jobs, 15 new jobs in each batch, and all jobs divided into 8 families is named “20m_400j_8b_15new_8f”. Within each instance, all the raw data is saved as CSV files.
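For readers who want to work with the instance folders programmatically, the naming scheme can be decoded with a short helper. The function name `parse_instance_name` and the returned keys are assumptions for illustration; only the field order (machines, initial jobs, batches, new jobs per batch, families) is taken from the example name above. The pattern also tolerates a space before `m`.

```python
import re

# Field order follows the example name; the group names are illustrative.
_PATTERN = re.compile(
    r"(?P<machines>\d+)\s*m_(?P<initial_jobs>\d+)j_(?P<batches>\d+)b_"
    r"(?P<new_per_batch>\d+)new_(?P<families>\d+)f"
)


def parse_instance_name(name):
    """Decode an instance name into its scale parameters."""
    match = _PATTERN.fullmatch(name.strip())
    if match is None:
        raise ValueError(f"unrecognized instance name: {name!r}")
    info = {key: int(value) for key, value in match.groupdict().items()}
    # Total jobs = initial jobs + all dynamically arriving jobs.
    info["total_jobs"] = info["initial_jobs"] + info["batches"] * info["new_per_batch"]
    return info
```

For the example name, `parse_instance_name("20m_400j_8b_15new_8f")["total_jobs"]` evaluates to 520, i.e., 400 initial jobs plus 8 batches of 15 new jobs.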

References

Ábrahám, G., Auer, P., Dósa, G., Dulai, T., & Werner-Stark, Á. (2019). A reinforcement learning motivated algorithm for process optimization. Periodica Polytechnica Civil Engineering, 64(4), 961–970.

Abu-Marrul, V., Martinelli, R., Hamacher, S., & Gribkovskaia, I. (2021). Matheuristics for a parallel machine scheduling problem with non-anticipatory family setup times: Application in the offshore oil and gas industry. Computers & Operations Research, 128, 105162.

Arwa, E. O., & Folly, K. A. (2020). Reinforcement learning techniques for optimal power control in grid-connected microgrids: A comprehensive review. IEEE Access, 8, 208992–209007.

Avalos-Rosales, O., Angel-Bello, F., & Alvarez, A. (2015). Efficient metaheuristic algorithm and re-formulations for the unrelated parallel machine scheduling problem with sequence and machine-dependent setup times. The International Journal of Advanced Manufacturing Technology, 76(9), 1705–1718.