Abstract

Experimental data sets that include tool settings, tool and machine-tool behavior, and surface roughness data for milling processes are usually of limited size, mainly because of the high cost of machining tests. This restricts the application of machine-learning techniques for surface roughness prediction in industrial settings. The primary objective of this work is to investigate how data streams that are missing product features (i.e. unlabeled data streams) can contribute to the development of prediction models. The investigation is followed by a proposal for a semi-supervised approach to the development of roughness prediction models that can use partly unlabeled data to improve the accuracy of roughness prediction. Following this strategy, records collected during the milling process that lack roughness measurements but contain vibration data are used to increase the accuracy of the prediction models. The method proposed in this work is based on the selective use of such unlabeled instances, collected at tool settings that are not represented in the labeled data. This strategy, when applied properly, yields both extended training data sets and higher accuracy in the roughness prediction models that are derived from them. The scale of the accuracy improvement and its statistical significance are shown in a case study of high-torque face milling of F114 steel. The semi-supervised approach proposed in this work has been used in combination with the supervised k-Nearest Neighbors and random forest techniques. Furthermore, the study of both continuous and discretized roughness prediction showed higher gains in accuracy for the latter.

Introduction

Any traditional machining process depends on a large number of process inputs, most of which cannot be directly controlled (Benardos and Vosniakos 2003). If we focus on face milling, only some of these inputs can be fixed by the process engineer when selecting the right cutting tool (diameter or number of teeth) and cutting conditions (axial and radial depths of cut). Some others are fixed by the machine operator (feed-rate and rotation speed). Both these groups of inputs are summarized in this research as tool settings. Many process inputs depend directly on non-controllable parameters of the workpiece material (unexpected material in the workpiece), of the cutting tool (tool wear), and of the machine-tool behavior (the appearance of chatter) (Quintana and Ciurana 2011). The non-controllable parameters are especially important in face milling, while they can be negligible in other milling processes with less demanding cutting conditions, such as ball-end milling; the reason is that face milling is done with very stiff tools and under very high cutting forces, causing excitation of the eigen-frequencies of the milling machine (Iglesias et al. 2014). Such face milling processes and machines are necessary for the manufacture of many industrial goods such as molds and dies for the automotive industry and the structural components of wind turbines. Experiments performed with these machines and repeated with the same tool settings yield wide variations in roughness values, which clearly confirms the impact of machine-tool inputs on surface roughness (Quintana et al. 2012). The only way to evaluate all the uncontrollable process inputs as a whole is by on-line measurement of cutting vibrations as close as possible to the cutting tool tip (Benardos and Vosniakos 2003).

Although different approaches have been considered to predict surface roughness in face-milling processes (Benardos and Vosniakos 2003), only approaches based on Artificial Intelligence (AI) techniques are of interest in this research. Artificial Neural Networks (ANN) are the most widely used AI technique (Quintana et al. 2012; Grzenda et al. 2012; Díez-Pastor et al. 2012; Benardos and Vosniakos 2002; Samanta et al. 2008; Correa et al. 2008), although other techniques like neuro-fuzzy inference systems (Samanta et al. 2008), Bayesian networks (Correa et al. 2008), genetic algorithms (Brezocnik et al. 2004), swarm optimization techniques (Zainal et al. 2016), and support vector machines (Prakasvudhisarn et al. 2008) have also been tested for the same industrial task. Unfortunately, ANN models are highly dependent on the parameters of the neural networks (Bustillo et al. 2011), and fine-tuning these parameters is a highly time-consuming task that frequently requires expertise for good results. Moreover, studies on surface-roughness prediction in face milling are scarce compared with the large number of studies focused on this prediction task for other milling operations, as emphasized in reviews of this domain (Chandrasekaran et al. 2010; Abellan-Nebot 2010). This imbalance is perhaps because face milling requires very extensive machining tests to provide data sets, compared with processes that demand less power and torque; as a result, the size of these data sets ranges between 50 and 250 instances (Grzenda and Bustillo 2013).

The need to develop classifiers using limited data sets has always been a major challenge for the Machine Learning (ML) community (Schwenker and Trentin 2014). However, recent developments in network monitoring (Loo and Marsono 2016), among many other fields, have resulted in an abundance of data streams, possibly of large volumes, yet they frequently lack labels i.e. ground truth values of the feature to be predicted. In the case of face milling, a machine Computer Numerical Control (CNC) can capture tool and machine-tool settings and record them on-line. Still, the problem of how to perform roughness measurements remains, as in many cases they have to be performed manually and off-line.

It may be asked whether unlabeled data streams missing surface roughness can contribute to the development of roughness prediction models. The answer lies in semi-supervised techniques (Tiensuu et al. 2011; Triguero et al. 2015; Schwenker and Trentin 2014) i.e. techniques capable of exploiting labeled training data sets and unlabeled data. These techniques attract particular attention with the growing availability of high-volume data streams, which contain delayed or only partly available labels (Loo and Marsono 2016; de Souza et al. 2015; Wu et al. 2012). A major group of semi-supervised techniques are self-labeled techniques i.e. the techniques that attempt to obtain an enlarged labeled data set, which includes originally unlabeled instances that are augmented with labels generated from their own predictions (Triguero et al. 2015; Loo and Marsono 2016).

There are only a few limited studies on the industrial applications of semi-supervised methods. In Kondratovich et al. (2013), the authors examine classification performed with small data sets in the domain of chemistry and biology with transductive Support-Vector Machines. Importantly, this study relies on transductive inference i.e. inference in which predictions cannot be made outside of a particular testing data set (Kondratovich et al. 2013). This means that the approach is not applicable to data streams, whose data sources have potentially unlimited and a priori undefined content. A transductive classification approach has also been proposed by G. T. Jemwa and C. Aldrich to model faults in metallurgical systems (Jemwa and Aldrich 2010). One further example is the use of co-training-style algorithms for modeling the temperature of hot rolled steel plate (Tiensuu et al. 2011). To the best of our knowledge, there are no studies on the use of semi-supervised methods for face-milling modeling.

In this work, we propose a semi-supervised method based on a self-training approach to the development of models to predict surface roughness in the face-milling process. The method exploits the availability of unlabeled vibration data streams. It integrates instances from such data streams with the original training data set to improve roughness prediction accuracy. Importantly, the method allows robust integration of various supervised methods, already used in industrial settings, such as (k)Nearest Neighbors ((k)NN) and Random Forest. In particular, the work shows how techniques such as kNN, previously used for roughness modeling elsewhere (Teixidor et al. 2015), can be used when partly unlabeled data becomes available.

The method described in this study can be used both for classification and for regression. Here, it is in particular used to predict roughness treated as both a continuous and a discretized variable. Moreover, we propose and compare different stream integration methods to be used with the semi-supervised method proposed in this study. The experimental results, developed with the extensive data collected in industrial environments, showed that the proposed combination of the semi-supervised method with the stream integration strategy yielded statistically significant accuracy improvement of roughness prediction.

The remainder of this work is structured as follows. First, the way the reference data has been collected is described in the “Face-milling data acquisition under modern industrial settings” section. Next, a proposal for semi-supervised methods is outlined in the “Semi-supervised method of modeling machining processes” section, followed by a proposal for two stream integration methods in the “Stream integration methods and their evaluation” section. The experimental results are analyzed in the “Results” section. Finally, conclusions are drawn and future work is outlined in the “Conclusions” section.

Face-milling data acquisition under modern industrial settings

Milling is a cutting process in which the cutting tool rotates at a fixed speed while moving linearly in a direction perpendicular to the axis of the tool. Face milling, also called roughing, is a type of milling operation. In this cutting process, the cutting speed, Vc, is the peripheral speed of the cutting tool obtained from the tool diameter and its rotating speed N, the feed rate f is the linear movement of the cutting tool relative to the workpiece, and the axial depth of cut Ap is the axial contact length between cutting tool and workpiece. Figure 1 shows a scheme of a face-milling operation and its main cutting parameters. The number of teeth, Z, of the cutting tool also defines other cutting parameters: the feed rate per tooth fz and the tooth passing frequency ft. All these parameters define the cutting conditions in a milling process that the process engineer can fix before the cutting process begins. During the cutting process, the machine operator may record noise outside of the expected range that is the immediate effect of undesired self-excited vibrations, called chatter (Fu et al. 2017). These vibrations will affect the surface quality of the workpiece and the tool life (Jain and Lad 2017).
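The relations between these cutting parameters can be sketched in a few lines. The formulas below are the usual machining definitions, not formulas quoted from this article, and the units (tool radius in mm, N in rev/min, f in mm/min) are assumptions; the function name and the spindle speed and feed values in the example are hypothetical.

```python
import math

def cutting_parameters(radius_mm, spindle_rpm, feed_mm_min, teeth):
    """Derive Vc, fz and ft from settings fixed before cutting (assumed units)."""
    diameter_mm = 2.0 * radius_mm
    # Cutting speed Vc in m/min: peripheral speed of the rotating tool.
    vc = math.pi * diameter_mm * spindle_rpm / 1000.0
    # Feed per tooth fz in mm: feed per revolution shared among the teeth.
    fz = feed_mm_min / (teeth * spindle_rpm)
    # Tooth passing frequency ft in Hz: teeth entering the cut per second.
    ft = spindle_rpm * teeth / 60.0
    return {"Vc": vc, "fz": fz, "ft": ft}

# Hypothetical settings for a four-tooth tool with a 20 mm radius.
params = cutting_parameters(radius_mm=20.0, spindle_rpm=1000.0,
                            feed_mm_min=400.0, teeth=4)
```

Because Vc, fz and ft follow analytically from the tool geometry, N and f, they carry no information beyond the independent settings, which is why several input variables in a data set of this kind are determined by the others.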

The data set used in this work has previously been presented elsewhere (Grzenda and Bustillo 2013). The milling tests consisted of a simple raster along the machine tool’s Y axis. During the milling tests, vibrations on the milling head were captured with two unidirectional accelerometers placed on the machine tool to track the X and Y vibrations: one on the spindle and the other on the table. The specification of the accelerometers and the data-acquisition platform have previously been described elsewhere (Quintana et al. 2012). Different vibration variables were considered on the X and Y axes of the milling machine: low, medium, and high-frequency vibration amplitudes (lx, ly, mx, my, hx and hy respectively), temporal domain vibration amplitude (tdx, tdy) and tooth-passing frequency amplitude (tpx, tpy). Only the vibration signals corresponding to the engagement of the tool in the workpiece were considered for the purpose of data generation. Then the Fast Fourier Transform was used to transform the signals to the frequency space, where they were split into three ranges: low frequencies i.e. frequencies lower than 500 Hz that are related to machine-tool structural modes (usually around 200 Hz); medium frequencies i.e. between 500 and 2500 Hz, related to the spindle, the tool-holder, and the cutting tool vibration modes (typically around 2000 Hz); finally, high frequencies i.e. those between 2500 and 5000 Hz. Considering the Nyquist-Shannon sampling theorem, we set a maximum frequency for evaluation at half of the sampling frequency (10 kHz in this case). Finally, the face mill, a tool with a radius of 20 mm (R) and \(Z=4\) cutting inserts, and the cast iron blank used for the tests are typical of those used for machining wind turbine components.
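The band split described above can be illustrated with a small dependency-free sketch. Several points are assumptions rather than details from the source: the sampling rate is read as 10 kHz (so that the 5000 Hz band edge coincides with the Nyquist frequency), the band amplitude is taken as the peak spectral amplitude in the band, and a plain DFT stands in for an FFT routine (it yields the same spectrum, only more slowly). All function names are hypothetical.

```python
import cmath
import math

# Band edges in Hz as stated in the text.
BANDS_HZ = {"low": (0.0, 500.0), "medium": (500.0, 2500.0), "high": (2500.0, 5000.0)}

def amplitude_spectrum(signal, fs_hz):
    """Return (frequency, amplitude) pairs of the one-sided spectrum (plain DFT)."""
    n = len(signal)
    pairs = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(signal))
        # Interior bins share their amplitude with the discarded negative half.
        scale = 1.0 if k == 0 or 2 * k == n else 2.0
        pairs.append((k * fs_hz / n, scale * abs(coeff) / n))
    return pairs

def band_amplitudes(signal, fs_hz=10_000.0):
    """Peak amplitude per frequency band (the peak statistic is an assumption)."""
    spectrum = amplitude_spectrum(signal, fs_hz)
    nyquist = fs_hz / 2.0
    result = {}
    for name, (lower, upper) in BANDS_HZ.items():
        # Upper edge is exclusive, except that the Nyquist bin closes the last band.
        in_band = [a for f, a in spectrum
                   if lower <= f < upper or (f == upper and upper == nyquist)]
        result[name] = max(in_band, default=0.0)
    return result

# Synthetic signal: a 200 Hz component (amplitude 1.0) and a 2000 Hz one (0.5).
FS = 10_000.0
signal = [math.sin(2 * math.pi * 200 * i / FS)
          + 0.5 * math.sin(2 * math.pi * 2000 * i / FS) for i in range(200)]
amps = band_amplitudes(signal)
```

The synthetic signal places one component near the structural-mode region and one near the spindle and tool-holder region mentioned in the text, so the low and medium bands recover the two amplitudes while the high band stays close to zero.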

A third group of variables was also considered that refers to productivity indicators, bearing in mind their industrial value: the cutting section (As) and the material removal rate (MRR). The milling tests were designed to evaluate a wide range of values for the selected cutting parameters, covering an extensive spectrum of real industrial conditions.

Once the milling tests had been performed, a Diavite DH5 roughness tester with a nominal stylus tip radius of 2 \(\upmu \)m was used to measure surface roughness in the feed direction. The roughness profile was measured five times along a 0.8 mm tool path and the mean value was considered in the study. The Ra parameter was used to evaluate surface roughness, because it is the most common industrial parameter for this task in accordance with ISO standard 4287:1997 (ISO 1997). The Ra value was first used as a continuous value and then it was discretized according to the ISO 4288:1996 standard (ISO 1996); this standard assigns different roughness levels depending on required quality standards for different industrial applications. For example, a Ra level of 4, N4, is assigned to surfaces with a Ra in the range 0.4–0.8 \(\upmu \)m required for holes of ejector pins or gear teeth surfaces, to guarantee correct ejection of mold components following plastic injection or smooth movement of the gears in a gearbox, respectively. Four levels of Ra were identified within the range of Ra under study, in accordance with ISO 4288:1996: N3: 0.2–0.4 \(\upmu \)m, N4: 0.4–0.8 \(\upmu \)m, N5: 0.8–1.6 \(\upmu \)m and N6: 1.6–3.2 \(\upmu \)m. A total of 141 different chatter-free cutting conditions were tested, producing a data set of 423 different records. Although some records share the same cutting conditions, the different levels of cutting tool wear and the material inhomogeneities produce different vibration levels and, therefore, as previously stated, different surface roughness qualities. The only way to inform the AI model that the cutting conditions fixed by the process engineer are not the only factors that influence the cutting process is, first, to measure the vibration levels and, second, to repeat the experiments under the same cutting conditions, but in another part of the workpiece and after having tested other cutting conditions, so that the tool wear will be higher.
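The discretization of Ra into the four levels listed above can be sketched as a simple lookup. The level boundaries come from the text; how a value lying exactly on a boundary is assigned is not stated there, so the half-open intervals used here are an assumption, and the function name is hypothetical.

```python
# (label, lower bound, upper bound) in micrometres, as listed in the text.
RA_LEVELS = [("N3", 0.2, 0.4), ("N4", 0.4, 0.8), ("N5", 0.8, 1.6), ("N6", 1.6, 3.2)]

def discretize_ra(ra_um):
    """Map a continuous Ra value r to its discretized level d(r)."""
    for label, lower, upper in RA_LEVELS:
        # Assumption: lower bound inclusive, upper bound exclusive.
        if lower <= ra_um < upper:
            return label
    raise ValueError(f"Ra = {ra_um} um lies outside the range under study")

levels = [discretize_ra(r) for r in (0.3, 0.5, 1.0, 2.0)]
```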

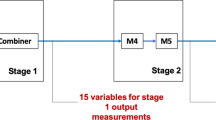

Each record is composed of the 18 previously described non-constant variables. Importantly, every record includes roughness. Hence, the original data is fully labeled. It is important to note that many of the input variables are determined by the others. Table 1 shows the main information on all variables: their symbols, units, and variation range, followed by variable origin and inter-dependencies. With regard to the last column of Table 1, (i) stands for parameters independent of others, while (d) stands for analytically obtained parameters with formulas contained in brackets. In the case of nature, (f) stands for a parameter set by the process engineer before the cutting process; (m) denotes the parameter measured during the cutting process. In the case of the group, T(x) denotes tool settings i.e. fixed parameters, while O(x) denotes tool behavior observed during the milling process. Finally, in the table, the output variable, surface roughness, is shown in bold.

Semi-supervised method of modeling machining processes

The increasing volumes of data processed nowadays explain the growing interest in Big Data systems. However, frequently the data lack ground-truth labels. In the case of surface roughness prediction, the labeled data is composed of labeled machining vectors describing tool settings and tool behavior combined with the surface roughness observed in the product developed with these machining settings. Hence, a labeled machining vector \({\mathbf {x}}\in R^n\) is a vector of the form \([T({\mathbf {x}}), O({\mathbf {x}}),L]\), where \(T({\mathbf {x}})\) is a vector representing tool settings defined before the machining process starts, such as feedrate per revolution; \(O({\mathbf {x}})\) is a vector representing observed tool behavior, such as vibration; and L is the label. In the case under analysis, L denotes the roughness of the surface developed with the process. The roughness label gives information on either a continuous roughness, r, or a discretized roughness, d(r). Time-consuming and expensive off-line roughness measurements are needed to include roughness in the data. As illustrated in Fig. 2, such measurements are a vital step towards constructing and evaluating prediction models, M, developed in supervised processes. The need to perform such measurements inevitably results in the use of only a limited number of records acquired from the CNC in the role of training and testing data.

The key assumption of the methods proposed in this study is the use of other records acquired from the CNC with no extra overheads for manual measurements i.e. the records for which no roughness measurements are made. The aim is to use unlabeled machining vectors \({\mathbf {y}}=[T({\mathbf {y}}), O({\mathbf {y}})]\in U\) to improve the accuracy of the prediction models. Such vectors are available in the form of data streams from modern CNC. The way these data are used is shown in the right part of Fig. 2. First of all, a stream integration method is used to select a subset of the data arriving from the CNC. Then, the machining vectors \({\mathbf {y}}\) are provided with propagated labels, i.e. roughness predicted by model M, to be added to the training data R in the next step. Hence, the extension of the original training set, R, to \({\tilde{R}}: R\subset {\tilde{R}}\) by adding new experimental vectors with propagated roughness labels is considered. We expect that the prediction model \({\tilde{M}}()\), i.e. the model built with the extended data set \({\tilde{R}}\), will yield higher accuracy than the corresponding model M() built with the original training data set R. To attain this objective, we propose a process of label propagation based on self-training, i.e. using a prediction model M to generate labels for initially unlabeled vectors \({\mathbf {y}}\in U\) so that \([{\mathbf {y}},M({\mathbf {y}})]\) can be included in the data used to train the ultimate prediction model.

The proposed semi-supervised method and the evaluation of its impact are defined in detail in Algorithm 1. First of all, the initial labeled data, including roughness measurements, was collected, as shown in Fig. 2. The initial data was split into a training set, R, and a testing set, T. Selected instances from the remaining unlabeled data stream U were used in a semi-supervised process to extend the training data. Instance selection is performed by the stream integration method and relies on tool settings. The inclusion of instances, \(u\in U\), captured under tool settings already present in R, and the inclusion of instances collected under new tool settings, are both considered in this study. As the division of available data into R, T, U has a possible impact on model accuracy, multiple divisions of data and multiple method runs are applied to develop accuracy estimates for individual stream integration methods. The details of both instance selection performed by stream integration methods and the evaluation process are discussed in the “Stream integration methods and their evaluation” section.

As far as the supervised process is concerned, R data are used to develop a prediction model, M, which is represented by DevelopSupervisedModel(). The model is capable of forecasting surface roughness in an on-line manner i.e. using tool settings and tool behavior captured during the process. As the data are constantly transmitted from the CNC, the volume of unlabeled data, \(U_t\), available at time t is also constantly growing. Hence, a part of the unlabeled data stream can be extracted with the help of the stream integration method SelectInstances() to form \(S_t\). The machining vectors contained in \(S_t\) after having been provided with predicted roughness are added to R to form an extended training data set \({\tilde{R}}_t\). Subsequently, the \({\tilde{M}}_t\) model is built with \({\tilde{R}}_t\) data. Finally, its performance indicators are calculated and compared with the performance indicators of model M built in a standard supervised process.
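The self-training step described above can be sketched as follows. This is a minimal illustration rather than Algorithm 1 itself: a 1-nearest-neighbour predictor written in plain Python stands in for the supervised technique, the function names are hypothetical stand-ins for the model-development and evaluation steps, and the one-dimensional toy data is invented purely to exercise the code.

```python
import math

def develop_model(training):
    """Build a 1-nearest-neighbour predictor from (features, label) pairs."""
    def predict(features):
        _, label = min(training, key=lambda rec: math.dist(rec[0], features))
        return label
    return predict

def self_train(labeled, selected_unlabeled):
    """Extend R with propagated labels and rebuild the model on the result."""
    model = develop_model(labeled)                            # M built on R
    propagated = [(y, model(y)) for y in selected_unlabeled]  # [y, M(y)]
    extended = list(labeled) + propagated                     # extended set
    return develop_model(extended), extended                  # model on it

def accuracy(model, testing):
    """Share of testing records whose label is predicted correctly."""
    return sum(model(x) == label for x, label in testing) / len(testing)

# Invented toy data: features stand for the concatenated [T(x), O(x)] values.
R = [((0.0,), "N4"), ((10.0,), "N5")]
S_t = [(4.0,)]             # unlabeled vector selected from the stream
T = [((6.0,), "N4")]       # held-out labeled record

M = develop_model(R)
M_tilde, R_tilde = self_train(R, S_t)
```

In this toy case the unlabeled vector fills a gap between the two labeled records, so the rebuilt model classifies the test record differently from M. Whether such propagated labels help on real data is exactly what the evaluation procedure in the following sections is designed to measure.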

Some of the key aspects of the method outlined in Algorithm 1 are as follows:

The algorithm relies on the fact that tool settings and tool behavior are available during the machining process. These data can be automatically collected from the CNC of the machine and vibration acquisition equipment and extracted through the Programmable Logic Controller (PLC) of the milling machine, as has already been demonstrated in huge milling machines with a standard CNC, under real-life industrial conditions (Palasciano et al. 2016).

The key value of stream data \(S_t\) extracted with the stream integration method is the tool behavior \(O({\mathbf {x}})\) observed at known tool settings \(T({\mathbf {x}}), {\mathbf {x}} \in S_t\), a core aspect of which is vibration data. Importantly, the vibration data acquired for new tool settings, not represented in the training data R, can be used by the proposed algorithm to train the prediction model \({\tilde{M}}\).

We propose a method capable of using potentially different artificial intelligence techniques to exploit the unlabeled data from industrial processes. Various supervised techniques such as neural networks, kNN, and random forest can be used in DevelopModel(). Periodical updates to \({\tilde{R}}\) have to be considered to take into account newly arriving \({\mathbf {y}}\in U\) instances received from a data stream produced by the machine CNC. Hence, techniques with a limited need to search for parameter values, such as random forest and kNN, are preferred to techniques that are less resilient to improper parameter settings, such as multilayer perceptrons, known to rely largely on appropriate network topology settings.

Stream integration methods and their evaluation

Two stream integration methods to be used with the high level Algorithm 1 in its SelectInstances() step are proposed in this study. The key objective is to select the data from an unlabeled data stream for inclusion in the self-training process. This yields two combinations of Algorithm 1 and the stream integration method, which in what follows are referred to as the methods. The first is the Self-training over New Tool Settings (SToNewTS) method. This method relies on selecting only unlabeled data collected under tool settings not present in the training data set. Therefore, \(S_t\) includes machining vectors such that \(\forall {\mathbf {x}}\in S_t: \not \exists _{{\mathbf {y}}\in R}: T({\mathbf {y}})=T({\mathbf {x}})\).

Another approach is the use of the Self-Training over the Same Tool Settings (SToSameTS) method. The latter method assumes that the data set \(S_t\) contains the same tool settings as labeled data R. Hence, unlabeled \(S_t\) data is selected, such that \(\forall {\mathbf {x}}\in S_t: \exists _{{\mathbf {y}}\in R}: T({\mathbf {y}})=T({\mathbf {x}})\).
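The two selection rules can be sketched as a single filter over the unlabeled stream. The record layout (dictionaries with keys "T", "O" and "L"), the function names, and the sample values are all hypothetical; tool settings are stored as tuples and compared for exact equality, which is an assumption about how identical settings are detected.

```python
def tool_settings_of(records):
    """Set of tool-setting tuples present in a collection of records."""
    return {rec["T"] for rec in records}

def select_instances(unlabeled, labeled, method):
    """Pick unlabeled vectors according to the stream integration method."""
    known = tool_settings_of(labeled)
    if method == "SToNewTS":
        # Keep only vectors whose tool settings do not occur in R.
        return [y for y in unlabeled if y["T"] not in known]
    if method == "SToSameTS":
        # Keep only vectors whose tool settings already occur in R.
        return [y for y in unlabeled if y["T"] in known]
    raise ValueError(f"unknown stream integration method: {method}")

# Invented records: "T" holds tool settings, "O" observed behavior, "L" the label.
R = [{"T": (1.0, 400.0), "O": (0.12,), "L": "N4"},
     {"T": (2.0, 600.0), "O": (0.30,), "L": "N5"}]
U = [{"T": (1.0, 400.0), "O": (0.15,)},
     {"T": (3.0, 800.0), "O": (0.41,)}]

new_ts = select_instances(U, R, "SToNewTS")
same_ts = select_instances(U, R, "SToSameTS")
```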

These two methods have been verified with extensive calculations that are shown below. The question is whether propagating labels from training records to initially unlabeled records obtained in everyday production can improve roughness prediction. In industrial settings, the two methods described above could be mixed, as both new tool settings and machining under tool settings already represented in the training data could take place.

What is crucial for the evaluation of any semi-supervised technique is the procedure aiming at calculating the performance indicators based on multiple runs of the technique with multiple data subsets. This approach ensures that multiple data sets are taken into account in method evaluation, while addressing the real-life constrained size of difficult-to-attain labeled experimental data.

In response to all of these points, a formal algorithm for evaluating semi-supervised techniques is required, especially in view of the need to model machining processes. This algorithm is summarized in Algorithm 2. The key part of Algorithm 2 is selecting the subsets of \(\varOmega \) data to play the role of training, unlabeled, and testing data sets, respectively. Let us denote by \(H(A)=\{T({\mathbf {x}}): {\mathbf {x}} \in A\}\) the set of tool settings present in a data set A. \(Split(\varOmega ,T(\varOmega ))\) generates random divisions of available data into three disjoint subsets, of equal size where possible, while taking into account tool settings present in each of the vectors contained in \(\varOmega \). In the case of SToNewTS, the Split() method satisfies the condition \(H(S_i)\cap H(S_j)=\emptyset , i\ne j\). However, in the case of SToSameTS, the Split() method yields another division. Namely, under the latter technique, \(\forall i,j: H(S_i)=H(S_j)\). The proportion of machining vectors to be provided with predicted roughness \(M({\mathbf {x}})\) is controlled by an \(\alpha \) parameter. This parameter makes it possible to see the impact of a growing proportion \(\frac{card({\tilde{R}})-card(R)}{card({\tilde{R}})}\) on model accuracy. The selection of the subset of vectors to be provided with predicted roughness is performed within Select() and can be based on various random and non-random strategies. Finally, RunSemiSupervised() calls Algorithm 1. Importantly, \(S_t=U_t\) in this case, as the stream integration method has already been applied in Algorithm 2.

As a consequence of Algorithm 2, \(3\times N_\mathrm {max}\) runs were performed, which provided a basis for modeling accuracy estimates produced over a number of data sets of varied content. Moreover, the varied outputs of the modeling technique applied to the same training data were also taken into account.
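The data division at the core of this evaluation can be sketched as below. This is one plausible reading, not a transcription of Algorithm 2: the round-robin assignment, the rotation of the three subsets through the training, unlabeled and testing roles (which would account for three runs per split), the purely random Select() strategy, and all function names are assumptions.

```python
import random
from collections import defaultdict

def split(records, method, rng):
    """Divide (tool_settings, payload) records into three disjoint subsets."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec[0]].append(rec)
    subsets = [[], [], []]
    if method == "SToNewTS":
        # Whole tool-setting groups go to one subset: H(S_i) and H(S_j) disjoint.
        keys = list(groups)
        rng.shuffle(keys)
        for i, key in enumerate(keys):
            subsets[i % 3].extend(groups[key])
    elif method == "SToSameTS":
        # Each group is spread over all subsets: H(S_i) = H(S_j), which
        # requires at least three records per tool setting.
        for group in groups.values():
            members = list(group)
            rng.shuffle(members)
            for i, rec in enumerate(members):
                subsets[i % 3].append(rec)
    else:
        raise ValueError(f"unknown method: {method}")
    return subsets

def role_assignments(subsets):
    """Rotate the subsets through the (training, unlabeled, testing) roles."""
    return [(subsets[i], subsets[(i + 1) % 3], subsets[(i + 2) % 3])
            for i in range(3)]

def select(unlabeled, alpha, rng):
    """Randomly keep a share alpha of the unlabeled vectors."""
    return rng.sample(unlabeled, round(alpha * len(unlabeled)))

# Invented data: six tool settings, each with three repeated records.
records = [((s,), r) for s in range(6) for r in range(3)]
new_split = split(records, "SToNewTS", random.Random(0))
same_split = split(records, "SToSameTS", random.Random(0))
```

With \(\alpha =0\) the selection is empty and the procedure reduces to fully supervised model development, while \(\alpha =1\) passes every unlabeled vector on for label propagation.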

Results

Key assumptions and objectives of the evaluation method

When estimating the impact of adding extra records with propagated labels, several aspects have to be considered. First of all, it is important to verify whether the models \({\tilde{M}}\) developed in a semi-supervised manner yield improved prediction accuracy as compared to the M models developed with the original training data.

Secondly, the statistical significance of possible accuracy improvements should be verified. Moreover, it needs to be verified whether the improved model accuracy is observed under only one modeling technique, such as kNN, or under other techniques as well. One further aspect is to test the impact of using a growing volume of stream data \(U_t\) to develop prediction models i.e. whether models developed based on \(U_{t+\varDelta t}\) data attain higher accuracy than the models developed while using \(U_t\) data.

Last, but not least, the industrial aspect is of particular importance. More precisely, the question is whether the addition of experimental data missing measurement-based roughness labels, which have been gathered from experiments under the tool settings already represented in the training data, can improve model accuracy. Equally importantly, the question of whether enrichment of the training data set with the data from tool settings that are not represented in the original training data will yield improvements in model accuracy needs to be answered.

A number of simulations were performed using Algorithm 2 to answer all of these questions and to verify the merits of the proposed method. Two embedded techniques were used to develop prediction models. The first, Nearest Neighbor (kNN, k = 1), is an example of an instance-based method that illustrates the impact of semi-supervised approaches on distance-based machine learning techniques. The other method in use, random forest with k = 50 trees, illustrates the class of methods relying on an ensemble of trees. Both techniques were used to develop the roughness prediction models.

In the experiments performed with the SToNewTS and the SToSameTS methods, identical tool settings were defined in terms of \(T({\mathbf {x}})=[Ap,f,N,fz,Vc,ft,As,MRR]\). Hence, in the case of the SToNewTS approach, the unlabeled data, U, only contains records with new combinations of [Ap, f, N, fz, Vc, ft, As, MRR] not found in the training data R. In particular, some new settings of individual parameters not present in the training data may be present in the unlabeled data.

Continuous versus discretized roughness case

The results of sample experiments performed for discretized roughness are summarized in Table 2. These results were attained under the SToNewTS method i.e. where only unlabeled records collected under tool settings not present in the training data are potentially added to the training data. So that different possible data divisions could be taken into account in the analysis, Algorithm 2 was used with \(N_\mathrm {max}=50\). This yields 150 runs of the process for each \(\alpha \) value. The table shows the accuracy of roughness prediction starting from \(\alpha =0\) i.e. fully supervised model development, in which only originally labeled data is used for model development. Next, as \(\alpha \) grows, more and more instances \({\mathbf {x}}\in U\) are labeled and added to the training data to contribute to the development of the model. It can be observed that the rate of correct prediction of discretized roughness grew gradually from 64.80% for \(\alpha =0\) to 69.86% for \(\alpha =1\).

Accuracy distribution of the prediction models summarised in Table 2 is shown in Fig. 3. The figure depicts the distribution of roughness prediction accuracy for discretized roughness cases under \(\alpha =0,0.1,0.2,\ldots ,1\) and confirms the growth of median accuracy of the models, which follows from the growth of \(\alpha \) coefficient. Observe that the standard deviation of the accuracy of individual models tends to decrease with growing values of \(\alpha \). In other words, the development of the self-trained model with kNN in the SToNewTS approach with \(\alpha =1\) yields more stable results than the results of model development with only scarce training data. However, although important, Fig. 3 does not verify whether the difference in the accuracy of models only built with training data in comparison with the models developed with both original training data and unlabeled instances provided with propagated labels is statistically significant. Statistical significance tests were therefore applied to establish the statistical significance of the difference between model accuracy under the supervised and the semi-supervised approaches observed in the testing sets, T. In particular, Table 2 includes p values and confidence intervals \([c_\mathrm {min},c_\mathrm {max}]\) of the aforementioned difference of the classification accuracy of models \({\tilde{M}}\) and M developed in 150 method runs. It follows from the table that the accuracy improvement is statistically significant at \(p<0.05\) already for \(\alpha \ge 0.3\). Hence, even a limited number of cases can contribute to the improvement of the roughness model accuracy.

To provide further insight into the calculations documented in Table 2, let us investigate two confusion matrices, developed for the worst-case and best-case scenarios i.e. for \(\alpha =0\) and \(\alpha =1\), respectively. These matrices are visualized in Fig. 4, each shown with its own graph composed of tiles representing matrix entries. In the upper part of every figure tile, \(p_{\mathrm {C}}(l,{\tilde{l}})=100\times \frac{\sum _{i=1}^{3 \times N_\mathrm {max}} card(\{({\mathbf {x}},L)\in T_i: L=l\wedge M({\mathbf {x}})={\tilde{l}}\})}{\sum _{i=1}^{3 \times N_\mathrm {max}} card(\{({\mathbf {x}},L)\in T_i : L=l\})}\) is provided i.e. the percentage of testing records having true roughness l, for which predicted roughness was \({\tilde{l}}\). A darker color means higher \(p_{\mathrm {C}}(l,{\tilde{l}})\) values i.e. that for test cases having true class l, class \({\tilde{l}}\) was frequently predicted. The concentration of dark tiles along the diagonals of the figures reflects the fact that, most frequently, either the true class or one of the similar classes is predicted. In addition, the lower part of every tile contains in brackets \(p(l,{\tilde{l}})=100\times \frac{\sum _{i=1}^{3 \times N_\mathrm {max}} card(\{({\mathbf {x}},L)\in T_i: L=l\wedge M({\mathbf {x}})={\tilde{l}} \})}{\sum _{i=1}^{3 \times N_\mathrm {max}}card(T_i)}\) i.e. the percentage, out of all testing records, of records having true roughness of class l for which predicted roughness was \({\tilde{l}}\). Hence, the confusion matrix is extended with the share of individual \((l,{\tilde{l}})\) cases in the testing data set. This reveals that the data is largely imbalanced with only \(\sum _{{\tilde{l}}\in \{N3,N4,N5,N6\}} p(N3,{\tilde{l}})=4.02\%\) cases being N3 class cases. Furthermore, only 4.72% of cases belong to class N6.
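The two percentages shown in each tile can be computed from pooled (true, predicted) pairs as sketched below. The function name and the toy pairs are hypothetical and only serve to show how the two normalizations differ; they are not results from the study.

```python
from collections import Counter

CLASSES = ["N3", "N4", "N5", "N6"]

def confusion_percentages(pairs):
    """Compute p_C and p from (true, predicted) pairs pooled over all test sets."""
    counts = Counter(pairs)
    per_true_class = Counter(true for true, _ in pairs)
    total = len(pairs)
    p_c, p = {}, {}
    for l in CLASSES:
        for l_pred in CLASSES:
            c = counts[(l, l_pred)]
            # p_C: share among testing records whose true class is l.
            p_c[(l, l_pred)] = 100.0 * c / per_true_class[l] if per_true_class[l] else 0.0
            # p: share among all testing records.
            p[(l, l_pred)] = 100.0 * c / total
    return p_c, p

# Invented toy predictions: 10 pooled testing records.
pairs = ([("N4", "N4")] * 3 + [("N4", "N5")] + [("N5", "N5")] * 4
         + [("N3", "N4")] * 2)
p_c, p = confusion_percentages(pairs)
```

Each row of \(p_{\mathrm {C}}\) sums to 100 for any class that occurs in the testing data, whereas all entries of p together sum to 100, so summing p over a row gives the share of that class in the testing data.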

The left part of Fig. 4 shows the accuracy observed under \(\alpha =0\), i.e. when only the original training data were used to develop the roughness prediction models. It follows from the figure that the recognition rate varies widely between classes. Only 23.29% of N3 cases were correctly predicted to have N3 roughness. This can be attributed to the fact that N3 records are relatively rare in the data and that whether N3 roughness is attained depends on unmeasurable conditions such as tool wear or material inhomogeneities. The class with the highest correct prediction rate is N5, at 76.08%. Importantly, N4 and N5 cases are very frequent in the data; correctly predicted N5 cases alone account for \(p(N5,N5)=44.96\%\) of all test records. Hence, the models correctly predict roughness for the majority of cases in industrial experiments.

The right side of Fig. 4 shows the accuracy attained under \(\alpha =1\), i.e. in the best-case scenario, with the semi-supervised approach used to its full extent. It can be observed that by switching to semi-supervised models and extending the training data using the SToNewTS approach, accuracy improvements were attained for classes N3, N4 and N5. A minor decrease was observed only for the N6 class. Importantly, (N4, N5) misclassification was reduced from 26.59% to 11.88%. At the same time, the share of correctly recognized N5 cases grew from 76.08% to 81.59%. Of particular industrial value is the fact that only 0.74% of all testing records were misclassified by more than one level, which follows directly from the combined value of p(N3, N5) and p(N5, N3). This is a major reduction compared with the 1.71% of all testing records misclassified by more than one level under \(\alpha =0\). Hence, the semi-supervised approach increased overall accuracy, improved the recognition of the majority of classes and largely reduced major errors in roughness prediction.
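The share of records misclassified by more than one level can be computed from pooled confusion counts as sketched below. The class order N3, N4, N5, N6, the function name and the counts are assumptions made for illustration; the counts are not the data behind Fig. 4.

```python
ROUGHNESS_CLASSES = ["N3", "N4", "N5", "N6"]  # assumed order, smoothest first


def far_error_share(counts, classes=ROUGHNESS_CLASSES, min_gap=2):
    """Percentage of all testing records whose predicted class lies at least
    `min_gap` levels away from the true class.

    counts[l][l_hat] is the number of records with true class l predicted as l_hat.
    """
    level = {name: i for i, name in enumerate(classes)}
    total = sum(sum(row.values()) for row in counts.values())
    if total == 0:
        raise ValueError("no testing records")
    far = sum(
        n
        for true_class, row in counts.items()
        for predicted, n in row.items()
        if abs(level[true_class] - level[predicted]) >= min_gap
    )
    return 100.0 * far / total


# Hypothetical pooled counts (200 records); only N3->N5 and N5->N3 are far errors.
counts = {
    "N3": {"N3": 2, "N4": 6, "N5": 2, "N6": 0},
    "N4": {"N3": 1, "N4": 50, "N5": 29, "N6": 0},
    "N5": {"N3": 1, "N4": 20, "N5": 75, "N6": 4},
    "N6": {"N3": 0, "N4": 0, "N5": 6, "N6": 4},
}
share = far_error_share(counts)
print(f"{share:.2f}% of records are off by more than one level")
```

In this toy example, as in the text, the only non-zero contributions come from the (N3, N5) and (N5, N3) cells.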

There is also the question of whether the benefits arising from the semi-supervised approach can also be observed with other embedded supervised techniques, such as random forest. Figure 5 was developed to answer this question. It shows the differences in model accuracy between the semi-supervised SToNewTS approach and the fully supervised approach, together with their confidence intervals, for varied \(\alpha \) values, obtained with the same evaluation process laid out in Algorithm 2 and performed with 150 runs. Two data series are shown, i.e. for models developed with kNN and with random forest. The dark gray sections highlight the \(\alpha \) ranges that yield statistically significant differences between the accuracy of models \({\tilde{M}}\) and M. For each series, not only the mean accuracy difference line is shown, but also the \([c_\mathrm {min},c_\mathrm {max}]\) range below and above it. It can be observed that, in the case of random forest, the accuracy improvement caused by the use of unlabeled machining vectors [T(x), O(x)] starts at \(\alpha =0.2\). Hence, even though the unlabeled data set contained data from tool settings other than those present in the labeled training data set, it made a successful contribution to the development of the model.

It may be asked whether semi-supervised model development yields similar accuracy improvements when SToNewTS is applied to continuous roughness values. The results of each evaluation are shown in Fig. 6. This time, a statistically significant reduction of the Mean Absolute Error (MAE), \(\varDelta E_\alpha (T)\), observed on the testing sets is attained only for the kNN technique exploiting relatively large unlabeled data sets, i.e. those developed for \(\alpha \ge 0.8\). This phenomenon can be explained by the fact that discretized roughness can be predicted with higher confidence than continuous roughness. Hence, the development of the model can benefit more from the self-training approach when the prediction problem is treated as a classification task performed with discretized roughness. Still, large volumes of unlabeled data can also yield accuracy gains in the prediction of continuous feature values.
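For the continuous case, the quantity being compared is the change in MAE between the supervised and the semi-supervised model on the same testing set. A minimal sketch follows; the sign convention (negative values mean that the semi-supervised model has the lower error), the function names and the roughness values are assumptions for illustration only.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute deviation between measured and predicted roughness."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two equally long, non-empty sequences")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mae_change(y_true, pred_supervised, pred_semi_supervised):
    """MAE of the semi-supervised model minus MAE of the supervised model.

    Assumed convention: a negative result is a reduction of the error.
    """
    return mean_absolute_error(y_true, pred_semi_supervised) - mean_absolute_error(
        y_true, pred_supervised
    )


# Hypothetical roughness measurements and predictions on one testing set.
measured = [1.0, 2.0, 3.0, 4.0]
predicted_supervised = [1.5, 2.5, 2.0, 4.5]
predicted_semi_supervised = [1.25, 2.25, 2.5, 4.25]
delta = mae_change(measured, predicted_supervised, predicted_semi_supervised)
print(f"MAE change: {delta:+.4f}")
```

Repeating this over many runs and testing whether the mean change differs from zero gives the kind of significance statement made for Fig. 6.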

Label propagation under the same and different tool settings

Finally, Table 3 compares the SToNewTS method with the SToSameTS method in the case of discretized roughness. The first part of the table shows the results for the SToNewTS technique, previously depicted in Fig. 5. Whereas that figure shows the change in the correct classification rate \(A_\alpha (T)\) between the fully supervised and the semi-supervised scenarios, Table 3 provides the correct classification rate itself, \(A_\alpha (T)\), and the p value for different \(\alpha \) settings, from the fully supervised scenario of \(\alpha =0\) through to the possible addition of many instances provided with propagated labels. The accuracy values \(A_\alpha (T)\) that differ from the accuracy \(A_0(T)\) with statistical significance at \(p=0.05\) are shown in bold. In the case of SToNewTS, random forest can be observed to yield higher accuracy than the kNN technique.

It is interesting to compare these results with the results attained for SToSameTS. First of all, in the latter case, the initial accuracy for \(\alpha =0\) is higher than in the case of SToNewTS. This result is not surprising, because the SToSameTS method assumes that testing is performed only on data containing the same tool settings as those present in the originally labeled training set. Interestingly, in such a scenario, the attempts to use the semi-supervised approach yielded no statistically significant improvement in accuracy for random forest. Even more importantly, for the kNN technique the results worsened as \(\alpha \) grew, which can be explained by the fact that the initial training data set already contains data for all tool settings present in the testing phase. Hence, extending it with instances provided with propagated labels may result in training data \({\tilde{R}}\) that are noisier than the initial training data R. While random forest is resilient to such noise, kNN is more dependent on individual instances. This clearly shows that additional instances arising from the semi-supervised approach have to be introduced on a selective basis. In particular, there is a need for stream integration methods that extract only a part of the data stream from the CNC for use as a training data extension.
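The idea of selective extension can be sketched as follows. This is a generic self-training illustration, not the authors' algorithm: it assumes each record carries a hashable tool setting and a numeric feature vector, propagates labels with a 1-nearest-neighbor rule, and admits only unlabeled records whose tool setting is absent from the labeled data. All names and values are hypothetical.

```python
from math import dist


def nearest_label(features, labeled):
    """Label of the labeled record closest to `features` (1-NN, Euclidean)."""
    return min(labeled, key=lambda record: dist(record["x"], features))["label"]


def extend_with_new_settings(labeled, unlabeled):
    """Return the labeled data extended with propagated labels, using only
    unlabeled records collected under tool settings not seen in `labeled`."""
    known_settings = {record["setting"] for record in labeled}
    extended = list(labeled)
    for record in unlabeled:
        if record["setting"] in known_settings:
            continue  # setting already covered by measured labels: skip it
        extended.append({**record, "label": nearest_label(record["x"], labeled)})
    return extended


# Hypothetical records: setting = (rotation speed, depth of cut), x = vibration features.
labeled = [
    {"setting": (100, 0.5), "x": (0.10, 0.20), "label": "N4"},
    {"setting": (200, 0.5), "x": (0.90, 0.80), "label": "N5"},
]
unlabeled = [
    {"setting": (100, 0.5), "x": (0.12, 0.22)},  # known setting, not used
    {"setting": (300, 1.0), "x": (0.85, 0.75)},  # new setting, label propagated
]
extended = extend_with_new_settings(labeled, unlabeled)
print(len(extended), extended[-1]["label"])
```

Skipping records from already covered settings avoids adding propagated, and therefore possibly wrong, labels where measured labels are available, which is the situation in which kNN accuracy was observed to degrade.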

Conclusions

In this study, an improvement of roughness prediction techniques has been proposed that is based on the use of otherwise discarded unlabeled data from the CNC. A method based on the idea of semi-supervised learning has been developed. More precisely, the proposed method is based on a self-training paradigm and is focused on data stream processing. We have shown that this approach can yield statistically significant improvements in prediction accuracy, if properly applied. Moreover, the benefits of the proposed approach were shown to be greatest when discretized roughness was predicted and new tool settings were present in the data stream. Hence, the method is capable of exploiting data acquired under tool settings that are not represented in the training data. Furthermore, the need for stream integration methods that select only a part of the vibration data stream from the CNC has been confirmed. It should be emphasized that the same trends in accuracy changes resulting from the integration of unlabeled data were observed for different machine learning techniques, namely instance-based learners and random forests.

Future work will focus on iterative model development and on the comparison of multiple techniques for selecting unlabeled instances that can be labeled with high confidence and that can contribute to extended training data sets. Moreover, the model will also be applied to other manufacturing sectors where experimental tests are very costly, such as the laser machining of microcomponents.

References

Abellan-Nebot, J. (2010). A review of artificial intelligent approaches applied to part accuracy prediction. International Journal of Machining and Machinability of Materials, 8(1–2), 6–37. https://doi.org/10.1504/IJMMM.2010.034486.

Benardos, P., & Vosniakos, G. (2002). Prediction of surface roughness in CNC face milling using neural networks and Taguchi’s design of experiments. Robotics and Computer-Integrated Manufacturing, 18(5–6), 343–354.

Benardos, P. G., & Vosniakos, G. C. (2003). Predicting surface roughness in machining: A review. International Journal of Machine Tools and Manufacture, 43(8), 833–844. https://doi.org/10.1016/S0890-6955(03)00059-2.

Brezocnik, M., Kovacic, M., & Ficko, M. (2004). Prediction of surface roughness with genetic programming. Journal of Materials Processing Technology, 157–158, 28–36.

Bustillo, A., Díez-Pastor, J. F., Quintana, G., & García-Osorio, C. (2011). Avoiding neural network fine tuning by using ensemble learning: Application to ball-end milling operations. The International Journal of Advanced Manufacturing Technology. https://doi.org/10.1007/s00170-011-3300-z.

Chandrasekaran, M., Muralidhar, M., Krishna, C. M., & Dixit, U. S. (2010). Application of soft computing techniques in machining performance prediction and optimization: A literature review. The International Journal of Advanced Manufacturing Technology, 46(5), 445–464. https://doi.org/10.1007/s00170-009-2104-x.

Correa, M., Bielza, C., de J Ramirez, M., & Alique, J. R. (2008). A Bayesian network model for surface roughness prediction in the machining process. International Journal of Systems Science, 39(12), 1181–1192. https://doi.org/10.1080/00207720802344683.

de Souza, V. M. A., Silva, D. F., Batista, G. E. A. P. A., & Gama, J. (2015). Classification of evolving data streams with infinitely delayed labels. In 14th IEEE international conference on machine learning and applications, ICMLA 2015, Miami, FL, USA, December 9–11, 2015 (pp. 214–219). https://doi.org/10.1109/ICMLA.2015.174.

Díez-Pastor, J. F., Bustillo, A., Quintana, G., & García-Osorio, C. (2012). Boosting projections to improve surface roughness prediction in high-torque milling operations. Soft Computing, 16(8), 1427–1437. https://doi.org/10.1007/s00500-012-0846-0.

Fu, Y., Zhang, Y., Gao, H., Mao, T., Zhou, H., Sun, R., et al. (2017). Automatic feature constructing from vibration signals for machining state monitoring. Journal of Intelligent Manufacturing. https://doi.org/10.1007/s10845-017-1302-x.

Grzenda, M., & Bustillo, A. (2013). The evolutionary development of roughness prediction models. Applied Soft Computing, 13(5), 2913–2922. https://doi.org/10.1016/j.asoc.2012.03.070.

Grzenda, M., Bustillo, A., Quintana, G., & Ciurana, J. (2012). Improvement of surface roughness models for face milling operations through dimensionality reduction. Integrated Computer-Aided Engineering, 19(2), 179–197. https://doi.org/10.3233/ICA-2012-0398.

Iglesias, A., Munoa, J., & Ciurana, J. (2014). Optimisation of face milling operations with structural chatter using a stability model based process planning methodology. International Journal of Advanced Manufacturing Technology, 70(1–4), 559–571. https://doi.org/10.1007/s00170-013-5199-z.

ISO-4287 (1997). Geometrical Product Specifications (GPS)—Surface texture: Profile method—Terms, definitions and surface texture parameters. International Organization for Standardization.

ISO-4288. (1996). Geometrical Product Specifications (GPS)—Surface texture: Profile method—Rules and procedures for the assessment of surface texture. International Organization for Standardization.

Jain, A., & Lad, B. (2017). A novel integrated tool condition monitoring system. Journal of Intelligent Manufacturing. https://doi.org/10.1007/s10845-017-1334-2 (article in press).

Jemwa, G. T., & Aldrich, C. (2010). A transductive learning approach to process fault identification. In 13th IFAC symposium on automation in mining, mineral and metal processing. IFAC Proceedings Volumes, 43(9), 68–73. https://doi.org/10.3182/20100802-3-ZA-2014.00016.

Kondratovich, E., Baskin, I. I., & Varnek, A. (2013). Transductive support vector machines: Promising approach to model small and unbalanced datasets. Molecular Informatics, 32(3), 261–266. https://doi.org/10.1002/minf.201200135.

Loo, H. R., & Marsono, M. N. (2016). Online network traffic classification with incremental learning. Evolving Systems, 7(2), 129–143. https://doi.org/10.1007/s12530-016-9152-x.

Palasciano, C., Bustillo, A., Fantini, P., & Taisch, M. (2016). A new approach for machine’s management: from machine’s signal acquisition to energy indexes. Journal of Cleaner Production, 137, 1503–1515. https://doi.org/10.1016/j.jclepro.2016.07.030.

Prakasvudhisarn, C., Kunnapapdeelert, S., & Yenradee, P. (2008). Optimal cutting condition determination for desired surface roughness in end milling. The International Journal of Advanced Manufacturing Technology, 41(5), 440–451. https://doi.org/10.1007/s00170-008-1491-8.

Quintana, G., Bustillo, A., & Ciurana, J. (2012). Prediction, monitoring and control of surface roughness in high-torque milling machine operations. International Journal of Computer Integrated Manufacturing, 25(12), 1129–1138. https://doi.org/10.1080/0951192X.2012.684717.

Quintana, G., & Ciurana, J. (2011). Chatter in machining processes: A review. International Journal of Machine Tools and Manufacture, 51(5), 363–376. https://doi.org/10.1016/j.ijmachtools.2011.01.001.

Samanta, B., Erevelles, W., & Omurtag, Y. (2008). Prediction of workpiece surface roughness using soft computing. Proceedings of the Institution of Mechanical Engineers, Part B: Journal of Engineering Manufacture, 222(10), 1221–1232. https://doi.org/10.1243/09544054JEM1035.

Schwenker, F., & Trentin, E. (2014). Pattern classification and clustering: A review of partially supervised learning approaches. Pattern Recognition Letters, 37, 4–14. https://doi.org/10.1016/j.patrec.2013.10.017.

Teixidor, D., Grzenda, M., Bustillo, A., & Ciurana, J. (2015). Modeling pulsed laser micromachining of micro geometries using machine-learning techniques. Journal of Intelligent Manufacturing, 26(4), 801–814. https://doi.org/10.1007/s10845-013-0835-x.

Tiensuu, H., Juutilainen, I., & Röning, J. (2011). Modeling the temperature of hot rolled steel plate with semi-supervised learning methods (pp. 351–364). Berlin Heidelberg: Springer. https://doi.org/10.1007/978-3-642-24477-3_28.

Triguero, I., García, S., & Herrera, F. (2015). Self-labeled techniques for semi-supervised learning: Taxonomy, software and empirical study. Knowledge and Information Systems, 42(2), 245–284. https://doi.org/10.1007/s10115-013-0706-y.

Wu, X., Li, P., & Hu, X. (2012). Learning from concept drifting data streams with unlabeled data. Neurocomputing, 92, 145–155. https://doi.org/10.1016/j.neucom.2011.08.041.

Zainal, N., Zain, A., Radzi, N., & Othman, M. (2016). Glowworm swarm optimization (GSO) for optimization of machining parameters. Journal of Intelligent Manufacturing, 27(4), 797–804. https://doi.org/10.1007/s10845-014-0914-7.

Acknowledgements

This work was made possible thanks to the support received from Nicolas Correa S.A. and Ascamm Technological Center, which provided the milling data and performed all the experimental tests. The authors would especially like to thank Prof. Joaquim Ciurana and Dr. Guillem Quintana for their kind-spirited and useful advice.

Funding

The contribution of Andres Bustillo to this study was funded by the Spanish Ministry of Economy and Competitiveness (Grant Number TIN2015-67534-P). The contribution of Maciej Grzenda to this study was funded by the Warsaw University of Technology (Grant Numbers 504/01869/1120 and 504/03306/1120/40.000101). Maciej Grzenda is also affiliated with Orange Polska Research and Development Center.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Grzenda, M., Bustillo, A. Semi-supervised roughness prediction with partly unlabeled vibration data streams. J Intell Manuf 30, 933–945 (2019). https://doi.org/10.1007/s10845-018-1413-z

DOI: https://doi.org/10.1007/s10845-018-1413-z