Abstract

We deal with the problem of predictive maintenance (PdM) in a vehicle fleet management setting following an unsupervised streaming anomaly detection approach. We investigate a variety of unsupervised methods for anomaly detection, such as proximity-based, hybrid (statistical and proximity-based) and transformers. The proposed methods can properly model the context in which each member of the fleet operates. In our case, the context is both crucial for effective anomaly detection and volatile, which calls for streaming solutions that take into account only the recent values. We propose two novel techniques, a 2-stage proximity-based one and context-aware transformers along with advanced thresholding. In addition, to allow for testing PdM techniques for vehicle fleets in a fair and reproducible manner, we build a new fleet-like benchmarking dataset based on an existing dataset of turbofan simulations. Our evaluation results show that our proposals reduce the maintenance costs compared to existing solutions.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Proper maintenance of the industrial equipment is important and, traditionally, maintenance is scheduled based on empirical rules or statistics, such as the average time that a component of the equipment is damaged, or simply takes place after a component’s failure. These techniques often lead to both over- and under-estimates of the period in which maintenance should be performed, which, in turn, leads to waste, such as spendings on unnecessary maintenance and unforeseen suspensions of production. Predictive maintenance (PdM) provides a promising solution to all these problems, since it has been reported that it can achieve quality production operation with decreased maintenance costs (Scully, 2019).

Modern PdM techniques are data-driven and employ several advanced techniques from the area of data management and machine learning, e.g., Carvalho et al. (2019) and Kovalev et al. (2018). There are two main characteristics in the state-of-the-art: (i) most PdM techniques rely on labeled data and model training (Carvalho et al., 2019); and (ii) techniques tend to be application-dependent without evidence that can efficiently generalize to arbitrary industrial settings (Korvesis et al., 2018; Carvalho et al., 2019; Kovalev et al., 2018). In this work, we also focus on a specific setting, namely vehicle fleet (VF) management, but we depart from the mainstream rationale in that we do not employ supervised learning. This alleviates the need for relying on the existence of appropriate labeled training data.

Most PdM applications are deployed in industrial environments with the objective to avoid failures in major equipment. Most commonly, equipment is used for a specific repetitive task in the production line in a setting that remains constant over time. In contrast, vehicles operate in a volatile environment, e.g., under different weather conditions (rain, cold, sun, and so on) on a variety of roads and at different speeds. The driver is part of the dynamic environment as well. So, PdM in vehicles needs to address additional challenges due to the dynamic environment the VF operates (Theissler et al., 2021), which results in changes in the vehicle’s behavior over time. However, such changes should be considered along with their context; they may not correspond to failures and interestingly, non-changes may also denote anomalous behavior. Figure 1 shows an example of monitored histograms of a vehicle in our test dataset at three different days; the real-world dataset that we employ consists of such histograms. The leftmost histogram corresponds to a period immediately before a failure, while the two other histograms refer to normal operation. Comparing the leftmost histogram with the normal ones, e.g., using the Hellinger distance, does not provide any evidence about a forthcoming damage. Also, labeling the leftmost histogram as anomalous and using it for model training does not work. However, taking into account the behavior of similar vehicles during the same period operating in the same context can help us to identify the need for maintenance in the first case. Overall, we cannot build traditional machine learning models regarding the normality of a vehicle using supervised methods easily; PdM solutions in a dynamic context call for the development of unsupervised context-aware techniques.

To develop context-aware PdM techniques, we can distinguish between normal and anomalous behavior based on data that are collected in the same context as our target component. By target component, we refer to the member of the fleet, for which we try to detect deviations that correspond to failures in the close future. An external factor could lead to a change in the normal operation of the equipment and such a change does not necessarily imply a future failure; thus we do not aim to detect changes but deviations from the behavior of similar vehicles in the same context.

In our solution, we employ streaming outlier detection without any need for labeled data. Using such an approach is not new in vehicle PdM, as reported in Rögnvaldsson et al. (2018), Byttner et al. (2011), and Fan et al. (2015). However, we differ in the manner we employ proximity-based anomaly-detectionFootnote 1 , we devise a novel 2-stage solution, which is shown to be particularly effective in certain cases and also, we build a fleet anomaly detection framework using a deep learning model. The latter is the superior technique in the average case. In summary, the contribution of this work is threefold:

-

We propose distance-based, hybrid and deep-learning context-aware methods to perform fleet PdM. To efficiently define the context, we may employ clustering and dimensionality reduction. Also, we present how to properly fit a state-of-the-art deep learning model in a fleet environment.

-

We thoroughly evaluate our proposals against the state-of-the-art PdM solutions for VF in Rögnvaldsson et al. (2018), Byttner et al. (2011), and Fan et al. (2015) in terms of cost. We consider the cost and benefits of false and correct predictions, respectively, since presenting accuracy results may be misleading and/or meaningless in PdM.

-

Finally, we develop a novel benchmark fleet dataset based on an existing dataset used for remaining useful time prediction. The complete implementation of the techniques presented is freely availableFootnote 2 and the results presented hereby are reproducible to their full extent.

The remainder of this work is structured as follows. Section 2 discusses the related work. The existing techniques along with our proposals are presented in Section 3. We thoroughly evaluate the described techniques in a fleet management scenario, using a constructed fleet dataset based on an existing turbofan dataset in Section 4 and in a real bus fleet dataset in Section 5. We conclude in Section 6.

2 Related work

Employing advanced data-analytics for PdM is an emerging topic and several techniques have been proposed in the recent years. An overview of the data-driven PdM state-of-the-art is presented in works such as Carvalho et al. (2019) and Kovalev et al. (2018), where techniques for data preprocessing, feature selection, data storing, model building and validation, fault detection and prediction, and estimation of remaining useful life are described. However, it is important to notice that each application comes with its unique characteristics that affect the corresponding design (Korvesis et al., 2018). According to these survey reports, the most commonly employed techniques include Random Forest, Neural Networks in several forms and architectures, Support Vector Machines, Hidden Markov Models, and clustering solutions, such as k-means. These are accompanied by Fourier transformations, SARIMA models and techniques, such as PCA for dimensionality reduction.

We differ in that we advocate an unsupervised learning approach, i.e., we do not rely on any labeled data to train a model, as we perform (continuous) outlier detection. The most similar work to ours, which also employs outlier detection in a PdM fleet management scenario, has appeared in Rögnvaldsson et al. (2018), Byttner et al. (2011), and Fan et al. (2015) with all the outlier detection techniques in that work being hybrid, combining statistical and proximity techniques, but relying mostly on statistical assumptions. Our main difference is that we go beyond these proposals by leveraging pure distance-based anomaly detection and recent deep learning models. Distance-based anomaly detection has been also employed in an Industry 4.0 setting in Naskos et al. (2019) with a view to detecting failures; here we employ similar techniques for PdM, i.e., for timely prediction of failures before they actually occur. Furthermore, we implement a cluster-based technique for streaming data based on Diez et al. (2016) and fit a state-of-the-art transformer model, namely TranAD from Tuli et al. (2022), to fleet management.

PdM on top of continuous data

PdM techniques based on data analytics can be distinguished according to the data they operate on. The majority of techniques process sensor signals (Carvalho et al., 2019); however, there exist advanced methodologies that process distinct event logs, e.g., Korvesis et al. (2018), Sipos et al. (2014), and Wang et al. (2017). Our work falls into the former category with the clarification that we use aggregated values, although our techniques could be used also over raw data directly.

In both categories, data-driven techniques can be further separated in unsupervised, semi-supervised, and supervised. Unsupervised models are preferred in dynamic environments and when there are no labels in the data. As far as the first category (sensor signals) is concerned, the authors in Diez et al. (2016), Diez-Olivan et al. (2017), and Diez-Olivan et al. (2018) try to model the normality using clustering algorithms or v-SVM. Specifically, in Diez et al. (2016), the authors try to detect failures in bridge joints; regarding bridge joints as a fleet renders this technique applicable to our setting.

In many cases, we know how normal data look like or can obtain a small labeled dataset. In these cases, semi-supervised models may be used in order to take advantage of such knowledge. In PdM, most semi-supervised models use the information of normal data (without anomalies) to train a model; then, this model is applied to detect anomalies based on the reconstruction error. State-of-the-art examples of this rationale are presented in Audibert et al. (2020), Hundman et al. (2018), Meng et al. (2020), and Zhang et al. (2019), where Autoencoders, LSTM, and Transformers are trained to reconstruct normal data and detect anomalies. The combination of these models with adversarial and meta-learning techniques has shown tremendous results in anomaly detection tasks (Audibert et al., 2020; Tuli et al., 2022). Furthermore, in (Hundman et al., 2018; Meng et al., 2020), a dynamic thresholding technique is presented in order to mitigate the difficulty in efficient tuning, which is important, especially in streaming environments. Finally, in Zhang et al. (2019), a Convolution LSTM-like network is used to detect anomalies through the reconstruction of images derived from the data. In our work, we have selected the TranAD model in Tuli et al. (2022), which can learn from small datasets and is time-efficient (fast training). We fit TranAD in the fleet PdM case under investigation. To this end, we make extensions, such as clustering before training, application of a sliding window to handle streams and advanced thresholding.

PdM on top of discrete data

With regards to the second category, i.e., event-based PdM, the proposed techniques tend to use predefined event codes or artificial ones, extracted from raw data, in order to detect a deviation in the data and predict anomalies. Manco et. al predict failures in train doors using events from pre-installed software in Manco et al. (2017). In (Filios et al., 2020), the authors try to predict the number of stoppages of a packing machine using ARIMA and Prophet algorithms. Extreme learning is used in Fink et al. (2013) for the prediction of failure in trains in the near future. The ARMA algorithm is used in Baptista et al. (2018) as a feature along with other thirteen statistical features and PCA is performed before the event prediction process using KNN, RF, and SVM. In (Shafi et al., 2018), a novel framework is proposed for failure prediction using Diagnostic Trouble Codes (DTC) that are transmitted to the smartphone via bluetooth connections Another supervised methodology is presented in Wang et al. (2018) using a graph with relations among known anomaly sequences and sub-sequences of the data. Finally, the authors in Naskos et al. (2019) use both supervised and unsupervised methods for event-based predictive maintenance in a real industry case study, where a novel algorithm with Matrix Profile (Yeh et al., 2016) was used to transform data into time series of events. There are similarities between our techniques and event-based ones, such as the way the streaming data are handled, but the proposed techniques are suitable for (aggregated) sensor signals.

PdM in vehicle fleets

In this section, we narrow our focus on PdM in vehicles. Apart from the works in Rögnvaldsson et al. (2018), Byttner et al. (2011), and Fan et al. (2015) against which we directly compare, a survey about machine learning in predicting the need of maintenance tasks in the automotive industry can be found in Shafi et al. (2018). In (Derse et al., 2021), a solution based on handling missing values, applying PCA for feature selection and performing anomaly detection through isolation forest, particle swarm optimization, and k-means clustering algorithms to detect electronic control unit (ECU) system outliers is presented. The objective of this proposal is different than ours: in Derse et al. (2021), the aim is to detect outliers in the ECU system, which can be due to an intrusion or fault in a sensor, where our analysis focuses on fault prediction regarding in-vehicle equipment. In Chen et al. (2020), prediction of time-between-failures is performed. Data are combined with GIS information so that contextual information like weather conditions is used. The forecasting techniques employed are supervised, such as ANN, SVR, random forest, and gcForest. This prediction refers to the time between failures, while, on the other hand, we try to provide an effective way for live analysis and prediction of fault in each vehicle of the fleet.

In (Massaro et al., 2020), the authors use supervised ANN and clustering techniques in OBD II signals to perform driver style classification, which can be used for multiple purposes, such as consulting and PdM. This case is similar to ours with the difference that we use unsupervised methods instead of supervised ones. Also, the authors in Gardner et al. (2020) deal with a 2400-vehicle fleet with access to two types of logs, one with information for each vehicle and another one with maintenance details. They discover sequential patterns in the multivariate maintenance data using tensor decomposition, employ LSTM for PdM, and predict vehicle and fleet-level costs using an ARIMA time series model. With regards to the PdM task, we differ in that we use sensor readings from the vehicle in order to predict if maintenance is needed. The PdM techniques for vehicles are summarized in Table 1, where, apart from the different goals mentioned above, we also highlight their main limitations that render them inapplicable to our setting.

Streaming proximity-based anomaly detection

Finally, the state-of-the-art in streaming anomaly (or outlier) detection is described in textbooks such as Aggarwal (2017) and surveys, such as Boukerche et al. (2020). In this work, we build upon the proposal in Kontaki et al. (2011) due to the reasons mentioned in Section 3. However, our approach can be followed using any other existing exact distance-based outlier detection technique, such as Cao et al. (2014), Kontaki et al. (2016), Cao et al. (2016), Cao et al. (2017), Zhao et al. (2018), Yoon et al. (2019), and Tran et al. (2020) without changing the final results.

3 Unsupervised outlier detection techniques

Commonly, in PdM, we continuously monitor and process the condition metadata of the equipment under examination and the goal is to detect anomalous behavior (deviations) and system malfunctions with a view to proceeding to their timely treatment thus avoiding any more severe damage. The monitoring data is collected in real-time and, as such, is in the form of a data stream. In our case, we deal with fleet PdM. Instead of stand-alone equipment, we have multiple components (e.g., buses) as a part of a VF. Fleet members operate simultaneously and exhibit performance degradation over time. Moreover, the dynamic environment in which they operate results in a twofold challenge regarding outlier detection for PdM: firstly, to model the equipment behavior in such a way that extreme values correspond to malfunctions, and secondly, the underlying technique to take into account the context in which the equipment operates.

In this work, we investigate three existing approaches to perform outlier detection for PdM in vehicle fleets:

-

1.

Grand, which is a hybrid statistical and proximity-based technique (Rögnvaldsson et al., 2018).

-

2.

MCOD, a distance-based (proximity) technique (Kontaki et al., 2011).

-

3.

A simple streaming extension of the clustering-based technique in Diez et al. (2016), which considers each member of the fleet as a single cluster.

Our main novelty lies in that we propose two new methods that are shown to be more effective:

-

1.

A novel 2-stage algorithm, which adds a post-processing proximity check stage over the Grand method.

-

2.

A novel extension to the TranAD solution (Tuli et al., 2022) for vehicle fleets, where the state-of-the-art deep learning solution of TranAD is endowed by context-awareness and a more efficient thresholding technique (Yang et al., 2019).

The three existing approaches are also used to compare our proposals and are chosen because (i) they are already applied in real-world industrial cases, (ii) they are unsupervised and (iii) they can operate in a context-aware manner as explained in this section. Moreover, Grand is the main approach accompanying the VF dataset used in the evaluation.

3.1 Problem formulation and main workflow

In fleet PdM, we have data that are produced from a fleet Fl of components (members):

where each fi represents the data from the ith member of the fleet and is a multivariate time-series:

where each \({f_{i}^{t}}\) datapoint is collected from fi at a specific timestamp t and \({f_{i}^{t}} \in \mathbb {R}^{m}\) ∀ t. Moreover each fi has a start of life \(f_{i}^{start}\) and end of life \(f_{i}^{end}\), which can be different for each component of the fleet. Our objective is to predict the wear (behavior degradation) of each member fi before fi fails via outlier detection techniques.

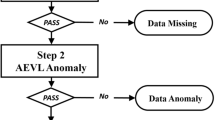

All the examined techniques conform to the high-level workflow depicted in Fig. 2. For all techniques, context-awareness is achieved through the definition of the Peer Group Pr, as explained below, whereas the deep learning technique employs clustering as well.

3.2 Statistical and hybrid methods

In statistical techniques, the data model is in the form of a closed-form probability distribution, and the parameters of this model are learned according to the corresponding data. As stated in Aggarwal (2017), “a major advantage of probabilistic models is that they can be easily applied to virtually any data type (or mixed data type), as long as an appropriate generative model is available for each mixture component.” However, this type of outlier detection is known to suffer from several limitations, such as over-fitting, difficulty in interpretation, especially in high dimensions, and the fact that sometimes, fitting the data to distribution is simply not appropriate (Aggarwal, 2017). Nevertheless, statistical-based methods have been proposed for fleet management (Rögnvaldsson et al., 2018; Byttner et al., 2011; Fan et al., 2015) especially for the VF dataset considered in our work; therefore we consider the corresponding techniques as our first competitor.

More specifically, we consider one variant of the so-called Consensus Self-Organizing Models (COSMO) (Byttner et al., 2011) method. This method is based on the wisdom of the crowd principle, where a group of similar equipment (i.e. a peer group) is used to determine normal behavior. The setting where the COSMO approach is applicable corresponds to a population (fleet) of components with similar characteristics and functionalities. In our case, this fleet of similar components is a fleet of vehicles, such as buses of a single type. COSMO extracts several metadata from raw monitored data, such as mean, average, correlation, and distributions values. The deviations are detected after the comparison of a sample with the peer group and the testing of the null hypothesis. COSMO is not an outlier detection technique, but a state-of-the-art framework for fleet management. We focus on the failure prediction part, which is done using an anomaly detection technique called Group anomaly detection (Grand).

In simple terms, Grand computes the strangeness of a new sample in relation to a Peer Group Pr, which captures the context. Then it derives the deviation level of the testing component using the strangeness of the lasts samples in time. GrandFootnote 3 comprises three parts regarding PdM, namely (i) streaming data representation, (ii) identification of the reference group, and (iii) detection of deviations. Regarding the first part, the Grand method can use several representations such as daily histograms, raw sensor data, or any representation of the raw data like Auto-Encoders (Rögnvaldsson et al., 2018). In addition, regarding the second part, in cases where there is no provided grouping of data sources, the peer group Pr is calculated from historical data from the same source as the testing sample. In such cases, the technique is referred to as Individual anomaly detection instead of Group anomaly detection.

More precisely, in Individual anomaly detection, there are two user-defined options for the construction of the Pr group, an inductive and a transductive one. In both, the Pr group is constructed in a manner that it captures the normal operation, which implies that it is necessary to have the capability to define what the normal system behavior is3. This is a limitation addressed by Group anomaly detection, where the knowledge of normal system behavior is not required. In Grand, the Pr group is constructed by fleet members that are assumed to operate in a similar environment. An anomaly is detected when the monitored data of a target member deviate in relation to the other members’ data.

In the Grand method, in order to detect deviations, we construct a distinct peer group Pr for each \({f_{i}^{t}}\) using the last npr datapoints of all fj ∈ Fl members excluding datapoints of fi, where npr is a parameter and can be expressed in the time domain (e.g., 15 days, one month). To test if a sample \({f_{i}^{t}}\) deviates, we calculate the strangeness of a sample \({f_{i}^{t}}\) (\(strng({f_{i}^{t}})\)) in relation to the rest of samples in a homogeneous set Pr. For this purpose, a non-conformity measure needs to be employed. Specifically, three non-conformity measures are considered to calculate \(strng({f_{i}^{t}})\): (i) Median, which is the distance of \({f_{i}^{t}}\) from the median of Pr (i.e., its most central pattern), (ii) Knn, which is the average distance to the k-nearest neighbors of \({f_{i}^{t}}\) within Pr, and (iii) Lof, which is the local outlier factor (Breunig et al., 2000) of \({f_{i}^{t}}\). In this way, the strangeness (\(strng({f_{i}^{t}})\)) of the examined sample \({f_{i}^{t}}\) and for all samples in the Pr are computed. After that, the p-value pt of the examined sample \({f_{i}^{t}}\) is computed using Algorithm 1 from (Dai and Bouguelia, 2020). If the p-values are uniformly distributed in [0,1], then the deviation level will be low; otherwise, it exhibits an increasing behavior. This procedure is slow, so it can be used only with low frequency in a real-time application, or with aggregated data like daily histograms as in our case (details are in Section 5).

3.3 Our proposal for proximity-based solutions

The rationale in proximity-based methods is to model outliers as points in a preferably metric space that are isolated from the remaining data based on similarity or distance functions (Aggarwal, 2017). To this end, three different techniques are usually used, namely clustering, density-based, and nearest-neighbor methods. In clustering and density-based methods, the outliers are points that are far away from dense regions. In the nearest-neighbor methodology (commonly referred to as distance-based outlier detection), the outliers are detected based on the number of their neighbors within an R distance or based on the distance from the k-nearest neighbor (Knorr et al., 2000). An advantage of proximity-based solutions is that they do not make any assumptions about the data distribution.

3.3.1 Distance-based outlier detection (DOD) for streams

In our case, continuous distance-based outlier detection is deployed, where one of the most widely used definitions for a distance-based outlier is adopted: an object \({f_{i}^{t}}\) is marked as an outlier, if there are less than k objects in a distance at most R from \({f_{i}^{t}}\), excluding \({f_{i}^{t}}\) itself (Knorr et al., 2000). Specifically, in this work where data is in form of a stream, the Micro-cluster-based Continuous Outlier Detection (MCOD) method is used (Kontaki et al., 2011); however, MCOD can be replaced by any other exact continuous distance-based outlier method mentioned in Section 2. MCOD operates exclusively in main-memory. It employs a sliding window to handle active objects of the data stream and an event-based approach to handle expired data (i.e. data that no longer belong to the window) and the newly arrived data. Also, MCOD uses micro-clusters to group data with a view to decreasing the number of distance computations, which are expensive; this aspect is important if real-time constraints are imposed.

Applying MCOD in our setting requires the specification of the window contents at each slide and the distance function. In a nutshell, the main rationale is to process new data as they arrive and compare new samples based on their distance from all existing samples in the current window. The window, as already mentioned, is typically sliding in an overlapping manner (as opposed to tumbling windows) and is common for all similar objects. If the segment of the bus fleet examined comprises one type of bus, this corresponds to a single sliding window. We can think of the window W as an alternative of Pr, with the difference that W contains datapoints from all fleet members and is calculated in every slide, while Pr is calculated for every fleet member. Such a distance-based outlier approach is characterized by the following configuration parameters apart from R and k: (i) the distance function, where the Euclidean distance is used in this work, (ii) the size of the window W, e.g., last 3 days or last 10000 measurements, and (iii) the size of the slide, which defines how frequently outlier computation is performed, e.g., every 1 hour or every new 1000 points. The reason we have chosen MCOD is threefold: (i) as evidenced in a third-party evaluation, it is suitable for real-time applications (Tran et al., 2016); (ii) it comes with flavors that can run several parameterizations concurrently (Kontaki et al., 2016), and (iii) it can be deployed on massively parallel platforms when PdM needs to be approached as a big data application (Toliopoulos et al., 2020). Furthermore, several open-source, freely available implementations exist (Georgiadis et al., 2013; Tran et al., 2016; Toliopoulos et al., 2020).

3.3.2 The 2-stage solution

The 2-stage solution aims to combine the rationale of the Grand and distance-based outliers methods. More specifically, to improve outlier detection results of the Grand method, we have added a proximity-based post-processing check after the computation of the deviation level. The idea is to use the proximity information of the sample in relation to the other samples in Pr. The 2-stage technique uses three parameters: R, Tout, and Tin, which are leveraged to assess (i) whether a deviation reported by the original Grand technique should be indeed reported as an outlier and (ii) whether a normal sample according to Grand should be reported as an outlier.

The main details are as follows. In the first stage, the Grand method is applied. In the second stage, for every sample, we calculate nn, which is the number of samples in Pr that are less than R distance far away. If the sample corresponds to a deviation and the percentage nn/|Pr| is larger than Tin, then we discard the initial decision and the sample is flagged as an inlier. In this way, we aim to decrease the false positives that are reported. Similarly, if the sample does not deviate and nn/|Pr| is smaller than Tout, we still report it as an outlier with a view to increasing true positives by catching more outliers. This double-check takes place to detect extreme cases, hence Tin takes relatively high values and Tout relatively low values.Footnote 4 In this way, we capture both seemingly deviating samples with many neighbors and non-seemingly deviating samples with very few neighbors.

3.4 Cluster-based outlier detection in fleet streaming data

In (Diez et al., 2016), a cluster-based method to find failure indications in joints of bridges is presented. We transfer this solution to our setting by treating joints as a fleet. This clustering-based technique comprises three main operations: i) outlier removal and cluster calculation, ii) computation of distance between clusters, and iii) deviation/outlier detection.

Each member of the fleet fi is seen as a different cluster. To form the clusters, we consider only datapoints of each fi in a sliding time window, as in MCOD. The only difference from MCOD is that W here is calculated for each time step. Essentially, as cluster fWi, we consider the datapoints of fi, which belong to W, fWi \(=\{{f_{i}^{t}} \mid t \in W \}\)

For each fWi, we perform outlier detection and removal in a repetitive process. Specifically, we calculate the distance of each datapoint in fWi from the cluster mean. Then we mark as an outlier each datapoint, whose distance is greater than the mean of all distances plus the standard deviation of such distances multiplied by a factor. This factor is set to 2, as proposed in the original paper (Diez et al., 2016). This process is stopped after a fixed number of iterations or when the maximum of such distances exceeds a threshold. The latter threshold is the mean of such distances plus the standard deviation multiplied by 0.5, using the distances of the first iteration. Finally, we pick the mean \(\mu _{W}^{fi}\) of the remaining datapoints in fWi as the representative point of the cluster. The rationale here is to clean the data from outliers in order to catch datapoints that refer to the normal operation of each member fi in W.

Algorithm of 2T along with standard deviation (Yang et al., 2019)

Regarding the second and third operations, to score each fi in W, we calculate the average distance of \(\mu _{W}^{fi}\) with the representative of all other members in W. Finally, to report anomalies we use the 2T standard deviation threshold algorithm presented in Yang et al. (2019). Having a set of anomaly scores, the 2T algorithm calculates an initial threshold based on all data, and after that, it recalculates the threshold using the scores which are smaller than the previous threshold. This process can be performed multiple times, but as the authors suggest, we perform the Algorithm 1 with two iterations.

3.5 TranAD for fleet PdM

After the big success of the transformers and the attention mechanism in Natural Language Processing (NLP) (Vaswani et al., 2017), transformers have been used in several machine learning tasks, such as forecasting and anomaly detection. TranAD (Deep Transformer Networks for Anomaly Detection in Multivariate Time Series Data) is a transformer model proposed in Tuli et al. (2022) and seems to outperform the previous state-of-the-art deep learning solutions for anomaly detection. A strong advantage of TranAD is that it can use a small training set without result quality degradation. We use this model and try to fit it into fleet predictive maintenance tasks.

The main procedure of applying TranAD for fleet PdM is shown in Algorithm 2. We leverage the capability of easy training of TranAD with few epochs (5) and a relatively small amount of training dataset (e.g. last 15 days of operation). Firstly, we apply a time-shifted window W as in previous methods. For each fi in W, we compute a peer group Pr similarly to the Grand method, which contains datapoints from all other members fj except fi; Pr thus captures the appropriate context. The difference from the Grand method is that in Grand, the Pr contents are computed for each \({f^{t}_{i}}\), while in TranAD, they are computed in every slide. We use the first half of Pr (in the time domain) to train a TranAD model to reconstruct its input, and the second half to calculate an appropriate threshold. The latter part in training is a novel extensions of ours. Optionally, we can perform dimensionality reduction on Pr, e.g., through PCA. We calculate the reconstruction error of a sample by calculating the L1 distance between the sample and the output of the model. So, for all datapoints of the second half of Pr, we compute the reconstruction error, which is used as an anomaly score. Then, the 2T algorithm (see Algorithm 1) is used to calculate an appropriate threshold. Finally, we use the model to reconstruct the datapoints of fWi and report an anomaly for each datapoint \({f_{i}^{t}}\) that has a reconstruction error higher than the threshold. The rationale is to train a TranAD model in set of data that represents the normality in the current specific context. Furthermore, the homogeneous set is also used to calculate the threshold using normal data.

However, we have observed that often, the procedure above does not properly capture a homogenous normality context. To ameliorate this problem, we proceed to the pre-processing described next.

3.5.1 Clustering before training TranAD

To further improve the results of TranAD, we suggest a human-in-the-loop solution. Specifically, we have observed that TranAD could not identify anomalies easily when the training set comprises multiple well isolated clusters, e.g., there is a set of different vehicle types, each one with distinct behavior. To provide experimental evidence regarding this observation, we build three simple synthetic datasets of two-dimensional data following the normal distribution. The first one contains two clusters, the second contains three clusters and the third contains five clusters. Each dataset is split into training and testing sets. The training set contains only normal data and the test set contains data from the same distributions of training set plus some outliers points from different distributions. In Fig. 3, we plot these artificial datasets. Then, for these synthetic datasets, we compare the results when training using all clusters against training a different TranAD model for each group, after we apply clustering in data points. For the latter case, we apply clustering only in the training data. To detect outliers in the testing set, for each data point, we detect the closest cluster and use the corresponding TranAD model to calculate the reconstruction error. For the first case, where a common TranAD model is used, the reconstruction error in the test set for all three datasets is shown in Fig. 4. The computed error of the outliers is on the rightmost part in red separated by a dotted line. As the number of outliers grows, a significant portion of outliers, up to one third, is not detected because their reconstruction error is similar to the error of normal points. On the other hand, in the second case, where we train TranAD models per cluster, the reconstruction error of outliers is well distinguished from normal data, as shown in Fig. 5.

In summary, we suggest running a hierarchical clustering algorithm first to detect the numbers of the clusters in data. As soon as the number of clusters cn is defined, instead of using the whole Pr as described above, we employ clustering in Pr datapoints using cn clusters. Then, we train cn models with the same process as before, with the only difference that each model uses datapoints of the corresponding cluster instead of the whole Pr. Finally, to produce the reconstruction error of fi, we assign each datapoint \({f_{i}^{t}}\) to its closer cluster and use the corresponding model to calculate the reconstruction error. This improves the results of TranAD in complex datasets because TranAD can easier learn to reconstruct the data of a single cluster. Apparently, this adds a time overhead, e.g., at the order of several minutes, which is acceptable because of the low frequency of our data updates (e.g., a couple of times per day).

3.6 Complexity

In this work, data are already aggregated thus we have relatively low frequency of data samples, therefore, we do not emphasize a lot on real-time response issues. However, all techniques have time and space complexity at most O(n2), where n is the number of points in Pr or in W. If TranAD employs hierarchical clustering its time complexity increases to cubic though. TranAD is also at most cubic in the number of dimensions when it employs PCA. Details are omitted due to space constraints.

4 Creating a benchmark dataset from NASA C-Maps

The Turbofan Engine Degradation Simulation Data Set includes run-to-failure simulated data from turbofan jet engines. Engine degradation simulation was carried out using C-MAPSS (Saxena and Goebel, 2008). Four different degradation sets were simulated under different combinations of operational conditions and fault modes. This dataset is widely used to predict the remaining useful life (RUL). The C-Maps dataset provides four sets of turbofan simulations, termed f001,f002,f003 and f004, where each set contains several run-to-failure examples operated under similar conditions. The time instance in these examples are cycles and for each cycle, we have a vector of three settings and twenty-one sensor values. We use the training set to build a benchmarking dataset, which we make available. The strongest point of this procedure is that the dataset is accompanied by ground truth, which is extremely difficult to acquire in practice. The end of life of each turbofan is treated as a fault to be predicted. In our experiments, we use multiple values of prediction horizons (PHs) to evaluate the PdM methods. An alert is considered a true positive if it is inside the prediction horizon, which defines the period before the fault in which the prediction should be made. Alerts should not be raised too early or too late.

4.1 Building an artificial vehicle fleet PdM dataset

We consider each of the four sets as a fleet of turbofan engines because they operate in similar conditions. Knowing that each example starts from a normal condition and starts deviating at some point until its end of life, we can construct a realistic fleet dataset. In real-life fleet cases, there is a sufficient amount of data considered normal and a small proportion of data deviating each time. So, we first need to apply some pre-processing to the original dataset. A description of such pre-processing for each set of similar turbofans is presented below for fully reproducibility reasons. It is important to mention that this pre-processing does not benefit by default any of the methods we test for two reasons. Firstly, our assumption that a proportion (e.g., two thirds) of operating fleet members is running in relatively normal condition is not an overestimation of a real-world behavior in vehicle fleets. E.g., in Rögnvaldsson et al. (2018), it is mentioned that downtime of buses is 11% of their whole lifetime. Secondly, we do not use any knowledge about the starting time of wear in turbofans, which is unknown to us; we use only the information about their end of life.

When constructing the benchmark, we aim to ensure that, at each time point, at least \(\frac {2}{3}\) of the fleet operates in a relatively healthy condition. We name this parameter as Minimum Good Proportion Reference (MGPR). We start the dataset building process by sorting each set of examples (f001,f002,f003,f004) by the end of the life cycle of each turbofan operation. Each turbofan is assigned an id equal to its position in the sorted list. We refer to a run-to-failure example of a turbofan as ti, where ti is a time series and i is the id of the example. Initially, each ti has start of life cycle ti.start set equal to 1 and ti.end to the cycle at the end of its life. We aim to shift ti in the time domain to simulate the behavior of a fleet, where some members fail and others start operating over time. At the same time, we want to ensure that MGPR is fulfilled for as many examples as possible. To attain this, we group examples into bins that we may shift in the time axis later. Using predefined step values, we split the set into step bins. Using an iterative process, we assign each ti into a bin. The jth bin contains all ti examples for which i mod step = j. For example, in the f001 set, which has cardinality of 100, setting the step equal to 4 yields 4 bins with 25 examples each. Due to the manner bins are populated, the end of life of each ti in each bin is as far away as possible from the end of life of the remaining examples in the same bin. Next, we provide some definitions, which help us define how to shift the bins and construct the Pr sets in a meaningful manner.

Definition 1 (good example)

ti is considered a good example for tj, if ti starts before the end of life of tj and ti’s end of life is at least mindist time units (cycles) after the tj’s end of life, i.e., ti.end − tj.end > mindist (mindist is a constant value set to 60).

Definition 2 (bad example)

ti is considered a bad example for tj, if it starts before the end of life of tj and ti’s end of life is not at least mindist time units apart from tj’s end of life (|tj.end − ti.end| < mindist ).

Definition 3 (Good Proportion Reference (GPR))

The GPR of each ti is calculated using the formula |good|/(|good| + |bad|).

Definition 4 (Example Validity)

The MGPR is the minimum GPR value that an instance ti must have to be considered valid for analysis. This is set to \(\frac {2}{3}\) in our case.

Starting from the first constructed bin Bbin, we use the instances of the other bins Rbins = ti∉Bbin to improve the GPR of each ti ∈ Bbin through the Algorithm 3. Initially, all instances ti have their start of life values set to cycle 1. To improve an instance ti in Bbin, we shift the instances from Rbins in order the end of life of ti ∈ Bbin to overlap with a healthy state of ti ∈ Rbins. In other words, we shift instances from Rbins to ensure that as many as possible instances from Bbin meet the MGPR threshold.

After the application of Algorithm 3, we end up with a set of run-to-failure examples shifted in time. We will test our techniques for all valid examples, i.e., examples with GPR > MGPR. Finally, to use the constructed dataset, we map the cycles in artificial timestamps. One cycle is translated to one day of operation. As a starting point, we select the January 1, 2000 (dates here are used just as a label and do not impact on the results). In this way, we place each ti in the time domain simulating a real fleet usage in time, where equipment starts and stops operating uniformly in time. By analyzing only the samples with a GPR above \(\frac {2}{3}\), we assume that at least two-thirds of the equipment is in a normal state as explained already. Having potentially abnormal points mixed with normal ones captures real-world scenarios more realistically. In Figs. 6 and 7, we plot a line for each ti in the final set, starting from ti.start and ending to ti.end to show how the run-to-failures examples are shifted in time to produce the final fleet dataset.

4.2 Evaluation based on maintenance costs and benefits

In order to evaluate our models, we use a cost-based approach, where we assign a cost for each prediction of the model. The idea is to transfer the results of the model to real-life metrics. First, we count one false positive (FP) whenever an alarm is raised, i.e., an anomaly is detected, before the PH or very close to the end of life of a component; in our examples, this buffer period is constant to 2 days. We count one true positive (TP) if at least one alarm is raised within the PH. We assume that an alarm in PH could lead to the prevention of failure (i.e. the expert could verify that something is wrong). It holds that false negatives FN= 1-TP. We assign the cost of FP as 1, because an aimless check was made and/or we stop the component operation while it has at least PH of remaining life. We assign the cost of FN as a multiple α of the cost of FP, where α ranges from 5 to 100, because a failure may be catastrophic depending on the case. Finally, we assign the cost of TP as 1, which is the cost of maintenance of a deviating component. Lastly, for true negatives TN there is no cost. To evaluate our methods, we calculate the FP, TP, FN, TN for each component in the fleet, and we sum them, and then we derive the cost according to the assignments mentioned above. The parameter configurations are provided in the repository. The research question we aim to answer is: do our proposals yield lower maintenance costs than existing techniques?

4.2.1 F001 fleet set

In the first dataset, TranAD performs better than all other methods, achieving the lowest cost. We can notice from Fig. 8 that the cost for TranAD is constant for all different FN costs, which is due to zero False Negatives. In other words, TranAD can detect all failures in time regardless of the different lengths of the PH. It is important to mention that TranAD manages to achieve these costs using a single parameterization, whereas for the other techniques, several configurations are tested and we present the best performing ones; these details are provided along with the datasets in the publicly available code repository. As far as the other methods are concerned, Grand, DOD and 2-stage have similar costs. The second lowest cost is achieved by the 2-stage method. The results about which method performs better are the same for all different PHs, where Clusterjoint has a similar cost with other methods for low FN cost but has a much higher total cost for a high FN cost; this is due to the number of false negatives that it reports.

4.2.2 F003 fleet set

We expect to observe similar results to the f001 set, because we know that f001 and f003 refer to the same kind of fault (Saxena and Goebel, 2008). Indeed, TranAD reports have the lowest cost in general than other methods as shown in Fig. 9. Again, the second smallest cost is produced by the 2-stage method, which is better in TrandAD for high FN cost and larger PH. In this setting, distance-based outlier detection (DOD) also performs particularly efficiently.

4.2.3 F002 and F004 fleet sets

The f002 and f004 datasets contain about 250 and 225 components, respectively. Here, the initial approach of TranAD was unable to perform and hardly made reports. We have a situation where the training set consists of data from multiple well-separated clusters while previously there was a single cluster (see Fig. 10). To handle this, we use the pre-processing clustering discussed in Section 3.5. More specifically, we plot the Pr data points for each window in our TranAD process. To do so, we perform a PCA with two components in Pr. The plotted data depict six separated clusters for f002 and f004 in Fig. 11. For f001 and f003, the corresponding plots depict a single cluster (see Fig. 10). We also apply hierarchical clustering for each time window. In Fig. 12 and 13, the dendrogram of hierarchical clustering for a specific window is shown. We can observe that, for f001, the data are considered as part of one cluster for distance near 250, while for f004, for the same distance, we identify six different clusters.

After applying the above process for TranAD, we can observe the minimum cost produced by each method for f002 in Fig. 14 and for f004 in Fig. 15. For f002, we can observe that TranAD is better than all other methods except for FN cost equal to 100, where the 2-stage method performs equally well or better. In the f004 dataset, 2-stage results in lower cost from TranAD as the cost of FN increases. Table 2 presents the relative performance of the two best-performing techniques, namely TranAD and 2-stage. TranAD is superior apart from case where there are multiple clusters in the fleet dataset and FN cost is high.

5 Bus fleet case-study

This case study concerns a fleet of 19 buses used in a town on the west coast of Sweden. All information about the data presented here is taken from the work in Rögnvaldsson et al. (2018). The buses are on service contracts offered by the original equipment manufacturer and repairs are made either in a manufacturer’s garage or on the road with additional cost. The manufacturer’s workshop is 1 hour far away from the bus operator. Hence a sub-contractor repair shop is sometimes used for maintenance to save time. A critical KPI (key performance indicator) is the time a bus is not available due to maintenance or repair. To avoid disruptions in service due to unavailable vehicles, the typical solution is to use spare buses. The target is the time a bus is unavailable to be up to 5% of the total operating time. If this is achieved, the operator can use just a single spare bus for every 20 operating buses. The data collected refer to a period of 4 years (2011-2015), during which it was observed that the buses spent on average 11% of the operating time in repairs and maintenance. The buses visited about 11 times a year the workshop, a number which is twice the number of scheduled visits. The time duration a bus was at the workshop is, on average, 4 days, and much of this time is due to waiting for other buses to be repaired. Thus the capability to predict failures in time is a powerful tool for enhanced maintenance planning of the buses and damage replacement, avoiding many of the above delays.

In this context, the data we have access to are related to the air pressure signal (Wet Tank Air Pressure). This signal is the only one associated with the components of the air compressor, which was very problematic according to the bus service logs. The datasetFootnote 5 contains the aggregated results in the form of histograms, and more specifically, for each bus, there is at most a single sample per day, which is normalized and contains 50 histogram bins. Each such bin is a different dimension, i.e., we need to process a 50-dimensional dataset. The faults concerning the air compressor are categorized as follows: (1) faults in the compressor that led to the need for the replacement of the component during the operation of the bus, (2) damage to the compressor leading to its replacement in the workshop, and (3) other damages related to the suspensions and the brakes. The most serious failures are these of the first two categories. The information about the dates on which these faults occurred is obtained from Figure 7 of the work in Rögnvaldsson et al. (2018)Footnote 6. In the evaluation, we consider 12 out of 19 buses, for which we have failures, where towing of the bus was needed; more specifically, we considered buses with numbers 369, 370, 371, 372, 373, 376, 377, 378, 380, 381, 382 and 383.

5.1 Bus experimental setting

We separate outliers into three classes: (i) class A outliers, which concern the most serious failures, because of the need for towing the bus; (ii) class B outliers, which refer to compressor replacement in the workshop; and (iii) class C outliers for the less serious failures, which are repairs related to the air suspension and brakes. As in Fan et al. (2015) and in the previous section, we will use a PH (prediction horizon) tolerance period for the prediction of faults. More specifically, a reported outlier is counted as a correct warning about a forthcoming failure, when this failure takes place in a PH period before the failure. For these experiments, the PH is set to 15, 23, and 30 days, as in the benchmarking experiments. An important notice is that, after a replacement of the compressor component in the fleet of buses takes place, it is expected this component to exhibit deviations in its behavior just because the component is a new one. To account for this fact and focus solely on PdM rather than outlier detection in general, such false detection incidents that are reported within PH time after the replacement of the component are ignored. We assign a different cost for each class of failures. The class A outliers are the most expensive ones, so we assign ten times higher costs than the class C outliers. Class B outliers are cheaper than the ones in class A, due to no need for towing but they are still expensive. So we assign a cost five times higher than class C. The class C is considered as less expensive repairs, so we assign them a cost equal to 2,3,4 or 5. False alarms are assigned a cost equal to 1.

5.2 Results

We use several hyper-parameters for each method and keep the lowest cost for each different PH and maintenance score as previously (see Fig. 16). The Grand and Clusterjoint methods could not produce lower cost than a dummy method that never raises alarms. DOD achieves good results and can be used to decrease the cost of maintenance in such a scenario. As in the previous set of experiments, 2-stage and TranAD achieve the lowest cost. Based on the uncertainty of the ground truth, we can assume that both of these methods achieve the best results. 2-stage managed to successfully prune Grand method’s false positives and keep most of the correct alarms of Grand. Also, it managed to raise an alarm when the deviation level near a class A outlier was near zero (bus 378 July of 2013). TranAD’s efficient behavior is largely attributed to the dimensionality reduction through using PCA and keeping 10 features (see Algorithm 2); otherwise, its cost would be much higher.

6 Conclusions

We present a predictive maintenance (PdM) context-aware solution for vehicle fleet management using unsupervised techniques to deal with the dynamic environment, in which fleet members operate. To this end, we use a reference group to process the fleet’s members, which mostly contains vehicle data in the same context without faults since we assume that only a small portion of fleet members experience failures at each specific time point. We examine a novel hybrid proximity-based solution. Moreover, we show how the state-of-the-art deep learning anomaly detection method called TranAD can be used to handle fleet streaming data. Rendering the deep learning techniques context-aware is not trivial and, in our case, we resort to hierarchical clustering, while dimensionality reduction is also important. The evaluation targets lowering maintenance cost to better reflect real-world situations. Last but not least, we develop and make available a benchmark dataset for evaluating PdM solutions in vehicle fleets; this dataset is based on an existing PdM dataset for turbofan engines. Our two novel suggestions, i.e., the proximity-based 2-stage technique and the adaptation of TranAD to vehicle fleets, are shown to outperform existing solutions in our experiments. In the future, we intend to investigate automated fine-tuning solutions that are more sophisticated than the 2T technique already employed and to judiciously combine the results of multiple predictors to form an advanced ensemble solution.

Notes

In this work, we use the terms outlier and anomaly, interchangeably, as is commonly done in the literature, e.g. in Boukerche et al. (2020).

The 2-stage approach is expected to function differently than MCOD, because it looks for neighbors only in the past samples, while MCOD continuously assesses all the window contents.

Regarding the exact time period during which the failures occurred, there may be a deviation of a few days, but this deviation does not affect the overall results due to the time granularity of the case study.

References

Aggarwal, C.C. (2017). An introduction to outlier analysis. In: Outlier Analysis. Springer. https://doi.org/10.1007/978-3-319-47578-3_1

Audibert, J., Michiardi, P., Guyard, F., Marti, S., & Zuluaga, M. A. (2020). Usad: Unsupervised anomaly detection on multivariate time series. In Proc. of the 26th ACM SIGKDD Int. Conf. on Knowledge Discovery & Data Mining, pp. 3395–3404.

Baptista, M., Sankararaman, S., de Medeiros, I. P., Nascimento Jr, C., Prendinger, H., & Henriques, E. M. (2018). Forecasting fault events for predictive maintenance using data-driven techniques and arma modeling. Computers & Industrial Engineering, 115, 41–53.

Boukerche, A., Zheng, L., & Alfandi, O. (2020). Outlier detection: methods, models, and classification. ACM Computing Survey, 53(3), 55:1–55:37.

Breunig, M.M., Kriegel, H.P., Ng, R.T., & Sander, J. (2000). Lof: Identifying density-based local outliers. SIGMOD Rec., 29(2), 93–104. https://doi.org/10.1145/335191.335388.

Byttner, S., Rögnvaldsson, T., & Svensson, M. (2011). Consensus self-organized models for fault detection (cosmo). Engineering Applications of Artificial Intelligence, 24(5), 833–839. https://doi.org/10.1016/j.engappai.2011.03.002. http://www.sciencedirect.com/science/article/pii/S0952197611000467.

Cao, L., Wang, J., & Rundensteiner, E. A. (2016). Sharing-aware outlier analytics over high-volume data streams. In ICDM, pp. 527–540. ACM.

Cao, L., Yan, Y., Kuhlman, C., Wang, Q., Rundensteiner, E. A., & Eltabakh, M. Y. (2017). Multi-tactic distance-based outlier detection. In ICDE, pp. 959–970.

Cao, L., Yang, D., Wang, Q., Yu, Y., Wang, J., & Rundensteiner, E. A. (2014). Scalable distance-based outlier detection over high-volume data streams. In ICDE, pp. 76–87.

Carvalho, T. P., Soares, F. A. A. M. N., Vita, R., da Piedade Francisco, R., Basto, J. P., & Alcalá, S. G. S. (2019). A systematic literature review of machine learning methods applied to predictive maintenance. Computers and Industrial Engineering, 137.

Chen, C., Liu, Y., Sun, X., Di Cairano-Gilfedder, C., & Titmus, S. (2020). Automobile maintenance modelling using gcforest. In 2020 IEEE 16Th Int. Conf. on Automation Science and Engineering (CASE), pp. 600–605. IEEE.

Dai, L., & Bouguelia, M.R. (2020). Testing exchangeability with martingale for change-point detection.

Derse, C., el Baghdadi, M., Hegazy, O., Sensoz, U., Gezer, H. N., & Nil, M. (2021). An anomaly detection study on automotive sensor data time series for vehicle applications. In 2021 Sixteenth Int. Conf. on Ecological Vehicles and Renewable Energies (EVER), pp. 1–5. IEEE.

Diez, A., Khoa, N. L. D., Alamdari, M. M., Wang, Y., Chen, F., & Runcie, P. (2016). A clustering approach for structural health monitoring on bridges. Journal of Civil Structural Health Monitoring, 6(3), 429–445.

Diez-Olivan, A., Pagan, J. A., Khoa, N. L. D., Sanz, R., & Sierra, B. (2018). Kernel-based support vector machines for automated health status assessment in monitoring sensor data. The Int. Journal of Advanced Manufacturing Technology, 95(1), 327–340.

Diez-Olivan, A., Pagan, J. A., Sanz, R., & Sierra, B. (2017). Data-driven prognostics using a combination of constrained k-means clustering, fuzzy modeling and lof-based score. Neurocomputing, 241, 97–107.

Fan, Y., Nowaczyk, S., & Rögnvaldsson, T. (2015). Evaluation of self-organized approach for predicting compressor faults in a city bus fleet. Procedia Computer Science, 53, 447–456. INNS Conf. on Big Data 2015 Program San Francisco, CA. USA 8–10 August 2015. https://doi.org/10.1016/j.procs.2015.07.322.

Filios, G., Katsidimas, I., Nikoletseas, S., Panagiotou, S., & Raptis, T. P. (2020). An agnostic data-driven approach to predict stoppages of industrial packing machine in near. In 2020 16Th Int. Conf. on Distributed Computing in Sensor Systems (DCOSS), pp. 236–243. https://doi.org/10.1109/DCOSS49796.2020.00046.

Fink, O., Zio, E., & Weidmann, U. (2013). Extreme learning machines for predicting operation disruption events in railway systems.

Gardner, J., Mroueh, J., Jenuwine, N., Weaverdyck, N., Krassenstein, S., Farahi, A., & Koutra, D. (2020). Driving with data in the motor city: Mining and modeling vehicle fleet maintenance data. arXiv:2002.10010.

Georgiadis, D., Kontaki, M., Gounaris, A., Papadopoulos, A. N., Tsichlas, K., & Manolopoulos, Y. (2013). Continuous outlier detection in data streams: an extensible framework and state-of-the-art algorithms. In SIGMOD, pp. 1061–1064.

Hundman, K., Constantinou, V., Laporte, C., Colwell, I., & Soderstrom, T. (2018). Detecting spacecraft anomalies using lstms and nonparametric dynamic thresholding. In Proceeding of the 24th ACM SIGKDD Int. Conf. on knowledge discovery & data mining, pp. 387–395.

Knorr, E. M., Ng, R. T., & Tucakov, V. (2000). Distance-based outliers: Algorithms and applications. The VLDB Journal 8(3-4).

Kontaki, M., Gounaris, A., Papadopoulos, A. N., Tsichlas, K., & Manolopoulos, Y. (2011). Continuous monitoring of distance-based outliers over data streams. In 2011 IEEE 27Th Int. Conf. on Data Engineering, pp. 135–146. https://doi.org/10.1109/ICDE.2011.5767923.

Kontaki, M., Gounaris, A., Papadopoulos, A. N., Tsichlas, K., & Manolopoulos, Y. (2016). Efficient and flexible algorithms for monitoring distance-based outliers over data streams. Information systems, 55, 37–53.

Korvesis, P., Besseau, S., & Vazirgiannis, M. (2018). Predictive maintenance in aviation: Failure prediction from post-flight reports. In 2018 IEEE 34Th Int. Conf. on Data Engineering (ICDE), pp. 1414–1422. IEEE.

Kovalev, D., Shanin, I., Stupnikov, S., & Zakharov, V. (2018). Data mining methods and techniques for fault detection and predictive maintenance in housing and utility infrastructure. In 2018 Int. Conf. on Engineering Technologies and Computer Science (ent), pp. 47–52.

Li, Y., Liu, C., Hua, J., Gao, J., & Maropoulos, P. (2019). A novel method for accurately monitoring and predicting tool wear under varying cutting conditions based on meta-learning. CIRP Annals, 68(1), 487–490.

Manco, G., Ritacco, E., Rullo, P., Gallucci, L., Astill, W., Kimber, D., & Antonelli, M. (2017). Fault detection and explanation through big data analysis on sensor streams, (Vol. 87. https://doi.org/10.1016/j.eswa.2017.05.079. https://www.sciencedirect.com/science/article/pii/S0957417417304074.

Massaro, A., Selicato, S., & Galiano, A. (2020). Predictive maintenance of bus fleet by intelligent smart electronic board implementing artificial intelligence. IoT, 1(2), 180–197.

Meng, H., Zhang, Y., Li, Y., & Zhao, H. (2020). Spacecraft anomaly detection via transformer reconstruction error. In Z. Jing (Ed.) Proc. of the Int. Conf. on Aerospace System Science and Engineering 2019, pp. 351–362.

Naskos, A., Gounaris, A., Metaxa, I., & Kȯchling, D. (2019). Detecting anomalous behavior towards predictive maintenance. In Advanced information systems engineering workshops - CAiSE 2019 int. workshop, 349, pp. 73–82.

Naskos, A., Kougka, G., Toliopoulos, T., Gounaris, A., Vamvalis, C., & Caljouw, D. (2019). Event-based predictive maintenance on top of sensor data in a real industry 4.0 case study. In Machine Learning and Knowledge Discovery in Databases - int. Workshops of ECML PKDD proceedings, Part II, pp. 345–356.

Rögnvaldsson, T., Nowaczyk, S., Byttner, S., Prytz, R., & Svensson, M. (2018). Self-monitoring for maintenance of vehicle fleets. Data Mining and Knowledge Discovery, 32(2), 344–384. https://doi.org/10.1007/s10618-017-0538-6.

Saxena, A., & Goebel, K. (2008). Turbofan engine degradation simulation data set. NASA Ames Prognostics Data Repository, 1551–3203.

Scully, P. (2019). Predictive maintenance report 2019-2024 iot analytics. Iot-analytics.com.

Shafi, U., Safi, A., Shahid, A. R., Ziauddin, S., & Saleem, M. Q. (2018). Vehicle remote health monitoring and prognostic maintenance system. Journal of Advanced Transportation, 2018.

Sipos, R., Fradkin, D., Moerchen, F., & Wang, Z. (2014). Log-based predictive maintenance. In Proc. of the 20th ACM SIGKDD Int. Conf. on Knowledge Discovery and Data Mining, pp. 1867–1876. ACM.

Theissler, A., Pérez-Velázquez, J., Kettelgerdes, M., & Elger, G. (2021). Predictive maintenance enabled by machine learning: Use cases and challenges in the automotive industry. Reliability Engineering & System Safety, 215, 107864.

Toliopoulos, T., Bellas, C., Gounaris, A., & Papadopoulos, A. (2020). PROUD: Parallel outlier detection for streams. In SIGMOD, pp. 2717–2720.

Toliopoulos, T., Gounaris, A., Tsichlas, K., Papadopoulos, A., & Sampaio, S. (2020). Continuous outlier mining of streaming data in flink. Information Systems, 93, 101569.

Tran, L., Fan, L., & Shahabi, C. (2016). Distance-based outlier detection in data streams. Proceedings of the VLDB Endowment, 9(12), 1089–1100.

Tran, L., Mun, M. Y., & Shahabi, C. (2020). Real-time distance-based outlier detection in data streams. 14, (2), 141–153.

Tuli, S., Casale, G., & Jennings, N. R. (2022). Tranad: Deep transformer networks for anomaly detection in multivariate time series data. arXiv:2201.07284.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, Ł., & Polosukhin, I. (2017). In Attention is all you need. Advances in Neural Information Processing Systems 30.

Wang, J., Li, C., Han, S., Sarkar, S., & Zhou, X. (2017). Predictive maintenance based on event-log analysis: a case study. IBM Journal of Research and Development, 61(1), 11–121.

Wang, J., Liu, C., Zhu, M., Guo, P., & Hu, Y. (2018). Sensor data based system-level anomaly prediction for smart manufacturing. In 2018 IEEE Int. Congress on Big Data (Bigdata Congress), pp. 158–165. IEEE.

Yang, J., Rahardja, S., & Fränti, P. (2019). Outlier detection: how to threshold outlier scores?. In Proc. of the Int. Conf. on Artificial Intelligence, Information Processing and Cloud Computing, pp. 1–6.

Yeh, C. M., Zhu, Y., Ulanova, L., Begum, N., Ding, Y., Dau, H. A., Silva, D. F., Mueen, A., & Keogh, E. J. (2016). Matrix profile i: all pairs similarity joins for time series: a unifying view that includes motifs, discords and shapelets. In IEEE ICDM, pp. 1317–1322.

Yoon, S., Lee, J., & Lee, B. S. (2019). NETS: Extremely fast outlier detection from a data stream via set-based processing. PVLDB, 12(11), 1303–1315.

Zhang, C., Song, D., Chen, Y., Feng, X., Lumezanu, C., Cheng, W., Ni, J., Zong, B., Chen, H., & Chawla, N. V. (2019). A deep neural network for unsupervised anomaly detection and diagnosis in multivariate time series data. In Proc. of the AAAI Conf. on Artificial Intelligence, 33, 1409–1416.

Zhao, G., Yu, Y., Song, P., Zhao, G., & Ji, Z. (2018). A parameter space framework for online outlier detection over high-volume data streams. IEEE Access, 6, 38124–38136.

Acknowledgements

Not Applicable

Funding

Open access funding provided by HEAL-Link Greece

Author information

Authors and Affiliations

Contributions

Both authors actively contributed to the research and the writing. The implementation was conducted by the 1st author

Corresponding authors

Ethics declarations

Ethics approval and consent to participate

Not Applicable

Consent for Publication

Not Applicable

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Giannoulidis, A., Gounaris, A. A context-aware unsupervised predictive maintenance solution for fleet management. J Intell Inf Syst 60, 521–547 (2023). https://doi.org/10.1007/s10844-022-00744-2

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10844-022-00744-2