Abstract

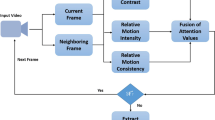

This work develops a computational model of visual attention for the automatic summarization of digital videos from television archives. Although television is one of the most fascinating media phenomena ever created, effective solutions for content-based information retrieval from recordings of television programs are still lacking. This gap reflects the high complexity of content-based video retrieval, which poses several challenges, among them the common need for video summaries that facilitate indexing, browsing and retrieval operations. To meet this need, we propose a new computational visual attention model, inspired by the human visual system and based on computer vision methods (face detection, motion estimation and saliency map computation), to estimate static video abstracts, that is, collections of salient images or key frames extracted from the original videos. Experimental results with videos from the Open Video Project show that our approach is an effective solution to the problem of automatic video summarization, producing summaries comparable in quality to the ground-truth summaries created manually by a group of 50 users.
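As a rough illustration of how per-frame cues might be turned into a static video abstract, the sketch below combines three hypothetical per-frame scores (face, motion and saliency) into a single attention curve and picks its local maxima as key frames. The equal-weight average, the min-max normalization, the peak-picking rule and all function names are assumptions made for this example; the abstract does not specify how the model fuses its cues or selects frames.

```python
from typing import List, Sequence


def normalize(values: Sequence[float]) -> List[float]:
    """Min-max scale a score sequence to [0, 1]; a constant sequence maps to zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def attention_curve(face: Sequence[float],
                    motion: Sequence[float],
                    saliency: Sequence[float]) -> List[float]:
    """Fuse three per-frame cues with an equal-weight average (an assumption)."""
    if not (len(face) == len(motion) == len(saliency)):
        raise ValueError("all cue sequences must have one score per frame")
    cues = [normalize(face), normalize(motion), normalize(saliency)]
    return [sum(frame_scores) / len(cues) for frame_scores in zip(*cues)]


def select_key_frames(curve: Sequence[float], min_gap: int = 2) -> List[int]:
    """Return indices of local maxima, strongest first, at least min_gap frames apart."""
    last = len(curve) - 1
    peaks = [i for i in range(len(curve))
             if (i == 0 or curve[i] > curve[i - 1])
             and (i == last or curve[i] >= curve[i + 1])]
    chosen: List[int] = []
    for i in sorted(peaks, key=lambda idx: curve[idx], reverse=True):
        if all(abs(i - j) >= min_gap for j in chosen):
            chosen.append(i)
    return sorted(chosen)


# Hypothetical scores for an 8-frame clip (made-up values for illustration).
face = [0, 0, 1, 0, 0, 0, 0, 0]
motion = [0.0, 0.2, 0.9, 0.3, 0.1, 0.4, 0.8, 0.2]
saliency = [0.1, 0.3, 0.7, 0.4, 0.2, 0.5, 0.9, 0.3]

curve = attention_curve(face, motion, saliency)
key_frames = select_key_frames(curve)
print(key_frames)
```

In practice the three score sequences would come from detectors run on each frame, and the spacing parameter `min_gap` would be tuned so that near-duplicate frames from the same shot are not all selected.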

Acknowledgments

The authors gratefully acknowledge the financial support of CNPq under Procs. 468042/2014-8 and 313163/2014-6; FAPEMIG under Procs. APQ-01180-10, APQ-02269-11 and PPM-00542-15; CEFET-MG under Procs. PROPESQ-088/12, PROPESQ-076/09 and PROPESQ-10314/14; and CAPES.

Cite this article

Jacob, H., Pádua, F.L.C., Lacerda, A. et al. A video summarization approach based on the emulation of bottom-up mechanisms of visual attention. J Intell Inf Syst 49, 193–211 (2017). https://doi.org/10.1007/s10844-016-0441-4

DOI: https://doi.org/10.1007/s10844-016-0441-4