Abstract

Cubical type theory is an extension of Martin-Löf type theory recently proposed by Cohen, Coquand, Mörtberg, and the author which allows for direct manipulation of n-dimensional cubes and where Voevodsky’s Univalence Axiom is provable. In this paper we prove canonicity for cubical type theory: any natural number in a context built from only name variables is judgmentally equal to a numeral. To achieve this we formulate a typed and deterministic operational semantics and employ a computability argument adapted to a presheaf-like setting.


1 Introduction

Cubical type theory as presented in [7] is a dependent type theory which allows one to directly argue about n-dimensional cubes, and in which function extensionality and Voevodsky’s Univalence Axiom [15] are provable. Cubical type theory is inspired by a constructive model of dependent type theory in cubical sets [7] and a previous variation thereof [6, 10]. One of its important ingredients is that expressions can depend on names to be thought of as ranging over a formal unit interval \(\mathbb {I}\).

Even though the consistency of the calculus already follows from its model in cubical sets, desired—and expected—properties like normalization and decidability of type checking are not yet established. This note presents a first step in this direction by proving canonicity for natural numbers in the following form: given a context I of the form \(i_1 : \mathbb {I}, \dots , i_k : \mathbb {I}\), \(k \ge 0\), and a derivation of \(I \vdash u : \mathsf {N}\), there is a unique \(n \in \mathbb {N}\) with \(I \vdash u = {{\mathrm{\mathsf {S}}}}^n 0 : \mathsf {N}\). This n can moreover be effectively calculated. Canonicity in this form also gives an alternative proof of the consistency of cubical type theory (see Corollary 2).

The main idea to prove canonicity is as follows. First, we devise an operational semantics given by a typed and deterministic weak-head reduction that is included in the judgmental equality of cubical type theory. This is given for general contexts, although later on we will only use it on terms whose only free variables are name variables, i.e., variables of type \(\mathbb {I}\). One result we obtain is that our reduction relation is “complete” in the sense that any term in a name context whose type is the natural numbers can be reduced to one in weak-head normal form (so to zero or a successor). Second, we follow Tait’s computability method [12, 13] and devise computability predicates on typed expressions in name contexts and corresponding computability relations (to interpret judgmental equality). These computability predicates are indexed by the list of free name variables of the involved expressions and should be such that substitution induces a cubical set structure on them. This poses a major difficulty, given that the reduction relation is in general not closed under name substitutions. A solution is to require for computability that reduction behave “coherently” with substitution: put simply, reducing an expression and then substituting should be related, by the computability relation, to first substituting and then reducing. A similar condition appeared independently in the Computational Higher Type Theory of Angiuli et al. [4, 5] and Angiuli and Harper [3], who work in an untyped setting; they achieve similar results but for a theory not encompassing the Univalence Axiom.
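As an illustration of the first step, the following is a minimal Python sketch (our own assumption; the paper contains no code) of a deterministic weak-head step function for a tiny fragment with 0, S, λ, application and natrec (main premise first), followed by reading off n from a term that reduces to \(\mathsf {S}^n 0\). Unlike the paper's relation it is untyped and ignores name variables and all cubical constructs; every class and function name is hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Union

# Hypothetical untyped syntax for a small natural-number fragment
# (no types, names, systems, Glue, or compositions).
@dataclass(frozen=True)
class Zero:
    pass

@dataclass(frozen=True)
class Succ:
    arg: "Term"

@dataclass(frozen=True)
class Var:
    name: str

@dataclass(frozen=True)
class Lam:
    var: str
    body: "Term"

@dataclass(frozen=True)
class App:
    fun: "Term"
    arg: "Term"

@dataclass(frozen=True)
class NatRec:
    main: "Term"  # main premise first: natrec t z s
    zero: "Term"
    succ: "Term"

Term = Union[Zero, Succ, Var, Lam, App, NatRec]

def subst(t: Term, x: str, v: Term) -> Term:
    """Replace x by v in t; v is assumed closed, so no capture can occur."""
    if isinstance(t, Var):
        return v if t.name == x else t
    if isinstance(t, Zero):
        return t
    if isinstance(t, Succ):
        return Succ(subst(t.arg, x, v))
    if isinstance(t, Lam):
        return t if t.var == x else Lam(t.var, subst(t.body, x, v))
    if isinstance(t, App):
        return App(subst(t.fun, x, v), subst(t.arg, x, v))
    return NatRec(subst(t.main, x, v), subst(t.zero, x, v), subst(t.succ, x, v))

def step(t: Term) -> Optional[Term]:
    """One deterministic weak-head step; None if t is introduced or stuck."""
    if isinstance(t, App):
        if isinstance(t.fun, Lam):
            return subst(t.fun.body, t.fun.var, t.arg)  # beta reduction
        f = step(t.fun)
        return None if f is None else App(f, t.arg)
    if isinstance(t, NatRec):
        if isinstance(t.main, Zero):
            return t.zero
        if isinstance(t.main, Succ):
            n = t.main.arg
            return App(App(t.succ, n), NatRec(n, t.zero, t.succ))
        m = step(t.main)  # reduce the main premise
        return None if m is None else NatRec(m, t.zero, t.succ)
    return None  # Zero, Succ, Lam are introduced; a Var is stuck

def whnf(t: Term) -> Term:
    """Iterate step until no further weak-head step applies."""
    while (r := step(t)) is not None:
        t = r
    return t

def to_numeral(t: Term) -> int:
    """Read off n with t = S^n 0, reducing under S one layer at a time."""
    n, t = 0, whnf(t)
    while isinstance(t, Succ):
        n, t = n + 1, whnf(t.arg)
    if not isinstance(t, Zero):
        raise ValueError("weak-head normal form is not a numeral")
    return n

if __name__ == "__main__":
    two = Succ(Succ(Zero()))
    # natrec 2 0 (\n r. S (S r)) doubles 2
    print(to_numeral(NatRec(two, Zero(), Lam("n", Lam("r", Succ(Succ(Var("r"))))))))  # 4
```

Determinism is built in here because `step` is a function; for the paper's typed relation it is a stated property.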

In a way, our technique can be considered as a presheaf extension of the computability argument given in [1, 2]; the latter being an adaption of the former using a typed reduction relation instead. A similar extension of this technique has been used to show the independence of Markov’s principle in type theory [8].

The rest of the paper is organized as follows. In Sect. 2 we introduce the typed reduction relation. Section 3 defines the computability predicates and relations and shows their important properties. In Sect. 4 we show that cubical type theory is sound w.r.t. the computability predicates; this entails canonicity. Section 5 sketches how to adapt the computability argument for the system extended with the circle and propositional truncation, and we deduce an existence property for existentials defined as truncated \(\varSigma \)-types. We conclude by summarizing and listing further work in the last section. We assume that the reader is familiar with cubical type theory as given in [7]. The present paper is part of the author’s PhD thesis [11].

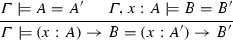

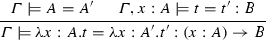

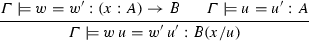

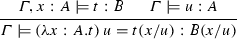

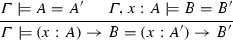

2 Reduction

In this section we give an operational semantics for cubical type theory in the form of a typed and deterministic weak-head reduction. Below we will introduce the relations \(\varGamma \vdash A \succ B\) and \(\varGamma \vdash u \succ v : A\). These relations are deterministic in the following sense: if \(\varGamma \vdash A \succ B\) and \(\varGamma \vdash A \succ C\), then B and C are equal as expressions (i.e., up to \(\alpha \)-equivalence); and, if \(\varGamma \vdash u \succ v : A\) and \(\varGamma \vdash u \succ w : B\), then v and w are equal as expressions. Moreover, these relations entail judgmental equality, i.e., if \(\varGamma \vdash A \succ B\), then \(\varGamma \vdash A = B\), and if \(\varGamma \vdash u \succ v : A\), then \(\varGamma \vdash u = v : A\).

For a context \(\varGamma \vdash \), a \(\varGamma \)-introduced expression is an expression whose outer form is an introduction, so one of the form

where we require \(\varphi \ne 1 \mod \varGamma \) (which from now on we write as \(\varGamma \vdash \varphi \ne 1 : \mathbb {F}\)) for the latter two cases, and in the case of a system (third to last) we require \(\varGamma \vdash \varphi _1 \vee \dots \vee \varphi _n = 1 : \mathbb {F}\) but \(\varGamma \vdash \varphi _k \ne 1 : \mathbb {F}\) for each k. In case \(\varGamma \) only contains object and interval variable declarations (and no restrictions \(\varDelta ,\psi \)) we simply refer to \(\varGamma \)-introduced as introduced. In such a context, \(\varGamma \vdash \varphi = \psi : \mathbb {F}\) iff \(\varphi = \psi \) as elements of the face lattice \(\mathbb {F}\); since \(\mathbb {F}\) satisfies the disjunction property, i.e.,

$$ \begin{aligned} \varphi \vee \psi = 1 \Rightarrow \varphi = 1 \text { or } \psi = 1, \end{aligned}$$

a system as above will never be introduced in such a context without restrictions. We call an expression non-introduced if it is not introduced and abbreviate this as “n.i.” (this is often referred to as neutral or non-canonical). A \(\varGamma \)-introduced expression is normal w.r.t. \(\varGamma \vdash \cdot \succ \cdot \) and \(\varGamma \vdash \cdot \succ \cdot : A\).
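To make the disjunction property concrete, here is a Python sketch under two assumptions that are ours, not the paper's: face formulas are kept in disjunctive normal form over the generators \((i = 0)\) and \((i = 1)\), and substitutions send names only to 0, 1, or names (the interval in [7] has further operations, omitted here). Deciding \(\varphi = 1\) then amounts to looking for an empty conjunction. All names are hypothetical.

```python
from typing import Dict, FrozenSet, Tuple, Union

Atom = Tuple[str, int]              # (i, b) stands for the face (i = b), b in {0, 1}
Clause = FrozenSet[Atom]            # conjunction of atoms; the empty clause is 1
Face = FrozenSet[Clause]            # disjunction of clauses; the empty set is 0
Subst = Dict[str, Union[int, str]]  # names to 0, 1, or names (hypothetical restriction)

TOP: Face = frozenset({frozenset()})

def eq(i: str, b: int) -> Face:
    """The generator (i = b) of the face lattice."""
    return frozenset({frozenset({(i, b)})})

def join(*phis: Face) -> Face:
    """Disjunction: union of the clause sets."""
    return frozenset().union(*phis)

def subst(phi: Face, f: Subst) -> Face:
    out = set()
    for clause in phi:
        new, dead = set(), False
        for i, b in clause:
            r = f.get(i, i)
            if isinstance(r, int):
                dead = dead or r != b  # (i = b) becomes 1 if r == b, else 0
            else:
                new.add((r, b))
        # drop clauses that are 0, i.e. contain both (j = 0) and (j = 1)
        if not dead and not any((j, 1 - c) in new for j, c in new):
            out.add(frozenset(new))
    return frozenset(out)

def is_one(phi: Face) -> bool:
    """phi = 1 iff some clause is empty: no nonempty conjunction of generators
    is 1, and by the disjunction property neither is a join of non-1 faces."""
    return frozenset() in phi
```

For instance, `is_one(join(eq("i", 0), eq("i", 1)))` is false in the context i, although the face becomes 1 under both \(i := 0\) and \(i := 1\). Since `is_one(join(*phis))` holds exactly when some `is_one(phi)` holds, a well-formed system in a restriction-free context always has a branch whose face is 1, so it is never introduced.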

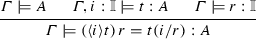

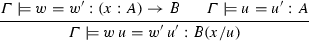

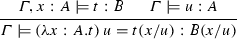

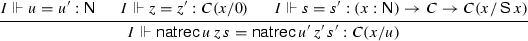

We will now give the definition of the reduction relation starting with the rules concerning basic type theory.

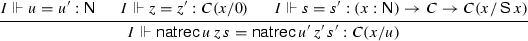

Note that \(\mathsf {natrec}\,t \, z \, s\) is not considered an application (as opposed to the presentation in [7]); also, the order of the arguments is changed so that the main premise comes first.

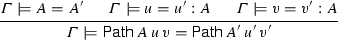

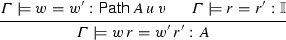

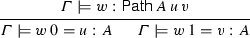

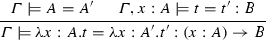

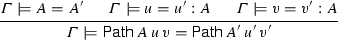

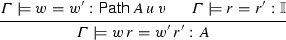

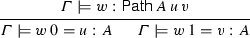

Next, we give the reduction rules for \(\mathsf {Path}\)-types. Note that, as for \(\varPi \)-types, there is no \(\eta \)-reduction or expansion, and there is also no reduction for the end-points of a path.

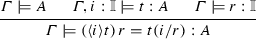

The next rules concern reductions for \(\mathsf {Glue}\).

Note that in [7] the annotation \([\varphi \mapsto w]\) of \(\mathsf {unglue}\) was left implicit. The rules for systems are given by:

The reduction rules for the universe are:

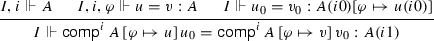

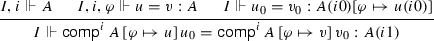

Finally, the reduction rules for compositions are given as follows.

Here \({{\mathrm{\mathsf {pred}}}}\) is the usual predecessor function defined using \(\mathsf {natrec}\) (Footnote 1).
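Assuming "usual" means the standard definition \(\mathsf {pred}\,t = \mathsf {natrec}\,t\,0\,(\lambda n.\lambda r.\,n)\) (with the main premise first, as noted above), the following Python sketch evaluates the recursor semantically on machine integers. It is not the reduction relation itself, and the names are hypothetical.

```python
from typing import Callable, TypeVar

C = TypeVar("C")

def natrec(t: int, z: C, s: Callable[[int, C], C]) -> C:
    """Recursor on Python ints with the main premise first:
    natrec 0 z s = z and natrec (n + 1) z s = s n (natrec n z s)."""
    if t < 0:
        raise ValueError("natural numbers are non-negative")
    acc = z
    for n in range(t):  # compute natrec 1, natrec 2, ..., natrec t bottom-up
        acc = s(n, acc)
    return acc

def pred(t: int) -> int:
    """Predecessor: pred 0 = 0 and pred (n + 1) = n; the recursive result is unused."""
    return natrec(t, 0, lambda n, _rec: n)
```

The step function receives both the predecessor n and the recursive result; `pred` simply returns the former.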

Here \(a_1\) and \(t_1\) are defined as in [7], i.e., given by

where we indicated the intended context on the right.

Here \({{\mathrm{\mathsf {equiv}}}}^i\) is defined as in [7]. This concludes the definition of the reduction relation.

For \(\varGamma \vdash A\) we write \(A {!}_\varGamma \) if there is B such that \(\varGamma \vdash A \succ B\); in this case B is uniquely determined by A and we denote B by \(A {\downarrow }_\varGamma \); if A is normal we set \(A {\downarrow }_\varGamma \) to be A. Similarly for \(\varGamma \vdash u : A\), \(u {!}_\varGamma ^A\) and \(u {\downarrow }^A_\varGamma \). Note that if a term or type has a reduct it is non-introduced. We usually drop the subscripts and sometimes also superscripts since they can be inferred.

From now on we will mainly consider contexts \(I,J,K,\dots \) built only from dimension name declarations; such a context is of the form \(i_1 : \mathbb {I}, \dots , i_n : \mathbb {I}\) for \(n \ge 0\). We sometimes write I, i for \(I, i : \mathbb {I}\). Substitutions between such contexts will be denoted by \(f,g,h,\dots \). The resulting category, with such name contexts I as objects and substitutions \(f :J \rightarrow I\) as morphisms, is reminiscent of the category of cubes as defined in [7, Section 8.1], with the difference that the names in a context I are ordered rather than forming a set. This difference is not crucial for the definition of computability predicates in the next section, but it simplifies notation. (Note that if \(I'\) is a permutation of I, then the substitution assigning to each name in I itself is an isomorphism \(I' \rightarrow I\).) We write \(r \in \mathbb {I}(I)\) if \(I \vdash r : \mathbb {I}\), and \(\varphi \in \mathbb {F}(I)\) if \(I \vdash \varphi : \mathbb {F}\).
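A small Python sketch of this category, under the simplifying assumption (not the paper's) that a substitution \(f : J \rightarrow I\) sends each name of I to 0, 1, or a name of J; in [7] interval terms may also use \(\wedge \), \(\vee \), and \(1 - r\). Contexts are ordered tuples, reflecting the difference from the category of cubes noted above. All names are hypothetical.

```python
from typing import Dict, Tuple, Union

Name = str
Ctx = Tuple[Name, ...]            # ordered list of names i_1, ..., i_n
IntervalTerm = Union[int, Name]   # hypothetical restriction: 0, 1, or a name
Subst = Dict[Name, IntervalTerm]  # f : J -> I sends each name of I to a term over J

def act(r: IntervalTerm, g: Subst) -> IntervalTerm:
    """Apply g to an interval term r (the endpoints 0 and 1 are fixed)."""
    return r if isinstance(r, int) else g[r]

def compose(f: Subst, g: Subst) -> Subst:
    """For f : J -> I and g : K -> J return fg : K -> I, with (fg)(i) = f(i)g."""
    return {i: act(r, g) for i, r in f.items()}

def identity(ctx: Ctx) -> Subst:
    return {i: i for i in ctx}

def is_morphism(f: Subst, J: Ctx, I: Ctx) -> bool:
    """Check that f assigns to every name of I either 0, 1, or a name of J."""
    return set(f) == set(I) and all(r in (0, 1) or r in J for r in f.values())
```

Composition follows the document's order: for \(f : J \rightarrow I\) and \(g : K \rightarrow J\), `compose(f, g)` is \(fg : K \rightarrow I\). With `I = ("i", "j")` and `I2 = ("j", "i")`, `identity(I)` is a morphism from `I2` to `I`, matching the remark on permutations.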

Note that in general reductions \(I \vdash A \succ B\) or \(I \vdash u \succ v : A\) are not closed under substitutions \(f :J \rightarrow I\). For example, if u is a system \([ (i = 0) ~u_1, 1 ~u_2]\), then \(i \vdash u \succ u_2 : A\) (assuming everything is well typed), but \(\vdash u (i0) \succ u_1 (i0) : A(i0)\), and \(u_1,u_2\) might be chosen such that \(u_1(i0)\) and \(u_2(i0)\) are judgmentally but not syntactically equal (they can even be chosen normal, e.g., two \(\lambda \)-abstractions whose bodies are judgmentally but not syntactically equal). Another example is when u is \(\mathsf {unglue}\,[\varphi \mapsto w]\, (\mathsf {glue}\,[\varphi \mapsto t]\,a)\) with \(\varphi \ne 1\), and \(f :J \rightarrow I\) is such that \(\varphi f = 1\); then u reduces to a, but uf reduces to \(wf.1\,(\mathsf {glue}\,[\varphi f \mapsto t f]\,a f)\), which is in general not syntactically equal to af.
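The first example can be replayed in a Python sketch. Its assumptions are hypothetical, not the paper's: a system in a restriction-free name context steps to the first branch whose face is 1 (which matches the example); faces are kept in disjunctive normal form; and the branch terms are opaque labels not mentioning i, so substituting into them does nothing. The function `commutes` checks closure under substitution only for the substitutions it is given.

```python
from typing import Dict, FrozenSet, Iterable, List, Optional, Tuple, Union

Atom = Tuple[str, int]              # (i, b) stands for the face (i = b)
Face = FrozenSet[FrozenSet[Atom]]   # disjunction of conjunctions; empty clause = 1
System = List[Tuple[Face, str]]     # [phi_1 u_1, ..., phi_n u_n] with opaque labels u_k
Subst = Dict[str, Union[int, str]]  # names to 0, 1, or names (hypothetical restriction)

TOP: Face = frozenset({frozenset()})

def eq(i: str, b: int) -> Face:
    return frozenset({frozenset({(i, b)})})

def subst_face(phi: Face, f: Subst) -> Face:
    out = set()
    for clause in phi:
        new, dead = set(), False
        for i, b in clause:
            r = f.get(i, i)
            if isinstance(r, int):
                dead = dead or r != b  # (i = b) becomes 1 if r == b, else 0
            else:
                new.add((r, b))
        if not dead:
            out.add(frozenset(new))
    return frozenset(out)

def reduce_system(u: System) -> Optional[str]:
    """Hypothetical step in a restriction-free name context: reduce to the
    first branch whose face is 1 (as in the example in the text)."""
    for phi, t in u:
        if frozenset() in phi:
            return t
    return None

def subst_system(u: System, f: Subst) -> System:
    # Assumption: the labels u_k do not mention the substituted names.
    return [(subst_face(phi, f), t) for phi, t in u]

def commutes(u: System, subs: Iterable[Subst]) -> bool:
    """Check, for the given substitutions only, that reducing then substituting
    agrees syntactically with substituting then reducing."""
    r = reduce_system(u)
    return all(reduce_system(subst_system(u, f)) == r for f in subs)

if __name__ == "__main__":
    u: System = [(eq("i", 0), "u1"), (TOP, "u2")]
    print(reduce_system(u))                          # u2
    print(reduce_system(subst_system(u, {"i": 0})))  # u1
    print(commutes(u, [{"i": 0}, {"i": 1}]))         # False
```

Reducing first yields `u2`, while substituting \(i := 0\) first yields `u1`; in the text these correspond to \(u_2(i0)\) and \(u_1(i0)\), which need only be judgmentally equal.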

We write \(I \vdash A \succ _\mathsf {s}B\) and \(I \vdash u \succ _\mathsf {s}v : A\) if the respective reduction is closed under name substitutions. That is, \(I \vdash A \succ _\mathsf {s}B\) if \(J \vdash A f \succ B f\) for all \(f :J \rightarrow I\), and \(I \vdash u \succ _\mathsf {s}v : A\) if \(J \vdash u f \succ v f : A f\) for all \(f :J \rightarrow I\). Note that in the above definition, all rules which have neither a premise with a negated equation in \(\mathbb {F}\) nor a premise referring to another reduction are closed under substitution.

3 Computability Predicates

In this section we define computability predicates and establish the properties we need for the proof of Soundness in the next section. We will define when a type is computable or forced, written \(I \Vdash _\ell A\), when two types are forced equal, \(I \Vdash _\ell A = B\), when an element is computable or forced, \(I \Vdash _\ell u : A\), and when two elements are forced equal, \(I \Vdash _\ell u = v : A\). Here \(\ell \) is the level which is either 0 or 1, the former indicating smallness.

The definition is given as follows: by main recursion on \(\ell \) (that is, we define “\(\Vdash _0\)” before “\(\Vdash _1\)”) we define by induction–recursion [9]

where the former two are mutually defined by induction, and the latter two mutually by recursion on the derivation of \(I \Vdash _\ell A\). Formally, \(I \Vdash _\ell A\) and \(I \Vdash _\ell A = B\) are witnessed by derivations for which we don’t introduce notations since the definitions of \(I \Vdash _\ell u : A\) and \(I \Vdash _\ell u = v : A\) don’t depend on the derivation of \(I \Vdash _\ell A\). Each such derivation has a height as an ordinal, and often we will employ induction not only on the structure of such a derivation but on its height.

Note that the arguments and definitions can be adapted to a hierarchy of universes by allowing \(\ell \) to range over a (strict) well-founded poset.

We write \(I \Vdash _\ell A \doteqdot B\) for the conjunction of \(I \Vdash _\ell A\), \(I \Vdash _\ell B\), and \(I \Vdash _\ell A = B\). For \(\varphi \in \mathbb {F}(I)\) we write \(f :J \rightarrow I,\varphi \) for \(f :J \rightarrow I\) with \(\varphi f = 1\); furthermore we write

Here the last two abbreviations need suitable premises to make sense. Note that \(I,1 \Vdash _\ell A\) is a priori stronger than \(I \Vdash _\ell A\); that these notions are equivalent follows from the Monotonicity Lemma below. Moreover, the definition is such that \(I \vdash \mathscr {J}\) whenever \(I \Vdash _\ell \mathscr {J}\) (where \(\mathscr {J}\) is any judgment form); it is shown in Remark 4 that the condition \(I,\varphi \vdash \mathscr {J}\) in the definition of \(I,\varphi \Vdash _\ell \mathscr {J}\) is actually not needed and follows from the other conditions.

assuming \(I \vdash A\) (i.e., the rules below all have a suppressed premise \(I \vdash A\)).

Note that the rule Gl-C above is not circular: for any \(f :J \rightarrow I,\varphi \) we have \(\varphi f = 1\), and so \((\mathsf {Glue}\, [ \varphi \mapsto (T, w)] \, A)f\) is non-introduced.

assuming \(I \Vdash _\ell A\), \(I \Vdash _\ell B\), and \(I \vdash A = B\) (i.e., each rule below has the suppressed premises \(I \Vdash _\ell A\), \(I \Vdash _\ell B\), and \(I \vdash A = B\)).

assuming \(I \vdash u : A\). We distinguish cases on the derivation of \(I \Vdash _\ell A\).

Case N-C.

Case Pi-C.

Case Si-C.

Case Pa-C.

Case Gl-C.

Later we will see that from the premises of Gl-C we get \(I \Vdash w = w : \mathsf {Equiv}\,T\,A\), and the second premise above implies in particular \(I \Vdash \mathsf {unglue}\,[\varphi \mapsto w]\,u : A\); the quantification over other possible equivalences is there to ensure invariance for the annotation.

Case U-C.

Case Ni-C.

assuming \(I \Vdash _\ell u : A\), \(I \Vdash _\ell v : A\), and \(I \vdash u = v :A\). (I.e., each of the rules below has the suppressed premises \(I \Vdash _\ell u : A\), \(I \Vdash _\ell v : A\), and \(I \vdash u = v : A\), but they are not arguments to the definition of the predicate. This is subtle since in, e.g., the rule for pairs we only know \(I \Vdash _\ell v.2 : B (x/v.1)\) not \(I \Vdash _\ell v.2 : B (x/u.1)\).) We distinguish cases on the derivation of \(I \Vdash _\ell A\).

Case N-C.

Case Pi-C.

Case Si-C.

Case Pa-C.

Case Gl-C.

Case U-C.

Case Ni-C.

Note that the definition is such that \(I \Vdash _\ell A = B\) implies \(I \Vdash _\ell A\) and \(I \Vdash _\ell B\); and, likewise, \(I \Vdash _\ell u = v : A\) gives \(I \Vdash _\ell u : A\) and \(I \Vdash _\ell v :A\).

Remark 1

1. In the rule Ni-E and the rule for \(I \Vdash _\ell u = v : \mathsf {N}\) in case u or v is non-introduced, we suppressed the premise that the reference to “\({\downarrow }\)” is actually well defined; it is easily seen that if \(I \Vdash _\ell A\), then \(A {\downarrow }\) is well defined, and similarly, if \(I \Vdash _\ell u : \mathsf {N}\), then \(u {\downarrow }^\mathsf {N}\) is well defined.

2. It follows from the substitution lemma below that \(I \Vdash _\ell A\) whenever A is non-introduced and \(I \vdash A \succ _\mathsf {s}B\) with \(I \Vdash _\ell B\). (Cf. also the Expansion Lemma below.)

3. Note that once we have also proven transitivity, symmetry, and monotonicity, the last premise of Ni-C in the definition of \(I \Vdash _\ell A\) (and similarly in the rule for non-introduced naturals) can be restated as \(J \Vdash _\ell A f {\downarrow }= A {\downarrow }f\) for all \(f :J \rightarrow I\).

Lemma 1

The computability predicates are independent of the derivation, i.e., if we have two derivation trees \(d_1\) and \(d_2\) of \(I \Vdash _\ell A\), then

where \(\Vdash _\ell ^{d_i}\) refers to the predicate induced by \(d_i\).

Proof

By main induction on \(\ell \) and a side induction on the derivations \(d_1\) and \(d_2\). Since the definition of \(I \Vdash _\ell A\) is syntax directed both \(d_1\) and \(d_2\) are derived by the same rule. The claim thus follows from the IH. \(\square \)

Lemma 2

1. If \(I \Vdash _\ell A\), then \(I \vdash A\) and:
   (a) \(I \Vdash _\ell u : A \Rightarrow I \vdash u : A\),
   (b) \(I \Vdash _\ell u = v : A \Rightarrow I \vdash u = v : A\).
2. If \(I \Vdash _\ell A = B\), then \(I \vdash A = B\).

Lemma 3

1. If \(I \Vdash _0 A\), then:
   (a) \(I \Vdash _1 A\),
   (b) \(I \Vdash _0 u : A \Leftrightarrow I \Vdash _1 u : A\),
   (c) \(I \Vdash _0 u = v : A \Leftrightarrow I \Vdash _1 u = v : A\).
2. If \(I \Vdash _0 A = B\), then \(I \Vdash _1 A = B\).

Proof

By simultaneous induction on \(I \Vdash _0 A\) and \(I \Vdash _0 A = B\). \(\square \)

We will write \(I \Vdash A\) if there is a derivation of \(I \Vdash _\ell A\) for some \(\ell \); etc. Such derivations will be ordered lexicographically, i.e., \(I \Vdash _0 A\) derivations are ordered before \(I \Vdash _1 A\) derivations.

Lemma 4

1. \(I \Vdash _\ell A \Rightarrow I \Vdash _\ell A = A\)
2. \( I \Vdash _\ell A \, \& \,I \Vdash _\ell u : A \Rightarrow I \Vdash _\ell u = u : A\)

Proof

Simultaneously, by induction on \(\ell \) and side induction on \(I \Vdash _\ell A\). In the case Gl-C, to see (2), note that from the assumption \(I \Vdash u : B\) with B being \(\mathsf {Glue}\,[\varphi \mapsto (T,w)]\,A\) we get in particular

But by IH, the premise follows from \(I,\varphi \Vdash w : \mathsf {Equiv}\,T\,A\); moreover, \(I,\varphi \Vdash u = u : B\) is immediate by IH, showing \(I \Vdash u = u : B\). \(\square \)

Lemma 5

(Monotonicity/Substitution) For \(f :J \rightarrow I\) we have

1. \(I \Vdash _\ell A \Rightarrow J \Vdash _\ell A f\),
2. \(I \Vdash _\ell A = B \Rightarrow J \Vdash _\ell A f = B f\),
3. \( I \Vdash _\ell A \, \& \,I \Vdash _\ell u : A \Rightarrow J \Vdash _\ell u f : A f\),
4. \( I \Vdash _\ell A \, \& \,I \Vdash _\ell u = v : A \Rightarrow J \Vdash _\ell u f = v f : A f\).

Moreover, the respective heights of the derivations don’t increase.

Proof

By induction on \(\ell \) and side induction on \(I \Vdash _\ell A\) and \(I \Vdash _\ell A=B\). The definition of computability predicates and relations is designed so that this proof is immediate. For instance, note for (1) in the case Gl-C, i.e.,

we distinguish cases: if \(\varphi f = 1\), then \(J \Vdash _\ell \mathsf {Glue}\, [ \varphi f \mapsto (T f, w f)] \, A f\) by the premise \( I, \varphi \Vdash _\ell \mathsf {Glue}\, [ \varphi \mapsto (T, w)] \, A\); in case \(\varphi f \ne 1\) we can use the same rule again. \(\square \)

Lemma 6

1. \(I \Vdash A \Rightarrow I \Vdash A {\downarrow }\)
2. \(I \Vdash A = B\Rightarrow I \Vdash A {\downarrow }= B {\downarrow }\)
3. \( I \Vdash A \, \& \,I \Vdash u : A \Rightarrow I \Vdash u : A {\downarrow }\)
4. \( I \Vdash A \, \& \,I \Vdash u = v : A \Rightarrow I \Vdash u = v: A {\downarrow }\)
5. \(I \Vdash u : \mathsf {N}\Rightarrow I \Vdash u {\downarrow }: \mathsf {N}\)
6. \(I \Vdash u = v : \mathsf {N}\Rightarrow I \Vdash u {\downarrow }= v {\downarrow }: \mathsf {N}\)

Moreover, the respective heights of the derivations don’t increase.

Proof

(1) By induction on \(I \Vdash A\). All cases where A is an introduction are immediate since then \(A {\downarrow }\) is A. It only remains to consider the case Ni-C:

We have \(I \Vdash A {\downarrow }\) as this is one of the premises.

(5) By induction on \(I \Vdash u : \mathsf {N}\) similarly to the last paragraph.

(2) By induction on \(I \Vdash A = B\). The only case where a reduct may occur is Ni-E, in which \(I \Vdash A {\downarrow }= B {\downarrow }\) is a premise. Similarly for (6).

(3) and (4): By induction on \(I \Vdash A\), where the only interesting case is Ni-C, in which what we have to show holds by definition. \(\square \)

Lemma 7

1. If \(I \Vdash A = B\), then
   (a) \(I \Vdash u : A \Leftrightarrow I \Vdash u : B\), and
   (b) \(I \Vdash u = v : A \Leftrightarrow I \Vdash u = v : B\).
2. \( I \Vdash A = B \, \& \,I \Vdash B = C \Rightarrow I \Vdash A = C\)
3. Given \(I \Vdash A\) we get
   $$ \begin{aligned} I \Vdash u = v : A \, \& \,I \Vdash v = w : A \Rightarrow I \Vdash u = w : A. \end{aligned}$$
4. \(I \Vdash A = B \Rightarrow I \Vdash B = A\)
5. \( I \Vdash A \, \& \,I \Vdash u = v : A \Rightarrow I \Vdash v = u : A\)

Proof

We prove the statement for “\(\Vdash _\ell \)” instead of “\(\Vdash \)” by main induction on \(\ell \) (i.e., we prove the statement for “\(\Vdash _0\)” before the statement for “\(\Vdash _1\)”); the statement for “\(\Vdash \)” follows then from Lemma 3.

Simultaneously by threefold induction on \(I \Vdash _\ell A\), \(I \Vdash _\ell B\), and \(I \Vdash _\ell C\). (Alternatively by induction on the (natural) sum of the heights of \(I \Vdash _\ell A\), \(I \Vdash _\ell B\), and \(I \Vdash _\ell C\); we only need to be able to apply the IH if the complexity of at least one derivation decreases and the others won’t increase.) In the proof below we will omit \(\ell \) to simplify notation, except in cases where the level matters.
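The natural sum matters here because ordinary ordinal addition is not strictly monotone in its left argument (\(0 + \omega = 1 + \omega = \omega \)), whereas the Hessenberg natural sum is strictly monotone in each argument; so lowering one height while not raising the others strictly lowers the sum. The following Python sketch assumes, only for illustration, that heights lie below \(\omega ^\omega \) and are written in Cantor normal form; all names are hypothetical.

```python
from typing import Dict

# Hypothetical representation of an ordinal below omega^omega in Cantor normal
# form: {k: c} stands for the sum, over exponents k in decreasing order, of
# omega^k * c (coefficients c > 0).
Ord = Dict[int, int]

def normalize(a: Ord) -> Ord:
    return {k: c for k, c in a.items() if c > 0}

def compare(a: Ord, b: Ord) -> int:
    """Return -1, 0 or 1, comparing coefficients from the highest exponent down."""
    a, b = normalize(a), normalize(b)
    for k in sorted(set(a) | set(b), reverse=True):
        x, y = a.get(k, 0), b.get(k, 0)
        if x != y:
            return -1 if x < y else 1
    return 0

def natural_sum(a: Ord, b: Ord) -> Ord:
    """Hessenberg natural sum: add coefficients exponent by exponent."""
    out = normalize(a)
    for k, c in normalize(b).items():
        out[k] = out.get(k, 0) + c
    return out

def ordinal_sum(a: Ord, b: Ord) -> Ord:
    """Ordinary ordinal addition a + b: terms of a below b's leading exponent vanish."""
    a, b = normalize(a), normalize(b)
    if not b:
        return a
    lead = max(b)
    out = {k: c for k, c in a.items() if k > lead}
    out[lead] = a.get(lead, 0) + b[lead]
    out.update({k: c for k, c in b.items() if k < lead})
    return out
```

With `ONE = {0: 1}` and `OMEGA = {1: 1}`, `ordinal_sum(ONE, OMEGA)` equals `OMEGA`, while `natural_sum(ONE, OMEGA)` is strictly larger than `OMEGA` and equal to `natural_sum(OMEGA, ONE)`.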

(1) By distinguishing cases on \(I \Vdash A = B\). We only give the argument for (1a), as (1b) is very similar except in case Gl-E. The cases N-E and U-E are trivial.

Case Pi-E. Let \(I \Vdash w : (x :A) \rightarrow B\); we show \(I \Vdash w : (x :A') \rightarrow B'\). For \(f :J \rightarrow I\) let \(J \Vdash u : A' f\); then by IH (since \(J \Vdash A f = A' f\)) we get \(J \Vdash u : A f\), and thus \(J \Vdash w f \, u : B (f, x/u )\); again by IH we obtain \(J \Vdash w f \,u : B' (f, x/u )\). Now assume \(J \Vdash u = v: A' f\); so by IH, \(J \Vdash u = v: A f\), and thus \(J \Vdash w f \, u = w f \, v : B (f, x/u )\). Again by IH, we conclude \(J \Vdash w f \, u = w f \, v : B' (f, x/u )\). Thus we have proved \(I \Vdash w : (x :A') \rightarrow B'\).

Case Si-E. Let \(I \Vdash w : (x :A) \times B\); we show \(I \Vdash w : (x :A') \times B'\). We have \(I \Vdash w.1 : A\) and \(I \Vdash w.2 : B (x/w.1)\). So by IH, \(I \Vdash w.1 : A'\); moreover, we have \(I \Vdash B (x/w.1) = B' (x/w.1)\); so, again by IH, we conclude with \(I \Vdash w.2 : B' (x/w.1)\).

Case Pa-E. Let \(I \Vdash u : \mathsf {Path}\,A\,a_0\,a_1\); we show \(I \Vdash u : \mathsf {Path}\,B\,b_0\,b_1\). Given \(f :J \rightarrow I\) and \(r \in \mathbb {I}(J)\) we have \(J \Vdash u f \, r : A f\) and thus \(J \Vdash u f \, r : B f\) by IH. We have to check that the endpoints match: \(I \Vdash u \, 0 = a_0 : A\) by assumption; moreover, \(I \Vdash a_0 = b_0 : A\), so by IH (3), \(I \Vdash u \, 0 = b_0 : A\), and thus, again using the IH, \(I \Vdash u \, 0 = b_0 : B\). The endpoint 1 is handled in the same way.

Case Gl-E. Abbreviate \(\mathsf {Glue}\, [ \varphi \mapsto (T, w)] \, A\) by D, and \(\mathsf {Glue}\, [ \varphi \mapsto (T', w')] \, A'\) by \(D'\).

(1a) Let \(I \Vdash u : D\), i.e., \(I,\varphi \Vdash u : D\) and

whenever \(f :J \rightarrow I\) and \(J,\varphi f \Vdash w'' = wf : \mathsf {Equiv}\,Tf\,Af\). Directly by IH we obtain \(I,\varphi \Vdash u : D'\). Now let \(f :J \rightarrow I\) and \(J,\varphi f \Vdash w''=w'f : \mathsf {Equiv}\,T'f\,A'f\); by IH, also \(J,\varphi f \Vdash w''=w'f : \mathsf {Equiv}\,Tf\,Af\). Moreover, we have \(J,\varphi f \Vdash w f = w' f : \mathsf {Equiv}\,Tf\,Af\), hence (1) gives (together with symmetry and transitivity, applicable by IH)

Hence, transitivity and symmetry (which we can apply by IH) give that the above left-hand sides are forced equal of type Af, applying the IH(1b) gives that they are forced equal of type \(A'f\), and thus \(I \Vdash u : D'\).

(1b) Let \(I \Vdash u = v : D\), so we have \(I,\varphi \Vdash u = v : D\) and

By IH, we get \(I,\varphi \Vdash u = v : D'\) from \(I,\varphi \Vdash u = v : D\). Note that we also have \(I \Vdash u : D\) and \(I \Vdash v : D\), and thus

and thus with (2) and transitivity and symmetry (which we can apply by IH) we obtain \(I \Vdash \mathsf {unglue}\,[\varphi \mapsto w']\, u = \mathsf {unglue}\,[\varphi \mapsto w']\, v : A\), hence also at type \(A'\) by IH. Therefore we proved \(I \Vdash u = v : D'\).

Case Ni-E. Let \(I \Vdash u : A\); we have to show \(I \Vdash u : B\).

Subcase B is non-introduced. Then we have to show \(J \Vdash u f : B f {\downarrow }\) for \(f :J \rightarrow I\). We have \(J \Vdash A f {\downarrow }= B f {\downarrow }\), and since B is non-introduced, the derivation \(J \Vdash B f {\downarrow }\) is shorter than \(I \Vdash B\), while the derivation \(J \Vdash A f {\downarrow }\) is not higher than \(I \Vdash A\) by Lemma 6. Moreover, \(J \Vdash uf : Af\), so by Lemma 6 (3) we get \(J \Vdash u f : A f {\downarrow }\), and hence by IH, \(J \Vdash u f : B f {\downarrow }\).

Subcase B is introduced. We have \(I \Vdash u : A {\downarrow }\) and \(I \Vdash A {\downarrow }= B {\downarrow }\), where \(B {\downarrow }\) is B; since \(I \Vdash A {\downarrow }\) has a shorter derivation than \(I \Vdash A\), we get \(I \Vdash u : B\) by IH.

(2) Let us first handle the cases where A, B, or C is non-introduced. It is enough to show \(J \Vdash A f {\downarrow }= C f {\downarrow }\) (if A and C are both introduced, this entails \(I \Vdash A = C\) for f the identity). We have \(J \Vdash A f {\downarrow }= B f {\downarrow }\) and \(J \Vdash B f {\downarrow }= C f {\downarrow }\). None of the respective derivations get higher (by Lemma 6) but one gets shorter since one of the types is non-introduced. Thus the claim follows by IH.

It remains to look at the cases where all are introduced; in this case both equalities have to be derived by the same rule. We distinguish cases on the rule.

Case N-E. Trivial. Case Si-E. Similar to Pi-E below. Cases Pa-E and Gl-E. Use the IH. Case U-E. Trivial.

Case Pi-E. Let us write A as \((x : A') \rightarrow A''\), and similarly for B and C. We have \(I \Vdash A' = B'\) and \(I \Vdash B' = C'\), and so by IH, we get \(I\Vdash A' = C'\); for \(J \Vdash u : A' f\) where \(f :J \rightarrow I\) it remains to be shown that \(J \Vdash A'' (f,x/u ) = C'' (f, x/u )\). By IH, we also have \(J \Vdash u : B' f\), so we have

and can conclude by the IH.

(3) By cases on \(I \Vdash A\). All cases follow immediately using the IH, except for N-C and U-C. In case N-C, we show transitivity by a side induction on the (natural) sum of the height of the derivations \(I \Vdash u = v : \mathsf {N}\) and \(I \Vdash v = w : \mathsf {N}\). If one of u,v, or w is non-introduced, we get that one of the derivations \(J \Vdash u f {\downarrow }= v f {\downarrow }: \mathsf {N}\) and \(J \Vdash v f {\downarrow }= w f {\downarrow }: \mathsf {N}\) is shorter (and the other doesn’t get higher), so by SIH, \(J \Vdash u f {\downarrow }= w f {\downarrow }: \mathsf {N}\) which entails \(I \Vdash u = w : \mathsf {N}\). Otherwise, \(I \Vdash u = v : \mathsf {N}\) and \(I \Vdash v = w : \mathsf {N}\) have to be derived with the same rule and \(I \Vdash u = w : \mathsf {N}\) easily follows (using the SIH in the successor case).

In case U-C, we have \(I \Vdash _1 u = v : \mathsf {U}\) and \(I \Vdash _1 v = w : \mathsf {U}\), i.e., \(I \Vdash _0 u = v\) and \(I \Vdash _0 v = w\). We want to show \(I \Vdash _1 u = w : \mathsf {U}\), i.e., \(I \Vdash _0 u = w\). But by IH(\(\ell \)), we can already assume the lemma is proven for \(\ell = 0\), hence can use transitivity and deduce \(I \Vdash _0 u = w\).

The proofs of (4) and (5) are by distinguishing cases and are straightforward. \(\square \)

Remark 2

Now that we have established transitivity, computability for \(\varPi \)-types can also be established as follows. Suppose we have \(I \Vdash (x :A) \rightarrow B\) and derivations \(I \vdash w : (x :A) \rightarrow B\), \(I \vdash w' : (x :A) \rightarrow B\), and \(I \vdash w = w' : (x :A) \rightarrow B\); then \(I \Vdash w = w' : (x :A) \rightarrow B\) whenever we have

(In particular, this gives \(I \Vdash w : (x :A) \rightarrow B\) and \(I \Vdash w' : (x :A) \rightarrow B\).)

Likewise, given \(I \vdash (x :A) \rightarrow B\), \(I \vdash (x :A') \rightarrow B'\), and \(I \vdash (x :A) \rightarrow B = (x :A') \rightarrow B'\), we get \(I \Vdash (x :A) \rightarrow B = (x :A') \rightarrow B'\) whenever \(I \Vdash A = A'\) and

Lemma 8

1. \(I \Vdash A \Rightarrow I \Vdash A = A {\downarrow }\)
2. \(I \Vdash u : \mathsf {N}\Rightarrow I \Vdash u = u {\downarrow }: \mathsf {N}\)

Proof

(1) We already proved \(I \Vdash A {\downarrow }\) in Lemma 6 (1). We proceed by induction on \(I \Vdash A\). All cases where A is an introduction are immediate since then \(A {\downarrow }\) is A. It only remains to consider the case Ni-C:

We now show \(I \Vdash A = A {\downarrow }\); since A is non-introduced we have to show \(J \Vdash A f {\downarrow }= (A {\downarrow }f) {\downarrow }\) for \(f :J \rightarrow I\). \(I \Vdash A {\downarrow }\) has a shorter derivation than \(I \Vdash A\), thus so has \(J \Vdash A {\downarrow }f\); hence by IH, \(J \Vdash A {\downarrow }f = (A {\downarrow }f) {\downarrow }\). We also have \(J \Vdash A {\downarrow }f = A f {\downarrow }\) by definition of \(I \Vdash A\), and thus we obtain \(J \Vdash A f {\downarrow }= (A {\downarrow }f) {\downarrow }\) using symmetry and transitivity.

(2) Similar, by induction on \(I \Vdash u : \mathsf {N}\). \(\square \)

Lemma 9

(Expansion Lemma) Let \(I \Vdash _\ell A\) and \(I \vdash u : A\); then:

In particular, if \(I \vdash u \succ _\mathsf {s}v : A\) and \(I \Vdash _\ell v : A\), then \(I \Vdash _\ell u :A\) and \(I \Vdash _\ell u = v : A\).

Proof

By induction on \(I \Vdash A\). We will omit the level annotation \(\ell \) whenever it is inessential.

Case N-C. We have to show \(K \Vdash u f {\downarrow }g = u f g {\downarrow }: \mathsf {N}\) for \(f :J \rightarrow I\) and \(g :K \rightarrow J\); we have \(J \Vdash u f {\downarrow }= u {\downarrow }f : \mathsf {N}\), thus \(K \Vdash u f {\downarrow }g = u {\downarrow }f g : \mathsf {N}\). Moreover, \(K \Vdash u {\downarrow }f g = u f g {\downarrow }: \mathsf {N}\) by assumption, and thus by transitivity \(K \Vdash u f {\downarrow }g = u {\downarrow }f g = u f g {\downarrow }: \mathsf {N}\). (Likewise one shows that the data in the premise of the lemma is closed under substitution.)

\(I \Vdash u = u {\downarrow }: \mathsf {N}\) holds by Lemma 8 (2).

Case Pi-C. First, let \(J \Vdash a : A f\) for \(f :J \rightarrow I\). We have

for \(g :K \rightarrow J\), and also \(K \Vdash (u {fg}) {\downarrow }\, (a g) : B (fg, x/a g)\) and we have the compatibility condition

so by IH, \(J \Vdash u f \, a : B (f, x/a )\) and \(J \Vdash u f \, a = u f {\downarrow }\, a : B (f,x/a )\). Since also \(J \Vdash u f {\downarrow }= u {\downarrow }f : ((x : A) \rightarrow B)f\) we also get \(J \Vdash u f \, a = u {\downarrow }f \, a : B (f,x/a )\).

Now if \(J \Vdash a = b : A f\), we also have \(J \Vdash a : A f\) and \(J \Vdash b : A f\), so like above we get \(J \Vdash u f \, a = uf{\downarrow }\, a : B (f,x/a )\) and \(J \Vdash u f \, b = uf {\downarrow }\, b : B (f,x/b )\) (and thus also \(J \Vdash u f \, b = uf{\downarrow }\, b : B (f,x/a )\)). Moreover, \(J \Vdash uf {\downarrow }\, a = u f {\downarrow }\, b : B (f,x/a )\) and hence we can conclude \(J \Vdash u f \, a = u f \, b : B (f,x/a )\) by transitivity and symmetry. Thus we showed both \(I \Vdash u : (x : A) \rightarrow B\) and \(I \Vdash u = u{\downarrow }: (x : A) \rightarrow B\).

Case Si-C. Clearly we have \((u.1 f) {\downarrow }= (u f {\downarrow }).1\), \(J \Vdash (u f {\downarrow }).1 : A f\), and

so the IH gives \(I \Vdash u.1 : A\) and \(I \Vdash u.1 = (u {\downarrow }).1 : A\). Likewise \((u.2 f) {\downarrow }= (u f {\downarrow }).2\) and \(J \Vdash (u f {\downarrow }).2 : B (f, x/uf{\downarrow }.1)\), hence also \(J \Vdash (u f {\downarrow }).2 : B (f, x/uf.1)\); as above one shows \(J \Vdash (u.2 f) {\downarrow }= u.2 {\downarrow }f : B (f, x/uf.1)\), and applying the IH once more we obtain \(I \Vdash u.2 = u{\downarrow }.2 : B(x/u.1)\), which was what remained to be proven.

Case Pa-C. Let us write \(\mathsf {Path}\,A\,v\,w\) for the type and let \(f :J \rightarrow I\), \(r \in \mathbb {I}(J)\), and \(g :K \rightarrow J\). We have

and \(K \Vdash (uf g) {\downarrow }\, (rg) : A fg\); moreover,

Thus by IH, \(J \Vdash u f\, r : A f\) and

So we obtain \(I \Vdash u \, 0 = u{\downarrow }\, 0 = v : A\) and \(I \Vdash u \, 1 = u{\downarrow }\, 1 = w : A\), and hence \(I \Vdash u : \mathsf {Path}\,A\,v\,w\); \(I \Vdash u = u {\downarrow }: \mathsf {Path}\,A\,v\,w\) follows from (3).

Case Gl-C. Abbreviate \(\mathsf {Glue}\, [\varphi \mapsto (T,w)] \, A\) by B. Note that we have \(\varphi \ne 1\). First, we claim that for any \(f :J \rightarrow I\), \(J \Vdash b : Bf\), and \(J,\varphi f \Vdash w' = wf : \mathsf {Equiv}\,Tf\,Af\),

(In particular both sides are computable.) Indeed, for \(g :K \rightarrow J\) with \(\varphi f g = 1\) we have that

and \(K \Vdash w'g.1 \, (b g) : A f g\) since \(I,\varphi \Vdash B = T\) (which follows from Lemma 8 (1)). Thus by IH (\(J \Vdash A f\) has a shorter derivation than \(I \Vdash B\)), \(K \Vdash (\mathsf {unglue}\,[\varphi f\mapsto w']\,b) g = (w'.1 \, b)g : A f g\) as claimed.

Next, let \(f :J \rightarrow I\) such that \(\varphi f = 1\); then using the IH (\(J \Vdash Bf\) has a shorter derivation than \(I \Vdash B\)), we get \(J \Vdash uf : Bf\) and \(J \Vdash uf = u f {\downarrow }: Bf\), and hence also \(J \Vdash u f = u {\downarrow }f : B f\) (since \(J \Vdash u f {\downarrow }= u {\downarrow }f : B f\)). That is, we proved

We will now first show

for all \(f :J \rightarrow I\) and all \(w'\) with \(J,\varphi f \Vdash w' = wf : \mathsf {Equiv}\,Tf\,Af\). We can assume w.l.o.g. that \(\varphi f \ne 1\): if \(\varphi f = 1\), then \(J \Vdash u f : B f\) by (5), and (6) follows from (4), noting that its right-hand side is the reduct. We will use the IH to show (6), so let us analyze the reduct:

where \(g :K \rightarrow J\). In either case, the reduct is computable: in the first case, use (5) and \(J \Vdash w'.1 : T \rightarrow A\) together with the observation \(I,\varphi \Vdash B = T\); in the second case this follows from \(J \Vdash ufg {\downarrow }: B fg\). In order to apply the IH, it remains to verify

In case \(\varphi f g \ne 1\), we have

which is what we had to show in this case. In case \(\varphi f g = 1\), we have to prove

But by (5) we have \(K \Vdash u f g = u f g {\downarrow }= u f {\downarrow }g : B f g\), so also

so (8) follows from (4) using \(J \Vdash u f {\downarrow }: Bf\). This concludes the proof of (6).

Since \(w'\) may be taken to be wf, we also get

In order to prove \(I \Vdash u : B\) it remains to check that the left-hand side of (6) is forced equal to the left-hand side of (9); so we can simply check this for the respective right-hand sides: in case \(\varphi f = 1\), these are \(w'.1 \, uf\) and \(wf.1 \, uf\), respectively, and hence forced equal since \(J \Vdash w' = wf : \mathsf {Equiv}\,Tf\,Af\); in case \(\varphi f \ne 1\), we have to show

which simply follows since \(J \Vdash uf {\downarrow }: B f\).

In order to prove \(I \Vdash u = u {\downarrow }: B\) it remains to check

but this is (9) in the special case where f is the identity.

Case U-C. Let us write B for u. We have to prove \(I \Vdash _1 B : \mathsf {U}\) and \(I \Vdash _1 B = B {\downarrow }: \mathsf {U}\), i.e., \(I \Vdash _0 B\) and \(I \Vdash _0 B = B {\downarrow }\). By Lemma 8 (1), it suffices to prove the former. For \(f :J \rightarrow I\) we have

and hence also

i.e., \(B f {!}\), and \(B f {\downarrow }\) is \(B f {\downarrow }^U\); since \(J \Vdash _1 Bf {\downarrow }: \mathsf {U}\) we have \(J \Vdash _0 B f {\downarrow }\), and likewise \(J \Vdash _0 B f {\downarrow }= B {\downarrow }f\). Moreover, if also \(g :K \rightarrow J\), we obtain \(K \Vdash _0 B f g {\downarrow }= B {\downarrow }f g\) from the assumption. Hence \(K \Vdash _0 B f {\downarrow }g = B {\downarrow }f g = B f g {\downarrow }\), and therefore \(I \Vdash _0 B\), which is what we had to show.

Case Ni-C. Then \(I \Vdash A {\downarrow }\) has a shorter derivation than \(I \Vdash A\); moreover, for \(f :J \rightarrow I\) we have \(J \vdash u f \succ uf {\downarrow }^{Af} : A f\) so also \(J \vdash u f \succ uf {\downarrow }^{A f} : A {\downarrow }f\) since \(J \vdash A f = A {\downarrow }f\). By Lemma 6 (1), \(I \Vdash A = A {\downarrow }\) so also \(J \Vdash uf {\downarrow }: A {\downarrow }f\) and \(J \Vdash uf {\downarrow }= u {\downarrow }f : A {\downarrow }f\), and hence by IH, \(I \Vdash u : A {\downarrow }\) and \(I \Vdash u = u {\downarrow }: A {\downarrow }\), so also \(I \Vdash u : A\) and \(I \Vdash u = u {\downarrow }: A\) using \(I \Vdash A = A {\downarrow }\) again. \(\square \)

4 Soundness

The aim of this section is to prove canonicity as stated in the introduction. We will do so by showing that every instance, under computable substitutions, of a judgment derivable in cubical type theory is computable (allowing free name variables); this is the content of the Soundness Theorem below.

We first extend the computability predicates to contexts and substitutions.

assuming \(\varGamma \vdash {}\).

by induction on \({} \Vdash \varGamma \) assuming \(I \vdash \sigma : \varGamma \).

by induction on \({} \Vdash \varGamma \), assuming \(I \Vdash \sigma : \varGamma \), \(I \Vdash \tau : \varGamma \), and \(I \vdash \sigma = \tau : \varGamma \).

We write \(I \Vdash r : \mathbb {I}\) for \(r \in \mathbb {I}(I)\), \(I \Vdash r = s : \mathbb {I}\) for \(r = s \in \mathbb {I}(I)\), and likewise \(I \Vdash \varphi : \mathbb {F}\) for \(\varphi \in \mathbb {F}(I)\), \(I \Vdash \varphi = \psi : \mathbb {F}\) for \(\varphi = \psi \in \mathbb {F}(I)\). In the next definition we also allow A to be \(\mathbb {F}\) or \(\mathbb {I}\), and correspondingly allow a and b to range over interval and face lattice elements.

Definition 1

Remark 3

1. For each I we have \({}\Vdash I\), and \(J \Vdash \sigma : I\) iff \(\sigma :J \rightarrow I\); likewise, \(J \Vdash \sigma = \tau : I\) iff \(\sigma = \tau \).

2. For computability of contexts and substitutions, monotonicity and partial equivalence properties hold analogously to those for computability of types and terms.

3. Given \(\Vdash \varGamma \) and \(I \Vdash \sigma = \tau : \varGamma \), then for any \(\varGamma \vdash \varphi : \mathbb {F}\) we get \(\varphi \sigma = \varphi \tau \in \mathbb {F}(I)\) since \(\varphi \sigma \) and \(\varphi \tau \) only depend on the name assignments of \(\sigma \) and \(\tau \) which have to agree by \(I \Vdash \sigma = \tau : \varGamma \). Similarly for \(\varGamma \vdash r : \mathbb {I}\).

4. The definition of “\(\models \)” slightly deviates from the approach we had in the definition of “\(\Vdash \)” as, say, \(\varGamma \models A\) is defined in terms of \(\varGamma \models A = A\). Note that by the properties we already established about “\(\Vdash \)” we get that \(\varGamma \models A = B\) implies \(\varGamma \models A\) and \(\varGamma \models B\) (given we know \(\varGamma \vdash A\) and \(\varGamma \vdash B\), respectively); and, likewise, \(\varGamma \models a = b : A\) entails \(\varGamma \models a : A\) and \(\varGamma \models b : A\) (given \(\varGamma \vdash a : A\) and \(\varGamma \vdash b : A\), respectively). Also, note that in the definition of, say, \(\varGamma \models A\), the condition

$$\begin{aligned} \forall I, \sigma ,\tau (I \Vdash \sigma = \tau : \varGamma \Rightarrow I \Vdash A \sigma = A \tau ) \end{aligned}$$

implies

$$\begin{aligned} \forall I, \sigma (I \Vdash \sigma : \varGamma \Rightarrow I \Vdash A \sigma ). \end{aligned}$$

In fact, we will often have to establish the latter condition first when showing the former.

5. \(I \models A = B\) iff \(I \Vdash A = B\), and \(I \models a = b : A\) iff \(I \Vdash A\) and \(I \Vdash a = b : A\); moreover, given \(I \models A\) and \(I, x : A \vdash B\), then \(I, x: A \models B\) iff

$$ \begin{aligned}&\forall f :J \rightarrow I \forall u (J \Vdash u : A f \Rightarrow J \Vdash B (f, x/u )) \quad \, \& \,\\&\forall f :J \rightarrow I \forall u,v (J \Vdash u = v : A f \Rightarrow J \Vdash B (f,x/u ) = B(f,x/v )) \end{aligned}$$

(Note that the second formula in the above display implies the first.) Thus the premises of Pi-C and Si-C are simply \(I \models A\) and \(I, x :A \models B\). Also, \(I,\varphi \Vdash A = B\) iff \(I,\varphi \models A = B\); and \(I,\varphi \models a = b : A\) iff \(I,\varphi \Vdash A\) and \(I,\varphi \Vdash a = b : A\).

6. By Lemma 7 we get that \(\varGamma \models \cdot = \cdot \), \(\varGamma \models \cdot = \cdot : A\), and \(\varGamma \models \cdot = \cdot : \varDelta \) are partial equivalence relations.

Theorem 1

(Soundness) \(\varGamma \vdash \mathscr {J}\Rightarrow \varGamma \models \mathscr {J}\)

The proof of the Soundness Theorem spans the rest of this section. We will mainly state and prove congruence rules, as the proofs of the other rules are special cases.

Lemma 10

The context formation rules are sound:

Proof

Immediate by definition. \(\square \)

Lemma 11

Given \(\varGamma \models \), \(\varGamma \vdash r : \mathbb {I}\), \(\varGamma \vdash s : \mathbb {I}\), \(\varGamma \vdash \varphi : \mathbb {F}\), and \(\varGamma \vdash \psi : \mathbb {F}\) we have:

1. \(\varGamma \vdash r = s : \mathbb {I}\Rightarrow \varGamma \models r = s : \mathbb {I}\)

2. \(\varGamma \vdash \varphi = \psi : \mathbb {F}\Rightarrow \varGamma \models \varphi = \psi : \mathbb {F}\)

Proof

(1) By virtue of Remark 3 (3) it is enough to show \(r \sigma = s \sigma \in \mathbb {I}(I)\) for \(I \Vdash \sigma : \varGamma \). But then by applying the substitution \(I \vdash \sigma : \varGamma \) we get \(I \vdash r\sigma = s \sigma : \mathbb {I}\), and thus \(r \sigma = s \sigma \in \mathbb {I}(I)\) since the context I does not contain restrictions. The proof of (2) is analogous. \(\square \)

Lemma 12

The rule for type conversion is sound:

Proof

Suppose \(I \Vdash \sigma = \tau : \varGamma \). By assumption we have \(I \Vdash a \sigma = b \tau : A \sigma \). Moreover also \(I \Vdash \sigma = \sigma : \varGamma \), so \(I \Vdash A \sigma = B \sigma \), and hence \(I \Vdash a \sigma = b \tau : B \sigma \) by Lemma 7 which was what we had to prove. \(\square \)

Lemma 13

Proof

Immediate by definition. \(\square \)

Lemma 14

The rules for \(\varPi \)-types are sound:

-

1.

-

2.

-

3.

-

4.

-

5.

Proof

Abbreviate \((x: A) \rightarrow B\) by C. We will make use of Remark 2.

(1) It is enough to prove this in the case where \(\varGamma \) is of the form I, in which case this directly follows by Pi-E.

(2) Suppose \(\varGamma \models A = A'\) and \(\varGamma , x : A \models t = t' : B\); this entails \(\varGamma , x: A \models B\). For \(I \Vdash \sigma = \tau : \varGamma \) we show \(I \Vdash (\lambda x : A. t) \sigma = (\lambda x : A'. t') \tau : C \sigma \). For this let \(J \Vdash u = v : A \sigma f\) where \(f :J \rightarrow I\). Then also \(J \Vdash u = v : A' \tau f\),

Moreover, \(J \Vdash (\sigma f, x/u ) = (\tau f, x/v ): \varGamma , x :A\), and so \(J \Vdash B(\sigma f, x/u ) = B(\tau f, x/v )\) and

which gives

by applying the Expansion Lemma twice, and thus also

which is what we had to show.

(3) For \(I \Vdash \sigma = \tau : \varGamma \) we get \(I \Vdash w \sigma = w' \tau : C \sigma \) and \(I \Vdash u \sigma = u' \tau : A \sigma \); so also \(I \Vdash w \sigma : C \sigma \), and therefore \(I \Vdash (w \, u) \sigma = w \sigma \, u'\tau = (w'\, u') \tau : B (\sigma , x/u \sigma )\).

(4) Given \(I \Vdash \sigma = \tau : \varDelta \) we get, like in (2), \(I \Vdash (\lambda x : A. t) \sigma \, u \sigma = t (\sigma , x/u\sigma ) : B (\sigma , x/u \sigma )\) using the Expansion Lemma; moreover, \(I \Vdash (\sigma , x/u \sigma ) = (\tau , x/u \tau ) : \varGamma , x : A\), hence

(5) Suppose \(I \Vdash \sigma = \tau : \varGamma \) and \(J \Vdash u : A \sigma f\) for \(f :J \rightarrow I\). We have to show \(J \Vdash w \sigma f \, u = w' \tau f \, u : B(\sigma f, x/u )\). We have

and thus, by the assumption \(\varGamma , x : A \models w \, x = w' \, x : B\), we get

Since x appears in neither w nor \(w'\), this was what we had to prove. \(\square \)

Lemma 15

The rules for \(\varSigma \)-types are sound:

-

1.

-

2.

-

3.

-

4.

-

5.

Lemma 16

Given \(I, x : \mathsf {N}\models C\) we have:

-

1.

-

2.

Proof

By simultaneous induction on \(I \Vdash u : \mathsf {N}\) and \(I \Vdash u = u' : \mathsf {N}\).

Case \(I \Vdash 0 : \mathsf {N}\). We have \(I \vdash \mathsf {natrec}\, 0 \, z \, s \succ _\mathsf {s}z : C (x/0)\) so (1) follows from the Expansion Lemma.

Case \(I \Vdash 0 = 0 : \mathsf {N}\). (2) follows immediately from (1) and \(I \Vdash z = z' : C (x/0)\).

Case \(I \Vdash {{\mathrm{\mathsf {S}}}}u : \mathsf {N}\) from \(I \Vdash u : \mathsf {N}\). We have

and \(I \Vdash s \, u \, (\mathsf {natrec}\, u \, z \, s) : C (x/{{\mathrm{\mathsf {S}}}}u)\) by IH, and using that u and s are computable. Hence we are done by the Expansion Lemma.

Case \(I \Vdash {{\mathrm{\mathsf {S}}}}u = {{\mathrm{\mathsf {S}}}}u' : \mathsf {N}\) from \(I \Vdash u = u' : \mathsf {N}\). (2) follows from (1) and \( I \Vdash s = s' : (x : \mathsf {N}) \rightarrow C \rightarrow C (x/{{\mathrm{\mathsf {S}}}}x)\), \(I \Vdash u = u' : \mathsf {N}\), and the IH.

Case \(I \Vdash u : \mathsf {N}\) for u non-introduced. For \(f :J \rightarrow I\) we have

Moreover, we have \(J \Vdash u f {\downarrow }: \mathsf {N}\) and \(J \Vdash u f {\downarrow }= u {\downarrow }f : \mathsf {N}\) with shorter derivations (and thus also \(J \Vdash C (f, x/uf {\downarrow }) = C (x/u {\downarrow }) f\)), hence by IH

which yields the claim by the Expansion Lemma.

Case \(I \Vdash u = u' : \mathsf {N}\) for u or \(u'\) non-introduced. We have

by either (1) (if u is non-introduced) or by reflexivity (if u is an introduction); likewise for \(u'\). So with the IH for \(I \Vdash u {\downarrow }= u' {\downarrow }: \mathsf {N}\) we obtain

which is what we had to show. \(\square \)

We write \(\underline{n}\) for the numeral \({{\mathrm{\mathsf {S}}}}^n 0\) where \(n \in \mathbb {N}\).

Lemma 17

If \(I \Vdash u : \mathsf {N}\), then \(I \Vdash u = \underline{n} : \mathsf {N}\) (and hence also \(I \vdash u = \underline{n} : \mathsf {N}\)) for some \(n \in \mathbb {N}\).

Proof

By induction on \(I \Vdash u : \mathsf {N}\). The cases for zero and successor are immediate. If u is non-introduced, then \(I \Vdash u {\downarrow }= \underline{n} : \mathsf {N}\) for some \(n \in \mathbb {N}\) by IH. By Lemma 8 (2) and transitivity we conclude \(I \Vdash u = \underline{n} : \mathsf {N}\). \(\square \)
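To make the reduction steps used in Lemma 16 and the conclusion of Lemma 17 concrete, here is a minimal Python sketch of weak-head reduction for the natural-number fragment alone. It is only an illustration under stated assumptions: terms are closed and built from 0, S, and natrec; the step argument s is represented as a Python function of the predecessor and the recursive result (a higher-order abstract syntax shortcut); types, names, and judgmental equality are ignored; and the definition of pred via natrec, like the names `whnf`, `to_int`, `numeral`, `pred`, and `add`, is hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass(frozen=True)
class Zero:
    pass


@dataclass(frozen=True)
class Succ:
    prev: "Term"


@dataclass(frozen=True)
class Natrec:
    scrut: "Term"
    z: "Term"
    # step function s, applied to the predecessor u and the recursive call
    s: Callable[["Term", "Term"], "Term"]


Term = Union[Zero, Succ, Natrec]


def whnf(t: Term) -> Term:
    """Reduce to weak-head normal form, i.e. to 0 or a successor."""
    while isinstance(t, Natrec):
        head = whnf(t.scrut)
        if isinstance(head, Zero):
            t = t.z  # natrec 0 z s  >  z
        else:
            # natrec (S u) z s  >  s u (natrec u z s)
            t = t.s(head.prev, Natrec(head.prev, t.z, t.s))
    return t


def to_int(t: Term) -> int:
    """Read off the n with t = S^n 0 by repeatedly taking weak-head normal forms."""
    n = 0
    t = whnf(t)
    while isinstance(t, Succ):
        n += 1
        t = whnf(t.prev)
    return n


def numeral(n: int) -> Term:
    """The numeral S^n 0."""
    t: Term = Zero()
    for _ in range(n):
        t = Succ(t)
    return t


def pred(u: Term) -> Term:
    """Hypothetical pred u := natrec u 0 (lambda v r. v)."""
    return Natrec(u, Zero(), lambda v, _r: v)


def add(m: Term, n: Term) -> Term:
    """Addition by recursion on the first argument."""
    return Natrec(m, n, lambda _v, r: Succ(r))
```

For example, `to_int(add(numeral(2), numeral(3)))` alternates the two natrec rules with reading off successors and yields 5. In this closed toy fragment every term reaches a numeral; in the paper the corresponding statement for computable terms in name contexts is Lemma 17.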

Lemma 18

\(I \Vdash \cdot = \cdot : \mathsf {N}\) is discrete, i.e., if \(I \Vdash u : \mathsf {N}\), \(I \Vdash v : \mathsf {N}\), and \(J \Vdash u f = v g : \mathsf {N}\) for some \(f, g :J \rightarrow I\), then \(I \Vdash u = v : \mathsf {N}\).

Proof

By Lemma 17, we have \(I \Vdash u = \underline{n} : \mathsf {N}\) and \(I \Vdash v = \underline{m} : \mathsf {N}\) for some \(n,m \in \mathbb {N}\), and thus \(J \Vdash \underline{n} = u f = v g = \underline{m} : \mathsf {N}\), i.e., \(J \Vdash \underline{n} = \underline{m} : \mathsf {N}\) and hence \(n = m\) which yields \(I \Vdash u = v : \mathsf {N}\). \(\square \)

Lemma 19

The rules for \(\mathsf {Path}\)-types are sound:

-

1.

-

2.

-

3.

-

4.

-

5.

-

6.

Proof

(1) Follows easily by definition.

(2) For \(I \Vdash \sigma = \sigma ' : \varGamma \) we have to show

For \(f :J \rightarrow I\) and \(r \in \mathbb {I}(J)\) we have \(J \Vdash (\sigma f, i/r ) = (\sigma ' f, i/r ) : \varGamma , i : \mathbb {I}\) and

and moreover \(J \Vdash t (\sigma f, i/r ) = t' (\sigma ' f, i/r ) : A \sigma f\) and \(J \Vdash A \sigma f = A \sigma ' f\) by assumption. Hence the Expansion Lemma yields

in particular, taking, say, f to be the identity and \(r = 0\), we get \(I \Vdash (\langle i \rangle t) \sigma \, 0 = t (\sigma , i/0 ) : A \sigma \) and \(I \Vdash (\langle i \rangle t') \sigma ' \, 0 = t' (\sigma ', i/0 ) = t (\sigma , i/0 ) : A \sigma \). Hence (10) follows.

(3) Supposing \(I \Vdash \sigma = \sigma ' : \varGamma \) we have to show \(I \Vdash (w \sigma ) \, (r \sigma ) = (w'\sigma ') \, (r' \sigma ') : A \sigma \). We have \(I \Vdash w \sigma = w'\sigma ' : \mathsf {Path}\,A \sigma \,u \sigma \,v \sigma \) and \(r \sigma = r' \sigma '\), hence the claim follows by definition.

(4) Let \(I \Vdash \sigma = \sigma ' : \varGamma \); we have to show, say, \(I \Vdash w \sigma \, 0 = u \sigma ' : A \sigma \). First, we get \(I \Vdash w \sigma : \mathsf {Path}\,A \sigma \, u\sigma \, v \sigma \). Since \(\varGamma \models w : \mathsf {Path}\,A\,u\,v\) we also have \(\varGamma \models \mathsf {Path}\,A\,u\,v\), hence

Hence we also obtain \(I \Vdash w \sigma : \mathsf {Path}\,A \sigma '\, u \sigma '\, v \sigma '\), and thus \(I \Vdash w \sigma \, 0 = u \sigma ' : A \sigma '\). But (11) also yields \(I \Vdash A \sigma = A \sigma '\) by definition, so \(I \Vdash w \sigma \, 0 = u \sigma ' : A \sigma \), which is what we had to show.

(5) Similar to (2) using the Expansion Lemma.

(6) For \(I \Vdash \sigma = \sigma ' : \varGamma \), \(f :J \rightarrow I\), and \(r \in \mathbb {I}(J)\), we have \(J \Vdash (\sigma f, i/r ) = (\sigma 'f,i/r ) : \varGamma ,i : \mathbb {I}\), and thus

But \((w \, i) (\sigma f, i/r )\) is \(w \sigma f ~ r\), and \((w' \, i) (\sigma 'f, i/r )\) is \(w' \sigma ' f ~ r\), so (12) is what we had to show. \(\square \)

Lemma 20

Let \(\varphi _i \in \mathbb {F}(I)\) and \(\varphi _1 \vee \dots \vee \varphi _n = 1\).

1. Let \(I, \varphi _i \Vdash _\ell A_i\) and \(I, \varphi _i \wedge \varphi _j \Vdash _\ell A_i = A_j\) for all i, j; then

   (a) \(I \Vdash _\ell [ \varphi _1 ~A_1, \dots , \varphi _n ~A_n]\), and

   (b) \(I \Vdash _\ell [ \varphi _1 ~A_1, \dots , \varphi _n ~A_n] = A_k\) whenever \(\varphi _k = 1\).

2. Let \(I \Vdash _\ell A\), \(I,\varphi _i \Vdash _\ell t_i : A\), and \(I,\varphi _i \wedge \varphi _j \Vdash _\ell t_i = t_j : A\) for all i, j; then

   (a) \(I \Vdash _\ell [ \varphi _1 ~t_1, \dots , \varphi _n ~t_n] : A\), and

   (b) \(I \Vdash _\ell [ \varphi _1 ~t_1, \dots , \varphi _n ~t_n] = t_k : A\) whenever \(\varphi _k = 1\).

Proof

(1) Let us abbreviate \([ \varphi _1 ~A_1, \dots , \varphi _n ~A_n]\) by A. Since A is non-introduced, we have to show \(J \Vdash A f {\downarrow }\) and \(J \Vdash A f {\downarrow }= A {\downarrow }f\) for \(f :J \rightarrow I\). For the former observe that \(A f {\downarrow }\) is \(A_k f\) with k minimal such that \(\varphi _k f = 1\). For the latter use that \(J \Vdash A_k f = A_l f\) if \(\varphi _k f = 1\) and \(\varphi _l f = 1\), since \(I,\varphi _k \wedge \varphi _l \Vdash A_k = A_l\).

(2) Let us write t for \([ \varphi _1 ~t_1, \dots , \varphi _n ~t_n]\). By virtue of the Expansion Lemma, it suffices to show \(J \Vdash t f {\downarrow }: A f\) and \(J \Vdash t f {\downarrow }= t {\downarrow }f : A f\) for \(f :J \rightarrow I\). The proof is just like the proof for types given above. \(\square \)
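The selection rule in the proof above (a system reduces to the branch with minimal k such that \(\varphi _k f = 1\)) can be sketched in Python as follows. This is a sketch under explicit assumptions: a face formula is kept in disjunctive normal form as a list of conjunctions, each conjunction a map from names to the endpoint 0 or 1 (so \((i=0) \wedge (i=1)\) cannot even be written down); only face maps sending names to 0 or 1 are handled, not general substitutions such as \(i \mapsto j \wedge k\); and the helper names `restrict`, `is_one`, and `reduce_system` are hypothetical. In this representation a formula equals 1 exactly when one of its disjuncts is the empty conjunction.

```python
from typing import Dict, List, Optional, Sequence, Tuple, TypeVar

# A conjunction of atoms (i = d), stored as a map name -> endpoint d in {0, 1}.
Conj = Dict[str, int]
# A face formula in disjunctive normal form: a list of conjunctions.
Face = List[Conj]

T = TypeVar("T")


def restrict_conj(c: Conj, f: Dict[str, int]) -> Optional[Conj]:
    """Apply the face map f (names -> 0/1) to one conjunction.

    Returns None if some atom is sent to the wrong endpoint (the conjunction
    becomes 0); otherwise returns the atoms that f leaves untouched."""
    rest: Conj = {}
    for name, d in c.items():
        if name in f:
            if f[name] != d:
                return None
        else:
            rest[name] = d
    return rest


def restrict(phi: Face, f: Dict[str, int]) -> Face:
    """Compute phi f, discarding disjuncts that became 0."""
    out: Face = []
    for c in phi:
        r = restrict_conj(c, f)
        if r is not None:
            out.append(r)
    return out


def is_one(phi: Face) -> bool:
    """phi = 1 exactly when some disjunct is the empty conjunction."""
    return any(len(c) == 0 for c in phi)


def reduce_system(system: Sequence[Tuple[Face, T]], f: Dict[str, int]) -> Optional[T]:
    """Return the branch A_k for the least k with phi_k f = 1, or None if
    no branch is forced (the system does not reduce)."""
    for phi, branch in system:
        if is_one(restrict(phi, f)):
            return branch
    return None
```

Since a join is just list concatenation here, `is_one(phi + psi)` holds iff `is_one(phi)` or `is_one(psi)`. This mirrors the fact used in Lemma 21 that \(\varphi _1 \vee \dots \vee \varphi _n = 1\) forces \(\varphi _k = 1\) for some k, so under the hypothesis of Lemma 20 `reduce_system` never returns None. Note also that \((i=0) \vee (i=1)\) is not 1, even though it holds at both endpoints.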

Lemma 21

Given \(\varGamma \models \varphi _1 \vee \dots \vee \varphi _n = 1 : \mathbb {F}\), then:

Proof

Let \(\varphi = \varphi _1 \vee \dots \vee \varphi _n\). Suppose, say, that \(\mathscr {J}\) is a typing judgment of the form A. For \(I \Vdash \sigma : \varGamma \) we have \(\varphi \sigma = 1\), so \(\varphi _k \sigma = 1\) for some k, hence \(I \Vdash A \sigma \) by \(\varGamma ,\varphi _k \models A\). Now let \(I \Vdash \sigma = \tau : \varGamma \); then \(\varphi _i \sigma = \varphi _i \tau \) (as \(\sigma \) and \(\tau \) assign the same elements to the interval variables), so \(\varphi \sigma = \varphi \tau = 1\) yields \(\varphi _k \sigma = \varphi _k \tau = 1\) for some common k, and thus \(I \Vdash A \sigma = A \tau \) follows from \(\varGamma , \varphi _k \models A\). The other judgment forms are similar. \(\square \)

For \(I \Vdash A\) and \(I, \varphi \Vdash v : A\) we write \(I \Vdash u : A [\varphi \mapsto v]\) for \(I \Vdash u : A\) and \(I,\varphi \Vdash u = v : A\). Likewise, \(I \Vdash u = w : A [\varphi \mapsto v]\) means \(I \Vdash u = w : A\) and \(I,\varphi \Vdash u = v : A\) (in this case \(I,\varphi \Vdash w = v : A\) also follows). We use similar notations for “\(\models \)”.

Lemma 22

Let \(\varphi \in \mathbb {F}(I)\), \(I \Vdash _\ell A\), \(I,\varphi \Vdash _\ell T\), and \(I,\varphi \Vdash _\ell w : \mathsf {Equiv}\,T\,A\), and write B for \(\mathsf {Glue}\,[\varphi \mapsto (T,w)]\,A\). Then:

1. \(I \Vdash _\ell B\) and \(I,\varphi \Vdash _\ell B = T\).

2. If \(I \Vdash _\ell A = A'\), \(I, \varphi \Vdash _\ell T = T'\), \(I, \varphi \Vdash _\ell w = w' : \mathsf {Equiv}\,T\,A\), then \(I \Vdash _\ell B = \mathsf {Glue}\,[\varphi \mapsto (T',w')]\,A'\).

3. If \(I \Vdash _\ell u : B\) and \(I, \varphi \Vdash _\ell w = w' : \mathsf {Equiv}\,T\,A\), then \(I \Vdash _\ell \mathsf {unglue}\,[\varphi \mapsto w']\, u : A [\varphi \mapsto w'.1 \, u]\) and \(I \Vdash _\ell \mathsf {unglue}\,[\varphi \mapsto w]\,u = \mathsf {unglue}\,[\varphi \mapsto w']\,u : A\).

4. If \(I \Vdash _\ell u = u' : B\), then

$$\begin{aligned} I \Vdash _\ell \mathsf {unglue}\,[\varphi \mapsto w]\,u = \mathsf {unglue}\,[\varphi \mapsto w]\,u' : A. \end{aligned}$$

5. If \(I, \varphi \Vdash _\ell t = t' : T\) and \(I \Vdash _\ell a = a' : A[\varphi \mapsto w.1 \, t]\), then

   (a) \(I \Vdash _\ell \mathsf {glue}\,[\varphi \mapsto t]\,a = \mathsf {glue}\,[\varphi \mapsto t']\,a' : B\),

   (b) \(I,\varphi \Vdash _\ell \mathsf {glue}\,[\varphi \mapsto t]\,a = t : T\), and

   (c) \(I \Vdash _\ell \mathsf {unglue}\,[\varphi \mapsto w]\, (\mathsf {glue}\,[\varphi \mapsto t]\,a) = a : A\).

6. If \(I \Vdash _\ell u : B\), then \(I \Vdash _\ell u = \mathsf {glue}\,[\varphi \mapsto u] (\mathsf {unglue}\,[\varphi \mapsto w]\,u) : B\).

Proof

(1) Let us first prove \(I,\varphi \Vdash B\) and \(I,\varphi \Vdash B = T\); but in \(I,\varphi \), \(\varphi \) becomes 1 so w.l.o.g. let us assume \(\varphi = 1\); then B is non-introduced and \(I \vdash B \succ _\mathsf {s}T\) so \(I \Vdash B\) from \(I \Vdash T\). For \(I \Vdash B = T\) we have to show \(J \Vdash B f {\downarrow }= T f {\downarrow }\) for \(f :J \rightarrow I\). But \(B f {\downarrow }\) is Tf so this is an instance of Lemma 8.

It remains to prove \(I \Vdash B\) in the case where \(\varphi \ne 1\); for this, use Gl-C with the already proven \(I,\varphi \Vdash B\).

(2) Write \(B'\) for \(\mathsf {Glue}\,[\varphi \mapsto (T',w')]\,A'\). In case \(\varphi \ne 1\) we only have to show \(I,\varphi \Vdash B = B'\) and can then apply Gl-E. But restricted to \(I,\varphi \), \(\varphi \) becomes 1, and hence we only have to prove the statement for \(\varphi = 1\). But then by (1) we have \(I \Vdash B = T = T' = B'\).

(3) In case \(\varphi \ne 1\), \(I \Vdash \mathsf {unglue}\,[\varphi \mapsto w']\, u : A\) and

are immediate by definition. Using the Expansion Lemma (and \(I \vdash \mathsf {unglue}\,[\varphi \mapsto w']\,u \succ _\mathsf {s}w'.1 \, u : A\) for \(\varphi = 1\)) we obtain \(I,\varphi \Vdash \mathsf {unglue}\,[\varphi \mapsto w']\,u = w'.1 \, u : A\), which also shows \(I \Vdash \mathsf {unglue}\,[\varphi \mapsto w']\,u : A\) as well as (13) in case \(\varphi = 1\).

(4) In case \(\varphi \ne 1\), this is by definition. For \(\varphi = 1\) we have

(5) Let us write b for \(\mathsf {glue}\,[\varphi \mapsto t]\,a\), and \(b'\) for \(\mathsf {glue}\,[\varphi \mapsto t']\,a'\). We first show \(I \Vdash b : B\) and \(I,\varphi \Vdash b= t : B\) (similarly for \(b'\)).

In case \(\varphi = 1\), \(I \vdash b \succ _\mathsf {s}t : T\) so by the Expansion Lemma \(I \Vdash b : T\) and \(I \Vdash b = t : T\), and hence also \(I \Vdash b : B\) and \(I \Vdash b = t : B\) by (1). This also proves (5b).

Now let \(\varphi \) be arbitrary; we claim

(thereby proving (5c)). We will apply the Expansion Lemma to do so; for \(f :J \rightarrow I\) let us analyze the reduct of \((\mathsf {unglue}\,[\varphi \mapsto w]\,b) f\):

Note that, if \(\varphi f = 1\), we have, as in the case \(\varphi =1\), \(J \Vdash b f = t f : B f\) and hence \(J \Vdash wf.1 \, b f = wf.1 \, t f = af : A f\). This ensures \(J \Vdash (\mathsf {unglue}\,[\varphi \mapsto w]\,b) f {\downarrow }= (\mathsf {unglue}\,[\varphi \mapsto w]\,b) {\downarrow }f : A f\), and thus the Expansion Lemma applies and we obtain \(I \Vdash \mathsf {unglue}\,[\varphi \mapsto w]\,b = (\mathsf {unglue}\,[\varphi \mapsto w]\,b) {\downarrow }: A\); but, as we have seen, in either case (\(\varphi = 1\) or not) \(I \Vdash (\mathsf {unglue}\,[\varphi \mapsto w]\, b) {\downarrow }= a : A\), proving the claim.

Now let \(\varphi \ne 1\), \(f :J \rightarrow I\), and \(J \Vdash w'=wf : \mathsf {Equiv}\,Tf\,Af\). We can use the claim for Bf and \(\mathsf {Glue}\,[\varphi f \mapsto (Tf,w')]\,Af\) (which is forced equal to Bf by (2)) and obtain both

so the left-hand sides are equal; moreover, \(I,\varphi \Vdash b : B\) (as in the case \(\varphi =1\)), and hence \(I \Vdash b : B\). Likewise one shows \(I \Vdash b' : B\).

It remains to show \(I \Vdash b = b' : B\). If \(\varphi =1\), we already showed \(I \Vdash b = t : T\) and \(I \Vdash b' = t' : T\), so the claim follows from \(I \Vdash t = t' : T\) and \(I \Vdash T = B\). Let us now assume \(\varphi \ne 1\). We immediately get \(I, \varphi \Vdash b = t = t' = b' : B\) as for \(\varphi =1\). Moreover, we showed above that \(I \Vdash \mathsf {unglue}\,[\varphi \mapsto w]\,b = a : A\) and \(I \Vdash \mathsf {unglue}\,[\varphi \mapsto w]\,b' = a' : A\). Hence we obtain

from \(I \Vdash a = a' : A\).

(6) In case \(\varphi = 1\), this follows from (5b). In case \(\varphi \ne 1\), we have to show

The former is an instance of (5c); the latter follows from (5b). \(\square \)
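The proof of Lemma 22 relies on a handful of reduction steps for Glue types and their terms: \(B \succ T\), \(\mathsf {glue}\,[\varphi \mapsto t]\,a \succ t\), and \(\mathsf {unglue}\,[\varphi \mapsto w]\,u \succ w.1 \, u\) when \(\varphi = 1\), while for \(\varphi \ne 1\) unglue of a glue term yields a. The Python sketch below collects these cases in one step function. It makes simplifying assumptions: \(\varphi \) is collapsed to a Boolean flag recording whether \(\varphi = 1\) in \(\mathbb {F}(I)\) (the flag being false means \(\varphi \ne 1\), not \(\varphi = 0\)); w.1 is modeled as a Python function; nothing is type-checked; and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass(frozen=True)
class GlueTy:
    """Glue [phi -> (T, w)] A, with phi reduced to the flag 'phi = 1'."""
    phi_is_one: bool
    T: Any
    w1: Callable[[Any], Any]  # first component w.1 of the equivalence w
    A: Any


@dataclass(frozen=True)
class GlueTm:
    """glue [phi -> t] a"""
    phi_is_one: bool
    t: Any
    a: Any


@dataclass(frozen=True)
class Unglue:
    """unglue [phi -> w] b"""
    phi_is_one: bool
    w1: Callable[[Any], Any]
    b: Any


def step(e: Any) -> Optional[Any]:
    """One weak-head step, or None if e is already in weak-head normal form."""
    if isinstance(e, GlueTy) and e.phi_is_one:
        return e.T                      # Glue [1 -> (T, w)] A  >  T
    if isinstance(e, GlueTm) and e.phi_is_one:
        return e.t                      # glue [1 -> t] a  >  t
    if isinstance(e, Unglue):
        if e.phi_is_one:
            return e.w1(e.b)            # unglue [1 -> w] b  >  w.1 b
        if isinstance(e.b, GlueTm):
            return e.b.a                # unglue [phi -> w] (glue [phi -> t] a)  >  a
        inner = step(e.b)               # otherwise try to reduce the argument
        if inner is not None:
            return Unglue(e.phi_is_one, e.w1, inner)
    return None
```

The case split on `phi_is_one` corresponds to the case split on \(\varphi = 1\) versus \(\varphi \ne 1\) that runs through the proof of Lemma 22.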

Lemma 23

Let B be \(\mathsf {Glue}\, [ \varphi \mapsto (T, w)] \, A\) and suppose \(I \Vdash B\) is derived via Gl-C. Then also \(I,\varphi \Vdash T\), and the derivations of \(I,\varphi \Vdash T\) are all proper sub-derivations of \(I \Vdash B\) (and hence shorter).

Proof

We have the proper sub-derivations \(I,\varphi \Vdash B\). For each \(f :J \rightarrow I\) with \(\varphi f = 1\), we have that Bf is non-introduced with reduct Tf so the derivation of \(J \Vdash B f\) has a derivation of \(J \Vdash T f\) as sub-derivation according to Ni-C. \(\square \)

For the next proof we need a small syntactic observation. Given \(\varGamma \vdash \alpha : \mathbb {F}\) irreducible, there is an associated substitution \({\bar{\alpha }} :\varGamma _\alpha \rightarrow \varGamma \) where \(\varGamma _\alpha \) skips the names of \(\alpha \) and applies a corresponding \({\bar{\alpha }}\) to the types and restrictions (e.g., if \(\varGamma \) is \(i:\mathbb {I}, x : A, j : \mathbb {I}, \varphi \) and \(\alpha \) is \((i=0)\), then \(\varGamma _\alpha \) is \(x : A(i0),j:\mathbb {I},\varphi (i0)\)). Since \(\alpha {\bar{\alpha }} = 1\) we even have \({\bar{\alpha }} :\varGamma _\alpha \rightarrow \varGamma , \alpha \). The latter has an inverse (w.r.t. judgmental equality) given by the projection \(\mathsf {p}:\varGamma ,\alpha \rightarrow \varGamma _\alpha \) (i.e., \(\mathsf {p}\) assigns each variable in \(\varGamma _\alpha \) to itself): in the context \(\varGamma ,\alpha \), \({\bar{\alpha }} \mathsf {p}\) is the identity, and \(\mathsf {p}{\bar{\alpha }}\) is the identity since the variables in \(\varGamma _\alpha \) are not changed by \({\bar{\alpha }}\).
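The construction of \(\varGamma _\alpha \) from an irreducible face \(\alpha \) is purely syntactic and can be sketched in Python. The sketch assumes a hypothetical representation: context entries are tuples ("name", i), ("var", x, A), or ("restr", phi), with types and restrictions kept as opaque strings; \(\alpha \) is a map from names to endpoints; and applying \({\bar{\alpha }}\) is recorded symbolically as a suffix such as (i0), following the notation A(i0). The function name `face_context` is illustrative only.

```python
from typing import Dict, List, Tuple

# Context entries: ("name", i), ("var", x, A) or ("restr", phi), with the
# type A and the restriction phi kept as opaque strings.
Entry = Tuple[str, ...]


def face_context(ctx: List[Entry], alpha: Dict[str, int]) -> List[Entry]:
    """Compute Gamma_alpha for an irreducible face alpha (name -> endpoint):
    drop the names fixed by alpha and record alpha-bar on every later type
    and restriction as a suffix such as (i0)."""
    bound: List[str] = []  # names of alpha seen so far, in context order
    out: List[Entry] = []
    for entry in ctx:
        kind = entry[0]
        if kind == "name":
            if entry[1] in alpha:
                bound.append(entry[1])  # skipped in Gamma_alpha
            else:
                out.append(entry)
            continue
        # An entry can only mention names bound before it; the substitution
        # is recorded symbolically even if the entry does not use the name.
        suffix = "".join(f"({n}{alpha[n]})" for n in bound)
        if kind == "var":
            out.append(("var", entry[1], entry[2] + suffix))
        else:  # "restr"
            out.append(("restr", entry[1] + suffix))
    return out
```

On the example from the text, the context \(i:\mathbb {I}, x : A, j : \mathbb {I}, \varphi \) with \(\alpha = (i=0)\) yields the entries for \(x : A(i0), j:\mathbb {I}, \varphi (i0)\).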

Remark 4

We can use the above observation to show that the condition \(I, \varphi \vdash \mathscr {J}\) in the definition of \(I,\varphi \Vdash _\ell \mathscr {J}\) (in Sect. 3) already follows from the other, i.e., \(J \Vdash _\ell \mathscr {J}f\) for all \(f :J \rightarrow I,\varphi \): We have to show \(I,\alpha \vdash \mathscr {J}\) for each irreducible \(\alpha \le \varphi \). But we have \(I_\alpha \Vdash _\ell \mathscr {J}{\bar{\alpha }}\) by the assumption and \({\bar{\alpha }} :I_\alpha \rightarrow I,\varphi \), and hence \(I_\alpha \vdash \mathscr {J}{\bar{\alpha }}\). Substituting along \(\mathsf {p}:I,\alpha \rightarrow I_\alpha \) yields \(I,\alpha \vdash \mathscr {J}\).

Theorem 2

Compositions are computable, i.e., for \(\varphi \in \mathbb {F}(I)\) and \(i \notin {{\mathrm{dom}}}(I)\):

-

1.

-

2.

-

3.

Proof

By simultaneous induction on \(I,i \Vdash A\) and \(I,i \Vdash A = B\). Let us abbreviate \(\mathsf {comp}^i \, A \, [\varphi \mapsto u] \, u_0\) by \(u_1\), and \(\mathsf {comp}^i \, A \, [\varphi \mapsto v] \, v_0\) by \(v_1\). The second conclusion of (1) holds since in each case we will use the Expansion Lemma and in particular also prove \(I \Vdash u_1 {\downarrow }: A (i1)\).

Let us first make some preliminary remarks. Given the induction hypothesis holds for \(I,i \Vdash A\) we also know that filling operations are admissible for \(I,i \Vdash A\), i.e.:

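To see this, recall the explicit definition of filling

$$\begin{aligned} \mathsf {fill}^i \, A \, [\varphi \mapsto u] \, u_0 = \mathsf {comp}^j \, A (i/i \wedge j) \, [\varphi \vee (i=0) \mapsto [\varphi ~u(i/i \wedge j), (i=0) ~u_0]] \, u_0 \end{aligned}$$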

where j is fresh. The derivation of \(I,i,j \Vdash A (i/i \wedge j)\) isn’t higher than the derivation of \(I,i \Vdash A\) so we have to check, with \(u' = [\varphi ~u(i/i \wedge j), (i=0) ~u_0]\) and \(A' = A (i/i \wedge j)\),

To check the former, we have to show

in order to apply Lemma 20. So let \(f :J \rightarrow I,i,j\) with \(\varphi f = 1\) and \(f (i) = 0\); then as \(\varphi \) doesn’t contain i and j, also \(\varphi (f-i,j) = 1\) for \(f - i,j :J \rightarrow I\) being the restriction of f, so by assumption \(J \Vdash u(i0) (f-i,j) = u_0 (f -i,j) : A(i0) (f-i,j)\). Clearly, \((i0) (f-i,j) = (i/i \wedge j) f\) so the claim follows.

Let us now check the right-hand side equation of (15): by virtue of Lemma 21 we have to check the equation in the contexts \(I,i,\varphi \) and \(I,i,(i=0)\); but \(I,i,\varphi \Vdash u'(j0) = u(i0) = u_0 : A(i0)\) and \(I, i, (i=0) \Vdash u'(j0) = u_0 : A(i0)\) by Lemma 20.
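The endpoint computations behind the filling argument, namely \(A'(j0) = A(i0)\), \(A'(j1) = A\), and \((i \wedge j) f = 0\) whenever \(f(i) = 0\) (which gives \((i0) (f-i,j) = (i/i \wedge j) f\) above), can be checked mechanically. The Python sketch below assumes interval expressions built only from 0, 1, names, and \(\wedge \) (joins and reversal are omitted), simplified only by the laws \(0 \wedge r = 0\) and \(1 \wedge r = r\); the helper names `meet` and `subst` are hypothetical.

```python
from typing import Dict, Tuple, Union

# Interval expressions: the endpoints 0 and 1, a name such as "i",
# or a meet ("and", r, s).  Joins and reversal 1 - r are left out.
Interval = Union[int, str, Tuple[str, "Interval", "Interval"]]


def meet(r: Interval, s: Interval) -> Interval:
    """Meet of r and s, simplified with 0 and r = 0 and 1 and r = r."""
    if r == 0 or s == 0:
        return 0
    if r == 1:
        return s
    if s == 1:
        return r
    return ("and", r, s)


def subst(r: Interval, env: Dict[str, Interval]) -> Interval:
    """Apply a substitution of interval expressions for names."""
    if isinstance(r, int):
        return r
    if isinstance(r, str):
        return env.get(r, r)
    _, left, right = r
    return meet(subst(left, env), subst(right, env))
```

For instance, `subst(("and", "i", "j"), {"j": 0})` returns 0 and `subst(("and", "i", "j"), {"j": 1})` returns `"i"`, which are the two ends of the filler's direction.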

And likewise the filling operation preserves equality.

Case N-C. First, we prove that

To show this, it is enough to prove \(I,\alpha ,i: \mathbb {I}\vdash u = u_0 : \mathsf {N}\) for each \(\alpha \le \varphi \) irreducible. Let \({\bar{\alpha }} :I_\alpha \rightarrow I\) be the associated face substitution. We have \(I_\alpha , i \Vdash u ({\bar{\alpha }}, i/i ) : \mathsf {N}\) and also \(I_\alpha \Vdash u ({\bar{\alpha }}, i/0 ) = u_0 {\bar{\alpha }}: \mathsf {N}\) since \(\varphi {\bar{\alpha }} = 1\). By discreteness of \(\mathsf {N}\) (Lemma 18),

therefore \(I_\alpha , i \vdash u ({\bar{\alpha }}, i/i ) = u_0 \bar{\alpha }: \mathsf {N}\), i.e., \(I_\alpha , i \vdash u {\bar{\alpha }} = u_0 \bar{\alpha }: \mathsf {N}\) with \({\bar{\alpha }}\) considered as substitution \(I_\alpha ,i \rightarrow I,i\) and \(u_0\) weakened to I, i. Hence \(I, \alpha , i : \mathbb {I}\vdash u = u_0 : \mathsf {N}\) by the observation preceding the statement of the theorem.

Second, we prove that

\(I,\varphi \vdash u(i1) = u_0 : \mathsf {N}\) immediately follows from (16). For \(f:J \rightarrow I\) with \(\varphi f = 1\) we have to show \(J \Vdash u (i1) f = u_0 f :\mathsf {N}\); since \(\varphi f = 1\) we get \(J \Vdash u (i0) f = u_0 f :\mathsf {N}\) by assumption, i.e., \(J \Vdash u (f,i/j )(j0) = u_0 (f,i/j ) (j0) :\mathsf {N}\) (where \(u_0\) is weakened to I, j and j fresh). By discreteness of \(\mathsf {N}\), we obtain \(J,j \Vdash u (f,i/j ) = u_0 (f,i/j ) :\mathsf {N}\) and hence \(J \Vdash u (f,i/1 ) = u_0 (f,i/1 ) :\mathsf {N}\), i.e., \(J \Vdash u (i1) f = u_0 f : \mathsf {N}\).

We now prove the statements simultaneously by a side induction on \(I \Vdash u_0 : \mathsf {N}\) and \(I \Vdash u_0 = v_0 : \mathsf {N}\).

Subcase \(I \Vdash 0 : \mathsf {N}\). By (16) it follows that \(I \vdash u_1 \succ _\mathsf {s}0 : \mathsf {N}\), and hence \(I \Vdash u_1 : \mathsf {N}\) and \(I \Vdash u_1 = 0 : \mathsf {N}\) by the Expansion Lemma. Thus also \(I,\varphi \Vdash u_1 = u(i1) : \mathsf {N}\) by (17).

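Subcase \(I \Vdash {{\mathrm{\mathsf {S}}}}u_0' : \mathsf {N}\) from \(I \Vdash u_0' : \mathsf {N}\) with \(u_0 = {{\mathrm{\mathsf {S}}}}u_0'\). By (16) it follows that

\(I \vdash u_1 \succ _\mathsf {s}{{\mathrm{\mathsf {S}}}}( \mathsf {comp}^i \, \mathsf {N}\, [\varphi \mapsto {{\mathrm{\mathsf {pred}}}}u] \, u'_0) : \mathsf {N}\).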

From \(I,\varphi \Vdash {{\mathrm{\mathsf {S}}}}u_0' = u (i0) : \mathsf {N}\) we get \(I,\varphi \Vdash u'_0 = {{\mathrm{\mathsf {pred}}}}({{\mathrm{\mathsf {S}}}}u_0') = {{\mathrm{\mathsf {pred}}}}u(i0) : \mathsf {N}\) by Lemma 16 and thus by SIH, \(I \Vdash \mathsf {comp}^i \, \mathsf {N}\, [\varphi \mapsto {{\mathrm{\mathsf {pred}}}}u] \, u'_0 : \mathsf {N}[\varphi \mapsto ({{\mathrm{\mathsf {pred}}}}u) (i1)]\); hence \(I \Vdash u_1 : \mathsf {N}\) and \(I \Vdash u_1 = {{\mathrm{\mathsf {S}}}}( \mathsf {comp}^i \, \mathsf {N}\, [\varphi \mapsto {{\mathrm{\mathsf {pred}}}}u] \, u'_0) : \mathsf {N}\) by the Expansion Lemma. Thus also

using (17).

Subcase \(u_0\) is non-introduced. We use the Expansion Lemma: for each \(f :J \rightarrow I\)

the right-hand side is computable by SIH, and, since \(K \Vdash u_0 f {\downarrow }g = u_0 f g {\downarrow }: \mathsf {N}\) for \(g : K \rightarrow J\), these reducts form a compatible family, again by SIH. Thus the Expansion Lemma gives \(I \Vdash u_1 : \mathsf {N}\) and \(I \Vdash u_1 = u_1 {\downarrow }: \mathsf {N}\). By SIH, \(I,\varphi \Vdash u_1 {\downarrow }= u(i1) : \mathsf {N}\) and thus also \(I,\varphi \Vdash u_1 = u(i1) : \mathsf {N}\).

Subcase \(I \Vdash 0 = 0 : \mathsf {N}\). As above, we get \(I \Vdash u_1 = 0 = v_1 : \mathsf {N}\).

Subcase \(I \Vdash {{\mathrm{\mathsf {S}}}}u_0' = {{\mathrm{\mathsf {S}}}}v_0' : \mathsf {N}\) from \(I \Vdash u_0' = v_0' : \mathsf {N}\). As above, this follows from the SIH \(I \Vdash \mathsf {comp}^i \, \mathsf {N}\, [\varphi \mapsto {{\mathrm{\mathsf {pred}}}}u] \, u'_0 = \mathsf {comp}^i \, \mathsf {N}\, [\varphi \mapsto {{\mathrm{\mathsf {pred}}}}v] \, v'_0 : \mathsf {N}\).

Subcase \(I \Vdash u_0 = v_0 : \mathsf {N}\) and \(u_0\) or \(v_0\) is non-introduced. We have to show \(J \Vdash u_1 f {\downarrow }= v_1 f {\downarrow }: \mathsf {N}\) for \(f :J \rightarrow I\). We have \(J \Vdash u_0 f {\downarrow }= v_0 f {\downarrow }: \mathsf {N}\) with a shorter derivation, thus by SIH

which is what we had to show.
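The three subcases above follow the shape of the reduction rules for composition at \(\mathsf {N}\): \(\mathsf {comp}^i \, \mathsf {N}\, [\varphi \mapsto u] \, 0\) reduces to 0, \(\mathsf {comp}^i \, \mathsf {N}\, [\varphi \mapsto u] \, ({{\mathrm{\mathsf {S}}}}u_0')\) reduces to \({{\mathrm{\mathsf {S}}}}( \mathsf {comp}^i \, \mathsf {N}\, [\varphi \mapsto {{\mathrm{\mathsf {pred}}}}u] \, u'_0)\), and a non-introduced base is reduced first. The Python sketch below is a hedged illustration of only these rules, not the paper's typed relation: typing is ignored, \(\varphi \) and the tube u are opaque, the class names are hypothetical, and the rule \({{\mathrm{\mathsf {pred}}}}({{\mathrm{\mathsf {S}}}}v) \succ v\) is an assumption matching the equation \({{\mathrm{\mathsf {pred}}}}({{\mathrm{\mathsf {S}}}}u_0') = u_0'\) used above (here it only serves to build a non-introduced base).

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional, Union


@dataclass(frozen=True)
class Zero:
    pass


@dataclass(frozen=True)
class Succ:
    arg: Term


@dataclass(frozen=True)
class Pred:
    arg: Term


@dataclass(frozen=True)
class Opaque:
    """An opaque term such as the tube u; this sketch never reduces it."""
    name: str


@dataclass(frozen=True)
class CompN:
    """comp^i N [phi -> tube] base, with the face formula phi kept symbolic."""
    phi: str
    tube: Term
    base: Term


Term = Union[Zero, Succ, Pred, Opaque, CompN]


def step(t: Term) -> Optional[Term]:
    """One weak-head step, or None if t is weak-head normal or stuck."""
    if isinstance(t, Pred):
        if isinstance(t.arg, Succ):
            return t.arg.arg                      # pred (S v) > v (assumed)
        inner = step(t.arg)                       # pred 0 is left stuck here
        return None if inner is None else Pred(inner)
    if isinstance(t, CompN):
        b = t.base
        if isinstance(b, Zero):
            return Zero()                         # comp^i N [phi -> u] 0 > 0
        if isinstance(b, Succ):                   # (S u0') > S (comp^i N [phi -> pred u] u0')
            return Succ(CompN(t.phi, Pred(t.tube), b.arg))
        inner = step(b)                           # non-introduced base: reduce it first
        return None if inner is None else CompN(t.phi, t.tube, inner)
    return None                                   # Zero, Succ, Opaque


def whnf(t: Term) -> Term:
    while (nxt := step(t)) is not None:
        t = nxt
    return t


def to_numeral(t: Term) -> int:
    """Return n such that t reduces to S^n 0, reducing under S; raise if stuck."""
    t = whnf(t)
    if isinstance(t, Zero):
        return 0
    if isinstance(t, Succ):
        return 1 + to_numeral(t.arg)
    raise ValueError(f"stuck term: {t!r}")


two = Succ(Succ(Zero()))
assert to_numeral(CompN("phi", Opaque("u"), two)) == 2
assert to_numeral(CompN("phi", Opaque("u"), Pred(Succ(two)))) == 2  # non-introduced base
```

Note how the tube accumulates \({{\mathrm{\mathsf {pred}}}}\) applications while the base is peeled, mirroring the use of the SIH for \(\mathsf {comp}^i \, \mathsf {N}\, [\varphi \mapsto {{\mathrm{\mathsf {pred}}}}u] \, u'_0\).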

Case Pi-C. Let us write \((x : A) \rightarrow B\) for the type under consideration. (1) In view of the Expansion Lemma, the reduction rule for composition at \(\varPi \)-types (which is closed under substitution), and Lemma 14 (2) and (5), it suffices to show

where \(x' = \mathsf {fill}^i\,A (i/1-i)\,[]\,x\) and \({\bar{x}} = x' (i/1-i)\). By IH, we get \(I, x : A(i1), i:\mathbb {I}\models {\bar{x}} : A \) and \(I, x : A(i1) \models {\bar{x}} (i1) = x : A(i1)\), i.e.,

To see (20), let \(J \Vdash (f,x/a ) = (f,x/b ) : I, x : A(i1)\), i.e., \(f :J \rightarrow I\) and \(J \Vdash a = b : A (i1) f\); for j fresh, we have \(J,j \Vdash A (f,i/1-j)\) (note that \((i1) f = (f, i/1-j) (j0)\)) and we get

by IH, i.e., \(J,j \Vdash x' (f,x/a ,i/j ) = x' (f,x/b ,i/j ) : A (f, i/1 -j)\), and hence for \(r \in \mathbb {I}(J)\)

Thus we get \(I, x : A(i1) \models u_0 \, {\bar{x}} (i0) : B (i0) (x/\bar{x} (i0))\), \(I,x : A(i1),\varphi , i : \mathbb {I}\models u \, {\bar{x}} : B (x/\bar{x})\), and

And hence again by IH, we obtain (18) and (19).
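For reference, here is the reduction rule for composition at \(\varPi \)-types on which part (1) relies, as we restate it from [7] in the notation above (\(x'\) and \({\bar{x}}\) as defined there). It is a reading aid, not the official formulation; note that \({\bar{x}}(i1) = x\), so the body is a composition from \(u_0\,{\bar{x}}(i0)\) to an element of \(B(i1)\).

```latex
\mathsf{comp}^i\,((x : A) \rightarrow B)\,[\varphi \mapsto u]\,u_0
  \;\succ\;
  \lambda x : A(i1).\;
  \mathsf{comp}^i\,B(x/\bar{x})\,[\varphi \mapsto u\,\bar{x}]\,\bigl(u_0\,\bar{x}(i0)\bigr),
\qquad
x' = \mathsf{fill}^i\,A(i/1-i)\,[]\,x, \quad \bar{x} = x'(i/1-i).
```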

(2) Let \(f :J \rightarrow I\) and \(J \Vdash a:A(f,i/1 )\). Then \(J,j \Vdash {\bar{a}} : A(f,i/j )\) as above and we have to show

But this follows directly from the IH for \(J,j \Vdash B(f,x/{\bar{a}}, i/j)\).
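The name substitutions in this case, such as \((i/1-i)\) in the definition of \({\bar{x}}\) and the identity \((i1) f = (f, i/1-j)(j0)\), only involve the De Morgan structure of \(\mathbb {I}\). The Python sketch below illustrates this under our own hypothetical encoding (`End`, `Name`, `Rev`, `Meet`, `Join` are illustrative names); its simplifier only applies a few De Morgan laws and does not compute full normal forms.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Dict, Union


@dataclass(frozen=True)
class End:
    """An endpoint 0 or 1 of the interval."""
    value: int


@dataclass(frozen=True)
class Name:
    name: str


@dataclass(frozen=True)
class Rev:
    """The reversal 1 - r."""
    arg: Interval


@dataclass(frozen=True)
class Meet:
    """The minimum r ∧ s."""
    left: Interval
    right: Interval


@dataclass(frozen=True)
class Join:
    """The maximum r ∨ s."""
    left: Interval
    right: Interval


Interval = Union[End, Name, Rev, Meet, Join]


def simp(r: Interval) -> Interval:
    """Apply 1 - 0 = 1, 1 - 1 = 0, 1 - (1 - r) = r and the laws for 0 and 1 under ∧, ∨."""
    if isinstance(r, Rev):
        a = simp(r.arg)
        if isinstance(a, End):
            return End(1 - a.value)
        if isinstance(a, Rev):
            return a.arg
        return Rev(a)
    if isinstance(r, (Meet, Join)):
        left, right = simp(r.left), simp(r.right)
        absorbing = 0 if isinstance(r, Meet) else 1  # 0 ∧ s = 0 and 1 ∨ s = 1
        for x, y in ((left, right), (right, left)):
            if isinstance(x, End):
                return x if x.value == absorbing else y  # otherwise x is the unit
        return type(r)(left, right)
    return r


def subst(r: Interval, sigma: Dict[str, Interval]) -> Interval:
    """Simultaneous substitution of names, then simplification."""
    def go(t: Interval) -> Interval:
        if isinstance(t, Name):
            return sigma.get(t.name, t)
        if isinstance(t, Rev):
            return Rev(go(t.arg))
        if isinstance(t, (Meet, Join)):
            return type(t)(go(t.left), go(t.right))
        return t
    return simp(go(r))


i, j = Name("i"), Name("j")
# (i/1-i) is an involution, which is why x-bar(i1) = x'(i0)
assert subst(subst(i, {"i": Rev(i)}), {"i": Rev(i)}) == i
# on the name i, (f, i/1-j) followed by (j0) agrees with (i1)
assert subst(subst(i, {"i": Rev(j)}), {"j": End(0)}) == End(1)
```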

Case Si-C. Let us write \((x : A) \times B\) for the type under consideration. (1) We have

so by IH,

Let us call the above filler w. Thus we get \(I,i \Vdash B (x/w)\),

and hence

The IH yields

let us write \(w'\) for the above. By the reduction rules for composition in \(\varSigma \)-types we get \(I \vdash u_1 \succ _\mathsf {s}(w(i1),w') : (x : A(i1)) \times B (i1)\) and hence the Expansion Lemma yields

This in turn implies the equality

The proof of (2) uses that all notions defining w and \(w'\) preserve equality (by IH), and thus \(I \Vdash u_1 {\downarrow }= v_1 {\downarrow }: (x : A(i1)) \times B(i1)\).
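The reduct \((w(i1),w')\) used in this case comes from the reduction rule for composition at \(\varSigma \)-types. As we read it, restated from [7] and writing \(u.1\) and \(u.2\) for the first and second projections (a notational assumption here), the rule and the auxiliary terms are:

```latex
\mathsf{comp}^i\,((x : A) \times B)\,[\varphi \mapsto u]\,u_0
  \;\succ\; \bigl(w(i1),\, w'\bigr),
\qquad
w = \mathsf{fill}^i\,A\,[\varphi \mapsto u.1]\,(u_0.1),
\quad
w' = \mathsf{comp}^i\,B(x/w)\,[\varphi \mapsto u.2]\,(u_0.2).
```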

Case Pa-C. Let us write \(\mathsf {Path}\,A\,a_0\,a_1\) for the type under consideration. We obtain (for j fresh)

by the IH. Using the Expansion Lemma, the reduction rule for composition at \(\mathsf {Path}\)-types, and Lemma 19 (2), this yields

where \({\tilde{u}}\) is the element in (23) and \(u_1\) is \(\langle j \rangle {\tilde{u}}\). But \(I \Vdash {\tilde{u}} (jb) = a_b (i1) : A(i1)\) for \(b = 0, 1\), so \(I \Vdash u_1 : \mathsf {Path}\,A(i1)\,a_0(i1)\,a_1 (i1)\). Moreover,

by the correctness of the \(\eta \)-rule for paths (Lemma 19 (6)).
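The element \({\tilde{u}}\) and the reduct \(\langle j \rangle {\tilde{u}}\) come from the reduction rule for composition at \(\mathsf {Path}\)-types. A restatement in the style of [7] (our reading; \(a_0\) and \(a_1\) may depend on i, which is why the endpoints of \({\tilde{u}}\) are \(a_b(i1)\)) is:

```latex
\mathsf{comp}^i\,(\mathsf{Path}\,A\,a_0\,a_1)\,[\varphi \mapsto u]\,u_0
  \;\succ\;
  \langle j \rangle\,
  \mathsf{comp}^i\,A\,
  [\varphi \mapsto u\,j,\ (j = 0) \mapsto a_0,\ (j = 1) \mapsto a_1]\,(u_0\,j)
```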

Case Gl-C. To avoid confusion with our previous notation, we write \(\psi \) for the face formula of u and B for \(\mathsf {Glue}\, [ \varphi \mapsto (T, w)] \, A\).

Thus we are given:

and also \(I,i,\psi \Vdash u : B\) and \(I \Vdash u_0 : B (i0) [\psi \mapsto u(i0)]\). Moreover we have \(I,i,\varphi \Vdash T\) with shorter derivations by Lemma 23. We have to show

- (i) \(I \Vdash u_1 : B(i1)\), and
- (ii) \(I,\psi \Vdash u_1 = u(i1) : B(i1)\).

We use the Expansion Lemma: let \(f :J \rightarrow I\) and consider the reducts of \(u_1 f\):

with \(f'=(f,i/j)\), and \(t_1\) and \(a_1\) as in the corresponding reduction rule, i.e.:

First, we have to check \(J \Vdash u_1 f {\downarrow }: B (i1) f\). If \(\varphi f' = 1\), this follows immediately from the IH; otherwise, it follows from the IH and the previous lemmas, which ensure that the operations involved in the definition of \(t_1\) and \(a_1\) preserve computability.

Second, we have to check \(J \Vdash u_1 f {\downarrow }= u_1 {\downarrow }f : B (i1) f\). For this, the only interesting case is when \(\varphi f' = 1\); then we have to check that:

Since all the involved notions commute with substitutions, we may (temporarily) assume \(f = \mathrm {id}\) and \(\varphi = 1\) to simplify notation. Then also \(\delta = 1 = \varphi (i1)\), and hence (using the IH)

so (24) follows from Lemma 22 (5b) and (1).

So the Expansion Lemma yields (i) and \(I \Vdash u_1 = \mathsf {glue}\, [\varphi (i1) \mapsto t_1]\,a_1 : B(i1)\). (ii) is checked as in [7, Appendix A], using the IH. This proves (1) in this case; for (2), one uses that all operations used to define \(a_1\) and \(t_1\) above preserve equality, so \(I \Vdash u_1 {\downarrow }= v_1 {\downarrow }: B(i1)\), which entails \(I \Vdash u_1 = v_1 : B(i1)\).
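Roughly, the check \(J \Vdash u_1 f {\downarrow }= u_1 {\downarrow }f\) is only interesting when \(\varphi f' = 1\) because reduction is not closed under name substitution: a substitution can make a face formula true and so create a redex that was not there before. The minimal Python sketch below illustrates this at the level of types, assuming a reduction \(\mathsf {Glue}\, [1 \mapsto (T, w)] \, A \succ T\) that mirrors the judgmental equality for \(\mathsf {Glue}\) in [7]. The face encoding and all names are hypothetical, T and A are opaque strings, and w is omitted.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Optional, Tuple, Union

# Hypothetical encoding: (name, b) stands for (name = b); a face formula is a
# frozenset of conjunctions (frozensets of constraints), read disjunctively.
Constraint = Tuple[str, int]
Face = FrozenSet[FrozenSet[Constraint]]


def face_subst(phi: Face, sigma: Dict[str, int]) -> Face:
    """Send names to endpoints: violated disjuncts vanish, satisfied constraints drop out."""
    out = set()
    for conj in phi:
        if any(sigma.get(n, b) != b for n, b in conj):
            continue
        out.add(frozenset((n, b) for n, b in conj if n not in sigma))
    return frozenset(out)


@dataclass(frozen=True)
class Glue:
    """Glue [phi -> (T, w)] A, with T and A opaque strings and w omitted."""
    phi: Face
    T: str
    A: str


Ty = Union[Glue, str]  # a str stands for an opaque, weak-head normal type


def step(ty: Ty) -> Optional[Ty]:
    """Assumed rule: Glue [1 -> (T, w)] A > T; any other Glue type is weak-head normal."""
    if isinstance(ty, Glue) and frozenset() in ty.phi:
        return ty.T
    return None


def subst(ty: Ty, sigma: Dict[str, int]) -> Ty:
    """Name substitution; T and A are opaque here, so only phi changes."""
    if isinstance(ty, Glue):
        return Glue(face_subst(ty.phi, sigma), ty.T, ty.A)
    return ty


g = Glue(frozenset({frozenset({("i", 1)})}), "T", "A")  # Glue [(i = 1) -> (T, w)] A
assert step(g) is None                   # reducing first: g is already weak-head normal
assert isinstance(subst(g, {"i": 1}), Glue)  # so reduce-then-substitute gives a Glue type
assert step(subst(g, {"i": 1})) == "T"   # substitute-then-reduce takes the new redex to T
```

The two results are syntactically different; computability only asks that they be related by the computability relation, which is what the coherence condition in the Expansion Lemma checks.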

Case U-C. We have

thus it is sufficient to prove that the right-hand side is computable, i.e.,

that is,

We have \(I \Vdash _0 u_0\), so by Lemma 22 (1) it suffices to prove

To see this, recall that \({{\mathrm{\mathsf {equiv}}}}^i {u (i/1-i)}\) is defined from compositions and filling operations for the types \(I,i \Vdash _0 u\) and \(I,i \Vdash _0 u (i/1-i)\), using operations we have already shown to preserve computability. In this case, the IH states that these composition and filling operations are computable, since the derivations of \(I,i \Vdash _0 u\) and \(I,i \Vdash _0 u (i/1-i)\) are less complex than the derivation \(I \Vdash _1 \mathsf {U}\), their level being smaller.

Case Ni-C. So we have \(J \Vdash A f {\downarrow }\) for each \(f :J \rightarrow I,i\) and \(J \Vdash A {\downarrow }f = A f {\downarrow }\) (all with a shorter derivation than \(I,i \Vdash A\)). Note that by Lemma 8 (1), we also have \(I,i \Vdash A = A {\downarrow }\).

(1) We have to show \(J \Vdash u_1 f : A (i1) f {\downarrow }\) for each \(f :J \rightarrow I\). It is enough to show this for f being the identity; we do this using the Expansion Lemma. Let \(f :J \rightarrow I\) and j be fresh, \(f' = (f,i/j)\); we first show \(J \Vdash u_1 f {\downarrow }: A {\downarrow }(i1) f\). We have

hence also at type \(A f' (j1) {\downarrow }\), and so, by IH (1) for \(J,j \Vdash A f' {\downarrow }\), we obtain \(J \Vdash u_1 f {\downarrow }: A f' (j1) {\downarrow }\). But \(J \Vdash A f' (j1){\downarrow }= A {\downarrow }(i1) f\), so \(J \Vdash u_1 f {\downarrow }: A {\downarrow }(i1) f\).

Next, we have to show \(J \Vdash u_1 {\downarrow }f = u_1 f {\downarrow }: A {\downarrow }(i1) f\). Since \(J,j \Vdash A {\downarrow }f' = A f' {\downarrow }\) (with a shorter derivation), IH (3) gives \(J \Vdash u_1 {\downarrow }f = u_1 f {\downarrow }: A {\downarrow }f' (j1)\), which is what we had to show.

Thus we can apply the Expansion Lemma and obtain \(I \Vdash u_1 : A {\downarrow }(i1)\) and \(I \Vdash u_1 = u_1 {\downarrow }: A {\downarrow }(i1)\), and hence also \(I \Vdash u_1 : A (i1)\) and \(I \Vdash u_1 = u_1 {\downarrow }: A (i1)\). By IH, we also have \(I,\varphi \Vdash u_1 = u_1 {\downarrow }= u(i1) : A {\downarrow }(i1) = A (i1)\).

(2) As above, we obtain

But since the derivation of \(I,i \Vdash A {\downarrow }\) is shorter, and \(u_1 {\downarrow }= \mathsf {comp}^i \, A{\downarrow }\, [\varphi \mapsto u] \, u_0\) and similarly for \(v_1 {\downarrow }\), the IH yields \(I \Vdash u_1 {\downarrow }= v_1 {\downarrow }: A {\downarrow }(i1)\), thus also \(I \Vdash u_1 = v_1 : A {\downarrow }(i1)\), that is, \(I \Vdash u_1 = v_1 : A (i1)\) since \(I,i \Vdash A = A {\downarrow }\).

It remains to show that composition preserves forced type equality (i.e., that (3) holds). The argument is very similar in all cases: the compositions on the left- and right-hand sides of (3) are equal to their respective reducts by (1), and one then applies the IH to the reducts. We only present the case Ni-E.

Case Ni-E. Then A or B is non-introduced and \(I,i \Vdash A {\downarrow }= B {\downarrow }\) with a shorter derivation. Moreover, by (1) (if the type is non-introduced) or reflexivity (if the type is introduced), we have

but the right-hand sides are forced equal by IH. \(\square \)

Lemma 24

The rules for the universe \(\mathsf {U}\) are sound:

1. \(\varGamma \models A : \mathsf {U}\Rightarrow \varGamma \models A\)
2. \(\varGamma \models A = B : \mathsf {U}\Rightarrow \varGamma \models A = B\)

Moreover, the rules reflecting the type formers in \(\mathsf {U}\) are sound.

Proof

Of the first two statements, we only prove (2): given \(I \Vdash \sigma = \tau : \varGamma \), we get \(I \Vdash A\sigma = B\tau : \mathsf {U}\); this must be a derivation of \(I \Vdash _1 A\sigma = B\tau : \mathsf {U}\), and hence we also have \(I \Vdash _0 A\sigma = B\tau \).

The soundness of the rules reflecting the type formers in \(\mathsf {U}\) is proved very similarly to the soundness of the type formers. Let us exemplify this by showing soundness for \(\varPi \)-types in \(\mathsf {U}\): we are given \(\varGamma \models A : \mathsf {U}\) and \(\varGamma , x : A \models B : \mathsf {U}\), and want to show \(\varGamma \models (x : A) \rightarrow B: \mathsf {U}\). Let \(I \Vdash \sigma = \tau : \varGamma \); then \(I \Vdash A \sigma = A \tau : \mathsf {U}\), so, as above, \(I \Vdash _0 A \sigma = A \tau \), and it is enough to show

for \(J \Vdash u = v : A \sigma f\) with \(f :J \rightarrow I\). Then \(J \Vdash (\sigma f, x/u ) = (\tau f, x/v ) : \varGamma , x : A\), hence \(J \Vdash B (\sigma f, x/u) = B (\tau f, x/v) : \mathsf {U}\) and hence (25). \(\square \)

Proof of Soundness (Theorem 1)

By induction on the derivation \(\varGamma \vdash \mathscr {J}\).

We have already seen above that most of the rules are sound. Let us now look at the missing rules. Concerning basic type theory, the formation and introduction rules for \(\mathsf {N}\) are immediate; its elimination rule and definitional equalities follow from the “local” soundness from Lemma 16 as follows. Suppose \(\varGamma \models u : \mathsf {N}\), \(\varGamma , x : \mathsf {N}\models C\), \(\varGamma \models z : C (x/0)\), and \(\varGamma \models s : (x : \mathsf {N}) \rightarrow C \rightarrow C (x/{{\mathrm{\mathsf {S}}}}x)\). For \(I \Vdash \sigma = \tau : \varGamma \) we get by Lemma 16 (2)

(Hence \(\varGamma \models \mathsf {natrec}\,u\,z\,s : C (x/u)\).) Concerning the definitional equalities, if, say, u is of the form \({{\mathrm{\mathsf {S}}}}v\), then Lemma 16 (1) gives

and \((\mathsf {natrec}\,({{\mathrm{\mathsf {S}}}}v\tau )\,z\tau \,s\tau ){\downarrow }\) is \(s \tau \, v\tau \,(\mathsf {natrec}\,v\tau \,z\tau \,s\tau )\), proving

similarly, the soundness of the other definitional equality is established.
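The two definitional equalities for \(\mathsf {natrec}\) correspond to the weak-head reductions \(\mathsf {natrec}\,0\,z\,s \succ z\) and \(\mathsf {natrec}\,({{\mathrm{\mathsf {S}}}}v)\,z\,s \succ s\,v\,(\mathsf {natrec}\,v\,z\,s)\), the latter used above. The Python sketch below illustrates only these two rules, under a hypothetical encoding in which the step function s is a host-language function (so no \(\lambda \)-calculus machinery is needed) and the recursive call is left unevaluated, as befits weak-head reduction.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional, Union


@dataclass(frozen=True)
class Zero:
    pass


@dataclass(frozen=True)
class Succ:
    arg: Term


@dataclass(frozen=True)
class Natrec:
    """natrec n z s, where s is a host-language stand-in for s : (x : N) -> C -> C(x/S x)."""
    n: Term
    z: Term
    s: Callable[[Term, Term], Term]


Term = Union[Zero, Succ, Natrec]


def step(t: Term) -> Optional[Term]:
    """One weak-head step, or None if t is weak-head normal."""
    if not isinstance(t, Natrec):
        return None
    if isinstance(t.n, Zero):
        return t.z                                # natrec 0 z s > z
    if isinstance(t.n, Succ):
        v = t.n.arg                               # natrec (S v) z s > s v (natrec v z s)
        return t.s(v, Natrec(v, t.z, t.s))
    inner = step(t.n)                             # otherwise reduce the scrutinee
    return None if inner is None else Natrec(inner, t.z, t.s)


def whnf(t: Term) -> Term:
    while (nxt := step(t)) is not None:
        t = nxt
    return t


def to_numeral(t: Term) -> int:
    """Return n such that t reduces to S^n 0, reducing under S."""
    t = whnf(t)
    if isinstance(t, Zero):
        return 0
    if isinstance(t, Succ):
        return 1 + to_numeral(t.arg)
    raise ValueError(f"stuck term: {t!r}")


def double(v: Term, rec: Term) -> Term:
    """Hypothetical step function for doubling: S (S rec), ignoring v."""
    return Succ(Succ(rec))


three = Succ(Succ(Succ(Zero())))
# One step exposes two successors; the recursive natrec call stays unevaluated.
assert step(Natrec(three, Zero(), double)) == Succ(Succ(Natrec(Succ(Succ(Zero())), Zero(), double)))
assert to_numeral(Natrec(three, Zero(), double)) == 6
```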

Let us now look at the composition operations: suppose \(\varGamma , i : \mathbb {I}\models A\), \(\varGamma \models \varphi : \mathbb {F}\), \(\varGamma ,\varphi , i : \mathbb {I}\models u : A\), and \(\varGamma \models u_0 : A (i0) [\varphi \mapsto u (i0)]\). Further let \(I \Vdash \sigma = \tau : \varGamma \), then for j fresh, \(I, j \Vdash \sigma ' = \tau ' : \varGamma ,i:\mathbb {I}\) where \(\sigma ' = (\sigma ,i/j)\) and \(\tau ' = (\tau ,i/j)\), hence \(I,j \Vdash A \sigma ' = A \tau '\), \(\varphi \sigma = \varphi \tau \), \(I,j,\varphi \sigma \Vdash u \sigma ' = u \tau ' : A \sigma '\), and \(I \Vdash u_0 \sigma = u_0 \tau : A \sigma ' (j0) [\varphi \sigma \mapsto u \sigma ' (j0)]\). By Theorem 2,

and

hence we showed \(\varGamma \models \mathsf {comp}^i\,A\,[\varphi \mapsto u]\,u_0 : A (i1) [\varphi \mapsto u (i1)]\). Similarly one can justify the congruence rule for composition.