Abstract

Suppose \(\lambda \) and \(\mu \) are integer partitions with \(\lambda \supseteq \mu \). Kenyon and Wilson have introduced the notion of a cover-inclusive Dyck tiling of the skew Young diagram \(\lambda \backslash \mu \), which has applications in the study of double-dimer models. We examine these tilings in more detail, giving various equivalent conditions and then proving a recurrence which we use to show that the entries of the transition matrix between two bases for a certain permutation module for the symmetric group are given by counting cover-inclusive Dyck tilings. We go on to consider the inverse of this matrix, showing that its entries are determined by what we call cover-expansive Dyck tilings. The fact that these two matrices are mutual inverses allows us to recover the main result of Kenyon and Wilson. We then discuss the connections with recent results of Kim et al., who give a simple expression for the sum, over all \(\mu \), of the number of cover-inclusive Dyck tilings of \(\lambda \backslash \mu \). Our results provide a new proof of this result. Finally, we show how to use our results to obtain simpler expressions for the homogeneous Garnir relations for the universal Specht modules introduced by Kleshchev, Mathas and Ram for the cyclotomic quiver Hecke algebras.

Similar content being viewed by others

1 Introduction

The motivation for this paper is the study of the modular representation theory of the symmetric group, and more generally the representation theory of the cyclotomic Hecke algebra of type A. This area has recently been revolutionised by the discovery by Brundan and Kleshchev of new presentations for these algebras, which show in particular that the algebras are non-trivially \({\mathbb {Z}}\)-graded. The contribution in the present paper concerns the definition of the Specht modules, which play a central role in the representation theory of cyclotomic Hecke algebras. These modules have been studied within the graded setting by Brundan, Kleshchev and Wang, and developed further by Kleshchev, Mathas and Ram, who have given a presentation for each Specht module with a single generator and a set of homogeneous relations. These relations include homogeneous analogues of the classical Garnir relations for the symmetric group, which allow the Specht module to be expressed as a quotient of a ‘row permutation module’. Although the homogeneous Garnir relations are in some sense simpler than their classical counterparts, their statement in [10] is awkward in that the ‘Garnir elements’ involved are given as linear combinations of expressions in the standard generators \(\psi _1,\ldots ,\psi _{n-1}\) for the row permutation module which are not always reduced. Our main result concerning Specht modules is an expression for each Garnir relation as a linear combination of reduced expressions; this simplifies calculations with Specht modules, both theoretically and computationally.

But this result is a by-product of the main work in this paper, which is to consider tilings of skew Young diagrams by Dyck tiles. These tilings were introduced by Kenyon and Wilson, who defined in particular the notion of a cover-inclusive Dyck tiling. They used these tilings to give a formula for the inverse of a certain matrix M arising in the study of double-dimer models. We re-interpret the entries of M in terms of what we call cover-expansive Dyck tilings, and then, by proving recurrence relations for the numbers of cover-inclusive and cover-expansive Dyck tilings, we show that (sign-modified versions of) M and \(M^{-1}\) are in fact transition matrices for two natural bases for a certain permutation representation of the symmetric group. The fact that the two transition matrices are obviously mutually inverse provides a new proof of Kenyon and Wilson’s result. Along the way we give a result showing several different equivalent conditions to the cover-inclusive condition.

In order to derive our result on Garnir relations, we then express the sum of the elements of one of our two bases in terms of the other; this involves defining a certain function \(F\) on partitions, and proving a similar recurrence to the recurrence for cover-inclusive Dyck tilings. Combining this with our results on transition coefficients means that \(F(\lambda )\) equals the sum over all partitions \(\mu \subseteq \lambda \) of the number of cover-inclusive Dyck tilings of \(\lambda \backslash \mu \). In fact, this had already been shown by Kim, and then by Kim, Mészáros, Panova and Wilson, verifying a conjecture of Kenyon and Wilson. As well as providing a new proof of this result, our results working directly with the function \(F\) allow us to derive our application to Garnir relations without using Dyck tilings.

We now describe the structure of this paper. Section 2 is devoted to definitions. In Sect. 3 we study cover-inclusive Dyck tilings, giving equivalent conditions for cover-inclusiveness and then proving several bijective results which allow us to deduce recurrences for the number \({\text {i}}_{\lambda \mu }\) of cover-inclusive Dyck tilings of \(\lambda \backslash \mu \). In Sect. 4 we prove similar, though considerably simpler, recurrences for cover-expansive Dyck tilings. In Sect. 5 we recall the Young permutation module \(\mathscr {M}^{(f,g)}\) for the symmetric group; we define our two bases for this module, and use Dyck tilings to describe the transition coefficients. We then introduce the function \(F\) and use it to express the sum of the elements of the first basis in terms of the second, before summarising the relationship between our work and that of Kenyon, Kim, Mészáros, Panova and Wilson. Finally in Sect. 6 we give the motivating application of this work, introducing the Specht modules in the modern setting, and using our earlier results to give a new expression for the homogeneous Garnir relations.

Since the first version of this paper was written, we have become aware of the paper [12] by Shigechi and Zinn-Justin, where cover-inclusive and cover-expansive Dyck tilings are used in the calculation of parabolic Kazhdan–Lusztig polynomials. (They do not use the same terminology—in their paper, Dyck tiles are called “Dyck strips”, and the cover-inclusive and cover-expansive conditions are “rules I and II”. In addition—following the more widespread convention for Dyck paths—their convention for diagrams is the top-to-bottom reflection of ours.) It is likely that some of our results can be deduced from the results in [12].

2 Definitions

2.1 Partitions and Young diagrams

As usual, a partition is a weakly decreasing sequence \(\lambda =(\lambda _1,\lambda _2,\ldots )\) of non-negative integers with finite sum. We write this sum as \(|\lambda |\), and say that \(\lambda \) is a partition of \(|\lambda |\). When writing partitions, we may group equal parts together with a superscript, and omit trailing zeroes, and we write the partition \((0,0,\ldots )\) as \(\varnothing \).

The Young diagram of a partition \(\lambda \) is the set

We may abuse notation by identifying \(\lambda \) with its Young diagram; for example, we may write \(\lambda \supseteq \mu \) to mean that \(\lambda _i\geqslant \mu _i\) for all i. If \(\lambda \supseteq \mu \), then the skew Young diagram \(\lambda \backslash \mu \) is simply the set difference between the Young diagrams for \(\lambda \) and \(\mu \).

We draw (skew) Young diagrams as arrays of boxes in the plane, and except in the final section of this paper we use the Russian convention, where a increases from south-east to north-west, and b increases from south-west to north-east. For example, the Young diagram of \((7^2,4,3,2^2)\backslash (2,1^2)\) is as follows.

The conjugate partition to \(\lambda \) is the partition \(\lambda '\) obtained by reflecting the Young diagram for \(\lambda \) left to right; thus \(\lambda '_i=\left| \left\{ \left. j\geqslant 1\ \right| \ \smash {\lambda _j\geqslant i}\right\} \right| \) for all i.

We define a node to be an element of \({\mathbb {N}}^2\), and a node of \(\lambda \) to be an element of the Young diagram of \(\lambda \). The height of the node (a, b) is \(a+b\). The jth column of \({\mathbb {N}}^2\) is the set of all nodes (a, b) for which \(b-a=j\).

We use compass directions to label the neighbours of a node; for example, if \({\mathfrak {n}}\) is a node, then we write \(\mathtt {SW}({\mathfrak {n}})={\mathfrak {n}}-(0,1)\) and refer to this as the SW neighbour of \({\mathfrak {n}}\); we also write \(\mathtt {N}({\mathfrak {n}})={\mathfrak {n}}+(1,1)\), and similarly for the other compass directions.

A node \({\mathfrak {n}}\) of \(\lambda \) is removable if it can be removed from \(\lambda \) to leave the Young diagram of a partition (i.e. if neither \(\mathtt {NW}({\mathfrak {n}})\) nor \(\mathtt {NE}({\mathfrak {n}})\) is a node of \(\lambda \)), while a node \({\mathfrak {n}}\) not in \(\lambda \) is an addable node of \(\lambda \) if it can be added to \(\lambda \) to leave the Young diagram of a partition.

2.2 Tiles and tilings

We define a tile to be a finite non-empty set t of nodes that can be ordered \({\mathfrak {n}}_1,\ldots ,{\mathfrak {n}}_r\) such that \({\mathfrak {n}}_{i+1}\in \{\mathtt {NE}({\mathfrak {n}}_i),\mathtt {SE}({\mathfrak {n}}_i)\}\) for each \(i=1,\ldots ,r-1\). We say that t starts at its leftmost node, which we denote \({\text {st}}(t)\), and ends at its rightmost, which we denote \({\text {en}}(t)\). The height \({\text {ht}}(t)\) of t is defined to be \(\max \left\{ \left. {\text {ht}}({\mathfrak {n}})\ \right| \ \smash {{\mathfrak {n}}\in t}\right\} \), and we say that t is a Dyck tile if this maximum is achieved at the start and end nodes of t, i.e. \({\text {ht}}(t)={\text {ht}}({\text {st}}(t))={\text {ht}}({\text {en}}(t))\). t is big if it contains more than one node, and is a singleton otherwise. The depth of a node \({\mathfrak {n}}\in t\) is

Now suppose \(\lambda \) and \(\mu \) are partitions with \(\lambda \supseteq \mu \). A Dyck tiling of \(\lambda \backslash \mu \) is a partition of \(\lambda \backslash \mu \) into Dyck tiles. Given a Dyck tiling T of \(\lambda \backslash \mu \) and a node \({\mathfrak {n}}\in \lambda \backslash \mu \), we write \({\text {tile}}({\mathfrak {n}})\) for the tile containing \({\mathfrak {n}}\). We say that \({\mathfrak {n}}\) is attached to \(\mathtt {NE}({\mathfrak {n}})\) if \({\mathfrak {n}}\) and \(\mathtt {NE}({\mathfrak {n}})\) lie in the same tile in T, and similarly for \(\mathtt {SE}({\mathfrak {n}})\), \(\mathtt {NW}({\mathfrak {n}})\) and \(\mathtt {SW}({\mathfrak {n}})\). If t is a tile in T, the NE neighbour of t is the tile starting at \(\mathtt {NE}({\text {en}}(t))\), if there is one, while the SW neighbour of t is the tile ending at \(\mathtt {SW}({\text {st}}(t))\), if there is one. SE and NW neighbours of tiles are defined similarly.

Now we recast the main definition from [6]. Say that a Dyck tiling T of \(\lambda \backslash \mu \) is left-cover-inclusive if whenever \({\mathfrak {a}}\) and \(\mathtt {N}({\mathfrak {a}})\) are nodes of \(\lambda \backslash \mu \), \({\text {st}}({\text {tile}}(\mathtt {N}({\mathfrak {a}})))\) lies weakly to the left of \({\text {st}}({\text {tile}}({\mathfrak {a}}))\). Similarly, T is right-cover-inclusive if whenever \({\mathfrak {a}}\) and \(\mathtt {N}({\mathfrak {a}})\) are nodes of \(\lambda \backslash \mu \), \({\text {en}}({\text {tile}}(\mathtt {N}({\mathfrak {a}})))\) lies weakly to the right of \({\text {en}}({\text {tile}}({\mathfrak {a}}))\). Say that T is cover-inclusive if it is both left- and right-cover-inclusive.

We let \({\mathscr {I}}(\lambda ,\mu )\) denote the set of cover-inclusive Dyck tilings of \(\lambda \backslash \mu \) if \(\lambda \supseteq \mu \), and set \({\mathscr {I}}(\lambda ,\mu )=\emptyset \) otherwise. Let \({\text {i}}_{\lambda \mu }=\left| {\mathscr {I}}(\lambda ,\mu )\right| \).

Next we make a definition which is in some sense dual to the notion of cover-inclusiveness and which appears heavily disguised in [6]. Say that a Dyck tiling is left-cover-expansive if whenever \({\mathfrak {a}}\) and \(\mathtt {SE}({\mathfrak {a}})\) are nodes of \(\lambda \backslash \mu \), \({\text {st}}({\text {tile}}(\mathtt {SE}({\mathfrak {a}})))\) lies weakly to the left of \({\text {st}}({\text {tile}}({\mathfrak {a}}))\), and right-cover-expansive if whenever \({\mathfrak {a}}\) and \(\mathtt {SW}({\mathfrak {a}})\) are nodes of \(\lambda \backslash \mu \), \({\text {en}}({\text {tile}}(\mathtt {SW}({\mathfrak {a}})))\) lies weakly to the right of \({\text {en}}({\text {tile}}({\mathfrak {a}}))\). A Dyck tiling is cover-expansive if it is both left- and right-cover-expansive.

We write \({\text {e}}_{\lambda \mu }\) for the number of cover-expansive Dyck tilings of \(\lambda \backslash \mu \), setting \({\text {e}}_{\lambda \mu }=0\) if \(\lambda \nsupseteq \mu \). We shall see later that \({\text {e}}_{\lambda \mu }\leqslant 1\) for all \(\lambda ,\mu \).

Example

We illustrate four Dyck tilings of \((6^2,4,3,1^2)\backslash (4,1^2)\). Only the first one is cover-inclusive, and only the second is cover-expansive.

We end this section with some notation which we shall use repeatedly later. Suppose \(j\in {\mathbb {Z}}\) is fixed, and \(\lambda \) is a partition with an addable node \({\mathfrak {l}}\) in column j. We define \(X_{\lambda }\) to be the set of all integers x such that \(\lambda \) has a removable node \({\mathfrak {n}}\) in column \(j+x\), with \({\text {ht}}({\mathfrak {n}})={\text {ht}}({\mathfrak {l}})-1\), and \({\text {ht}}({\mathfrak {p}})<{\text {ht}}({\mathfrak {l}})\) for all nodes \({\mathfrak {p}}\in \lambda \) in all columns between j and \(j+x\). We set \(X_{\lambda }^+\) to be the set of positive elements of \(X_{\lambda }\). Note that \(x\in X_{\lambda }^+\) precisely when there is a Dyck tile \(t\subset \lambda \), starting in column \(j+1\) and ending in column \(j+x\), which can be removed from \(\lambda \) to leave a smaller partition; we denote this smaller partition \(\lambda ^{[x]}\). We define \(\lambda ^{[x]}\) for \(x\in X_{\lambda }^-=X_{\lambda }\setminus X_{\lambda }^+\) similarly.

Example

Take \(\lambda =(6,4^2,3,2^2)\). Then \(\lambda \) has an addable node in column 0, and for this node we have \(X_{\lambda }=\{-1,1,5\}\). The partitions \(\lambda ^{[x]}\) are as follows.

3 Cover-inclusive Dyck tilings

In this section we examine cover-inclusive Dyck tilings in more detail. We give some equivalent conditions to the cover-inclusive condition, and then we prove a recurrence for the number \({\text {i}}_{\lambda \mu }\) of cover-inclusive Dyck tilings.

3.1 Equivalent conditions

Theorem 3.1

Suppose \(\lambda \) and \(\mu \) are partitions with \(\lambda \supseteq \mu \), and T is a Dyck tiling of \(\lambda \backslash \mu \). The following are equivalent.

-

1.

T is cover-inclusive.

-

2.

If \({\mathfrak {a}}\) and \(\mathtt {N}({\mathfrak {a}})\) are nodes of \(\lambda \backslash \mu \), then \({\text {dp}}(\mathtt {N}({\mathfrak {a}}))\geqslant {\text {dp}}({\mathfrak {a}})\).

-

3.

If \({\mathfrak {a}}\) and \(\mathtt {N}({\mathfrak {a}})\) are nodes of \(\lambda \backslash \mu \), then \({\text {tile}}({\mathfrak {a}})+(1,1)\subseteq {\text {tile}}(\mathtt {N}({\mathfrak {a}}))\).

-

4.

If \({\mathfrak {a}}\) and \(\mathtt {N}({\mathfrak {a}})\) are nodes of \(\lambda \backslash \mu \) and \(\mathtt {NW}({\mathfrak {a}})\) is attached to \({\mathfrak {a}}\), then \(\mathtt {NW}(\mathtt {N}({\mathfrak {a}}))\) is a node of \(\lambda \backslash \mu \) and is attached to \(\mathtt {N}({\mathfrak {a}})\).

-

5.

If \({\mathfrak {a}}\) and \(\mathtt {N}({\mathfrak {a}})\) are nodes of \(\lambda \backslash \mu \) and \(\mathtt {N}({\mathfrak {a}})\) is the end node of its tile, then \({\mathfrak {a}}\) is the end node of its tile.

-

6.

T is right-cover-inclusive.

-

7.

If \({\mathfrak {a}}\) and \(\mathtt {N}({\mathfrak {a}})\) are nodes of \(\lambda \backslash \mu \) and \(\mathtt {NE}({\mathfrak {a}})\) is attached to \({\mathfrak {a}}\), then \(\mathtt {NE}(\mathtt {N}({\mathfrak {a}}))\) is a node of \(\lambda \backslash \mu \) and is attached to \(\mathtt {N}({\mathfrak {a}})\).

-

8.

If \({\mathfrak {a}}\) and \(\mathtt {N}({\mathfrak {a}})\) are nodes of \(\lambda \backslash \mu \) and \(\mathtt {N}({\mathfrak {a}})\) is the start node of its tile, then \({\mathfrak {a}}\) is the start node of its tile.

-

9.

T is left-cover-inclusive.

Proof

- (1\(\Rightarrow \)2):

-

Take \({\mathfrak {a}}\in \lambda \backslash \mu \) such that \(\mathtt {N}({\mathfrak {a}})\in \lambda \backslash \mu \), and let \({\mathfrak {c}}={\text {st}}({\text {tile}}({\mathfrak {a}}))\). Since T is cover-inclusive, there is a node \({\mathfrak {b}}\) in \({\text {tile}}(\mathtt {N}({\mathfrak {a}}))\) in the same column as \({\mathfrak {c}}\), and

$$\begin{aligned} {\text {dp}}(\mathtt {N}({\mathfrak {a}}))\geqslant {\text {ht}}({\mathfrak {b}})-{\text {ht}}(\mathtt {N}({\mathfrak {a}}))\geqslant {\text {ht}}({\mathfrak {c}})+2-({\text {ht}}({\mathfrak {a}})+2)={\text {dp}}({\mathfrak {a}}). \end{aligned}$$ - (2\(\Rightarrow \)3):

-

Suppose (3) is false, and take \({\mathfrak {a}}\in \lambda \backslash \mu \) such that \(\mathtt {N}({\mathfrak {a}})\in \lambda \backslash \mu \) and \({\text {tile}}({\mathfrak {a}})+(1,1)\nsubseteq {\text {tile}}(\mathtt {N}({\mathfrak {a}}))\). There is a node \({\mathfrak {b}}\in {\text {tile}}({\mathfrak {a}})\) such that \({\mathfrak {b}}+(1,1)\notin {\text {tile}}(\mathtt {N}({\mathfrak {a}}))\), and we may assume that \({\mathfrak {b}}\) is attached to \({\mathfrak {a}}\); in fact, by symmetry, we may assume \({\mathfrak {b}}\) is either \(\mathtt {NE}({\mathfrak {a}})\) or \(\mathtt {SE}({\mathfrak {a}})\). If \({\mathfrak {b}}=\mathtt {NE}({\mathfrak {a}})\), then \(\mathtt {N}({\mathfrak {a}})\) is attached to neither its NE nor its SE neighbour, so is the end node of its tile, and in particular \({\text {dp}}(\mathtt {N}({\mathfrak {a}}))=0\). On the other hand \({\text {dp}}({\mathfrak {a}})>{\text {dp}}({\mathfrak {b}})\geqslant 0\), contradicting (2). If instead \({\mathfrak {b}}=\mathtt {SE}({\mathfrak {a}})\), then \(\mathtt {NE}({\mathfrak {a}})\) is attached to neither its NW nor its SW neighbour, so is the start node of its tile, and has depth 0; but \({\text {dp}}({\mathfrak {b}})>{\text {dp}}({\mathfrak {a}})\geqslant 0\), and again (2) is contradicted.

- (3\(\Rightarrow \)4):

-

This is trivial.

- (4\(\Rightarrow \)5):

-

Suppose (5) is false, and take \({\mathfrak {a}}\in \lambda \backslash \mu \) as far to the left as possible such that \(\mathtt {N}({\mathfrak {a}})\in \lambda \backslash \mu \) and is the end node of its tile, while \({\mathfrak {a}}\) is not the end node of its tile. Since \({\mathfrak {a}}\) is not the end node of its tile, it is attached to either \(\mathtt {SE}({\mathfrak {a}})\) or \(\mathtt {NE}({\mathfrak {a}})\). If \({\mathfrak {a}}\) is attached to \(\mathtt {SE}({\mathfrak {a}})\), then \(\mathtt {SE}({\mathfrak {a}})\) is attached to its NW neighbour, but \(\mathtt {N}(\mathtt {SE}({\mathfrak {a}}))=\mathtt {NE}({\mathfrak {a}}){}\) is not attached to its NW neighbour, contradicting (4).

So assume that \({\mathfrak {a}}\) is attached to \(\mathtt {NE}({\mathfrak {a}})\). This implies in particular that \({\mathfrak {a}}\) has positive depth.

Claim 1

If \({\mathfrak {c}}\in {\text {tile}}({\mathfrak {a}})\) and \(\mathtt {N}({\mathfrak {c}})\in {\text {tile}}(\mathtt {N}({\mathfrak {a}}))\), then \({\mathfrak {c}}\) is not the start node of \({\text {tile}}({\mathfrak {a}})\).

Proof

Since \({\text {tile}}(\mathtt {N}({\mathfrak {a}}))\) is a Dyck tile and \(\mathtt {N}({\mathfrak {a}})\) is its end node, we have \({\text {ht}}(\mathtt {N}({\mathfrak {c}}))\leqslant {\text {ht}}(\mathtt {N}({\mathfrak {a}}))\). Hence \({\text {ht}}({\mathfrak {c}})\leqslant {\text {ht}}({\mathfrak {a}})\), so \({\text {dp}}({\mathfrak {c}})\geqslant {\text {dp}}({\mathfrak {a}})>0\).

Claim 2

For every node \({\mathfrak {b}}\in {\text {tile}}(\mathtt {N}({\mathfrak {a}}))\) we have \(\mathtt {S}({\mathfrak {b}})\in {\text {tile}}({\mathfrak {a}})\).

Proof

If the claim is false, let \({\mathfrak {b}}\) be the rightmost counterexample. Obviously \({\mathfrak {b}}\ne \mathtt {N}({\mathfrak {a}})\), and in particular \({\mathfrak {b}}\) is not the end node of its tile, so \({\mathfrak {b}}\) is attached to either \(\mathtt {NE}({\mathfrak {b}})\) or \(\mathtt {SE}({\mathfrak {b}})\). In the first case, the choice of \({\mathfrak {b}}\) means that we have \(\mathtt {SE}({\mathfrak {b}})\in {\text {tile}}({\mathfrak {a}})\); neither \({\mathfrak {b}}\) nor \(\mathtt {S}({\mathfrak {b}})\) is in \({\text {tile}}({\mathfrak {a}})\), so \(\mathtt {SE}({\mathfrak {b}})\) is the start node of \({\text {tile}}({\mathfrak {a}})\), contradicting Claim 1.

So we can assume \({\mathfrak {b}}\) is attached to \(\mathtt {SE}({\mathfrak {b}})\). The choice of \({\mathfrak {b}}\) means that \(\mathtt {S}(\mathtt {SE}({\mathfrak {b}}))\in {\text {tile}}({\mathfrak {a}})\) and is not attached to \(\mathtt {S}({\mathfrak {b}})\). By Claim 1 \(\mathtt {S}(\mathtt {SE}({\mathfrak {b}}))\) is not the start node of \({\text {tile}}({\mathfrak {a}})\), so is attached to \(\mathtt {S}(\mathtt {S}({\mathfrak {b}}))\). But now \(\mathtt {S}({\mathfrak {b}})\) is not attached to either its NE or SE neighbour, so is the end node of its tile. \(\mathtt {S}(\mathtt {S}({\mathfrak {b}}))\) is not the end node of its tile, and this contradicts the choice of \({\mathfrak {a}}\).

Now let \({\mathfrak {b}}={\text {st}}({\text {tile}}(\mathtt {N}({\mathfrak {a}})))\). Then by Claim 2, \({\mathfrak {c}}:=\mathtt {S}({\mathfrak {b}})\in {\text {tile}}({\mathfrak {a}})\), and by Claim 1 \({\mathfrak {c}}\) is not the start node of \({\text {tile}}({\mathfrak {a}})\). So \({\mathfrak {c}}\) is attached to either \(\mathtt {NW}({\mathfrak {c}})\) or \(\mathtt {SW}({\mathfrak {c}})\). The first possibility contradicts (4), since \({\mathfrak {b}}\) is not attached to \(\mathtt {NW}({\mathfrak {b}})\), so assume that \({\mathfrak {c}}\) is attached to \(\mathtt {SW}({\mathfrak {c}})\). But then \(\mathtt {NW}({\mathfrak {c}})\) is attached to neither \({\mathfrak {b}}\) nor \({\mathfrak {c}}\), so is the end node of its tile, while \(\mathtt {SW}({\mathfrak {c}})\) is not the end node of its tile, and this contradicts the choice of \({\mathfrak {a}}\).

- (5\(\Rightarrow \)6):

-

Suppose T is not right-cover-inclusive, and take \({\mathfrak {a}}\in \lambda \backslash \mu \) such that \(\mathtt {N}({\mathfrak {a}})\in \lambda \backslash \mu \) and \({\text {tile}}({\mathfrak {a}})\) ends strictly to the right of \({\text {tile}}(\mathtt {N}({\mathfrak {a}}))\). Let \({\mathfrak {b}}={\text {en}}({\text {tile}}(\mathtt {N}({\mathfrak {a}})))\); then there is a node in \({\text {tile}}({\mathfrak {a}})\) in the same column as \({\mathfrak {b}}\), which we can write as \({\mathfrak {b}}-(h,h)\) for some \(h>0\). If we let \(i\in \{1,\ldots ,h\}\) be minimal such that \({\mathfrak {b}}-(i,i)\) is not the end node of its tile, then \(\mathtt {N}({\mathfrak {b}}-(i,i))\) is the end node of its tile, contradicting (5).

- (6\(\Rightarrow \)7):

-

Suppose \({\mathfrak {a}},\mathtt {N}({\mathfrak {a}})\in \lambda \backslash \mu \) and \({\mathfrak {a}}\) is attached to \(\mathtt {NE}({\mathfrak {a}})\). Then \({\text {en}}({\text {tile}}({\mathfrak {a}}))\) lies to the right of \({\mathfrak {a}}\), so by (6) \({\text {en}}({\text {tile}}(\mathtt {N}({\mathfrak {a}})))\) does too. So \(\mathtt {N}({\mathfrak {a}})\) is attached to either \(\mathtt {NE}(\mathtt {N}({\mathfrak {a}}))\) or \(\mathtt {SE}(\mathtt {N}({\mathfrak {a}}))\). But \(\mathtt {SE}(\mathtt {N}({\mathfrak {a}}))=\mathtt {NE}({\mathfrak {a}})\) is attached to \({\mathfrak {a}}\), so \(\mathtt {N}({\mathfrak {a}})\) is attached to \(\mathtt {NE}(\mathtt {N}({\mathfrak {a}}))\).

- (7\(\Rightarrow \)8):

-

This is symmetrical to the argument that 4\(\Rightarrow \)5.

- (8\(\Rightarrow \)9):

-

This is symmetrical to the argument that 5\(\Rightarrow \)6.

- (9\(\Rightarrow \)1):

-

Since 6\(\Rightarrow \)7\(\Rightarrow \)8\(\Rightarrow \)9, right-cover-inclusive implies left-cover-inclusive. Symmetrically, left-cover-inclusive implies right-cover-inclusive, and hence cover-inclusive. \(\square \)

We shall use these equivalent definitions of cover-inclusiveness, often without comment, in what follows. We also observe the following property of cover-inclusive Dyck tilings, which we shall use without comment.

Lemma 3.2

Suppose T is a cover-inclusive Dyck tiling of \(\lambda \backslash \mu \). If \({\mathfrak {n}}\in \lambda \backslash \mu \) is the highest node in its column in \(\lambda \backslash \mu \), then every node in \({\text {tile}}({\mathfrak {n}})\) is the highest node in its column in \(\lambda \backslash \mu \).

Proof

Suppose not, and take \({\mathfrak {m}}\in {\text {tile}}({\mathfrak {n}})\) which is not the highest node in its column. Without loss of generality we may assume \({\mathfrak {m}}\) lies in the column immediately to the right of \({\mathfrak {n}}\), i.e. \({\mathfrak {m}}\) is either \(\mathtt {NE}({\mathfrak {n}})\) or \(\mathtt {SE}({\mathfrak {n}})\). But if \({\mathfrak {m}}=\mathtt {NE}({\mathfrak {n}})\), then the assumption \(\mathtt {N}({\mathfrak {m}})\in \lambda \backslash \mu \) means that \(\mathtt {N}({\mathfrak {n}})\in \lambda \backslash \mu \) (otherwise \(\lambda \backslash \mu \) would not be a skew Young diagram), a contradiction. So assume \({\mathfrak {m}}=\mathtt {SE}({\mathfrak {n}})\). But now \({\mathfrak {m}}\) is attached to \(\mathtt {NW}({\mathfrak {m}})\) and \(\mathtt {N}({\mathfrak {m}})\in \lambda \backslash \mu \), so by Theorem 3.1(4) \(\mathtt {N}({\mathfrak {n}})\in \lambda \backslash \mu \), contradiction. \(\square \)

3.2 Recurrences

Now we consider recurrences. We start with a simple result.

Proposition 3.3

Suppose \(\lambda \) and \(\mu \) are partitions and \(j\in {\mathbb {Z}}\), and that \(\mu \) has an addable node \({\mathfrak {m}}\) in column j, but \(\lambda \) does not have an addable node in column j. Let \(\mu ^+=\mu \cup \{{\mathfrak {m}}\}\). Then \({\text {i}}_{\lambda \mu }={\text {i}}_{\lambda \mu ^+}\).

Proof

The fact that \(\lambda \) does not have an addable node in column j implies that \(\lambda \supseteq \mu \) if and only if \(\lambda \supseteq \mu ^+\), so we may as well assume that both of these conditions hold. Given a cover-inclusive Dyck tiling of \(\lambda \backslash \mu ^+\), we can obtain a tiling of \(\lambda \backslash \mu \) simply by adding the singleton tile \(\{{\mathfrak {m}}\}\), and it is clear that this tiling is a cover-inclusive Dyck tiling.

In the other direction, suppose T is a cover-inclusive Dyck tiling of \(\lambda \backslash \mu \); then we claim that \({\mathfrak {m}}\) forms a singleton tile. If we let \({\mathfrak {n}}\) denote the highest node in column j of \(\lambda \backslash \mu \), then (since \(\lambda \) does not have an addable node in column j) \(\mathtt {NE}({\mathfrak {n}})\) and \(\mathtt {NW}({\mathfrak {n}})\) are not both nodes of \(\lambda \backslash \mu \); suppose without loss that \(\mathtt {NE}({\mathfrak {n}})\notin \lambda \backslash \mu \). Then in particular \({\mathfrak {n}}\) is not attached to \(\mathtt {NE}({\mathfrak {n}})\) in T, and so [using Theorem 3.1(7)] \({\mathfrak {m}}\) is not attached to \(\mathtt {NE}({\mathfrak {m}})\). \({\mathfrak {m}}\) cannot be attached to \(\mathtt {SE}({\mathfrak {m}})\) or \(\mathtt {SW}({\mathfrak {m}})\) (since these are not nodes of \(\lambda \backslash \mu \)), and a node in a Dyck tile cannot be attached only to its NW neighbour. So \({\mathfrak {m}}\) is not attached to any of its neighbours, i.e. \(\{{\mathfrak {m}}\}\) is a singleton tile as claimed. We can remove this tile, and we clearly obtain a cover-inclusive Dyck tiling of \(\lambda \backslash \mu ^+\). So we have a bijection between \({\mathscr {I}}(\lambda ,\mu )\) and \({\mathscr {I}}(\lambda ,\mu ^+)\). \(\square \)

Now we prove a more complicated result, for which it will help us to fix some notation.

Note that if \(T\in {\mathscr {I}}(\lambda ,\mu ^+)\), then there must be at least one tile starting in column \(j+1\), since there are more nodes in column \(j+1\) of \(\lambda \backslash \mu ^+\) than in column j; so \({\scriptstyle \overrightarrow{\displaystyle T}}\) is well-defined, and similarly \({\scriptstyle \overleftarrow{\displaystyle T}}\) is well-defined.

To prove our main recurrence result for the numbers \({\text {i}}_{\lambda \mu }\), we give three results in which we construct bijections between sets of cover-inclusive Dyck tilings. The first of these is as follows; recall the definition of \(X_\lambda \) from Sect. 2.2.

Proposition 3.4

Proof

Note first that if \(\lambda ^+\nsupseteq \mu ^+\) then \(\lambda \nsupseteq \mu ^+\), so both sides equal zero. Conversely, if \(\lambda \nsupseteq \mu ^+\), then either \(\lambda ^+\nsupseteq \mu ^+\) or there are no nodes in column j of \(\lambda ^+\backslash \mu ^+\), so again both sides are zero. So we may assume that \(\lambda \supseteq \mu ^+\). We’ll construct a bijection

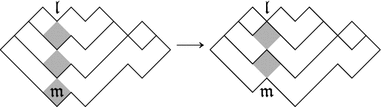

We begin by giving an example so that the reader can see how the bijection works; in this example, the tiling on the left lies in \(\left\{ \smash {T\in {\mathscr {I}}(\lambda ,\mu ^+)}\ \left| \ {\scriptstyle \overrightarrow{\displaystyle T}}\notin X_{\lambda }\right. \right\} \), and the tiling on the right is its image under \(\phi _1\). The nodes \({\mathfrak {l}}\) and \({\mathfrak {m}}\) are marked, the nodes in column j are shaded, and the tile t (introduced below) is marked with dots.

Given T in \({\mathscr {I}}(\lambda ,\mu ^+)\) with \({\scriptstyle \overrightarrow{\displaystyle T}}\notin X_{\lambda }\), let t be the highest tile in T starting in column \(j+1\), and let A be the set of nodes in column j which are higher than \({\text {st}}(t)\). No node in column \(j+1\) can be attached to its NW neighbour, because the highest node in this column [namely \(\mathtt {SE}({\mathfrak {l}})\)] is not. So (by the choice of t) every node \({\mathfrak {b}}\) higher than \({\text {st}}(t)\) in column \(j+1\) is attached to \(\mathtt {SW}({\mathfrak {b}})\), and hence each \({\mathfrak {a}}\in A\) is attached to \(\mathtt {NE}({\mathfrak {a}})\). In a Dyck tiling a node cannot be attached only to its NE neighbour, so each \({\mathfrak {a}}\in A\) is attached to either its NW or SW neighbour. But a node in column \(j-1\) cannot be attached to its NE neighbour (because the highest node in column \(j-1\) is not), so every \({\mathfrak {a}}\in A\) is attached to \(\mathtt {NW}({\mathfrak {a}})\).

Now we consider columns x and \(x+1\), where \(x={\scriptstyle \overrightarrow{\displaystyle T}}+j=|t|+j\). Let \({\mathfrak {d}}=\mathtt {SE}({\mathfrak {l}})\). Then \({\mathfrak {d}}={\text {st}}(t)+(h,h)\) for some \(h\geqslant 0\), so by Theorem 3.1(3) \({\text {tile}}({\mathfrak {d}})\) contains \(t+(h,h)\). In particular, \({\text {tile}}({\mathfrak {d}})\) includes the node \({\mathfrak {e}}={\text {en}}(t)+(h,h)\) in column x, and (if \({\text {st}}(t)\ne {\text {en}}(t)\)) includes \(\mathtt {SW}({\mathfrak {e}})\). Since \({\mathfrak {d}}\) is the highest node in its column, the same is true for every node in \({\text {tile}}({\mathfrak {d}})\), and in particular neither \(\mathtt {N}({\mathfrak {e}})\) nor \(\mathtt {NW}({\mathfrak {e}})\) is a node of \(\lambda \). However, \({\mathfrak {e}}\) cannot be a removable node of \(\lambda \), since then \({\scriptstyle \overrightarrow{\displaystyle T}}=|t|\) would lie in \(X_{\lambda }\). So \(\mathtt {NE}({\mathfrak {e}})\) is a node of \(\lambda \backslash \mu \). Now \(\mathtt {NE}({\mathfrak {e}})\) is not attached to its NW neighbour (since this is \(\mathtt {N}({\mathfrak {e}})\notin \lambda \)), so no node in column \(x+1\) is attached to its NW neighbour, and so no node in column x is attached to its SE neighbour. This implies that any node in column x of positive depth must be attached to its NE neighbour, while any node in column x of depth 0 is the end node of its tile, and this tile has a NE neighbour. In particular, t has a NE neighbour.

Now suppose \({\mathfrak {a}}\in A\) has depth 1, and write \({\mathfrak {a}}={\text {st}}(t)+(i,i-1)\) for \(i>0\). Then \({\text {tile}}({\mathfrak {a}})\) contains \({\text {st}}(t)+(i,i)\), and hence contains \({\text {en}}(t)+(i,i)\). Since \({\mathfrak {a}}\) has depth 1, \({\text {en}}(t)+(i,i)\) has depth 0, and so from the last paragraph \({\text {en}}(t)+(i,i)\) is the end node of \({\text {tile}}({\mathfrak {a}})\), and \({\text {tile}}({\mathfrak {a}})\) has a NE neighbour.

Now we can construct \(\phi _1(T)\) as follows.

-

For each \({\mathfrak {a}}\in A\) of depth at least 2, change \({\text {tile}}({\mathfrak {a}})\) by replacing \({\mathfrak {a}}\) with \(\mathtt {N}({\mathfrak {a}})\).

-

For each \({\mathfrak {a}}\in A\) of depth 1, let \(u={\text {tile}}({\mathfrak {a}})\). Then u has a NE neighbour v; replace u and v with the following tiles:

-

the tile consisting of the portion of u ending at \(\mathtt {NW}({\mathfrak {a}})\);

-

the tile obtained by combining \(\mathtt {N}({\mathfrak {a}})\), the portion of u starting at \(\mathtt {NE}({\mathfrak {a}})\), and v.

-

-

Finally, let w denote the NE neighbour of t, and replace t and w with the tile obtained by joining \(\mathtt {NW}({\text {st}}(t))\) to t and w.

Clearly all these new tiles are Dyck tiles. It is also clear that the last tile mentioned is a big tile starting in column j. So to see that \(\phi _1(T)\) lies in the codomain, all that remains is to check that \(\phi _1(T)\) is cover-inclusive. But this is easy using (for example) Theorem 3.1(4).

To show that \(\phi _1\) is a bijection, we construct the inverse map

Suppose T is a cover-inclusive Dyck tiling of \(\lambda ^+\backslash \mu ^+\) in which there is at least one big tile starting in column j. Since the highest node in column j [namely \({\mathfrak {l}}\)] is not attached to its NE or NW neighbour, no node in column j is attached to its NE or NW neighbour. So from top to bottom, column j of T consists of:

-

(possibly) some nodes attached to both their SE and SW neighbours;

-

at least one node attached only to its SE neighbour;

-

(possibly) some singleton tiles.

Construct \(\psi _1(T)\) as follows.

-

For each node \({\mathfrak {a}}\) in column j which is attached to its SE and SW neighbours, change \({\text {tile}}({\mathfrak {a}})\) by replacing \({\mathfrak {a}}\) with \(\mathtt {S}({\mathfrak {a}})\).

-

For each node \({\mathfrak {a}}\) in column j which is the start of a big tile, let \({\mathfrak {b}}\) be the first node of \({\text {tile}}({\mathfrak {a}})\) to the right of column j which has the same height as \({\mathfrak {a}}\). Replace \({\text {tile}}({\mathfrak {a}})\) and \({\text {tile}}(\mathtt {SW}({\mathfrak {a}}))\) with the following tiles:

-

the tile consisting of the portion of \({\text {tile}}({\mathfrak {a}})\) starting at \({\mathfrak {b}}\);

-

(if \({\text {tile}}({\mathfrak {a}})\) is the lowest big tile of T starting in column j) the tile consisting of the portion of \({\text {tile}}({\mathfrak {a}})\) running from \(\mathtt {SE}({\mathfrak {a}})\) to \(\mathtt {SW}({\mathfrak {b}})\);

-

(if \({\text {tile}}({\mathfrak {a}})\) is not the lowest big tile of T starting in column j) the tile obtained by joining together \({\text {tile}}(\mathtt {SW}({\mathfrak {a}}))\), the node \(\mathtt {S}({\mathfrak {a}})\) and the portion of \({\text {tile}}({\mathfrak {a}})\) running from \(\mathtt {SE}({\mathfrak {a}})\) to \(\mathtt {SW}({\mathfrak {b}})\).

-

Again, it is easy to check that the new tiles are all Dyck tiles, and checking that \(\psi _1(T)\) is cover-inclusive is straightforward using Theorem 3.1(4).

So \(\psi _1(T)\) is a cover-inclusive Dyck tiling. It remains to check that \({\scriptstyle \overrightarrow{\displaystyle \psi _1(T)}}\notin X_{\lambda }\). Let t be the highest tile in \(\psi _1(T)\) starting in column \(j+1\); then t starts at \(\mathtt {SE}({\mathfrak {a}})\), where \({\mathfrak {a}}\) is the lowest node in column j which is the start of a big tile in T, and ends at \(\mathtt {SW}({\mathfrak {b}})\), where \({\mathfrak {b}}\) is the first node in \({\text {tile}}({\mathfrak {a}})\) to the right of column j with the same height as \({\mathfrak {a}}\). In T, \(\mathtt {SW}({\mathfrak {b}})\) is attached to \({\mathfrak {b}}\), and so every node above \(\mathtt {SW}({\mathfrak {b}})\) in the same column is attached to its NE neighbour in T. In particular, the highest node in this column has a NE neighbour in \(\lambda \), and so is not removable; so \({\scriptstyle \overrightarrow{\displaystyle \psi _1(T)}}\notin X_{\lambda }\).

So our two maps \(\phi _1,\psi _1\) really do map between the specified sets. It is easy to see from the construction that they are mutual inverses. \(\square \)

Symmetrically, we have the following result.

Proposition 3.5

Our next bijective result is the following.

Proposition 3.6

For each \(x\in X_{\lambda }^+\),

Proof

Fix \(x\in X_{\lambda }^+\). Our aim is to define a bijection

Again we begin with an example to illustrate the bijection. In this example \(x=5\), and the nodes in columns j and \(j+x+1\) are shaded, and the tiles \(\rho \) and t (introduced below) are marked with dots.

Since \(x\in X_{\lambda }^+\), there is a Dyck tile \(\rho \subset \lambda \) consisting of the highest nodes in columns \(j+1,\ldots ,j+x\) of \(\lambda \). Take \(T\in {\mathscr {I}}(\lambda ,\mu ^+)\) with \({\scriptstyle \overrightarrow{\displaystyle T}}=x\), and let t be the highest tile in T starting in column \(j+1\). Then every northward translate of t is an interval in a tile; in particular, \({\text {tile}}(\mathtt {SE}({\mathfrak {l}}))\) contains a translate \(t+(h,h)\) for some \(h\geqslant 0\); since \(\mathtt {SE}({\mathfrak {l}})\) is the highest node in its column, the same is true for every node in \({\text {tile}}(\mathtt {SE}({\mathfrak {l}}))\), so the translate \(t+(h,h)\) coincides with \(\rho \).

Consider the nodes in column j of \(\lambda \backslash \mu ^+\). Arguing as in the proof of Proposition 3.4, every node \({\mathfrak {a}}\) in column j which is higher than t is attached to \(\mathtt {NE}({\mathfrak {a}})\) and \(\mathtt {NW}({\mathfrak {a}})\).

Next we consider nodes in column \(j+x\). The highest node in column \(j+x\) of \(\lambda \backslash \mu ^+\) is the end node of \(\rho \), and so is not attached to its NE neighbour (since this node is not in \(\lambda \)); hence no node in column \(j+x\) is attached to its NE neighbour. So no node in column \(j+x+1\) is attached to its SW neighbour. So from top to bottom, the nodes in column \(j+x+1\) higher than \({\text {en}}(t)\) comprise:

-

(possibly) some nodes attached to their NW neighbours;

-

(possibly) some nodes attached to neither their NW nor SW neighbours.

Now we can construct \(\phi _2(T)\) by doing the following, for each pair \(({\mathfrak {a}},{\mathfrak {d}})\) of nodes with \({\mathfrak {a}}\) in column j, \({\mathfrak {d}}\) in column \(j+x+1\) and \({\text {ht}}({\mathfrak {a}})={\text {ht}}({\mathfrak {d}})>{\text {ht}}(t)\).

-

If \({\mathfrak {d}}\) is attached to \(\mathtt {NW}({\mathfrak {d}})\), then \({\mathfrak {a}}\) and \({\mathfrak {d}}\) lie in the same tile in T; change this tile by moving the portion lying in columns \(j+1\) to \(j+x\) south one step.

-

If \({\mathfrak {d}}\) is not attached to \(\mathtt {NW}({\mathfrak {d}})\), then \({\text {en}}({\text {tile}}({\mathfrak {a}}))=\mathtt {NW}({\mathfrak {d}})\) and hence \(\mathtt {NW}({\mathfrak {a}})\) has depth 0. Replace \({\text {tile}}({\mathfrak {a}})\) and \({\text {tile}}({\mathfrak {d}})\) with the following two tiles:

-

the portion of \({\text {tile}}({\mathfrak {a}})\) ending at \(\mathtt {NW}({\mathfrak {a}})\);

-

the tile obtained by joining the portion of \({\text {tile}}(\mathtt {SE}({\mathfrak {a}}))\) between columns \(j+1\) and \(j+x\) to \({\mathfrak {a}}\) and \({\text {tile}}({\mathfrak {d}})\).

-

Finally remove t. It is easy to see that the new tiles are Dyck tiles, and the cover-inclusive property follows very easily from the cover-inclusive property for T.

Now we construct the inverse map

Suppose T is a cover-inclusive Dyck tiling of \(\lambda ^{[x]}\backslash \mu ^+\). No node in column \(j-1\) or column j of \(\lambda ^{[x]}\) can be attached to its NE neighbour (since the NE neighbours of the highest nodes in these columns are not nodes of \(\lambda ^{[x]}\)). Hence from top to bottom the nodes in column j comprise:

-

(possibly) some nodes attached to their NW and SE neighbours;

-

(possibly) some nodes attached only to their SE neighbours;

-

(possibly) some singleton nodes.

Note that if \({\mathfrak {a}}\) is a node in column j which is not attached to \(\mathtt {NW}({\mathfrak {a}})\), then \(\mathtt {NW}({\mathfrak {a}})\) is the end node of its tile; in particular, \({\text {tile}}({\mathfrak {a}})\) has a NW neighbour.

Claim

Suppose \(r\geqslant 1\), and the node \({\mathfrak {a}}:={\mathfrak {l}}-(r,r)\) is attached to \(\mathtt {SE}({\mathfrak {a}})\). Then \({\text {tile}}({\mathfrak {a}})\) reaches column \(j+x+1\) and includes all the nodes in \(\rho -(r,r)\).

Proof

We proceed by induction on r. First suppose \(r=1\). Since \(x\in X_{\lambda }\), every node in columns \(j+1,\ldots ,j+x\) of \(\lambda \) has height less than \({\text {ht}}({\mathfrak {l}})\). Hence, every node in columns \(j+1,\ldots ,j+x\) of \(\lambda ^{[x]}\) has height less than \({\text {ht}}({\mathfrak {l}})-2={\text {ht}}({\mathfrak {a}})\); since \({\text {tile}}({\mathfrak {a}})\) is a Dyck tile, it must reach \({\text {ht}}({\mathfrak {a}})\) at some point to the right of \(\mathtt {SE}({\mathfrak {a}})\), and so must reach column \(x+j+1\). Furthermore, \({\mathfrak {a}}\) is the highest node in its column, so every node in \({\text {tile}}({\mathfrak {a}})\) is the highest in its column, and in particular the portion of \({\text {tile}}({\mathfrak {a}})\) between columns \(j+1\) and \(j+x\) must consist of the highest nodes in these columns, i.e. the nodes in \(\rho -(1,1)\).

Now suppose \(r>1\). By induction \({\text {tile}}({\mathfrak {a}})\) cannot include any of the nodes in \(\rho -(v,v)\) for any \(v<r\); all the remaining nodes in columns \(j+1,\ldots ,j+r\) have height less than \({\text {ht}}({\mathfrak {a}})\), and so (since \({\text {tile}}({\mathfrak {a}})\) must reach \({\text {ht}}({\mathfrak {a}})\) at some point to the right of \(\mathtt {SE}({\mathfrak {a}})\)) \({\text {tile}}({\mathfrak {a}})\) must reach column \(j+x+1\). The translate \({\text {tile}}({\mathfrak {a}})+(1,1)\) is contained in \({\text {tile}}(\mathtt {N}({\mathfrak {a}}))\), and by induction this includes the nodes in \(\rho -(r-1,r-1)\); so \({\text {tile}}({\mathfrak {a}})\) includes the nodes in \(\rho -(r,r)\).

Now we consider the nodes in column \(j+x+1\). If \({\mathfrak {d}}\) is a node in column \(j+x+1\), then by similar arguments to those used above, \({\mathfrak {d}}\) cannot be attached to \(\mathtt {NW}({\mathfrak {d}})\), and if \({\mathfrak {d}}\) is attached to \(\mathtt {SW}({\mathfrak {d}})\), then \({\text {tile}}({\mathfrak {d}})\) includes a node in column j and also includes all the nodes in \(\rho -(r,r)\), where \(r=\frac{1}{2}({\text {ht}}({\mathfrak {l}})-{\text {ht}}({\mathfrak {d}}))\).

So we find that if \({\mathfrak {a}}\) and \({\mathfrak {d}}\) are nodes in columns j and \(j+x+1\), respectively, with the same height, then \({\mathfrak {a}}\) is attached to \(\mathtt {SE}({\mathfrak {a}})\) if and only if \({\mathfrak {d}}\) is attached to \(\mathtt {SW}({\mathfrak {d}})\), and that in this case \({\text {tile}}({\mathfrak {a}})={\text {tile}}({\mathfrak {d}})\). Say that such a pair \(({\mathfrak {a}},{\mathfrak {d}})\) is a connected pair. Now we can construct \(\psi _2(T)\) as follows: for each connected pair \(({\mathfrak {a}},{\mathfrak {d}})\), write \({\mathfrak {a}}={\mathfrak {l}}-(r,r)\) and then:

-

if \({\mathfrak {a}}\) is attached to \(\mathtt {NW}({\mathfrak {a}})\), change \({\text {tile}}({\mathfrak {a}})\) by replacing the portion \(\rho -(r,r)\) with \(\rho -(r-1,r-1)\);

-

if \({\mathfrak {a}}\) is not attached to \(\mathtt {NW}({\mathfrak {a}})\), replace \({\text {tile}}(\mathtt {NW}({\mathfrak {a}}))\) and \({\text {tile}}({\mathfrak {a}})\) with the following two tiles:

-

the tile comprising \({\mathfrak {a}}\), \({\text {tile}}(\mathtt {NW}({\mathfrak {a}}))\) and \(\rho -(r-1,r-1)\);

-

the portion of \({\text {tile}}({\mathfrak {a}})\) starting at \({\mathfrak {d}}\).

-

Finally, add the tile \(\rho -(r,r)\), where r is the number of connected pairs.

Once more, we see that the new tiles are all Dyck tiles, and the cover-inclusive property is easy to check from the construction. Furthermore, the last tile mentioned above is the highest tile starting in column \(j+1\), and has size x.

So \(\psi _2\) really does map \({\mathscr {I}}(\lambda ^{[x]},\mu ^+)\) to \(\left\{ \left. T\in {\mathscr {I}}(\lambda ,\mu ^+)\ \right| \ \smash {{\scriptstyle \overrightarrow{\displaystyle T}}=x}\right\} \). And it is easy to see that \(\phi _2\) and \(\psi _2\) are mutual inverses. \(\square \)

Symmetrically, we have the following.

Proposition 3.7

For each \(x\in X_{\lambda }^-\),

Our third bijection involves the partition \({\lambda ^+}\).

Proposition 3.8

Proof

First observe that \(\lambda \supseteq \mu \) if and only if \(\lambda ^+\supseteq \mu ^+\), so we may as well assume that both these conditions hold. Next note that if \({\mathfrak {l}}={\mathfrak {m}}\), then \(\lambda \backslash \mu =\lambda ^+\backslash \mu ^+\), and this skew Young diagram contains no nodes in column j; in particular, it does not include \({\mathfrak {m}}\). So in this case, Proposition 3.8 amounts to the trivial statement \({\text {i}}_{\lambda \mu }={\text {i}}_{\lambda ^+\mu ^+}\).

So we assume that \(\lambda \supset \mu \) and \({\mathfrak {l}}\ne {\mathfrak {m}}\); so \({\mathfrak {m}}\) is a node of \(\lambda \backslash \mu \). Now we want to construct a bijection

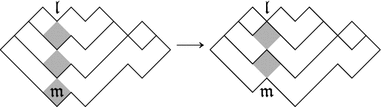

The following diagrams gives an illustrative example of how our bijection works; the nodes in the same column as \({\mathfrak {l}}\) and \({\mathfrak {m}}\) are shaded to make it easier to see the effect on these nodes.

Suppose we have \(T\in {\mathscr {I}}(\lambda ,\mu )\) with \({\mathfrak {m}}\) lying in a big tile. Then \({\mathfrak {m}}\) is attached to both \(\mathtt {NE}({\mathfrak {m}})\) and \(\mathtt {NW}({\mathfrak {m}})\), and hence every node \({\mathfrak {a}}\) in column j is attached to both \(\mathtt {NE}({\mathfrak {a}})\) and \(\mathtt {NW}({\mathfrak {a}})\). In particular, every node in column j has depth at least 1. Now we construct \(\phi _3(T)\) from T as follows. For each node \({\mathfrak {a}}\) in column j:

-

if \({\mathfrak {a}}\) has depth greater than 1, then change \({\text {tile}}({\mathfrak {a}})\) by replacing \({\mathfrak {a}}\) with \(\mathtt {N}({\mathfrak {a}})\);

-

if \({\mathfrak {a}}\) has depth 1, then replace \({\text {tile}}({\mathfrak {a}})\) with the following three tiles:

-

the portion of \({\text {tile}}({\mathfrak {a}})\) ending in column \(j-1\);

-

the portion of \({\text {tile}}({\mathfrak {a}})\) starting in column \(j+1\);

-

the singleton tile \(\{\mathtt {N}({\mathfrak {a}})\}\).

-

Clearly all these new tiles are Dyck tiles. The cover-inclusive property follows easily from the corresponding property of T, and in particular the fact [Theorem 3.1(2)] that depth weakly decreases down columns in a cover-inclusive tiling. Moreover, \(\phi _3(T)\) has no big tile starting or ending in column j, so lies in the codomain.

To show that \(\phi _3\) is a bijection, we construct its inverse

Suppose \(T\in {\mathscr {I}}(\lambda ^+,\mu ^+)\), and that there is no big tile in T starting or ending in column j. The highest node in column j of \(\lambda ^+\backslash \mu ^+\), namely \({\mathfrak {l}}\), is not attached to \(\mathtt {NE}({\mathfrak {l}})\) or \(\mathtt {NW}({\mathfrak {l}})\) (since these are not nodes of \({\lambda ^+}\)) and so no node in column j is attached to its NE or NW neighbour. So every node in column j is either a singleton or attached to both its SE and SW neighbours. Now we can construct \(\psi _3(T)\) from T as follows: for each node \({\mathfrak {a}}\) in column j, replace the tiles \({\text {tile}}({\mathfrak {a}})\), \({\text {tile}}(\mathtt {SW}({\mathfrak {a}}))\), \({\text {tile}}(\mathtt {SE}({\mathfrak {a}}))\) (which might or might not coincide) with the tile obtained by taking the union of these three tiles and replacing \({\mathfrak {a}}\) with \(\mathtt {S}({\mathfrak {a}})\).

Again, it is easy to check that \(\psi _3(T)\) is a cover-inclusive Dyck tiling, and clearly \({\mathfrak {m}}\) lies in a big tile in \(\psi _3(T)\). Furthermore, it is easy to see that \(\phi _3\) and \(\psi _3\) are mutually inverse. \(\square \)

Now we combine Propositions 3.4–3.8 to prove our main recurrence. We retain the assumptions and notation from above.

Our main result is as follows. Recall that \({\text {i}}_{\lambda \mu }\) denotes the total number of cover-inclusive Dyck tilings of \(\lambda \backslash \mu \).

Proposition 3.9

With notation as above,

Proof

By Proposition 3.6, we have

So

by Proposition 3.4.

Symmetrically, we have

Now consider \({\text {i}}_{\lambda \mu }\). Obviously we have

and by Proposition 3.8

Hence

Since a cover-inclusive Dyck tiling cannot contain big tiles starting and ending in the same column, the sum of the last three terms is \({\text {i}}_{\lambda ^+\mu ^+}\), and we are done. \(\square \)

4 Cover-expansive Dyck tilings

In this section we consider cover-expansive Dyck tilings, proving similar (though considerably simpler) recurrences to those in Sect. 3.

4.1 Basic properties

We begin by studying left- and right-cover-expansive Dyck tilings. In contrast to cover-inclusive Dyck tilings, it is not the case that the left-cover-expansive and right-cover-expansive conditions are equivalent. For example, the unique Dyck tiling of \((2)\backslash \varnothing \) is left- but not right-cover-expansive.

We begin with equivalent conditions to the left- and right-cover-expansive conditions.

Proposition 4.1

Suppose \(\lambda \) and \(\mu \) are partitions with \(\lambda \supseteq \mu \), and T is a Dyck tiling of \(\lambda \backslash \mu \).

-

1.

T is left-cover-expansive if and only if for every tile t in T, we have \(\mathtt {NW}({\text {st}}(t))\notin \lambda \backslash \mu \).

-

2.

T is right-cover-expansive if and only if for every tile t in T, we have \(\mathtt {NE}({\text {en}}(t))\notin \lambda \backslash \mu \).

Proof

We prove (1); the proof of (2) is similar.

Suppose T is left-cover-expansive. Given a tile t, let \({\mathfrak {a}}=\mathtt {NW}({\text {st}}(t))\). If \({\mathfrak {a}}\in \lambda \backslash \mu \), then by the left-cover-expansive property \({\text {st}}({\text {tile}}({\mathfrak {a}}))\) lies weakly to the right of \({\text {st}}(t)\); but \({\mathfrak {a}}\) lies strictly to the left of \({\text {st}}(t)\), a contradiction.

Conversely, suppose the given property holds, and \({\mathfrak {a}},\mathtt {SE}({\mathfrak {a}})\) are nodes of \(\lambda \backslash \mu \). Let \({\mathfrak {b}}={\text {st}}({\text {tile}}(\mathtt {SE}({\mathfrak {a}})))\), and suppose \({\mathfrak {b}}\) lies in column i. Then \(\mathtt {NW}({\mathfrak {b}})\notin \lambda \backslash \mu \), and hence there are no nodes in column \(i-1\) of \(\lambda \backslash \mu \) higher than \({\mathfrak {b}}\) (otherwise \(\lambda \backslash \mu \) would not be a skew Young diagram). In particular, there are no nodes of \({\text {tile}}({\mathfrak {a}})\) in column \(i-1\), and hence \({\text {st}}({\text {tile}}({\mathfrak {a}}))\) lies weakly to the right of \({\mathfrak {b}}\). \(\square \)

Lemma 4.2

Suppose \(\lambda ,\mu \) are partitions with \(\lambda \supseteq \mu \), and T is a left-cover-expansive Dyck tiling of \(\lambda \backslash \mu \). If \({\mathfrak {a}}\in \lambda \backslash \mu \) is the lowest node in its column, then every node in \({\text {tile}}({\mathfrak {a}})\) to the right of \({\mathfrak {a}}\) is the lowest node in its column.

Proof

Suppose \({\mathfrak {a}}\) lies in column i, and proceed by induction on the number of nodes to the right of \({\mathfrak {a}}\) in \({\text {tile}}({\mathfrak {a}})\). Assuming \({\mathfrak {a}}\) is not the end node of its tile, there is a node \({\mathfrak {b}}\) in \({\text {tile}}({\mathfrak {a}})\) in column \(i+1\), which must be either \(\mathtt {NE}({\mathfrak {a}})\) or \(\mathtt {SE}({\mathfrak {a}})\). The only way \({\mathfrak {b}}\) can fail to be the lowest node in column \(i+1\) is if \({\mathfrak {b}}=\mathtt {NE}({\mathfrak {a}})\) and \(\mathtt {SE}({\mathfrak {a}})\in \lambda \backslash \mu \). But in this case, \(\mathtt {SE}({\mathfrak {a}})\) is not attached to \({\mathfrak {a}}\) or to \(\mathtt {S}({\mathfrak {a}})\) (which is not a node of \(\lambda \backslash \mu \)), and so \(\mathtt {SE}({\mathfrak {a}})\) is the start of its tile; but this contradicts Proposition 4.1.

So \({\mathfrak {b}}\) is the lowest node in its column. By induction every node to the right of \({\mathfrak {b}}\) in the same tile is the lowest node in its column, and we are done. \(\square \)

Proposition 4.3

Suppose \(\lambda ,\mu \) are partitions with \(\lambda \supseteq \mu \). Then \(\lambda \backslash \mu \) admits at most one left-cover-expansive Dyck tiling, and at most one right-cover-expansive Dyck tiling. If \(\lambda \backslash \mu \) admits both a left- and a right-cover-expansive Dyck tiling, then these tilings coincide.

Proof

We use induction on \(|\lambda \backslash \mu |\). If \(\lambda =\mu \) then the result is trivial, so assume \(\lambda \supset \mu \). Let \({\mathfrak {a}}\) be the unique leftmost node of \(\lambda \backslash \mu \), and suppose \({\mathfrak {a}}\) lies in column i. Let \(m\geqslant 0\) be maximal such that columns \(i,\ldots ,i+m\) each contain a node of height at most \({\text {ht}}({\mathfrak {a}})\). Suppose T is a left-cover-expansive Dyck tiling of \(\lambda \backslash \mu \).

Claim 1

\({\text {tile}}({\mathfrak {a}})\) consists of the lowest nodes in columns \(i,\ldots ,i+m\).

Proof

Since \({\mathfrak {a}}\) is the start node of its tile, every node in \({\text {tile}}({\mathfrak {a}})\) has height at most \({\text {ht}}({\mathfrak {a}})\), and in particular \({\text {tile}}({\mathfrak {a}})\) cannot contain a node in column \(i+m+1\) or further to the right.

Now we prove by induction on l that \({\text {tile}}({\mathfrak {a}})\) contains the lowest node in column l, for \(i\leqslant l\leqslant i+m\). Let \({\mathfrak {b}}\) be the lowest node in column l; then (assuming \(l>i\)) the lowest node in column \(l-1\) is either \(\mathtt {NW}({\mathfrak {b}})\) or \(\mathtt {SW}({\mathfrak {b}})\). In the first case, \({\mathfrak {b}}\) cannot be the start of its tile, by Proposition 4.1; \({\mathfrak {b}}\) cannot be attached to \(\mathtt {SW}({\mathfrak {b}})\), since this is not a node of \(\lambda \backslash \mu \), and so \({\mathfrak {b}}\) is attached to \(\mathtt {NW}({\mathfrak {b}})\), and hence lies in \({\text {tile}}({\mathfrak {a}})\). In the second case, the fact that \({\text {ht}}(\mathtt {SW}({\mathfrak {b}}))<{\text {ht}}({\mathfrak {b}})\leqslant {\text {ht}}({\mathfrak {a}})\) means that \(\mathtt {SW}({\mathfrak {b}})\) cannot be the end node of \({\text {tile}}({\mathfrak {a}})\), so is attached to either \({\mathfrak {b}}\) or \(\mathtt {S}({\mathfrak {b}})\); but \(\mathtt {S}({\mathfrak {b}})\) is not a node of \(\lambda \backslash \mu \), and hence \(\mathtt {SW}({\mathfrak {b}})\) is attached to \({\mathfrak {b}}\), i.e. \({\mathfrak {b}}\in {\text {tile}}({\mathfrak {a}})\).

The definition of m means that the nodes in \({\text {tile}}({\mathfrak {a}})\) can be removed to leave a smaller skew Young diagram \(\lambda \backslash \nu \), and the fact that T is left-cover-expansive means that \(T\setminus \{{\text {tile}}({\mathfrak {a}})\}\) is a left-cover-expansive Dyck tiling of \(\lambda \backslash \nu \). By induction on \(|\lambda \backslash \mu |\) there is at most one such tiling, and so T is uniquely determined.

So there is at most one left-cover-expansive Dyck tiling of \(\lambda \backslash \mu \), and similarly at most one right-cover-expansive Dyck tiling. To prove the final statement, we continue to assume that \(\lambda \backslash \mu \) is non-empty; we choose a connected component \({\mathcal {C}}\) of \(\lambda \backslash \mu \), and let \({\mathfrak {a}}\) denote the unique leftmost node of \({\mathcal {C}}\), and \({\mathfrak {c}}\) the unique rightmost node of \({\mathcal {C}}\). (So there is no node in the column to the left of the column containing \({\mathfrak {a}}\) or in the column to the right of the column containing \({\mathfrak {c}}\), but there are nodes in all columns in between.)

Claim 2

Suppose there exists a left-cover-expansive Dyck tiling T of \(\lambda \backslash \mu \). Then \({\text {ht}}({\mathfrak {a}})\leqslant {\text {ht}}({\mathfrak {c}})\), and if equality occurs then \({\mathfrak {a}}\) and \({\mathfrak {c}}\) lie in the same tile in T.

Proof

Let \({\mathfrak {b}}_1,\ldots ,{\mathfrak {b}}_r\) be the nodes in \({\mathcal {C}}\) which are both the end nodes of their tiles and the lowest nodes in their columns, numbering them so that they appear in order from left to right. Then \({\mathfrak {b}}_1={\text {en}}({\text {tile}}({\mathfrak {a}}))\) by Claim 1, and \({\mathfrak {b}}_r={\mathfrak {c}}\). We claim that \({\text {ht}}({\mathfrak {b}}_1)<\cdots <{\text {ht}}({\mathfrak {b}}_r)\). Given \(1\leqslant l<r\), let \({\mathfrak {d}}\) be the lowest node in the column to the right of \({\mathfrak {b}}_l\). Then \({\mathfrak {d}}\) is either \(\mathtt {NE}({\mathfrak {b}}_l)\) or \(\mathtt {SE}({\mathfrak {b}}_l)\); but in the latter case, \({\mathfrak {d}}\) must be the start node of its tile, and this contradicts Theorem 3.1. So \({\mathfrak {d}}=\mathtt {NE}({\mathfrak {b}}_l)\), and in particular \({\text {ht}}({\mathfrak {d}})>{\text {ht}}({\mathfrak {b}}_l)\). By Lemma 4.2 \({\text {en}}({\text {tile}}({\mathfrak {d}}))={\mathfrak {b}}_{l+1}\), and hence \({\text {ht}}({\mathfrak {b}}_{l+1})={\text {ht}}({\mathfrak {d}})>{\text {ht}}({\mathfrak {b}}_l)\).

So we have \({\text {ht}}({\mathfrak {a}})={\text {ht}}({\mathfrak {b}}_1)<\cdots <{\text {ht}}({\mathfrak {b}}_r)={\text {ht}}({\mathfrak {c}})\), so \({\text {ht}}({\mathfrak {a}})\leqslant {\text {ht}}({\mathfrak {c}})\), with equality if and only if \(r=1\), in which case \({\mathfrak {c}}={\mathfrak {b}}_1={\text {en}}({\text {tile}}({\mathfrak {a}}))\).

Now we can complete the proof. Assume there is a left-cover-expansive Dyck tiling T of \(\lambda \backslash \mu \) and a right-cover-expansive Dyck tiling U. By Claim 2, we have \({\text {ht}}({\mathfrak {a}})\leqslant {\text {ht}}({\mathfrak {c}})\), and symmetrically (since U exists) we have \({\text {ht}}({\mathfrak {a}})\geqslant {\text {ht}}({\mathfrak {c}})\). Hence \({\text {ht}}({\mathfrak {a}})={\text {ht}}({\mathfrak {c}})\), and so \({\mathfrak {a}},{\mathfrak {c}}\) lie in the same tile \(t\in T\), which consists of the lowest node in every column of \({\mathcal {C}}\). Similarly, t is a tile in U. Removing t from \(\lambda \backslash \mu \) yields a smaller skew Young diagram, and \(T\setminus \{t\}\) is a left-cover-expansive Dyck tiling of this diagram, while \(U\setminus \{t\}\) is a right-cover-expansive Dyck tiling. By induction \(T\setminus \{t\}=U\setminus \{t\}\), and hence \(T=U\). \(\square \)

Now we restrict attention to cover-expansive Dyck tilings. We shall need the following lemma, which examines the effect of the cover-expansive property on depths of nodes.

Lemma 4.4

Suppose \(\lambda \supseteq \mu \), T is a cover-expansive Dyck tiling of \(\lambda \backslash \mu \), and \({\mathfrak {a}},\mathtt {N}({\mathfrak {a}})\in \lambda \backslash \mu \). Then \({\text {dp}}(\mathtt {N}({\mathfrak {a}}))<{\text {dp}}({\mathfrak {a}})\).

Proof

Suppose the lemma is false, and take \({\mathfrak {a}}\in \lambda \backslash \mu \) as far to the left as possible such that \(\mathtt {N}({\mathfrak {a}})\in \lambda \backslash \mu \) and \({\text {dp}}(\mathtt {N}({\mathfrak {a}}))\geqslant {\text {dp}}({\mathfrak {a}})\). We have \(\mathtt {NW}({\mathfrak {a}})\in \lambda \backslash \mu \), and \(\mathtt {NW}({\mathfrak {a}})\) cannot be the end node of its tile by Proposition 4.1, so is attached to either \({\mathfrak {a}}\) or \(\mathtt {N}({\mathfrak {a}})\).

If \(\mathtt {NW}({\mathfrak {a}})\) is attached to \(\mathtt {N}({\mathfrak {a}})\), then \({\mathfrak {a}}\) must be attached to \(\mathtt {SW}({\mathfrak {a}})\) since it cannot be the start node of its tile (again by Proposition 4.1). But now \({\text {dp}}(\mathtt {NW}({\mathfrak {a}}))-{\text {dp}}(\mathtt {SW}({\mathfrak {a}}))=({\text {dp}}(\mathtt {N}({\mathfrak {a}}))+1)-({\text {dp}}({\mathfrak {a}})+1)\geqslant 0\), contradicting the choice of \({\mathfrak {a}}\).

So suppose instead that \(\mathtt {NW}({\mathfrak {a}})\) is attached to \({\mathfrak {a}}\). Then \(\mathtt {N}({\mathfrak {a}})\) cannot be the start node of its tile, since \({\text {dp}}(\mathtt {N}({\mathfrak {a}}))\geqslant {\text {dp}}({\mathfrak {a}})={\text {dp}}(\mathtt {NW}({\mathfrak {a}}))+1>0\). So \(\mathtt {N}({\mathfrak {a}})\) is attached to \(\mathtt {NW}(\mathtt {N}({\mathfrak {a}}))\). But now \({\text {dp}}(\mathtt {NW}(\mathtt {N}({\mathfrak {a}})))-{\text {dp}}(\mathtt {NW}({\mathfrak {a}}))=({\text {dp}}(\mathtt {N}({\mathfrak {a}}))-1)-({\text {dp}}({\mathfrak {a}})-1)\geqslant 0\), and again the choice of \({\mathfrak {a}}\) is contradicted. \(\square \)

We remark that, in contrast to the similar condition in Theorem 3.1(2) for cover-inclusive Dyck tilings, the condition in Lemma 4.4 does not imply the cover-expansive condition. For example, the unique Dyck tiling of \((2)\backslash \varnothing \) satisfies this condition (trivially) but is not right-cover-expansive.

The following lemma will be useful in the next section.

Lemma 4.5

Suppose \(\lambda \supseteq \mu \), and that \(\mu \) has an addable node in column j. If there exists a cover-expansive Dyck tiling of \(\lambda \backslash \mu \), then \(\lambda \) has either an addable or a removable node in column j.

Proof

Suppose not, and let T be the cover-expansive Dyck tiling of \(\lambda \backslash \mu \). There must be at least one node in column j of \(\lambda \backslash \mu \) (otherwise the addable node of \(\mu \) would also be an addable node of \(\lambda \)). Let \({\mathfrak {a}}\) be the highest node in column j, and consider the nodes attached to \({\mathfrak {a}}\) in T.

The lowest node in column j of \(\lambda \backslash \mu \), namely \({\mathfrak {m}}\), is not attached to its SW neighbour (since this is not a node of \(\lambda \backslash \mu \)). Now let \({\mathfrak {b}}\) be the highest node in column j which is not attached to its SW neighbour, and suppose \({\mathfrak {b}}\ne {\mathfrak {a}}\). Then the choice of \({\mathfrak {b}}\) means that \(\mathtt {NW}({\mathfrak {b}})\) is attached to \(\mathtt {N}({\mathfrak {b}})\), so \({\mathfrak {b}}\) is the start node of its tile; but \(\mathtt {NW}({\mathfrak {b}})\in \lambda \backslash \mu \), and this contradicts Proposition 4.1. So \({\mathfrak {b}}={\mathfrak {a}}\).

So \({\mathfrak {a}}\) is not attached to \(\mathtt {SW}({\mathfrak {a}})\), and symmetrically is not attached to \(\mathtt {SE}({\mathfrak {a}})\). If \({\mathfrak {a}}\) is attached to either \(\mathtt {NW}({\mathfrak {a}})\) or \(\mathtt {NE}({\mathfrak {a}})\), then (since T is a Dyck tiling) it is attached to both \(\mathtt {NW}({\mathfrak {a}})\) and \(\mathtt {NE}({\mathfrak {a}})\), and hence both of these nodes belong to \(\lambda \), so \(\lambda \) has an addable node in column j. The remaining possibility is that \({\mathfrak {a}}\) is a singleton tile. But now Proposition 4.1 implies that neither \(\mathtt {NW}({\mathfrak {a}})\) nor \(\mathtt {NE}({\mathfrak {a}})\) is a node of \(\lambda \backslash \mu \), so \(\lambda \) has a removable node in column j. \(\square \)

4.2 Recurrences

We now reassume the notation in Sect. 3: \(\lambda ,\mu \) are partitions with addable nodes \({\mathfrak {l}},{\mathfrak {m}}\), respectively, in column j, and \({\lambda ^+},\mu ^+\) are the partitions obtained by adding these nodes.

Proposition 4.6

Suppose \(\lambda \supseteq \mu ^+\), and let \({\mathfrak {a}}=\mathtt {S}({\mathfrak {l}})\). Then:

-

1.

there exists a cover-expansive Dyck tiling of \(\lambda \backslash \mu \) in which \({\mathfrak {a}}\) has depth 1 if and only if there exists a cover-expansive Dyck tiling of \(\lambda \backslash \mu ^+\);

-

2.

there exists a cover-expansive Dyck tiling of \(\lambda \backslash \mu \) in which \({\mathfrak {a}}\) has depth greater than 1 if and only if there exists a cover-expansive Dyck tiling of \(\lambda ^+\backslash \mu ^+\).

Proof

Suppose there is a cover-expansive Dyck tiling T of \(\lambda \backslash \mu \). Arguing as in the proof of Lemma 4.5, \({\mathfrak {a}}\) cannot be attached to either \(\mathtt {SE}({\mathfrak {a}})\) or \(\mathtt {SW}({\mathfrak {a}})\) (since \(\mu \) has an addable node in column j), and \(\{{\mathfrak {a}}\}\) cannot be a singleton tile (since \(\lambda \) has an addable node in column j). So \({\mathfrak {a}}\) is attached to both \(\mathtt {NW}({\mathfrak {a}})\) and \(\mathtt {NE}({\mathfrak {a}})\). So by the cover-expansive conditions, every node in column j is attached to its NE and NW neighbours.

-

1.

Suppose \({\mathfrak {a}}\) has depth 1 in T. We construct a cover-expansive Dyck tiling U of \(\lambda \backslash \mu ^+\) from T as illustrated in the following diagram.

Formally, to construct a cover-expansive Dyck tiling of \(\lambda \backslash \mu ^+\):

-

replace \({\text {tile}}({\mathfrak {a}})\) with the two tiles comprising \({\text {tile}}({\mathfrak {a}})\setminus \{{\mathfrak {a}}\}\);

-

for every node \({\mathfrak {b}}\ne {\mathfrak {a}}\) in column j, change \({\text {tile}}({\mathfrak {b}})\) by replacing \({\mathfrak {b}}\) with \(\mathtt {N}({\mathfrak {b}})\).

It is easy to check that U really does give a cover-expansive Dyck tiling. For the other direction, suppose T is a cover-expansive Dyck tiling of \(\lambda \backslash \mu ^+\). We claim that every node in column j must be attached to its SW and SE neighbours. If there are no nodes in column j (i.e. if \({\mathfrak {m}}=\mathtt {S}({\mathfrak {l}})\)) then this statement is trivial, so suppose otherwise; then \({\mathfrak {a}}\in \lambda \backslash \mu ^+\). \({\mathfrak {a}}\) cannot be attached to \(\mathtt {NW}({\mathfrak {a}})\) in T, since then every node in column \(j-1\) would be attached to its SE neighbour; but the SE neighbour of the bottom node in column \(j-1\) is not a node of \(\lambda \backslash \mu ^+\). Since \({\mathfrak {a}}\) is not attached to \(\mathtt {NW}({\mathfrak {a}})\), it must be attached to \(\mathtt {SW}({\mathfrak {a}})\). Similarly \({\mathfrak {a}}\) is attached to \(\mathtt {SE}({\mathfrak {a}})\), and the cover-expansive property implies that every node in column j is attached to its SE and SW neighbours as claimed. Now we construct a cover-expansive Dyck tiling of \(\lambda \backslash \mu \) as follows:

-

for each node \({\mathfrak {b}}\) in column j, change \({\text {tile}}({\mathfrak {b}})\) by replacing \({\mathfrak {b}}\) with \(\mathtt {S}({\mathfrak {b}})\);

-

replace the tiles \({\text {tile}}(\mathtt {NW}({\mathfrak {a}}))\) and \({\text {tile}}(\mathtt {NE}({\mathfrak {a}}))\) with the tile obtained by joining these two tiles to \({\mathfrak {a}}\).

Again, it is easy to see that we have a cover-expansive Dyck tiling. Moreover, \({\mathfrak {a}}\) has depth 1 in this tiling, since \(\mathtt {NW}({\mathfrak {a}})\) is the end node of its tile in T, and so has depth 0 (in both tilings).

-

-

2.

Suppose \({\mathfrak {a}}\) has depth greater than 1 in T. Construct a cover-expansive Dyck tiling U of \(\lambda ^+\backslash \mu ^+\) from T as follows: for each node \({\mathfrak {b}}\) in column j, change \({\text {tile}}({\mathfrak {b}})\) by replacing \({\mathfrak {b}}\) with \(\mathtt {N}({\mathfrak {b}})\). Again, U is a Dyck tiling, since every node in column j of \(\lambda \backslash \mu \) has depth greater than 1 in T. And the cover-expansive property for U follows from that for T. The other direction is very similar.\(\square \)

Corollary 4.7

\({\text {e}}_{\lambda \mu }={\text {e}}_{\lambda \mu ^+}+{\text {e}}_{\lambda ^+\mu ^+}\).

Proof

First suppose \(\lambda \supseteq \mu ^+\). The first paragraph of the proof of Proposition 4.6 shows that the node \({\mathfrak {a}}=\mathtt {S}({\mathfrak {l}})\) must have positive depth in a cover-expansive Dyck tiling of \(\lambda \backslash \mu \). Hence the result follows from Proposition 4.6.

Alternatively, suppose \(\lambda \nsupseteq \mu ^+\). Then we have \(\lambda \supseteq \mu \) if and only if \(\lambda ^+\supseteq \mu ^+\), and if these conditions hold then \(\lambda \backslash \mu =\lambda ^+\backslash \mu ^+\); so \({\text {e}}_{\lambda \mu }={\text {e}}_{\lambda ^+\mu ^+}\). \(\square \)

Proposition 4.8

\({\text {e}}_{\lambda ^+\mu }={\text {e}}_{\lambda \mu }\).

Proof

Suppose T is a cover-expansive Dyck tiling of \(\lambda ^+\backslash \mu \). Then \({\mathfrak {l}}\) cannot be attached to \(\mathtt {SE}({\mathfrak {l}})\), since then every node in column j would be attached to its SE neighbour; but \(\mathtt {SE}({\mathfrak {m}})\) is not a node of \(\lambda ^+\backslash \mu \). Similarly \({\mathfrak {l}}\) is not attached to \(\mathtt {SW}({\mathfrak {l}})\), so forms a singleton tile in T. Removing this tile yields a cover-expansive Dyck tiling of \(\lambda \backslash \mu \).

In the other direction, suppose T is a cover-expansive Dyck tiling of \(\lambda \backslash \mu \). From the proof of Proposition 4.6, \(\mathtt {S}({\mathfrak {l}})\) is attached to both \(\mathtt {SE}({\mathfrak {l}})\) and \(\mathtt {SW}({\mathfrak {l}})\). Hence if we add \({\mathfrak {l}}\) as a singleton tile, the resulting tiling is a cover-expansive Dyck tiling of \(\lambda ^+\backslash \mu \). \(\square \)

5 Young permutation modules for two-part compositions

In this section we apply our results on Dyck tilings to compute transition coefficients for two bases for a certain module for the symmetric group.

5.1 The Young permutation module \(\mathscr {M}^{(f,g)}\)

Suppose \(k\geqslant 0\), and let \({\mathfrak {S}}_k\) denote the symmetric group of degree k, with \(\{t_1,\ldots ,t_{k-1}\}\) the set of Coxeter generators (so \(t_i\) is the transposition  ). Let \({\mathbb {F}}\) be any field, and consider the group algebra \({\mathbb {F}}{\mathfrak {S}}_k\). Define \(s_i=t_i-1\in {\mathbb {F}}{\mathfrak {S}}_k\) for \(i=1,\ldots ,n-1\).

). Let \({\mathbb {F}}\) be any field, and consider the group algebra \({\mathbb {F}}{\mathfrak {S}}_k\). Define \(s_i=t_i-1\in {\mathbb {F}}{\mathfrak {S}}_k\) for \(i=1,\ldots ,n-1\).

Now write \(k=f+g\) with f, g non-negative integers. Consider the Young permutation module \(\mathscr {M}^{(f,g)}\) for \({\mathbb {F}}{\mathfrak {S}}_k\) indexed by the composition (f, g). This is just the permutation module on the set of cosets of the maximal Young subgroup \({\mathfrak {S}}_f\times {\mathfrak {S}}_g\), and has the following presentation:

Lemma 5.1

For \(1\leqslant i\leqslant k-2\), the element \(s_is_{i+1}s_i-s_i=t_is_{i+1}s_i-s_{i+1}s_i-s_i\) annihilates \(\mathscr {M}^{(f,g)}\).

Proof

In terms of permutations, the given element is

\(\mathscr {M}^{(f,g)}\) may be viewed as the permutation module on the set of f-subsets of the set \(\{1,\ldots ,k\}\); it is easily seen that the given element kills any such subset. \(\square \)

\(\mathscr {M}^{(f,g)}\) has a basis indexed by the set of minimal left coset representatives of \({\mathfrak {S}}_f\times {\mathfrak {S}}_g\) in \({\mathfrak {S}}_k\); this set is in one-to-one correspondence with the set \({\mathscr {P}}_{f,g}\) of partitions \(\lambda \) for which \(\lambda _1\leqslant f\) and \(\lambda _1'\leqslant g\); given \(\lambda \in {\mathscr {P}}_{f,g}\), we write the corresponding basis element as \(t_\lambda m\), where

Our objective here is to study the elements

for \(\lambda \in {\mathscr {P}}_{f,g}\). It is easy to see that \(\left\{ \smash {s_\lambda m}\ \left| \ \lambda \in {\mathscr {P}}_{f,g}\right. \right\} \) is also a basis for \(\mathscr {M}^{(f,g)}\), since when each \(s_\lambda m\) is expressed as a linear combination of the elements \(t_\mu m\), the matrix of coefficients is unitriangular with respect to a suitable ordering.

We shall use cover-expansive Dyck tilings to describe this transition matrix explicitly, and then describe its inverse using cover-inclusive Dyck tilings. We also give a simple expression for the sum \(\sum _{\lambda \in {\mathscr {P}}_{f,g}}t_\lambda m\) as a linear combination of the elements \(s_\lambda m\), which will be useful in Sect. 6.

5.2 Change of basis

Our first result on transition coefficients is the following.

Theorem 5.2

Suppose f, g are non-negative integers, and \(\mu \in {\mathscr {P}}_{f,g}\). Then

We begin with some simple observations concerning the actions of the generators \(t_1,\ldots ,t_{k-1}\) on the basis elements \(t_\lambda m\). We continue to use the Russian convention for Young diagrams.

Lemma 5.3

Suppose \(\lambda \in {\mathscr {P}}_{f,g}\) and \(1\leqslant i<k\). Then:

-

if \(\lambda \) has an addable node \({\mathfrak {l}}\) in column \(i-g\), then \(t_it_\lambda m=t_{\lambda ^+}m\), where \(\lambda ^+\) is the partition obtained by adding \({\mathfrak {l}}\) to \(\lambda \);

-

if \(\lambda \) has a removable node \({\mathfrak {l}}\) in column \(i-g\), then \(t_it_\lambda m=t_{\lambda ^-}m\), where \(\lambda ^-\) is the partition obtained by removing \({\mathfrak {l}}\) from \(\lambda \);

-

if \(\lambda \) has neither an addable nor a removable node in column \(i-g\), then \(t_it_\lambda m=t_\lambda m\).

Proof

This is an easy consequence of the definitions and the Coxeter relations. \(\square \)

Proof of Theorem 5.2

We proceed by downwards induction on \(|\mu |\). If \(\mu =(f^g)\), then \(s_\mu =t_\mu =1\) and the result follows. Assuming \(\mu \ne (f^g)\), \(\mu \) has an addable node in column j, for some \(-g<j<f\). We let \({\mu ^+}\) denote the partition obtained by adding this addable node; then we have \(s_\mu =s_{j+g}s_{\mu ^+}\), and by induction

Let \({\mathscr {P}}_{f,g}^+\) denote the set of \(\lambda \in {\mathscr {P}}_{f,g}\) having an addable node in column j, and for \(\lambda \in {\mathscr {P}}_{f,g}^+\) let \(\lambda ^+\) denote the partition obtained by adding this addable node; similarly, let \({\mathscr {P}}_{f,g}^-\) denote the set of partitions in \({\mathscr {P}}_{f,g}\) having a removable node in column j, and for each \(\lambda \in {\mathscr {P}}_{f,g}^-\) let \(\lambda ^-\) denote the partition obtained by removing this removable node. Note that the functions \(\lambda \mapsto \lambda ^+\) and \(\lambda \mapsto \lambda ^-\) define mutually inverse bijections between \({\mathscr {P}}_{f,g}^+\) and \({\mathscr {P}}_{f,g}^-\). Now

So Theorem 5.2 follows by induction. \(\square \)

Now we give our second main result on transition coefficients.

Theorem 5.4

Suppose f, g are non-negative integers, and \(\mu \in {\mathscr {P}}_{f,g}\). Then

The proof of this result is rather more difficult. To begin with, we compute the actions of \(s_1,\ldots ,s_{k-1}\) on the basis elements \(s_\lambda m\).

Proposition 5.5

Suppose \(\mu \in {\mathscr {P}}_{f,g}\) and \(1\leqslant i<k\). Then exactly one of the following occurs.

-

1.

\(\mu \) has an addable node in column \(i-g\). In this case \(s_is_\mu m=-2s_\mu m\).

-

2.

\(\mu \) has a removable node in column \(i-g\). In this case \(s_is_\mu m=s_{\mu ^-}m\), where \(\mu ^-\) denotes the partition obtained by removing this node.

-

3.

For some \(0\leqslant a\leqslant g\) we have \(\mu _a>i-g+a>\mu _{a+1}\) (where the left-hand inequality is regarded as automatically true in the case \(a=0\)).

-

(a)

If \(\mu _w<i-g+2a-w\) for all \(a<w\leqslant g\), then \(s_is_\mu m=0\).

-

(b)

Otherwise, let \(w>a\) be minimal such that \(\mu _w=i-g+2a-w\), and set

$$\begin{aligned} \mu ^{a,w}=(\mu _1,\ldots ,\mu _a,i-g+a,\mu _{a+1}+1,\ldots ,\mu _{w-1}+1,\mu _{w+1},\ldots ,\mu _g). \end{aligned}$$Then \(s_is_\mu m=s_{\mu ^{a,w}}m\).

-

(a)

-

4.

For some \(1\leqslant a<g\) we have \(\mu _a=\mu _{a+1}=i-g+a\).

-

(a)

If \(i+2a>k\) and \(\mu _w<i-g+2a-w\) for \(w=1,\ldots ,a-1\), then \(s_is_\mu m=0\).

-

(b)

Otherwise, let \(w<a\) be maximal such that \(\mu _w\geqslant i-g+2a-w\) (taking \(w=0\) if there is no such w), and define

$$\begin{aligned}&\mu ^{w,a}=(\mu _1,\ldots ,\mu _w,i-g+2a-w,\mu _{w+1}\\&\quad +1,\ldots ,\mu _{a-1}+1,\mu _{a+1},\ldots ,\mu _g). \end{aligned}$$Then \(s_is_\mu m=s_{\mu ^{w,a}}m\).

-

(a)

Examples.

-

1.

Suppose \(f=4\), \(g=5\) and \(\mu =(4,2^4)\). Then