Abstract

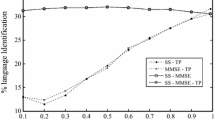

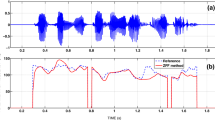

This paper explores pitch synchronous and glottal closure (GC) based spectral features for analyzing the language-specific information present in speech. Instants of significant excitation (ISE) are used to determine the pitch cycles (for pitch synchronous analysis) and the GC regions. The ISE correspond to the instants of glottal closure (epochs) in voiced speech, and to random excitations such as the onset of a burst in nonvoiced speech. The language-specific information in the proposed features is analyzed using the Indian language speech database (IITKGP-MLILSC), with Gaussian mixture models used to capture that information. The proposed pitch synchronous and GC based spectral features are evaluated through language recognition studies. The results indicate that language recognition performance is better with pitch synchronous and GC based spectral features than with conventional spectral features derived through block processing. GC based spectral features are also found to be more robust to degradation caused by background noise. The performance of the proposed features is additionally analyzed on the standard Oregon Graduate Institute Multi-Language Telephone-based Speech (OGI-MLTS) database.
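The difference between block processing and the two framing schemes named in the abstract can be illustrated with a minimal sketch. It assumes the epoch locations are already available as sample indices (epoch extraction itself is not shown), and it treats the GC region as a fixed fraction of each pitch cycle starting at the epoch. The function names and the `gc_fraction` parameter, including its default of 0.3, are hypothetical choices for illustration and are not taken from the paper.

```python
from typing import List, Sequence


def pitch_synchronous_frames(
    signal: Sequence[float], epochs: Sequence[int]
) -> List[List[float]]:
    """Cut one frame per pitch cycle, from each epoch up to the next epoch.

    Unlike block processing, the frame length follows the local pitch period
    instead of being a fixed number of samples.
    """
    frames = []
    for start, end in zip(epochs[:-1], epochs[1:]):
        # Skip malformed epoch pairs (out of range or not increasing).
        if 0 <= start < end <= len(signal):
            frames.append(list(signal[start:end]))
    return frames


def glottal_closure_frames(
    signal: Sequence[float],
    epochs: Sequence[int],
    gc_fraction: float = 0.3,  # assumed value, not from the paper
) -> List[List[float]]:
    """Keep only the initial part of each pitch cycle, right after the epoch."""
    frames = []
    for cycle in pitch_synchronous_frames(signal, epochs):
        n = max(1, int(gc_fraction * len(cycle)))  # at least one sample
        frames.append(cycle[:n])
    return frames


# Toy usage: a ramp signal with hand-picked (hypothetical) epoch locations.
toy_signal = list(range(20))
toy_epochs = [2, 8, 16]
ps_frames = pitch_synchronous_frames(toy_signal, toy_epochs)
gc_frames = glottal_closure_frames(toy_signal, toy_epochs, gc_fraction=0.5)
```

In a full system, spectral features would then be computed from each frame and modeled per language, for example with Gaussian mixture models as the abstract describes; that stage is outside this sketch.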

Cite this article

Rao, K.S., Maity, S. & Reddy, V.R. Pitch synchronous and glottal closure based speech analysis for language recognition. Int J Speech Technol 16, 413–430 (2013). https://doi.org/10.1007/s10772-013-9193-5

DOI: https://doi.org/10.1007/s10772-013-9193-5