Abstract

Visual smoke recognition remains a challenging task for two reasons: (1) complex environments cause large variations in smoke color, texture, brightness and shape; (2) smoke data are difficult to collect, so existing smoke datasets are insufficient. The Transformer has attracted increasing interest in computer vision, but it still falls behind state-of-the-art convolutional neural networks (CNNs) when trained on limited datasets. To improve the visual feature representation of smoke images and to address the scarcity of smoke datasets from real scenes, this paper proposes a convolution-enhanced vision Transformer network (CViTNet) for smoke recognition, which introduces desirable properties of CNNs into the vision Transformer. Instead of the direct tokenization used in the vision Transformer, we first revisit the merits of CNNs and design a convolutional token embedding that applies an overlapping strided convolution to the token feature maps, reducing the feature resolution while expanding the channel capacity. We then use the convolutional token embedding to partition the vision Transformer into multiple stages, forming a hierarchical structure that enhances feature representation and reduces computational complexity. CViTNet thus combines the advantages of both CNNs and Transformers. Finally, extensive experiments show that CViTNet establishes a new state-of-the-art detection accuracy, exceeding 99.54% on average, with 4.49 M learnable parameters and 346 M FLOPs.
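The convolutional token embedding and the multi-stage layout described above can be illustrated with a minimal, dependency-free Python sketch. It assumes zero padding, nested lists in place of tensors, and row-major flattening of the output grid into a token sequence. The function names (`conv_out_size`, `conv_token_embedding`, `stage_shapes`) and the three-stage kernel/stride/channel configuration are hypothetical and are not the CViTNet settings, which the abstract does not give. Because each kernel is larger than its stride, neighbouring windows overlap, and every stage shrinks the token grid while widening the channel dimension.

```python
from typing import List, Tuple

# Nested-list stand-in for a feature map: [channels][height][width].
Tensor3 = List[List[List[float]]]


def conv_out_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length along one spatial axis of a 2D convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def conv_token_embedding(x: Tensor3, weight, bias, stride: int,
                         padding: int) -> List[List[float]]:
    """Overlapping strided convolution, then flatten the H'xW' grid to tokens.

    x: [C_in][H][W]; weight: [C_out][C_in][k][k]; bias: [C_out].
    Returns tokens of shape [H' * W'][C_out] in row-major order.
    """
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    k = len(weight[0][0])
    h_out = conv_out_size(h, k, stride, padding)
    w_out = conv_out_size(w, k, stride, padding)
    tokens = []
    for i in range(h_out):
        for j in range(w_out):
            token = []
            for co in range(len(weight)):
                acc = bias[co]
                for ci in range(c_in):
                    for di in range(k):
                        for dj in range(k):
                            r = i * stride + di - padding
                            c = j * stride + dj - padding
                            # Positions outside the map act as zero padding.
                            if 0 <= r < h and 0 <= c < w:
                                acc += weight[co][ci][di][dj] * x[ci][r][c]
                token.append(acc)
            tokens.append(token)
    return tokens


def stage_shapes(h: int, w: int,
                 stages: List[Tuple[int, int, int, int]]):
    """Track (H, W, channels, token count) after each embedding stage."""
    shapes = []
    for kernel, stride, padding, channels in stages:
        h = conv_out_size(h, kernel, stride, padding)
        w = conv_out_size(w, kernel, stride, padding)
        shapes.append((h, w, channels, h * w))
    return shapes


# Hypothetical (kernel, stride, padding, out_channels) per stage.
HYPOTHETICAL_STAGES = [(7, 4, 2, 64), (3, 2, 1, 192), (3, 2, 1, 384)]

if __name__ == "__main__":
    for stage, shape in enumerate(stage_shapes(224, 224, HYPOTHETICAL_STAGES), 1):
        print(f"stage {stage}: H, W, C, tokens = {shape}")
```

With this hypothetical configuration, a 224 × 224 input yields 56 × 56 = 3136, 28 × 28 = 784 and 14 × 14 = 196 tokens in the three stages, so the N × N self-attention matrix shrinks by a factor of 16 between consecutive stages while the channel width grows.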


References

Yuan F, Shi J, Xia X, Yang Y, Wang R (2016) Sub oriented histograms of local binary patterns for smoke detection and texture classification. KSII Trans Internet Inf Syst 10(4):1807–1823

Yuan F, Shi J, Xia X, Yang Y, Fang Y, Fang Z, Mei T (2016) High-order local ternary patterns with locality preserving projection for smoke detection and image classification. Inf Sci 372:225–240

Dubey SR, Singh SK, Singh RK (2016) Multichannel decoded local binary patterns for content-based image retrieval. IEEE Trans Image Process 25(9):4018–4032

Gubbi J, Marusic S, Palaniswami M (2009) Smoke detection in video using wavelets and support vector machines. Fire Saf J 44(8):1110–1115

Ferrari RJ, Zhang H, Kube CR (2007) Real-time detection of steam in video images. Pattern Recogn 40(3):1148–1159

Krizhevsky A, Sutskever I, Hinton GE (2017) Imagenet classification with deep convolutional neural networks. Commun ACM 60(6):84–90

Szeged C, Liu W, Ji Y, Sermanet P, Reed S (2015) Going deeper with convolutions. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp 1–9

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp 770–778

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. Comput Sci

Huang G, Liu Z, Laurens V, Weinberger KQ (2017) Densely connected convolutional networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp 1–8

Yin Z, Wang B, Yuan F, Xia X, Shi J (2017) A deep normalization and convolutional neural network for image smoke detection. IEEE Access 5:18429–18438

Yuan F, Zhang L, Wan B, Xia X, Shi J (2019) Convolutional neural networks based on multi-scale additive merging layers for visual smoke recognition. Mach Vis Appl 30(2):345–358

Mao W, Wang W, Dou Z, Li Y (2018) Correction to: fire recognition based on multi-channel convolutional neural network. Fire Technol 54(2):1–24

Liu Y, Qin W, Liu K, Fang Z, Xiao Z (2019) A dual convolution network using dark channel prior for image smoke classification. IEEE Access 7:60697–60706

Pundir AS, Raman B (2019) Dual deep learning model for image based smoke detection. Fire Technol 55(6):2419–2442

Zhang F, Qin W, Liu Y, Xiao Z, Liu K (2020) A dual-channel convolution neural network for image smoke detection. Multimed Tools Appl 79(8):34587–34603

Gu K, Xia Z, Qiao J, Lin W (2020) Deep dual-channel neural network for image-based smoke detection. IEEE Trans Multimed 22(2):311–323

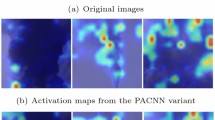

Cheng G, Chen X, Gong J (2022) Deep convolutional network with pixel-aware attention for smoke recognition. Fire Technol 1–24

Wu Z, Xue R, Li H (2022) Real-time video fire detection via modified yolov5 network model. Fire Technol. https://doi.org/10.1007/s10694-022-01260-z

Ashish V, Noam S, Niki P, Jakob U, Llion J, Aidan NG, Lukasz K (2016) Attention is all you need. In: Proceedings of the Intererational Conference on Neural Information Processing Systems (NIPS), pp 6000–6010

Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, Dehghani M, Minderer M, Heigold G, Gelly S et al (2020) An image is worth 16x16 words: transformers for image recognition at scale. arXiv preprint 2010.11929

Sun C, Shrivastava A, Singh S, Gupta A (2017) Revisiting unreasonable effectiveness of data in deep learning era. In: Proceedings of the IEEE International Conference on Computer Vision, pp 843–852

Lecun Y, Bottou L (1998) Gradient-based learning applied to document recognition. Proc IEEE 86(11):2278–2324

Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, Huang Z, Karpathy A, Khosla A, Bernstein M et al (2015) Imagenet large scale visual recognition challenge. Int J Comput Vis 115(3):211–252

Khan A, Sohail A, Zahoora U, Qureshi AS (2020) A survey of the recent architectures of deep convolutional neural networks. Artif Intell Rev 53(8):5455–5516

Wang X, Girshick R, Gupta A, He K (2018) Non-local neural networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 7794–7803

Jie H, Li S, Albanie S, Gang S, Enhua W (2020) Squeeze-and-excitation networks. IEEE Trans Pattern Anal Mach Intell 42(8):2011–2023

Woo S, Park J, Lee J-Y, Kweon IS (2018) Cbam: Convolutional block attention module. In: Proceedings of the European Conference on Computer Vision (ECCV), pp 3–19

Srinivas A, Lin T-Y, Parmar N, Shlens J, Abbeel P, Vaswani A (2021) Bottleneck transformers for visual recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 16519–16529

Bello I (2021) Lambdanetworks: modeling long-range interactions without attention. arXiv preprint 2102.08602

Lin T, Wang Y, Liu X, Qiu X (2021) A survey of transformers. arXiv preprint arXiv:2106.04554

Khan S, Naseer M, Hayat M, Zamir SW, Khan FS, Shah M (2021) Transformers in vision: a survey. ACM Comput Surv 24:200

Han K, Wang Y, Chen H, Chen X, Guo J, Liu Z, Tang Y, Xiao A, Xu C, Xu Y et al (2020) A survey on visual transformer. arXiv e-prints, 2012

Touvron H, Cord M, Matthijs D, Massa F, Sablayrolles A, Jegou H (2021) Training data-efficient image transformers & distillation through attention. In: Proceedings of the International Conference on Learning Representations (ICLR), pp 10347–10357

Liu Z, Lin Y, Cao Y, Hu H, Wei Y, Zhang Z, Lin S, Guo B (2021) Swin transformer: Hierarchical vision transformer using shifted windows. In: Proceedings of the IEEE Intererational Conference on Computer Vision (ICCV), pp 10012–10022

Chen C-F, Panda R, Fan Q (2021) Regionvit: Regional-to-local attention for vision transformers. arXiv preprint arXiv:2106.02689

Zhou D, Kang B, Jin X, Yang L, Lian X, Jiang Z, Hou Q, Feng J (2021) Deepvit: Towards deeper vision transformer. arXiv preprint arXiv:2103.11886

Ali A, Touvron H, Caron M, Bojanowski P, Douze M, Joulin A, Laptev I, Neverova N, Synnaeve G, Verbeek J et al (2021) Xcit: cross-covariance image transformers. In: Advances in Neural Information Processing Systems, vol 34

Wu H, Xiao B, Codella N, Liu M, Dai X, Yuan L, Zhang L (2021) Cvt: introducing convolutions to vision transformers. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 22–31

Xu W, Xu Y, Chang T, Tu Z (2021) Co-scale conv-attentional image transformers. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 9981–9990

Graham B, El-Nouby A, Touvron H, Stock P, Joulin A, Jégou H, Douze M (2021) Levit: a vision transformer in convnet’s clothing for faster inference. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 12259–12269

Wang W, Xie E, Li X, Fan D-P, Song K, Liang D, Lu T, Luo P, Shao L (2021) Pyramid vision transformer: a versatile backbone for dense prediction without convolutions. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 568–578

Han K, Xiao A, Wu E, Guo J, Xu C, Wang Y (2021) Transformer in transformer. Adv Neural Inf Process Syst 34:15908–15919

Yuan K, Guo S, Liu Z, Zhou A, Yu F, Wu W (2021) Incorporating convolution designs into visual transformers. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 579–588

Wang W, Xie E, Li X, Fan D-P, Song K, Liang D, Lu T, Luo P, Shao L (2022) Pvt 2: improved baselines with pyramid vision transformer. Comput Visual Media 8(3):415–424

Heo B, Yun S, Han D, Chun S, Choe J, Oh SJ (2021) Rethinking spatial dimensions of vision transformers. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 11936–11945

Chu X, Tian Z, Zhang B, Wang X, Wei X, Xia H, Shen C (2021) Conditional positional encodings for vision transformers. arXiv preprint arXiv:2102.10882

Li Y, Zhang K, Cao J, Timofte R, Van Gool L (2021) Localvit: bringing locality to vision transformers. arXiv preprint 2104.05707

Su X, You S, Xie J, Zheng M, Wang F, Qian C, Zhang C, Wang X, Xu C (2021) Vision transformer architecture search. arXiv e-prints, 2106

Chen M, Peng H, Fu J, Ling H (2021) Autoformer: searching transformers for visual recognition. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 12270–12280

Chen B, Li P, Li C, Li B, Bai L, Lin C, Sun M, Yan J, Ouyang W (2021) Glit: Neural architecture search for global and local image transformer. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 12–21

Ba JL, Kiros JR, Hinton GE (2016) Layer normalization. arXiv preprint arXiv:1607.06450

Hendrycks D, Gimpel K (2016) Bridging nonlinearities and stochastic regularizers with gaussian error linear units

Zhang H, Cisse M, Dauphin YN, Lopez-Paz D (2017) mixup: Beyond empirical risk minimization. arXiv preprint arXiv:1710.09412

Yun S, Han D, Oh SJ, Chun S, Choe J, Yoo Y (2019) Cutmix: Regularization strategy to train strong classifiers with localizable features. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 6023–6032

Zhong Z, Zheng L, Kang G, Li S, Yang Y (2020) Random erasing data augmentation. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol 34, pp 13001–13008

Cubuk ED, Zoph B, Shlens J, Le QV (2020) Randaugment: Practical automated data augmentation with a reduced search space. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops

Yuan L, Tay F, Li G, Wang T, Feng J (2019) Revisit knowledge distillation: a teacher-free framework. arxiv 2019. arXiv preprint 1909.11723

Huang G, Sun Y, Liu Z, Sedra D, Weinberger KQ (2016) Deep networks with stochastic depth. In: European Conference on Computer Vision, pp 646–661. Springer, New York

Loshchilov I, Hutter F (2018) Fixing weight decay regularization in adam

Aditya C, Anirban S, Abhishek D, Prantik H (2018) Grad-cam++: improved visual explanations for deep convolutional networks. arxiv 2018. arXiv preprint 1710.11063


Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Cheng, G., Zhou, Y., Gao, S. et al. Convolution-Enhanced Vision Transformer Network for Smoke Recognition. Fire Technol 59, 925–948 (2023). https://doi.org/10.1007/s10694-023-01378-8


DOI: https://doi.org/10.1007/s10694-023-01378-8