Abstract

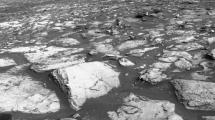

The Extreme Universe Space Observatory on the Japanese Experiment Module (JEM-EUSO) on board the International Space Station (ISS) is the first space-based mission worldwide in the field of Ultra High-Energy Cosmic Rays (UHECR). For UHECR experiments, the atmosphere is not only the calorimeter in which the primary cosmic rays shower but also an essential part of the readout system. Moreover, the atmosphere must be calibrated, and its state must be taken into account as an input to the analysis of the fluorescence signals. For this reason, the JEM-EUSO Space Observatory is implementing an Atmospheric Monitoring System (AMS) that will include an IR-Camera and a LIDAR. The AMS Infrared Camera is a wide field-of-view (FoV) infrared imaging system designed to provide the cloud coverage along the JEM-EUSO track and the cloud top height, both of which are needed to reconstruct UHECR events properly in cloudy conditions. This paper presents the updated status of the preliminary design, the results of the calibration tests of the first prototype, the simulation of the instrument, and preliminary cloud top height retrieval algorithms.
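The abstract does not spell out the retrieval algorithms. As a rough illustration of how calibrated infrared brightness temperatures can be turned into the two quantities the camera is meant to deliver, the following sketch uses a textbook simplification that is not necessarily the method of this paper. It assumes optically thick clouds whose brightness temperature equals the cloud top temperature, a surface temperature known from ancillary data, and a constant tropospheric lapse rate of 6.5 K/km (the standard-atmosphere value). The function names, the 5 K cloud-detection margin, and the 11 km cap are hypothetical choices made for illustration.

```python
"""Illustrative cloud-top-height and cloud-fraction estimates from IR brightness temperatures.

Hypothetical sketch: assumes optically thick (blackbody) clouds and a
constant tropospheric lapse rate; this is not the JEM-EUSO retrieval algorithm.
"""

LAPSE_RATE_K_PER_KM = 6.5   # standard-atmosphere tropospheric lapse rate
TROPOPAUSE_KM = 11.0        # assumed cap: standard-atmosphere tropopause height


def cloud_top_height_km(t_cloud_k: float, t_surface_k: float,
                        lapse_k_per_km: float = LAPSE_RATE_K_PER_KM) -> float:
    """Height (km) at which a linear temperature profile falls to t_cloud_k.

    T(z) = T_surface - lapse * z  =>  z = (T_surface - T_cloud) / lapse,
    clamped to the range [0, TROPOPAUSE_KM].
    """
    height = (t_surface_k - t_cloud_k) / lapse_k_per_km
    return min(max(height, 0.0), TROPOPAUSE_KM)


def cloud_fraction(brightness_temps_k: list[float], t_surface_k: float,
                   margin_k: float = 5.0) -> float:
    """Fraction of pixels colder than the surface by more than margin_k.

    A simple threshold test (hypothetical): such pixels are flagged as cloudy.
    """
    if not brightness_temps_k:
        raise ValueError("no pixels supplied")
    threshold = t_surface_k - margin_k
    cloudy = sum(1 for t in brightness_temps_k if t < threshold)
    return cloudy / len(brightness_temps_k)


if __name__ == "__main__":
    # A 255.65 K cloud over a 288.15 K surface sits about 5 km up.
    print(round(cloud_top_height_km(255.65, 288.15), 2))          # 5.0
    # Three of the four pixels are more than 5 K colder than the surface.
    print(cloud_fraction([288.0, 280.0, 250.0, 240.0], 288.15))  # 0.75
```

A constant lapse rate ignores temperature inversions, semi-transparent clouds such as thin cirrus, and atmospheric absorption above the cloud, which is why more elaborate retrieval techniques are used in practice.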

Acknowledgements

This work is supported by the Spanish Government (MICINN and MINECO) under the Space Program, projects AYA2009-06037-E/AYA, AYA-ESP 2010-19082, AYA-ESP 2011-29489-C03, AYA-ESP 2012-39115-C03 and CSD2009-00064 (Consolider MULTIDARK), and by the Comunidad de Madrid under project S2009/ESP-1496. M. D. Rodríguez Frías gratefully acknowledges the Instituto de Astrofísica de Canarias (IAC) for the grant under the “Excellence Severo Ochoa Program” to perform a mid-term visit at this institute (Tenerife, Canary Islands).

Author information

The JEM-EUSO Collaboration

The full author list and affiliations are given at the end of the paper.

About this article

Cite this article

The JEM-EUSO Collaboration, Adams, J.H., Ahmad, S., et al.: The infrared camera onboard JEM-EUSO. Exp Astron 40, 61–89 (2015). https://doi.org/10.1007/s10686-014-9402-5

DOI: https://doi.org/10.1007/s10686-014-9402-5