Abstract

This paper experimentally investigates the effect of introducing unavailable alternatives and irrelevant information regarding the alternatives on the optimality of decisions in choice problems. We find that the presence of unavailable alternatives and irrelevant information generates suboptimal decisions, with the interaction between the two amplifying this effect. Irrelevant information in any dimension increases the time costs of decisions. We also identify a “preference for simplicity” beyond the desire to make optimal decisions or minimize time spent on a decision problem.

Notes

Note that in all of these examples, the firm/regulatory agency in question may have separate incentives for providing irrelevant information. These can be statutory (as in cases of regulated information provision), strategic (e.g. a firm may provide distracting irrelevant information to hide negative attributes), or because of dynamic considerations (e.g. an item may not be available currently, but the firm wants to signal the possibility that it is available in the future). We do not directly consider the firm’s incentives in the current work, instead focusing on the pure effect of irrelevant information on choice.

An attribute that does not vary across available options may be utility relevant, but it is certainly not decision relevant information in that it does not meaningfully distinguish one good from another.

Oprea (2019) also looks at the “complexity” of decision rules, though in a different context than what we consider herein.

Several other models can be considered to have a “pruning” stage, though this element of the model is less explicit relative to the attention-based models mentioned here. For example, Bordalo et al. (2012, 2013, 2016) can be considered to treat irrelevant attributes as “pruned” in that they are de facto treated with zero salience and, hence, ignored. Additionally, Kahneman and Tversky (1979) include an “editing” stage wherein lotteries are re-expressed by compressing payoff-equivalent states, so that lottery framing information of this form is “pruned.” The latter model is less explicitly connected to our experiment, but is mentioned for completeness.

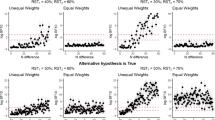

Our design of varying irrelevant information in two dimensions will later be shown to create symmetric difficulty for subjects. Even though one may think that the perceptual operations required to solve a task are very different in these two dimensions (keeping track of payoffs horizontally and vertically), the impact of these two dimensions on optimality of choice turns out to be similar.

Note that our design differs slightly from Gabaix et al. (2006) and Caplin et al. (2011). In each of those experiments, the coefficient applied to an attribute appeared directly next to the attribute value. To port that design directly to address our research question, we would then have to display zeros for irrelevant attributes as cells in the matrix. In our view, this limits the applicability to real-world scenarios in which we think that information may be irrelevant, even subjectively. We are interested in an environment where irrelevant information is displayed, but not valued. Furthermore, by including coefficients in the column header only, we treat irrelevant attributes and unavailable options symmetrically, a necessary design component in order to interpret our findings with sufficient generalizability.
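As a minimal sketch of the display structure described in this note, assume that each option's value is the coefficient-weighted sum of its attribute values, with one coefficient per column. Purely for the computation, an irrelevant attribute is modeled here with a weight of zero and an unavailable option with a boolean flag; the function names and all numbers are hypothetical and are not taken from the experiment.

```python
from typing import List, Optional, Sequence


def option_values(weights: Sequence[float],
                  rows: Sequence[Sequence[float]]) -> List[float]:
    """Value of each option (row) as the weighted sum of its attributes."""
    return [sum(w * x for w, x in zip(weights, row)) for row in rows]


def best_available(weights: Sequence[float],
                   rows: Sequence[Sequence[float]],
                   available: Sequence[bool]) -> Optional[int]:
    """Index of the highest-valued option among those that can be chosen."""
    values = option_values(weights, rows)
    candidates = [i for i, ok in enumerate(available) if ok]
    if not candidates:
        return None
    return max(candidates, key=lambda i: values[i])


# Hypothetical 3-option, 3-attribute problem.
weights = [2, -1, 0]              # third attribute is shown but not valued (assumption)
rows = [[3, 1, 9],
        [4, 2, 0],
        [5, 0, 7]]
available = [True, True, False]   # third option is shown but cannot be chosen

values = option_values(weights, rows)
best = best_available(weights, rows, available)
```

In this toy problem the unavailable third option has the highest value, so a decision maker who fails to disregard it would be drawn toward an option that cannot be selected.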

OPM plan comparison tool: https://www.opm.gov/healthcare-insurance/healthcare/plan-information/compare-plans/.

Subjects earned a payoff of $0 if they didn’t make a choice within 75 s.

We also conducted some control experiments for \(i,j \in \{5,8\}\) where we added three (rather than ten) unavailable options or irrelevant attributes to decision problems. Results for those experiments are in Sect. 4 and Electronic supplementary material Appendices D.1 and D.2.

The complete set of decision problems is available upon request.

Two additional sessions were conducted for robustness wherein we asked for WTP for \(O_{15}A_{15} \rightarrow O_{5}A_{5}\). These results are explained in Sect. 4 and included in Electronic supplementary material Appendix D.3.

An interested reader may wonder whether our central results are dependent on the specific time limit chosen in our design. First, note that in Table 19, the mean time taken to choose correctly is substantially less than the time limit of 75 s for each type of decision problem. We take this as evidence that our time limit was not meaningfully binding for a very large portion of our subject pool. Additionally, we conducted four pilot sessions under various design schemes, all without any time limit. Results, including mistake rates and time spent per problem, are qualitatively similar and are available upon request.

There were four subjects who experienced timeouts in more than 20% of their decision problems. They are included in the sample upon which all analysis is conducted, but results are not qualitatively different if they are excluded.

Additional results using alternative parameters, discussed in Sect. 4 and Electronic supplementary material Appendix D, show that this finding is not driven solely by the increase in the amount of irrelevant information displayed; it is instead caused by the introduction of both unavailable options and irrelevant attributes.

Our data generation process gave equal weight to the possibility of having a positive or negative relevant attribute. However, we only used generated decision problems that (i) had a unique optimal available option and (ii) had all positive-valued available options. Thus, the range of the number of positive available options in the generated dataset is more restrictive than that which would be generated without these constraints.
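The filtering step in this note amounts to rejection sampling: draw a candidate problem, then keep it only if it passes both conditions. The sketch below illustrates this under assumed parameters. The ±1 coefficients, the cell range of 1 to 9, the 5 × 5 problem size, and the function names are all hypothetical, and unavailable options and irrelevant attributes are left out; only the two acceptance conditions come from the text.

```python
import random
from typing import List, Tuple

Problem = Tuple[List[int], List[List[int]], List[int]]


def draw_problem(rng: random.Random, n_options: int, n_attributes: int) -> Problem:
    """Draw one candidate problem; each attribute is positive or negative with equal chance."""
    signs = [rng.choice([1, -1]) for _ in range(n_attributes)]
    cells = [[rng.randint(1, 9) for _ in range(n_attributes)]
             for _ in range(n_options)]
    values = [sum(s * x for s, x in zip(signs, row)) for row in cells]
    return signs, cells, values


def is_admissible(values: List[int]) -> bool:
    """Keep a problem only if (i) the optimum is unique and (ii) every option value is positive."""
    top = max(values)
    return values.count(top) == 1 and min(values) > 0


def generate(rng: random.Random, n_options: int = 5, n_attributes: int = 5,
             max_tries: int = 100_000) -> Problem:
    """Redraw until an admissible problem appears (rejection sampling)."""
    for _ in range(max_tries):
        problem = draw_problem(rng, n_options, n_attributes)
        if is_admissible(problem[2]):
            return problem
    raise RuntimeError("no admissible problem found within max_tries")


signs, cells, values = generate(random.Random(0))
```

Because draws with a non-positive option value are discarded, accepted problems over-represent sign patterns with many positive attributes, which is the restriction on the generated dataset that the note describes.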

We further explore alternative complexity measures for relevant subsamples of this dataset in Electronic supplementary material Appendix B.4.

Our results are robust to (i) dropping all observations with such a “tie” and (ii) rounding up such “ties”; these results are available upon request.

See Table 27 and Fig. 3 in Electronic supplementary material Appendix C for learning trends across decision problem types.

To investigate the sensitivity of our results to this choice, we conduct further regressions using lower time thresholds. These can be found in Tables 20 and 21 of Electronic supplementary material Appendix B.2.

Across all model specifications, we find some evidence of learning and subject-level heterogeneity, including gender, native language, and cognitive ability effects. However, our experiment was not explicitly designed to test for the effects of these demographic variables. As such, these results are included as statistical controls.

For these regressions, answers submitted at \(time = 75\) s are coded as mistakes to avoid collinearity of regressors.
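As a hedged illustration of this coding rule, the sketch below marks an observation as a mistake when the chosen option is not the optimal one, or when the response time reaches the 75 s limit. The record layout, field names, and sample data are assumptions made for the example and do not reflect the authors' data format.

```python
from dataclasses import dataclass
from typing import List, Optional

TIME_LIMIT_S = 75.0  # time limit stated in the notes


@dataclass
class Observation:
    chosen: Optional[int]  # index of the chosen option, None if no choice was made
    optimal: int           # index of the optimal available option
    seconds: float         # time at which the answer was submitted


def is_mistake(obs: Observation) -> bool:
    """Code timeouts as mistakes, in addition to suboptimal choices."""
    timed_out = obs.chosen is None or obs.seconds >= TIME_LIMIT_S
    return timed_out or obs.chosen != obs.optimal


def mistake_rate(observations: List[Observation]) -> float:
    """Share of observations coded as mistakes."""
    if not observations:
        raise ValueError("need at least one observation")
    return sum(is_mistake(o) for o in observations) / len(observations)


# Hypothetical records: correct, wrong, correct-but-at-the-limit, no choice.
sample = [
    Observation(chosen=2, optimal=2, seconds=30.0),
    Observation(chosen=1, optimal=2, seconds=40.0),
    Observation(chosen=2, optimal=2, seconds=75.0),
    Observation(chosen=None, optimal=2, seconds=75.0),
]
rate = mistake_rate(sample)
```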

Table 24 of Electronic supplementary material Appendix B.3 repeats the regressions reported in Table 11 by replacing the measure for Cognitive Score with self-reported GPA. The results are qualitatively the same.

In all relevant analysis, “No Additional Mistakes" and “No Additional Mistakes or Time Costs" are defined at the subject-\(O_{i}A_{j}\) decision problem type level, independent of behavior in other decision problem types. As such, a subject could be considered to have made “No Additional Mistakes" in some decision problems, but not others, and may appear in some cells of Tables 12 and 13, but not all. These measures do not require any joint conditions over multiple decision problem types for a given subject.

We would, however, like to caution the reader against interpreting these results as evidence that unavailable options and irrelevant attributes can never affect welfare for a DM when presented alone. While this is mostly true in our experimental dataset, no single experiment (or set of experiments) can fully explore the parameter space of such decision problems so that we can precisely estimate the full specification of the mistake rate function. Our results simply indicate that there is sufficient evidence that, within the confines of our experimental design, the interaction between unavailable options and irrelevant attributes indeed matters.

We also view mistake rates in the treatments used for robustness as lower bounds on true mistake rates. The mistake rate for the baseline treatment of this dataset was \(16.8\%\), lower than the baseline mistake rate of \(21.3\%\) for the main dataset. This difference could be due to the relative overall easiness of the robustness experiments, with a maximum of \(O_{8}A_{8}\) difficulty rather than the \(O_{15}A_{15}\) of the main experiments. Nevertheless, it is important to note that even the lower bound of the mistake rate in \(O_{8}A_{8}\) is higher than those observed in \(O_{5}A_{15}\) and \(O_{15}A_{5}\).

References

Becker, G. M., DeGroot, M. H., & Marschak, J. (1964). Measuring utility by a single-response sequential method. Behavioral Science, 9(3), 226–232.

Bordalo, P., Gennaioli, N., & Shleifer, A. (2012). Salience theory of choice under risk. Quarterly Journal of Economics, 127(3), 1243–1285.

Bordalo, P., Gennaioli, N., & Shleifer, A. (2013). Salience and consumer choice. Journal of Political Economy, 121(5), 803–843.

Bordalo, P., Gennaioli, N., & Shleifer, A. (2016). Competition for attention. The Review of Economic Studies, 83(2), 481–513.

Caplin, A., Dean, M., & Martin, D. (2011). Search and satisficing. American Economic Review, 101(7), 2899–2922.

Cohen, P., Cohen, J., Aiken, L. S., & West, S. G. (1999). The problem of units and the circumstance for POMP. Multivariate Behavioral Research, 34(3), 315–346.

Farquhar, P. H., & Pratkanis, A. R. (1993). Decision structuring with phantom alternatives. Management Science, 39(10), 1214–1226.

Filiz-Ozbay, E., Ham, J. C., Kagel, J. H., & Ozbay, E. Y. (2016). The role of cognitive ability and personality traits for men and women in gift exchange outcomes. Experimental Economics. https://doi.org/10.1007/s10683-016-9503-2.

Fischbacher, U. (2007). z-Tree: Zurich toolbox for ready-made economic experiments. Experimental Economics, 10(2), 171–178.

Gabaix, X., Laibson, D., Moloche, G., & Weinberg, S. (2006). Costly information acquisition: experimental analysis of a boundedly rational model. American Economic Review, 96(4), 1043–1068.

Kahneman, D., & Tversky, A. (1979). Prospect theory: An analysis of decision under risk. Econometrica, 47(2), 263–292.

Klabjan, D., Olszewski, W., & Wolinsky, A. (2014). Attributes. Games and Economic Behavior, 88, 190–206.

Kőszegi, B., & Szeidl, A. (2012). A model of focusing in economic choice. The Quarterly Journal of Economics, 128(1), 53–104.

Lleras, J. S., Masatlioglu, Y., Nakajima, D., & Ozbay, E. Y. (2017). When more is less: Limited consideration. Journal of Economic Theory, 170, 70–85.

Manzini, P., & Mariotti, M. (2007). Sequentially rationalizable choice. American Economic Review, 97(5), 1824–1839.

Manzini, P., & Mariotti, M. (2012). Categorize then choose: Boundedly rational choice and welfare. Journal of the European Economic Association, 10(5), 1141–1165.

Manzini, P., & Mariotti, M. (2014). Stochastic choice and consideration sets. Econometrica, 82(3), 1153–1176.

Masatlioglu, Y., Nakajima, D., & Ozbay, E. Y. (2012). Revealed attention. American Economic Review, 102(5), 2183–2205.

Oprea, R. (2019). What is complex? Working paper.

Reutskaja, E., Nagel, R., Camerer, C. F., & Rangel, A. (2011). Search dynamics in consumer choice under time pressure: An eye-tracking study. American Economic Review, 101(2), 900–926.

Geng, S., Pejsachowicz, L., & Richter, M. (2017). Breadth versus depth.

Sanjurjo, A. (2017). Search with multiple attributes: Theory and empirics. Games and Economic Behavior, 104, 535–562.

Soltani, A., De Martino, B., & Camerer, C. (2012). A range-normalization model of context-dependent choice: A new model and evidence. PLoS Computational Biology, 8(7), e1002607.


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

We thank Gary Charness, Mark Dean, Allan Drazen, Daniel Martin, Yusufcan Masatlioglu, Pietro Ortoleva, Ariel Rubinstein, and Lesley Turner for helpful comments and fruitful discussions. We also would like to thank our anonymous reviewers for useful suggestions.


Cite this article

Chadd, I., Filiz-Ozbay, E. & Ozbay, E.Y. The relevance of irrelevant information. Exp Econ 24, 985–1018 (2021). https://doi.org/10.1007/s10683-020-09687-3


DOI: https://doi.org/10.1007/s10683-020-09687-3