Abstract

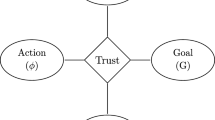

We argue that the notion of trust, as it figures in an ethical context, can be illuminated by examining research in artificial intelligence on multi-agent systems in which commitment and trust are modeled. We begin with an analysis of a philosophical model of trust based on Richard Holton’s interpretation of P. F. Strawson’s writings on freedom and resentment, and we show why this account of trust is difficult to extend to artificial agents (AAs) as well as to other non-human entities. We then examine Margaret Urban Walker’s notions of “default trust” and “default, diffuse trust” to see how these concepts can inform our analysis of trust in the context of AAs. In the final section, we show how ethicists can improve their understanding of important features in the trust relationship by examining data resulting from a classic experiment involving AAs.


Notes

In a provocative (and now, arguably, classic) paper, Floridi and Sanders (2004) suggest that AAs can be fully rational, autonomous, and moral agents (in addition to being “moral patients”). They also contrast autonomous artificial agents with what they call “heteronomous artificial agents.” But not everyone accepts the view that AAs can qualify as moral agents. For example, Johnson (2006) argues that because AAs lack intentionality, they cannot be viewed as moral agents, even if they satisfy the requirements for “moral entities.” And Himma (2009) argues that for AAs to qualify as moral agents, they would have to convincingly demonstrate that they possess a range of properties, including consciousness. Others have questioned whether AAs can be “responsible entities,” and some suggest that we may need to expand the notion of responsibility because of the kinds of accountability issues that AAs raise (Stahl 2006). We will not pursue these controversies here. We agree with Moor (2006) that there are at least four distinct levels at which AAs can have an “ethical impact,” even if we cannot attribute moral accountability to AAs themselves and even if AAs do not qualify as (full) moral agents.

We use the standard definition of an artificial agent introduced by Subrahmanian et al. (2000, p. 4), which also makes it easy to define a multi-agent system. On this definition, an artificial software agent consists of a body of software providing useful services that other agents might employ, a description of the services accessible to other agents, the capacity for autonomous action, the capacity to describe how the agent determines which specific course of action to take, and the capacity to interact with other agents, both artificial and human, in environments ranging from cooperative to hostile.

We also believe that researchers working on multi-agent systems who model the notions of commitment and trust in their experiments can benefit from examining the philosophical literature on trust. We develop this argument in a separate paper.

American Heritage College Dictionary (Fourth edition), Houghton Mifflin Company, 2002.

Peter Strawson, “Freedom and Resentment,” in Freedom and Resentment and Other Essays, Routledge, 1974, pp. 1–28. Strawson introduces his distinction between the “participant attitude toward others” and an “objective attitude towards others,” where the participant attitude brings in the normative notion of responsibility to others, while the objective attitude is characterized by non-normative, descriptive features.

See Richard Holton, “Deciding to Trust, Coming to Believe,” in Australasian Journal of Philosophy, 72 (1994), pp. 63–76.

Margaret Urban Walker, Moral Repair: Reconstructing Moral Relations After Wrongdoing, Cambridge University Press, 2006, p. 79.

Walker, op. cit., p. 85.

Ibid., p. 85.

See, for example, Murdoch (1973). We cannot defend this claim here, since doing so would require arguments showing how the metaphysics of fictional entities yields a natural explanation of how we can stand in normative relations to fictional entities.

Walker, op. cit., pp. 85ff.

Ibid., p. 85.

A special issue of Artificial Intelligence (vol. 142, 2002) was devoted to many of the papers delivered at ICMAS-2000. In a separate paper, we argue that designers of multi-agent systems should pay attention to work in ethics.

Barbara Grosz, Sarit Kraus, David G. Sullivan, and Sanmay Das, “The influence of social norms and social consciousness on intention reconciliation,” Artificial Intelligence, 142 (2002), pp. 147–177.

However, Quine does allow that a non-principled defeasible form of the distinction can be entertained. See “Two Dogmas of Empiricism,” in W. V. O. Quine, From a Logical Point of View (Harvard University Press, 1953), pp. 20–46.

Grosz et al., op. cit., p. 152.

American Heritage College Dictionary, op. cit. Some take the notion of “entrusting” to be weaker than trusting; i.e., A can entrust B to do X, without B’s being committed to doing X. However, we will not pursue this distinction here.

In the present essay, at any rate, we will not discuss whether the simulation involved is a simulation of an authentic intention, nor will we say what a human intention consists in.

There are other possible sources of the restriction against doing both A and B. These include social-commitment policies, cultural norms, or psychological norms, such as not wanting to appear outlandish in one’s actions. We will not investigate this matter here.

Grosz et al., op. cit., pp. 156–157.

Moreover, this level of abstraction isolates constitutive and logical properties of the notions, and thus does not succumb to the fallacy of identifying two different things because they share certain features.

The SPIRE framework includes various parameters that need to be set. These parameters are intrinsic to the kinds of decisions that software agents in a collaborative setting make. By varying a parameter, one can observe its overall effect on the issue under study. Of course, the ways in which parameters interact in a complex system can be daunting, so the methodology is to vary only one or two parameters at a time, which keeps the study of the system reasonably tractable. There is also a fundamental distinction between parameters that are intrinsic to the agent and the tasks it performs, and parameters that are intrinsic to the environment and the physical setting in which the agent is situated. Separating these two kinds of parameters allows one to study how each produces effects within the system and to identify the cause of those effects. One can thus separate out the contributions of a social-consciousness factor, such as an agent’s reputation, from extrinsic factors, such as the number of job offers made to an agent that has a prior commitment to work cooperatively toward a particular goal.
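The one-parameter-at-a-time methodology described in the preceding note can be illustrated with a minimal Python sketch. It is not SPIRE’s code: the parameter names (`reputation_weight`, `outside_offers`), their baseline values, and the `toy_simulate` function are hypothetical stand-ins chosen for illustration. The sketch shows only the design choice at issue: agent-intrinsic and environment parameters are kept in separate groups, and a sweep changes exactly one parameter while every other parameter stays at its baseline, so that any change in the outcome can be attributed to that parameter.

```python
from typing import Callable, Dict, List, Tuple

Params = Dict[str, float]

# Hypothetical baselines, kept in two separate groups (names and values are
# illustrative assumptions, not SPIRE's actual parameters).
AGENT_BASELINE: Params = {"reputation_weight": 0.5}  # intrinsic to the agent and its tasks
ENV_BASELINE: Params = {"outside_offers": 2.0}       # intrinsic to the environment


def one_factor_sweep(
    simulate: Callable[[Params, Params], float],
    group: str,
    name: str,
    values: List[float],
) -> List[Tuple[float, float]]:
    """Vary one parameter in one group; hold every other parameter at baseline."""
    results = []
    for value in values:
        # Fresh copies so the baselines themselves are never modified.
        agent, env = dict(AGENT_BASELINE), dict(ENV_BASELINE)
        target = agent if group == "agent" else env
        if name not in target:
            raise KeyError(f"{name!r} is not a {group} parameter")
        target[name] = value
        results.append((value, simulate(agent, env)))
    return results


def toy_simulate(agent: Params, env: Params) -> float:
    """Hypothetical stand-in for a simulation run: share of commitments honored.

    A higher reputation weight raises the share; more outside job offers lower it.
    """
    score = 0.5 + 0.4 * agent["reputation_weight"] - 0.1 * env["outside_offers"]
    return max(0.0, min(1.0, score))


if __name__ == "__main__":
    sweep = one_factor_sweep(toy_simulate, "agent", "reputation_weight", [0.0, 0.5, 1.0])
    for value, outcome in sweep:
        print(f"reputation_weight={value}: honored={outcome:.2f}")
```

Because the environment group is held fixed during an agent-parameter sweep (and vice versa), the two kinds of contribution are never confounded within a single run of the sweep; interactions between parameters would require a separate, deliberately two-factor design.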

Grosz et al., op. cit., pp. 157–159.

Abbreviations

- AAs: Artificial agents
- DSCP: Discount-based social-commitment policy
- RSCP: Ranking-based social-commitment policy
- SPIRE: Shared plans intention reconciliation experiments

References

American Heritage College Dictionary. 4th edn. (2002). New York: Houghton Mifflin Company.

Baier, A. (1986). Trust and antitrust. Ethics, 96(2), 231–260.

Camp, L. J. (2000). Trust and risk in internet commerce. Cambridge, MA: MIT Press.

Floridi, L., & Sanders, J. W. (2004). On the morality of artificial agents. Minds and Machines, 14(3), 349–379.

Fried, C. (1990). Privacy: A rational context. In M. D. Ermann, M. B. Williams, & C. Gutierrez (Eds.), Computers, ethics, and society (pp. 51–63). New York: Oxford University Press.

Gambetta, D. (1998). Can we trust trust? In D. Gambetta (Ed.), Trust: Making and breaking cooperative relations (pp. 213–238). New York: Blackwell.

Grodzinsky, F. S., Miller, K. W., & Wolf, M. J. (2009). Developing artificial agents worthy of trust: Would you buy a used car from this artificial agent? In M. Bottis (Ed.), Proceedings of the eighth international conference on computer ethics—philosophical enquiry (CEPE 2009) (pp. 288–302). Athens, Greece: Nomiki Bibliothiki.

Grosz, B., Kraus, S., Sullivan, D. G., & Das, S. (2002). The influence of social norms and social consciousness on intention reconciliation. Artificial Intelligence, 142, 147–177.

Himma, K. E. (2009). Artificial agency, consciousness, and the criteria for moral agency: What properties must an artificial agent have to be a moral agent? Ethics and Information Technology, 11(1), 19–29.

Holton, R. (1994). Deciding to trust, coming to believe. Australasian Journal of Philosophy, 72, 63–76.

Johnson, D. G. (2006). Computer systems: Moral entities but not moral agents. Ethics and Information Technology, 8(4), 195–204.

Lim, H. C., Stocker, R., & Larkin, H. (2008). Review of trust and machine ethics research: Towards a bio-inspired computational model of ethical trust (CMET). In Proceedings of the 3rd international conference on bio-inspired models of network, information, and computing systems. Hyogo, Japan, November 25–27, Article No. 8.

Luhmann, N. (1979). Trust and power. Chichester, UK: John Wiley and Sons.

Moor, J. H. (2006). The nature, importance, and difficulty of machine ethics. IEEE Intelligent Systems, 21(4), 18–21.

Murdoch, I. (1973). The black prince. London: Chatto and Windus.

Nissenbaum, H. (2001). Securing trust online: Wisdom or oxymoron. Boston University Law Review, 81(3), 635–664.

O’Neill, O. (2002). Autonomy and trust in bioethics. Cambridge: Cambridge University Press.

Quine, W. V. O. (1953). Two dogmas of empiricism. In W. V. O. Quine (Ed.), From a logical point of view (pp. 20–46). Cambridge, MA: Harvard University Press.

Simon, J. (2009). MyChoice & traffic lights of trustworthiness: Where epistemology meets ethics in developing tools for empowerment and reflexivity. In M. Bottis (Ed.), Proceedings of the eighth international conference on computer ethics—philosophical enquiry (CEPE 2009) (pp. 655–670). Athens, Greece: Nomiki Bibliothiki.

Stahl, B. C. (2006). Responsible computers? A case for ascribing quasi-responsibility to computers independent of personhood and agency. Ethics and Information Technology, 8(4), 205–213.

Strawson, P. F. (1974). Freedom and resentment. In P. F. Strawson (Ed.), Freedom and resentment and other essays (pp. 1–28). New York: Routledge.

Subrahmanian, V. S., Bonatti, P., Dix, J., Eiter, T., Kraus, S., Ozcan, F., et al. (2000). Heterogeneous agent systems: Theory and implementation. Cambridge, MA: MIT Press.

Taddeo, M. (2008). Modeling trust in artificial agents, a first step toward the analysis of e-trust. In Proceedings of the sixth European conference of computing and philosophy, University for Science and Technology, Montpellier, France. Reprinted in C. Ess & M. Thorseth (Eds.), Trust and virtual worlds: Contemporary perspectives. Bern: Peter Lang (in press).

Taddeo, M. (2009). Defining trust and e-trust: from old theories to new problems. International Journal of Technology and Human Interaction, 5(2), 23–25.

Walker, M. U. (2006). Moral repair: Reconstructing moral relations after wrongdoing. Cambridge: Cambridge University Press.

Weckert, J. (2005). Trust in cyberspace. In R. Cavalier (Ed.), The impact of the internet on our moral lives (pp. 95–120). Albany, NY: State University of New York Press.

Acknowledgments

We are grateful to Frances Grodzinsky, as well as to two anonymous reviewers, for some helpful suggestions on an earlier draft of this essay.


Additional information

This essay is the first in a series of papers in which we examine the role of trust in multi-agent systems. In subsequent papers we consider the issue of whether artificial agents can trust and be trusted, the connection between frame problems in artificial intelligence and the trust relation, the conceptual connection between the moral notion of trust and the epistemic notion of trust, and the complex network of connections between artificial agents and human agents with respect to both moral trust and epistemic trust.


About this article

Cite this article

Buechner, J., Tavani, H.T. Trust and multi-agent systems: applying the “diffuse, default model” of trust to experiments involving artificial agents. Ethics Inf Technol 13, 39–51 (2011). https://doi.org/10.1007/s10676-010-9249-z
