Abstract

We investigate the relative probabilistic support afforded by the combination of two analogies based on possibly different structural similarities (as opposed to e.g. shared predicates) within the context of Pure Inductive Logic and under the assumption of Language Invariance. We show that whilst repeated analogies grounded on the same structural similarity only strengthen the probabilistic support, this need not be the case when combining analogies based on different structural similarities. That is, two analogies may provide less support than each would individually.


1 Introduction

Suppose that I am considering how likely it is that my son would enjoy a visit to the cinema to see The Sound of Music. Thinking about it I recall that last year he did enjoy seeing Toy Story which somewhat enhances my belief that he will also enjoy The Sound of Music. I then remember my aunt telling me how much she enjoyed it. Should this now further increase the probability I would give to my son liking it?

Here is an example of two facts which individually seem to provide analogical support for my son liking The Sound of Music yet in combination they seem to be pulling in different directions and possibly canceling each other out. (Like enjoying curry and rhubarb crumble but not both at the same time!)

The plan in this paper is to investigate this issue of combining analogical support (at least up to two such supports) within the context of Pure Inductive Logic (PIL for short). Of course, since this version of Carnapian Inductive Logic considers the assignment of rational, or logical, probability in the absence of any intended interpretation of the language, on closer scrutiny the above example hardly seems relevant (since we already know a great deal about films, aunts, etc., so they are very far indeed from being uninterpreted). Nevertheless it still seems to us interesting to consider this question ‘in vacuo’, in other words as a nascent artificial agent might do.

Within philosophy, and similarly in AI and psychology, there is a considerable literature on analogy, see Bartha (2009, 2013) for an excellent overview. Bartha, however, explicitly avoids considering approaches to analogical reasoning within the general framework of Carnap’s Inductive Logic, as found for example in Carnap (1952, 1980), Carnap and Stegmüller (1959), Costantini (1983), Festa (1996), Huttegger (2014), Kuipers (2000, 1984), Maher (2000, 2001), Maio (1995), Niiniluoto (1981, 1988), Pietarinen (1972), Romeijn (2006), Skyrms (1993), Spohn (1981), Welch (1999).Footnote 1 It is within this framework, more specifically within PIL and directly following on from the earlier (Hill et al. 2011; Hill and Paris 2013a, b; Howarth et al. 2016; Paris and Vencovská 2015), that this present paper is set. Working within such a mathematical setting has the advantage of precision and permanence: a correct theorem cannot be denied, only at worst put aside as irrelevant, though we will argue later that results in this formal backwater do have relevance within the wider ambit.

2 Notation and Context

The context of this paper is PIL as explained, for example, in Paris (2015) and Paris and Vencovská (2015). Thus we have a language L with relation symbols \(R_1, R_2, \ldots , R_q\), say of finite arities \(r_1,r_2, \ldots , r_q\) respectively, and constants \(a_n\) for \(n \in {\mathbb {N}}^+ = \{1,2,3, \ldots \}\), and no function symbols nor equality.Footnote 2 Let SL denote the set of first order sentences of this language L.

We are interested in picking a rational probability function w on SL, i.e. a function \(w:SL \rightarrow [0,1]\) such that for \(\theta , \phi , \exists x \,\psi (x) \in SL\)

-

(P1)

If \(\models \theta \) then \(w(\theta ) = 1\),

-

(P2)

If \(\models \lnot (\theta \wedge \phi )\) then \(w(\theta \vee \phi ) = w(\theta ) + w(\phi )\),

-

(P3)

\(w(\exists x \, \psi (x)) = \lim _{m \rightarrow \infty } w(\bigvee ^m_{i=1}\psi (a_i)),\)

where rational is customarily identified with w satisfying certain arguably rational principles. Whilst there is currently no clear consensus on what these principles should be, one that is very widely adopted is:

The Principle of Constant Exchangeability, Ex.

A probability function w on SL satisfies Constant Exchangeability if, for any permutation \(\sigma \) of \(1,2,\ldots \) and \(\theta (a_1,\ldots ,a_m) \in SL\),

\[ w(\theta (a_1,\ldots ,a_m)) = w(\theta (a_{\sigma (1)},\ldots ,a_{\sigma (m)})). \]

All the probability functions considered in this paper will be assumed to satisfy Ex.

A second, similar principle is:

The Principle of Predicate Exchangeability, Px

If \(R_i,R_j\) are relation symbols of \(L_q\), of the same arity, then for \(\theta \in SL\),

\[ w(\theta ) = w(\theta '), \]

where \(\theta '\) is the result of transposing \(R_i, R_j\) throughout \(\theta \).

Px necessarily differs from Ex as far as our default language L is concerned in that L has infinitely many constant symbols but only finitely many relation symbols.Footnote 3 If w satisfying Ex + Px can be extended to a probability function \(w_\infty \) on the sentences of a language \(L_\infty \) with infinitely many relation symbols of each arity and continue to satisfy Ex + Px we say that w satisfies Language Invariance, Li. This is a stronger condition on w than simply satisfying Ex + Px nevertheless it holds widely for the main probability functions considered in this subject, for example Carnap’s Continuum of Inductive Methods. It is also arguably rational on the grounds that the probability assigned to a sentence \(\theta \) should surely not depend on the presence or otherwise of relation symbols in the overlying language which are not mentioned in \(\theta \). This amounts to the requirement that a rational probability function on a language L should be extendible to a rational probability function on any larger language, and once Px is taken as a condition for rationality this is equivalent to Li by a sequential compactness argument (see Paris and Vencovská for more details).

3 The Results

In Hill and Paris (2013b) a further putative rationality principle was proposed based on ‘probabilistic analogical support by structural similarity’:Footnote 4

The Counterpart Principle, CP

Let \(\theta , \theta ' \in SL\) be such that \(\theta '\) is the result of replacing some constant/relation symbols in \(\theta \) by new constant/relation symbols not occurring in \(\theta \). Then Footnote 5

\[ w(\theta \,|\,\theta ') \ge w(\theta ). \]

For example in the case where \(R_1\) was unary and \(R_2,R_3\) binary and

we could have that

and this instance of CP would give

We are thinking of \(\theta \) and \(\theta '\) in the statement of CP as capturing what is meant by structural similarity, that they have exactly the same syntactic form, only some names have changed. For example, purely in terms of the syntax and in the absence of any interpretation my aunt enjoying The Sound of Music at Christmas is structurally similar to my son enjoying The Sound of Music on his birthday. For such structurally similar \(\theta , \theta '\) just Ex + Px force that they must get the same probability. The Counterpart Principle adds to this formal connection by asserting that there is also a material connection in that conditioning on \(\theta '\) enhances (or at least does not diminish) the probability of \(\theta \).

Relating CP to the common format of analogical reasoning within philosophy as a whole (Bartha 2013), gives a Candidate Analogical Inference Rule (\({\mathcal{{R}}}\)) saying, in short, that having a mapping \(^*\) from a source domain \({\mathcal{{S}}}\) to a target domain \({\mathcal{{T}}}\) the plausibility of a proposition \(Q^*\) holding in \({\mathcal{{T}}}\) given that Q holds in \({\mathcal{{S}}}\) should be stronger the more features \(\theta , \theta ^*\) the domains have in common as opposed to features on which they differ. Within this template CP corresponds to the null case where any evidence of matching/dismatching features is absent (a case that Bartha does not treat). In fact as we shall explain in the penultimate section allowing in even one such matching feature can, rather unexpectedly, destroy the analogical support in the way we are measuring it.

The general pattern of support by analogy that CP endeavours to capture seems to be rather common in our everyday lives, and in science. For example learning that every natural number can be proved to be the sum of four squares might well cause one to guess that every natural number can also be proved to be the sum of nine cubes. From such familiarity one might then feel that there was possibly a case to argue for the rationality of CP. In fact we do not really need to do so since by the following theorem from (Hill and Paris 2013b) it is actually inherited from Li.

Theorem 1

Li implies CP.

In a sense one might say that this theorem provides one answer to the question raised by Hesse (1966), as to what is the philosophical justification for analogical reasoning. For whenever we reason it is necessary to simply discard almost everything we know—there is just too much of it to fully incorporate. We must ring fence a tiny fraction of it that we consider relevant and reason simply on the basis of that knowledge. Theorem 1 tells us that if all we consider relevant is our knowledge that \(\theta '\) holds and we assign probabilities rationally, in the sense of satisfying Li (and Ex) then the probability of \(\theta \) will be enhanced.Footnote 6 From this viewpoint the derived analogical support arises through adopting Li.

Still we should emphasize here that what is ‘derived’ is an increased belief in \(\theta \) within the ring fence. Whether or not this has any relevance to beliefs in the wider world outside the ring fence depends on how far we can accept the ring fence assumption.Footnote 7 It should also be made clear that we are not claiming that this increased belief equates with increased probability of being ‘true’ in any objective sense. But what it might be argued to provide is plausibility, and in turn the impetus to attempt to confirm a truth, though no more than that. For example the discovery that every natural number is the sum of \(4 = 2^2\) squares might equally have encouraged mathematicians to try to prove that every natural number is the sum of \(2^3 =8\) cubes rather than \(3^2=9\) cubes.

As a further development of the idea of structural similarity as embodied in CP the following result is shown in Paris and Vencovská (2015):

Theorem 2

Suppose that \(\theta ,\theta ',\theta '' \in SL\) are such that \(\theta '\) is the result of replacing some constant/relation symbols in \(\theta \) by new constant/relation symbols not occurring in \(\theta \) and similarly \(\theta ''\) is the result of replacing some constant/relation symbols in \(\theta '\) by new constant/relation symbols not occurring in \(\theta \) or \(\theta '\). Then for w satisfying Li, Footnote 8

\[ w(\theta \,|\,\theta ') \ge w(\theta \,|\,\theta ''). \qquad (1) \]

Carrying on from the above example then this gives that

For \(\theta , \theta ', \theta ''\) as in this theorem there is a clear sense in which \(\theta '\) is structurally at least as similar to \(\theta \) as \(\theta ''\) is. In other words there is a sense of distance, or degree of similarity, the nearer \(\theta '\) is to \(\theta \) the more analogical support it provides. In summary then we could say that Theorem 1 tells us that \(\theta '\) provides ‘analogical support by structural similarity’ for \(\theta \) under the assumption that w satisfies Li and Theorem 2 tells us that the closer the analogy (i.e. structural similarity) the stronger the analogical support.

Theorem 2 raises the question of what the effect is of combining multiple such analogies and more generally what is the algebra of analogical support by similarity in the presence of Li? We will certainly not answer that question in this paper but will settle for elucidating the situation when ‘multiple’ is weakened to ‘two, at most’.

In order to state our results, which will be proved in full in the next section, it will be useful to sketch an artifice in the proof of Theorem 2 as given in (Paris and Vencovská 2015) because it enables us to reduce these questions to a particularly simple form. Leaving implicit any constant and relation symbols common to them all, we can write these \(\theta , \theta '\) and \(\theta ''\) as

where the \(\vec {B}_1, \vec {B}_2, \vec {C}_1,\vec {C}_2,\vec {D}_1, \vec {D}_2, \vec {D}_3\) are entirely disjoint blocks of distinct constant and/or relation symbols and the \(\vec {B}_1, \vec {B}_2\) are matching in terms of the positions of constant and relation symbols, and similarly for the \(\vec {C},\vec {D}\). So for example if \(\vec {B}_1 = \langle a_1, R_1, R_2 \rangle \) with \(R_1\) unary and \(R_2\) ternary then \(\vec {B}_2\) must similarly be of the form \(\langle a_k, R_m, R_g \rangle \) with \(R_m\) unary, \(R_g\) ternary.

Let the probability function w on SL satisfy Li and as explained above let \(w_\infty \) be the extension of w to \(SL_\infty \) satisfying Ex + Px. Let \(\vec {B}_{n}\) for \(n >2\) be blocks of totally new relation and constant symbols from \(L_\infty \) matching \(\vec {B}_1\) and similarly produce \(\vec {C}_n, \vec {D}_n\) matching \(\vec {C}_1\), \(\vec {D}_1\) respectively, so that no constant or relation symbol appears in more than one block of any sort. Notice that since \(w_\infty \) extends w and satisfies Ex + Px to show that \(w(\theta \,|\,\theta ') \ge w(\theta \,|\,\theta '')\) it is enough to show that

Let \({\mathcal{{L}}}\) be the language with a single binary relation symbol R. Define a probability function v on \(S{\mathcal{{L}}}\) by

and more generally

where the \(\epsilon _{i,j} \in \{0,1\}\) and \(R^1=R, R^0=\lnot R,\) etc. (By a theorem of Gaifman, see Gaifman (1964) or Paris and Vencovská (2015), v extends uniquely to a probability function on SL satisfying Ex.)

Referring to Paris and Vencovská (2015, p. 187) for the details it can now be shown that for v satisfying Ex,

But this is just another way of formulating the inequality (2).

From this sketch we see that to show that (1) holds for w satisfying Li it is enough to show that (3) holds for a probability function v on \(S{\mathcal{{L}}}\) satisfying Ex.

We shall use the same method to investigate other simple cases of ‘analogical support by (multiple) instances of structural similarity’. Note that any probability function on \(S{\mathcal{{L}}}\) satisfying Ex also satisfies Li: for \({\mathcal{{L}}}\) has just one (binary) relation symbol and if v is a probability function on a language with at most one relation symbol of each arity which satisfies Ex then v trivially satisfies Px and also satisfies Li since we can just introduce relation symbols which for each arity are identical (to within the name). Hence showing above that (1) held for w satisfying Li was in fact equivalent to showing that (3) held for v on \(S{\mathcal{{L}}}\) satisfying Ex; any v on \(S{\mathcal{{L}}}\) satisfying Ex and failing (3) would have provided a counterexample to (1) satisfying Li.

This observation leads us to concentrate on deciding which inequalities must hold between

for w a probability function on \(S{\mathcal{{L}}}\) satisfying Ex. In other words we are interested in comparing the degrees of support afforded by up to two individually structurally similar sentences.Footnote 9 To illustrate these within a less formal context suppose that we read ‘enjoys’ for R, ‘my son’ for \(a_1\), The Sound of Music for \(a_2\) and so on. Then (a) would correspond, ceteris paribus, to the probability I would give to my son enjoying The Sound of Music, (c) to his enjoying it on my recalling that he enjoyed Toy Story and (g) to when I also recalled that my aunt enjoyed The Sound of Music. Likewise (b) would correspond to the probability I would give to my son enjoying The Sound of Music just given that my work colleague enjoyed Toy Story.Footnote 10 (Of course this informal example is very special because in the general case, as indicated earlier, R will correspond to a template for a sentence and the \(a_i\) to blocks of constants and/or relations.)

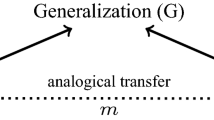

The answers we obtain are given in Fig. 1, where a connection from x to y by upward sloping lines means that y is always at least as large as x (and for some w strictly larger) whilst in unconnected cases the inequality can go either way. In each case, as above, establishing the inequality here provides a corresponding inequality for the more general case of multiple analogical support whilst a counterexample to the inequality holding itself provides a counterexample to the corresponding theorem for multiple analogical support.

So, with the above ring fence assumptions in place, (a) \(\le \) (b) prescribes that the probability I would give that my son will enjoy The Sound of Music should not be diminished when I recall his previously enjoying Toy Story. Similarly (d) \(\le \) (g) would prescribe one giving at least as much probability to the generalized Pell’s equation \( x^2 -3y^2=6\) having a solution when judging (just!) on the basis of \( x^2 -3y^2=1\) and \( x^2 -10y^2=6\) having solutions than when judging instead on the basis of \( x^2 -5y^2=4\) and \( x^2 -2y^2=1\) having solutions.

Each of these inequalities then corresponds to a ‘Principle of Analogical Support by Structural Similarity’ satisfied by a probability function satisfying Li. For example (b) \(\le \) (e) gives that for w satisfying Li,

whilst we cannot in general conclude that for such a w the instance

of (d) \(\le \) (c) holds.

As we shall see for the most part the inequalities and incompatibilities in the above diagram will be straightforward to show. The main novelty in this paper is in proving Theorem 4, that (d) \(\le \) (g).

4 The Proofs

We now set about proving that the inequalities indicated in the above diagram are all that necessarily hold for w a probability function on \(S{\mathcal{{L}}}\) satisfying Ex. We shall start by giving the positive results. In order to do this, as well as to later furnish some counterexamples, we need to recall (and slightly rejig) the Representation Theorem for polyadic probability functions as given in (Paris and Vencovská 2015, Chapter 25) in the special case of the language \({\mathcal{{L}}}\) as above.

Let \(D=(d_{i,j})\) be an \(N \times N\) \(\{0,1\}\)-matrix. Define a probability function \(w^D\) on \(S{\mathcal{{L}}}\) by setting

\[ w^D\Big (\bigwedge _{i,j=1}^{n} R^{\epsilon _{i,j}}(a_i,a_j)\Big ) \]

to be the probability of (uniformly) randomly picking, with replacement, \(h(1), h(2),\) \(\ldots ,h(n) \) from \( \{1,2, \ldots , N\}\) such that for each \(i,j \le n\), \( d_{h(i),h(j)}= \epsilon _{i,j}\). This uniquely determines a probability function on \(S{\mathcal{{L}}}\) satisfying Ex. (For details see e.g. Paris and Vencovská 2015, Chapter 7).
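As a rough illustration of this definition, the following Python sketch computes \(w^D\) by brute force for a conjunction of literals \(R^{\epsilon }(a_i,a_j)\), enumerating every choice of the \(h(i)\). The function name `w_D`, the triple encoding of literals and the sample matrix are illustrative assumptions and not notation from the text. Only the constants actually mentioned are enumerated, which is harmless because the picks are independent.

```python
from fractions import Fraction
from itertools import product

def w_D(D, literals):
    """Probability under w^D of a conjunction of literals.

    D        : N x N list of lists with entries 0 or 1.
    literals : list of (i, j, eps), read as R^eps(a_i, a_j),
               with eps = 1 for R and eps = 0 for not-R.
    """
    N = len(D)
    # Constants mentioned in the conjunction (hypothetical 1-based labels).
    consts = sorted({c for (i, j, _) in literals for c in (i, j)})
    hits = 0
    # Enumerate all uniform, independent picks h(c) in {0, ..., N-1}.
    for choice in product(range(N), repeat=len(consts)):
        h = dict(zip(consts, choice))
        if all(D[h[i]][h[j]] == eps for (i, j, eps) in literals):
            hits += 1
    return Fraction(hits, N ** len(consts))

# Sample matrix, chosen only for illustration.
D = [[1, 1],
     [0, 1]]
print(w_D(D, [(1, 2, 1)]))                # R(a_1, a_2)
print(w_D(D, [(1, 2, 1), (1, 3, 1)]))     # R(a_1, a_2) and R(a_1, a_3)
```

Exact rationals are used so that comparisons between such probabilities are not blurred by floating point rounding.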

Clearly convex mixtures of these \(w^D\) also satisfy Ex. The converse (that any probability function satisfying Ex is a convex mixture of these \(w^D\)) does not hold but as shown in (Paris and Vencovská 2015, Chapter 25) if we move to a suitable non-standard universe and take N to be a non-standard integer then any probability function w on \(S{\mathcal{{L}}}\) satisfying Ex is a convex mixture (as an integral) of the standard parts \(^\circ w^D\) of \(w^D\) from this non-standard universe (see Paris and Vencovská 2015, Theorem 25.1). The important point to take away from this as far as this paper is concerned is that it is sometimes enough to check whether an inequality holds for these \(^\circ w^D\) and since the mathematics is exactly the same it is just as good to check it for the \(w^D\) for standard N.

Given this observation, it turns out that (d) \(\le \) (g) is a consequence of the following theorem whose rather messy proof is given in Paris and Vencovská.

Theorem 3

Let \((d_{i,j})\) be an \(N \times N\) \(\{0,1\}\)-matrix such that \(\sum _{i,j} d_{i,j} = T >0.\) Then

where \(A_i = \sum _r d_{i,r}, B_j = \sum _{s} d_{s,j}\).

Given this inequality we can now prove the main theorem of this paper which shows that (d) \(\le \) (g).

Theorem 4

Let w be a probability function on the sentences of the binary language \({\mathcal{{L}}}=\{R\}\) satisfying Ex. Then

Proof

For D an \(N \times N\) \(\{0,1\}\)-matrix and \(n \ne m\), \(w^D(R(a_n,a_m))\) is the probability of picking \(h(n), h(m) \in \{1,2, \ldots , N\}\) such that \(d_{h(n),h(m)}=1\). In other words

\[ w^D(R(a_n,a_m)) = N^{-2} \sum _{i,j} d_{i,j}. \]

Hence, since the choices h(1), h(2), h(3), h(4), h(5), h(6) are all independent,

Likewise \(w^D(R(a_1,a_2) \wedge R(a_1,a_3) \wedge R(a_4,a_2))\) is the probability of picking h(1), h(2) such that \(d_{h(1),h(2)}=1\) and then (independently) picking h(3), h(4) such that \(d_{h(1),h(3)}=1\) and \(d_{h(4),h(2)}=1.\) For a particular choice of h(1), h(2) these latter two probabilities are respectively \(N^{-1}A_{h(1)}\) and \(N^{-1}B_{h(2)}\).

Thus the combined probability of these with \(d_{h(1),h(2)}=1\) is

By Theorem 3 this is at least

and combined with (4) we obtain that

Being a linear inequality this also holds for convex mixtures of the \(w^D\) so by the Representation Theorem (Paris and Vencovská 2015, Theorem 25.1)

for any probability function w on \(S{\mathcal{{L}}}\) satisfying Ex. Since by Ex

the denominators of the conditional probabilities on both sides of the inequality in the statement of the theorem are the same and the required result now follows. \(\square \)
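The inequality on the \(w^D\) that drives this proof can be checked exhaustively for small N. The sketch below uses the closed forms described in the proof, namely \(N^{-4}\sum _{i,j} d_{i,j}A_iB_j\) for \(w^D(R(a_1,a_2) \wedge R(a_1,a_3) \wedge R(a_4,a_2))\) and \((N^{-2}\sum _{i,j} d_{i,j})^3\) for \(w^D(R(a_1,a_2) \wedge R(a_3,a_4) \wedge R(a_5,a_6))\). The helper names are hypothetical, and a finite check of this kind is only a sanity test, not a substitute for the proof.

```python
from fractions import Fraction
from itertools import product

def theorem4_gap(D):
    """Closed-form difference between the two numerators compared in the proof.

    Returns  N^-4 * sum_{i,j} d_ij * A_i * B_j  -  (T / N^2)^3,
    where A_i are row sums, B_j are column sums and T is the total.
    """
    N = len(D)
    A = [sum(row) for row in D]
    B = [sum(D[s][j] for s in range(N)) for j in range(N)]
    T = sum(A)
    linked = Fraction(
        sum(D[i][j] * A[i] * B[j] for i in range(N) for j in range(N)),
        N ** 4,
    )
    independent = Fraction(T, N ** 2) ** 3
    return linked - independent

def all_matrices(N):
    """Yield every N x N matrix with entries in {0, 1}."""
    for bits in product((0, 1), repeat=N * N):
        yield [list(bits[r * N:(r + 1) * N]) for r in range(N)]

# Smallest gap over all 2x2 and 3x3 matrices; non-negative if the
# inequality holds in every one of these cases.
worst = min(theorem4_gap(D) for N in (2, 3) for D in all_matrices(N))
print(worst)
```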

Along similar lines, although much easier, we can show that (d) \(\le \) (e). For, when \(T =\sum _{i,j} d_{i,j} = \sum _i A_i\) as before,

and

follows by Hölder’s Inequality. Thus for w a probability function on \(S{\mathcal{{L}}}\) satisfying Ex,

Since w satisfies Ex,

and (d) \(\le \) (e) follows.
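The step used for (d) \(\le \) (e) can be sanity-checked in the same spirit. The sketch assumes that the relevant quantity is \(w^D(R(a_1,a_2) \wedge R(a_1,a_3)) = N^{-3}\sum _i A_i^2\), which is how we read the argument above, and that the inequality needed is \(\sum _i A_i^2 \ge T^2/N\). Function names are hypothetical.

```python
from fractions import Fraction
from itertools import product

def row_sum_gap(D):
    """Return  N^-3 * sum_i A_i^2  -  (T / N^2)^2  for a 0/1 matrix D.

    The first term is the chance that two independent picks from the same
    row both land on a 1; the second is the chance for two unrelated pairs.
    """
    N = len(D)
    A = [sum(row) for row in D]
    T = sum(A)
    shared_first = Fraction(sum(a * a for a in A), N ** 3)
    unrelated = Fraction(T, N ** 2) ** 2
    return shared_first - unrelated

def all_matrices(N):
    """Yield every N x N matrix with entries in {0, 1}."""
    for bits in product((0, 1), repeat=N * N):
        yield [list(bits[r * N:(r + 1) * N]) for r in range(N)]

# Non-negative in every case if the assumed inequality holds.
smallest = min(row_sum_gap(D) for N in (2, 3) for D in all_matrices(N))
print(smallest)
```

Equality occurs exactly when all row sums agree, which is the usual equality case for this kind of bound.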

Turning to the other positive inequalities, (a) \(\le \) (b) is just Theorem 1 whilst (b) \(\le \) (d) and (c) \(\le \) (f) follow similarly by applying the Principle of Instantial Relevance, PIR, see Gaifman (1971), Humburg (1971), Paris and Vencovská (2015) (or for the former using the footnote to Theorem 2). Furthermore, (b) \(\le \) (c) is (3).

We now address the inequalities that do not (necessarily) hold. To give a counterexample to (c) \(\le \) (g) consider \(w= w^D\) where D is an \(N \times N\) \(\{0,1\}\)-matrix. In this case, with the above abbreviations,

Letting D be the \(6 \times 6\) matrix

gives values to (5), (6) of 9/32 and 7/24 respectively, and provides the required counterexample. This same probability function also gives a counterexample to (e) \(\le \) (g) [hence also to (e) \( \le \) (d)].

A counterexample to (d) \(\le \) (c) is given by setting

where v is any \(c_\lambda ^{L_1}\) of Carnap’s Continuum of Inductive Methods Footnote 11 for the unary language \(L_1=\{P\}\) with \( 0< \lambda < \infty \). In this case (d) \(\le \) (c) becomes

Putting in the actual values here gives

which is false for \(\lambda \) in this range.
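To see the failure numerically, here is a small sketch under two assumptions of ours: that w reads \(R(a_i,a_j)\) as \(P(a_j)\), so that only the second coordinate matters, and that \(c_\lambda ^{L_1}\) is given by the usual rule \((n_P + \lambda /2)/(n + \lambda )\) for a language with the two atoms \(P, \lnot P\). On this reading (c) is the probability of \(P\) after one positive instance and (d) the probability after two.

```python
from fractions import Fraction

def c_lambda(n_pos, n, lam):
    """Carnap-style predictive probability for a single unary predicate P.

    n_pos : number of observed individuals satisfying P
    n     : number of observed individuals in total
    lam   : the parameter lambda, assumed to satisfy 0 < lam < infinity
    """
    lam = Fraction(lam)
    return (n_pos + lam / 2) / (n + lam)

# Sample values of lambda, chosen only for illustration.
for lam in (Fraction(1, 2), 1, 2, 10):
    c_val = c_lambda(1, 1, lam)   # one supporting instance, as in (c)
    d_val = c_lambda(2, 2, lam)   # two supporting instances, as in (d)
    print(lam, c_val, d_val, d_val <= c_val)
```

Under these assumptions the final column is `False` for every positive finite `lam`, since the difference of the cross-multiplied terms is \(\lambda /2 > 0\).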

Similarly this probability function also gives counterexamples to (e) \(\le \) (c), (f) \(\le \) (c) and (b) \(\le \) (a).

We now describe a counterexample to (g) \(\le \) (f) . Let v be a probability function on the sentences of the language \( \{P_1,P_2,P_3, \ldots \}\) where the \(P_i\) are unary, satisfying Px and Ex, and set

Then w satisfies Ex and the instance of (g) \(\le \) (f) for this w becomes

and this can be seen to fail for the probability function v which treats the predicates as stochastically independent and identically distributed as, say, \(c_2^{L_1}\) on \(L_1=\{P\}\). This same probability function furnishes a counterexample to (g) \(\le \) (e) and (g) \(\le \) (d).

To show that we do not have (c) \(\le \) (e) let \(D_1,D_2\) be respectively the \(4 \times 4\) matrices

Then,

Hence for \(w= (w^{D_1} + w^{D_2})/2,\)

giving a counterexample to (c) \(\le \) (e).

Finally to give an example where (d) \(\le \) (f) fails let \(E_1,E_2\), respectively, be the \(2 \times 2\) matrices

Then

Hence for \(w= (w^{E_1} + w^{E_2})/2,\)

giving a counterexample to (d) \(\le \) (f).

Combining the inequalities and non-inequalities produced above now gives exactly the relationships indicated in Fig. 1.

As already mentioned (a)–(g) do not form an exhaustive list of all such conditionals with at most two individually structurally similar sentences. Firstly each of these has a ‘mirror image’ formed by transposing the two coordinates, for example

Of these (a), (b), (d), (g) are the same as their mirror images while for the others it is easy to see by the device used with (7) or (8) that including them in Fig. 1 does not give any new vertical lines beyond those already present in the mirror image of Fig. 1.

More significantly we have left for future consideration the seemingly thorny cases of conditionals where the two supporting structurally similar sentences contain common constants which are not also common to the conditioned sentence, for example \( w(R(a_1,a_2) \,|\,R(a_1,a_3) \wedge R(a_4, a_3)).\)

5 Other Analogy Principles in PIL

In this short section we briefly mention some other analogy principles which have been suggested within the context of PIL as being in some sense rational. Ideally such principles will both capture a facet of analogical support as we intuit it and be consequences of other established and acknowledged rational principles (such as is the case with the Counterpart Principle) from which they too will inherit this status. Failing that they will present and formalize altogether new aspects of what might be understood as rational though their acceptability will then to a large degree hinge on their being consistent with other established and acknowledged rational principles.

Already in the joint paper (Carnap and Stegmüller 1959) with Stegmüller Carnap was interested in the idea of analogical support within the context of his (unary) Inductive Logic and this was to continue right up to (Carnap 1980, Chapter 16). The probability functions \(c_\lambda \) of Carnap’s Continuum of Inductive Methods were too restrictive to encompass modeling the sort of analogical effects that Carnap had in mind which has led a number of investigators to propose instead various mixtures, products and generalizations of the \(c_\lambda \), see for example Carnap (1954, 1980), Costantini (1983), Festa (1996), Hesse (1964), Huttegger (2014), Kuipers (1984), Maher (2000, 2001), Maio (1995), Niiniluoto (1981), Romeijn (2006), Skyrms (1993), Spohn (1981). Such approaches however have so far still failed to fully achieve the required effects see for example Kuipers (2000, p. 81), Hill et al. (2011), Hill and Paris (2013a), Maher (2001), Pietarinen (1972), Spohn (1981), Welch (1999). Furthermore being largely chosen to satisfy a property rather than being derived as the functions characterizing some concrete, arguably rational, principles they seem to us to suffer from a certain arbitrariness in the choices of the constituent functions, factors.

Two notable exceptions founded on general principles are (Maio 1995; Maher 2000). Unfortunately neither seems entirely satisfactory: di Maio’s requires a questionable (to our mind) linearity condition (Maio 1995, II3, p. 378), and Maher’s is effectively restricted to a language with at most two predicates (see Maher 2001).

In Carnap (1973) and Carnap and Stegmüller (1959) the authors also suggested a somewhat less arbitrary notion of analogical support by similarity Footnote 12 based on sharing properties and a derived notion of closeness. To explain this let \(R_1,R_2,\ldots , R_q\) be, as usual, the (now all unary) predicates of L and let \(\alpha _1(x), \alpha _2(x), \ldots , \alpha _{2^q}(x)\) be the atoms of L, that is the formulae of the form

where \(\epsilon _1, \epsilon _2, \ldots , \epsilon _q \in \{0,1\}\). Then for atoms

the analogical support afforded to \(\alpha _m(a_2)\) by \(\alpha _r(a_1)\), in the absence of any further information on \(a_1,a_2\), was to be a decreasing function (in terms of the subset ordering) of the set \(\Delta (\alpha _r,\alpha _m)=\{i \,|\,\epsilon _i = \delta _i\}\).

More specifically, Carnap’s writings suggest an analogy principle (see Carnap 1973, p. 320; Hill 2013, p. 101) of the form:

For atoms \(\alpha _r, \alpha _m, \alpha _k\), if \( \Delta (\alpha _r,\alpha _m) \subseteq \Delta (\alpha _r, \alpha _k )\) then

Using Johnson’s Sufficientness Postulate it is easy to see that this principle holds for the probability functions \(c_\lambda \) in Carnap’s Continuum of Inductive Methods. Unfortunately this is somewhat less satisfactory than it might initially appear because for the \(c^L_\lambda \) (9) holds with equality whenever \(m, k \ne r\),Footnote 13 hardly capturing the idea that \(\alpha _m(a_{n+1})\)’s support for \(\alpha _r(a_{n+2})\) depends on the set of shared features. Several families of probability functions are known which do satisfy (9) with strict subset and inequality but currently no complete characterization is available see for example D’Asaro (2014, Section 3), Hesse (1964), Maher (2000), Welch (1999).Footnote 14

A second point on which (9) might be queried is the requirement that the standing evidence should be of the form \(\bigwedge _{i=1}^n \alpha _{h_i}(a_i)\), in other words a state description, rather than, say, simply a sentence \(\phi (a_1,a_2, \ldots , a_n) \in QFSL\). After all the underlying intuition in both cases would seem to be no different. In fact allowing this generalization has a significant effect. With the exception of \(c^L_0, c^L_\infty \) the principle no longer holds for Carnap’s Continuum even when \(q=2\). Indeed there are only a very few further probability functions satisfying the principle (and Ex + Px + SN Footnote 15), see Hill and Paris (2013a) for a complete characterization, none of them seemingly particularly attractive as a rational choice.

More recently several other analogy principles based on interpretations of the earlier mentioned Candidate Analogical Inference Rule (\({\mathcal{{R}}}\)) of Bartha (2013), have been investigated in the context of PIL, see Howarth et al. (2016), in particular a version of this rule which prescribes that the more properties on which a target and source agree (and the fewer on which they disagree) the more support there is that the target will satisfy an additional property known to be satisfied by the source. While this allows considerable freedom of formalization within PIL the basic version seems to be the principle:

For \(\theta ( a_{n+1},\vec {a}), \phi (a_{n+1},\vec {a}) \in QFSL\), where \(\vec {a} = \langle a_1,a_2, \ldots , a_n \rangle\),

That is the similarity of \(a_{n+1}, a_{n+2}\) engendered by them both satisfying \(\theta \) argues that \(a_{n+2}\) will satisfy \(\phi \) given that \(a_{n+1}\) does.

Unfortunately \(c_0^L\) is the only probability function satisfying this principle (together with Ex + Px + SN). In Howarth et al. (2016) various refinements of this principle were considered some of which do follow from commonly accepted rational principles within PIL. Nevertheless the failure of the above basic version must put a question mark against this formalization of the underlying intuition grounding rule (\({\mathcal{{R}}}\)), at least within the framework of PIL.

6 Conclusion

In this paper we have considered all possible variations of the Counterpart Principle of Analogical Support by Structural Similarity which hold under the assumption of Language Invariance, Li, when we allow up to two analogies and all relation/constant symbols common to the conditioning conjuncts are also present in the conditioned sentence.Footnote 16 In summary these turn out to be generated by the template of particular instances

for w a probability function on the language \(\{R\}\) satisfying Ex. By a template we mean that \(R(a_i,a_j)\) may be replaced here by a sentence of a language L in which the \(a_i\) substitute for disjoint blocks of constant and/or relation symbols of L and w is replaced by a probability function on L satisfying Li.

From (b) \(\ge \) (a), (d) \(\ge \) (b) and (f) \(\ge \) (c) we see that, as one might expect, more analogies of the same structural similarity produce more support (or at least not strictly less support) whilst from (c) \(\ge \) (b), (e) \(\ge \) (d) and (g) \(\ge \) (d) we have, again not unexpectedly, that greater structural similarity gives greater analogical support. More surprising are some of the inequalities which do not necessarily hold, for example that we need not have (e)\( \ge \) (c). Here adding to the conditioning \(R(a_1,a_4)\) in (c) the analogous but more distantly similar \(R(a_5,a_6)\) can actually reduce the analogical support, though support it remains since from (e) \(\ge \) (b), (b) \(\ge \) (a) we do still have (e) \(\ge \) (a). In terms of our introductory example this says that although adding the information about the aunt and The Sound of Music still leaves some analogical support, it may in fact diminish the support provided by the Toy Story evidence alone.

The results in this paper are clearly just the tip of the iceberg. For example, we have not considered the case where the underlying template involves not simply binary R but ternary, 4-ary, etc. Even in the case of binary R we have not considered cases where constants common to the conditioning sentences are not also present in the conditioned sentence. Nor have we considered cases where we also have negated analogies (as allowed by Bartha in his ‘Candidate Analogical Inference Rule’ described in Howarth et al. 2016). Given our results so far, and the technicalities needed to show them, the challenge of answering the apparently limitless number of genuinely new questions that arise, and in turn of building those answers into our understanding, seems formidable. Certainly at this juncture the prospect of an elegant, meaningful general theory of multiple analogical support by structural similarity can be no more than a matter of pure speculation.

Finally it is worth reminding ourselves that the results in this paper have been derived on the assumption that one’s chosen rational probability function satisfies just Li. In Polyadic Inductive Logic, however, there are a number of stronger ‘rationality principles’ which have been proposed, in particular Li with Spectrum Exchangeability (see Footnote 17), and it may be that making these further assumptions will produce a different picture. Given our previous difficulties in attempting to generalize PIR to this polyadic context (see Paris and Vencovská 2015, Chapter 36) that too would look to be a challenging endeavour (see Footnote 18).

Notes

We identify L with the set \(\{ R_1,R_2, \ldots , R_q\}\).

In the study of PIL there are good reasons for this differing treatment, in particular almost all principles currently being considered are adequately captured in languages with only finitely many relation symbols, unlike the case for constants.

This underlying conception of analogy is similar to that employed in Structure-Mapping Theory in AI, see Gentner (1983).

Throughout we avoid any problems of conditioning on sentences with zero probability by identifying, for example, \(w(\phi \,|\,\psi ) \ge w(\eta \,|\,\zeta )\) with \(w(\phi \wedge \psi )\cdot w(\zeta ) \ge w(\eta \wedge \zeta ) \cdot w(\psi ).\)
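
A minimal sketch of this convention in Python, assuming the four probabilities have already been computed as exact fractions. The function name `cond_geq` and its argument names are hypothetical, introduced only for illustration. The comparison is made by cross-multiplication, so no division by a possibly zero probability ever occurs.

```python
from fractions import Fraction


def cond_geq(w_phi_and_psi, w_psi, w_eta_and_zeta, w_zeta):
    """Decide w(phi | psi) >= w(eta | zeta) without dividing.

    Following the convention in the note, the conditional inequality is
    read as w(phi & psi) * w(zeta) >= w(eta & zeta) * w(psi).
    All four arguments are assumed to be probabilities supplied by the caller.
    """
    return w_phi_and_psi * w_zeta >= w_eta_and_zeta * w_psi


# Ordinary case: 1/2 >= 1/4 when read as conditional probabilities.
ordinary = cond_geq(Fraction(1, 4), Fraction(1, 2), Fraction(1, 8), Fraction(1, 2))

# Degenerate case: w(psi) = 0, so w(phi & psi) = 0 as well; both sides are 0.
degenerate = cond_geq(Fraction(0), Fraction(0), Fraction(1, 8), Fraction(1, 2))

print(ordinary, degenerate)
```

Under this reading an inequality whose conditioning sentence has probability zero holds trivially, since both sides of the cross-multiplied form vanish.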

Or at least not diminished—the conditions for merely equality in CP are rather messy but in our view sufficiently ‘unnatural’ as to offer no serious grounds on which to criticize CP, see Hill and Paris (2013b) for more details.

Possibly this explains the force of analogy in mathematics and science where the outside world according to our current view is so structured and lawlike.

With nothing more than complicating the notation we could also add to the conditioning sentences a sentence \(\psi \) provided that none of the relation and constant symbols which replace or are replaced in the passage from \(\theta \) to \(\theta '\) to \(\theta ''\) appear in \(\psi \). This remark also applies to Theorem 3.

This is not an exhaustive list of such conditionals, a point we shall return to later.

It is of course essential in these cases that one treats them purely as given without introducing any further knowledge or interpretation. Difficult as this is for anything but a nascent artificial agent, still it is the case that in everyday reasoning we do ring fence the available information between relevant and irrelevant. All we ask of our reader here is that they use ring fencing that includes only what is explicitly given.

In Carnap (1980) Carnap also has a notion of analogy by proximity but that essentially denies Ex so is not under consideration here.

In the accounts related in this section the problem of conditional probabilities being undefined when the denominator probability is zero was avoided by adopting the convention of treating \(w(\theta \,|\,\phi ) \ge w(\theta \,|\,\psi )\) (etc.) as an abbreviation for \(w(\theta \wedge \phi )w(\psi ) \ge w(\theta \wedge \psi )w(\phi )\).

In fact with Atom Exchangeability and Regularity this characterizes the \(c^L_\lambda \) for \(0 < \lambda \le \infty \), see (D’Asaro and Paris).

SN is the Strong Negation Principle which asserts that if \(\theta '\) is the result of replacing a relation symbol R everywhere in \(\theta \) by \(\lnot R\) then \(w(\theta ')=w(\theta )\).

In this paper the relationship of, say, \(w(R(a_1,a_2) \,|\,R(a_1, a_3) \wedge R(a_4,a_3))\) to (a)–(g) is not considered.

In a nutshell, Spectrum Exchangeability asserts that the probability of a state description should depend only on the numbers of constants which look identical according to that state description and not on what particular relations they satisfy (see for example Paris and Vencovská 2015). It is a natural extension to polyadic languages of Atom Exchangeability, so essentially Carnap’s Attribute Symmetry (Carnap 1980, p. 77) for unary languages.
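
To make the phrase ‘numbers of constants which look identical’ concrete, here is a rough sketch for a single binary relation R. It assumes that a state description on constants a_1, ..., a_n is given as an n-by-n Boolean matrix, and that two constants count as looking identical exactly when their rows and their columns in that matrix coincide. The function name `spectrum` and this encoding are illustrative assumptions, not notation from the paper.

```python
from collections import Counter


def spectrum(rel):
    """Return the sizes of the classes of indistinguishable constants.

    rel[i][j] is the truth value of R(a_i, a_j) in the state description.
    Constants i and j are treated as indistinguishable when row i equals
    row j and column i equals column j (an assumed encoding of
    'looking identical' for a single binary relation).
    """
    n = len(rel)

    def profile(i):
        row = tuple(rel[i])
        col = tuple(rel[k][i] for k in range(n))
        return (row, col)

    class_sizes = Counter(profile(i) for i in range(n)).values()
    return sorted(class_sizes, reverse=True)


T, F = True, False
theta_1 = [[T, T, F],
           [T, T, F],
           [F, F, F]]
theta_2 = [[F, F, T],
           [F, F, T],
           [T, T, F]]

# Both state descriptions have a_1 and a_2 indistinguishable and a_3 on its own.
print(spectrum(theta_1), spectrum(theta_2))
```

The two state descriptions satisfy quite different relations but share the same spectrum, so a probability function satisfying Spectrum Exchangeability would have to give them the same probability.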

Another approach to PIR which may also shed some light on analogy can be found in Ronel and Vencovská (2016).

References

Bartha, P. (2009). By parallel reasoning. Oxford: Oxford University Press.

Bartha, P. (2013). Analogy and analogical reasoning. In E. N. Zalta (Ed.), The Stanford encyclopedia of philosophy (Fall 2013 Edition). http://plato.stanford.edu/archives/fall2013/entries/reasoning-analogy/.

Carnap, R. (1952). The continuum of inductive methods. Chicago: University of Chicago Press.

Carnap, R. (1954). \(m(Z)\) for \(n\) families as a combination of \(m_\lambda \)-functions, document 093–22-01 (see also 093–22-03) in the Rudolf Carnap Collection. University of Pittsburgh Library, Pittsburgh.

Carnap, R. (1973). Notes on probability and induction. In J. Hintikka (Ed.), Rudolf Carnap, logical empiricist (pp. 293–324). Dordrecht: Reidel.

Carnap, R. (1980). A basic system of inductive logic part II. In R. C. Jeffrey (Ed.), Studies in inductive logic and probability (Vol. II, pp. 7–155). Berkeley: University of California Press.

Carnap, R., & Stegmüller, W. (1959). Induktive Logik und Wahrscheinlichkeit. Wien: Springer.

Costantini, D. (1983). Analogy by similarity. Erkenntnis, 20, 107–114.

D’Asaro, F. A. (2014). Analogical reasoning in unary inductive logic. Master’s Thesis, University of Manchester. Available at http://www.maths.manchester.ac.uk/~jeff/theses/fdathesis.

D’Asaro, F. A., & Paris, J. B. A Note on Carnap’s continuum and the weak state description analogy principle. Available at http://www.maths.manchester.ac.uk/~jeff/papers/fad160208sdah.

di Maio, M. C. (1995). Predictive probability and analogy by similarity. Erkenntnis, 43(3), 369–394.

Festa, R. (1996). Analogy and exchangeability in predictive inferences. Erkenntnis, 45, 229–252.

Gaifman, H. (1964). Concerning measures on first order calculi. Israel Journal of Mathematics, 2, 1–18.

Gaifman, H. (1971). Applications of de Finetti’s theorem to inductive logic. In R. Carnap & R. C. Jeffrey (Eds.), Studies in inductive logic and probability (Vol. I, pp. 235–251). Berkeley: University of California Press.

Gentner, D. (1983). Structure-mapping: A theoretical framework for analogy. Cognitive Science, 7, 155–170.

Hesse, M. (1964). Analogy and confirmation theory. Philosophy of Science, 31, 319–327.

Hesse, M. (1966). Models and analogies in science. Notre Dame: University of Notre Dame Press.

Hill, A. (2013). Reasoning by analogy in inductive logic. Doctoral Thesis, University of Manchester. Available at http://www.maths.manchester.ac.uk/~jeff/theses/ahthesis.

Hill, A., & Paris, J. B. (2011). Reasoning by analogy in inductive logic. In M. Peliš & V. Punčochář (Eds.), The logica yearbook 2011. London: College Publications.

Hill, A., & Paris, J. B. (2013a). An analogy principle in inductive logic. Annals of Pure and Applied Logic, 164, 1293–1321.

Hill, A., & Paris, J. B. (2013b). The counterpart principle of analogical support by structural similarity. Erkenntnis, 79(2), 1–16.

Howarth, E., Paris, J. B., & Vencovská, A. (2016). An examination of the SEP candidate analogical inference rule within pure inductive logic. Journal of Applied Logic, 14, 22–45.

Humburg, J. (1971). The principle of instantial relevance. In R. Carnap & R. C. Jeffrey (Eds.), Studies in inductive logic and probability (Vol. I, pp. 225–233). Berkeley: University of California Press.

Huttegger, S. M. (2014). Analogical predictive probabilities. Available at http://faculty.sites.uci.edu/shuttegg/files/2011/03/AnalogicalPredictionV4.

Kuipers, T. A. F. (1984). Two types of inductive analogy by similarity. Erkenntnis, 21, 63–87.

Kuipers, T. A. F. (2000). From instrumentalism to constructive realism (Vol. 287). Berlin: Synthese Library.

Maher, P. (2000). Probabilities for two properties. Erkenntnis, 52, 63–91.

Maher, P. (2001). Probabilities for multiple properties: The models of Hesse, Carnap and Kemeny. Erkenntnis, 55, 183–216.

Niiniluoto, I. (1981). Analogy and inductive logic. Erkenntnis, 16, 1–34.

Niiniluoto, I. (1988). Analogy and similarity in scientific reasoning. In D. H. Helman (Ed.), Analogical reasoning (pp. 271–298). Berlin: Kluwer Academic Publishers.

Paris, J. B. (2015). Pure inductive logic: Workshop notes for progic. Available at http://www.maths.manchester.ac.uk/~jeff/lecture-notes/Progic15.

Paris, J. B. An equivalent of language invariance. Available at MIMS EPrints, http://eprints.ma.man.ac.uk/2437/.

Paris, J. B., & Vencovská, A. (2015). Pure inductive logic. Association of Symbolic Logic Perspectives in Mathematical Logic Series. Cambridge: Cambridge University Press.

Paris, J. B., & Vencovská, A. An inequality concerning the expected values of row-sum and column-sum products in Boolean matrices. Available at MIMS EPrints http://eprints.ma.man.ac.uk/2469/.

Pietarinen, J. (1972). Lawlikeness, analogy and inductive logic. Amsterdam: North-Holland Pub. Co.

Romeijn, J. W. (2006). Analogical predictions for explicit similarity. Erkenntnis, 64(2), 253–280.

Ronel, T., & Vencovská, A. (2016). The principle of signature exchangeability. Journal of Applied Logic, 15, 16–45.

Skyrms, B. (1993). Analogy by similarity in hyper-carnapian inductive logic. In J. Earman, A. I. Janis, R. J. Massey, & N. Rescher (Eds.), Philosophical problems of the internal and external worlds (pp. 273–282). Pittsburgh: University of Pittsburgh Press/Universitätsverlag Konstanz.

Spohn, W. (1981). Analogy and inductive logic: A note on Niiniluoto. Erkenntnis, 16, 35–52.

Welch, J. (1999). Singular analogy and quantitative inductive logics. Theoria, 14(2), 207–247.

Additional information

J. B. Paris: Supported by a UK Engineering and Physical Sciences Research Council Research Grant.

A. Vencovská: Supported by a UK Engineering and Physical Sciences Research Council Research Grant.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Paris, J.B., Vencovská, A. Combining Analogical Support in Pure Inductive Logic. Erkenn 82, 401–419 (2017). https://doi.org/10.1007/s10670-016-9825-7
