Abstract

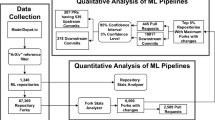

Recent work has shown that Machine Learning (ML) programs are error-prone and has called for contracts for ML code. Contracts, as in the design by contract methodology, help document APIs and aid API users in writing correct code. The question is: what kinds of contracts would provide the most help to API users? We are especially interested in what kinds of contracts help API users catch errors at earlier stages in the ML pipeline. We describe an empirical study of Stack Overflow posts about the four most often-discussed ML libraries: TensorFlow, Scikit-learn, Keras, and PyTorch. For these libraries, our study extracted 413 informal (English) API specifications. We used these specifications to answer the following questions. What are the root causes and effects behind ML contract violations? Are there common patterns of ML contract violations? When does understanding ML contracts require an advanced level of ML software expertise? Could checking contracts at the API level help detect violations in early ML pipeline stages? Our key findings are that the most commonly needed contracts for ML APIs check either constraints on single arguments of an API or the order of API calls. The software engineering community could employ existing contract mining approaches to mine these contracts and promote an increased understanding of ML APIs. We also noted a need to combine behavioral and temporal contract mining approaches. We report on categories of required ML contracts, which may help designers of contract languages.
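To make the two most commonly needed contract kinds concrete, the following Python sketch checks (a) a constraint on a single argument and (b) the order of API calls, both at the API boundary. Everything in it is hypothetical and chosen only for illustration: the `ToyModel` class, its `compile`/`fit`/`predict` methods (which loosely mirror a Keras-style call order), the `requires` decorator, and the `learning_rate > 0` constraint are assumptions, not contracts taken from the study's dataset or from any library's actual API.

```python
from functools import wraps


class ContractViolation(Exception):
    """Raised when a (hypothetical) API contract is violated."""


def requires(predicate, message):
    """Precondition decorator: call `predicate` with the same arguments as the
    decorated method and raise ContractViolation if it returns False."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            if not predicate(*args, **kwargs):
                raise ContractViolation(f"{func.__name__}: {message}")
            return func(*args, **kwargs)
        return wrapper
    return decorator


class ToyModel:
    """Hypothetical constant-baseline model with a compile -> fit -> predict
    protocol; not a real TensorFlow/Keras/PyTorch/Scikit-learn class."""

    def __init__(self):
        self._compiled = False
        self._mean = None  # set by fit(); None means "not fitted yet"

    # Single-argument contract: learning_rate must be strictly positive.
    @requires(lambda self, learning_rate: learning_rate > 0,
              "learning_rate must be > 0")
    def compile(self, learning_rate):
        self.learning_rate = learning_rate
        self._compiled = True

    # Temporal (call-order) contract: compile() must precede fit().
    @requires(lambda self, data: self._compiled,
              "compile() must be called before fit()")
    def fit(self, data):
        self._mean = sum(data) / len(data)
        return self

    # Temporal (call-order) contract: fit() must precede predict().
    @requires(lambda self, x: self._mean is not None,
              "fit() must be called before predict()")
    def predict(self, x):
        # A constant baseline: ignores x and returns the training mean.
        return self._mean


if __name__ == "__main__":
    model = ToyModel()
    try:
        model.fit([1.0, 2.0, 3.0])  # violates the call-order contract
    except ContractViolation as err:
        print(err)  # fit: compile() must be called before fit()
    model.compile(learning_rate=0.01)
    print(model.fit([1.0, 2.0, 3.0]).predict(5.0))  # 2.0
```

Because each check runs when the method is called, a misordered call or an invalid argument fails immediately with a message naming the violated contract, rather than surfacing later in the pipeline as a confusing downstream error.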

Data Availability Statement

The dataset (labeled SO posts, queries, source code, etc.) generated during the study is available in the figshare repository, https://figshare.com/s/c288c02598a417a434df

Ethics declarations

Conflicts of interest

The authors declare conflicts of interest with people affiliated with Iowa State University, the University of Central Florida, and Bradley University.

Additional information

Communicated by: Carlo A. Furia.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Khairunnesa, S.S., Ahmed, S., Imtiaz, S.M. et al. What kinds of contracts do ML APIs need? Empir Software Eng 28, 142 (2023). https://doi.org/10.1007/s10664-023-10320-z

DOI: https://doi.org/10.1007/s10664-023-10320-z