Abstract

Code smells are seen as a major source of technical debt and, as such, should be detected and removed. However, researchers argue that the subjectivity of the code smells detection process is a major hindrance to mitigating the problem of smell-infected code. This paper presents the results of a validation experiment for the previously proposed Crowdsmelling approach. Crowdsmelling is based on supervised machine learning techniques, where the wisdom of the crowd (of software developers) is used to collectively calibrate code smells detection algorithms, thereby lessening the subjectivity issue. Over three consecutive years of a Software Engineering course, a “crowd” of around a hundred teams, with an average of three members each, classified the presence of three code smells (Long Method, God Class, and Feature Envy) in Java source code. These classifications were the basis of the oracles used for training six machine learning algorithms. Over one hundred models were generated and evaluated to determine which machine learning algorithms performed best in detecting each of these code smells. Good performance was obtained for God Class detection (ROC = 0.896 for Naive Bayes) and Long Method detection (ROC = 0.870 for AdaBoostM1), but performance was much lower for Feature Envy (ROC = 0.570 for Random Forest). The results suggest that Crowdsmelling is a feasible approach for the detection of code smells. Further validation experiments based on dynamic learning are required to achieve comprehensive coverage of code smells and to increase external validity.

Notes

Receiver operating characteristic (ROC) is a curve that plots the true positive rate against the false positive rate at every possible classification threshold between 0 and 1.
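The definition above can be illustrated with a minimal Python sketch. It assumes, as is common practice, that a single "ROC" figure such as those in the abstract refers to the area under this curve. The function names `roc_points` and `auc`, and the labels and scores, are hypothetical and are not taken from the study.

```python
from typing import List, Tuple


def roc_points(labels: List[int], scores: List[float]) -> List[Tuple[float, float]]:
    """Return (false positive rate, true positive rate) pairs, one per threshold.

    labels: 1 if the code entity is smelly according to the oracle, else 0.
    scores: classifier confidence in [0, 1] that the entity is smelly.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one positive and one negative example")

    # Start at (0, 0): a threshold above every score flags nothing as smelly.
    points = [(0.0, 0.0)]
    # Lower the threshold step by step, from the highest score to the lowest.
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points: List[Tuple[float, float]]) -> float:
    """Area under the curve, computed with the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


# Hypothetical oracle labels and classifier scores for six methods.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]

curve = roc_points(labels, scores)
print(round(auc(curve), 3))  # 0.889
```

An area of 1.0 would mean that every smelly entity is ranked above every clean one, while 0.5 corresponds to random ranking.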

Acknowledgements

This work was partially funded by the Portuguese Foundation for Science and Technology, under ISTAR’s projects UIDB/04466/2020 and UIDP/04466/2020, and by Anima Institute (Edital Nº 43/2021).

Additional information

Communicated by: Shaowei Wang, Tse-Hsun (Peter) Chen, Sebastian Baltes, Ivano Malavolta, Christoph Treude and Alexander Serebrenik

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article belongs to the Topical Collection: Collective Knowledge in Software Engineering

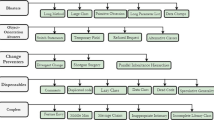

Appendix: Code metrics

| Metric | Acronym |
|---|---|
| Lines of Code | LOC |
| Lines of Code Without Accessor or Mutator Methods | LOCNAMM |
| Number of Packages | NOPK |
| Number of Classes | NOCS |
| Number of Methods | NOM |
| Number of Not Accessor or Mutator Methods | NOMNAMM |
| Number of Attributes | NOA |
| Cyclomatic Complexity | CYCLO |
| Weighted Methods Count | WMC |
| Weighted Methods Count of Not Accessor or Mutator Methods | WMCNAMM |
| Average Methods Weight | AMW |
| Average Methods Weight of Not Accessor or Mutator Methods | AMWNAMM |
| Maximum Nesting Level | MAXNESTING |
| Weight of Class | WOC |
| Called Local Not Accessor or Mutator Methods | CLNAMM |
| Number of Parameters | NOP |
| Number of Accessed Variables | NOAV |
| Access to Local Data | ATLD |
| Number of Local Variables | NOLV |
| Tight Class Cohesion | TCC |
| Lack of Cohesion in Methods | LCOM |
| Fanout | FANOUT |
| Access to Foreign Data | ATFD |
| Foreign Data Providers | FDP |
| Response for a Class | RFC |
| Coupling Between Object Classes | CBO |
| Called Foreign Not Accessor or Mutator Methods | CFNAMM |
| Coupling Intensity | CINT |
| Coupling Dispersion | CDISP |
| Maximum Message Chain Length | MAMCL |
| Number of Message Chain Statements | NMCS |
| Mean Message Chain Length | MEMCL |
| Changing Classes | CC |
| Changing Methods | CM |
| Number of Accessor Methods | NOAM |
| Number of Public Attributes | NOPA |
| Locality of Attribute Accesses | LAA |
| Depth of Inheritance Tree | DIT |
| Number of Interfaces | NOI |
| Number of Children | NOC |
| Number of Methods Overridden | NMO |
| Number of Inherited Methods | NIM |
| Number of Implemented Interfaces | NOII |
| Number of Default Attributes | NODA |
| Number of Private Attributes | NOPVA |
| Number of Protected Attributes | NOPRA |
| Number of Final Attributes | NOFA |
| Number of Final and Static Attributes | NOFSA |
| Number of Final and Non-Static Attributes | NOFNSA |
| Number of Non-Final and Non-Static Attributes | NONFNSA |
| Number of Static Attributes | NOSA |
| Number of Non-Final and Static Attributes | NONFSA |
| Number of Abstract Methods | NOABM |
| Number of Constructor Methods | NOCM |
| Number of Non-Constructor Methods | NONCM |
| Number of Final Methods | NOFM |
| Number of Final and Non-Static Methods | NOFNSM |
| Number of Final and Static Methods | NOFSM |
| Number of Non-Final and Non-Abstract Methods | NONFNABM |
| Number of Non-Final and Non-Static Methods | NONFNSM |
| Number of Non-Final and Static Methods | NONFSM |
| Number of Default Methods | NODM |
| Number of Private Methods | NOPM |
| Number of Protected Methods | NOPRM |
| Number of Public Methods | NOPLM |
| Number of Non-Accessor Methods | NONAM |
| Number of Static Methods | NOSM |
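
To make a few of these acronyms concrete, the following Python sketch derives some class-level metrics from per-method data. It rests on assumptions not stated in this appendix: WMC is taken as the sum of the cyclomatic complexities (CYCLO) of a class's methods, AMW as WMC divided by NOM, and the NAMM variants as the same computations restricted to methods that are neither accessors nor mutators. The `MethodInfo` record and the example class are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class MethodInfo:
    """Hypothetical per-method record; not a data structure from the study."""
    name: str
    cyclo: int  # cyclomatic complexity of the method (CYCLO)
    is_accessor_or_mutator: bool = False


def class_metrics(methods: List[MethodInfo]) -> Dict[str, float]:
    """Compute a handful of the listed metrics under the assumed definitions."""
    # Methods that are neither getters nor setters (the "NAMM" subset).
    core = [m for m in methods if not m.is_accessor_or_mutator]
    nom = len(methods)
    wmc = sum(m.cyclo for m in methods)
    wmc_namm = sum(m.cyclo for m in core)
    return {
        "NOM": nom,
        "NOMNAMM": len(core),
        "WMC": wmc,
        "WMCNAMM": wmc_namm,
        # Averages fall back to 0.0 for classes without (qualifying) methods.
        "AMW": wmc / nom if nom else 0.0,
        "AMWNAMM": wmc_namm / len(core) if core else 0.0,
    }


# Hypothetical class with two accessors and two more complex methods.
methods = [
    MethodInfo("getTotal", cyclo=1, is_accessor_or_mutator=True),
    MethodInfo("setTotal", cyclo=1, is_accessor_or_mutator=True),
    MethodInfo("applyDiscounts", cyclo=9),
    MethodInfo("validate", cyclo=5),
]
metrics = class_metrics(methods)
print(metrics["WMC"], metrics["AMW"], metrics["AMWNAMM"])  # 16 4.0 7.0
```

Excluding accessors and mutators raises the average weight in this example from 4.0 to 7.0, which shows how trivial getters and setters can dilute complexity-based metrics.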

Cite this article

Reis, J.P.d., Abreu, F.B.e. & Carneiro, G.d.F. Crowdsmelling: A preliminary study on using collective knowledge in code smells detection. Empir Software Eng 27, 69 (2022). https://doi.org/10.1007/s10664-021-10110-5

DOI: https://doi.org/10.1007/s10664-021-10110-5