Abstract

Automated static analysis tools (ASATs) have become a major part of the software development workflow. Acting on the generated warnings, i.e., changing the code indicated in the warning, should be part of, at latest, the code review phase. Despite this being a best practice in software development, there is still a lack of empirical research regarding the usage of ASATs in the wild. In this work, we want to study ASAT warning trends in software via the example of PMD as an ASAT and its usage in open source projects. We analyzed the commit history of 54 projects (with 112,266 commits in total), taking into account 193 PMD rules and 61 PMD releases. We investigate trends of ASAT warnings over up to 17 years for the selected study subjects regarding changes of warning types, short and long term impact of ASAT use, and changes in warning severities. We found that large global changes in ASAT warnings are mostly due to coding style changes regarding braces and naming conventions. We also found that, surprisingly, the influence of the presence of PMD in the build process of the project on warning removal trends for the number of warnings per lines of code is small and not statistically significant. Regardless, if we consider defect density as a proxy for external quality, we see a positive effect if PMD is present in the build configuration of our study subjects.

Similar content being viewed by others

1 Introduction

Automated static analysis tools (ASATs) support software developers with warnings and information regarding common coding mistakes, design anti-patterns like code smells (Fowler 1999), or code style violations. ASATs work directly on the source code or bytecode without executing the program. They are using abstract models of the source code, e.g., the Abstract Syntax Tree (AST) or the control flow graph to match the provided source code against a set of rules defined in the ASAT. If a part of the source code violates a predefined rule, a warning is generated. These rules can be customized by the project using the ASAT to fit their needs by removing rules deemed unnecessary. ASAT reports usually contain a type of warning, a short description, and the file and line number of the source code that triggered the warning. Developers can then inspect the line specified in the warning and decide if a change is necessary.

The defects that can be found by static analysis include varying severities. Java String comparisons with “==” instead of using the equals() method, would compare the object reference instead of the object contents. The severity rating for this type of warning is critical as it can lead to undesired behavior in the program. Naming convention warnings, e.g., not using camel case for class names on the other hand have a minor severity.

ASATs are able to uncover problems with significant real world impact. The Apple Goto Fail defect Footnote 1 for example could have been detected by static analysis utilizing the control flow graph. This importance regarding software quality is further demonstrated by the inclusion of ASATs in software quality models, e.g., Quamoco (Wagner et al. 2012) and ColumbusQM (Bakota et al. 2011). Zheng et al. (2006) found that the number of ASAT warnings can be used to effectively identify problematic modules. Moreover, developers also believe that static analysis improves quality as reported by a survey of Microsoft employees by Devanbu et al. (2016).

ASATs can be integrated as part of general static analysis via IDE plugins where the developer can see the warnings almost instantly. Usually IDE plugins are able to access a central configuration for rules that generate warnings. A central rule configuration is essential for project specific rules and exclusions of rules and directories. Integrating ASATs in the software development process as part of the buildfile of the project has the advantage of providing a central point of configuration which can also be accessed by IDE plugins. It also enables the developer to view generated reports prior to, or after the compilation as part of the build process. Moreover, the inclusion into the buildfile also allows Continuous Integration (CI) Systems to generate reports automatically. The reports can then be used to plot trends for general quality management or provide assistance in code reviews. Published industry reports share some findings regarding static analysis infrastructure and warning removal. Google (Sadowski et al. 2018) and Facebook (Distefano et al. 2019) both found that just presenting developers with a large list of findings rarely motivates them to fix all reported warnings. However, reporting the warnings as early as possible, or at latest at code review time, improves the adoption and subsequent removal of static analysis warnings. One of the lessons that Facebook and Google learned, is that static analysis warnings are not removed in bulk, but as part of a continuous process when code is added after the static analysis is configured or old code is changed.

ASAT warnings are able to indicate software quality because of how the rules that trigger the warnings are designed. The ASAT developers designed these rules not only to remove obvious bugs but also to express what is important for high quality source code via the designed rules. Therefore, a lot of the existing rules are based on best practices, common coding mistakes and coding style recommendations. Best practices and coding styles are also subject to evolution as the user base of a programming language evolves, new tooling is created and also as a programming language itself gets new features. For example, the Java code written today is different than the Java code written 10 years ago. These differences and, more importantly, the evolution of the usage of best practices are an interesting research topic, e.g., language feature evolution (Malloy and Power 2019), and design pattern evolution (Aversano et al. 2007).

The topics covered in research with regards to ASATs are concerned with configuration changes (Beller et al. 2016), CI-pipelines (Zampetti et al. 2017), finding reported defects (Habib and Pradel 2018; Thung et al. 2012; Vetro et al. 2011) or warning resolution times (Marcilio et al. 2019; Digkas et al. 2018). Vassallo et al. (2019) provide a thorough investigation of developer usage of ASATs in different developing contexts. One of the problems identified for ASAT usage is the number of false positives (Johnson et al. 2013; Christakis and Bird 2016; Vassallo et al. 2019) for which warning priorization (Kim and Ernst 2007a; 2007b) was proposed, sometimes as actionable warning identification (Heckman and Williams 2009), see also the systematic literature review by Heckman and Williams (2011). Developers perceive ASATs as quality improving (Marcilio et al. 2019; Devanbu et al. 2016) although the percentage of resolved ASAT warnings vary, e.g., 0.5% (Liu et al. 2018), 8.77% (Marcilio et al. 2019), 6%-9% (Kim and Ernst 2007b) and 36.3% (Digkas et al. 2018).

What is still missing, is a longitudinal, more general overview of the evolution of ASAT warnings over the years of development which includes complete measurement of ASAT warnings over the complete development history. This would improve our understanding of exactly how the warnings evolve, e.g., how ASAT tools are used and the impact on the overall numbers of warnings over the project evolution. Moreover, a direct linking between static analysis warnings and removal of the implicated code in the process of bug fixing may be limiting the insights that can be gained from investigating ASAT usage. Most ASATs are also detecting problems due to spacing, braces, readability, and best practices which are not directly causing a defect. Therefore, the influence of ASATs on defects or software quality as a whole may be more indirect. To the best of our knowledge, only the work by Plosch et al. (2008) directly investigates this so far (there is also the work by Rahman et al. (2014) however it is not directly investigating correlations). Their work shows a positive correlation between ASAT warnings and defects, although their empirical study is limited to one project.

In this article, we investigate the usage of one ASAT in Java open source projects of the Apache Software Foundation in the context of software evolution. We determine the trends of removal of code with ASAT warnings over the projects lifetime. We are interested in the evolution of ASAT warnings on a project and on a global level, i.e., ASAT warnings for all projects combined. We examine general trends independent of developer interaction, i.e., is the state of software generally improving with regards to ASAT warnings. We also investigate which types of ASAT warnings have positive and negative trends to infer which types of coding standards or best practices are important to developers. To this end, we are not only interested in the absolute numbers of ASAT warnings but put them in relation to the project size. Moreover, we investigate the impact of including an ASAT in the build process on ASAT warning trends regarding their resolution. Additionally, we approximate the impact of including an ASAT in the build process on external quality via defect density (Fenton and Bieman 2014) by including defect information.

Our longitudinal, retrospective case study results in the following contributions of this work:

-

An analysis of evolutionary trends of ASAT warnings in 54 open source projects from 2001-2017.

-

An assessment of the effects of ASAT usage in open source projects on warning trends and software quality via defect density.

-

An extension of prior work by providing a broader, long-term, evolutionary perspective with regards to ASAT warnings in open source projects.

The subjects of our case study are Java open source projects under the umbrella of the Apache Software Foundation. We observe ASAT warning trends via PMDFootnote 2 and defects via the Issue Tracking System (ITS) of the respective projects under study. In accordance with evidence based software engineering as introduced by Kitchenham et al. (2004) we provide our data and analysis for researchers and practitioners regarding the evolution of warnings and the impact of PMD on software quality.

The main findings of our study are the following.

-

While the number of ASAT warnings is continuously increasing, the density of warnings per line of code is decreasing.

-

Most ASAT warning changes are related to style changes.

-

The presence of PMD in the build file coincides with reduced defect density.

The remainder of this work is structured as follows. In Section 2, we discuss prior work related to this study. After that, in Section 3, we present a short overview of static analysis in software development and discuss challenges in mining software repository data. In Section 4, we define our research questions, describe the selection criteria for our study subjects and explain our methodology in detail. In Section 5, we present the results. In Section 6, we discuss the results and relate them to current research. In Section 7, we evaluate the threats to validity to our study. Section 8 provides a short conclusion and provides an outlook on future work based on the data and methods described this article.

2 Related Work

In this section, we present the related work on empirical studies of ASATs and put them into relation to our work. Beller et al. (2016) investigated the usage of ASATs in open source projects. They focused on the prevalence of the usage of ASATs for different programming languages, how they are configured, and how the configuration evolves. In our work we are also investigating the evolution of the configuration. In contrast to Beller et al., we also run an ASAT on our study subjects for each commit. This enables us to analyze when ASAT warnings are resolved or introduced and the kind and number of warnings. We are using the projects buildfiles to extract whether PMD or other ASATs are used at the time of the commit and if custom rulesets were deployed. Thus, we expand on the previous work by not only investigating the changes in the configuration but also if the ASAT was used to remove any warnings at all. The drawback of this detailed view on ASAT warnings is that we have to narrow the focus on one programming language and one ASAT.

Kim and Ernst (2007b) utilized commit histories of ASAT warnings. They investigated the possibility of leveraging the removal times to prioritize the warnings. Instead of prioritizing ASAT warnings for removal, we are interested in removals on a global scale, by taking a longer history of the projects into account to get a broader view on the evolution of the projects under study with regard to ASAT warnings.

Liu et al. (2018) performed a large scale study using FindBugsFootnote 3 via SonarQubeFootnote 4 where they investigated ASAT warnings over time. They created an approach to identify fix-patterns that are then applied to unfixed warnings. Similar to our own work, Liu et al. have run an ASAT on the project source code retroactively. In comparison to Liu et al., we include the build system and custom rules in our analysis. Thus, we can be sure that when we investigate removal of warnings that the developers could have seen the warnings. Instead of FindBugs via SonarQube, we focus on PMD which reports a different set of warnings due to PMDs usage of source code instead of byte code.

Digkas et al. (2018) also utilized SonarQube to detect ASAT warnings and their removal. They focused on the technical debt metaphor (Kruchten et al. 2012) and the resolution time that SonarQube assigns to each detected ASAT warning. The authors took snapshots of their projects every two weeks to run the ASAT and store the warnings. In our study, we are not concerned with technical debt. Instead, we want to give a bigger, longitudinal overview over the evolution of the project regarding ASAT warnings. Instead of using snapshots, we ran PMD retroactively on every commit to extract data, although due to run time constraints, this results in a smaller number of projects in our study. Nevertheless, due to utilizing PMD our data covers a longer period of time.

Marcilio et al. (2019) take a closer look at developer usage of ASATs through SonarQube. They investigated the time to fix for different types of issues with a focus on active developer engagement to specifically solve the reported ASAT warnings. In our study, we are not only concerned with resolution times. Instead, we are primarily interested in general trends regarding ASATs to infer information about the evolution of software quality in our candidate projects.

Plosch et al. (2008) utilized data collected by (Zimmermann et al. 2007) and correlated source code quality metrics and defects with warnings found by different static analysis tools. They used three releases of eclipse and presented correlations for different size, complexity and object oriented source code metrics. In contrast to Plösch et al. we are not concerned only with releases, we collected static analysis warnings for every commit of our candidate projects. In addition, we consider multiple projects instead of one. Although we are only able to provide data for one static analysis tool, we are able to provide more detailed defect information and on a larger scale. This should also cover effects of readability and maintainability changes due to ASAT usage.

Querel and Rigby (2018) build additional static analysis on top of CommitGuru (Rosen et al. 2015). Initial results show that the additional information that static analysis warnings provide can improve statistical bug prediction models. In our study, we investigate the evolution of ASAT warnings. Our own investigation into the impact of static analysis warnings on quality complements the initial results by Querel and Rigby (2018).

Static analysis software is often used in a dedicated security context. Penta et al. (2009) analyze security related ASAT warnings for three open source projects along their history. The authors performed an empirical study using three open source projects and three ASATs. Aloraini et al. (2019) also analyze security related ASAT warnings. The authors collect two snapshots, one at 2012 and one at 2017 for 116 open source projects. Both works come to the conclusion that the warning density of the security related warnings stays constant throughout their analysis time span. In contrast to our study both focused exclusively on security related ASATs.

3 Background

In this section, we introduce important topics regarding this study. First, we give a short description of the challenges regarding mining software repository histories and how they apply to this study. Then, we briefly discuss static analysis tools for Java and our choice of ASAT as well as software quality evaluation.

3.1 Mining Software Repository Histories

Working with old software revisions has its challenges. For projects which use the Java programming language some of these are:

-

The build system may have been switched completely, e.g., from Ant to Maven.

-

The project has no pinned version for the libraries it needs to be built successfully. This means, it may be impossible to build an older version because of incompatibilities with required libraries or missing versions of libraries (Tufano et al. 2017).

-

The main source directory may have been moved, e.g., from src/java to src/main/java as is the case for most Java projects with a longer history.

In this study, we follow two different paths of inquiry, the first is only concerned with general trends regarding ASAT warnings. Hence, we do not need to consider build systems and libraries. However, even in that simplest approach we ignore test code as we only want to inspect production code. As no direct information via the build system is available we utilize regular expressions to exclude non-production code.

The second path of inquiry provides a more detailed view and also takes the build system into account. This is necessary as we extract ASAT usage via the build system configuration files. We therefore restrict the build system to Apache Maven as it is used by the majority of our candidate projects and allows extraction of this information. Including build information provides us with the ability to restrict the production code via the source directories specified in the configuration. The restriction to production code not only excludes test code but also additional tooling and examples. Apache Maven allows a tree like build configuration, i.e., a root configuration shared by the project and all its modules. As the build configuration can also contain custom rules and ASAT configurations, we have to consider all parts of the configuration tree. The root of the tree, usually parent POMs, can be included via Maven central and the leaves, usually modules that are part of the project, can be included via the filesystem.

In addition to the build system, we restrict ourselves to an ASAT that does not need compiled source code because of preliminary tests which found similar problems as Tufano et al. (2017). Tufano et al. found that 38% of commits in their data could not be compiled anymore. Nevertheless, even without the need to compile to bytecode, we are also experiencing some of the problems Tufano et al. found. As we want to extract custom rule definitions for PMD we need to consider build configuration files that may not exist anymore, e.g., missing parent POMs. To mitigate this problem, we manually rename some artifacts so that they can be found on maven central and incorporated into our extraction process, usually this only consists of removing -SNAPSHOT from the package name but in 3 cases we need to change the name of the package, e.g., from commons to commons-parent.

The extraction first tries to build the effective POM via Maven, i.e., including every module and configuration as well as explicit default values. If this fails it changes the pom.xml by removing the -SNAPSHOT, or, if the combination from group, artifact and version is in our rename list, it performs the artifact rename. After that the effective POM is built again. In case that the error persists it is logged and the existing state of the custom rules is not changed. The remaining errors consist of Maven configuration mismatches, in most cases a module references a parent with a wrong version because the parent pom.xml has been upgraded but the modules still reference the old version.

3.2 Static Analysis Tools for Java

Beller et al. (2016) noted, that most static analysis tools are in use for languages which are not compiled, because the compilation process includes certain static checks. Nevertheless, even Java and also C have some static analysis tools that can be utilized by practitioners to warn about potential problems in the source code.

As we are focusing on Java there are a few well known, open source static analysis tools for Java. One of the most prevalent is CheckstyleFootnote 5 which works directly on the source code and is mostly concerned with checking the code against certain predefined coding style guidelines. Another one is FindBugs which works on compiled Java bytecode to find bugs and common coding mistakes, e.g., a clone() method that may return null. SonarQube, a cloud based tool, has the ability to use the already described static analysis tools and also defines its own rules, e.g., cognitive complexity for a method is too high. It relates the ASAT warnings to a resolution time via a formula depending on the warning and programming language.

In this work we are focusing on PMD which works on the Java source code and finds coding style problems, e.g., an if without braces, but also common coding mistakes, e.g., comparison of two String objects using “==” instead of the equals() method. PMD provides a broad set of rules from a wide range of categories. Moreover, PMD is available since 2002 and therefore has been in use for a long time. This results in more data for our analysis and in a mature ASAT for us to use. The detailed documentation and changelog allow us to keep track of which ASAT warnings were available at a certain point in time.

3.3 Software Quality Evaluation

Software quality is notoriously hard to measure (Kitchenham and Pfleeger 1996). Since the beginning of investigating software quality it seemed clear that software quality consists of a combination of factors. The first models for software quality introduced by Boehm et al. (1976) and McCall et al. (1977) also mirror this combination of quality factors. Multiple quality factors are still in use throughout the subsequent ISO standards 9126 and 25010 and later quality models, e.g., Wagner et al. (2012) and Bakota et al. (2011). Fenton and Bieman (2014) as well as the ISO standards discern between internal and external quality. Internal quality factors concern the source code, e.g., cyclomatic complexity (McCabe 1976) or the process, e.g., the developers. External quality factors are on the customer facing side, e.g., defects, efficiency. Internal quality factors influence external quality factors, the problem is to evaluate which internal factors influence which external factors in which way.

Let us assume that software quality is a combination of multiple factors, e.g., maintainability or efficiency. If we want to automatically evaluate software quality, we need to find the concrete measurements that capture the corresponding factor. We then also need to know how to combine the measurements or factors together for the best approximation of software quality. Instead of using metric measurements as approximations, we can instead use ASATs based on their rules. Some ASATs not only include warnings about possible defects but also directly maintainability related warnings, e.g., default should always come last in a switch, exception handling code should not be empty or class names in Java should be in CamelCase. The rules that trigger the ASAT warnings are based on real world experiences and best practices of the developers, therefore, we expect that they are important in an overall evaluation of software quality. Although this means that any ASAT considered for general software quality evaluation should support a broad set of rules.

If we consider the ASATs introduced in the previous Section 3.2 we find that PMD and SonarQube fit that definition best. While FindBugs and Checkstyle are both very established software products they fit different profiles. FindBugs focuses on possible defects and Checkstyle focuses on validating style rule conformance. While SonarQube would be a good fit, it does not exist for as long as PMD, FindBugs and Checkstyle. This limits the ability to observe actual usage of the ASAT in historical data. PMD on the other hand has both a long history of use and a broad set of rules. Therefore, we assume that PMD is a good approximation of internal software quality.

As an approximation for external software quality we utilize defect density (Fenton and Bieman 2014), i.e., the number of defects in relation to the size of the project. With both of these approximations, we can investigate internal and external software quality over the history of our study subjects.

4 Case Study Design

The goal of the case study is to investigate evolution of ASAT warnings and to examine the impact of PMD in the short and long term on ASAT warning trends as well as its impact on external software quality via defect density. In this Section, we formulate the research questions we aim to answer, explain the selection of subjects of the case study, and describe the methodology for the data collection, and the analysis procedures.

4.1 Research Questions

To structure our investigations, we define the following research questions which we separate into two main questions. The first main research question is only concerned with evolution of ASAT warnings over the full lifetime of the project: How are ASAT warnings evolving over time? (RQ1). We divide this research question into two sub-questions:

-

RQ1.1: Is the number of ASAT warnings generally declining over time?

-

RQ1.2: Which warning types have declined or increased the most over time?

Investigating these questions should shed some light on the general evolution of our study subjects regarding ASAT warnings. More specifically, we want to answer the question if “code gets better over time” with regard to ASAT warnings and also if there are differences between the different types of ASAT warnings. Differences between types of ASAT warnings may point to changing Java programming practices or changes in perceived importance, e.g., more camel case name violations for class names at the beginning of 2001 than at the end of 2017. The trend of resolved warnings by type should indicate which warning types are perceived as the most important by the developers that are active in our study subjects. ASAT warnings is a generic term, we specifically investigate ASAT warnings generated by PMD.

The second research question is focused on the impact of ASAT usage on the warning trends and on external software quality: What is the impact of using PMD? (RQ2). We divide this research question into five sub-questions:

-

RQ2.1: What is the short term impact of PMD on the number of ASAT warnings?

-

RQ2.2: What is the long term impact of PMD on the number of ASAT warnings?

-

RQ2.3: Does the active usage of custom rules for PMD correlate with higher ASAT warning removal?

-

RQ2.4: Is there a difference in ASAT warning removal trends whether PMD is included in the build process or not?

-

RQ2.5: Is there a difference in defect density whether PMD is included in the build process or not?

Our second set of research questions focuses on the ASAT usage according to the buildfile of the study subjects. Therefore, we focus only on the project development lifetimes where we can determine that an ASAT is used as part of the build process. Moreover, we consider only source directories configured in the build system. This allows us to exclude examples and tooling. We are also taking time and available rules for PMD into account, e.g., which rules are active in the configuration file and which were available at the time of the commit. This enables us to analyze the impact only for the rules that the developers were able to see and therefore address consciously. Moreover, we investigate the impact of PMD on external software quality via defect density by including information from the issue tracking system of our study subjects.

4.2 Subject Selection

Our study subjects are part of a convenience sample of open source projects under the leadership of the Apache Software FoundationFootnote 6 but nevertheless we applied some restrictions on our selection of study subjects. The base list of projects consists of every Java project of the Apache Software Foundation. We then apply the restrictions and start mining the remaining projects. The final list of study subjects is a sample of the projects that pass the criteria. The complete data for all cannot be used due to the computational effort required to calculate the ASAT warnings for all commits.

We focus on Java projects but exclude Android projects because of the different structure of the source code of the applications. We also restrict the build system to Maven as we utilize the buildfiles to extract the source directory and ASAT configurations for RQ2. Moreover, Maven provides the tooling necessary to combine multiple buildfiles of all sources per project.

We only include active, recent projects that are not currently in incubator status within the Apache Software Foundation, i.e., fully integrated into the Apache Software Foundation. All projects are actively using an issue tracking system as part of their development process. Our study subjects consist of libraries and applications with a variety of domains, e.g., math libraries, pdf processing, http processing, machine learning, a web application framework, and a wiki system. Moreover, our study subjects contain a diverse set of project sizes. The size ranges from small projects such as commons-rdf to lager projects such as Jena and Archiva.

The rest of our selection criteria are focused around project size, infrastructure, project maturity, and up-to-dateness of the project. All applied criteria are given in Table 1.

4.3 Methodology RQ1

In this section, we explain our approach to extract the required data and to calculate the required metrics to answer our research questions. An overview of the approach for data extraction is given in Fig. 1.

4.3.1 Select Commit Path

To select the commits we are interested in, we build a Directed Acyclic Graph (DAG) from all commits in the repository and their parent-child relationships. After the graph construction, we extract a single path of commits for the project. This is depicted in the first part of Fig. 1. Commits are denoted as circles with a number referring to their order of introduction into the codebase. We extract a single path from the latest master branch commit to the oldest reachable orphan commit. We need to select a path this way because we can not just select the master branch as the information on which branch a commit is created is not stored in Git (Bird et al. 2009). Moreover, we select a single path because if work is done in parallel on two or more branches of the project and we order the commits by date we get jumps in the data as we would have a sequence of commits that represent different states of the codebase at the same time.

The latest master branch commit is extracted via the “origin/head” reference of Git which points to the default branch of the repository. The default branch is usually named master, although in some Apache projects the default branch is called trunk as the projects were converted or are mirrored from Subversion. Orphan commits do not have parents. This is usually the initial commit of the repository. It can also happen that a repository has multiple orphan commits, which also can be merged back into the current development branch. By choosing the oldest orphan commit, we extract the first initial commit. Then, we use the graph representation to find the shortest path between these two commits via Dijkstra’s shortest path first algorithm (Dijkstra 1959). The end result of this step is the shortest path between the oldest orphan commit and the newest commit on the default branch of the project.

4.3.2 Metric Extraction

The second step in Fig. 1 depicts the extraction of ASAT warnings and Software metrics. For both we are using OpenStaticAnalzerFootnote 7 as part of a pluginFootnote 8 for the SmartSHARK infrastructure (Trautsch et al. 2017). SmartSHARK in conjunction with a HPC-Cluster provided us with the means to extract this information for each file in each commit of our candidate projects. OpenStaticAnaylzer is an open sourced version of the commercial tool SourceMeter (FrontEndART 2019) which has been used in multiple studies, e.g., Faragó et al. (2015), Szóke et al. (2014), and Ferenc et al. (2014) and, more recently (Ferenc et al. 2020). It works by constructing an Abstract Semantic Graph (ASG) from the source code which is then used to calculate static source code metrics. As it is included in SmartSHARK we perform a validation step after each mining step which verifies if the metrics are collected for each source code file. In addition to the size, complexity and coupling metrics OpenStaticAnalyzer also provides us with ASAT warnings by PMD. OpenStaticAnalyzer applies 193 rules from which the warnings are generated including line number, type and severity rating. The source code metrics are provided at package, file, class, method and attribute level. The resulting data from the mining step includes ASAT warnings from PMD and source code metrics for each file in each commit of our candidate projects. As we primarily want to investigate program code we exclude non-production code by path. We use the regular expression shown in Table 2 to filter non-production code. The regular expressions were created based on manual inspection of the directory structure of the projects we use in our study.

Furthermore, we only compare full years of continuous development in our analysis, thus we remove incomplete years: we remove the first year and everything after 31.12.2017 because we started collecting the data in 2018. This ensures that we only have trends over the complete development history of the project but also for each single year of development which provides a more detailed view in addition to a full view of the projects lifetime.

4.3.3 Calculate Warning Density

The absolute number of ASAT warnings is correlated with the amount of source code in the project. Increasing the code size seems to increase the number of warnings. Even in projects using PMD, this is expected as we also study warnings which the developers could not have seen before. Either because the ASAT did not support them at the time of the commit or the rules that trigger the warnings are not active. Most of the biggest additions and removals of ASAT warnings are due to the addition and removal of files in the repository. The measure for size of the source code we are using is Logical Lines of Code (LLoC) in steps of one thousand (kLLoC). By using LLoC instead of just Lines of Code (LOC) we discard blank lines and comments. LLoC provides a more realistic estimation of the project size.

Table 3 shows the correlation between the sum of ASAT warnings and the sum of kLLoC per commit in all commits available in our data. We are using two non-parametric correlation metrics, Kendall’s τ (Kendall 1955), which uses concordance of pairs, i.e., if xi > xj and yi > yj and Spearman’s ρ (Spearman 1904) which uses a rank transformation to measure the monotonicity between two sets of values instead of concordance of pairs of observations.

We can see in Table 3 that there is a positive correlation between kLLoC and the number of ASAT warnings, i.e., as kLLoC increases so does the number of ASAT warnings. As we want to analyze ASAT warning trends with minimum interference of functionality being added or deleted we decided to use warning density instead of the absolute number of ASAT warnings. Warning density is the ratio of the ASAT warnings and product size.

As product size we chose kLLoC, the warning density is calculated per commit. The advantage of this measurement is that we still see when code with less warnings is added or removed. This also accounts for the effect of developers only scrutinizing new code being added as the new code would then contain less warnings than the existing and show up in our data as a declining trend of ASAT warnings.

Nevertheless, we keep the sum of all warnings for completeness which means we have two aggregations of warning data for our next step:

- S (sum)::

-

The sum of all ASAT warnings per commit which are also available on basis of warning type and severity rating.

- R (warning density)::

-

The ratio meaning the warning density per commit which is also available on a basis of warning type and severity rating.

4.3.4 Fit Linear Regression

Fitting a regression line results in a trend line that we can use to determine if ASAT warnings are generally increasing or decreasing in a more appropriate way as just using a delta between the last and first data points. This method, while still being simple and comprehensible, utilizes all available information, e.g., if the project contained high numbers of warnings for most of its lifetime and only at the end of the extracted data resolved most of them. We fit multiple linear regression lines to our data:

-

all years per project, for a long-term trend,

-

per year per project, for a short-term trend.

Moreover, we additionally fit regression lines for each group of filtered ASAT warnings we introduce in Section 4.4.2 for our second main research question. The linear regression lines provide broad overall trends and specific trends for the ASAT warnings to answer our research questions.

After the fitting of the regression lines, we utilize the coefficient of the linear regressions as the slope. As we have only one variable, this is the same as calculating the slope for each line by applying the point slope formula (2) where y are the values of the fitted regression line and x is the day of the commit.

The slope provides us with a single number representing the trend which we use for further analyses. Moreover, this enables the merging of results for projects with different lifetimes in order to create a global overview of a trend.

In order to restrict the calculated trends to meaningful values we use an F-Score which is calculated via a correlation between our regression line and the measured value. First, we calculate the correlation:

Where Xi is the i-th day of our commits, yj is the j-th value of the regression line and \(\overline {X}, \overline {y}\) is the mean of the number of days of commits and mean of the regression values respectively. σ(X),σ(y) denotes the standard deviation of X and y. The correlation is then converted to an F-Score and a p-value.

The p-value conversion is achieved via the survival function of the F-distribution.

To restrict noise introduced by bad regression fits for trends, we include only slope values in our analysis where the F-Score is above 1 and the p-value for the F-Score is lower than 0.05. As the F-Score describes a relationship between the regression values and the time, we chose this performance metric instead of others related to linear regression such as R2.

4.4 Methodology RQ2

To answer our second research question, we need to include knowledge about the inclusion of ASATs in the build process of the projects. As previously mentioned, we focus on Maven as the build system. To extract the additional information, we extend our approach shown in Fig. 1 with the additional steps depicted in Fig. 2. In a nutshell, we filter out commits where Maven was not used, create new sets of rules depending on custom rules included in the available Maven build configuration, and include defect density as external software quality measure.

4.4.1 Parse buildfiles

We traverse the path of commits previously selected to determine where Maven was introduced to the project and all commits where its configuration file was changed. Maven projects can contain multiple buildfiles, modules and configuration residing in parent buildfiles. In order to account for these features, we utilize a Maven feature that combines all this information including fetching the parent buildfiles from the Maven repository. For each commit where one or multiple Maven files were changed in the target repository, we execute Maven to automatically resolve potential project modules defined in the main Maven configuration file, potential parent configurations, and set all settings explicitly taking default values and overrides into account. We also extract source and test directories from the configuration which allows us to restrict our analysis to program source code and to exclude tests that reside in non-standard directories. This information is further refined to extract which ASATs are currently active, i.e., we detect if PMD, Checkstyle, and FindBugs are configured. If PMD is configured, we extract the configuration including all additional custom configuration files.

Custom configurations for PMD can consist of multiple files with rules and categories of rules. We parse every custom ruleset file and extract rule categories and single rules. Single rules are used as is, whereas the rule categories are expanded to the single rules they contain according to the current PMD documentation on all rulesetsFootnote 9. This ensures that we have an accurate representation of the warnings that were actively presented to the developers.

4.4.2 Filter Commits and Warnings

We remove all commits where no Maven buildfile was present. This is true for commits where the build system is not Maven but, e.g., Ant or Gradle. To make our comparisons viable, we restrict our data to Maven and remove commits until a Maven buildfile is introduced. The project selection performed in the first step ensures that we only have projects where the Maven buildfile was present in the latest commit of our data. Thus, we do not have to remove commits due to the project switching its buildsystem from Maven to Gradle. After that, we create subsets of warnings in our data by filtering certain warnings.

- t (time-corrected):

-

The first subset consists of time corrected warnings. This includes only warnings that were available at the time of the commit where we collected the warning. To be able to utilize this information, we included a mapping for each detected rule to the PMD version that introduced the warning together with the release date of that version. Then we filter out the rules that could not have been reported because at the time of the commit the rule was not available in PMD yet. This is only possible because of the very thorough documentation of rules from PMD and the detailed changelog that stretches back to the first version. We include this subset because of the length of the project histories considered. As some of our data goes back to 2007Footnote 10, we need to take the ASAT warnings into account that were possible to gain from PMD at that point in time.

- d (default)::

-

The second subset represents the default configuration of the maven-pmd-plugin. The default rules are taken from the most recent configurationFootnote 11 and filtered to include only detectable rules. This results in a set of 45 rules.

- e (effective)::

-

For the third subset we want to include as much detail as possible. To achieve this, we calculate the currently active ASAT rules for each commit. These effective active rules take all custom rules and rule excludes into account. If no custom rules are defined we are using the default rules according to the documentation of the maven-pmd-pluginFootnote 12 same as for the subset d. This subset contains all information that a developer on the project under investigation can acquire by utilizing the buildfile.

- o (without overlapping)::

-

The final subset removes rules overlapping with other ASATs used in the projects. This is achieved by filtering rules which overlap with rules supported by current versions of Checkstyle and FindBugs. This subset enables us to increase the precision of our impact measurement for the effects of using PMD on ASAT warning trends. This avoids skewed results for study subjects which are in the non PMD group but utilize FindBugs or Checkstyle which contain rules that are also present in PMD. A complete list of overlapping rules can be found in the Appendix.

All of these subsets of warnings can be combined to provide us with a set of rules for the analyses, e.g., the warning density of the default rules with time-correction or the warning density of the effective rules with time-correction without overlapping rules.

4.4.3 Calculate Defect Density

This step utilizes the SmartSHARK infrastructure which we already used for the metrics collection to incorporate information from the ITS into our data. The extracted information is condensed to a metric per development year, the “de facto standard measure of software quality” (Fenton and Bieman 2014), defect density, which is a ratio of the number of known defects and the size of the product.

In this study we use the mean kLLoC per year as the product size and the number of created bug reports per year as the number of known defects. This provides us with a metric per year which we can then utilize to measure the impact of PMD usage on external software quality (Fenton and Bieman 2014). As we have projects in our dataset which switched ITS and as we need full development years we discard the first year for which we have defects in our data.

4.5 Analysis Procedure

In our case study, we investigate different questions which require a different analysis procedures. For RQ1.1, we aggregate the plain sum of ASAT warnings per commit over the projects development and the warning density as defined in Section 4.3.3. Then, we fit regression lines and calculate the F-Score as well as the slope of the regression to get the general trend.

In the case of RQ1.2 we do the same, but for completeness we additionally calculate the delta of the last and the first commit of the data as well as the number of remaining warnings per kLLoC. In order to aggregate data of all projects we calculate the mean and median of the data.

The short and long term impact of PMD on the number of ASAT warnings in RQ2.1 and RQ2.2 is measured via the number of projects for RQ2.1 and the median of slopes of the trend line for all years following PMD introduction per project for RQ2.2. The slopes are calculated via the warning density but without overlapping rules from FindBugs and Checkstyle.

For RQ2.3, we sum the number of rule changes per year for projects using PMD and correlate them via Kendall’s τ and Spearman’s ρ to the warning density trends of the rules used (R+e+t).

To answer the research questions RQ2.4 and RQ2.5, we measure the difference between two samples. We first investigate the distribution of our data via the Shapiro-Wilk test (Wilk and Shapiro 1965) for normality and Levene’s test (Levene 1960) for variance homogeneity. As these tests revealed that the data is non-normal with a homogeneous variance, we decided to use the Mann-Whitney-U test (Mann and Whitney 1947). Although the Mann-Whitney-U test is a ranked test we still talk about differences in median for the sake of simplicity. In both research questions, we measure the difference between the years of PMD usage and the years where PMD was not used. Partial use in a year is excluded from the analysis. We chose a significance level of α = 0.05, after Bonferroni (Abdi 2007) correction for 24 statistical tests, we reject the H0 hypothesis at p < 0.002. The difference in median between both samples is not significant every time for p < 0.002. Therefore, only for the last comparison of RQ2.4 and for RQ2.5 we also calculate effect size and confidence interval.

To calculate the effect size of the Mann-Whitney-U test, we utilize the fact that for sample sizes > 8 the U test statistic is approximately normally distributed (Mann and Whitney 1947). We first perform a z-standardization (Kreyszig 2000). We are assuming that our sample’s mean and standard deviation are a good approximation of the populations mean and standard deviation. After we calculate z we can calculate the effect size r. A value of r < 0.3 is considered a small effect, 0.3 ≤ r ≤ 0.5 is a medium effect and 0.5 < r is a strong effect (Cohen 1988). For the confidence interval we follow (Campbell and Gardner 1988) who use the K-th smallest to the K-th largest difference between two samples as the interval. The confidence interval then consists of the K-th difference and the max(n,m) − K-th difference between both samples.

4.6 Replication Kit

All extracted data can be found online (Trautsch et al. 2020). The code for creation of the tables and figures used in this paper as well as a dynamic view of warning density, LLoC and warning sum for each project is included.

5 Case Study Results

In this section we present the results of our study. This section is split into two parts, one for each of our main research questions.

5.1 RQ1: How are ASAT warnings evolving over time?

Our first research question considers ASAT warning evolution over the complete lifetime of each project. We do not consider PMD usage in the build process, custom rulesets or the availability of warnings in PMD at the time of the commit in this section. We use all PMD rules that are available. Table 4 shows the trend of ASAT warnings for every project and year as well as the approximate change per year over all years. Furthermore, the table includes the trend over the complete lifetime of the project with two base values: the sum of ASAT warnings S and the warning density R. The trends for single years are calculated based on warning density. The arrows indicate the trend of the ASAT warnings. A downwards arrow indicate a positive trend, i.e., the warning density declines, an upwards arrow negative trend, i.e., the warning density increases. If our criteria for the regression fits are not met, i.e., the F-Score is below 1 and the corresponding p-value is above 0.05 a straight rightway arrow is used. Hence, the straight rightway arrow indicates that there was no significant change.

5.1.1 RQ1.1: Is the number of ASAT warnings generally declining over time?

Table 4 shows, that if we consider the complete lifetime of the project warning density (R) increases in only 8 of 54 projects. The majority of our study subjects improve with regard to warning density. If we only consider the sum (S) the picture is not as clear, here we have more negative trends, i.e., the number of ASAT warnings increase. This is expected as the number of ASAT warnings usually increases with addition of new code and both are positively correlated as mentioned previously. This shows that if we consider warning density to be a code quality measure, that the code quality steadily increases in most projects. We also include the value of the slope of the trend for warning density (R p.a.) in the table, which indicates a change of warning density in years over the complete lifetime and on average over all projects. We exclude projects where the slope does not met our criteria for F-Score. The value in column R p.a. quantifies the average change in warning density per year, e.g., commons-math removes on average 5 ASAT warnings per 1000 Logical Lines of Code per year. When we consider the mean of all projects we see that on overage 3.5 ASAT warnings per 1000 LLoC are removed per year.

5.1.2 RQ1.2: Which warning types have declined or increased the most over time?

To answer the next research question, we consider how groups of rules have changed in their evolution over the projects lifetime. In this case, we not only report the slope of the trend but also report the delta of the first warning density measurement per project and the last measurement. This provides us with a delta of the absolute number of ASAT warnings per kLLoC per warning group and severity. Due to different project lifetimes we measure these numbers per project and then average the values to end up with a number that encompasses all of our data.

Table 5 contains all rule groups and severities in our data provided by PMD. It contains the slope of the trend, the average change of warning density per project over the complete lifetime of the project and the remaining warnings per kLLoC. We can see that, e.g., on average a project removed 7 naming rule warnings per kLLoC over its complete lifetime and still has 7.5 warnings per kLLoC left. When considering the trend, we can see that each project, on average, removes 0.42 naming rule warnings per kLLoC per year. Moreover, we see that each project on average resolves 34.03 warnings per kLLoC regardless of its type or severity over its complete lifetime and still has 58.06 warnings per kLLoC left.

These changes in the number of occurrences of rules by type can hint at potential changes in coding standards in the the years between the beginning of 2002 and end of 2017. Most prominently brace, design and naming rules, which consist of best practices regarding code blocks and naming conventions, e.g., an if should be followed by braces even if it is followed only by a single instruction and class names should be in camel case. Design rules contain best practices regarding overall code structure, e.g., avoiding deeply nested if statements and simplify boolean returns. We can see that the trend for specialized rules like Java and jakarta logging rules is steeper than naming rules, although when we consider the delta it is clear that naming rules are removed far more by number. Moreover, when we consider the median (MEDR p.a.) instead of the mean trend (MR p.a.) we can see that brace, design, and naming rules also have high median trends. A complete list of the rules and their groups as well as their severities is given in the Appendix.

The two groups of warnings that are increasing by delta, although only slightly, are finalizer and import statement rules. By mean trend only import statement and clone implementation rule violations are increasing slightly. Finalizer rules are concerned with the correct implementation of finalize() which is called by the garbage collector of Java. Import statement rules contain rules regarding duplicate imports, unused imports and unnecessary imports, e.g., java.lang or imports of classes from the same package. Clone implementation rules are focused on checking implementations of clone() methods.

Regarding the severity of the warnings we see that minor severity warnings are resolved the most, major severity warnings second most and critical warnings last. When we calculate the percentages of reduction in warning density by severity we see that minor and major are reduced by about 37% each while critical by about 27%. This may indicate that developers do not necessarily try to remove all critical warnings. However, this could also be an indication of critical severity warnings being more prone to false positives.

The types of warning that declined the most may hint at developer preference or possibly easy resolution of reported warnings. The declining of naming, brace and design rules may also be a consequence of changing coding standards or, more generally, a maturation of Java software coding style. The results may also hint at some rules which are ignored by developers. The density of import statement rules is increasing. This may indicate that this type of rule is more often ignored by developers.

5.2 RQ2: What is the impact of using PMD?

This part of the study discards every commit up until the point in time Maven was introduced as a build system. Although we shorten the project history that is available for analysis, keeping only commits with a Maven buildfile allows us to be certain that we detect the ASAT inclusion via the Maven configuration. Moreover, this allows us to read custom ruleset definitions and source directories. Utilizing the source directories from the Maven configuration narrows the scope for the files to code only files. We effectively discard tests and tooling which are not part of the build process. Our aim is to be as detailed as possible and counting only the rules that were available at the point in time of the commit. We also include only files that were part of the analysis if the projects developers had run the ASAT via the buildfile.

5.2.1 RQ2.1: What is the short term impact of PMD on the number of ASAT warnings?

Table 6 shows the trends of ASAT warnings for full years of development. The color indicates if PMD was used for all commits that year: green indicates PMD was used for the complete year, red indicates no use of PMD for the complete year, black indicates partial usage due to introduction or removal of PMD from the buildfile during that year. In seven of the 54 projects listed in Table 6, PMD was removed at least once. We inspected every case to investigate the reasons for the removal.

Archiva removed PMD in 2012 when they moved reporting to a parent pom which did not include PMD anymoreFootnote 13. Neither the commit message, nor the project documentation mention whether this removal is accidental or not.

Commons-bcel briefly introduced and then removed PMD in 2008. The removal does not mention PMD or reports of the build system. This brief introduction happened at the same time as the move from Ant to Maven as a build system. This indicates that the developers were testing features of Maven. In 2014 the project included PMD again in its buildfile. The trend of warnings is declining nonetheless.

Commons-compress removed and re-introduced PMD in short order while configuring the build system in multiple commits. PMD is mentioned explicitly in the commit it was re-added.

Commons-dbcp removed PMD in 2014 but that year still shows a declining trend. The commit message states that the removal was due to switching to FindBugs. Although the year is not part of this study, PMD was re-added to the build system in 2018.

Commons-math changed the ASAT configuration in 2009 - 2011 so that there were at least some commits without an active PMD configuration. Therefore, these are colored black in Table 6. Those years also had a declining trend. The commit message indicate that in 2009 the reporting section of which PMD is part of was dropped due to a release and later added again. In 2010 PMD was dropped due to compatibility problems and enabled again in 2011.

Commons-validator had some commits in 2008 with PMD enabled. The removal was a conscious decision as it is mentioned in the commit message although the reason is missing. PMD was added again in 2014.

Tika removed PMD in 2009 and never re-introduced it. The commit message mentions removing obsolete reporting from the parent pom. This indicates that the developers did not act on reported PMD warnings, either because they ignored them completely or because they found that there were too many false positives.

We are only considering projects where we can determine the time when PMD was introduced. If it was either introduced together with Maven as a build tool or was introduced before Maven we do not consider it here. We consider projects which introduced PMD and at least used it for a full year afterwards, i.e., a black arrow followed by a green arrow in Table 6. The short term impact as estimated by the trend of warning density for 15 projects where PMD was used at least once is declining in 9 projects while 6 projects have an increase in warning trend. While we expected to see a drop in ASAT warnings after introduction of PMD, this is only the case in 9 of the 15 projects we consider here. An explanation for this result could be that the developers introduce the ASAT but do not immediately scrutinize enough code to make a difference in the short term.

5.2.2 RQ2.2: What is the long term impact of PMD on the number of ASAT warnings?

Table 7 shows the number of ASAT warnings over the projects lifetime from the point in time where Maven was used as a build system, i.e. the point in time where we are sure that we can capture the effective rules of the ASAT. S is the plain sum of the number of warnings, R is the warning density. R+t is the warning density with time-correction where we only count the warnings that PMD supported at the time of the commit. R+d+t is the warning density with only the rules counted that the Maven PMD plugin has enabled by default with time-correction. R+e+t is the warning density with only the rules counted that are definitely enabled via the parsed Maven configuration file, i.e., the most exact and only available in projects where we have the PMD plugin enabled in the Maven configuration file. Figure 3 visualizes this information using the project Commons-lang as an example. The red line represents the number of rules considered, the blue line is the number of ASAT warnings which is a sum (S) in the first subplot and warning density (R) in all following subplots. The orange line is the regression line. The number of rules is constant if no time-correction is applied. Figure 3 also shows that for the effective ruleset, we only count from the point of inclusion of the ASAT. Otherwise we would skew the data in this case. We should also mention that jumps in effective rules can be due to inclusion of new rules by the developers or by inclusion of new rules for PMD due to group expansion, i.e., the project configures all rules for category A, PMD adds new rules for category A at that point in time which results in rising number of rules considered.

A first interesting result is that if we look at the warning density, there is a downward or neutral trend for all but 13 projects. This means independent of the presence of PMD in the buildfile the overall quality of the code per kLLoC with regards to ASAT warnings improves in most projects. This could be for example through changes in coding style coinciding with some ASAT rules, e.g., no if statement without curly braces. The number of projects is higher than in the previous Section 5.1 where we considered the complete lifetime of the project. In Section 5.1, we observed a rising trend of warning density in only 10 projects.

If we only consider the effective rules (R+e+t) the picture is not that clear, which means even though the developers have the ability to look at the reports containing these warnings the overall quality per LLoC does not always improve. This could be due to perceived or real false positives of the reporting ASAT which are ignored by the developers.

To answer RQ2.2 we refer to Table 6 again and note the green trends following the introduction of PMD in black. If we add up the slopes of the subsequent years after the introduction of the ASAT, we can estimate the long term impact. We notice that we have more positive years than negative years in our data following the introduction of an ASAT. Positive years are identified by a decreasing warning density whereas negative years are identified by a increasing warning density. On a more quantitative note we can sum the slopes of years following the introduction of the ASAT which we report in Table 8. We are not listing mina-sshd even though it uses PMD because it was only introduced in 2017 which is the last year of our data, therefore it is excluded from the long term impact analysis.

Table 8 shows the median change in warning density per year, e.g., commons-lang decreases the number of warnings per kLLoC by 1.7 per year, which is almost the same than its overall decrease over all years (1.8) which can be seen in Table 4. We can also see that the average change per project is 2.3 which is less than the mean over all projects over all years reported in Table 4 which was 3.5. Nevertheless the projects predominantly show a negative median which indicates a positive trend in the number of ASAT warnings, i.e., warnings decrease. Only 5 projects, comons-rdf, commons-beanutils, commons-validator, cayenne and commons-imaging have a positive median, i.e., a majority of negative trends of warning density after PMD was introduced. The long term impact as estimated by the trend of warning density is positive in 19 of 24 projects. On average each project removed 2.3 warnings per kLLoC each year after PMD was introduced in the buildfile. Thus we can further conclude that the long term impact of PMD on warning density is better by trend alone than the observed short term impact. Although, its impact is weaker than the overall trend of warning density which encompasses the years where PMD was not present in the buildfile. This may be an effect of changing of coding style as the rules that changed the most are related to naming and style (see Section 5.1.2).

5.2.3 RQ2.3: Does the active usage of custom rules for PMD correlate with higher ASAT warning removal?

We first extract all changes to the buildfile and specifically to the custom rules as shown in Table 9. It shows the number of rules changed over the project lifetime and the number of commits where the build file or a configuration file was changed. To give a perspective regarding the number of commits we additionally included the mean and median for rule and build changes.

The sum of rule changes is the sum of all deltas of rule changes, this includes additions and removals of rules. As we also count the default rules when the ASAT is introduced there is a minimum of 45 rules that are changed if PMD is introduced and there are no custom rules right from the start. If custom rules are added or removed later this number increases.

The true relation to the trends can be seen in Table 10, it shows the correlation between the number of rule changes to the general trend of ASAT warnings of the project over each year where the ASAT was used. The results for this research question could be seen as inevitable because we do not have a lot of rule changes. Nevertheless, we find that 12 of 25 projects have at least performed some changes to their PMD rulesets.

While this may sound discouraging to developers, we note that the while the correlation is negligible it retains a negative sign for both correlation measures. This means that while rule changes increase the warning density decreases. However, other factors are probably also important for developers which profit from a well maintained rule set, e.g., acceptance of the ASAT by other developers.

5.2.4 RQ2.4: Is there a difference in ASAT warning removal trends whether PMD is included in the build process or not?

We first have to split our data into two groups, one group contains all years from all projects where PMD was used as indicated by its inclusion in the buildfile, and the other contains all the other years. We then investigate our two samples for differences. We want to know if the two groups have different warning trends and if this difference is statistically significant. We now describe the groups of warnings considered here. The R+t contains the time corrected warning density which contains all possible warnings that were available to the developers at the time of the commit, i.e., a warning added in 2017 would not be included in the warning density of a commit in 2016. R+d+t contains only the warning density of only the default rules that are enabled by PMD, they are also time corrected. R+e+t contains the time corrected effective rules, this contains the default rules except in cases where developers added custom rule sets. If custom rule sets are found, they are used exclusively. R+e+t+o contain the time corrected effective rules without overlapping rules. We subtract PMD warnings which are also reported by other ASATs if the project uses them, we consider FindBugs and Checkstyle. This removes possible influence in the no PMD group.

The Tables 11, 12, 13, 14 show a difference in the trends of the ASAT warnings between non PMD usage and PMD usage but it is not statistically significant. Table 15 completes the reporting for the Mann-Whitney-U test, it includes sample sizes and the median of the samples. The sample sizes are changing because we remove incomplete years of ASAT usage, overlapping rules and we also remove insignificant trends as described in Section 4.3.

The reason we found no significant difference could be that the changes resulting from ASAT usage in the buildfile are too small when considering the general number of changes developers apply due to normal code maintenance work. To remove potential influences from overlapping rules of PMD with FindBugs and Checkstyle, we removed them prior to the test in Table 14. This did not change the results significantly. Overall, we can see that the results are not significant, even as we get more detailed, i.e., from just all rules to only the effective rules with removed overlapping rules from other ASATs.

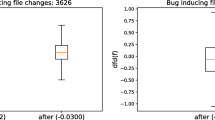

These results are surprising but looking at our data we can see that there is an effect of PMD usage, just not in a general code quality sense by utilizing the warning density. If we look at the raw sum of ASAT warnings which we have seen to increase in almost all projects, we can detect an effect. In Fig. 4, we can see that for most projects the slope of the trend of warning density per year is near 0 whereas for the non PMD using years it is higher.

A possible explanation for this data is, that projects which utilize PMD scrutinize most of the new code, which results in a rising trend of ASAT warnings but only slightly due to some left over warnings or ignored false positive warnings. In contrast, for projects which do not utilize PMD the trend of the sum of ASAT warnings is rising more steeply.

As shown in Table 16 in case we utilize the sum of all ASAT warnings, the difference is significant, albeit small.

5.2.5 RQ2.5: Is there a difference in defect density whether PMD is included in the build process or not?

We extract issues for all projects from the ITS created in a certain year and then calculate the defect density as described in Section 4.4.3. We then build two groups again for years of development where PMD was used and compare it to the second group of years of development where PMD was not used. Instead of the slope of the ASAT warning trends, we now compare the defect densities of the two groups. We only include years in which PMD was included in the buildfile for every commit or for none to mitigate problems of partial use. The defect density contains only issues marked as a bug by the developers, so we discard improvements, documentation changes. Moreover, the issue type used is the one at the end of the data collection. If an issue was misclassified and the classification was changed at some point, the changed classification is the one we use. This also removes duplicate bug reports from the data, if the developers marked the duplicate bug report as duplicate or invalid as is customary in that case.

Figure 5 shows the defect densities of the two groups, we can see that PMD using years have a slight advantage of less defect density. Table 17 contains the significance test for whether there is a difference between the two groups and its prerequisites. We can see that years in which PMD is present in the buildfile show a statistically significant difference of defect densities. To complete the reporting of the Mann-Whitney-U test in Table 17, the sample sizes are 132 and 249 for years where PMD was used and years where PMD was not used. The respective median values are 0.89500 and 1.67743. Resulting in a difference in median of 0.78243 between both samples. The 95% confidence interval of the difference in median is (0.36038, 0.86744).

This result is very coarse grained. We consider defect density per year which can only hint at a correlation instead of a direct causal relation. However, the ASAT we consider in this study contains a broad set of rules some of which also pertain to more generic maintainability and readability best practices. This may have a more indirect or long term effect on the quality, which is why we decided to include RQ2.5 in this way. However, to further validate this result and include confounding factors we build a regularized linear regression model which includes these factors to see if PMD usage still is of importance. To this end we enhanced the available data with additional features per project per year. We include the number of commits, the number of distinct developers, the year, the number of commits in which PMD was used / not used and the project name as a number. As a popularity proxy we include the Github information from that project, namely stars and forks. We train the linear regression model with this data and give the resulting coefficients in Table 18.

We can see that the regularization of the model removes the project number, and the year as well as the number of commits where PMD was used (although, we note the negative sign). The number of forks, commits, authors, and stars are more important and not removed by the regularization. The most important feature is the number of commits in which PMD was not used which indicates that it is an important factor when determining defect density. We also note that except for the number of commits in which PMD was used we retain positive signs on the coefficients of the model. The interpretation is that these factors have a detrimental effect on defect density, i.e., as #stars or #commits without PMD increases, so does defect density.

6 Discussion

The sum of ASAT warnings is increasing in most of our projects. As the number of ASAT warnings is correlated with the logical lines of code as shown in Table 3 this is not surprising. The rising size of the projects is in line with the rules of software evolution (Lehman 1996) which claim that E-Type softwareFootnote 14 continues to increase in size. There is no theory for explaining the continued growth of ASAT warnings but it could be interpreted as an indication that some warnings are ignored by developers because they may be deemed unnecessary or false positives (warnings in code without problems). An increasing number of ASAT warnings is also supported by the raw data provided by (Marcilio et al. 2019) in their replication kit. Although Marcilio et al. investigate real usage of Sonarcube by developers and primarily the resolution times of ASAT warnings, they provide the dates where ASAT warnings are opened and closed. After transforming their data to show the sum of open ASAT warnings for given days it shows rising sums of ASAT warnings for almost all of the projects. Furthermore, current research found that only a small fraction of ASAT warnings are fixed by developers (Liu et al. 2018; Marcilio et al. 2019; Kim and Ernst 2007b).

Table 5 provide us with additional interesting insights. First of all, code quality, if measured by warning density, is increasing. Second, the different types of ASAT warnings evolve differently. The order of the ASAT removal trends provided in Table 5 shows which types of ASAT warnings developers removed most in our candidate projects from 2001-2017. This provides us with a hint of what issues developers deemed most important in that timeframe. As this first part of our study is independent of ASAT usage, we can not quantify the influence of PMD or other ASATs, i.e., Checkstyle or FindBugs. Nevertheless, the results show that a certain importance is assigned to code readability and maintainability by the developers. This result is in line with research by (Beller et al. 2016) who found that the majority of actively enabled and disabled rules are maintainability-related. Beller et al. studied configuration changes. Our work expands on the work of Beller et al. and confirms that not only were the rules more often changed for maintainability related warnings, they were also globally resolved the most. This finding is also supported by (Zampetti et al. 2017) who analyzed CI build logs for ASAT warnings. They found that most builds break because of coding standard violations. However, checking adherence to coding standards via CI quality gates is an industry practice, which is probably a contributing factor. The most frequently fixed warnings found by (Marcilio et al. 2019) also contain rules regarding naming conventions and coding style. The only groups of ASAT warnings for which we found more introductions than removals in our trend analysis were import and clone rules. If we measure by warning density delta between the first and last commit of our analysis period, the only increasing rules are import and finalizer rules. However, as the number of rules here is small and the delta of the change is also small we can not draw any conclusions from this result.

As previously explained we think of code containing less ASAT warnings per line as higher quality code. In this case study we found that the warning density is decreasing, i.e., the overall quality is increasing. This is a positive result for the studied open source projects and may also be a positive result for software development in general.

However, we could not measure a significant difference between the trend of warning density in years of development between PMD usage and no PMD usage. Although, if we do not consider warning density but the raw sum of warnings there is indeed a measurable, significant difference. This could be a result of a flattening trend of ASAT warning removal after some time which means the LLoC then becomes the dominating factor of the warning density equation. This can be seen as evidence of industry best practices like utilizing static analysis tools only on new code as reported by Google (Sadowski et al. 2018) and Facebook (Distefano et al. 2019). Further evidence of this best practice is shown in the results of short and long term impact of PMD. We found that shortly after the introduction of PMD only 9 of 15 projects show decreasing warning density whereas 19 of 24 projects show decreasing warning density in the years following PMD introduction. To the best of our knowledge this would be the first publication to empirically find this effect in open source projects.