Abstract

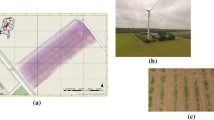

This work introduces a novel approach to remotely counting and monitoring potato plants in high-altitude regions of India using an unmanned aerial vehicle (UAV) and an artificial intelligence (AI)-based deep learning (DL) network. The proposed methodology uses a custom AI model, PlantSegNet, which builds on the VGG-16 and U-Net architectures, to analyze aerial RGB images captured by the UAV. To evaluate the approach, a custom dataset of aerial images from different planting blocks is used to train and test PlantSegNet. The experimental results demonstrate the effectiveness and validity of the proposed method under challenging environmental conditions: it achieves a pixel accuracy of 98.65%, a loss of 0.004, an Intersection over Union (IoU) of 0.95, and an F1-score of 0.94. A comparison with existing models, Mask R-CNN and U-Net, shows that PlantSegNet outperforms both on these performance metrics. The proposed methodology provides a reliable solution for remote crop counting in challenging terrain, which can benefit farmers in the Himalayan regions of India. The methods and results presented in this paper offer a promising foundation for developing advanced decision support systems for planning planting operations.
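To make the reported segmentation metrics concrete, the sketch below computes pixel accuracy, IoU, and F1 for a binary plant/background mask, and counts plants as connected components of a predicted mask. This is an illustrative assumption, not the PlantSegNet evaluation code: the abstract does not state how the metrics were aggregated across images or how plant counts are derived from the segmentation output. The helper names (`confusion_counts`, `segmentation_metrics`, `count_plants`) and the list-of-rows mask representation are hypothetical.

```python
from collections import deque
from typing import Dict, List, Tuple

# Hypothetical representation: a binary mask as a list of rows,
# where 1 marks a plant pixel and 0 marks background.
Mask = List[List[int]]


def confusion_counts(pred: Mask, truth: Mask) -> Tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN) pixel counts for two equally sized binary masks."""
    tp = fp = fn = tn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1  # predicted plant, actually background
            elif t:
                fn += 1  # missed plant pixel
            else:
                tn += 1
    return tp, fp, fn, tn


def segmentation_metrics(pred: Mask, truth: Mask) -> Dict[str, float]:
    """Pixel accuracy over all pixels; IoU and F1 for the plant class only."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    total = tp + fp + fn + tn
    union = tp + fp + fn  # size of pred ∪ truth for the plant class
    f1_denominator = 2 * tp + fp + fn
    return {
        "pixel_accuracy": (tp + tn) / total if total else 0.0,
        # Convention (assumption): empty prediction and empty truth count as a perfect match.
        "iou": tp / union if union else 1.0,
        "f1": 2 * tp / f1_denominator if f1_denominator else 1.0,
    }


def count_plants(mask: Mask) -> int:
    """Count 4-connected plant blobs with a breadth-first flood fill (one blob = one plant)."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            count += 1  # unvisited plant pixel: start of a new blob
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return count


if __name__ == "__main__":
    truth = [[1, 1, 0, 0, 0],
             [1, 1, 0, 0, 1],
             [0, 0, 0, 0, 1],
             [0, 0, 0, 0, 0]]
    pred = [[1, 1, 0, 0, 0],
            [1, 0, 0, 0, 1],
            [0, 0, 0, 1, 1],
            [0, 0, 0, 0, 0]]
    print(segmentation_metrics(pred, truth))
    print(count_plants(pred))  # → 2
```

Counting connected components is the simplest way to turn a segmentation mask into a plant count. In dense plantings where neighbouring canopies touch, adjacent plants merge into one blob, so this rule would tend to undercount unless the mask is post-processed to separate them.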

Data availability

The data generated and/or analyzed during the current study are available from the corresponding author on reasonable request, as is the code. The data will also be uploaded to GitHub after publication of this article.

Author information

Contributions

Divyansh Thakur (DT) played a key role in the development and implementation of the AI-based deep learning network PlantSegNet. He conceptualized the network architecture, drawing on the VGG-16 and U-Net models and adapting them for plant counting in aerial images. He curated and organized the custom dataset of aerial images from different planting blocks, ensuring that it was diverse and representative of the challenging environmental conditions in high-altitude regions. He conducted extensive experiments to train and test PlantSegNet on this dataset and analyzed the results to validate the effectiveness and reliability of the proposed methodology. He also compared PlantSegNet with existing models, such as Mask R-CNN and U-Net, to demonstrate its superior performance on the evaluated metrics. Divyansh Thakur contributed to writing and revising the sections of the paper on the AI model, the experimental setup, and the evaluation of results.

Srikant Srinivasan (SS) contributed to the selection and setup of the UAV and remote sensing equipment used to capture the aerial RGB images for analysis. He assisted in the field experiments, overseeing data collection and resolving technical issues that arose during the UAV flights and image capture. He also took an active part in discussions of the paper's methodology, the interpretation of results, and the conclusions drawn from the study.

All authors contributed to the critical review of the manuscript and approved the final version for submission.

Ethics declarations

Competing interests

The authors declare no competing interests.

Declarations

All authors have read, understood, and complied, as applicable, with the statement on “Ethical responsibilities of Authors” in the Instructions for Authors.

Ethics approval

The reported work did not involve human participants and did not harm the welfare of animals.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Thakur, D., Srinivasan, S. AI-PUCMDL: artificial intelligence assisted plant counting through unmanned aerial vehicles in India’s mountainous regions. Environ Monit Assess 196, 406 (2024). https://doi.org/10.1007/s10661-024-12550-0

DOI: https://doi.org/10.1007/s10661-024-12550-0