Abstract

We show how experimental results can be generalized across diverse populations by leveraging knowledge of local mechanisms that produce the outcome of interest, only some of which may differ in the target domain. We use structural causal models and a refined version of selection diagrams to represent such knowledge, and to decide whether it entails the invariance of probabilities of causation across populations, which then enables generalization. We further provide: (i) bounds for the target effect when some of these conditions are violated; (ii) new identification results for probabilities of causation and the transported causal effect when trials from multiple source domains are available; as well as (iii) a Bayesian approach for estimating the transported causal effect from finite samples. We illustrate these methods both with simulated data and with a real example that transports the effects of Vitamin A supplementation on childhood mortality across different regions.

Similar content being viewed by others

Notes

Available in https://github.com/carloscinelli/generalizing.

Russian Roulette consists of loading a bullet into a revolver, spinning the cylinder, pointing the gun at one’s own head and then pulling the trigger. We do not recommend attempting this.

The arrow \(X\rightarrow Y\) comprises, of course, many intermediate mechanisms (such as loading the gun, spinning the cylinder, pulling the trigger) that are not modeled explicitly.

Note that, although reasonable, one cannot take this assumption for granted—it could be the case that revolvers used for Russian Roulette in New York have a different number of chambers than those used in Los Angeles. The absence of a selection node pointing to B encodes the assumption that this is not the case.

Although here we have \(Y_0 = H\) for simplicity, this need not be the case. The same argument would hold, for instance, if we define H to be a random variable with arbitrary cardinality and \(Y= g(H)\vee (X\wedge B)\), where \(g(H) \in \{0, 1\}\). Likewise, “see the appendix” for an example where the treatment variable X is continuous and the same strategy adopted here can be employed.

Since some relationships in the graph may be deterministic, conditional independencies other than those revealed by d-separation (with lower-case d) may be present. A complete criterion for DAGs with deterministic nodes is given by the D-separation criterion (with capital D) of [5]. Moreover, note arrows between potential outcomes need not convey causal influence; their purpose is merely to ensure that the correct conditional independencies among variables are encoded in the graph, as derived from the structural equations. Finally, here we are not treating the question of how scientists acquire scientific knowledge in the form of a functional specification such as Eq. 1. Rather, our task is more modest: given that scientists sometimes have knowledge of mechanisms, how can we leverage some of that knowledge for identification.

The right-hand side of this expression is known as the “relative difference,” or “susceptibility.” Simple algebra shows that \(\frac{P(Y_{1} = 1) - P(Y_0 = 1)}{1-P(Y_0 = 1)} = 1 - \frac{1- P(Y_1 = 1)}{1-P(Y_0 = 1)}\), where the quantity \(\frac{1- P(Y_1 = 1)}{1-P(Y_0 = 1)}\) is known as the “survival ratio.” Since under the assumption of monotonicity these estimands identify \({\text {PS}}_{01}\), and \({\text {PS}}_{01}\) is invariant across domains, it thus follows that the “relative difference” and the “survival ratio” will also be equal between populations. Huitfeldt et al. [10] suggested using this fact as a rationale for assuming homogeneity of effect measures across domains, a common heuristic among epidemiologists for approaching generalizability problems. These equivalences, however, break down without monotonicity; in that case, the “relative difference” is a lower bound for the probability of sufficiency [26], as we discuss next.

For example, under the assumption of monotonicity, we have that \({\text {PN}}_{01} = \frac{P(Y_{1} = 1) - P(Y_0 = 1)}{P(Y_1 = 1)} \) [16]. This last estimand is known as the “excess-risk-ratio,” and algebra also shows that \(\frac{P(Y_{1} = 1) - P(Y_0 = 1)}{P(Y_1 = 1)} = 1 - \frac{1}{P(Y_1 =1)/P(Y_0 = 1)}\), where \(\frac{P(Y_1 =1)}{P(Y_0 = 1)}\) is the “risk ratio.” Thus in this setting, both the “excess-risk-ratio” and the “risk ratio” would be equal across domains. Without monotonicity, the “excess-risk-ratio” is a lower bound on the probability of necessity [26].

The 95% credible intervals for the risk difference and risk ratio are 0.008–0.04 and 1.009–1.042, respectively. Alternatively, if one prefers inferences on the bounds, we have 95% credible intervals of: 0.955–0.975 for the lower bound, 0.991–0.994 for the upper bound, and 0.002–0.020 for the lower bound of the risk difference (i.e, \(P^{*L}_{11} - P^*_{01}\)).

Call these new causes C. The new structural equation for Y now reads \(Y = (H \vee (X \wedge B)) \wedge \lnot C\). This leads to \(Y_0 = H \wedge \lnot C\) and \(Y_1 = Y_0 \vee (B \wedge \lnot C)\). Note this creates the colliding path \(S \rightarrow H \rightarrow Y_0 \leftarrow C \rightarrow Y_1\), thus forbidding the conclusion that \(Y_1 \perp \!\!\!\!\perp S \mid Y_0\), even when there is no selection node pointing directly to C. For another illustration of when collider bias may arise, see the appendix.

This could arise, for instance, as a result of population stratification.

References

Bareinboim E, Pearl J. Causal inference and the data-fusion problem. Proc Natl Acad Sci. 2016;113(27):7345–52.

Cinelli C, Hazlett C. Making sense of sensitivity: extending omitted variable bias. J R Stat Soc Ser B (Stat Methodol). 2020;82:39–67.

Cinelli C, Kumor D, Chen B, Pearl J, Bareinboim E. Sensitivity analysis of linear structural causal models. In: International conference on machine learning; 2019.

Dahabreh IJ, Petito LC, Robertson SE, Hernán MA, Steingrimsson JA. Toward causally interpretable meta-analysis: transporting inferences from multiple randomized trials to a new target population. Epidemiology. 2020;31(3):334–44.

Geiger D, Verma T, Pearl J. Identifying independence in Bayesian networks. Networks. 1990;20(5):507–34.

Gelman A, Carlin JB, Stern HS, Dunson DB, Vehtari A, Rubin DB. Bayesian data analysis. Boca Raton: CRC Press; 2013.

Gustafson P. Bayesian inference for partially identified models: exploring the limits of limited data, vol. 140. Boca Raton: CRC Press; 2015.

Hartman E, Grieve R, Ramsahai R, Sekhon JS. From SATE to PATT: combining experimental with observational studies to estimate population treatment effects. J R Stat Soc Ser A (Stat Soc). 2015;10:1111.

Huitfeldt A. Effect heterogeneity and external validity in medicine; 2019. https://www.lesswrong.com/posts/wwbrvumMWhDfeo652/.

Huitfeldt A, Goldstein A, Swanson SA. The choice of effect measure for binary outcomes: introducing counterfactual outcome state transition parameters. Epidemiol Methods. 2018;7(1):20160014.

Huitfeldt A, Swanson SA, Stensrud MJ, Suzuki E. Effect heterogeneity and variable selection for standardizing causal effects to a target population. Eur J Epidemiol. 2019;34:1119–29.

Lu Y, Scharfstein DO, Brooks MM, Quach K, Kennedy EH. Causal inference for comprehensive cohort studies; 2019. arXiv:1910.03531.

Mueller S, Pearl J. Which patients are in greater need: a counterfactual analysis with reflections on covid-19. In: Causal analysis in theory and practice; 2020. https://ucla.in/39Ey8sU.

Muhilal PD, Idjradinata YR, Muherdiyantiningsih KD. Vitamin a-fortified monosodium glutamate and health, growth, and survival of children: a controlled field trial. Am J Clin Nutr. 1988;48(5):1271–6.

Pearl J. Causal diagrams for empirical research. Biometrika. 1995;82(4):669–88.

Pearl J. Probabilities of causation: three counterfactual interpretations and their identification. Synthese. 1999;121(1–2):93–149.

Pearl J. Causality. Cambridge: Cambridge University Press; 2009.

Pearl J. Causes of effects and effects of causes. Sociol Methods Res. 2015;44(1):149–64.

Pearl J. Sufficient causes: on oxygen, matches, and fires. J Causal Inference. 2019;7(2):1–11.

Pearl J, Bareinboim E. External validity: from do-calculus to transportability across populations. Stat Sci. 2014;29(4):579–95.

Plummer M. rjags: Bayesian graphical models using MCMC. R package version. 2016;4(6).

Plummer M, et al. Jags: a program for analysis of Bayesian graphical models using Gibbs sampling. In: Proceedings of the 3rd international workshop on distributed statistical computing, vol. 124. Vienna, Austria; 2003. p. 1–10.

Richardson TS, Evans RJ, Robins JM. Transparent parameterizations of models for potential outcomes. Bayesian Stat. 2011;9:569–610.

Silva R, Evans R. Causal inference through a witness protection program. J Mach Learn Res. 2016;17(1):1949–2001.

Sommer A, Djunaedi E, Loeden A, Tarwotjo I, West K, Tilden JR, Mele L, Group AS, et al. Impact of vitamin a supplementation on childhood mortality: a randomised controlled community trial. Lancet. 1986;327(8491):1169–73.

Tian J, Pearl J. Probabilities of causation: bounds and identification. Ann Math Artif Intell. 2000;28(1–4):287–313.

Tikka S, Hyttinen A, Karvanen J. Identifying causal effects via context-specific independence relations. In: Advances in neural information processing systems; 2019. p. 2800–10.

West KP Jr, Katz J, LeClerq SC, Pradhan E, Tielsch JM, Sommer A, Pokhrel R, Khatry S, Shrestha S, Pandey M. Efficacy of vitamin a in reducing preschool child mortality in Nepal. Lancet. 1991;338(8759):67–71.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

We thank Anders Huitfeldt, Ricardo Silva, and anonymous reviewers for valuable comments and feedback. This research was supported in parts by grants from Defense Advanced Research Projects Agency [#W911NF-16-057], National Science Foundation [#IIS-1302448, #IIS-1527490, and #IIS-1704932], and Office of Naval Research [#N00014-17-S-B001].

Electronic supplementary material

Below is the link to the electronic supplementary material.

Appendix

Appendix

An example with continuous treatment

Here we provide a simple example in which, although the treatment variable is continuous, the relevant dependencies among potential outcomes are still amenable to graphical representation. Suppose we have the same selection diagram as in Fig. 2b, but now let X, B, and H all be continuous variables. Next, consider the following functional specification for the structural equation of Y,

where \(I(\cdot )\) denotes the indicator function. Now note from Equation 4 we can derive the potential outcomes \(Y_0 = I(H>0)\) for \(x =0\), and, \(Y_x = I(H>0) \vee I(xB>0) = Y_0 \vee I(xB>0)\), for \(x \ne 0\). We can thus draw the same modified selection diagram as in Fig. 3, but now replacing \(Y_1\) with \(Y_x\), leading to the conclusion that \(Y_{x}\perp \!\!\!\!\perp S \mid Y_{0}\), for all \(x \ne 0\).

Proofs

Bounds with a single source population

Here we show how to obtain the bounds of Theorem 1. To simplify notation, let \(P_{ij}:=P(Y_{i} = j)\), \(P^*_{ij}:= P^*(Y_{i} = j)\), \({\text {PS}}_{10} := P^*(Y_1 = 0 |Y_0 = 1) = P(Y_1 = 0|Y_0 =1)\) and \({\text {PS}}_{01} = P^*(Y_1 = 1|Y_0 = 0)= P(Y_1 = 1|Y_0 = 0)\). The target function to be optimized is \(P^*_{11}\), which can be written as,

Our goal is to pick \({\text {PS}}_{10}\) and \({\text {PS}}_{01}\) such that it maximizes (or minimizes) Eq. 5 subject to the following constraints: (i) \({\text {PS}}_{10}\) and \({\text {PS}}_{01}\) need to be between zero and one (since \({\text {PS}}_{10}\) and \({\text {PS}}_{01}\) need to be valid probabilities); and, (ii) \({\text {PS}}_{10}\) and \({\text {PS}}_{01}\) must conform to the observed results of the trial in the source domain, that is, \(P_{11} = (1-{\text {PS}}_{10})P_{01} + {\text {PS}}_{01}(1-P_{01}) \). Thus, our optimization problem is,

To simplify the problem, we can use the equality constraint \( P_{11} = (1-{\text {PS}}_{10})P_{01} + {\text {PS}}_{01}(1-P_{01}) \) to eliminate one of the variables. For instance, writing \({\text {PS}}_{10}\) in terms of \(PS_{01}\) gives us,

Which results in a new target function,

where \(RR = \frac{P_{11}}{P_{01}}\) is the causal risk-ratio in the trial of the source domain \(\Pi \). Since \(0 \le (1-{\text {PS}}_{10}) \le 1\), the substitution also results in additional constraints on \({\text {PS}}_{01}\),

Thus, define the lower and upper bounds on \({\text {PS}}_{01}\) as

Our new maximization problem can be written as,

Since the target function is linear, the maximum occurs at the extreme points of \({\text {PS}}_{01}\). The same reasoning holds for the minimization problem. Thus, we have that,

where

and

Informativeness of the bounds

We now derive the width of the bounds for \(P^*_{11}\) for the case when the bounds for \({\text {PS}}_{01}\) do not reach 0 nor 1 (this will happen when both \(P_{11} > P_{01}\) and \(P_{11} < 1 - P_{01}\)). Define the width W of the bounds as the difference between the upper and lower bound of \(P^*_{11}\), that is,

Expanding the terms we obtain,

Thus, when the bounds for \({\text {PS}}_{01}\) are “interior,” the informativeness of the bounds depend only on \(P_{01}\) and \(P^*_{01}\). Moreover, even if the bounds for \({\text {PS}}_{01}\) are “wide,” the bounds for \(P^*_{11}\) may be “narrow,” provided the baseline risks of the source and target population are close enough.

Identification with multiple source domains

We now show how to obtain the identification results of Theorems 2 and 3. Consider two source populations \(\Pi ^a\) and \(\Pi ^b\). Again, to simplify notation, let \(P^a_{ij}:=P^a(Y_i = j)\), \(P^b_{ij}:=P^a(Y_i = j)\), \({\text {PS}}_{10}:= P^a(Y_1 = 0 |Y_0 = 1) = P^b(Y_1 = 0 |Y_0 = 1) = P^*(Y_1 = 0|Y_0 =1)\) and \({\text {PS}}_{01}:= P^a(Y_1 = 1|Y_0 = 0)= P^b(Y_1 = 1|Y_0 = 0) = P^*(Y_1 = 1|Y_0 = 0)\).

First note that \({\text {PS}}_{10}\) and \({\text {PS}}_{01}\) are identified from the experimental data in \(\Pi ^a\) and \(\Pi ^b\). Using the law of total probability for \(P^a_{11}\) and \(P^b_{11}\) write,

We thus have a system of two equations and two unknowns,

Yielding the solution,

Which amounts to:

All values of the RHS can be computed from the experimental data of \(\Pi ^a\) and \(\Pi ^b\). Note that, since \({\text {PS}}_{10}\) and \({\text {PS}}_{01}\) must be between 0 and 1, not all solutions are valid. Therefore, two domains already entail some testable implications—if either \({\text {PS}}_{10}\) and \({\text {PS}}_{01}\) are not valid probabilities, this means that the assumption that the probabilities of sufficiency are invariant across domains is false. If we add a third or more source domains, it is easy to see that we will have three or more equations but still only two unknowns, and the system is thus over-identified.

Once in possession of \({\text {PS}}_{10}\) and \({\text {PS}}_{01}\), we can transport the causal effect to the target population \(\Pi ^*\) by appealing again to the law of total probability,

Finally, we note that all probabilities of causation, as discussed in [26], are also identifiable in this setting. First, consider the probability of necessity and sufficiency, \(\text {PNS} = P(Y_1 = 1, Y_0 = 0)\) for \(\Pi ^a\). Using the chain rule, PNS can be written as,

Note \({\text {PS}}_{01}\) was already identified, and \(P^a(Y_0 = 0)\) is given by the trial data in \(\Pi ^a\), thus rendering \(\text {PNS}^a\) identifiable. Similar reasoning holds for \(\Pi ^b\).

For the probability of necessity, define \({\text {PN}}_{01}:= P(Y_0 = 0 \mid Y_1 = 1)\). Due to the randomization of X, \({\text {PN}}_{01}\) coincides with Tian and Pear’s probability of necessity during the trial (not the observational PN), by the same argument we provide for PS in the main text. The final step is to note that,

The numerator is simply the PNS, which we have already identified, and the denominator is given by the trial data in \(\Pi ^a\). Again, analogous argument can be given for \(\Pi ^b\).

Modeling functional constraints

To illustrate the usefulness of explicitly modeling functional constraints in a structural framework, we apply the same modeling strategy of the paper in an example described in Huitfeldt et al. [10, p. 11]:

Consider a team of investigators who are interested in the effect of antibiotic treatment on mortality in patients with a specific bacterial infection (...) the investigators believe that the response to this antibiotic is completely determined by an unmeasured bacterial gene, such that only those who are infected with a bacterial strain with this gene respond to treatment. The prevalence of this bacterial gene is equal between populations, because the populations share the same bacterial ecosystem (...) if the investigators further believe that the gene for susceptibility reduces the mortality in the presence of antibiotics, but has no effect in the absence of antibiotics, they will conclude that G may be equal between populations.

Here the conclusion that G may be equal between populations is equivalent to claiming \(Y_1 \perp \!\!\!\!\perp S \mid Y_0\). But is the description above sufficient for substantiating this claim? Figure 9 shows two models compatible with the description, yet leading to two opposite conclusions.

Two selection diagrams compatible with the verbal description of Huitfeldt et al. [10, page 11]. Yet, model a implies \(Y_1 \perp \!\!\!\!\perp S \mid Y_0\), and model b implies the opposite; conditioning on \(Y_0\) opens the colliding path \(S \rightarrow U \leftrightarrow BG \rightarrow Y_1\)

Let the variable A represent the binary treatment (antibiotic), Y represent the binary outcome (mortality), BG stand for the presence or absence of the “bacterial gene” and finally let U be a binary variable that summarizes all other factors that may cause death (\(Y=1\)). The description of the problem suggests the functional specification,

showing the antibiotics and the bacterial gene both helping to reduce mortality (\(\lnot \) denotes the logical “not”). Equation 28 entails the potential outcomes \(Y_{0} = U\) and \(Y_{1} = U \wedge (\lnot BG) = Y_{0 }\wedge (\lnot BG)\), which are explicitly shown in both diagrams as dictated by the functional specification. Moreover, in both models the prevalence of the bacterial gene BG is equal between populations (i.e., \(BG \perp \!\!\!\!\perp S\)). In the model of Fig. 9a, as in our previous analysis, we indeed conclude that \(Y_1 \perp \!\!\!\!\perp S \mid Y_0\), and that \(P^*(Y_{1})\) is transportable. However, in the model of Fig. 9b, there is an unmeasured confounder between BG and U.Footnote 15 Conditioning on \(Y_0\) (a child of a collider) opens the colliding path \(S \rightarrow U \leftrightarrow BG \rightarrow Y_1\), thus not licensing the independence \(Y_1 \perp \!\!\!\!\perp S \mid Y_0\).

Bayesian estimation

Multiple source domains

In this section we show how to extend the probabilistic graphical model of the section “A Bayesian approach to estimation” to two or more sources. Let us start with two source populations \(\Pi ^a\) and \(\Pi ^b\), and one target domain \(\Pi ^*\). The observed data is now \({\mathcal {D}}\,=\,\{n^a_0, n^a_1, n^*_0, n^b_0, n^b_1\}\), all with binomial distributions:

We also have the following deterministic relationships for \(P^a_{11}\), \(P^b_{11}\) and \(P^*_{11}\):

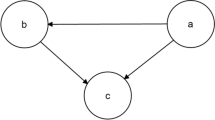

The probabilistic graphical model for this case is shown in Fig. 10.

Probabilistic graphical model with two source populations \(\Pi ^a\), \(\Pi ^b\) and one target population \(\Pi ^*\). Gray nodes (\(n^a_0\), \(n^a_1\), \(n_0^*\), \(n^b_0\), \(n^b_1\)) denote observed variables. White notes denote latent parameters (\(P^a_{01}\), \(P^a_{11}\), \({\text {PS}}_{10}\), \({\text {PS}}_{01}\), \(P^*_{11}\), \(P^*_{01}\), \(P^b_{11}\), \(P^b_{01}\)). Note that \(P^a_{11}\), \(P^*_{11}\) and \(P^b_{11}\) share the parameters \({\text {PS}}_{10}\) and \({\text {PS}}_{01}\), which are invariant across populations

Thus, one needs to place priors on the parent nodes only, and then perform inference as before. The extension to more than two populations follows the same logic. It is worth noting that, as we have seen in the section “Building the structural model” with two or more source populations the model entails testable implications. Therefore, we advise researchers to check whether the data is compatible with the model [6].

Finally, similarly to the discussion in the section “A Bayesian approach to estimation” a simpler modeling alternative here is to place priors only on the parameters of the observed data directly, and make inferences using the posterior of the functionals of the observed data that identify the target quantities.

Replication code

R code to replicate the estimation examples using JAGS [22] and the package rjags [21] is provided in the online supplemental material.

Rights and permissions

About this article

Cite this article

Cinelli, C., Pearl, J. Generalizing experimental results by leveraging knowledge of mechanisms. Eur J Epidemiol 36, 149–164 (2021). https://doi.org/10.1007/s10654-020-00687-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10654-020-00687-4