Abstract

Previous research has shown that three comparison types are involved in the formation of students’ academic self-concepts: social comparisons (where students compare their achievement with their classmates), dimensional comparisons (where students compare their achievement in different subjects), and temporal comparisons (where students compare their achievement across time). The 2I/E model provides a framework to describe the joint effects of these comparisons. To date, it has been tested in 12 empirical studies. However, integration of these findings is lacking, especially in terms of yielding reliable estimates of the strength of social, dimensional, and temporal comparison effects. We therefore conducted an individual participant data (IPD) meta-analysis, in which we reanalyzed the data used in all prior 2I/E model studies (N = 45,248). This IPD meta-analysis provided strong support for the 2I/E model: There were moderate social comparison effects, small to moderate dimensional comparison effects, and small temporal comparison effects on students’ math and verbal self-concepts. Moreover, several moderating variables affected the strength of these effects. In particular, the social and temporal comparison effects were stronger in studies using grades instead of test scores as achievement indicators. Older students showed weaker social comparison effects but stronger dimensional comparison effects compared to younger students. Social comparison effects were also stronger in academic track schools compared to nonacademic track schools. Gender and migration background had only very small impacts on the strength of single comparison effects. In sum, this IPD meta-analysis significantly enhances our knowledge of comparison making in the process of students’ self-concept formation.


Subject-specific academic self-concepts can be defined as students’ perceptions of their abilities in different school subjects. They are significant predictors of important educational outcomes, such as performance (e.g., Marsh & Craven, 2006), motivation (e.g., Guay et al., 2010), test anxiety (e.g., Arens et al., 2017), and course choice (e.g., Jansen et al., 2021). As such, they number among the most important constructs in educational psychology.

Among the multiple factors involved in students’ academic self-concept formation, three types of comparison have been shown to be particularly relevant self-concept predictors (e.g., Wigfield et al., 2020): social comparisons (where students compare their achievement in a subject with their classmates’ achievements in the same subject), dimensional comparisons (where students compare their achievement in one subject with their achievement in another subject), and temporal comparisons (where students compare their achievement in a subject with their prior achievement in that subject). Comparisons with lower achievements (of others, in other subjects, or at earlier times) usually increase students’ self-concepts, whereas comparisons with higher achievements usually decrease them (e.g., Wolff et al., 2018b).

Social, dimensional, and temporal comparisons are each embedded in a specific comparison theory: social comparison theory (Festinger, 1954), dimensional comparison theory (Möller & Marsh, 2013), and temporal comparison theory (Albert, 1977), respectively. Although the role of each of these three comparisons in self-concept development has been studied for decades (e.g., Skaalvik & Skaalvik, 2004), researchers have only recently begun to focus on the simultaneous effects of social, dimensional, and temporal comparisons. This line of research was advanced in particular by the development of the 2I/E model (Wolff et al., 2019a, b), which integrates all three comparisons and allows the examination of their joint effects on students’ academic self-concepts. To date, the 2I/E model has been tested in 12 empirical studies (Wolff et al., 2018b, 2019a, b, 2020, 2021a). However, these studies differed in sample characteristics (e.g., age), achievement operationalization (grades vs. test scores), and temporal comparison periods (ranging from 0.5 to 2 years). Moreover, the studies considered different numbers of subjects, and some included additional covariates. These differences make it difficult to compare results across studies and to draw general conclusions about the strength of social, dimensional, and temporal comparison effects.

In the present research, we therefore bring together the 12 studies that have tested the 2I/E model to yield reliable estimates of the strength of social, dimensional, and temporal comparison effects on students’ self-concepts. Furthermore, we examine several factors that may moderate these effects. For this purpose, we conducted an individual participant data (IPD) meta-analysis in which we reanalyzed the data used in all prior 2I/E model studies. This approach, considered “the gold standard of meta-analyses” (Kaufmann et al., 2016, p. 157), has at least three central advantages over classic aggregated person data (APD) meta-analyses, which synthesize studies using the summary statistics reported in the respective publications (e.g., Cooper & Patall, 2009). First, the IPD approach allowed us to recalculate the 2I/E models from prior studies with the same variables and the same statistical analyses, so the results could not be biased by methodological differences between the original 2I/E model studies. At the same time, we were able to test the 2I/E model on a considerably larger sample, and thus with substantially higher power, than the original studies. Second, the IPD approach allowed us to examine not only moderating variables that vary between studies (e.g., achievement operationalization), but also student characteristics that usually vary little between studies. For example, with the IPD approach, we were able to investigate whether and how the strength of comparison effects differs between girls and boys. In a classic APD meta-analysis, by contrast, one could only examine whether and how the strength of comparison effects depends on the gender ratio of the single 2I/E model studies (which hardly differs from study to study). Finally, the IPD approach avoids aggregation bias. For example, the ecological fallacy, which arises when relations between variables at the group level differ from the relations between the same variables at the individual level (Robinson, 1950), can lead to misleading conclusions in APD meta-analyses. Such problems do not arise in an IPD meta-analysis because the raw data are analyzed.
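The aggregation problem described above can be illustrated with a small simulation. The sketch below is a hypothetical example and not part of the reanalysis: it generates data (all generating values are assumptions chosen for illustration) in which the individual-level slope within each group is negative while the slope computed from group means is positive, so an analysis of aggregated data alone would suggest the opposite relation.

```python
import numpy as np


def simulate_groups(n_groups=50, n_per_group=200, seed=1):
    """Hypothetical data: within each group, y falls as x rises, but groups
    with a higher mean x also have a higher mean y."""
    rng = np.random.default_rng(seed)
    centers = rng.normal(0.0, 2.0, size=n_groups)
    xs, ys, ids = [], [], []
    for k, mu in enumerate(centers):
        x = mu + rng.normal(0.0, 1.0, size=n_per_group)
        # Group level: mean y rises by 1.5 per unit of mu.
        # Individual level: y falls by 0.5 per unit deviation from mu.
        y = 1.5 * mu - 0.5 * (x - mu) + rng.normal(0.0, 0.5, size=n_per_group)
        xs.append(x)
        ys.append(y)
        ids.append(np.full(n_per_group, k))
    return np.concatenate(xs), np.concatenate(ys), np.concatenate(ids)


def slope(x, y):
    """OLS slope of y on x (np.polyfit returns the highest degree first)."""
    return np.polyfit(x, y, 1)[0]


x, y, group = simulate_groups()
labels = np.unique(group)  # 0 .. n_groups - 1, in order
mean_x = np.array([x[group == k].mean() for k in labels])
mean_y = np.array([y[group == k].mean() for k in labels])

between = slope(mean_x, mean_y)                       # relation among group means
within = slope(x - mean_x[group], y - mean_y[group])  # individual-level relation
print(f"between-group slope: {between:.2f}, within-group slope: {within:.2f}")
```

Centering on group means isolates the within-group relation, whereas regressing group means on each other yields only the between-group relation, which is typically all that summary statistics can reveal.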

To sum up, our meta-analysis goes beyond prior meta-analyses of comparison effects on students’ self-concepts in two respects: it is the first to consider the joint effects of social, dimensional, and temporal comparisons, and it offers methodological advantages. In particular, the IPD approach allows us to examine the moderating influences of variables that could not be considered in prior APD meta-analyses, such as Möller et al.’s (2020) recent meta-analysis of the joint effects of social and dimensional comparisons on students’ academic self-concepts (see below). Accordingly, our research substantially enhances our knowledge not only of the strength of social, dimensional, and temporal comparison effects, but also of factors that may affect the strength of these effects in the process of students’ self-concept formation.

The 2I/E Model: An Extension of the Classic I/E Model

As noted in the Introduction, the 2I/E model describes the simultaneous effects of social, dimensional, and temporal comparisons on students’ math and verbal self-concepts. Wolff et al. (2019a, b) developed it from Marsh’s (1986) classic internal/external frame of reference (I/E) model, which considers social comparisons (an external comparison) and dimensional comparisons (an internal comparison) in self-concept formation, by integrating temporal comparisons into this framework as a second internal comparison. The classic I/E model and the 2I/E model share the core assumption that comparison effects can be detected by regressing students’ self-concepts on their achievements. However, the approach of the 2I/E model is more complex. In the following sections, we therefore briefly introduce the classic I/E model before describing the 2I/E model in detail. Subsequently, we make some comments on the I/E model and the 2I/E model that are of particular relevance to this meta-analysis.

The Classic I/E Model

Figure 1 shows the classic I/E model. Originally, Marsh (1986) developed it to explain why the correlation between students’ math and verbal self-concepts is usually close to zero, although students’ math and verbal achievements show strong positive correlations. Marsh argued that this seemingly paradoxical finding resulted from the joint operation of social and dimensional comparisons. On the one hand, students compared their achievement in one subject with their classmates’ achievements in the same subject. These social comparisons would lead to positive effects of achievements on self-concepts within subjects. On the other hand, students compared their math and verbal achievements with each other. In the I/E model, these dimensional comparisons would result in negative effects of achievements on self-concepts between subjects.

Fig. 1 The classic I/E model. The social comparison effects are assumed to be positive and stronger than the dimensional comparison effects, which are assumed to be negative. Note, however, that the effects of achievements on self-concepts within subjects result not only from social comparisons but also, in particular, from dimensional comparisons (see section “Additional Comments on the I/E Model and 2I/E Model”)

Empirically, researchers have examined the I/E model assumptions in more than 100 studies to date. Given this high number of studies, Möller et al. (2020) recently conducted a meta-analysis, including all studies testing the I/E model published up to April 2018 (see also Möller et al., 2009, for an earlier meta-analysis). Overall, these authors found strong support for the I/E model. Whereas the effects of students’ math achievement on their math self-concept (β = .55) and of their verbal achievement on their verbal self-concept (β = .46) were strongly positive, the effects of students’ verbal achievement on their math self-concept (β = −.19) and of their math achievement on their verbal self-concept (β = −.16) were slightly negative. In addition, experimental studies further corroborated the assumption of the I/E model that the effects of achievements on self-concepts represent effects of social and dimensional comparisons (e.g., Möller & Köller, 2001; Strickhouser & Zell, 2015; Wolff & Möller, 2021).
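To make the I/E paradox concrete, the following sketch computes the correlation between math and verbal self-concepts implied by a standardized path model with the average coefficients reported by Möller et al. (2020). It assumes standardized variables and uncorrelated residuals; the achievement correlations are hypothetical inputs, not estimates from this meta-analysis.

```python
def implied_sc_correlation(w_m, b_m, w_v, b_v, r_ach):
    """Correlation between math and verbal self-concepts implied by a
    standardized I/E path model, assuming uncorrelated residuals.

    w_m: math achievement -> math self-concept (within subjects)
    b_m: verbal achievement -> math self-concept (between subjects)
    w_v: verbal achievement -> verbal self-concept (within subjects)
    b_v: math achievement -> verbal self-concept (between subjects)
    r_ach: correlation between math and verbal achievement
    """
    # MSC = w_m*MACH + b_m*VACH + e1 and VSC = b_v*MACH + w_v*VACH + e2.
    # With unit achievement variances, Cov(MSC, VSC) has four terms:
    return (w_m * b_v            # Cov(MACH, MACH) = 1
            + w_m * w_v * r_ach  # Cov(MACH, VACH) = r
            + b_m * b_v * r_ach  # Cov(VACH, MACH) = r
            + b_m * w_v)         # Cov(VACH, VACH) = 1


# Average paths from Möller et al. (2020); the r values are hypothetical inputs.
for r in (0.4, 0.6, 0.8):
    r_sc = implied_sc_correlation(.55, -.19, .46, -.16, r)
    print(f"r_ach = {r:.1f} -> implied r_sc = {r_sc:+.3f}")
```

Under these assumptions, an achievement correlation of .6 implies a self-concept correlation of about −.005, that is, essentially zero: the positive within-subject paths and the negative between-subject paths cancel out, which is the pattern the I/E model was built to explain.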

The 2I/E Model

Although the assumptions of the classic I/E model have found empirical support in numerous studies, these studies share the limitation that they have usually neglected temporal comparisons as a third comparison type that may affect students’ self-concepts. On the basis of empirical findings from experimental, field, and vignette studies that showed an influence of temporal comparisons on students’ self-concepts beyond the influence of social and dimensional comparisons (Müller-Kalthoff et al., 2017; Wolff et al., 2018b), Wolff et al. (2019a, b) therefore extended the I/E model into the 2I/E model. Figure 2 presents this extension. As shown there, the 2I/E model describes the effects of students’ math and verbal achievements on their math and verbal self-concepts in a more differentiated fashion than the classic I/E model: it considers achievement information from different points in time and separates students’ achievement levels from their achievement changes. Students’ achievement levels are defined as their average achievements during a specific period. Students’ achievement changes refer to changes in their achievements within this period. As in the classic I/E model, the 2I/E model predicts positive effects of students’ achievement levels on their self-concepts within subjects, which should result from social and dimensional comparisons, as well as negative effects of students’ achievement levels on their self-concepts between subjects, which should result from dimensional comparisons. Furthermore, the 2I/E model predicts positive effects of students’ achievement changes on their self-concepts within subjects, which should represent effects of temporal comparisons (see Footnote 1).
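A minimal sketch of the level/change decomposition follows, assuming exactly two measurement points and a scale on which higher values indicate better achievement. The function and class names are hypothetical, and the operationalization in the individual 2I/E model studies may differ in detail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LevelChange:
    level: float   # average achievement over the period
    change: float  # later achievement minus earlier achievement


def level_and_change(earlier: float, later: float) -> LevelChange:
    """Split two achievement scores into a level and a change component.

    Assumes two measurement points and a scale on which higher values mean
    better achievement (a reversed scale would need recoding first)."""
    return LevelChange(level=(earlier + later) / 2, change=later - earlier)


print(level_and_change(3.0, 4.0))  # LevelChange(level=3.5, change=1.0)
```

Because the two original scores can be recovered exactly (earlier = level − change/2, later = level + change/2), the decomposition loses no information; it only re-expresses the achievement data so that level and change can enter the model as separate predictors.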

Fig. 2 The 2I/E model. The social comparison effects are assumed to be positive and stronger than the dimensional comparison effects. The dimensional comparison effects are assumed to be negative and stronger than the temporal comparison effects. The temporal comparison effects are assumed to be positive. Note, however, that the effects of achievement levels on self-concepts within subjects result not only from social comparisons but also, in particular, from dimensional comparisons (see section “Additional Comments on the I/E Model and 2I/E Model”)

The 2I/E model makes predictions not only about the direction of the comparison effects, but also concerning their strength: According to the model, the social comparison effects should be stronger than the dimensional comparison effects, and the dimensional comparison effects should be stronger than the temporal comparison effects. On the one hand, these predictions are based on empirical findings on the joint effects of social, dimensional, and temporal comparisons from studies using other methodological approaches (e.g., Wolff et al., 2018b). On the other hand, they stem from theoretical deliberations concerning the significance of social, dimensional, and temporal comparisons in students’ everyday lives.

Specifically, Wolff et al. (2019b) argued that social comparisons should have the strongest impact on students’ self-concepts, because this comparison type might be the most important one in our society (see also Van Yperen & Leander, 2014). For example, if we ask how well a student performs in a school subject, we usually want to know how capable this student is in relation to his or her classmates, rather than in relation to his or her achievement in other subjects or in the past. If we want to get a job or a college place, it is crucial that we outperform our competitors, but less so that we perform better in the relevant domain than in another domain or than we did before. In line with this, teachers often assign grades according to students’ performance in relation to their classmates, but usually not in relation to their grades in other subjects or to their prior grades. However, even if social comparisons were irrelevant to academic or vocational success (e.g., because teachers assigned grades on an exclusively criterion-based basis), social comparison information would still be the most salient comparison information in many contexts, and thus might affect students’ self-concepts more strongly than dimensional or temporal comparison information would. In particular, in the classroom, students are continuously confronted with the performance of others. It is likely that this exposure prompts social comparisons and, in the words of Festinger (1954, p. 117), activates students’ “drive to evaluate [their] abilities” in comparison to others.

Following social comparisons, dimensional comparisons between math and verbal achievements should have the strongest effects on students’ self-concepts, according to the 2I/E model. One reason for this might be the fact that dimensional comparison information should be more salient in the classroom than temporal comparison information. Every day, students receive subject-specific achievement feedback in different subjects. Moreover, their subject-specific achievements are transparently juxtaposed on their report cards. In contrast, developments in students’ achievements are still addressed relatively rarely in everyday school life (Heckhausen, 1991; Rheinberg & Krug, 2005).

Another reason for the stronger effects of dimensional comparisons relative to temporal comparisons may be that dimensional comparisons are of particular importance for students’ decisions about educational and vocational specialization (e.g., Nagy et al., 2006; von Keyserlingk et al., 2021). Dimensional comparisons provide students with valuable information about their strengths and weaknesses in different subjects. Consequently, they might be highly relevant when students have to decide in which areas to invest their limited resources (Möller & Marsh, 2013; Wolff et al., 2018a). Nevertheless, temporal comparisons may also provide important information for specialization decisions, as they can indicate to students that they may have overestimated or underestimated their abilities in the past, thus alerting them to possible risks of failure or chances of success in the future. With this in mind, it is plausible that students also consider their achievement changes when evaluating their abilities. Still, it is likely that achievement changes do not lead to drastic changes in students’ self-concepts, given Albert’s (1977) postulate of a human drive to maintain a consistent self-perception over time. This drive implies a desire for rather stable self-concepts, which corresponds to empirical findings illustrating the stability of academic self-concepts (e.g., Wigfield et al., 1997).

As outlined in the Introduction, the 2I/E model has been tested in 12 empirical studies that considered students’ achievements and self-concepts in the math and verbal domains (Wolff et al., 2018b, 2019a, b, 2020, 2021a). Overall, these studies provided strong support for the model’s assumptions. However, the size of the comparison effects varied considerably between studies. Specifically, the effects of achievement levels on self-concepts within subjects ranged from β = .29 to β = .94, the effects of achievement levels on self-concepts between subjects ranged from β = −.46 to β = −.01, and the effects of achievement changes on self-concepts within subjects ranged from β = .04 to β = .29. In part, these differences can be explained by the inclusion of additional covariates in some studies. For example, some studies controlled for prior self-concepts in the 2I/E model, which might have increased the temporal comparison effects but decreased the social and dimensional comparison effects (cf. Wolff et al., 2020). Still, other study-specific characteristics (especially achievement operationalization and temporal comparison periods) and sample-specific characteristics (e.g., age, track) may also have influenced the strength of the comparison effects. Hence, there is a need for a comprehensive meta-analysis that integrates the existing 2I/E model studies, yields reliable estimates of the strength of the comparison effects, and explains the differences in these effects across studies. Given the differences in model specification between the existing 2I/E model studies, this meta-analysis should reanalyze their data using a unified statistical approach.

Additional Comments on the I/E Model and 2I/E Model

In this section, we make a series of important comments that apply to both the I/E model and the 2I/E model and that should be kept in mind in considering this meta-analysis.

First, we emphasize again that explaining the effects in the I/E model and the 2I/E model as the result of comparison processes is an interpretation. While these models examine the relations between achievement and self-concept variables, students are not directly asked how they compare their achievements. Nevertheless, the interpretation of comparison effects in the I/E model and 2I/E model rests on extensive theoretical considerations of how comparison effects are represented by achievement variables in regression models. Moreover, this interpretation is supported by empirical findings from studies that, using other methodological approaches, have also found evidence for the joint effects of social and dimensional comparisons, or of social, dimensional, and temporal comparisons, in students’ self-concept formation. Specifically, these were experimental studies in which students received manipulated achievement feedback (e.g., Möller & Köller, 2001; Wolff et al., 2018b) and field studies in which students were directly asked to compare their achievements (e.g., Müller-Kalthoff et al., 2017; Wolff et al., 2018b). In this article, therefore, we follow the interpretation that the effects of students’ achievement levels and achievement changes on their self-concepts in the 2I/E model result from comparisons, while acknowledging that other factors may also contribute to the emergence of these effects.

Second, we note that the term “effect”, used to describe the paths in the I/E model and 2I/E model, is potentially misleading. Although experimental studies provide evidence for causal influences of social, dimensional, and temporal comparisons on self-concepts (Müller-Kalthoff et al., 2017; Wolff et al., 2018b), studies testing the I/E model and 2I/E model (even with longitudinal data) do not allow such causal conclusions. However, since Marsh (1986), the term effect has been used to describe the relations between achievements and self-concepts in the I/E model, and it runs through the literature on the I/E model and 2I/E model. Moreover, in the statistical sense, these relations can be called effects, as they represent directed relations. With this in mind, we retain the established effect label in the present meta-analysis, while emphasizing that the regression analytic approach of the 2I/E model does not allow causal conclusions.

Third, we point out that neither the I/E model nor the 2I/E model claims to represent all determinants involved in the formation of students’ academic self-concepts. Rather, as is usually the case with scientific models, the I/E model and 2I/E model are simplified representations of reality. Their goal is to describe the simultaneous operation of multiple comparisons in the formation of subject-specific self-concepts. Nevertheless, a number of other factors play a role in students’ self-concept formation, such as gender stereotypes (e.g., Nosek et al., 2002), significant others (e.g., Fredricks & Eccles, 2002), or attribution strategies (e.g., Weiner, 1986). More complex models, such as the expectancy-value model (e.g., Wigfield et al., 2020), combine many of these factors. However, unlike the I/E model and the 2I/E model, such models usually cannot be fully tested empirically in a single study, due to their high complexity.

Fourth, it is important to note that the classic I/E model and 2I/E model describe the effects of social, dimensional, and temporal comparisons only in relation to one particular comparison standard, although students may conduct several comparisons of the same type with different comparison standards when they form their self-concepts. Specifically, dimensional comparisons in the classic I/E model and 2I/E model only refer to comparisons between math and verbal achievements. This specification is reasonable insofar as studies testing the I/E model and 2I/E model with different subjects have shown that dimensional comparisons between math and verbal achievements usually show the strongest effects on students’ academic self-concepts (see Möller et al., 2020, for a meta-analysis). Nevertheless, these studies have also indicated that other dimensional comparisons (e.g., between achievements in two verbal subjects) are also involved in students’ self-concept formation.

Temporal comparisons in the 2I/E model refer to comparisons between achievement measures at the time points considered in the particular model. In previous studies, the selection of these time points mainly resulted from the availability of data. Still, it should be kept in mind that—similarly to dimensional comparisons—students are likely to compare their current achievements with several prior achievements when assessing their self-concepts.

Finally, social comparisons in the I/E model and 2I/E model refer to comparisons of students’ achievements with the achievements of all other students examined in the respective study. However, the specification of the social comparison effects in the I/E model and 2I/E model can be viewed somewhat critically, given that there are other models of academic self-concept formation in which social comparisons are specified in relation to more local reference groups. For example, in studies testing the big-fish-little-pond effect (BFLPE; e.g., Marsh et al., 2008), the mean achievement of a specific reference group (e.g., class or school) is included in the model as an additional self-concept predictor to examine the effects of social comparisons with that specific reference group (see also Guo et al., 2018, for a discussion of social comparison effects in I/E model vs. BFLPE studies). Moreover, it is worth noting that there are models of academic self-concept formation that have chosen a term other than social comparison effect to label the effects of achievements on self-concepts within subjects. For example, in the reciprocal effect model (Marsh & Craven, 2006), these effects are called skill-development effects (see also Calsyn & Kenny, 1977). Although researchers have used social comparisons to explain them (e.g., Möller et al., 2011), it is important to consider that these effects could also result from other cognitive processes, such as normative comparisons (“How good am I compared to a general standard?”). Nevertheless, in this article, we follow the tradition of the I/E model and interpret the effects of achievement levels on self-concepts within subjects in the 2I/E model as social comparison effects. We do this because we view social comparisons as the central mechanism for the emergence of these effects, given that normative standards are likely to be shaped by achievement distributions shown in social comparison groups.
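As a rough illustration of the BFLPE specification, the hypothetical simulation below regresses self-concept on individual achievement and on the observed mean achievement of each student’s class. The class structure and the generating coefficients (+0.6 for own achievement, −0.3 for class mean) are assumptions; BFLPE studies typically use multilevel models rather than the single-level least-squares fit used here for brevity.

```python
import numpy as np

rng = np.random.default_rng(7)
n_classes, n_students = 200, 25
cls = np.repeat(np.arange(n_classes), n_students)   # class index per student
class_level = rng.normal(0.0, 0.5, size=n_classes)  # between-class differences
ach = class_level[cls] + rng.normal(0.0, 1.0, size=cls.size)

# Reference-group predictor: observed mean achievement of each student's class
class_mean = np.bincount(cls, weights=ach) / np.bincount(cls)

# Hypothetical generating values: +0.6 for own achievement, -0.3 for class mean
sc = 0.6 * ach - 0.3 * class_mean[cls] + rng.normal(0.0, 0.8, size=cls.size)

X = np.column_stack([np.ones(cls.size), ach, class_mean[cls]])
coef, *_ = np.linalg.lstsq(X, sc, rcond=None)
print(dict(zip(["intercept", "achievement", "class_mean"], coef.round(2))))
```

In this specification, the negative class-mean coefficient is what would be read as a social comparison effect relative to the local reference group, whereas in the I/E model and 2I/E model the comparison standard is the achievement distribution of the whole sample.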

However, for the present meta-analysis, we make an important modification to the 2I/E model to ensure adequate interpretation of the social comparison effects: Instead of using achievement level in one subject to predict self-concept in the other subject, we include the difference between students’ math and verbal achievement levels as a self-concept predictor in the 2I/E model (see Fig. 3). This modification is necessary because otherwise, dimensional comparison effects (defined as the effects of the difference between students’ math and verbal achievement levels on their self-concepts) would manifest themselves both in the effects of achievement levels on self-concepts between subjects (as intended) and in the effects of achievement levels on self-concepts within subjects (which is not intended).

Fig. 3 The adapted 2I/E model tested in “The Present Research”. This adaptation of the 2I/E model uses the difference between students’ math and verbal achievement levels to specify the dimensional comparison effects. In contrast to the original 2I/E model, each effect of the achievement variables on the self-concept variables thus represents one specific comparison type. The social comparison effects are assumed to be stronger than the dimensional comparison effects, which are assumed to be stronger than the temporal comparison effects. Moreover, all comparison effects are assumed to be positive

Wolff (2021b) recently demonstrated this relationship for the I/E model, and his reasoning applies equally to the 2I/E model. Specifically, he argued that a psychologically sound operationalization of dimensional comparison effects should use the difference between students’ verbal and math achievements as a self-concept predictor (e.g., “How much worse/better am I in math compared to English?”). In contrast, the I/E model uses students’ absolute math and verbal achievements as predictors of their self-concepts. For example, the equation MSC = W × MACH + B × VACH (with MSC = math self-concept, MACH = math achievement, VACH = verbal achievement, W = I/E model effect within subjects, and B = I/E model effect between subjects) describes how, in the I/E model, math self-concept is regressed on math achievement and verbal achievement. This equation is equivalent to MSC = (W + B) × MACH + B × (VACH − MACH), which includes students’ achievement difference as a self-concept predictor and thus specifies the dimensional comparison effect adequately. In this equation, however, the social comparison effect (W + B) equals the sum of the (positive) effect of math achievement on math self-concept in the I/E model and the (negative) effect of verbal achievement on math self-concept in the I/E model, while the dimensional comparison effect (B) is the same in both equations. Thus, in the I/E model, the effects of achievements on self-concepts within subjects are not pure social comparison effects; because W = (W + B) − B, they represent the difference between the (positive) social comparison effects and the (negative) dimensional comparison effects.
Concerning Möller et al.’s (2020) meta-analysis of the I/E model, for example, this implies that the social comparison effects (i.e., the effects of achievements on self-concepts within subjects without controlling for achievement in the other subject) were only moderate: about β = .36 (= .55 − .19) in the math domain and β = .30 (= .46 − .16) in the verbal domain.
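The equivalence of the two equations can be checked numerically. The sketch below fits both parameterizations by ordinary least squares on simulated data (the generating values are hypothetical) and confirms that the coefficient on math achievement in the reparameterized model equals W + B while the coefficient on the difference equals B. The helper `to_comparison_effects` is a hypothetical name; applied to Möller et al.’s (2020) averages, it reproduces the values of .36 and .30 given in the text. With the difference coded as MACH − VACH, as in Fig. 3, the dimensional effect becomes −B and is therefore positive.

```python
import numpy as np


def to_comparison_effects(w, b):
    """Map I/E paths (within-subject W, between-subject B) onto the
    reparameterized equation MSC = (W + B)*MACH + B*(VACH - MACH).
    Values are rounded to two decimals for reporting."""
    return {"social": round(w + b, 2), "dimensional": round(b, 2)}


# Numerical check of the equivalence on simulated data (hypothetical values).
rng = np.random.default_rng(0)
n = 1_000
mach = rng.normal(size=n)
vach = 0.6 * mach + 0.8 * rng.normal(size=n)
msc = 0.55 * mach - 0.19 * vach + rng.normal(scale=0.8, size=n)

ones = np.ones(n)
w_hat, b_hat = np.linalg.lstsq(np.column_stack([ones, mach, vach]), msc, rcond=None)[0][1:]
s_hat, d_hat = np.linalg.lstsq(np.column_stack([ones, mach, vach - mach]), msc, rcond=None)[0][1:]

# Same fitted model, different coordinates: S = W + B and D = B.
assert np.isclose(s_hat, w_hat + b_hat) and np.isclose(d_hat, b_hat)

print(to_comparison_effects(.55, -.19))  # {'social': 0.36, 'dimensional': -0.19}
print(to_comparison_effects(.46, -.16))  # {'social': 0.3, 'dimensional': -0.16}
```

Because both design matrices span the same space, the fitted values are identical; only the meaning of the coefficients changes, which is why the reparameterization isolates one comparison type per path.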

As in the I/E model, the effects of achievement levels on self-concepts within subjects in the 2I/E model represent not pure social comparison effects (defined as effects of achievement levels on self-concepts within subjects without controlling for other variables) but the difference between (positive) social comparison effects and (negative) dimensional comparison effects. This insight, and the resulting modification of the 2I/E model (as shown in Fig. 3), is of great importance for the present meta-analysis, especially because we aim to investigate how different moderating variables influence the strength of social, dimensional, and temporal comparison effects. For this purpose, it is necessary to avoid confounding the influences of several comparison types in one model path (specifically, the influences of social and dimensional comparisons in the path from achievement levels to self-concepts within subjects), so that all comparison effects in the model can be interpreted clearly. As with the original specification, however, other factors may also contribute to the emergence of the comparison effects in this alternative specification, as discussed above.

The Present Research

In the present research, we reanalyzed the data of all 2I/E model studies published so far in a comprehensive IPD meta-analysis. By doing so, we aimed to generate deeper knowledge about the absolute and relative strengths of social, dimensional, and temporal comparison effects in the process of students’ academic self-concept formation. Moreover, we aimed to enhance our knowledge of the impact of several factors that may moderate the strength of these comparison effects. In contrast to prior 2I/E model studies, we included the difference between students’ math and verbal achievement levels as a self-concept predictor, so that each effect in the 2I/E model could be attributed to one specific comparison type. More specifically, we regressed students’ math self-concept on their math achievement level, the difference between their math and verbal achievement levels, and their math achievement change. Similarly, we regressed students’ verbal self-concept on their verbal achievement level, the difference between their verbal and math achievement levels, and their verbal achievement change (see Fig. 3). On the basis of the empirical findings of prior studies examining the joint effects of comparisons (especially those testing the 2I/E model) and the theoretical deliberations on the joint effects of social, dimensional, and temporal comparisons presented above, we expected to find moderate to strong positive social comparison effects (Hypothesis 1), small to moderate positive dimensional comparison effects (Hypothesis 2), and small positive temporal comparison effects (Hypothesis 3) in our 2I/E model based on the data of all prior 2I/E model studies (see Footnote 2). In addition, we made assumptions concerning the influence of particular moderating variables, which we considered in our meta-analysis.
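For reference, the adapted model can be written as a pair of regression equations. The symbols are our own hypothetical shorthand (MLev and VLev for math and verbal achievement levels, MChg and VChg for achievement changes, and β_S, β_D, β_T for social, dimensional, and temporal comparison effects); intercepts are omitted on the assumption of standardized variables. Expanding the original 2I/E equation MSC = W × MLev + B × VLev + T × MChg in the same way as the I/E derivation gives β_S = W + B, β_D = −B, and β_T = T.

```latex
\begin{aligned}
\mathrm{MSC} &= \beta_S^{M}\,\mathrm{MLev} + \beta_D^{M}\,(\mathrm{MLev} - \mathrm{VLev}) + \beta_T^{M}\,\mathrm{MChg} + \varepsilon_M \\
\mathrm{VSC} &= \beta_S^{V}\,\mathrm{VLev} + \beta_D^{V}\,(\mathrm{VLev} - \mathrm{MLev}) + \beta_T^{V}\,\mathrm{VChg} + \varepsilon_V \\
&\text{expected pattern in each domain: } \beta_S > \beta_D > \beta_T > 0
\end{aligned}
```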

Achievement Operationalization

In the existing 2I/E model studies, researchers have used either grades or scores from standardized tests to operationalize students’ achievements. However, the comparison effects in the 2I/E model are likely to be stronger when grades are used. Grades represent an immediate and salient form of achievement feedback for students and can have important consequences for their future lives (e.g., for the transition to the next grade level or for opportunities on the labor market). In contrast, students are often not even aware of their (exact) performance on standardized tests (e.g., Marsh et al., 2014b; Wolff et al., 2019b). Furthermore, teachers often assign grades as a function of the specific achievement distribution in their classes, such that the best and worst students in each class tend to receive the highest and lowest grades, respectively (grading on a curve). Test scores, by contrast, indicate students’ achievements relative to a larger group of students (i.e., the population of students who took the achievement test). Research on the local dominance effect (Alicke et al., 2010) has shown that individuals prefer local comparison information to more general comparison information. Accordingly, when estimating their self-concepts, students should rely more on their grades than on their results in standardized achievement tests (Marsh et al., 2014a, b; Wolff et al., 2019b).

In line with the assumption that comparison effects are stronger when grades are used as achievement indicators, Möller et al.’s (2020) meta-analysis of the classic I/E model revealed stronger within-subject I/E model effects for studies using grades rather than test scores as achievement indicators. Marsh et al. (2014b), who compared the I/E model effects for both achievement operationalizations within the same sample, even found stronger effects both within and between subjects for grades. Wolff et al. (2019b) were the first to compare the effects in the 2I/E model as a function of achievement operationalization, likewise within the same sample. In two studies, these authors found that most comparison effects were stronger when grades were used to operationalize students’ achievements, although one dimensional comparison effect between math and verbal subjects (the effect of students’ English achievement on their math self-concept) was stronger for test scores. On the basis of these findings, we expected to find stronger social comparison effects (Hypothesis 4) and stronger temporal comparison effects (Hypothesis 5) in studies using grades rather than test scores as achievement indicators. However, given the contradictory findings of prior research, we made no assumption concerning the impact of achievement operationalization on the strength of dimensional comparison effects.

Comparison Period

A particularly relevant gap in the research on temporal comparisons concerns the period of time that students consider (or prefer to consider) when evaluating their achievement changes. In the 2I/E model studies, temporal comparisons were usually operationalized on the basis of the available data, resulting in temporal comparison periods ranging from 0.5 to 2 years. Although the 2I/E model could be replicated across these different periods, students might prefer a particular interval when comparing their achievement over time, and different temporal comparison periods might lead to stronger or weaker temporal comparison effects in the 2I/E model. For example, students might prefer short temporal comparison periods of one semester, because such a period provides them with information about the most recent developments in their achievement. Moreover, students are more likely to remember their achievement from 6 months ago than their achievement further in the past. Alternatively, students might prefer longer temporal comparison periods, because such periods make achievement changes more likely to occur and provide students with a basis for assessing the longer-term trend of their achievement development, independent of possible short-term fluctuations. In addition, previous research has shown that social and dimensional comparison effects are stronger the more dissimilar the compared individuals or subjects are (e.g., Huguet et al., 2009; Jansen et al., 2015; Marsh et al., 2014b; Mussweiler et al., 2004). Similarly, one could speculate that temporal comparison effects are also stronger when students compare more dissimilar (i.e., more distant) points in time. In sum, there are arguments for students preferring either short or long comparison periods.
For this reason, we left it as an open research question whether and how comparison periods affect the temporal (and social and dimensional) comparison effects in the 2I/E model.

Age

Competition in the classroom is likely to increase as students get older and draw closer to applying to college or for apprenticeships, where they compete with their peers. Similarly, students’ need to know their strengths and weaknesses should increase with age, because students usually have to specialize in one area after high school at the latest. Accordingly, it is plausible that the importance of social and dimensional comparisons increases as students get older. Empirically, researchers have found support for this assumption (e.g., Fang et al., 2018; Möller et al., 2020). For example, in Möller et al.’s (2020) meta-analysis of the classic I/E model, the social and dimensional comparison effects were stronger for older students than for younger students. Furthermore, in another meta-analysis on dimensional comparisons, Wan et al. (2021) showed that the correlation between students’ math and verbal self-concepts decreases with age, indicating an increase in dimensional comparison effects. In accord with these findings, we expected that students’ age would also have positive effects on the strength of the social comparison effects (Hypothesis 6) and the dimensional comparison effects (Hypothesis 7) in the 2I/E model. However, we expected that students’ age would not affect the strength of the temporal comparison effects (Hypothesis 8), because such comparisons should be similarly relevant for students of different age groups in keeping track of their achievement development and taking it into account when assessing their self-concepts.

Track

Social, dimensional, and temporal comparisons can reasonably be expected to be similarly important for students’ self-concept formation in both academic and nonacademic track schools. In both school types, students are taught together with peers in the classroom and receive achievement feedback in various subjects at regular intervals. Moreover, it is important for students in both school types to be able to assess their subject-specific abilities and thus to use the comparison information available to them. In line with these considerations, Wolff et al. (2019a, b) demonstrated the invariance of the 2I/E model effects between students of academic and nonacademic track schools in three studies. Furthermore, Arens et al. (2017, 2018), Jansen et al. (2015), and Möller et al. (2011) provided evidence that social and dimensional comparison effects do not differ between students in academic and nonacademic track schools. In contrast to these studies, however, Möller et al. (2014) found stronger within-subject I/E model effects, indicating stronger influences of social comparisons, for students in academic track schools. Theoretically, this finding could be explained by a stronger achievement orientation in academic track schools, which might make students in such schools more concerned about their achievement and give them a greater need to evaluate their relative standing (Arens et al., 2018; Van Houtte, 2004). Given Möller et al.’s (2014) findings, we left it as an open research question whether the social comparison effects in the 2I/E model would differ between students of academic and nonacademic track schools. Nevertheless, we expected similarly strong dimensional comparison effects (Hypothesis 9) and temporal comparison effects (Hypothesis 10) for both groups of students.

Gender

Theoretically, there is little reason to believe that comparisons have different impacts on the self-concepts of girls and boys. For both genders, it should be similarly important to know how well they perform in comparison to their peers, where their strengths and weaknesses lie, and how their performance has developed over time (e.g., for planning their future careers). Consistent with this reasoning, there is little empirical evidence for gender differences in comparison effects on academic self-concepts. For example, Möller et al. (2020) found no difference in the I/E model effects as a function of the gender ratio in the studies included in their meta-analysis. Furthermore, several I/E model studies comparing girls and boys within the same sample demonstrated the invariance of the I/E model across gender (e.g., Arens et al., 2017; Marsh & Yeung, 1998; Möller et al., 2011; Tay et al., 1995). However, some I/E model studies have found gender differences in the comparison effects, although the pattern of findings is inconsistent. For example, Skaalvik and Rankin (1990) found a stronger positive effect of verbal achievement on verbal self-concept for boys and a stronger negative effect of verbal achievement on math self-concept for girls. In contrast, Möller et al. (2014) found a stronger positive effect of math achievement on math self-concept for boys, and Nagy et al. (2006), who tested the I/E model with math and biology, found a negative effect of math achievement on biology self-concept for boys only. Given these partially contradictory findings, we refrained from formulating hypotheses concerning gender differences in the strength of social, dimensional, and temporal comparison effects and instead examined the influence of gender on the comparison effects in the 2I/E model in an exploratory manner.
Through our comprehensive analysis of multiple studies, we aimed to shed further light on the question of whether and how the influence of comparisons on students’ self-concepts differs between girls and boys.

Migration Background

To date, researchers have paid little attention to the question of whether the extent to which students’ self-concepts are influenced by comparisons differs between students with and without a migration background. Possibly, this is because differences in the strength of comparison effects between these groups do not seem very likely. In one study, Möller et al. (2014) found that the I/E model effects did not differ between students with and without a migration background. On the basis of this finding, we expected no influence of migration background on the strength of the social comparison effects (Hypothesis 11), dimensional comparison effects (Hypothesis 12), and temporal comparison effects (Hypothesis 13) in the 2I/E model.

Method

Data

In our meta-analysis, we considered all studies published up to July 2021 that investigated the 2I/E model with students’ achievements and self-concepts in math and their first language. To identify these studies, we drew on our extensive knowledge of the literature in this field of research. As a cross-check, we also searched for relevant studies in the PsycArticles, PsycINFO, and PSYNDEX databases, using the search term “2I/E model” OR (“social” AND “temporal” AND “dimensional”). This search did not reveal any 2I/E model studies other than those of which we were already aware.

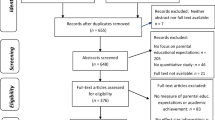

Overall, we identified 12 studies of the 2I/E model, published in five articles (Wolff et al., 2018b, 2019a, b, 2020, 2021a). Because two studies testing the 2I/E model used both grades and test scores (Wolff et al., 2019b, Studies 1 and 2), we formally split each of them into two sub-studies. We excluded four other studies (Wolff et al., 2018b, Studies 3a and 3b, 2019a, Study 1, 2021a, Study 1) because they analyzed data that had already been examined in another study. Moreover, we excluded a sub-study that tested the 2I/E model using parents’ ratings of their children’s competencies as criteria (Wolff et al., 2020), since the focus of our meta-analysis was on comparison effects on students’ self-concepts. From two studies that tested the 2I/E model with multiple subjects (Wolff et al., 2019b, Studies 2 and 3), we considered only the data necessary to analyze the classic 2I/E model, including math and students’ first language. In sum, our data preparation resulted in 10 datasets, including N = 45,248 cases, to be analyzed in our IPD meta-analysis. Table 1 provides an overview of these datasets. Figure 4 illustrates the process of study selection.

We included in our meta-analysis only data that had been analyzed within the framework of the 2I/E model. We did not search for additional raw data that would theoretically have allowed us to compute a 2I/E model, because our goal was to perform a meta-analysis of existing 2I/E model studies rather than to conduct a (barely manageable) series of primary studies for subsequent meta-analysis. This approach had the additional advantage that both the selection of studies and the operationalization of variables in each study (e.g., the selection of items to operationalize self-concepts) were unambiguous rather than partly arbitrary.

Variables

Self-Concept

Across all studies, students’ self-concepts in math and their first language were measured using 21 different self-concept items. Given this heterogeneity, we averaged the subject-specific items within the datasets and standardized the means to obtain comparable self-concept values across the datasets. All items were coded such that higher values indicated higher self-concepts. Students’ self-concepts were usually measured at the end of the comparison period or shortly thereafter. No self-concept measurement took place later than half a year after the last achievement data were collected.

Achievement

In line with the 2I/E model, we used students’ achievements in math and their first language at the beginning and at the end of the temporal comparison period to calculate their subject-specific achievement levels (by averaging their subject-specific achievements) and achievement changes (by calculating the difference between their subject-specific achievements at the end and the beginning of the comparison period). Subsequently, as with students’ self-concepts, we standardized their subject-specific achievement levels and achievement changes within the datasets to obtain comparable values across the datasets. All achievement scores were coded such that higher values indicated higher achievements.
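The scoring steps described for self-concepts and achievement can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' preparation code: each student is a dict with hypothetical keys (`dataset`, `sc_math_items`, `ach_math_t1`, `ach_math_t2`), all responses are assumed to be already coded so that higher values mean higher self-concept or achievement, and only the math variables are shown (the verbal ones would be built the same way). The key point is that z-standardization happens within each dataset, so that grades and test points end up on a comparable metric before pooling.

```python
from collections import defaultdict
from statistics import fmean, stdev


def zscore_within(values, groups):
    """Standardize values to mean 0 and SD 1 separately within each group (dataset)."""
    by_group = defaultdict(list)
    for value, group in zip(values, groups):
        by_group[group].append(value)
    moments = {g: (fmean(vs), stdev(vs)) for g, vs in by_group.items()}
    return [(v - moments[g][0]) / moments[g][1] for v, g in zip(values, groups)]


def prepare_math_variables(students):
    """Score the math variables; keys are hypothetical (see lead-in)."""
    datasets = [s["dataset"] for s in students]
    # Self-concept: mean of the subject-specific items
    sc = [fmean(s["sc_math_items"]) for s in students]
    # Achievement level: mean of the two measurement points
    level = [(s["ach_math_t1"] + s["ach_math_t2"]) / 2 for s in students]
    # Achievement change: end of the comparison period minus beginning
    change = [s["ach_math_t2"] - s["ach_math_t1"] for s in students]
    return {
        "sc_math": zscore_within(sc, datasets),
        "level_math": zscore_within(level, datasets),
        "change_math": zscore_within(change, datasets),
    }


# Two hypothetical datasets on different achievement scales
students = [
    {"dataset": 1, "sc_math_items": [3, 4, 4], "ach_math_t1": 2.0, "ach_math_t2": 3.0},
    {"dataset": 1, "sc_math_items": [2, 2, 3], "ach_math_t1": 3.0, "ach_math_t2": 3.0},
    {"dataset": 1, "sc_math_items": [4, 4, 4], "ach_math_t1": 4.0, "ach_math_t2": 3.0},
    {"dataset": 2, "sc_math_items": [1, 2, 2], "ach_math_t1": 40.0, "ach_math_t2": 55.0},
    {"dataset": 2, "sc_math_items": [3, 3, 4], "ach_math_t1": 60.0, "ach_math_t2": 62.0},
]
scores = prepare_math_variables(students)
```

Standardizing within rather than across datasets removes differences in scale (e.g., grade points vs. test points) but also removes mean differences between datasets, which is consistent with the goal of obtaining comparable values.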

Achievement Operationalization

Students’ achievements were measured using either grades or test scores. We coded the type of achievement operationalization as a dummy variable (0 = grades, 1 = test scores).

Comparison Period

In the different studies, the temporal comparison period (i.e., the distance between the achievement measures) ranged from 0.5 to 2 years. Accordingly, we coded the length of the comparison period as a continuous variable.

Age

We coded students’ age at the time of self-concept assessment as a continuous variable. If age was reported in years and months, we only considered age in years, to obtain comparable data across all datasets.

Track

We created three dummy variables, indicating whether the students attended a school of the academic track (0 = no academic track, 1 = academic track), a school of the nonacademic track (0 = no nonacademic track, 1 = nonacademic track), or a school in a phase of schooling or school system without tracking (0 = tracking, 1 = no tracking).

Gender

We coded students’ gender as a dummy variable (0 = males, 1 = females).

Migration Background

We coded students’ migration background as a dummy variable (0 = no migration background, 1 = migration background). Because migration background was measured differently in the various datasets, we had to use different criteria for coding, depending on the information available. Specifically, we coded migration background if it was noted in the dataset that the student had a migration background or was a foreigner (Datasets 1 and 2), if the student was not a citizen of the country in which the data collection took place (Datasets 3 and 4), if the student’s native language was not the same as the national language of the country in which the data collection took place (Datasets 5 and 7), if at least one parent did not come from the country in which the data collection took place (Dataset 6), if the student’s ethnicity was not Caucasian in a sample in which 89% of the students belonged to this ethnic group (Dataset 8), or if the student was not born in the country in which the data collection took place (Datasets 9 and 10).
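Because the coding criterion differed by dataset, one way to keep such rules transparent is a lookup table from dataset number to coding rule. The sketch below is hypothetical: the field names are invented for illustration and do not correspond to the variable names in the original datasets; only the rule assigned to each dataset follows the description above.

```python
# Hypothetical field names; each rule mirrors one criterion described in the text.
def _migration_or_foreigner(s):
    return s["migration_flag"] or s["foreigner"]


def _not_citizen(s):
    return not s["citizen"]


def _other_native_language(s):
    return s["native_language"] != s["national_language"]


def _parent_from_abroad(s):
    # At least one parent did not come from the country of data collection
    return not (s["mother_from_country"] and s["father_from_country"])


def _non_caucasian(s):
    return s["ethnicity"] != "Caucasian"


def _born_abroad(s):
    return not s["born_in_country"]


# Dataset number -> coding rule
MIGRATION_RULES = {
    1: _migration_or_foreigner, 2: _migration_or_foreigner,
    3: _not_citizen, 4: _not_citizen,
    5: _other_native_language, 7: _other_native_language,
    6: _parent_from_abroad,
    8: _non_caucasian,
    9: _born_abroad, 10: _born_abroad,
}


def code_migration(dataset, student):
    """Return 1 = migration background, 0 = no migration background."""
    return int(bool(MIGRATION_RULES[dataset](student)))
```

For example, `code_migration(3, {"citizen": False})` returns 1, because Datasets 3 and 4 code migration background via citizenship.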

Analyses

To conduct our meta-analysis, we merged the 10 single datasets. Subsequently, we used this pooled dataset to calculate six regression models in Mplus 7 (Muthén & Muthén, 2015). In the first two models, we tested the 2I/E model without moderating variables. Thus, we regressed students’ math self-concept on their math achievement level, the difference between their math and verbal achievement levels, and their math achievement change (Model 1a) and students’ verbal self-concept on their verbal achievement level, the difference between their verbal and math achievement levels, and their verbal achievement change (Model 1b). Afterwards, we extended these models by additionally regressing students’ math self-concept (Model 2a) and verbal self-concept (Model 2b) on achievement operationalization, comparison period, age, gender, and migration background, as well as the product terms of these five moderating variables and the three achievement variables, to examine how the moderating variables affected the comparison effects. Finally, we replaced age with the two dummy variables, indicating whether the students attended an academic track school or a nonacademic track school (i.e., no tracking served as reference category), and regressed students’ math self-concept (Model 3a) and verbal self-concept (Model 3b) on the six moderating variables, the three achievement variables, and the resulting product terms of these moderating and achievement variables. To examine whether the strength of the comparison effects differed between students from academic and nonacademic track schools, we used the Model Constraint option in Mplus to compare the moderating effects of the two dummy variables, indicating an academic or a nonacademic track school. 
We did not include age and the two tracking variables in the same model to avoid multicollinearity problems, because age correlated very strongly with attending a school without tracking (see “Preliminary Analyses” section).
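The predictor sets of the moderated models can be made concrete with a small sketch of the design matrix. It is an illustration under assumptions, not the Mplus specification: the names are hypothetical, the sample SD is used for standardizing the continuous moderators (comparison period and age) in the pooled data before forming products, and dummies stay coded 0/1. With three achievement predictors and five moderators, Model 2a has 3 + 5 + 15 = 23 predictors; Models 3a/3b, with six moderators, have 3 + 6 + 18 = 27.

```python
from itertools import product
from statistics import fmean, stdev

# Predictors of the math models (the difference term is math minus verbal level)
ACHIEVEMENT = ["level", "difference", "change"]
MODERATORS_MODEL_2 = ["test_scores", "period", "age", "female", "migration"]
MODERATORS_MODEL_3 = ["test_scores", "period", "female", "migration",
                      "academic_track", "nonacademic_track"]  # reference: no tracking
CONTINUOUS = {"period", "age"}  # standardized in the pooled data; dummies stay 0/1


def predictor_names(moderators):
    products = [f"{a}_x_{m}" for a, m in product(ACHIEVEMENT, moderators)]
    return ACHIEVEMENT + moderators + products


def design_matrix(records, moderators):
    """Build rows of main effects plus achievement-by-moderator product terms."""
    columns = {}
    for m in moderators:
        col = [r[m] for r in records]
        if m in CONTINUOUS:
            mean, sd = fmean(col), stdev(col)
            col = [(x - mean) / sd for x in col]
        columns[m] = col
    rows = []
    for i, r in enumerate(records):
        row = [r[a] for a in ACHIEVEMENT] + [columns[m][i] for m in moderators]
        row += [r[a] * columns[m][i] for a, m in product(ACHIEVEMENT, moderators)]
        rows.append(row)
    return rows


# Three hypothetical pooled records (achievement variables already standardized)
records = [
    {"level": 0.5, "difference": 0.2, "change": -0.1, "test_scores": 0,
     "period": 0.5, "age": 12, "female": 1, "migration": 0},
    {"level": -1.0, "difference": -0.4, "change": 0.3, "test_scores": 1,
     "period": 2.0, "age": 15, "female": 0, "migration": 1},
    {"level": 0.2, "difference": 0.1, "change": 0.0, "test_scores": 1,
     "period": 1.0, "age": 14, "female": 1, "migration": 0},
]
names = predictor_names(MODERATORS_MODEL_2)
X = design_matrix(records, MODERATORS_MODEL_2)
```

Centering the continuous moderators before multiplying means that each achievement coefficient can be read as the comparison effect at the average comparison period and age (and for the reference category of each dummy).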

To facilitate the interpretation of our results, we standardized all continuous moderating variables in the pooled dataset before calculating the product terms (students’ achievement levels, achievement changes, and self-concepts had already been standardized before the single datasets were merged). Moreover, we used the standardized achievement levels to calculate the differences between the achievement levels (i.e., we subtracted two variables with the same metric from each other). For model estimation, we used the robust maximum likelihood (MLR) estimator. In addition, we used the complex modeling procedure implemented in Mplus to correct the standard errors for the hierarchical structure of the data (students nested within studies). To deal with missing values, we generated 20 multiply imputed versions of each single dataset before calculating the mean self-concept scores, achievement levels, and achievement changes within these datasets. Accordingly, we conducted our analyses on 20 imputed pooled datasets. The Mplus syntax and output can be found in the Supplemental Material.
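Results from multiply imputed data are usually combined with Rubin's rules: the point estimate is averaged across imputations, and the standard error combines the average within-imputation variance with the between-imputation variance. We assume, but have not verified against the supplemental syntax, that the pooling in Mplus follows this logic. The sketch shows the rules for one coefficient; the input values are hypothetical and are not results of this study.

```python
from math import sqrt
from statistics import fmean, variance


def pool_rubin(estimates, std_errors):
    """Pool one coefficient across m imputed datasets with Rubin's rules.

    Returns the pooled estimate and its pooled standard error.
    """
    m = len(estimates)
    q_bar = fmean(estimates)                      # average point estimate
    within = fmean(se ** 2 for se in std_errors)  # mean sampling variance
    between = variance(estimates)                 # variance of estimates across imputations
    total = within + (1 + 1 / m) * between
    return q_bar, sqrt(total)


# Hypothetical estimates of one slope from four imputed datasets
estimate, se = pool_rubin([0.36, 0.38, 0.37, 0.37], [0.020, 0.021, 0.020, 0.019])
```

The pooled standard error is always at least as large as the average single-imputation standard error, which reflects the additional uncertainty due to missing data.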

Results

Preliminary Analyses

Table 2 shows the bivariate correlations of all variables considered in our meta-analysis. In line with prior studies, the correlation between students’ math and verbal self-concepts was close to zero and nonsignificant (r = .02, p = .71), whereas the correlation between their math and verbal achievement levels was strongly positive (r = .65, p < .001). Students’ math and verbal achievement changes also showed a positive correlation (r = .26, p < .001).

With regard to multicollinearity in multiple regression analyses, it is important to note that most correlations among the predictor variables included in a given 2I/E model were |r| ≤ .32. Apart from the correlations among the three dummy variables indicating school tracking (−.57 ≤ r ≤ −.43, all p ≤ .04), only students’ achievement difference showed substantial correlations with their achievement levels (|r| = .42, p < .001). However, these correlations were considerably weaker than the correlation between students’ math and verbal achievement levels, both of which had been included in the same model in prior studies testing the 2I/E model. Furthermore, these correlations are to be expected, since larger achievement differences imply more extreme achievement levels.
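The size of these correlations follows directly from how the difference score is built. Assuming both achievement levels have variance 1 (approximately ensured by the within-dataset standardization) and correlate r, the covariance of a level with the difference is 1 − r and the variance of the difference is 2 − 2r, so corr(L_m, L_m − L_v) = (1 − r)/√(2 − 2r) = √((1 − r)/2), and the correlation with the other level has the opposite sign. Plugging in the pooled r = .65 reported above gives approximately .42:

```python
from math import sqrt


def corr_level_with_difference(r):
    """Correlation between a standardized level and the level difference.

    Assumes both levels have variance 1 and correlate r with each other:
    cov(L_m, L_m - L_v) = 1 - r and var(L_m - L_v) = 2 - 2r.
    """
    return (1 - r) / sqrt(2 - 2 * r)


print(round(corr_level_with_difference(0.65), 2))  # 0.42
```

The stronger the two levels correlate, the weaker the correlation between each level and their difference, so the difference score does not add collinearity beyond what the math-verbal correlation already implies.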

As noted in the “Analyses” section, age also correlated very strongly with attending a school without tracking (r = −.77, p < .001). Because this correlation might have caused multicollinearity problems, we examined possible moderating effects of age and tracking on the comparison effects in the 2I/E model in separate analyses.

2I/E Model

Table 3 presents the results of the meta-analysis. In the first step, we considered the comparison effects in Model 1a and Model 1b, which tested the 2I/E model without moderating variables. In line with Hypothesis 1, we found moderate social comparison effects on students’ math self-concept (B = .37, p < .001, β = .37) and verbal self-concept (B = .31, p < .001, β = .31). In line with Hypothesis 2, we found a moderate dimensional comparison effect on students’ math self-concept (B = .34, p < .001, β = .29) and a small dimensional comparison effect on students’ verbal self-concept (B = .21, p < .001, β = .18). Furthermore, we found small temporal comparison effects on students’ math self-concept (B = .09, p < .001, β = .09) and verbal self-concept (B = .11, p < .001, β = .11), which supported Hypothesis 3.
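Why B and β diverge only for the dimensional comparison effects can be checked with a short calculation. Self-concepts, achievement levels, and achievement changes were standardized, so their B and β coincide; the difference score, however, was formed from two standardized levels and therefore has an SD of about √(2 − 2r), roughly .84 for the pooled r = .65. Since β = B · SD_x / SD_y with SD_y ≈ 1, the dimensional B values shrink accordingly. The sketch below is approximate because B and r are rounded in the text: it yields about .28 for math (reported: .29) and .18 for verbal.

```python
from math import sqrt


def standardized_slope(b, sd_x, sd_y=1.0):
    """Convert an unstandardized slope B into a standardized slope beta."""
    return b * sd_x / sd_y


r_levels = 0.65                         # pooled correlation of math and verbal levels
sd_difference = sqrt(2 - 2 * r_levels)  # SD of a difference of two unit-variance levels

for label, b in [("math", 0.34), ("verbal", 0.21)]:
    print(label, round(standardized_slope(b, sd_difference), 2))
```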

In the second step, we investigated how the strength of the social, dimensional, and temporal comparison effects in the 2I/E model depended on the moderating variables considered in our meta-analysis. To this end, we examined the moderating effects of all moderating variables except either the tracking variables (Model 2a and Model 2b) or age (Model 3a and Model 3b). The moderating effects examined in both analyses were very similar: The same moderating effects were statistically significant, and these effects hardly differed from each other (all ∆β ≤ .01). In the following, we therefore report only the results of Model 2a and Model 2b (except in the “Track” section, where we refer to the results of Model 3a and Model 3b).

Achievement Operationalization

The type of achievement operationalization significantly affected the strength of all types of comparison effects. Because test scores were coded 1 and grades 0, negative moderating coefficients indicate weaker comparison effects for test scores. In line with Hypothesis 4, the social comparison effects were stronger for grades than for test scores (math: B = −.13, p < .001, β = −.09; verbal: B = −.12, p < .001, β = −.08). In line with Hypothesis 5, the temporal comparison effects were also stronger for grades (math: B = −.07, p < .001, β = −.05; verbal: B = −.08, p < .001, β = −.06). However, the dimensional comparison effect on students’ math self-concept was stronger for test scores (B = .08, p = .04, β = .04), whereas the strength of the dimensional comparison effect on students’ verbal self-concept was not significantly affected by the type of achievement operationalization (B = −.04, p = .30).

Comparison Period

The temporal comparison period had no significant influence on any comparison effect (all |B| ≤ .04, p ≥ .09).

Age

In contrast to Hypothesis 6, we found weaker social comparison effects for older students than for younger students (math: B = −.06, p < .01, β = −.06; verbal: B = −.03, p < .01, β = −.03). However, Hypothesis 7 was supported, since the dimensional comparison effects were stronger for older students (math: B = .07, p < .001, β = .06; verbal: B = .03, p = .01, β = .03). Hypothesis 8 was partially supported: Students’ age had no significant impact on the strength of the temporal comparison effect on verbal self-concept (B = .00, p = .89), whereas the temporal comparison effect on math self-concept was significantly weaker for older students, although this effect was very small (B = −.01, p = .02, β = −.01).Footnote 3

Track

School track showed a number of significant influences on the strength of the comparison effects: Compared to the reference group of students in schools without tracking, we found weaker social comparison effects on math self-concept in academic track schools (B = −.09, p = .02, β = −.06) and nonacademic track schools (B = −.17, p < .001, β = −.10), as well as weaker social comparison effects on verbal self-concept in nonacademic track schools (B = −.07, p < .001, β = −.04), but not in academic track schools (B = .01, p = .78). The dimensional comparison effects were stronger in academic track schools (math: B = .19, p < .001, β = .10; verbal: B = .09, p < .001, β = .05) and nonacademic track schools (math: B = .18, p < .001, β = .10; verbal: B = .07, p < .01, β = .04). Moreover, there were weaker temporal comparison effects on math self-concept in academic track schools (B = −.05, p < .01, β = −.03) and nonacademic track schools (B = −.03, p = .05, β = −.02), whereas the temporal comparison effects on verbal self-concept did not differ between students in schools without tracking and schools of the academic track (B = .01, p = .74) or nonacademic track (B = .02, p = .50). Overall, these results closely reflect the findings on the moderating effects of students’ age on the strength of the comparison effects, reported in the previous section.

More interesting, therefore, was the comparison of the moderating effects between students from academic and nonacademic track schools: Here, we found stronger social comparison effects in academic track schools than in nonacademic track schools (math: ∆B = .07, p < .001, ∆β = .04; verbal: ∆B = .08, p < .01, ∆β = .05). As predicted in Hypothesis 9, the strength of the dimensional comparison effects did not differ between academic and nonacademic track schools (math: ∆B = .01, p = .58; verbal: ∆B = .02, p = .31). Hypothesis 10 was partially supported, since the temporal comparison effects on students’ verbal self-concept did not differ between academic and nonacademic track schools (∆B = −.01, p = .79), whereas the temporal comparison effects on math self-concept were slightly weaker for students in academic track schools (∆B = −.03, p < .01, ∆β = −.01). Notably, students in nonacademic track schools had higher self-concepts than students in academic track schools. In fact, students in nonacademic track schools even had higher self-concepts than students in schools without tracking (math: B = .04, p < .01, β = .02; verbal: B = .02, p = .03, β = .01), whereas students in academic track schools had lower self-concepts than students in schools without tracking (math: B = −.08, p < .001, β = −.03; verbal: B = −.07, p < .01, β = −.03).
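A comparison like this tests whether two coefficients from the same model differ. We assume, without having checked the supplemental syntax, that the Model Constraint comparison amounts to a Wald test of a new parameter defined as the difference between the academic-track and nonacademic-track moderating coefficients, whose standard error follows from the parameter covariance matrix (the delta method is exact for such a linear difference). The inputs below are hypothetical; the sampling covariances of the published estimates are not reported here.

```python
from math import erfc, sqrt


def compare_coefficients(b1, b2, var1, var2, cov12):
    """Wald z test of H0: b1 == b2 for two coefficients estimated in the same model."""
    diff = b1 - b2
    se = sqrt(var1 + var2 - 2 * cov12)  # SE of a linear contrast of two estimates
    z = diff / se
    p = erfc(abs(z) / sqrt(2))          # two-sided p-value under the standard normal
    return diff, se, z, p


# Hypothetical slopes for the two track dummies, their variances, and their covariance
diff, se, z, p = compare_coefficients(-0.05, -0.12, 0.0004, 0.0005, 0.0002)
```

Because both dummies share the same reference category (no tracking), their estimates tend to covary positively, which makes the standard error of their difference smaller than a naive calculation that ignores the covariance.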

Gender

Students’ gender significantly affected the strength of the social and dimensional comparison effects on students’ math self-concept: Both effects were stronger for girls compared to boys (social: B = .02, p = .02, β = .02; dimensional: B = .07, p < .001, β = .04). In contrast, students’ gender had no significant impact on the strength of the other comparison effects (all |B| ≤ .03, p ≥ .05). Boys had significantly higher math self-concepts than girls (B = −.32, p < .001, β = −.16), whereas girls had significantly higher verbal self-concepts than boys (B = .12, p < .001, β = .06).

Migration Background

In contrast to Hypothesis 11, the social comparison effects were significantly weaker for students with a migration background than for students without a migration background (math: B = −.05, p < .01, β = −.02; verbal: B = −.05, p < .001, β = −.03). However, in line with Hypothesis 12 and Hypothesis 13, students’ migration background had no significant impact on the strength of the dimensional and temporal comparison effects (all |B| ≤ .03, p ≥ .06). Students with a migration background showed significantly higher math self-concepts than students without a migration background (B = .15, p < .001, β = .06).

Discussion

The present meta-analysis significantly enhances our knowledge of the joint effects of social, dimensional, and temporal comparisons in the process of academic self-concept formation. By reanalyzing the data of all existing 2I/E model studies in a comprehensive IPD meta-analysis, we were able to obtain reliable estimates of the strength of these comparison effects on the basis of a large student sample. Moreover, we gained important new insights into how various study- and student-related factors affect the strength of social, dimensional, and temporal comparison effects.

Mean Effects of Social, Dimensional, and Temporal Comparisons

In line with prior studies that had tested the 2I/E model by analyzing single datasets, our meta-analysis provided substantial empirical support for the 2I/E model. Consistent with our hypotheses, we found moderate social comparison effects, small to moderate dimensional comparison effects, and small temporal comparison effects on students’ math and verbal self-concepts. Notably, however, the social comparison effects, and especially the dimensional comparison effects, on students’ math self-concept were significantly stronger than those on their verbal self-concept (social: ∆β = .06; dimensional: ∆β = .11). This finding corresponds to Möller et al.’s (2020) meta-analysis of the classic I/E model, which also revealed slightly stronger social and dimensional comparison effects on students’ math self-concept. It suggests that students take achievement information into account more strongly when estimating their math self-concept than when estimating their verbal self-concept. As proposed by Helm and Möller (2017), this difference in the strength of the social and dimensional comparison effects on students’ math versus verbal self-concept could result from the fact that students’ math self-concept depends more on school experiences. In their everyday lives outside of school, students usually have fewer opportunities to receive feedback on their math performance than on their verbal performance. Therefore, they may weight achievement feedback at school more heavily when assessing their math self-concept than when assessing their verbal self-concept (see also Wolff et al., 2019b).

In contrast to the social and dimensional comparison effects, the temporal comparison effects on students’ math and verbal self-concepts hardly differed from each other in our meta-analysis (∆β = −.02). Possibly, this is because everyday life (unlike school grades) typically does not provide students with nuanced feedback about their achievement changes in either the math or the verbal domain. With this in mind, it makes sense that students may consider their achievement changes in both domains to a similar degree when assessing their self-concepts.

Moderators of Social, Dimensional, and Temporal Comparison Effects

In addition to the mean effects of social, dimensional, and temporal comparisons across the existing 2I/E model studies, our meta-analysis examined several potential moderators of these comparison effects. These analyses yielded a number of notable findings. Most, but not all, of our hypotheses were supported empirically.

Achievement Operationalization

We found many significant moderating effects for the type of achievement operationalization: In accord with our assumptions, the social and temporal comparison effects on students’ math and verbal self-concepts were stronger in studies using grades rather than test scores as achievement indicators. This finding supports the assumption that, when making comparisons, students weight their grades more heavily than their scores on standardized achievement tests, possibly because grades are the more immediate and salient form of achievement feedback that students receive. The stronger social comparison effects found for grades are in accord with prior findings from studies testing the classic I/E model, especially Möller et al.’s (2020) meta-analysis. However, they go beyond these findings, since we controlled for both dimensional and temporal comparison effects in our meta-analysis. Furthermore, the finding that temporal comparison effects were stronger in studies using grades instead of test scores substantially extends research on comparison effects in self-concept formation, as this is the first meta-analysis to demonstrate it.
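In a pooled individual-level analysis, a moderating effect of this kind can be represented as a product term between an achievement predictor and a moderator indicator. The following is a minimal, hypothetical sketch under stated assumptions, not the analysis model of the meta-analysis (which this section does not specify): it fits ordinary least squares to simulated data with a single achievement predictor and an invented 0/1 indicator `uses_grades`. The function name `fit_moderated_slope` and the assumed true slopes (0.30 for test scores, 0.45 for grades) are illustrative only.

```python
import numpy as np


def fit_moderated_slope(achievement, uses_grades, self_concept):
    """OLS with an achievement x moderator product term (hypothetical sketch).

    uses_grades is an assumed 0/1 indicator (1 = grades, 0 = test scores).
    Returns the slope for test-score data and the extra slope for grades.
    """
    X = np.column_stack([
        np.ones_like(achievement),   # intercept
        achievement,                 # slope when uses_grades == 0
        uses_grades,                 # mean difference between indicator types
        achievement * uses_grades,   # moderation: additional slope when uses_grades == 1
    ])
    coefs, *_ = np.linalg.lstsq(X, self_concept, rcond=None)
    return {
        "slope_test_scores": float(coefs[1]),
        "slope_difference_grades": float(coefs[3]),
    }


rng = np.random.default_rng(7)
n = 50_000
uses_grades = rng.integers(0, 2, size=n)   # roughly half grades, half test scores (assumed)
achievement = rng.standard_normal(n)
# Assumed truth: slope 0.30 for test scores and 0.30 + 0.15 for grades
self_concept = (0.30 + 0.15 * uses_grades) * achievement + 0.6 * rng.standard_normal(n)

result = fit_moderated_slope(achievement, uses_grades, self_concept)
print({name: round(value, 3) for name, value in result.items()})
```

A positive coefficient on the product term corresponds to a stronger achievement effect when grades serve as the achievement indicator, which is the direction of moderation described above for social and temporal comparisons.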

Interestingly, the impact of achievement operationalization on the strength of the dimensional comparison effects in the 2I/E model showed a different pattern. While achievement operationalization had no significant influence on the strength of the dimensional comparison effect on students’ verbal self-concept, the dimensional comparison effect on students’ math self-concept was even stronger for test scores. This latter finding should be interpreted with caution, however, since it results mainly from an exceptionally strong dimensional comparison effect on students’ math self-concept in one specific 2I/E model study that used test scores as achievement indicators (Wolff et al., 2019b, Study 2). Nevertheless, it seems likely that dimensional comparison effects are at least not stronger for grades than for test scores. This conclusion is further corroborated by Möller et al. (2020), who found no significant influence of achievement operationalization on the strength of the dimensional comparison effects in their meta-analysis of the classic I/E model.

Social and temporal, but not dimensional, comparison effects may be stronger for grades than for test scores because the criteria for achieving certain grades differ relatively strongly between math and verbal subjects (e.g., the influence of classroom participation) and because math teachers often assign more extreme grades than do teachers of verbal subjects (e.g., Wolff et al., 2019b). Thus, it could be more difficult for students to compare their grades in math and verbal subjects with each other than to compare their grades in math or in their first language with their classmates’ grades or with their own prior grades in the same subject. In contrast, students may find it relatively easy to compare their results on math and verbal achievement tests with each other if both results are reported on the same metric (e.g., percentile ranks).

Comparison Period

We found no significant influence of the temporal comparison period on the strength of the comparison effects in the 2I/E model. Thus, within the range of periods examined (between 0.5 and 2 years), students do not seem to generally prefer a specific comparison period when assessing their self-concepts. This finding is of particular interest with regard to temporal comparison effects, because it contradicts, among other things, the assumption of a distance continuum, according to which the strength of temporal comparison effects depends on how close together in time the compared time points are.

That said, readers should keep in mind that our meta-analysis compared temporal comparison periods across studies conducted with different students at different phases of their school careers. It is conceivable, for example, that the preferred temporal comparison period differs as a function of students’ age. Students who have just transitioned from elementary to secondary school may be particularly interested in comparing the grades on their first secondary school report card with those on their last elementary school report card to see how their achievement has changed following the transition. In contrast, students at the end of high school might prefer longer comparison periods, as they tend to reflect on their entire achievement development in high school. To investigate the relation between temporal comparison periods and comparison effects more specifically, future research should therefore examine comparison effects as a function of different comparison periods within the same sample and compare these relations across samples of different age groups. This approach would allow better-substantiated conclusions about a potential influence of the temporal comparison period on the strength of comparison effects. Nonetheless, based on the findings of our meta-analysis, we conclude that such an influence either does not exist or is negligibly small.

Age

Our investigation of the strength of the comparison effects in the 2I/E model as a function of age revealed one unexpected result: Contrary to our assumptions, we found weaker rather than stronger social comparison effects for older students. At first glance, this finding seems counterintuitive. However, it becomes more plausible when one considers that we only examined students from grade 6 onward. First, our sample did not include students in their first years of schooling, for whom previous studies have found relatively weak social comparison effects (Möller et al., 2020). Second, the youngest students in our sample were in the transition phase from elementary to secondary school, and research has shown that social comparisons play a particularly important role in this phase, when students have to reevaluate their competencies within a new social comparison group (e.g., Dijkstra et al., 2008; Feldlaufer et al., 1988).

Unlike the social comparison effects, we found an increase in the dimensional comparison effects as a function of students’ age. This finding was in line with our assumptions. It underpins the assumption that dimensional comparisons become more important with greater age, when students are increasingly forced to choose between different domains—for example, when they have to decide on a profession (e.g., von Keyserlingk et al., 2021).

Also consistent with our assumptions, age showed no significant effect on the strength of the temporal comparison effect on students’ verbal self-concept. Furthermore, the strength of the temporal comparison effect on students’ math self-concept hardly depended on students’ age: Although this moderating effect was statistically significant (implying stronger temporal comparison effects on math self-concepts for younger students), it was very close to zero (β = −.01). We therefore conclude that, overall, temporal comparisons have a similarly strong impact on the self-concepts of students in different age groups.

Track

Our comparison of students from academic and nonacademic track schools yielded relevant differences in the comparison effects only for social comparisons. In line with Möller et al. (2014), the social comparison effects were stronger for students in academic track schools. This finding is consistent with our considerations presented above that a higher achievement orientation in academic track schools could encourage social comparisons.

The dimensional comparison effects did not differ significantly between students from academic and nonacademic track schools. This finding is plausible given that students in both school types need to know their strengths and weaknesses, at the latest when choosing a vocational specialization.

As with the dimensional comparison effects, the temporal comparison effect on students’ verbal self-concept did not differ significantly between students from academic and nonacademic track schools. The temporal comparison effect on students’ math self-concept was stronger for students in nonacademic track schools, but this difference was so small that it had no practical significance (∆β = −.01). Analogous to the moderating effect of students’ age on the temporal comparison effects, we therefore conclude that temporal comparisons have a similarly strong impact on the self-concepts of students in academic and nonacademic track schools.

Gender

Comparing the comparison effects in the 2I/E model between girls and boys yielded slightly stronger social and dimensional comparison effects on girls’ math self-concepts. One could speculate that girls are less certain than boys when assessing their math ability because of the ongoing debate surrounding the objectively untenable gender stereotype that girls are less talented in math than boys (e.g., Niepel et al., 2020; Wolff, 2021a). For this reason, girls might pay more attention to how they perform in math relative to their peers and relative to their performance in their first language. However, the effects of gender on the strength of the comparison effects were very weak in our meta-analysis. We therefore conclude that social, dimensional, and temporal comparison effects differ at most very little between girls and boys. This conclusion is also in accord with findings from studies demonstrating the invariance of the I/E model across gender (Arens et al., 2017; Marsh & Yeung, 1998; Möller et al., 2011; Tay et al., 1995).

Migration Background

Contrary to our assumptions, we found slightly weaker social comparison effects for students with a migration background than for students without one. This finding may result from students with a migration background tending to compare their achievement with more specific social groups, including peer groups made up largely or exclusively of students with a migration background (cf. Huguet et al., 2001; Zander et al., 2014). However, although the moderating effects of migration background were consistent across both domains, they were very small. As with the moderating effect of gender, we therefore conclude that social, dimensional, and temporal comparison effects differ at most very little between students with and without a migration background.

Strengths, Limitations, and Directions for Future Research

The present meta-analysis has many strengths. Most importantly, to the best of our knowledge, it is not only the first meta-analysis of the joint effects of social, dimensional, and temporal comparisons in students’ self-concept formation, but also the first meta-analysis of comparison effects using the IPD approach. This approach enabled us to integrate and reanalyze the existing 2I/E model studies using the same analytical strategy, to examine variables whose values usually vary within rather than between studies, and to avoid aggregation bias. Moreover, unlike prior studies testing the 2I/E and the I/E model (including prior meta-analyses of the I/E model), our meta-analysis used the difference between students’ math and verbal achievement levels as a self-concept predictor to represent the effects of dimensional comparisons. Thus, we were able to clearly separate social and dimensional (and temporal) comparison effects from each other, to determine their absolute and relative strengths, and to examine their dependence on different moderating variables.
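The idea of entering a math-minus-verbal difference score as a predictor can be illustrated with a small simulation. This is a minimal sketch under simplifying assumptions, not the model specification of the meta-analysis: it uses ordinary least squares on simulated, hypothetical data for one pooled group of students, treats the coefficient of current math achievement as the social comparison effect, uses the math-minus-verbal difference as the dimensional comparison predictor, and uses the change from prior to current math achievement as the temporal comparison predictor. The functions `simulate_students` and `fit_comparison_model`, all variable names, and the true weights are invented for illustration.

```python
import numpy as np


def simulate_students(n, seed=2024):
    """Simulate hypothetical standardized scores for one pooled group of students.

    The true weights (0.40, 0.20, 0.10) are arbitrary illustration values,
    not estimates reported in the meta-analysis.
    """
    rng = np.random.default_rng(seed)
    math_prior = rng.standard_normal(n)                         # earlier math achievement
    math_now = 0.7 * math_prior + 0.3 * rng.standard_normal(n)  # current math achievement
    verbal_now = 0.5 * math_now + rng.standard_normal(n)        # current verbal achievement
    math_self_concept = (
        0.40 * math_now                     # assumed social comparison weight
        + 0.20 * (math_now - verbal_now)    # assumed dimensional comparison weight
        + 0.10 * (math_now - math_prior)    # assumed temporal comparison weight
        + 0.5 * rng.standard_normal(n)      # residual noise
    )
    return math_prior, math_now, verbal_now, math_self_concept


def fit_comparison_model(math_prior, math_now, verbal_now, math_self_concept):
    """Ordinary least squares with level, difference, and change predictors.

    Reading the level coefficient as the social comparison effect is a
    simplifying assumption of this sketch.
    """
    X = np.column_stack([
        np.ones_like(math_now),   # intercept
        math_now,                 # achievement level (social comparison)
        math_now - verbal_now,    # math minus verbal difference (dimensional comparison)
        math_now - math_prior,    # change since the earlier time point (temporal comparison)
    ])
    coefs, *_ = np.linalg.lstsq(X, math_self_concept, rcond=None)
    return {
        "intercept": float(coefs[0]),
        "social": float(coefs[1]),
        "dimensional": float(coefs[2]),
        "temporal": float(coefs[3]),
    }


estimates = fit_comparison_model(*simulate_students(100_000))
for name, value in estimates.items():
    print(f"{name:>12}: {value:+.3f}")
```

Because the difference score is entered alongside current math achievement (and the change score), its coefficient describes how the math self-concept shifts when verbal achievement is lower while math achievement is held constant. This is why a difference predictor can carry the dimensional comparison effect separately from the level predictor.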

Despite its strengths, our meta-analysis also had some limitations. In particular, we pooled data from studies that differed from each other in more respects than just the variables controlled for in our analyses. Most notably, the 2I/E model studies included in our meta-analysis used different self-concept and achievement operationalizations. To address this problem, we standardized the self-concept and achievement variables. Still, comparability across studies would have been further enhanced if all students had responded to the same self-concept items and if their achievement had been measured using the same rating systems.
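Standardizing variables that were measured on different scales can be sketched as a within-study z-transformation. The sketch below assumes that standardization was carried out separately within each study, which the text does not state explicitly. The function `standardize_within_studies`, the field names, and the example records are hypothetical, and the choice of the population standard deviation is also an assumption (the sample standard deviation is a common alternative).

```python
from statistics import mean, pstdev


def standardize_within_studies(records, variables, study_key="study"):
    """Return copies of records with each variable z-standardized within its study.

    Uses the population standard deviation (an assumption of this sketch).
    """
    # Group row positions by study identifier
    rows_by_study = {}
    for position, row in enumerate(records):
        rows_by_study.setdefault(row[study_key], []).append(position)

    standardized = [dict(row) for row in records]  # copies; the input is left unchanged
    for study, positions in rows_by_study.items():
        for var in variables:
            values = [records[p][var] for p in positions]
            center, spread = mean(values), pstdev(values)
            if spread == 0:
                raise ValueError(f"{var!r} has no variance in study {study!r}")
            for p in positions:
                standardized[p][var] = (records[p][var] - center) / spread
    return standardized


# Hypothetical pooled data: study A uses a small rating scale, study B a test metric
records = [
    {"study": "A", "math_sc": 3.0, "math_ach": 2.0},
    {"study": "A", "math_sc": 4.0, "math_ach": 1.0},
    {"study": "A", "math_sc": 2.0, "math_ach": 3.0},
    {"study": "B", "math_sc": 50.0, "math_ach": 480.0},
    {"study": "B", "math_sc": 70.0, "math_ach": 520.0},
]
for row in standardize_within_studies(records, ["math_sc", "math_ach"]):
    print(row)
```

After this step, each variable has a mean of 0 and a standard deviation of 1 within every study, so coefficients can be pooled on a common metric. As the limitation above notes, this does not make different items or rating systems measure exactly the same construct.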