Abstract

Policymakers, conceptualized here as principals, disagree as to whether US student performance has changed over the past half century. To inform these debates, agents administered seven million psychometrically linked tests in math (m) and reading (rd) in 160 survey waves to national probability samples of cohorts born between 1954 and 2007. Estimated change in standard deviations (sd) per decade varies by agent (m: –0.10sd to 0.27sd, rd: –0.02sd to 0.12sd). Consistent with Flynn effects, median trends show larger gains in m (0.19sd) than in rd (0.04sd), though rates of progress for cohorts born since 1990 have increased in rd but slowed in m. Greater progress is shown by students tested at younger ages (m: 0.31sd, rd: 0.08sd) than by those tested in the middle years of schooling (m: 0.17sd, rd: 0.03sd) or toward the end of schooling (m: 0.06sd, rd: 0.02sd). Young white students progress more slowly (m: 0.28sd, rd: 0.09sd) than Asian (m: 0.46sd, rd: 0.28sd), black (m: 0.36sd, rd: 0.19sd), and Hispanic (m: 0.29sd, rd: 0.13sd) students. These ethnic differences generally attenuate as students age. Young students in the bottom quartile of the socioeconomic status (SES) distribution show greater progress than those in the top quartile (difference in m: 0.08sd, in rd: 0.15sd), but the reverse is true for older students. Moderators likely include not only changes in families and schools but also improvements in nutrition, health care, and protection from contagious diseases and environmental risks. International data suggest that the subject and age differentials may be due to moderators that are not specific to the United States.

Principals require agents whenever they lack sufficient time or expertise to pursue their objectives directly (Bendor & Meirowitz, 2004; Epstein & O’Halloran, 1994; Gailmard, 2014; Holmström, 1984; Huber & Shipan, 2002; Volden, 2002). But when principals rely upon agents, they risk inefficiencies due to adverse selection and moral hazard, the shirking and diversion of resources to purposes agents prefer (Hayne & Salterio, 2014). Risks are especially large when principals do not rank objectives clearly or disagree as to their relative importance (Bernheim & Whinston, 1986; Laffont & Martimort, 2002; Laffont & Tirole, 1993; Olsen, 2014; Papadopoulos, 2014, p. 281), a not-infrequent occurrence in a federal system where power is shared among principals and boundary organizations are used to limit undue political influence over scientific research. To minimize risks, principals may use multiple agents (Braun & Guston, 2003).

We use the principal-agent model to interpret the efforts of policymakers to elicit information about the rate of educational progress by US student cohorts. To obtain the information, principals employ agents who have the resources and skills needed to measure repeatedly the performance of representative samples of the school-age population. Since 1971, four agents have administered 160 waves of 17 psychometrically linked test surveys of student achievement in math and reading to national probability samples of US student cohorts at various ages.Footnote 1 The surveys have received a good deal of public and scholarly attention, but prior research has not systematically considered the range of estimates provided by agents with varied purposes, survey designs, and test content. We use individual-level restricted-use student data available from the US National Center for Education Statistics (NCES) to trace the progress in math and reading achievement by cohorts of US students surveyed by the four agents. Although agents report estimates of student progress per decade that range in math (m) from –0.10 standard deviations (sd) to 0.27sd and in reading (rd) from –0.02sd to 0.12sd, median estimates show upward trends in both subjects regardless of the age tested. However, one agent reports a downward trend in the performance of students at age 15.

Since principals do not have consistent preferences for a particular agent, we avoid giving undue emphasis to outliers by estimating true trends with median rather than mean estimates across surveys. To identify potential moderators, we compare our findings with the literature on trends in intelligence known as “Flynn effects” (Herrnstein & Murray, 1994, p. 307).Footnote 2 Recent meta-analyses of Flynn effects find greater growth in fluid reasoning (the ability to analyze abstract relationships) than in crystallized knowledge (understanding of the empirical world) (Pietschnig & Voracek, 2015; Trahan et al., 2014). We find similar differences between math and reading trends: the median upward trend by cohorts of students is steeper in m (0.19sd) than in rd (0.04sd). Cohorts of 4th-grade students in other countries have also made more progress in math than in reading, suggesting that common moderators are at work. Also consistent with Flynn effect estimates, we find steeper upward trends for students tested at a younger age and a flattening of the upward math trend in the most recent period.
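The choice of the median over the mean can be illustrated with a short sketch. The per-survey estimates below are invented for illustration only and are not the paper's estimates: one survey reports a decline while the others report gains, and the mean is pulled toward that survey far more than the median is.

```python
from statistics import mean, median


def summarize_trends(estimates):
    """Return the mean and median of per-survey trend estimates (sd per decade)."""
    if not estimates:
        raise ValueError("need at least one estimate")
    return {"mean": mean(estimates), "median": median(estimates)}


# Hypothetical per-survey trends in sd per decade; the last one is an outlier.
illustrative = [0.15, 0.18, 0.19, 0.21, -0.10]
summary = summarize_trends(illustrative)
print(f"mean={summary['mean']:.3f}, median={summary['median']:.3f}")
# prints mean=0.126, median=0.180
```

Replacing the outlying value with one in line with the others (say 0.20) moves the mean by about 0.06sd (to 0.186) but the median by only 0.01sd (to 0.19), which is the robustness property that motivates the median when no agent is treated as authoritative.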

We observe heterogeneities. Young boys are making slightly more progress than young girls, but gender differences disappear when students are tested at an older age. Young white students progress more slowly (m: 0.28sd, rd: 0.09sd) than Asian (m: 0.46sd, rd: 0.28sd), black (m: 0.36sd, rd: 0.19sd), and Hispanic (m: 0.29sd, rd: 0.13sd) students.Footnote 3 Except for Asians, these differences attenuate when students are tested at an older age. Among students tested at a younger age, progress is steeper for those from households in the lowest quartile of the socioeconomic status (SES) distribution than for those in the highest quartile (difference in m: 0.08sd, in rd: 0.15sd). That advantage attenuates and then reverses at older ages for white, black, and, to a lesser extent, Hispanic students, but not for Asian ones. The moderators that account for overall trends remain uncertain, but greater gains in math than in reading, especially at younger ages, could be due to improvements in neonatal and early-childhood health and well-being. Family-school interactions may account for differential trends by ethnicity and SES.

The remainder of the paper is organized in the following fashion: 1) the principal-agent problem in estimations of student achievement trends; 2) review of prior research on trends in education studies of student performance and psychometric studies of intelligence; 3) data collection and organization; 4) methodology; 5) results; and 6) discussion.

The Principal-Agent Problem in Estimations of Student Achievement Trends

Principals (policymakers) have regularly expressed concern about, and the desire to measure, rates of educational progress made by US students at various ages and from differing backgrounds. The US National Commission on Excellence in Education (NCEE) claimed that “the educational foundations of our society are presently being eroded by a rising tide of mediocrity that threatens our very future as a Nation and a people” (NCEE, 1983, p. 5). According to Hirsch (1987, p. 7), the National Assessment of Educational Progress (NAEP) provides persuasive “evidence for the decline in shared knowledge.” That claim has been echoed in books with such disturbing titles as The Literacy Hoax (Copperman, 1978), Dumbing Down Our Kids (Sykes, 1995), The Decline of Intelligence in America (Itzkoff, 1994), and The Dumbest Generation (Bauerlein, 2008). On the other side, supporters say that schools are improving, that students are making progress, and that claims of deterioration are false (Berliner & Biddle, 1996; Bracey, 1992).

These writers are addressing an important question. The well-being of the next generation—and the country as a whole—depends upon continuing progress in student math and reading achievement. Higher levels of achievement have positive impacts on college attainment, future earnings, teenage pregnancy rates, physical and mental health, political participation, and many other life outcomes (Borghans et al., 2016; Chetty et al., 2014). Nations that show higher average levels of student achievement enjoy steeper rates of economic growth (Hanushek & Woessmann, 2008, 2012).

Given the importance of the topic, policymakers have sought information on changes in student achievement across cohorts (Jones & Olkin, 2004; Messick et al., 1983; NAEP, 2002). Policy statements, legislative enactments, and commitment of government resources to the collection and distribution of information on student cohort trends in performance reflect the strong interest of principals in gathering information on changes in student achievement. Indeed, the US Commissioner of Education was directed by a statute enacted in 1867 to “report annually on the progress of students in the United States” (Mullis, 2019), though it would take a century before serious efforts to fulfill this mandate were undertaken. Once the project began in earnest, principals confronted challenges that may be conceptualized as instances of the principal-agent problem (Bovens et al., 2014). Lacking the requisite technical skills, principals select one or more agents (survey organizations) with the technical capacity to gather the data (Braun & Guston, 2003). The assignment of the task to an agent creates moral hazards, as agents may shirk certain tasks or pursue goals that differ from those of principals. Also, agents may have difficulty interpreting the preferences of principals, especially when they have multiple unranked, not necessarily consistent, objectives. Further, principals, to broaden political support, may express goals and preferences in ambiguous terms (Shepsle, 1972). To reach consensus, objectives may be articulated in vague language that leaves specific goals unranked. Agents with superior access to technical information may choose to comply with ambiguously stated objectives in ways consistent with their own preferences. Multiple agents may thus take different approaches when gathering information.

Multiple principals are likely in federal systems. Shared power requires the consent and cooperation of multiple tiers of government, such as a federal department, state agencies, and local school districts. When government is tiered, actors can be both principals and agents. For example, a federal bureau (say, the US Department of Education) may serve as the agent of policymakers (Congress and the president), but the bureau may then serve as the principal when asking lower-level agents (policymaking committees, state and local governments, professional survey organizations) to act on its behalf. In scientific research, “boundary organizations,” acting as both agents for policymakers and principals for research organizations, are often established to minimize undue political influence and stabilize the relationship between the policymaking principals and scientific research (Van der Meulen, 1998). Their task is to “internalize the provisional and ambiguous character of the boundary” between policy choice and scientific execution (Guston, 2000, p. 29). But if boundary organizations are broadly representative of diverse institutions and interests, they provide an opportunity for principals to introduce new, unranked objectives (Shove, 2003). Should this occur, say Braun and Guston (2003, p. 307), “the means of principals’ influence over scientists . . . is extremely limited.”

In the case at hand, principals in the USA seek information on changes in the performance of student cohorts over time. But some have other objectives as well, including comparison of performances of US students with those in other countries, assessment of performance relative to that expected on curriculum taught by schools, and information on student preparation for entering society toward the end of schooling. These objectives, though interrelated, are not identical and at times may be inconsistent. To the extent that agencies focus on alternative objectives, the data they collect may show varied estimates of performance trends.

Agents

Four agents have administered 160 waves of 17 temporally linked surveys of achievement in math and/or reading to nationally representative cohorts of US students for various portions of the past half century: 1) the Long-Term Trend (LTT) version of NAEP, 2) the main version of NAEP, 3) the International Association for the Evaluation of Educational Achievement (IEA), which administers the Trends in International Mathematics and Science Study (TIMSS) and the Progress in International Reading Literacy Study (PIRLS), and 4) the Program for International Student Assessment (PISA). The 17 temporally linked surveys are in math and/or reading for ages 9, 13, and 17 in LTT, grades 4, 8, and 12 in NAEP, grades 4 and 8 in TIMSS, grade 4 in PIRLS, and age 15 in PISA. (For a list of surveys, waves, and age/grade groups, see Appendix, Table 10.) Each survey has specific purposes, sampling frames, and test content that differentiate it from the others. Several agents administer waves of tests in multiple subjects, but the largest number of tests is available for m and rd. We limit this analysis to those two subjects to obtain the best available data on change over time and consistency of results by agent. We use data on trends in achievement in other countries to explore possible moderators.

The National Center for Education Statistics (NCES) within the US Department of Education, an agent acting on behalf of Congress and the president, has functioned as a principal overseeing the collection of data for all four of these agents for much of the period.Footnote 4 NCES is a boundary organization designed to insulate the collection of education information from political influences emanating from the US Secretary of Education, who is otherwise responsible for education policy and programs. Unlike the secretary, who serves at the will of the president, the NCES commissioner is appointed by the president to a fixed 6-year term.Footnote 5

Since 1988, NCES has been responsible for contracts that structure the design, administration, data analysis, and reports of all surveys by the four agents. Given that all agents report to one government bureau, it might be thought that all surveys would be guided by the principals’ ranked objectives. But, as we shall see, NCES is not the only principal. Further, its own objectives are ambiguous. On the one hand, in all four surveys it seeks to track changes in the performance of cohorts of students. On the other hand, NCES also tries to estimate student performance against the perceived current curriculum, to compare US student performance with that of students in other countries, and to measure student preparation for entry into society at the close of secondary education. Emphasis on one of these goals rather than another could generate alternative estimates of the progress of student cohorts.

LTT

The collection of information on changes in student cohort performance in the USA began in 1967, when the Education Commission of the States (ECS), created by a compact signed by each of the fifty US states, accepted responsibility for the direction of LTT, though costs were to be borne by the US Office of Education (Gilford, 2004; Lehmann, 2004).Footnote 6 Unable to reach a consensus on tests that obtained information about student performance in individual states or school districts, ECS agreed to report only overall results from a nationally representative sample of students by gender, ethnicity, and size of community (Lehmann, 2004; Messick et al., 1983, pp. 1–15). In 1988 Congress shifted responsibility for overseeing LTT to the National Assessment Governing Board (NAGB), a boundary organization consisting of 26 members appointed by the US Secretary of Education (Bourque, 2004). By law, NAGB must include “governors, state legislators, local and state school officials, educators and researchers, business representatives, and members of the general public” (NAGB, 2021). The legislation limits the authority of the secretary, apparently to preclude inappropriate political influence over the collection of sensitive information, stating that “in the exercise of its functions, powers, and duties, the Board shall . . . be independent of the Secretary and the other offices and officers of the Department of Education.”Footnote 7 But as a chair of NAGB observed (Olkin, 2004, p. 259):

There is tension, some of it built into the current structure where, for example, NAGB is supposed to set the policy but NCES . . . manages the contract. A lot of policy is contained in the contract, so that, although NAGB can set policy, NCES need not carry it out.

Acting as boundary organizations between the US Department of Education and survey agents, ECS and NAGB have overseen the administration of LTT to 16 waves of nationally representative samples of cohorts born as early as 1954 in reading and 1961 in math (Tables 1, 10). The most recent cohort for which information is available for this analysis was born in 2002. Because the purpose of LTT is limited to tracking nationwide trends, LTT collects only a few thousand observations in each survey wave (Table 1). Samples are drawn at ages 9, 13, and 17. Although LTT estimates performance for an age-defined group that remains stable over time, it has not always been a perfectly constant measure of student performance across survey waves. As one commentator observes, “The objectives and test items became more closely aligned with school curricula; . . . its sampling, which had included young adults and out-of-school youth, was narrowed to those in school” (Stedman, 2009, p. 3). However, others fault LTT for maintaining consistent testing frameworks that “become increasingly irrelevant by failing to reflect changes in curricula and instructional practice” (Pellegrino et al., 1999, p. 78).

NAEP

In the same 1988 legislation by which Congress shifted responsibility for the administration of LTT from ECS to NAGB, it authorized NAGB to launch other tests in m and rd for representative samples of students in every state as well as in the nation as a whole (Bourque, 2004).Footnote 8 Students are assessed on material aligned with the curriculum expected to have been taught by that grade, and their performance is also tracked to ascertain change over time. To obtain representative samples in each state, each survey wave includes over one hundred thousand observations (Table 1).Footnote 9 To meet both objectives, the boundary organization asks agents to design additional surveys that differ from LTT surveys in purpose, sampling frame, and test content. Specifically, the law establishing NAEP calls for performance measurement in grades 4, 8, and 12 on tests that adjust to changes in the perceived curriculum (Jones & Olkin, 2004). Because the age composition of a grade varies from one test administration to the next, and perceptions of the curriculum have shifted, results may not be comparable over time (Mosher, 2004; Mullis, 2004).
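The link between reporting goals and sample size can be made concrete with a back-of-the-envelope calculation. The sketch assumes simple random sampling, optionally inflated by a hypothetical design effect standing in for the clustering of students within schools; it is not the actual NAEP or LTT sampling design, and the sample sizes are illustrative.

```python
import math


def standard_error_sd(n, design_effect=1.0):
    """Approximate standard error of a mean score, in sd units.

    Assumes simple random sampling; design_effect > 1 is a hypothetical
    inflation factor for clustered samples (effective n = n / design_effect).
    """
    if n <= 0 or design_effect <= 0:
        raise ValueError("n and design_effect must be positive")
    return 1.0 / math.sqrt(n / design_effect)


# A national sample of 2,500 students yields a standard error of 0.02sd ...
print(standard_error_sd(2_500))  # prints 0.02
# ... but matching that precision in each of 50 states requires about 2,500
# students per state, i.e., 125,000 students in a single wave.
print(50 * 2_500)  # prints 125000
```

Because precision improves only with the square root of the sample size, moving from national to state-level reporting multiplies the required sample roughly by the number of reporting units, which is consistent with the contrast between LTT's few thousand observations per wave and NAEP's more than one hundred thousand.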

Still, most NAEP tests since 1990 have been psychometrically linked from one administration to the next. Before that date, agents interpreted the objectives of principals to be mainly concerned with obtaining valid information about school performance in individual states at a particular point in time. From 1990 onward, tests were temporally linked by using “bridging questions” administered in adjacent waves. We therefore limit this analysis to cohorts tested since 1990. We also exclude the NAEP 12th-grade math tests administered during the 1990s: NAEP suspended these tests for 5 years, after which it administered a revised version that was not psychometrically linked to the earlier ones. These decisions indicate NAGB’s dissatisfaction with the reliability and validity of the earlier version of the 12th-grade math test. The revised version reflects changes in high school standards and coursework as well as changes in test administration practices (Grigg et al., 2007, pp. 14–15), and these revisions unlinked the new NAEP waves from their predecessors. For these reasons, NAEP math trends for students tested in grade 12 are included only for students born after 1987.
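Bridging questions make linking possible because the same items are calibrated in both waves. One textbook approach to common-item linking under item response theory is the mean-sigma method, sketched below. It is shown only to illustrate the idea of placing a new wave on an earlier wave's scale and is not necessarily the procedure NAEP uses; the function names and the item difficulties in the example are hypothetical.

```python
from statistics import mean, pstdev


def mean_sigma_constants(b_new, b_old):
    """Mean-sigma linking constants from common (bridging) item difficulties.

    b_new -- difficulty estimates of the common items on the new wave's scale
    b_old -- difficulties of the same items on the reference (old) scale
    Returns (A, B) such that theta_old = A * theta_new + B.
    """
    if len(b_new) != len(b_old) or len(b_new) < 2:
        raise ValueError("need at least two paired common items")
    spread_new = pstdev(b_new)
    if spread_new == 0:
        raise ValueError("common-item difficulties must vary")
    # Match the spread of the common items across the two calibrations ...
    A = pstdev(b_old) / spread_new
    # ... and then match their means.
    B = mean(b_old) - A * mean(b_new)
    return A, B


def to_reference_scale(thetas_new, A, B):
    """Place proficiency estimates from the new wave on the reference scale."""
    return [A * theta + B for theta in thetas_new]


# Hypothetical difficulties of three bridging items in each wave.
A, B = mean_sigma_constants([-1.0, 0.0, 1.0], [-1.0, 1.0, 3.0])
print(round(A, 6), round(B, 6))  # prints 2.0 1.0
print([round(t, 6) for t in to_reference_scale([0.5, -0.25], A, B)])  # prints [2.0, 0.5]
```

If the bridging items themselves change meaning between waves (for example, because the curriculum shifts), the linking constants absorb that change, which is one reason a revised test such as the later 12th-grade math assessment cannot simply be chained to its predecessor.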

TIMSS-PIRLS

The IEA also serves as a boundary organization in that it has remained a private, nonprofit agency somewhat insulated from political influence. The organization, established in the 1960s, administers tests in math and science to students in grades 4 and 8 (TIMSS) and in literacy in grade 4 (PIRLS) in numerous countries. As with NAEP, the original purpose of the TIMSS survey was to compare student performance in math and science across countries against a perceived international curriculum offered at these grade levels, and the first two administrations of TIMSS were not temporally linked to one another. TIMSS was redesigned after a conference in Charlottesville, Virginia, attended by President George H. W. Bush and 49 of the 50 governors. The participants pledged that by the year 2000 “U.S. students will be first in the world in mathematics and science achievement” (Klein, 2014). To measure progress toward that goal, the third administration of TIMSS, launched in 1995, “introduced a number of innovations that enhanced its validity and reliability,” including bridging questions that allowed for tracking of trends. The changes were facilitated by additional funding “by NCES and the National Science Foundation” (Mullis & Martin, 2007, p. 13). TIMSS has since been administered at four-year intervals for cohorts born between 1981 and 2005. However, the test has remained faithful to its original mission of measuring performance against a perceived curriculum, as indicated by data collections organized by grade level rather than by students’ age.

To measure comprehension of a curriculum, test items are drawn from frameworks “organized around two dimensions: a content dimension specifying the content to be assessed and a cognitive dimension specifying the thinking processes to be assessed.” TIMSS says that “the majority of” its items “assess students’ applying and reasoning skills” (IEA, 2021). Like NAEP questions, TIMSS questions are “knowledge oriented”; they are “direct and abstract.” They ask students, “What do you know?” (Hutchison & Schagen, 2007, p. 54).

For years, IEA did not administer a reading or literacy test because principals did not think valid comparisons across language groups were possible. But in 2001 NCES administered PIRLS, a literacy test, to students in grade 4 (Kastberg et al., 2013). The principals’ purposes are to assess student performance against an understanding of the literacy children should be expected to achieve by grade 4, to compare performances across nations, and to estimate temporal change (Mullis & Martin, 2015). Results are available for cohorts born between 1991 and 2006. Information on student ethnicity is missing for 2001 and 2016 because the restricted-use data sets for those years are not available from NCES. In sum, PIRLS, like TIMSS, seeks to reconcile the measurement of student comprehension of the existing curriculum in many countries with the tracking of cohort changes in performance.

PISA

PISA surveys student performance at age 15 in m and rd every 3 years. Cohorts analyzed in this paper were born between 1985 and 2000. NCES has fully participated in and covered the cost of PISA administration and reporting within the USA.Footnote 10 Despite criticism of PISA by some educators, Footnote 11 US officials have endorsed PISA findings, even when test results are disappointing.Footnote 12

PISA is an agent of the Organization for Economic Co-operation and Development (OECD), a 37-member international organization of industrialized countries that collects statistics and information on the economic and social well-being of member countries. The number of principals involved in its administration is even larger than for the other three surveys. The Office of the OECD’s Secretary-General serves as a boundary organization that insulates the collection of survey information on student achievement from political influence by the governments of member countries. When PISA results are published, its reports state “opinions . . . and arguments,” which are “published under the responsibility of the Secretary-General of the OECD,” but “do not necessarily reflect the official views of OECD member countries” (OECD, 2013, p. 6). Yet the cooperation of principals in each participating country is necessary for data collection and, usually, for the recovery of costs.

PISA differs from TIMSS and NAEP in that it seeks to measure “preparation for life” rather than performance against the school’s curriculum.Footnote 13 For example, PISA states that the math literacy it assesses is “an individual’s capacity to identify and understand the role that mathematics plays in the world, make well-founded judgments, and use and engage with mathematics in ways that meet one’s needs as a constructive, concerned, and reflective citizen” (US Department of Education, 2014). Given this purpose, PISA tests are designed along lines different from those administered by NAEP and TIMSS. In a thoughtful comparison of test items across the various tests, Hutchison and Schagen (2007, p. 254) describe the difference in these words:

PISA items are aimed at life skills while TIMSS items are more knowledge oriented. Where TIMSS questions are more direct and abstract, PISA questions are more lengthy and wordy. TIMSS asks, “What do you know?” while PISA asks “What can you do?”

Summary

Principals with ambiguous and not always consistent objectives track change in test performance by cohorts of students by asking four agents, which have their own interests and preferences, to administer tests to representative samples of students. Although all four agents link their surveys psychometrically in ways that allow for tracking cohort trends over time, each interprets the objectives of principals in distinctive ways that reflect the agents’ own interests and purposes. Originally asked by its boundary organization not to report results for states, districts, or schools, LTT focuses only on national trends. However, even LTT has modified its sampling design and test content in response to perceived curriculum changes. NAEP has been asked by NAGB, its broadly representative boundary organization, to adjust its tests to changing curricular objectives and to measure student performance in every state to facilitate implementation of federal accountability goals. TIMSS-PIRLS is directed by IEA, an international nonprofit organization, which acts as a boundary organization that mediates relationships between survey agents and the governments of cooperating countries. Its objectives include both tracking performance over time and estimating student performance against international expectations of what is to be learned by a certain grade. PISA is an agent of OECD, whose Office of the Secretary-General serves as a boundary organization that mediates relationships between survey agents and the principals of participating countries. PISA seeks to measure student readiness for participation in the social and economic world at age 15, when many countries end compulsory schooling. In sum, agents report to different principals and boundary organizations that have varying objectives. Given the differences, estimates of trends in student performance are likely to vary by agent, generating noisy data that complicate the principals’ task of ascertaining true trends in student performance. It remains to be seen whether clear signals can be detected amid the buzz.

Scholarly Research

Research on cohort change in student achievement is bifurcated between education research and intelligence studies by psychometricians. In this selective review, we bring together key results from two traditions that have generally ignored one another. (See Table 9 for a summary of findings.)

Sociology and Economics of Education

Numerous studies have made extensive use of surveys administered by the four agents, but the literature has yet to compare their estimated rates of cohort progress systematically. Education research provides estimates of divides by socioeconomic status (SES), gender, ethnicity, and school sector, and in the process casts light on progress in student performance. Some find modest gains in student achievement (Hanushek et al., 2012), chiefly in math (Campbell et al., 1996). Reardon et al. (2012, p. 23) report steeper upward trends in math than in reading on the LTT. Studies of ethnic achievement gaps report substantial closing of the black-white gap, but a flat trend in the most recent period (Jencks & Phillips, 1998; Magnuson, Rosenbaum, & Waldfogel, 2008). Hedges and Nowell (1998) report closing of gaps between white and nonwhite students over a similar period. Miller (1995) finds a closing of Hispanic-white and Asian-white gaps. Hedges and Nowell (1995) find more males than females among high-scoring individuals in math, but not in reading. Others have looked at the influence of family structure on changes in student achievement (Grissmer et al., 1994) and on the black-white gap (Phillips et al., 1998). Elsewhere, Reardon (2011) draws upon surveys that are not psychometrically linked and finds that income achievement gaps between students from households in the top and bottom 10% of the income distribution have widened. Other studies find no clear trend, a flat trend, or declining trends in income or SES achievement gaps in the USA (Broer et al., 2019; Chmielewski, 2019; Hanushek et al., 2020; Hashim et al., 2020; Hedges & Nowell, 1998; OECD, 2018). Both Hanushek et al. (2020) and Hashim et al. (2020) find steeper upward trends in academic growth for those tested at a younger age. Similarly, Bloom et al. (2008) show that annual gains on seven nationally normed tests are largest in the elementary grades, attenuate in early adolescence, and are minimal at grade 12. Shakeel and Peterson (2020) show greater increases in math and reading achievement on NAEP at charter schools than at district schools for those tested between 2005 and 2017. Matheny et al. (2021) report growing gaps on state tests between school districts serving students from contrasting social and ethnic backgrounds. Yet all of these studies, each valuable in its own way, leave an overarching question unanswered: What signals emerge from the results of all psychometrically linked surveys?

Intelligence Studies

Changes in the measured intelligence of representative samples of successive cohorts are known as the “Flynn effect” (Herrnstein & Murray, 1994, p. 307).Footnote 14 (See Table 9.) James Flynn discerned a positive trend in the intelligence quotient (IQ) of about three points per decade among those who took the Stanford-Binet intelligence tests (Flynn, 1984). Rodgers and Wänström (2007) show a Flynn effect for math (but not for reading) in the Children of the National Longitudinal Survey of Youth (NLSYC) PIAT-Math at each age between 5 and 13. Using the same data set, Ang, Rodgers, and Wänström (2010) find Flynn effects for subgroups differentiated by gender, race, maternal education, income, and locale. A Flynn effect has also been identified among high-ability students who took the SAT and ACT (Wai & Putallaz, 2011). Pietschnig and Voracek (2015), hereinafter PV, conduct a meta-analysis of 219 repeated administrations of the same (or similar) intelligence tests over the period 1909–2013 to cohorts of representative individuals from similar populations throughout the world. In general, they confirm Flynn’s findings of average IQ gains of approximately 3 points per decade during this period. However, they find variation by region, and they find that IQ gains have diminished in recent decades in industrialized countries. They also report larger IQ gains in recent decades in Asia and Africa than in Europe and the Americas (Argentina, Brazil, Canada, Dominica, and the USA). Similarly, Meisenberg and Woodley (2013) report higher gains on PISA and TIMSS in countries with lower test scores at the beginning of the period, from which they conclude that intelligence gains fade as a country’s level of economic and social development rises.

Like other psychometricians, PV distinguish between two subtypes of intelligence: fluid reasoning and crystallized knowledge (Pinker, 2018, pp. 240–245). Fluid reasoning is the ability to perform "reasoning-based tasks that can be solved with (virtually) no prior knowledge," while crystallized knowledge "consists of knowledge-based questions that cannot be solved by reasoning" alone (Pietschnig & Voracek, 2015, p. 284). Put another way, measures of fluid reasoning estimate the ability to perform analytical tasks (such as supplying the next number in an arithmetic progression), and measures of crystallized knowledge estimate the ability to answer empirical questions (such as the name of a capital or the meaning of a word). Surprisingly, PV report that it is fluid reasoning, not crystallized knowledge, that has increased more rapidly in recent decades. Between 1985 and 2013, fluid reasoning increased by approximately 2.2 IQ points per decade, down from 4.3 points per decade between 1952 and 1985 (p. 285). Still, these recent gains in fluid reasoning, though smaller than earlier ones, are considerably larger than the gains of 0.04 IQ points per decade in crystallized knowledge between 1987 and 2011 (p. 285), down from 3 points per decade between 1962 and 1987. Others report similar differentials in the rate of progress for these two subtypes of intelligence in Anglo-American countries (Jensen, 1998, pp. 319–320; Lynn, 2009a; Nisbett et al., 2012). Finn et al. (2014) find a Pearson correlation of 0.53 between fluid reasoning and math scores, but only 0.36 between fluid reasoning and reading scores, for a selected population of Massachusetts students. Rindermann and Thompson (2013) search for Flynn effects across waves of LTT surveys. They show mean increases in math performance of 2.37 IQ points per decade but only 0.54 points in reading, a finding that accords with PV's identification of steeper upward trends in fluid reasoning than in crystallized knowledge.
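The intelligence literature reports rates in IQ points, whereas the results below are in sd units. A minimal sketch of the conversion, assuming the conventional IQ scale with a standard deviation of 15 points, shows that PV's recent fluid-reasoning rate corresponds to roughly 0.15sd per decade and Rindermann and Thompson's LTT rates to roughly 0.16sd (math) and 0.04sd (reading). The function names are illustrative only.

```python
# Conventional IQ scales are normed to a standard deviation of 15 points,
# so a change in IQ points divided by 15 gives the change in sd units.
IQ_SD = 15.0


def iq_points_to_sd(points: float) -> float:
    """Convert a change measured in IQ points to standard-deviation units."""
    return points / IQ_SD


def sd_to_iq_points(sd: float) -> float:
    """Convert a change measured in sd units to IQ points."""
    return sd * IQ_SD


# Decadal rates quoted in the text, in IQ points per decade.
reported_rates = {
    "PV, fluid reasoning (1985-2013)": 2.2,
    "Rindermann & Thompson, LTT math": 2.37,
    "Rindermann & Thompson, LTT reading": 0.54,
}

for label, points in reported_rates.items():
    print(f"{label}: {iq_points_to_sd(points):.2f} sd per decade")
```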

Summary

The existing literature on student progress in math and reading is bifurcated and fragmentary, although most studies suggest that progress has been made on one or more surveys at least for some students at certain ages in some subjects during some of the period. What remains to be considered is the consistency in the estimates of progress in both subjects for students at various ages and for important subgroups. Thus, we report the following: 1) consistency of estimates policymakers received from agents asked to survey student progress; 2) differences in the rate of progress in math and reading; 3) variation in trends by age at which a child is tested; 4) changes in the rate of progress over time, giving special attention to the most recent period for which intelligence studies find a slowdown in IQ growth in industrialized societies; and 5) heterogeneities by gender, ethnicity, and SES, as well as by SES quartile for each ethnic group.

Data

To measure cohort progress, the four agents periodically administered 160 waves of tests in math and reading to national probability samples of US cohorts born between 1954 and 2007 (for the dates of each test administration, see Appendix Table 10). Table 1 shows the period each survey covers and the number of observations for each set of waves. We use individual-student data from the NCES restricted-use data set available to qualified researchers (see footnote 15). Approximately seven million student-level observations of US students and approximately four and a half million observations of non-US students are used in this analysis. Other features of each survey are discussed in the principal-agent section above.

Ethnicity

The definition of ethnicity used here depends heavily upon those employed by the surveys, which vary somewhat. (See Appendix Table 12 for a comparison of definitions and the measures taken to standardize the definition.)

The ethnic composition of those tested changes substantially over the fifty-year period (Table 11). The percentage of white students taking the LTT math test declines from 81% in its first wave in 1978 to 55% in its latest wave in 2012. The percentage of black students ticks only slightly upward (from 13 to 14%) over the period, but the percentage of Hispanic students increases dramatically, from 5 to 23%, and the percentage of Asian students (including those from the Pacific Islands) jumps from 1 to 6%. Despite minor differences in how agencies define ethnicity (Table 12), similar trends are observed across all surveys.

Parental Education

Estimates of parents' educational attainment are available for all survey waves except the TIMSS and PIRLS 4th-grade waves. Although students are asked about their parents' education in different ways (Table 13), all surveys allow the variable to be classified into one of four categories according to whether the parent with the higher attainment level has 1) a 4-year college degree; 2) at least some postsecondary education; 3) no more than a high school diploma; or 4) no high school diploma. Parental education indicators are available for 15 surveys.
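The household category is defined by the parent with the higher attainment. A minimal sketch of that rule, assuming a missing report for one parent is simply ignored; the numeric codes, the enum, and the function name are hypothetical and are not the surveys' actual response codes.

```python
from enum import IntEnum
from typing import Optional


class ParentEd(IntEnum):
    """Hypothetical ordinal codes for the four categories; higher = more education."""
    NO_HS_DIPLOMA = 1
    HS_DIPLOMA = 2
    SOME_POSTSECONDARY = 3
    COLLEGE_DEGREE = 4


def higher_parent_education(
    mother: Optional[ParentEd], father: Optional[ParentEd]
) -> Optional[ParentEd]:
    """Return the category of the parent with the higher attainment.

    A missing report (None) is ignored; if both reports are missing, return None.
    """
    reported = [level for level in (mother, father) if level is not None]
    return max(reported) if reported else None


print(higher_parent_education(ParentEd.HS_DIPLOMA, ParentEd.COLLEGE_DEGREE).name)
```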

Possession Index

None of the agencies asks children about annual household income. Instead, they ask about various items that may or may not be available in the child's home. The possession index is a simple count of the number of items reported to be in the household, and it provides a crude proxy for permanent household income. In the Education Longitudinal Study of 2002 (ELS), which contains information on both variables, the correlation between the number of household possessions and annual household income is 0.36. Although this correlation is admittedly lower than desirable, the possession index offers a rough measure of permanent income and an alternative to eligibility for free or reduced-price lunch, an annual income indicator discussed in the Appendix. Reporting errors may be particularly large when younger students are asked about items with which they are not familiar. Also, the meaning of any home possession may change over time. For example, a computer is rare among cohorts at the beginning of this period but common for those at the end of it. TIMSS and PISA inquire about many more possessions than LTT and NAEP do (Table 14), so it is important to estimate relationships between possessions and achievement separately for each survey. The set of items also varies across waves within a survey. For the index, we therefore count only the items that are available across all waves of each survey, and we measure these variables consistently across all waves within a survey. The possession index is available for 17 surveys.
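The counting rule above (keep only items asked in every wave, then count the ones a student reports having) can be sketched as follows. This assumes each wave's questionnaire is represented as a set of item names and that an unanswered item counts as absent; the item names are hypothetical, not taken from any survey's codebook.

```python
def common_items(items_by_wave: dict[str, set[str]]) -> set[str]:
    """Possession items asked in every wave of a single survey."""
    if not items_by_wave:
        raise ValueError("need at least one wave")
    return set.intersection(*items_by_wave.values())


def possession_index(responses: dict[str, bool], items: set[str]) -> int:
    """Number of common items the student reports having at home.

    Assumption: an item the student did not answer counts as absent.
    """
    return sum(1 for item in items if responses.get(item, False))


# Hypothetical item lists for two waves of one survey.
waves = {
    "wave_1": {"dictionary", "computer", "more_than_25_books", "newspaper"},
    "wave_2": {"dictionary", "computer", "more_than_25_books", "internet"},
}
items = common_items(waves)
student = {"dictionary": True, "computer": False, "more_than_25_books": True, "internet": True}
print(sorted(items), possession_index(student, items))
```

Because "internet" appears in only one wave, it is dropped from the index even though this student reports having it.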

SES Index

The SES index, our preferred measure of SES, is the first component of a principal component analysis of the possession index and the original ordinal ranking of the parental education categories. To obtain as consistent a measure of SES as possible within each survey, we use the same measure of parental education and the same measure of household possessions across survey waves, even though some waves provide additional information. The SES index is available for 15 surveys.
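A minimal sketch of the SES index and of the SES quartiles used in the results. It assumes that both indicators are standardized with survey weights before the principal component analysis and that quartiles are cut on cumulative survey weight; the text specifies neither step, and all function names are hypothetical.

```python
import numpy as np


def weighted_standardize(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Center and scale each column to weighted mean 0 and weighted sd 1."""
    mean = np.average(x, axis=0, weights=w)
    sd = np.sqrt(np.average((x - mean) ** 2, axis=0, weights=w))
    return (x - mean) / sd


def ses_index(possessions, parent_ed, weights):
    """First principal component of the two standardized SES indicators."""
    x = np.column_stack([possessions, parent_ed]).astype(float)
    w = np.asarray(weights, dtype=float)
    z = weighted_standardize(x, w)
    cov = np.cov(z, rowvar=False, aweights=w)
    _, eigenvectors = np.linalg.eigh(cov)  # eigenvalues come back in ascending order
    loading = eigenvectors[:, -1]  # component with the largest eigenvalue
    if loading.sum() < 0:  # a component's sign is arbitrary; make higher = more advantaged
        loading = -loading
    return z @ loading, loading


def weighted_quartiles(score, weights):
    """Assign quartiles 1 (lowest) to 4 (highest) by cumulative weight share.

    Ties are broken by sort order, so tied scores may land in different quartiles.
    """
    score = np.asarray(score, dtype=float)
    w = np.asarray(weights, dtype=float)
    order = np.argsort(score, kind="stable")
    share = np.cumsum(w[order]) / w.sum()
    quartile = np.empty(score.size, dtype=int)
    quartile[order] = np.clip(np.ceil(share * 4).astype(int), 1, 4)
    return quartile


# Toy data: eight students with equal weights.
possessions = np.array([1, 2, 3, 4, 5, 6, 7, 8])
parent_ed = np.array([1, 1, 2, 2, 3, 3, 4, 4])
weights = np.ones(8)
score, loading = ses_index(possessions, parent_ed, weights)
print(loading, weighted_quartiles(score, weights))
```

With two standardized, positively correlated indicators, the first component weights them equally, so the index is effectively their sum rescaled.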

Methodology

We use the standard deviation (sd) of the initial cohort to measure, in sd units, the distance of the test-score distribution of each subsequent cohort tested in each survey, subject, and age or grade. That is, for each survey, subject, and age or grade level, we set the mean of the initial cohort to zero and estimate trends in mean performance over time as the distance (in sd) of each later cohort's mean from it. The assessments do not administer the full test to any one student. Instead, they estimate each student's performance on the full test from performance on the section administered to that student, and each assessment provides several plausible values of that performance. Our empirical models arbitrarily use the second plausible value from each wave of the assessments; results are robust to using each of the first five plausible values and to the average of the five estimates. Survey weights are applied in all estimations.
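A minimal sketch of this rescaling, assuming survey-weighted means and population standard deviations and one plausible value per student. The function names and the toy scale scores are hypothetical, and the robustness checks across plausible values are not reproduced.

```python
import numpy as np


def weighted_mean_sd(x, w):
    """Survey-weighted mean and (population) standard deviation."""
    x = np.asarray(x, dtype=float)
    mean = np.average(x, weights=w)
    sd = np.sqrt(np.average((x - mean) ** 2, weights=w))
    return mean, sd


def standardize_to_initial_cohort(scores, weights, initial):
    """Rescale each wave's scores so the initial cohort has mean 0 and sd 1.

    `scores` and `weights` map a wave label to arrays holding one plausible
    value per student and that student's survey weight.
    """
    mu0, sd0 = weighted_mean_sd(scores[initial], weights[initial])
    return {wave: (np.asarray(s, dtype=float) - mu0) / sd0 for wave, s in scores.items()}


# Hypothetical scale scores for two waves of one survey, subject, and age.
scores = {"wave_1": [200.0, 220.0, 240.0], "wave_2": [230.0, 250.0]}
weights = {"wave_1": [1.0, 1.0, 1.0], "wave_2": [1.0, 1.0]}
z = standardize_to_initial_cohort(scores, weights, initial="wave_1")
later_mean, _ = weighted_mean_sd(z["wave_2"], weights["wave_2"])
print(f"wave_2 mean: {later_mean:.2f} sd above the initial cohort")
```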

Following Reardon (2011) and Hanushek et al. (2020), we extract the performance trend with a quadratic function of the birth cohort for each year of the test administered with the following equations:
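One specification consistent with the definitions that follow, assuming the trend α is a quadratic in birth cohort t with its own intercept and coefficients for each subject s, assessment g, and grade/age γ (the coefficient labels α₀, α₁, α₂ are illustrative notation), would be:

$$
O_{isg\gamma t} = \alpha_{sg\gamma}(t) + \varepsilon_{isg\gamma t},
\qquad
\alpha_{sg\gamma}(t) = \alpha_{0,sg\gamma} + \alpha_{1,sg\gamma}\,t + \alpha_{2,sg\gamma}\,t^{2}
$$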

O is the achievement score for student i, by subject s, assessment g, grade/age γ, and cohort t; α is the achievement trend, and ε is the error term. The results are robust to estimations from linear and cubic functions (Figs. 9, 10 and 11).
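A minimal sketch of fitting such a trend by weighted least squares with NumPy, assuming survey weights enter as regression weights and birth year is measured from the first cohort; `degree=1` or `degree=3` gives the linear and cubic variants. The standard errors here are naive model-based ones; the surveys' complex sampling designs would call for design-based (for example, replicate-weight) variance estimation. The function name is hypothetical.

```python
import numpy as np


def fit_cohort_trend(score, birth_year, weight, degree=2):
    """Weighted least-squares fit of standardized scores on a polynomial in birth cohort.

    Birth year is measured from the first cohort (t = 0) to keep the design
    matrix well conditioned. Returns coefficients ordered (intercept, t, t^2, ...),
    their model-based covariance matrix, and the reference year.
    """
    score = np.asarray(score, dtype=float)
    year = np.asarray(birth_year, dtype=float)
    w = np.asarray(weight, dtype=float)
    t0 = year.min()
    X = np.vander(year - t0, degree + 1, increasing=True)  # columns 1, t, t^2, ...
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(X * sw[:, None], score * sw, rcond=None)
    resid = score - X @ coef
    n, k = X.shape
    sigma2 = np.sum(w * resid**2) / (n - k)
    cov = sigma2 * np.linalg.inv(X.T @ (w[:, None] * X))
    return coef, cov, t0


# Noiseless illustrative trend: 0.01 sd per year plus a small quadratic term.
years = np.arange(1960, 2001, 5)
t = years - years.min()
scores = 0.01 * t + 0.0002 * t**2
coef, cov, t0 = fit_cohort_trend(scores, years, np.ones_like(t, dtype=float))
print(np.round(coef, 6), t0)
```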

We estimate the overall change and its standard error from the fitted values and standard errors at the start and end points of each model (Feiveson, 1999; Gould, 1996; Oehlert, 1992; Phillips & Park, 1988). These estimates rely on the delta method, which computes the variance, standard error, and Wald test statistic (z-test) of nonlinear transformations of the parameter vector estimated by the fitted model. We repeat this process separately for each subgroup analysis. All analyses are carried out in Stata 16.
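Because the change between two cohorts, α(t_end) − α(t_start), is linear in the fitted coefficients, the delta-method variance here is exactly g'Vg, where g is the difference between the two cohorts' polynomial terms and V the coefficient covariance. A minimal sketch, assuming coefficients ordered (intercept, t, t², ...) from a fit like the one described above; in Stata, `lincom` or `nlcom` after the regression would produce the same quantities. The function and key names are hypothetical.

```python
import math

import numpy as np


def trend_change(coef, cov, t_start, t_end, per_years=10.0):
    """Change in the fitted trend between two cohorts, with delta-method SE.

    `coef` holds polynomial coefficients ordered (intercept, t, t^2, ...) and
    `cov` their covariance matrix; t is on the same scale used in the fit.
    """
    coef = np.asarray(coef, dtype=float)
    cov = np.asarray(cov, dtype=float)
    powers = np.arange(coef.size)
    # Gradient of x(t_end)'beta - x(t_start)'beta with respect to beta; the intercept cancels.
    grad = float(t_end) ** powers - float(t_start) ** powers
    total = float(grad @ coef)
    se_total = math.sqrt(float(grad @ cov @ grad))
    if se_total == 0.0:
        raise ValueError("zero standard error; z statistic undefined")
    scale = per_years / (t_end - t_start)  # rescale the total change to a per-decade rate
    z = total / se_total
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal p-value
    return {
        "total": total,
        "se_total": se_total,
        "per_decade": total * scale,
        "se_per_decade": se_total * scale,
        "z": z,
        "p": p_value,
    }


res = trend_change([0.0, 0.01, 0.0002], np.diag([1e-4, 1e-6, 1e-10]), t_start=0, t_end=40)
print(f"{res['per_decade']:.2f} sd per decade (se {res['se_per_decade']:.3f}, z = {res['z']:.1f})")
```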

Results

Unless otherwise indicated, all results are reported in sd per decade, which allows direct comparison with the Flynn effect, originally estimated at 3 IQ points or 0.21sd per decade. To avoid giving undue weight to outliers, we report median rather than mean estimates of rates of cohort progress when summarizing results from several surveys. When summarizing results by the age at which a student is tested, we group surveys as follows: younger (age 9 and 4th grade); early adolescence (ages 13–15 and 8th grade); and older (age 17 and 12th grade). In estimates of differences in trends between dichotomously defined subgroups, a positive valence indicates more rapid progress by the group generally thought to be disadvantaged. A negative valence indicates that the more-advantaged group is making the greater progress, not that the trend is downward.

Table 1 displays the total amount of change and the rate of change in sd per decade across all the waves in each survey for each subject and age or grade level. The statistical significance of each trend line is shown in columns 5 and 9. But since the waves for each survey differ both in number and in the period covered, we show in columns 6 and 10 the change per decade in sd, which allows for more direct comparisons across surveys. Inspection of these columns reveals that, except for the PISA estimates, all have a positive sign, indicating at least some progress in student achievement.

Agency Effects

Principals receive varying estimates of change in cohort achievement from the four agents gathering this information. The estimates span 0.37sd per decade in m and 0.14sd in rd (m: –0.10sd to 0.27sd; rd: –0.02sd to 0.12sd; see Table 1). NAEP and TIMSS-PIRLS estimate the steepest upward trends. NAEP estimates a median trend of 0.27sd per decade in m and 0.08sd in rd; TIMSS-PIRLS estimates a median trend of 0.25sd in m and 0.09sd in rd (Tables 2 and 3). Note that both agents adjust tests to fit the curriculum expected to be taught at the time. The median trend estimated by LTT, which apparently does not adjust questions to fit the curriculum, is a more modest 0.15sd per decade in m and just 0.03sd in rd.

Agents do not always test students at the same age. But major differences between estimates are present even when students are tested at about the same age. PISA, which assesses students' preparation for participation in the postsecondary world at age 15, estimates a sizable negative median trend of –0.10sd in m and a marginally negative trend of –0.02sd in rd. NAEP estimates that the median trend for students in 8th grade (who are only slightly younger) is 0.27sd per decade in m and 0.12sd in rd, a difference of 0.37sd in m and 0.14sd in rd. Given this range of estimates, it is risky to rely upon the surveys of any one agent to reach conclusions about progress in student achievement. We therefore report median effects from all linked surveys.

Math-Reading Differentials in the USA

The median decadal trend in m comes to 0.19sd, but only 0.04sd in rd (Tables 2 and 3). If extrapolated over a fifty-year period, the cohort gains would be 0.95sd in m but just 0.20sd in rd. The largest difference between the two subjects is for students in 4th grade who took the NAEP tests: the change per decade is 0.39sd in m but only 0.08sd in rd. PISA observes the smallest difference between the two subjects, –0.10sd in m and –0.02sd in rd.
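The fifty-year figures follow from holding the median decadal rate constant, a simple linear extrapolation. A minimal sketch (the function name is illustrative):

```python
def extrapolate(rate_per_decade: float, years: float) -> float:
    """Total change implied by holding a per-decade rate constant for `years` years."""
    return rate_per_decade * years / 10.0


median_trends = {"m": 0.19, "rd": 0.04}  # sd per decade, as reported above
for subject, rate in median_trends.items():
    print(f"{subject}: {extrapolate(rate, 50):.2f} sd over fifty years")
```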

Differential Trends by Age

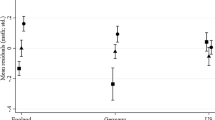

The steepest upward trend is observed among students tested in m at a younger age (Fig. 1). For those age 9 or in the 4th grade, the decadal rate of change in this subject ranges from 0.23sd on the LTT to 0.39sd on the NAEP. For those tested in early adolescence, the decadal rate of change is more modest, ranging from a negative trend on the PISA (–0.10sd) to positive trends on the LTT (0.15sd), the TIMSS (0.19sd), and the NAEP (0.27sd). For older students, progress proceeds at a rate of no more than 0.05sd on the NAEP and 0.06sd on the LTT. International comparisons reveal a similar pattern, with a larger rate of progress in grade 4 than in grade 8 math on the TIMSS (Tables 16 and 17).

On rd tests, it is less clear that cohort advances vary by the age at which a student is tested. In fact, only modest progress is detected for any age group. For those at age 9 or in grade 4, the decadal rate of progress is 0.05sd on the LTT test, 0.08sd on the NAEP test, and 0.09sd on the PIRLS test. For those in early adolescence, the rate of change is about the same as for the younger students on the LTT test (0.03sd) and the NAEP test (0.12sd), but negative on the PISA test (–0.02sd). For older students, both estimates show little change by decade: 0.03sd on the NAEP test and hardly any change at all on the LTT test. In sum, progress in rd appears to have been very modest for young students as well as for those in early adolescence, and minimal among those tested at an older age.
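The age-group summaries are medians across surveys. A minimal sketch using the decadal trends quoted in the two paragraphs above reproduces the medians reported for these groups (0.17sd in m for early adolescence; 0.08sd and 0.03sd in rd for younger and early-adolescent students) and shows that the mean is pulled further toward the PISA outlier. Only groups whose survey values are all quoted in the text are included.

```python
from statistics import mean, median

# Decadal trends (sd per decade) quoted in the two paragraphs above.
groups = {
    "m, early adolescence": {"PISA": -0.10, "LTT": 0.15, "TIMSS": 0.19, "NAEP": 0.27},
    "rd, younger": {"LTT": 0.05, "NAEP": 0.08, "PIRLS": 0.09},
    "rd, early adolescence": {"LTT": 0.03, "NAEP": 0.12, "PISA": -0.02},
}

for label, trends in groups.items():
    values = list(trends.values())
    print(f"{label}: median {median(values):.2f}, mean {mean(values):.2f}")
```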

Math-Reading Differentials in Other Countries and Regions

To see whether subject and age differentials may be due to moderators more general than factors limited to the United States, we track trends on TIMSS-PIRLS and PISA in other countries and regions for which this information is available. The 4th-grade TIMSS-PIRLS surveys show larger cohort advances in m (0.24sd) than in rd (0.05sd). When TIMSS-PIRLS samples are restricted to industrialized nations (OECD members), the gap between m (0.21sd) and rd (0.02sd) trends persists. In non-OECD countries and regions, progress in m (0.28sd) moderately outpaces gains in rd (0.19sd).

When one looks at specific countries in which 4th-grade students have taken both TIMSS and PIRLS tests, progress is, with one exception, greater in m than in rd. In the UK, decadal change is 0.36sd in m but only 0.06sd in rd. In New Zealand, these numbers are 0.17sd and –0.04sd, respectively. In Iran, they are 0.33sd and 0.19sd, and in Hungary, 0.13sd and 0.04sd. In the USA, they are 0.20sd for m and 0.08sd for rd. The one exception is Singapore, where progress in m (0.26sd) trails progress in rd (0.33sd). As Singapore shows, a country can clearly improve rd at a rate that outpaces the m trend, but, in general, the differential rate of progress for the two subjects observed in the USA appears in other industrialized countries as well. TIMSS also reveals a larger rate of progress in grade 4 than in grade 8 m (Tables 16 and 17). In sum, differential trends on TIMSS-PIRLS by subject and by age tested in most industrialized countries for which data are available are consistent with PV's analyses of differential trends in fluid reasoning and crystallized knowledge.

PISA does not reveal similar differentials between m and rd performance among 15-year-old test-takers (Tables 16, 17, 18, and 19). Instead, PISA finds only a minor difference in trends for the two subjects within the jurisdictions it surveys (–0.04sd in m; –0.01sd in rd). That could well reflect the greater demands the PISA m test places on crystallized knowledge, a topic we explore in the discussion section.

Progress Persistence

To see whether rates of progress have persisted over time, we divide cohorts into those born before 1990 and those born as of 1990. As shown in Table 4, the median difference in m trends before and as of this date comes to –0.08sd per decade, an indication of slowing progress. Yet there is considerable variation across surveys. The upward trend in m performance on the LTT test for students at age 9 slides from 0.22sd per decade for cohorts born before 1990 to 0.16sd per decade for those born subsequently. But at age 13, the trend picks up from 0.13sd for those born before that date to 0.21sd for more recent cohorts. At age 17, the LTT m trend declines from 0.10sd per decade for cohorts born before 1990 to 0.02sd later on.

NAEP m trends rise less steeply as of 1990. In the 4th grade, the trend rises at a substantial rate of 0.65sd per decade prior to 1990 but slows to 0.21sd in the more recent period. In the 8th grade, the rate of progress slips from 0.44sd per decade for those born before 1990 to 0.08sd subsequently. On the TIMSS m test, too, the trend is steeper for cohorts born before 1990 (0.35sd) than for those born later (0.14sd). However, the steep decline on the PISA test for those born prior to 1990 (–0.36sd) moderates among those born subsequently (–0.06sd). Summing up, progress in math fails to persist at the same rate among cohorts born as of 1990 in five of the seven surveys for which a comparison can be made. The PISA m test is an exception to the dominant pattern, as is cohort performance at age 13 on the LTT test.
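The median change and the five-of-seven count for m can be checked directly from the seven pairs of trends quoted in the two paragraphs above. A minimal sketch; the survey labels are descriptive, not official identifiers.

```python
from statistics import median

# Decadal m trends (sd per decade) quoted above:
# (cohorts born before 1990, cohorts born as of 1990).
math_trends = {
    "LTT age 9": (0.22, 0.16),
    "LTT age 13": (0.13, 0.21),
    "LTT age 17": (0.10, 0.02),
    "NAEP grade 4": (0.65, 0.21),
    "NAEP grade 8": (0.44, 0.08),
    "TIMSS": (0.35, 0.14),
    "PISA": (-0.36, -0.06),
}

# Later minus earlier trend; negative values mean progress slowed.
changes = {survey: later - before for survey, (before, later) in math_trends.items()}
slowing = [survey for survey, change in changes.items() if change < 0]

print(f"median change in trend: {median(changes.values()):+.2f} sd per decade")
print(f"slower after 1990 in {len(slowing)} of {len(changes)} surveys: {', '.join(slowing)}")
```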

In rd, progress accelerates for cohorts born as of 1990. The median difference in trends before and as of that date is 0.08sd per decade. Once again, surveys vary. On the LTT test administered to those at age 9, progress is greater in the more recent period (0.15sd) than before 1990 (0.01sd). The same is true for the 4th-grade NAEP test: before 1990 (–0.04sd); later (0.07sd). In early adolescence, the LTT test again shows greater progress for the more recent period (before 0.01sd; later 0.22sd). The NAEP test does not (before 0.22sd; later 0.10sd). However, the PISA test points in a less negative direction (before –0.31sd; later –0.04sd). Among those nearing the end of schooling, the LTT test (before, 0.00sd; later 0.09sd) shows an increase; however, the NAEP test (before, 0.05sd; later –0.07sd) shows quite the opposite. In other words, progress persistence (or at least less retrogression) in rd is observed in five of seven surveys. The NAEP tests administered to those in 8th and 12th grades are the outliers.

In summary, cohorts born as of 1990, as compared to those born before that date, show a median change in trend of –0.08sd per decade in m but 0.08sd per decade in rd. Five of seven surveys reveal a more gradual upward trend in m after 1990, and five of the seven show a steeper one in rd after that date. The pattern is consistent with research on intelligence, which suggests a moderation of growth in fluid reasoning but not necessarily any change in crystallized knowledge. Still, the number of comparisons is small, and results are not uniform across surveys (Figs. 3, 4, 5, 6, 7 and 8). Moreover, in most cases we are unable to improve model fits significantly by using quadratic or cubic models rather than a linear one that assumes constant growth over time (Figs. 9, 10 and 11). This implies that change has occurred steadily over the past fifty years, undisturbed by the many apparent perturbations that might have disrupted the learning process.

The exceptions to this generalization are the NAEP surveys in both 4th grade and 8th grade m, which show a steep upward trend for birth cohorts born in the 1970s and 1980s, with only a modest increment subsequently. This exception would be easier to interpret were it not contradicted by the steady but less dramatic increase in the LTT throughout the entire period. Altogether, signals are uncertain, though recent progress seems to have been greater in rd than in m.

Heterogeneities

Gender, ethnic, and SES heterogeneities are observed. For gender, the median trend reveals a male advantage at an early age, but that fades by the end of secondary school. For ethnicity, we detect moderately slower gains for white than for Asian, African American, and Hispanic students. For SES, we observe somewhat larger gains for the lowest quartile as compared to the top quartile of the distribution when students are tested at a younger age, but that advantage attenuates for white, African American, and, to a lesser extent, Hispanic students. That pattern is not observed for Asian students, however.

Gender

Differences in performance trends by gender are small. Across all surveys, the median trend in the male achievement advantage is negative in m (–0.02sd) but positive in rd (0.02sd) (Tables 20, 21 and 22). The male advantage is more noticeable when students are tested at a younger age (0.03sd in m, 0.04sd in rd) than in early adolescence (zero in m, 0.01sd in rd), and it disappears (–0.01sd in m and zero in rd) when students are tested at an older age.

Ethnicity

In nearly all surveys, trends in the performance of nonwhite cohorts shift upward more rapidly than those of white ones (Fig. 2, Tables 5, 6, 7). Only small differences are observed between rd and m. On all m surveys, the median differences in Hispanic-white and black-white progress are each 0.11sd per decade, and the median Asian-white difference is 0.09sd. Among those of elementary-school age, the median difference is largest between Asian and white students (0.18sd). On the four m tests given to students in early adolescence, the median differences in the upward trend between white students and Asian, Hispanic, and black students are 0.12sd, 0.11sd, and 0.09sd per decade, respectively (Tables 2 and 3). White students' progress usually continues to lag in high school. On the LTT m test, the decadal change for white students (0.09sd) trails that for black (0.15sd) and Hispanic (0.16sd) students but exceeds that registered by Asian cohorts (0.05sd). On the NAEP m test, white progress is 0.09sd per decade, less than that of both Hispanic (0.16sd) and Asian (0.21sd) students but greater than that of African American students (0.07sd).
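Each subgroup gap is a difference between separately estimated trends, signed so that a positive value means the comparison group progresses faster than white students. A minimal sketch using the LTT m values for older students quoted above; the standard-error helper assumes zero covariance between the subgroup estimates, an assumption that clustered sampling within schools could violate. Function names are hypothetical.

```python
import math

# Decadal m trends (sd per decade) on the LTT near the end of schooling, as quoted above.
ltt_math_older = {"white": 0.09, "black": 0.15, "Hispanic": 0.16, "Asian": 0.05}


def trend_gap(trends: dict[str, float], group: str, reference: str = "white") -> float:
    """Difference in decadal trends; positive when `group` progresses faster."""
    return trends[group] - trends[reference]


def gap_se(se_group: float, se_reference: float) -> float:
    """SE of a difference between two independently estimated trends.

    Assumes zero covariance between the subgroup estimates.
    """
    return math.hypot(se_group, se_reference)


for group in ("black", "Hispanic", "Asian"):
    print(f"{group} - white: {trend_gap(ltt_math_older, group):+.2f} sd per decade")
```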

Differences in trends by ethnicity are about the same for rd as for m performances. In rd, the largest difference is between Asian and white students, a median difference for all surveys of 0.15sd per decade. The median white-black and white-Hispanic differences in rd are 0.09sd and 0.06sd per decade, respectively.

SES

When cohort progress is estimated by quartiles of the SES distribution, 10 of the 15 estimates show marginally larger upward trends for those in the lowest quartile than for those in the top quartile (Table 8). Four show more progress for those from the highest SES backgrounds, and one shows no difference. The trend lines vary with the point in the life cycle when the student is tested, and the differences are more favorable for disadvantaged students tested at a younger age. For those tested in m at the youngest age, the median trend is 0.08sd larger for the bottom quartile; for those tested in early adolescence, it is 0.03sd larger; and for the oldest group, the top quartile shows more progress than the lowest quartile (–0.03sd) (Table 2). The median differential in rd is large for students tested at the youngest age (0.15sd) and smaller for those tested in early adolescence (0.11sd), while for those tested toward the end of secondary education the trend is more favorable for the top quartile (–0.04sd) (Table 3). The PISA test, an outlier, shows a differential at age 15 in favor of the lowest SES quartile of 0.24sd in m and 0.29sd in rd.

Consistency of SES Effects Across Ethnic Groups

NAEP has enough observations in each wave to estimate achievement progress precisely by SES quartile for each ethnic group. We report the differences between the highest and lowest SES quartiles on NAEP tests for each ethnic group in Tables 2 and 3 (see Appendix Tables 23, 24, 25 and 26 for detailed analyses). To avoid dependence on NAEP, we also report median trends from all surveys, even though the other estimates are less precise. As elsewhere, differentials are given a positive valence if the lowest SES quartile shows greater progress than the highest quartile.

Results from both the NAEP test and the median of all surveys vary by age. When white and African American students are tested at a young age, cohort gains in the lowest SES quartile are substantially larger than those in the highest SES quartile. But this trend toward greater achievement equality attenuates by early adolescence and reverses itself by the end of secondary schooling, when the top SES quartile shows a steeper upward trend than the lowest SES quartile. Attenuation is less apparent for Hispanic students and especially for Asian students.

The downward shift in progress made by low- relative to high-SES students is quite pronounced for white students. On the NAEP, the lowest SES quartile of white students tested at a younger age outpaces the top quartile in m by 0.06sd per decade, but the advantage shifts to the highest quartile (–0.01sd) in early adolescence, increasing to –0.11sd by the end of secondary education. For the median of all surveys, these numbers are 0.04sd, 0.05sd, and –0.04sd, respectively. On rd tests, the SES differential in the rate of progress on the NAEP declines from 0.20sd at the younger age to 0.04sd in early adolescence, then reverses to –0.14sd for older students; the median for all surveys declines from 0.15sd to 0.00sd, then reverses to –0.10sd.

For African American students, a similar pattern is apparent. On NAEP's m exam, the rate of cohort progress among the lowest quartile of students tested at the younger age outpaces that of the highest quartile by 0.14sd, but that advantage fades to 0.08sd in early adolescence and then dramatically reverses itself (–0.22sd). The median trend numbers for all surveys are less dramatic but otherwise quite similar: 0.11sd, –0.03sd, and –0.09sd, respectively. On the NAEP rd test, the relative advantage of the lowest quartile in the rate of progress once again diminishes as students age: 0.15sd, 0.06sd, and –0.02sd. The median for all surveys shows a similar pattern: 0.12sd, 0.06sd, and 0.01sd.

Among Hispanic students, a similar pattern is apparent on the NAEP tests but less so in the median results from all surveys. On the NAEP m exam, students in the lowest quartile tested at a younger age outpace those in the highest quartile by 0.22sd. The advantage fades to 0.10sd in early adolescence and turns negative (–0.06sd) at the end of secondary education. On the rd test, the numbers are 0.28sd, 0.09sd, and 0.18sd. The median trends for all surveys do not vary as much in either m (0.10sd, 0.03sd, and 0.01sd) or rd (0.17sd, 0.17sd, and 0.11sd).

The progress of the lowest SES quartile among Asian students follows a somewhat different pattern from that of white and African American students. On the NAEP test, among students tested at a younger age, the gains registered by the lowest quartile exceed those of the top quartile by 0.08sd per decade in m and 0.24sd in rd. In early adolescence, the lowest quartile trails the top quartile in m (–0.03sd) but exceeds it in rd (0.12sd). Among older students, the lowest SES quartile continues to outpace the highest quartile on both the m (0.02sd) and rd (0.12sd) tests. Median trends from all surveys in both subjects show greater growth for the lowest quartile across all three age groups: in m, 0.19sd, 0.08sd, and 0.09sd; in rd, 0.06sd, 0.11sd, and 0.12sd, respectively. In sum, there is little evidence of a downward shift in the rate of progress made by low-SES (relative to high-SES) Asian students as they age.

Consistency of Effects Across Different SES Indicators

As mentioned, the SES index is constructed from student reports of parental education and possessions in the home. To ascertain whether we obtain similar results with alternative SES indicators, we estimate trends separately by parental education and by possessions in the home. In addition, we estimate SES trends by eligibility for free or reduced-price lunch and by family structure. For all of these alternative measures of SES, we generally see more progress for students from less-advantaged backgrounds than for those from more-advantaged ones (see the Appendix text and Tables 15 and 27, 28, 29, 30, 31, 32, 33, 34, and 35 for details).

Discussion

Our discussion proceeds as follows: 1) summary; 2) study limitations; 3) m-rd differentials; 4) agency effects; 5) age differentials; 6) ethnic differences; 7) SES differences; and 8) trend persistence.

Summary

Despite agency effects, many findings hold across most estimations. Most important, progress in student achievement by cohorts of students in the USA is observed in 15 of 17 surveys. Only the two PISA surveys suggest retrogression. Much greater progress is observed in m (median estimate: 0.19sd) than in rd (0.04sd). A similar differential is observed in other countries for 4th-grade performance on TIMSS m and PIRLS rd tests.

Upward trends in m are steeper for students tested at a younger age (0.31sd) than for those tested in the middle years (0.17sd) or toward the end of high school (0.06sd), but rd gains, always smaller than m gains, vary less by age tested (0.08sd, 0.03sd, and 0.02sd for the three categories, respectively). Heterogeneous effects by gender are small. By comparison, a number of ethnic heterogeneities are sizable. The trend in both rd and m is more steeply upward for cohorts of nonwhite students than for white ones. The median trend disparity between Asian and white cohorts is 0.09sd in m and 0.15sd in rd, the largest group difference in rd. The median differentials between Hispanic and white cohorts are 0.11sd in m and 0.06sd in rd, and the black-white differentials are 0.11sd in m and 0.09sd in rd. When students are tested at an earlier age, the median upward trend is greater for Asian, white, black, and Hispanic students in the lowest SES categories than for those in the highest ones, but for all groups except Asians that difference fades as students age. For the other three ethnic groups, the highest SES quartile shows a greater rate of progress when students are tested as they are about to leave secondary schooling. Among Asian students, the lowest quartile shows greater progress (except for 8th grade LTT rd and NAEP m) no matter at what age the student is tested. The rate of change for cohorts born as of 1990 differs from that for those born earlier, but the direction of the shift depends on the subject. In m, the median estimate indicates a recent flattening of the upward trend (–0.08sd), but in rd a steeper upward trend (0.08sd) is registered.

Limitations

Although this descriptive study is unable to estimate causal effects, it does address an important question—how much progress in math and reading have cohorts of US students made over the past half century? The question is of interest to principals for the very good reason that the rate of student progress is critical both for future generations and for the nation’s well-being. Results should be interpreted cautiously because large agent effects are observed. To minimize dependence on any specific data-collection strategy, we focus on consistency of results across agents and, to minimize influence of outliers on the estimates, we report median—not mean—results when summarizing information across surveys.

Estimates of heterogeneous effects are subject to some classification error, because student background characteristics are not measured in identical ways by all agents (see Tables 12, 13, 14, and 15). For the survey waves conducted by each agent, we standardize the education and possession indicators used in the SES index, but the meaning of categories may change over time. For example, a high school diploma may mean something different at the end of the period than at its beginning.
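One common way to standardize indicators within a survey wave is to z-score each indicator, average the z-scores into an index, and cut the index into quartiles. The sketch below assumes exactly that procedure, which may differ from the one used here; the function name `ses_quartiles` is hypothetical, the indicators are assumed to be already coded numerically, and each indicator is assumed to vary within the wave (a constant column would cause division by zero). Because the z-scores are computed within a wave, the resulting quartiles are relative to that wave's distribution, which is one reason a fixed category such as a diploma can shift position over time.

```python
import numpy as np

def ses_quartiles(indicators):
    """Hypothetical helper for one survey wave.

    indicators: 2-D array, rows = students, columns = numerically coded SES items
    (e.g., parental education, home possessions).
    Returns (SES index, quartile labels 1..4).
    """
    x = np.asarray(indicators, dtype=float)
    # Standardize each indicator within the wave (assumes nonzero spread).
    z = (x - x.mean(axis=0)) / x.std(axis=0)
    # Equal-weight average of standardized indicators.
    index = z.mean(axis=1)
    # Quartile cut points of the within-wave index.
    cuts = np.quantile(index, [0.25, 0.5, 0.75])
    # Count cut points at or below each value: 0..3, shifted to 1..4.
    quartile = np.searchsorted(cuts, index, side="right") + 1
    return index, quartile
```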

Math-Reading Differentials

The decadal rates of change in m (median estimate: 0.19sd) and in rd (0.04sd) closely parallel those for the two major types of intelligence. PV’s meta-analysis shows that in recent decades fluid reasoning intelligence has trended upward in industrialized societies at a rate of 0.15sd per decade, a much steeper rate than the 0.03sd per decade upward trend for crystallized knowledge. Similar m-rd differentials appear in nearly all industrialized countries that participate in both the 4th-grade TIMSS and PIRLS surveys, suggesting that the moderators are more general than family practices or educational systems specific to any one country.
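The parallel can be made concrete with the four numbers given in the preceding paragraph. The short calculation below (the helper name `trend_gaps` is only illustrative) shows that the m-rd gap among students is 0.15sd per decade against a fluid-crystallized gap of 0.12sd per decade in PV’s meta-analysis, and that in both cases the faster-growing measure rises roughly five times as fast as the slower one (ratios of 4.75 and 5).

```python
def trend_gaps(faster, slower):
    """Illustrative helper: (difference, ratio) of two decadal trends in sd per decade."""
    return faster - slower, faster / slower

# Median student trends: m versus rd.
student_gap, student_ratio = trend_gaps(0.19, 0.04)
# PV's meta-analytic trends: fluid versus crystallized intelligence.
iq_gap, iq_ratio = trend_gaps(0.15, 0.03)

print(f"students: gap {student_gap:.2f} sd, ratio {student_ratio:.2f}")
print(f"PV: gap {iq_gap:.2f} sd, ratio {iq_ratio:.2f}")
```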

The parallel between the trends observed here and those in PV’s meta-analysis of the two major types of intelligence is probably more than coincidental. The typical m test places greater demands on fluid reasoning intelligence than the typical rd exam does. Mathematics has two domains: language and content (Hole et al., 2018; Shoenfield, 1967). M language consists of definitions, such as the symbols in the equation X = 2 + 2, where X refers to an unknown number, = to “equal,” and + to “plus.” M content consists of theorems about the logical relationships of symbols to one another. One acquires crystallized knowledge as one learns the language of mathematics. But once m language is learned, fluid reasoning is used to manipulate symbols, to deduce theorems from defined axioms, and to solve formulas on tests aligned to school curricula. However, applying formulas and theorems to real-life problems demands crystallized knowledge of the social and physical environment that goes beyond the calculation of abstract relationships. By contrast, rd performance depends heavily on crystallized knowledge.

Research shows fluid intelligence to be more strongly associated with mathematics than with reading (Peng et al., 2019; Spinath et al., 2010; Sternberg et al., 2008), probably because items that test m content require reasoning ability: they call for the manipulation of abstract rules and principles (Ackerman & Lohman, 2003; Blair et al., 2005; Geary, 2011). Items on rd tests demand comprehension of elements of the empirical world, including the meaning of words, sentences, and paragraphs, and features of the external environment (Barbarin et al., 2008). A longitudinal study of preschool children finds that emergent school vocabulary is associated with gains in verbal intelligence (crystallized knowledge) but not with gains in fluid intelligence (van Tuijl & Leseman, 2007). The same study shows emergent m skills to be associated with gains in fluid reasoning. Studies in neurobiology and brain imaging have identified a distinction between fluid and crystallized intelligence (Blair, 2006; Horn & McArdle, 2007) and show the former to be associated with the brain’s prefrontal cortex. Waltz et al. (1999) find that damage to the prefrontal cortex appears to have little effect on crystallized intelligence.

PV suggest that the differential rate of change for the two types of intelligence could be due to improved nutrition and health care (Lynn, 2009b), disease containment (Eppig et al., 2010; Van Panhuis et al., 2013), and reductions in environmental risks such as lead poisoning (Kaufman et al., 2014) and air pollution (Chay & Greenstone, 2003). All these improvements appear to enhance the brain’s analytical capacities during the prenatal and infant stages of the life cycle. For example, “fending off aversive pathogens necessitates considerable amounts of energy, thereby removing important resources from brain development in early childhood” (Pietschnig & Voracek, 2015, p. 293). If student performance on tests of m content depends more on fluid reasoning than on crystallized knowledge, while performance on tests of rd content depends largely on the acquisition of crystallized knowledge, then the greater cohort progress in m than in rd may be due to factors operating in early childhood and even before birth, when brain capacity is most malleable.

Agency Effects

The four survey agents asked to track changes in student achievement are to be applauded for their ambition, objectivity, resourcefulness, and endurance. But the answer to the basic question, how much progress, remains elusive. Agency effects are substantial in part because temporal linkage via bridging questions (items repeated across waves) is not an exact science. Even more important, agents are responding to direction from principals with multiple, unranked, and not always consistent objectives. Although principals agree that tracking trends is one of their objectives, at least some of them also want the agents to measure student performance against the perceived contemporary curriculum, to compare performances across countries, and to estimate student preparation for life beyond schooling. As a result, trend estimates are likely to vary by an amount well beyond what can be attributed to random sampling variation. At early adolescence, the median rd estimate varies from –0.02sd per decade on the PISA test to 0.12sd per decade on the NAEP test, a range of 0.14sd per decade. In m, the median estimate ranges widely, from a retrogression of –0.10sd per decade on the PISA m test to a gain of 0.27sd per decade on the NAEP m test, a range of 0.37sd per decade. Depending on which agency’s estimate is taken as authoritative, a public commentator can either lament a downward dive in American education or celebrate extraordinary advances.
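Why linkage through bridging items is imprecise can be seen in one standard common-item procedure, mean-sigma linking, in which the difficulties of the repeated items on the old and new scales determine the constants that place new-wave results on the old scale. The sketch below illustrates that textbook method only; it is not a claim about the procedures any of the four agents actually uses, and the function name `mean_sigma_link` and all item difficulties are hypothetical. When one bridging item becomes slightly harder in the new wave, the linking constants change, and so would every trend estimate expressed on the linked scale.

```python
import numpy as np

def mean_sigma_link(b_anchor_old, b_anchor_new):
    """Hypothetical helper: mean-sigma linking constants from bridging-item difficulties.

    b_anchor_old: difficulties of the repeated items on the earlier wave's scale.
    b_anchor_new: difficulties of the same items as estimated in the new wave.
    Returns (A, B) such that a score on the new scale maps to A * score + B on the old scale.
    """
    old = np.asarray(b_anchor_old, dtype=float)
    new = np.asarray(b_anchor_new, dtype=float)
    A = old.std() / new.std()          # match the spread of the anchor difficulties
    B = old.mean() - A * new.mean()    # then match their mean
    return A, B

# Hypothetical bridging items whose new-wave difficulties are an exact rescaling.
old = np.array([-1.0, -0.2, 0.3, 1.1, 1.8])
new = (old - 0.5) / 1.2
A, B = mean_sigma_link(old, new)       # recovers A = 1.2, B = 0.5 up to rounding

# The same items, except that one becomes harder in the new wave (item drift).
drifted = new.copy()
drifted[4] += 0.3
A_drift, B_drift = mean_sigma_link(old, drifted)
print(A, B, A_drift, B_drift)
```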

NAEP and TIMSS-PIRLS Outliers

Two surveys, the NAEP and the TIMSS-PIRLS, show the most cohort progress. Both are designed to test knowledge and skills against the contemporary curriculum. Both agencies could therefore be overestimating the rate of progress if the current curriculum sets lower expectations than earlier curricula did. In other words, both NAEP and TIMSS-PIRLS could be adjusting the difficulty of their tests downward in the process of aligning questions with a contemporary curriculum.

PISA Math Outlier

The negative trend in PISA m (–0.10sd per decade) stands out as an exception to the positive m trends estimated by the other agents. The result is not limited to the USA. Across the industrialized world, PISA finds hardly any gains in either m or rd, even while TIMSS shows large gains in m at 4th grade. Meanwhile, PIRLS finds only small gains in 4th-grade rd. That the TIMSS-PIRLS findings show sharp differences between subjects in many countries, while PISA finds hardly any, suggests that PISA places relatively equal emphasis on crystallized knowledge in its m and rd tests, whereas the TIMSS m test does not.

Since PISA testing only began with the cohort born in 1985, it might be thought that PISA m has identified a recent downturn in performance at age 15. But other surveys, though at times reporting diminished m progress for cohorts born as of 1990, do not show the same retrogression (Table 4). Another possible explanation could be the reliability of tests administered at age 15. Some have argued that contemporary high-school students, even those as young as age 15, are taking low-stakes tests less seriously than cohorts did in the past (Rindermann & Thompson, 2013). As testing has become increasingly pervasive and controversial in popular discourse, older students may increasingly see PISA math as a test that can be treated less seriously than other high-stakes tests (SAT, ACT, Advanced Placement, and the like). This phenomenon could generate the appearance of deterioration in student achievement. But if the cause is increasing test skepticism, it should also appear on LTT and NAEP tests administered to still older students. It is true that cohorts of these older students do not display the same growth rate as cohorts tested at a younger age, but neither do they show the pronounced negative trend that PISA m reports.

It is more likely that the PISA m exception is due to the greater demands PISA places on crystallized knowledge relative to fluid reasoning. Given PISA’s focus on “preparation for life,” its tests are less focused than TIMSS’ on reasoning, solving routine problems, and applying concepts to numerical problems (Dossey et al., 2006). According to Hole et al. (2018, p. 15), “more than two-thirds of the PISA mathematics items are independent of both mathematical results (theorems) and formulas.” Both they and Wu (2009b) conclude that m theory is tested more frequently by TIMSS than by PISA. Wu (2009b, p. 7) says “around half of the TIMSS items are not likely to appear in the PISA test. This proportion is surprisingly high. It could mean that a large part of mathematics taught in schools is not included in the PISA test.” Nohara and Goldstein (2001) find that 97% of PISA items deal with real-life situations (items requiring knowledge of the world), whereas only 48% of NAEP items and 44% of TIMSS items are so classified. Others also report that PISA contains a disproportionately larger share of items classified as data items than either NAEP or TIMSS (Grønmo & Olsen, 2008; Neidorf et al., 2006; Nohara & Goldstein, 2001; Wu, 2009a). Wu (2009a) says the difference in the content of the PISA and TIMSS tests is the most likely explanation for differential performances on the two tests in the countries she observed. Wu (2009a, pp. 44–45) also reports “there is a very high correlation between PISA mathematics and PISA reading scores. . . . The overlap between document reading (e.g., graphs, charts and tables) and data interpretation in mathematics becomes blurred.”

PISA trends differ from those reported by other surveys in still another important respect: PISA shows much less retrogression for students in the bottom than in the top quarter of the SES distribution. The PISA test could be the one and only honest broker, telling the truth about the “best and the brightest” in the USA. Or it could be that the bar set for those for whom it has the highest expectations has been drifting upward. PISA could be inadvertently setting steadily higher standards for what it takes to be prepared to assume high status positions in the larger society, as demands for high performance appear to shift upward.

At the same time, PISA’s desire to expand its reach across the globe could inadvertently encourage the selection of less-demanding questions for those preparing for low-status occupations. There is no reason to think that PISA is deliberately adjusting its tests in this manner. Still, PISA has been able, with increasing success, to enlist the participation of non-OECD countries with a high density of low-SES students (NCES, 2021b). Further, PISA, more than the other agents, has cut the link between curriculum and testing, leaving the agency to decide for itself what is needed to be ready for life at age 15. There is no direct evidence that the difficulty of PISA test questions has been drifting upward for high-SES students and downward for low-SES ones, but the trends by SES that PISA reports are strikingly different from those observed by the other agents. Whatever the cause of its exceptional findings, the range of results across agents underscores the danger of ignoring agency effects when estimating trends.

Age Effects

We generally observe steeper upward trends in m for students tested at a younger age than for those tested at an older one. The age differential is more pronounced in m than in rd. TIMSS also shows much steeper m gains in other industrialized countries for students tested at a younger age than for those tested in early adolescence. All these findings are consistent with intelligence research suggesting that gains in fluid reasoning are realized in early childhood, perhaps because of improvements in nutrition and reductions in disease and environmental hazards (Lynn, 2013; Pietschnig & Voracek, 2015). Others have found that the association between fluid intelligence and academic performance decreases as the child grows older (Ackerman & Lohman, 2003; Willingham, 1974). The early stages of developing foundational skills in numerical knowledge and calculation involve fluid intelligence (Fuchs et al., 2006; Östergren & Träff, 2013). In later stages, children can retrieve mathematical facts for the foundational skills from memory, thereby reducing the role of fluid intelligence (Locuniak & Jordan, 2008), though more complex mathematical tasks such as fractions and algebra, which build on foundational skills, continue to place demands on fluid reasoning (Fuchs et al., 2006; Jordan et al., 2013).

Age differentials might be driven by improved test-taking instruction combined with better coordination between instruction and test items (teaching to the test) among younger students. But these are low-stakes tests not used for accountability purposes at the student, teacher, or school level. Nor can this explanation account for the larger gains in m relative to rd in the many industrialized countries that do not have school accountability testing. O’Keefe and Rodgers (2020) show that Flynn effects could be overestimated in studies that compare students of different ages on age-normed tests. That bias is unlikely to affect the results reported here, since changes over time are estimated by comparing cohorts born at different times once they have reached the same age or grade level.