Abstract

This paper presents the first functional encryption \((\textsf{FE})\) scheme for the attribute-weighted sum functionality that supports the uniform model of computation. In such an FE scheme, encryption takes as input a pair of attributes (x, z) where x is public and z is private. A secret key corresponds to some weight function f, and decryption recovers the weighted sum f(x)z. In our scheme, both the public and private attributes can be of arbitrary polynomial lengths that are not fixed at system setup. The weight functions are modelled as \(\text {Logspace Turing machines}\). Prior schemes could only support non-uniform Logspace. The proposed scheme is proven adaptively simulation secure under the well-studied symmetric external Diffie–Hellman assumption against an arbitrary polynomial number of secret key queries both before and after the challenge ciphertext. This is the best possible security notion that could be achieved for FE. On the technical side, our contributions lie in extending the techniques of Lin and Luo [EUROCRYPT 2020] devised for indistinguishability-based payload hiding attribute-based encryption for uniform Logspace access policies and the “three-slot reduction” technique for simulation-secure attribute-hiding FE for non-uniform Logspace devised by Datta and Pal [ASIACRYPT 2021] to the context of simulation-secure attribute-hiding FE for uniform Logspace.


1 Introduction

Functional encryption (FE), formally introduced by Boneh et al. [24] and O’Neill [69], redefines the classical encryption procedure with the motivation to overcome the limitation of the “all-or-nothing” paradigm of decryption. In a traditional encryption system, there is a single secret key such that a user given a ciphertext either recovers the whole message or learns nothing about it, depending on whether the secret key is available. In contrast, FE provides fine-grained access control over encrypted data by generating function-specific secret keys according to the functions of the encrypted data that are to be disclosed. More specifically, in a public-key FE scheme for a function class \(\mathcal {F}\), there is a setup authority which produces a master secret key and publishes a master public key. Using the master secret key, the setup authority can derive secret keys or functional decryption keys \(\textsf{SK}_f\) associated with functions \(f \in \mathcal {F}\). Anyone can use the master public key to encrypt a message \(\textsf{msg}\) from a specified message space \(\mathbb {M}\), producing a ciphertext \(\textsf{CT}\). At decryption, the ciphertext \(\textsf{CT}\) together with a secret key \(\textsf{SK}_f\) recovers the function value \(f(\textsf{msg})\), while revealing no other information about \(\textsf{msg}\). Moreover, the security of FE requires collusion resistance, meaning that any polynomial number of secret keys together cannot gather more information about an encrypted message than the union of what each of the secret keys can learn individually.

By now, there is a plethora of exciting works on \(\textsf {FE}\). These works can be broadly classified into two categories. The first line of work attempted to build \(\textsf{FE}\) for general functionalities [12,13,14,15,16,17, 20, 23, 25,26,27,28, 34, 41,42,50, 52, 53, 55, 60, 74]. However, those constructions were either secure only against bounded collusions, extremely inefficient, or both. To overcome these limitations, a second line of work designs efficient FE schemes that tolerate arbitrary collusions of users for practically relevant functionalities, e.g., linear/quadratic functions [1,2,3,4,5,6,7,8,9,10,11, 21, 29, 32, 33, 35, 36, 38, 40, 54, 56, 61, 63,64,65,66, 70,71,72, 76, 77]. In this work, we advance the state of the art along the latter research direction.

\(\textsf {FE}\) for Attribute-Weighted Sum Recently, Abdalla et al. [8] and Datta and Pal [38] studied FE schemes for a new class of functionalities termed “attribute-weighted sums” (\(\textsf{AWS}\)). This is a generalization of inner product functional encryption (IPFE) [3, 11]. In such a scheme, an attribute pair (x, z) is encrypted using the master public key of the scheme, where x is a public attribute (e.g., demographic data) and z is a private attribute containing sensitive information (e.g., salary, medical condition, loans, college admission outcomes). A recipient holding a secret key for a weight function f can learn the attribute-weighted sum f(x)z. The attribute-weighted sum functionality arises naturally in several real-life applications. For instance, as discussed by Abdalla et al. [8], if the weight function f is a Boolean predicate, then summing f(x)z over all users and dividing by the number of users whose attribute x satisfies f yields the average of z over those users. Important practical scenarios include average salaries of minority groups holding a particular job (\(z = \) salary) and approval ratings of an election candidate amongst specific demographic groups in a particular state (\(z = \) rating).

The works of [8, 38] consider a more general version of the notion in which the domain and range of the weight functions are vectors. A pair \((\varvec{x}, \varvec{z})\) of public/private attribute vectors is encrypted to a ciphertext \(\textsf{CT}\), and a secret key \(\textsf{SK}_f\) generated for a weight function f allows a recipient to learn \(f(\varvec{x})^{\top } \varvec{z}\) from \(\textsf{CT}\) without leaking any other information about the private attribute \(\varvec{z}\).
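As a plain illustration of what a key for f is meant to reveal, the following Python sketch computes \(f(\varvec{x})^{\top } \varvec{z}\) entirely in the clear. No encryption is involved. The weight function `f` and the attribute values are hypothetical and serve only to show the vector form of the functionality.

```python
from typing import Callable, Sequence


def attribute_weighted_sum(f: Callable[[Sequence[int]], Sequence[int]],
                           x: Sequence[int], z: Sequence[int]) -> int:
    """Return f(x)^T z, the only value a key for f should reveal about z."""
    weights = f(x)
    if len(weights) != len(z):
        raise ValueError("f(x) and z must have the same length")
    return sum(w * zi for w, zi in zip(weights, z))


# Hypothetical weight function on a public attribute x = (age, region):
# it outputs a 0/1 weight vector selecting entries of the private vector z.
def f(x):
    age, region = x
    return [1 if age >= 30 else 0, 1 if region == 2 else 0, 1]


print(attribute_weighted_sum(f, (35, 1), [100, 200, 7]))  # prints 107
```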

The FE schemes of [8, 38] support an expressive function class of arithmetic branching programs (ABPs) which captures non-uniform Logspace computations. Both schemes were built in asymmetric bilinear groups of prime order and are proven secure in the simulation-based security model, which is known to be the desirable security model for FE [24, 69], under the (bilateral) k-Linear (k-Lin)/(bilateral) Matrix Diffie–Hellman (\(\textsf{MDDH}\)) assumption. The FE scheme of [8] achieves semi-adaptive security, where the adversary is restricted to making secret key queries only after making the ciphertext queries, whereas the FE scheme of [38] achieves adaptive security, where the adversary is allowed to make secret key queries both before and after the ciphertext queries.

However, as mentioned above, ABP is a non-uniform computational model. As a result, in both FE schemes [8, 38], the lengths of the public and private attribute vectors must be fixed at system setup. This is a clear bottleneck in applications where the computation runs over attributes whose lengths vary widely among ciphertexts and are not known at system setup. For instance, suppose a government hires an external audit service to survey the average salary of employees in different job categories across various companies, in order to resolve salary discrepancies. The companies create salary databases (X, Z) where \(X = (x_i)_i\) contains public attributes \(x_i = (\text {job title}, \text {department}, \text {company name})\) and \(Z = (z_i)_i\) contains private attributes \(z_i = \text {salary}\). To facilitate the audit without revealing individual salaries (the private attributes) to the auditor, each company encrypts its own database (X, Z) using an FE scheme for AWS. The government gives the auditor a functional secret key \(\textsf{SK}_f\) for a function f that takes as input the public attributes X and outputs 1 exactly for those \(x_i\) whose “job title” matches a particular job, say manager. The auditor decrypts the ciphertexts of the various companies using \(\textsf{SK}_f\) and computes the average salary of employees in that job category across those companies. If the existing FE schemes for AWS [8, 38], which support only non-uniform computations, are used here, then the government must fix an upper bound on the number of employees in every company for the system to remain usable. Moreover, the size of every ciphertext ever generated scales with that upper bound, even when some companies have far fewer employees at the time of encryption. This motivates us to consider the following problem.
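The audit scenario can be sketched in the clear to show which value the auditor is meant to obtain. All names (`is_manager`, `weighted_sum`, `average_over_companies`) and all data are hypothetical. The two databases have different lengths, which a scheme with lengths fixed at setup can only handle by padding every ciphertext to an upper bound. The number of matching records is computed from the public attributes alone, because X is public.

```python
from fractions import Fraction


def is_manager(record):
    # Hypothetical weight function f: 1 if the job title is "manager", else 0.
    job_title, _department, _company = record
    return 1 if job_title == "manager" else 0


def weighted_sum(f, X, Z):
    # sum_i f(x_i) * z_i: the value the auditor learns from one company's ciphertext.
    if len(X) != len(Z):
        raise ValueError("X and Z must have the same length")
    return sum(f(x) * z for x, z in zip(X, Z))


def average_over_companies(f, databases):
    total = sum(weighted_sum(f, X, Z) for X, Z in databases)
    # X is public, so the number of matching records is computed in the clear.
    count = sum(f(x) for X, _ in databases for x in X)
    return Fraction(total, count)


# Hypothetical databases of different lengths (3 employees and 1 employee).
X_a = [("manager", "sales", "A"), ("engineer", "rd", "A"), ("manager", "hr", "A")]
Z_a = [90, 70, 110]
X_b = [("manager", "ops", "B")]
Z_b = [100]
databases = [(X_a, Z_a), (X_b, Z_b)]
print(average_over_companies(is_manager, databases))  # prints 100
```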

Open problem Can we construct an \(\textsf{FE}\) scheme for \(\textsf{AWS}\) in some uniform computational model capable of handling public/private attributes of arbitrary length?

Our results This work resolves the above open problem. For the first time in the literature, we formally define and construct an FE scheme for the unbounded \(\textsf{AWS}\) (\(\textsf{UAWS}\)) functionality, where the setup depends only on the security parameter of the system and the weight functions are modeled as \(\text {Turing machines}\). The proposed \(\textsf{FE}\) scheme supports both public and private attributes of arbitrary lengths. In particular, the public parameters of the system are completely independent of the lengths of attribute pairs. Moreover, the ciphertext size is compact, meaning that it does not grow with the number of occurrences of a specific attribute in the weight functions, which are represented as \(\text {Logspace Turing machines}\). As a special case, we also obtain an FE scheme for attribute-weighted sums where the weight functions are modelled as deterministic finite automata (DFA). The schemes are adaptively simulation secure against the release of an unbounded (polynomial) number of secret keys both before and after the challenge ciphertext. As noted in [24, 69], simulation security is the best possible and most desirable security model for FE. Moreover, simulation-based security implies indistinguishability-based security, but the converse does not hold in general.

Our FE for \(\textsf{UAWS}\) is proven secure in the standard model based on the symmetric external Diffie–Hellman (SXDH) assumption in the asymmetric prime-order pairing groups. Our main result in the paper is summarized as follows.

Theorem 1

(Informal) Assuming the \(\textsf{SXDH}\) assumption holds in asymmetric pairing groups of prime order, there exists an adaptively simulation secure \(\textsf{FE}\) scheme for the attribute-weighted sum functionality with the weight functions modeled as \(\text {Logspace Turing machines}\) such that the lengths of the public and private attributes are unbounded and can be chosen at the time of encryption, and the ciphertexts are compact with respect to multiple occurrences of attributes in the weight functions.

Viewing \(\textsf{IPFE}\) as a special case of \(\textsf{FE}\) for \(\textsf{AWS}\), we also obtain the first adaptively simulation secure \(\textsf{IPFE}\) scheme for unbounded-length vectors (UIPFE), i.e., the length of the vectors is not fixed at setup. Observe that all prior simulation secure \(\textsf{IPFE}\) schemes [8, 10, 38, 76] could only support bounded-length vectors, i.e., the lengths must be fixed at setup. On the other hand, the only known construction of UIPFE [71] is proven secure in the indistinguishability-based model.

The proposed FE construction is semi-generic and extends the frameworks of Lin and Luo [62] and Datta and Pal [38]. Lin and Luo [62] develop an adaptively secure attribute-based encryption (ABE) scheme for Logspace Turing machines, proven secure in the indistinguishability-based model. Although the input length of their ABE is unbounded, an ABE is an “all-or-nothing” primitive that fully discloses the message to any secret key generated for an accepting policy. Further, the ABE of [62] is only payload hiding, meaning that the ciphertexts themselves can leak sensitive information about the associated attributes. In contrast, our FE for UAWS provides finer-grained access: the ciphertexts reveal nothing about the private part of the associated attributes beyond their weighted sums. Our FE construction relies on two building blocks: an arithmetic key garbling scheme (AKGS) for \(\text {Logspace Turing machines}\), which is an information-theoretic tool, and a function hiding (bounded) slotted \(\textsf{IPFE}\) scheme, which is a computational primitive. An important motivation of [62] is to achieve compact ciphertexts for ABEs. In other words, they remove the so-called one-use restriction of prior adaptively secure ABEs [19, 30, 31, 57,58,59, 67, 68, 75] by replacing the core information-theoretic step with the computational primitive of function hiding slotted IPFE. The FE of [38] achieves this property for non-uniform computations by developing a three-slot encryption technique. Specifically, three slots are used to simulate the label functions obtained from the underlying AKGS garbling for pre-ciphertext secret keys. Note that the three-slot encryption technique is an extension of dual system encryption methodologies [57, 58, 73].
In this work, we extend their frameworks [38, 62] to avoid the one-use restriction in FE for UAWS, where the weights are computed by \(\text {Logspace Turing machines}\). Implementing such three-slot techniques in the uniform model is non-trivial. The main reason is that for ABPs [38], the garbling randomness can be sampled knowing only the size of the ABP, so the garbling algorithm can be run while generating secret keys. In contrast, for \(\textsf{AKGS}\) for \(\text {Logspace Turing machines}\), the garbling randomness depends on the size of the \(\text {Turing machine}\) as well as on its input length. Consequently, the garbling cannot be executed in either the key generation or the encryption algorithm, because the information about the garbling randomness is split between these two algorithms. We tackle this by developing a more advanced three-slot encryption technique with distributed randomness, which enables us to carry out this procedure for \(\text {Logspace Turing machines}\).

Our FE for UAWS is a one-slot scheme, meaning that a single public–private attribute pair is processed in one encryption. An unbounded-slot FE for UAWS [8] allows an unbounded number of such pairs to be encrypted in a single ciphertext. Abdalla et al. [8] devise a generic transformation for bootstrapping a one-slot scheme into an unbounded-slot scheme. However, this transformation only works if the underlying one-slot scheme is semi-adaptively secure [38]. Thus, if we restrict our scheme to semi-adaptive security, then our one-slot FE scheme can be bootstrapped to support unbounded slots using such transformations [8, 38].

Current vs. preliminary versions A preliminary version [39] of this work appeared in Asiacrypt 2022. This paper contains substantial technical contributions beyond the preliminary version [39]. The previous version contains only the constructions of our single-key, single-ciphertext secure one-slot FE scheme and of the one-slot FE scheme for Logspace, with no formal treatment of the security analysis of these protocols. It presents only a very high-level idea of the security analysis, so most of our technical contributions are not formalized there. We emphasize that formalizing a proper sequence of hybrid experiments for the security analysis is crucial for understanding the technical challenges and the solutions we provide in the current version. In particular, we not only give a proof sketch for each security analysis but also depict the hybrid experiments in several tables (see Sects. 5 and 6), which give a concrete picture of the steps needed to prove the adaptive simulation security of our schemes. For example, the three-slot reduction mechanism devised in this paper for handling the pre-ciphertext keys of the one-slot FE scheme for \(\text {Logspace Turing machines}\) is described in Tables 14, 15 and 16. Moreover, in Sect. 7 of the current version, we build a simpler FE scheme for attribute-weighted sums where the weight functions are deterministic finite automata (DFA). Note that weight functions realized by DFA capture many real-life applications involving computation on unbounded data (or attributes), such as network logging, tax returns and virus scanners. Hence, for such applications our FE for DFA is more suitable than the FE for \(\text {Logspace Turing machines}\).

Organization We give a detailed technical overview of our results in Sect. 2. We provide useful notation, related definitions and complexity assumptions in Sect. 3. We describe the AKGS construction for evaluating Turing machines via a sequence of matrix multiplications in Sect. 4. Our construction of a single-key, single-ciphertext secure \(\textsf{FE}\) scheme for \(\textsf{UAWS}\) appears in Sect. 5, and its complete security analysis in Sect. 5.2. Next, we build our full-fledged 1-slot \(\textsf{FE}\) scheme for \(\textsf{UAWS}\) and prove its security in Sect. 6. We present our FE scheme for attribute-weighted sums for DFA in Sect. 7.

2 Technical overview

We now give an overview of our techniques for achieving an \(\textsf{FE}\) scheme for the \(\textsf{AWS}\) functionality that supports the uniform model of computation. We consider prime-order bilinear pairing groups \((\mathbb {G}_1, \mathbb {G}_2, \mathbb {G}_{T }, g_1, g_2, e)\) with generator \(g_{T } = e(g_1, g_2)\) of \(\mathbb {G}_{T }\), and we denote by \([\![a]\!]_i\) the element \(g_i^a \in \mathbb {G}_i\) for \(i \in \{1, 2, T \}\). For any vector \(\varvec{z}\), the k-th entry is denoted by \(\varvec{z}[k]\), and [n] denotes the set \(\{1, \ldots , n\}\).

The unbounded AWS functionality In this work, we consider an unbounded \(\textsf{FE}\) scheme for the \(\textsf{AWS}\) functionality for \(\text {Logspace Turing machines}\) (i.e., the class \(\textsf {L}\)), written \(\textsf{UAWS}^{\textsf {L}}\) for short. More specifically, the setup takes as input only the security parameter of the system and is independent of every other parameter, e.g., the lengths of the public and private attributes. \(\textsf{UAWS}^{\textsf {L}}\) generates secret keys \(\textsf{SK}_{(\varvec{M}, \mathcal {I}_{\varvec{M}})}\) for a tuple of \(\text {Turing machines}\) \(\varvec{M} = \{M_k\}_{k \in \mathcal {I}_{\varvec{M}}}\), where the index set \(\mathcal {I}_{\varvec{M}}\) may contain an arbitrary number of \(\text {Turing machines}\) \(M_k \in \textsf {L}\). The ciphertexts are computed for a pair of public–private attributes \((\varvec{x}, \varvec{z})\) whose lengths are arbitrary and are chosen at the time of encryption. Precisely, the public attribute \(\varvec{x}\) of length N comes with a polynomial time bound \(T= \textsf{poly}(N)\) and a logarithmic space bound \(S\), and the private attribute \(\varvec{z}\) is an integer vector of length n. At decryption, if \(\mathcal {I}_{\varvec{M}}\subseteq [n]\), then the integer value \(\sum _{k \in \mathcal {I}_{\varvec{M}}} M_k(\varvec{x})\varvec{z}[k]\) is revealed. Since \(M_k(\varvec{x})\) is binary, the summation selects and adds those entries of \(\varvec{z}\) for which the corresponding \(\text {Turing machine}\) accepts the public attribute \(\varvec{x}\). On the other hand, if \(\mathcal {I}_{\varvec{M}}\) is not contained in [n], then decryption does not recover any meaningful information. An appealing feature of the functionality is that a secret key \(\textsf{SK}_{(\varvec{M}, \mathcal {I}_{\varvec{M}})}\) can decrypt ciphertexts of unbounded-length attributes with unbounded time and (logarithmic) space bounds.
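The decryption output of \(\textsf{UAWS}^{\textsf {L}}\) can be written down directly as an ideal functionality, with no cryptography involved. In this minimal sketch, each \(M_k\) is replaced by a hypothetical Python predicate returning 0 or 1, and the time and space bounds \(T, S\) are not enforced. The function name `uaws_value` is ours. Indices in \(\mathcal {I}_{\varvec{M}}\) are 1-based, as in [n]. The value `None` models the case \(\mathcal {I}_{\varvec{M}} \not\subseteq [n]\).

```python
from typing import Callable, Dict, Optional, Sequence

# Stand-in for a Logspace Turing machine: maps a public bit string to 0 or 1.
Machine = Callable[[Sequence[int]], int]


def uaws_value(machines: Dict[int, Machine], x: Sequence[int],
               z: Sequence[int]) -> Optional[int]:
    """Ideal UAWS^L output for a key (M, I_M) and a ciphertext for (x, z).

    `machines` maps each index k in I_M (1-indexed, as in [n]) to M_k.
    Returns None when I_M is not contained in [n] ("no meaningful output").
    """
    n = len(z)
    if any(not 1 <= k <= n for k in machines):
        return None
    # Each accepting M_k selects z[k]; rejecting machines contribute 0.
    return sum(machines[k](x) * z[k - 1] for k in machines)


# Hypothetical key with I_M = {1, 3}.
key = {
    1: lambda x: 1 if sum(x) % 2 == 0 else 0,  # accept an even number of ones
    3: lambda x: x[0],                          # accept inputs starting with 1
}
print(uaws_value(key, [1, 0, 1], [5, 6, 7]))  # both accept: prints 12
print(uaws_value(key, [1, 0, 1], [5, 6]))     # 3 is not in [2]: prints None
```

The same key works for any length of \(\varvec{x}\) and for any \(\varvec{z}\) with \(n \ge 3\). This is the unboundedness the scheme must support cryptographically.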
In contrast, the existing \(\textsf{FE}\) schemes for \(\textsf{AWS}\) [8, 38] are designed for non-uniform computations: they can only handle attributes of bounded lengths, and their public parameters grow linearly with these lengths. Next, we describe the formulation of \(\text {Turing machines}\) in \(\textsf {L}\) used in \(\textsf{UAWS}^{\textsf {L}}\).

Turing machines formulation We introduce the notation for Logspace Turing machines (\(\textsf{TM}\)) over binary alphabets. A \(\text {Turing machine}\) \(M = (Q, \varvec{y}_{\textsf{acc}}, \delta )\) consists of Q states with initial state 1, a characteristic vector \(\varvec{y}_{\textsf{acc}} \in \{0, 1\}^Q\) of the accepting states, and a transition function \(\delta \). Given an input \((\varvec{x}, N, T, S)\) with length N and time and space bounds \(T, S\), the computation \(M|_{N, T, S}(\varvec{x})\) runs for \(T\) steps. It passes through configurations \((\varvec{x}, (i, j, \varvec{W}, q))\), where \(i \in [N]\) is the input tape pointer, \(j \in [S]\) is the work tape pointer, \(\varvec{W} \in \{0, 1\}^{S}\) is the content of the work tape, and \(q \in [Q]\) is the current state. The initial internal configuration is \((1, 1, {{\textbf {0}}}_{S}, 1)\). The transition function \(\delta \) determines whether, on input \(\varvec{x}\), the machine can move from one internal configuration \((i, j, \varvec{W}, q)\) to the next \((i', j', \varvec{W}', q')\), namely if \(\delta (q, \varvec{x}[i], \varvec{W}[j]) = (q', w', \mathrm {\varDelta } i, \mathrm {\varDelta } j)\). In other words, on input a state q, an input bit \(\varvec{x}[i]\) and a work tape bit \(\varvec{W}[j]\), the transition function \(\delta \) outputs three things. The first is the next state \(q'\). The second is the new bit \(w'\), which overwrites \(w = \varvec{W}[j]\), so that \(\varvec{W}'[j] = w'\) and \(\varvec{W}'[j''] = \varvec{W}[j'']\) for all \(j'' \ne j\). The third is the pair of directions \(\mathrm {\varDelta } i, \mathrm {\varDelta } j\in \{0, \pm 1\}\) in which to move the input and work tape pointers, so that \(i' = i + \mathrm {\varDelta } i\) and \(j' = j + \mathrm {\varDelta } j\).
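The following Python sketch simulates \(M|_{N, T, S}(\varvec{x})\) directly on internal configurations \((i, j, \varvec{W}, q)\). It rests on assumptions of our own choosing about edge cases. The machine runs exactly \(T\) steps and accepts iff the final state is accepting. A missing transition, or a pointer leaving [N] or [S], counts as rejection (output 0). The dictionary encoding of \(\delta \) and the example machine are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

# delta maps (q, input bit, work bit) -> (q', w', di, dj); states are 1..Q.
Delta = Dict[Tuple[int, int, int], Tuple[int, int, int, int]]


def run_tm(delta: Delta, y_acc: Sequence[int], x: Sequence[int], T: int, S: int) -> int:
    """Compute M|_{N,T,S}(x): run exactly T steps from (1, 1, 0_S, 1)."""
    N = len(x)
    i, j, q = 1, 1, 1
    W: List[int] = [0] * S
    for _ in range(T):
        step = delta.get((q, x[i - 1], W[j - 1]))
        if step is None:
            return 0                      # no valid transition: reject
        q, w_new, di, dj = step
        W[j - 1] = w_new                  # overwrite only the scanned work cell
        i, j = i + di, j + dj
        if not (1 <= i <= N and 1 <= j <= S):
            return 0                      # pointer left [N] or [S]: reject
    return y_acc[q - 1]                   # accept iff the final state is accepting


# Hypothetical 4-state machine accepting iff x[1] = x[2] = 1 (needs N >= 2):
# state 1 copies x[1] to the work tape, state 2 compares x[2] with it,
# states 3 (accept) and 4 (reject) loop in place.
delta: Delta = {}
for b in (0, 1):
    for w in (0, 1):
        delta[(1, b, w)] = (2, b, 1, 0)
        delta[(2, b, w)] = (3 if b == 1 and w == 1 else 4, w, 0, 0)
        delta[(3, b, w)] = (3, w, 0, 0)
        delta[(4, b, w)] = (4, w, 0, 0)
y_acc = [0, 0, 1, 0]
print(run_tm(delta, y_acc, [1, 1, 0], T=5, S=2))  # prints 1
```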

Our construction of adaptively simulation secure \(\textsf{UAWS}^{\textsf {L}}\) relies on two building blocks: \(\textsf{AKGS}\) for \(\text {Logspace Turing machines}\), an information-theoretic tool, and slotted \(\textsf{IPFE}\), a computationally secure tool. We only need a bounded slotted \(\textsf{IPFE}\), meaning that the vector length of the slotted \(\textsf{IPFE}\) is fixed at setup, and we only require the primitive to satisfy adaptive indistinguishability-based security. Hence, our work shows how to (semi-)generically bootstrap a bounded \(\textsf{IPFE}\) into an unbounded \(\textsf{FE}\) scheme for a functionality beyond inner products. Before describing \(\textsf{UAWS}^{\textsf {L}}\), we briefly discuss these two building blocks.

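AKGS for Logspace Turing machines In [62], the authors present an ABE scheme for \(\text {Logspace Turing machines}\) by constructing an efficient \(\textsf{AKGS}\) for sequences of matrix multiplications over \(\mathbb {Z}_p\). Their core idea is to represent a \(\text {Turing machine}\) computation as a sequence of matrix multiplications. An internal configuration \((i, j, \varvec{W}, q)\) is represented as a basis vector \(\varvec{e}_{(i, j, \varvec{W}, q)}\) of dimension \(NS2^{S}Q\) with a single 1 at position \((i, j, \varvec{W}, q)\). We define a transition matrix \({{\textbf {M}}}(\varvec{x})\) (also written \({{\textbf {M}}}_{N, S}(\varvec{x})\)) by

\({{\textbf {M}}}(\varvec{x})[(i, j, \varvec{W}, q),(i', j', \varvec{W}', q')] = \left\{ \begin{array}{ll} 1 & \text {if } \delta (q, \varvec{x}[i], \varvec{W}[j]) = (q', \varvec{W}'[j], i'-i, j'-j) \text { and } \varvec{W}'[j''] = \varvec{W}[j''] \text { for all } j'' \ne j,\\ 0 & \text {otherwise,} \end{array}\right. \)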

such that \(\varvec{e}_{(i, j, \varvec{W}, q)}^{\top }{{\textbf {M}}}(\varvec{x}) = \varvec{e}_{(i', j', \varvec{W}', q')}^{\top }\), where \((i', j', \varvec{W}', q')\) is the next configuration. This holds because the \(((i, j, \varvec{W}, q), (i', j', \varvec{W}', q'))\)-th entry of \({{\textbf {M}}}(\varvec{x})\) is 1 if and only if there is a valid transition from \((q, \varvec{x}[i], \varvec{W}[j])\) to \((q', \varvec{W}'[j], i'-i, j'-j)\). Therefore, the \(\text {Turing machine}\) computation can be expressed by right-multiplying the initial configuration \(\varvec{e}_{(1, 1, \varvec{0}_{S}, 1)}^{\top }\) by the matrix \({{\textbf {M}}}(\varvec{x})\) \(T\) times and then checking whether the result is an accepting configuration, i.e., taking the inner product with \({{\textbf {1}}}_{[N]\times [S]\times \{0, 1\}^{S}} \otimes \varvec{y}_{\textsf{acc}}\). In other words, the function \(M|_{N, T, S}(\varvec{x})\) is written as

\( M|_{N, T, S}(\varvec{x}) = \varvec{e}_{(1, 1, \varvec{0}_{S}, 1)}^{\top } \left( {{\textbf {M}}}_{N, S}(\varvec{x})\right) ^{T} \left( {{\textbf {1}}}_{[N]\times [S]\times \{0, 1\}^{S}} \otimes \varvec{y}_{\textsf{acc}}\right) . \qquad (1)\)

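Thus, [62] constructs an \(\textsf{AKGS}\) for the sequence of matrix multiplications in Eq. (1). Their \(\textsf{AKGS}\) is inspired by the randomized encoding scheme of [18] and the homomorphic evaluation procedure of [22]. Given the function \(M|_{N, T, S}\) over \(\mathbb {Z}_p\) and two secrets \(z, \beta \), the garbling procedure computes the label functions

\( \begin{array}{l} L_{\textsf{init}}(\varvec{x}) = \beta + \varvec{e}_{(1, 1, \varvec{0}_{S}, 1)}^{\top } \varvec{r}_0, \\ \varvec{L}_t(\varvec{x}) = -\varvec{r}_{t-1} + {{\textbf {M}}}_{N, S}(\varvec{x}) \varvec{r}_t \quad \text {for } t \in [T], \\ \varvec{L}_{T+1}(\varvec{x}) = -\varvec{r}_{T} + z \left( {{\textbf {1}}}_{[N]\times [S]\times \{0, 1\}^{S}} \otimes \varvec{y}_{\textsf{acc}}\right) \end{array}\)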

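and outputs the coefficients of these label functions \(\ell _{\textsf{init}}, \varvec{\ell }_t = (\varvec{\ell }_{t, \theta })_{\theta }\), where \(\theta = (i, j, \varvec{W}, q)\) and \(\varvec{r}_t \leftarrow \mathbb {Z}_p^{[N] \times [S] \times \{0, 1\}^{S} \times [Q]}\) for \(t \in \{0, \ldots , T\}\). To compute the functional value for an input \(\varvec{x}\), the evaluation procedure adds \(\ell _{\textsf{init}}\) to the telescoping sum \(\varvec{e}_{(1, 1, \varvec{0}_{S}, 1)}^{\top }\cdot \sum _{t= 1}^{T+1} ({{\textbf {M}}}_{N, S}(\varvec{x}))^{t-1} \varvec{\ell }_t\) and outputs \(zM|_{N, T, S}(\varvec{x}) + \beta \). More precisely, it uses the fact that

\( \begin{array}{l} \ell _{\textsf{init}} + \varvec{e}_{(1, 1, \varvec{0}_{S}, 1)}^{\top } \sum _{t=1}^{T+1} ({{\textbf {M}}}_{N, S}(\varvec{x}))^{t-1} \varvec{\ell }_t \\ \quad = \beta + \varvec{e}_{(1, 1, \varvec{0}_{S}, 1)}^{\top } \Big ( \varvec{r}_0 + \sum _{t=1}^{T} \big ( -({{\textbf {M}}}_{N, S}(\varvec{x}))^{t-1}\varvec{r}_{t-1} + ({{\textbf {M}}}_{N, S}(\varvec{x}))^{t}\varvec{r}_{t} \big ) + ({{\textbf {M}}}_{N, S}(\varvec{x}))^{T} \big ( -\varvec{r}_T + z ({{\textbf {1}}}_{[N]\times [S]\times \{0, 1\}^{S}} \otimes \varvec{y}_{\textsf{acc}}) \big ) \Big ) \\ \quad = \beta + z\, \varvec{e}_{(1, 1, \varvec{0}_{S}, 1)}^{\top } ({{\textbf {M}}}_{N, S}(\varvec{x}))^{T} ({{\textbf {1}}}_{[N]\times [S]\times \{0, 1\}^{S}} \otimes \varvec{y}_{\textsf{acc}}) = z M|_{N, T, S}(\varvec{x}) + \beta . \end{array}\)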

A crucial property of \(\textsf{AKGS}\) is the linearity of the evaluation procedure: the procedure is linear in the label values \(\ell \), so it can be performed even when the \(\ell \)s are available only in the exponent of a group. Lin and Luo identify two important security properties of \(\textsf{AKGS}\), jointly called piecewise security. First, \(\ell _{\textsf{init}}\) can be reversely sampled given a functional value and all other label values; this is known as reverse sampleability. Second, \(\ell _{t}\) is uniformly random given the subsequent label functions \(L_{t', \theta }\) for all \(t' > t\) and z; this is called marginal randomness.
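To make the garbling, the linear evaluation and reverse sampling concrete, here is a Python sketch for a generic function \(z\, \varvec{e}_s^{\top } {{\textbf {M}}}^{T} \varvec{y} + \beta \) over \(\mathbb {Z}_p\). A small 0/1 matrix `M` plays the role of \({{\textbf {M}}}_{N, S}(\varvec{x})\), and `y` plays the role of \({{\textbf {1}}} \otimes \varvec{y}_{\textsf{acc}}\). The labels are \(\ell _{\textsf{init}} = \beta + \varvec{r}_0[s]\), \(\varvec{\ell }_t = -\varvec{r}_{t-1} + {{\textbf {M}}} \varvec{r}_t\) for \(t \in [T]\), and \(\varvec{\ell }_{T+1} = -\varvec{r}_T + z \varvec{y}\), following our reading of [62]. The sketch makes simplifying assumptions. It computes label values for one fixed input instead of outputting coefficients of label functions. It works with integers modulo a prime rather than in a group. All function names are hypothetical.

```python
import random
from typing import List

P = 2**61 - 1  # a prime, standing in for the group order p

Matrix = List[List[int]]
Vector = List[int]


def mat_vec(M: Matrix, v: Vector) -> Vector:      # M v (column action)
    return [sum(a * b for a, b in zip(row, v)) % P for row in M]


def vec_mat(v: Vector, M: Matrix) -> Vector:      # v^T M (row action)
    d = len(M)
    return [sum(v[a] * M[a][b] for a in range(d)) % P for b in range(d)]


def unit(d: int, s: int) -> Vector:
    return [1 if k == s else 0 for k in range(d)]


def garble(M, y, T, z, beta, start, rng):
    """Label values l_init, l_1, ..., l_{T+1} for z * e_start^T M^T y + beta."""
    d = len(M)
    r = [[rng.randrange(P) for _ in range(d)] for _ in range(T + 1)]  # r_0..r_T
    l_init = (beta + r[0][start]) % P
    labels = []
    for t in range(1, T + 1):
        Mr = mat_vec(M, r[t])
        labels.append([(Mr[k] - r[t - 1][k]) % P for k in range(d)])
    labels.append([(z * y[k] - r[T][k]) % P for k in range(d)])
    return l_init, labels


def evaluate(M, start, l_init, labels):
    """l_init + sum_{t=1}^{T+1} e_start^T M^{t-1} l_t (linear in the labels)."""
    v = unit(len(M), start)
    acc = l_init
    for l_t in labels:
        acc = (acc + sum(a * b for a, b in zip(v, l_t))) % P
        v = vec_mat(v, M)
    return acc


def reverse_sample_init(M, start, labels, value):
    # Reverse sampleability: solve the linear evaluation equation for l_init.
    return (value - evaluate(M, start, 0, labels)) % P


def direct(M, y, T, z, beta, start):
    v = unit(len(M), start)
    for _ in range(T):
        v = vec_mat(v, M)
    return (z * sum(a * b for a, b in zip(v, y)) + beta) % P


# Deterministic 0/1 matrix (at most one 1 per row), like a TM transition matrix.
M = [[0, 1, 0], [0, 0, 1], [0, 0, 1]]
y = [0, 0, 1]
T, z, beta = 2, 5, 11
l_init, labels = garble(M, y, T, z, beta, 0, random.Random(1))
print(evaluate(M, 0, l_init, labels), direct(M, y, T, z, beta, 0))  # prints 16 16
```

Because `evaluate` only takes linear combinations of the labels with coefficients derived from `M`, the same computation could run on group encodings of the labels. This is the linearity property described above.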

Function hiding slotted IPFE A standard \(\textsf{IPFE}\) computes the inner product of two vectors \(\varvec{v}\) and \(\varvec{u}\) using a secret key \(\textsf{IPFE}.\textsf{SK}_{\varvec{v}}\) and a ciphertext \(\textsf{IPFE}.\textsf{CT}_{\varvec{u}}\). The \(\textsf{IPFE}\) satisfies indistinguishability-based security if an adversary holding many functional secret keys \(\{\textsf{IPFE}.\textsf{SK}_{\varvec{v}}\}\) cannot extract any information about the message vector \(\varvec{u}\) beyond the inner products \(\{{\varvec{v}} \cdot {\varvec{u}}\}\). If encryption is public, then no security can be ensured for \(\varvec{v}\) given \(\textsf{IPFE}.\textsf{SK}_{\varvec{v}}\) [36], because of the linear functionality: anyone can encrypt the unit vectors \(\varvec{e}_1, \varvec{e}_2, \ldots \) and decrypt them with \(\textsf{IPFE}.\textsf{SK}_{\varvec{v}}\) to learn every entry of \(\varvec{v}\). However, if the encryption algorithm is private, then \(\textsf{IPFE}.\textsf{SK}_{\varvec{v}}\) can be generated so that it hides sensitive information about \(\varvec{v}\). This is the function hiding security notion for private-key \(\textsf{IPFE}\). Slotted \(\textsf{IPFE}\) [64] is a hybrid of public-key and private-key \(\textsf{IPFE}\) in which vectors are divided into public and private slots, and function hiding is guaranteed only for the entries in the private slots. Further, the slotted \(\textsf{IPFE}\)s of [62, 64] can generate secret keys and ciphertexts even when the vectors are given in the exponent of the source groups, whereas decryption recovers the inner product in the target group.

2.1 From all-or-nothing to functional encryption

We are now ready to describe our approach for extending the framework of [62] from all-or-nothing to functional encryption for the uniform model of computation. In previous work, Datta and Pal [38] built an adaptively secure FE for the \(\textsf{AWS}\) functionality for a non-uniform model of computation, namely ABPs. Their idea was to garble a function \(f_k(\varvec{x})\varvec{z}[k] + \beta _k\) during key generation (keeping \(\varvec{z}[k]\) and \(\varvec{x}\) as variables) and to compute IPFE secret keys encoding the m labels. A ciphertext associated with a tuple \((\varvec{x}, \varvec{z})\) then consists of a collection of \(\textsf{IPFE}\) ciphertexts encoding the attributes:

Therefore, using the inner product functionality, decryption computes the actual label values with \(\varvec{x}, \varvec{z}[k]\) as inputs and recovers \(f_k(\varvec{x})\varvec{z}[k] + \beta _k\) for each k, and hence finally \(\sum _{k} f_k(\varvec{x})\varvec{z}[k]\). However, this approach fails to build \(\textsf{UAWS}^{\textsf {L}}\) because we cannot execute the \(\textsf{AKGS}\) garbling for the function \(M_k|_{N, T, S}(\varvec{x})\varvec{z}[k]+\beta _k\) at key generation time. More specifically, the garbling randomness depends on the parameters \(N, T, S, n\), which are unknown to the key generator. Note that, in contrast to the \(\textsf {ABE}\) of [62], where \(\varvec{z}\) can be viewed as a payload (hence \(n=1\)), the \(\textsf{UAWS}\) functionality has an additional parameter n (the length of \(\varvec{z}\)) whose value is chosen at the time of encryption. Moreover, compactness of \(\textsf{UAWS}^{\textsf {L}}\) requires the secret key size \(|\textsf{SK}_{(\varvec{M}, \mathcal {I}_{\varvec{M}})}| = O(|\mathcal {I}_{\varvec{M}}|Q)\) to be linear in the number of states Q, and the ciphertext size \(|\textsf{CT}_{(\varvec{x}, T, S)}| = O(n TN S2^{S})\) to be linear in \(TN S2^{S}\).

This obstacle is circumvented by the randomness distribution technique of [62]. Instead of computing the \(\textsf{AKGS}\) garblings in the key generation or encryption phase, the label values are produced jointly by the secret key and the ciphertext. To do so, the garbling is executed under the hood of \(\textsf{IPFE}\) using pseudorandomness instead of true randomness. That is, part of the garbling randomness is sampled during key generation, and the rest is sampled during encryption. More specifically, every truly random value \(\varvec{r}_t[(i, j, \varvec{W}, q)]\) is replaced by a product \(\varvec{r}_{\varvec{x}}[(t,i,j,\varvec{W})]\varvec{r}_{k, f}[q]\), where \(\varvec{r}_{\varvec{x}}[(t,i,j,\varvec{W})]\) is used in the ciphertext and \(\varvec{r}_{k, f}[q]\) is used to encode the transition blocks of \(M_k\) in the secret key. To enable this, the transition matrix associated with \(M_k\) is represented as follows:

\(\begin{array}{ l} {{\textbf {M}}}(\varvec{x})[(i, j, \varvec{W}, q),(i', j', \varvec{W}', q')] \\ = \delta ^{(?)}((i, j, \varvec{W}, q), (i', j', \varvec{W}', q'))\times ~{{\textbf {M}}}_{\varvec{x}[i], \varvec{W}[j], \varvec{W}'[j], i'-i, j'-j}[q, q'] \end{array}\)

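where \(\delta ^{(?)}((i, j, \varvec{W}, q), (i', j', \varvec{W}', q'))\) is 1 if there is a valid transition from the configuration \((i, j, \varvec{W}, q)\) to \((i', j', \varvec{W}', q')\), and 0 otherwise. Therefore, every \(Q \times Q\) block \({{\textbf {M}}}(\varvec{x})[(i, j, \varvec{W}, \textvisiblespace ),(i', j', \varvec{W}', \textvisiblespace )]\) is either the zero matrix or a transition block belonging to the small set

\( \{ {{\textbf {M}}}_{\tau } \}_{\tau \in \mathcal {T}}, \quad \mathcal {T} = \{0, 1\} \times \{0, 1\} \times \{0, 1\} \times \{0, \pm 1\} \times \{0, \pm 1\}, \)

where, for \(\tau = (x, w, w', \mathrm {\varDelta } i, \mathrm {\varDelta } j)\), the entry \({{\textbf {M}}}_{\tau }[q, q']\) is 1 if \(\delta (q, x, w) = (q', w', \mathrm {\varDelta } i, \mathrm {\varDelta } j)\) and 0 otherwise.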
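The block decomposition can be checked numerically on a small machine. This Python sketch builds every entry of the transition matrix in two ways: directly from \(\delta \), and as a position check multiplied by an entry of the transition block \({{\textbf {M}}}_{\tau }\). It then confirms that the two constructions agree. We assume the position check covers the configuration-level conditions: \(\varvec{W}'\) agrees with \(\varvec{W}\) outside position j, and both pointers move by at most one. The block \({{\textbf {M}}}_{\tau }\) itself enforces the remaining conditions. The example machine and all names are hypothetical.

```python
from itertools import product

# Hypothetical 4-state machine (states 1..4): accept iff x[1] = x[2] = 1.
delta = {}
for b in (0, 1):
    for w in (0, 1):
        delta[(1, b, w)] = (2, b, 1, 0)
        delta[(2, b, w)] = (3 if b == 1 and w == 1 else 4, w, 0, 0)
        delta[(3, b, w)] = (3, w, 0, 0)
        delta[(4, b, w)] = (4, w, 0, 0)
Q = 4
TAUS = list(product((0, 1), (0, 1), (0, 1), (-1, 0, 1), (-1, 0, 1)))  # the set T


def transition_block(tau):
    # M_tau[q, q'] = 1 iff delta(q, x, w) = (q', w', di, dj), tau = (x, w, w', di, dj).
    xb, w, w_new, di, dj = tau
    return [[int(delta.get((q, xb, w)) == (q2, w_new, di, dj))
             for q2 in range(1, Q + 1)] for q in range(1, Q + 1)]


BLOCKS = {tau: transition_block(tau) for tau in TAUS}


def configs(N, S):
    return [(i, j, W, q) for i in range(1, N + 1) for j in range(1, S + 1)
            for W in product((0, 1), repeat=S) for q in range(1, Q + 1)]


def entry_direct(x, c, c2):
    # 1 iff delta moves configuration c to configuration c2 on input x.
    (i, j, W, q), (i2, j2, W2, q2) = c, c2
    step = delta.get((q, x[i - 1], W[j - 1]))
    if step is None:
        return 0
    q_next, w_new, di, dj = step
    return int((q2, i2, j2, W2) == (q_next, i + di, j + dj, W[:j - 1] + (w_new,) + W[j:]))


def entry_from_blocks(x, c, c2):
    # Position check times the (q, q2) entry of the transition block M_tau.
    (i, j, W, q), (i2, j2, W2, q2) = c, c2
    di, dj = i2 - i, j2 - j
    unchanged_elsewhere = all(W[k] == W2[k] for k in range(len(W)) if k != j - 1)
    if not unchanged_elsewhere or abs(di) > 1 or abs(dj) > 1:
        return 0
    tau = (x[i - 1], W[j - 1], W2[j - 1], di, dj)
    return BLOCKS[tau][q - 1][q2 - 1]


x, N, S = [1, 1], 2, 2
C = configs(N, S)
print(len(TAUS), len(C))  # prints 72 64
print(all(entry_direct(x, c, c2) == entry_from_blocks(x, c, c2) for c in C for c2 in C))  # prints True
```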

The transition block \({{\textbf {M}}}_{\tau } = {{\textbf {M}}}_{x, w, w', \mathrm {\varDelta } i, \mathrm {\varDelta } j}\) appears as the block \({{\textbf {M}}}_{N, S}(\varvec{x})[(i, j, \varvec{W}, \textvisiblespace ), (i', j', \varvec{W}', \textvisiblespace )]\) if \(x = \varvec{x}[i], w = \varvec{W}[j], \mathrm {\varDelta } i= i' - i, \mathrm {\varDelta } j= j' - j\), and \(\varvec{W}'\) is \(\varvec{W}\) with its j-th entry changed to \(w'\). Thus, every label \(\varvec{\ell }_{k, t}[\mathfrak {i}, q]\) with \(\mathfrak {i} = (i, j, \varvec{W})\) can be decomposed as an inner product \({\varvec{v}_{k, q}} \cdot {\varvec{u}_{k, t, i, j, \varvec{W}}}\). More precisely,

so that one can set the vectors

where \(c_{\tau }(\varvec{x}; \varvec{r}_{\varvec{x}})\) (shorthand for \(c_\tau (\varvec{x},t,i,j,\varvec{W};\varvec{r}_{\varvec{x}})\) [62]) is given by

Similarly, the other labels can be decomposed: \(\ell _{k, \textsf{init}} = (\varvec{r}_{k, f}[1], \beta _k, 0)\cdot (\varvec{r}_{\varvec{x}}[(0, 1, 1, \varvec{0}_{S})], 1, 0) = \beta _k + \varvec{e}_{(1, 1, \varvec{0}_{S}, 1)}^{\top }\varvec{r}_0\) and \(\varvec{\ell }_{k, T+1}[(\mathfrak {i}, q)] = {\widetilde{\varvec{v}}_{k, q}} \cdot {\widetilde{\varvec{u}}_{k, T+1, i, j, \varvec{W}}} = -\varvec{r}_{T}[(\mathfrak {i}, q)] + \varvec{z}[k] \varvec{y}_{k, \textsf{acc}}[q]\) where

A first attempt Armed with this, we now present the first candidate \(\textsf{UAWS}^{\textsf {L}}\) construction in the secret-key setting, supporting a single key. We consider two independent master keys \(\textsf {imsk}\) and \(\widetilde{\textsf {imsk}}\) of \(\textsf{IPFE}\). For simplicity, we assume that the length of the private attribute \(\varvec{z}\) equals the number of \(\text {Turing machines}\) in \(\varvec{M} = (M_k)_{k \in \mathcal {I}_{\varvec{M}}}\), i.e., \(n = |\mathcal {I}_{\varvec{M}}|\). We also assume that all Turing machines in the secret key share the same set of states.

Observe that the inner products between the ciphertext and secret key vectors yield the label values \([\![\ell _{k, \textsf{init}}]\!]_{T }, [\![\varvec{\ell }_{k, t}]\!]_{T } = [\![(\varvec{\ell }_{k, t, \theta })_{\theta }]\!]_{T }\) for \(\theta = (i, j, \varvec{W}, q)\). Next, the evaluation procedure of \(\textsf{AKGS}\) is applied to obtain the partial values \([\![\varvec{z}[k]M_k|_{N, T, S}(\varvec{x}) + \beta _k]\!]_{T }\). Combining all these values gives the required attribute-weighted sum \(\sum _{k}M_k|_{N, T, S}(\varvec{x})\varvec{z}[k]\), since \(\sum _k \beta _k = 0\).

However, this scheme is not fully unbounded; in particular, the setup needs to know the length of the private attribute. To see why, let us try to prove the security of the scheme. The main idea of the proof is to make all the label values \((\varvec{\ell }_{k, t, \theta })_{\theta }\) truly random and simulated, except the initial labels \(\ell _{k, \textsf{init}}\), so that one can reversely sample \(\ell _{k, \textsf{init}}\) hardcoded with a desired functional value. Suppose, for instance, that the single secret key is queried before the challenge ciphertext. In this case, the honest label values are first hardwired in the ciphertext vectors and then the labels are transformed into their simulated version; this is possible because the ciphertext vectors are computed after the secret key. So, the first step is to hardwire the initial label values \(\ell _{k, \textsf{init}}\) into the ciphertext vector \(\varvec{u}_{\textsf{init}}\), which implies that the length of \(\varvec{u}_{\textsf{init}}\) must grow with the number of \(\ell _{k, \textsf{init}}\)’s. The same situation arises while simulating the other label values through \(\varvec{u}_{t, \mathfrak {i}}\). In other words, we would need to know the size of \(\mathcal {I}_{\varvec{M}}\), or the length of \(\varvec{z}\), at setup, which contradicts our desired functionality.

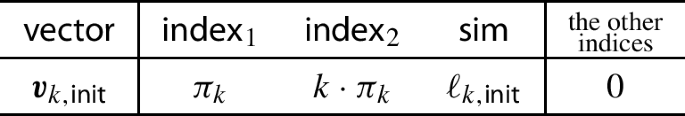

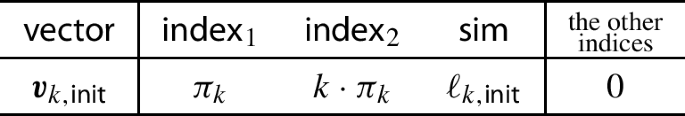

To tackle this, we increase the number of \(\varvec{u}_{\textsf{init}}\) and \(\varvec{u}_{t<T, \mathfrak {i}}\) in the above system. More specifically, each of these vectors is now computed for all \(k \in [n]\), just like \(\widetilde{\varvec{u}}_{k, T+1, \mathfrak {i}}\). Although this meets the unboundedness requirement, there is another security issue that must be resolved. In the current structure, mix-and-match attacks are possible: for example, \(\widetilde{\varvec{u}}_{k_1, T+1, \mathfrak {i}}\) can be paired with \(\widetilde{\varvec{v}}_{k_2, q}\), which results in an unwanted attribute-weighted sum of the form \(\sum _{k \ne k_1, k_2}M_k(\varvec{x})\varvec{z}[k] + M_{k_1}(\varvec{x})\varvec{z}[k_2] + M_{k_2}(\varvec{x})\varvec{z}[k_1]\). To prevent this attack, we employ the index encoding technique used in previous works achieving unbounded ABE or \(\textsf{IPFE}\) [68, 71]. In particular, we add two extra dimensions, \(\rho _k(-k, 1)\) in the ciphertext and \(\pi _k(1, k)\) in the secret key, to encode the index k in each of the vectors of the system. Indeed, \(\rho _{k'}(-k', 1) \cdot \pi _k(1, k) = \pi _k\rho _{k'}(k - k')\), which vanishes exactly when \(k = k'\). Observe that independent randomness \(\pi _k\) is sampled for each \(\text {Turing machine}\) \(M_k\). This ensures that an adversary can only recover the desired attribute-weighted sum: whenever vectors from different indices are paired, only a garbage value is obtained.
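The cancellation behind the index encoding can be checked numerically. The sketch below is a minimal illustration assuming plain integers modulo the Mersenne prime \(2^{61}-1\) as a stand-in for \(p\); in the scheme these values live in the exponent of group elements and are protected by \(\textsf{IPFE}\). The function names are hypothetical.

```python
import secrets

P = 2**61 - 1  # Mersenne prime, an illustrative stand-in for the group order p

def key_index_part(k: int, pi_k: int) -> tuple:
    """Index dimensions pi_k * (1, k) appended to the key-side vectors of machine M_k."""
    return (pi_k % P, k * pi_k % P)

def ct_index_part(k: int, rho_k: int) -> tuple:
    """Index dimensions rho_k * (-k, 1) appended to the ciphertext-side vectors for index k."""
    return (-k * rho_k % P, rho_k % P)

def inner(a: tuple, b: tuple) -> int:
    return sum(x * y for x, y in zip(a, b)) % P

pi, rho = secrets.randbelow(P - 1) + 1, secrets.randbelow(P - 1) + 1
matched = inner(key_index_part(3, pi), ct_index_part(3, rho))     # k = k' = 3: cancels
mismatched = inner(key_index_part(3, pi), ct_index_part(5, rho))  # k = 3, k' = 5
```

Here `matched` is always 0, whereas `mismatched` equals \(\pi _3\rho _5(3 - 5) \bmod p\), which is non-zero for non-zero \(\pi _3, \rho _5\) and therefore adds an unpredictable term to the inner product.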

Combining the ideas After combining the above ideas, we describe our \(\textsf{UAWS}^{\textsf {L}}\) supporting a single key as follows.

Although the above construction satisfies our desired functionality, preserves the compactness of ciphertexts and resists the aforementioned attack, we face multiple challenges in adapting the proof ideas of previous works [38, 62, 71].

Security challenges and solutions Next, we discuss the challenges in proving the adaptive simulation security of the scheme. Firstly, the unbounded \(\textsf{IPFE}\) scheme of Tomida and Takashima [71] is proved secure in the indistinguishability-based model, whereas we aim to prove simulation security, which is much more challenging. The work closest to ours is the FE for \(\textsf{AWS}\) of Datta and Pal [38], but it only supports a non-uniform model of computation and the inner product functionality is bounded. Moreover, since the garbling randomness is distributed between the secret key and ciphertext vectors, we cannot adapt their proof techniques [38, 71] in a straightforward manner. Although the ABE scheme of Lin and Luo [62] handles a uniform model of computation, it only considers all-or-nothing encryption, and hence the adversary is only allowed to query secret keys that fail to decrypt the challenge ciphertext. In contrast, we construct a more advanced encryption mechanism that overcomes all the above constraints of prior works, i.e., our \(\textsf{UAWS}^{\textsf {L}}\) is an adaptively simulation-secure functional encryption scheme that supports unbounded inner product functionality with a uniform model of computation over the public attributes.

Our proof technique is inspired by that of [38, 62]. One of the core technical challenges arises in the case where the secret key is queried before the challenge ciphertext. Thus, we focus on “\(\textsf {sk}\) queried before \(\textsf {ct}\)” in this overview. As noted above, in the security analysis of [62] the adversary \(\mathcal {A}\) is not allowed to decrypt the challenge ciphertext, and hence the ciphertext can be completely randomized in the final game. However, since we are building an FE scheme, any secret key queried by \(\mathcal {A}\) should be able to decrypt the challenge ciphertext. For this, we use the pre-image sampleability technique from prior works [37, 38]. In particular, the reduction samples a dummy vector \(\varvec{d} \in \mathbb {Z}_p^n\) satisfying \(\sum _{k}M_k|_{N, T, S}(\varvec{x})\varvec{z}[k] = \sum _{k}M_k|_{N, T, S}(\varvec{x})\varvec{d}[k]\), where \(\varvec{M} = (M_k)_k\) is a pre-challenge secret key. To plant the dummy vector into the ciphertext, we first need to make all label values \(\{\ell _{k, t, \mathfrak {i}, q}\}\) truly random by randomizing the terms \(\varvec{r}_{k, f}[q]\varvec{r}_{\varvec{x}}[t-1, \mathfrak {i}]\), then turn them into their simulated forms, and finally traverse the reverse path to get back the original form of the ciphertext with \(\varvec{d}\) in place of the private attribute \(\varvec{z}\). In order to make all these labels truly random, the honest label values need to be hardwired into the ciphertext vectors (since these are computed later), so that we can apply the DDH assumption in \(\mathbb {G}_1\) to randomize the terms \(\varvec{r}_{k, f}[q]\varvec{r}_{\varvec{x}}[t-1, \mathfrak {i}]\) (and hence the label values).
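A minimal Python sketch of the pre-image sampling step, assuming the machine outputs \(M_k|_{N, T, S}(\varvec{x})\) are already available as a list `m` and using a stand-in prime modulus; `sample_dummy` is a hypothetical name. It samples all coordinates of \(\varvec{d}\) at random and then solves the linear constraint for one coordinate with a non-zero coefficient, which yields a uniform element of the affine solution space. The modular inverse `pow(a, -1, P)` requires Python 3.8 or later.

```python
import secrets

P = 2**61 - 1  # illustrative prime standing in for the group order p

def sample_dummy(m: list, z: list) -> list:
    """Sample d uniformly from {d : sum_k m[k] d[k] = sum_k m[k] z[k] (mod P)}.

    m[k] plays the role of M_k|_{N,T,S}(x), the k-th machine's output on x.
    """
    target = sum(a * b for a, b in zip(m, z)) % P
    d = [secrets.randbelow(P) for _ in z]
    support = [k for k, a in enumerate(m) if a % P != 0]
    if not support:
        return d                      # target is 0, so every d is a valid pre-image
    k0 = support[0]
    rest = sum(m[k] * d[k] for k in range(len(d)) if k != k0) % P
    d[k0] = (target - rest) * pow(m[k0], -1, P) % P   # solve for one coordinate
    return d
```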
However, this step is much more complicated than in [62], since there are two independent \(\textsf{IPFE}\) systems in our construction and \(\varvec{r}_{k, f}[q]\) appears in both \(\varvec{v}_{k, q}\) and \(\widetilde{\varvec{v}}_{k, q}\) (i.e., in both \(\textsf{IPFE}\) systems). We design a two-level nested loop running over q and t for relocating \(\varvec{r}_{k, f}[q]\) from the \(\varvec{v}\)’s and \(\widetilde{\varvec{v}}_{k, q}\)’s to the \(\varvec{u}\)’s and \(\widetilde{\varvec{u}}_{k, T+1, \mathfrak {i}}\)’s. We note that the case of “\(\textsf {sk}\) queried after \(\textsf {ct}\)” is simpler, since there we embed the reversely sampled initial label values into the secret key. Before discussing the hybrids, we first present the simulator of the ideal world.

Security analysis We use a three-step approach in which each step consists of a sequence of hybrids. At a very high level, we discuss the case of “\(\textsf {sk}\) queried before \(\textsf {ct}\)”. For simplicity, we assume in this overview that the challenger knows the length of \(\varvec{z}\) when it generates the secret key.

First group of hybrids The reduction starts with the real scheme. In the first step, the label function \(\ell _{k, \textsf{init}}\) is reversely sampled with the value \(M_k[\varvec{x}]\varvec{z}[k] + \beta _k\) which is hardwired in \(\varvec{u}_{k, \textsf{init}}\).

\( \begin{aligned}&\begin{array}{r l c c c c c c c c} \varvec{v}_{k, \textsf{init}} &{} = (&{} \cdots , &{} \boxed {1},&{} \boxed {0},&{} 0 &{}~ \Vert ~&{} 0, &{} \varvec{0}&{}),\\ \varvec{v}_{k, q} &{} = (&{} \cdots , &{} -\varvec{r}_{k, f}[q],&{} 0,&{} ({{\textbf {M}}}_{k, \tau }\varvec{r}_{k, f})[q] &{}~ \Vert ~&{} \boxed {\varvec{s}_{k, f}[q]}, &{} \varvec{0}&{}),\\ \widetilde{\varvec{v}}_{k, q} &{} = (&{} \cdots , &{} -\varvec{r}_{k, f}[q],&{} \varvec{y}_{k, \textsf{acc}}[q]&{} &{}~ \Vert ~&{} 0, &{}\varvec{0}&{}) \end{array}\\&\begin{array}{r l c c c c c c c c} \varvec{u}_{k, \textsf{init}} &{} = (&{} \cdots , &{} \boxed {\ell _{k, \textsf{init}}},&{} \boxed {0},&{} 0, &{}~ \Vert ~&{} 0, &{} \varvec{0}&{}),\\ \varvec{u}_{k, t<T, \mathfrak {i}} &{} = (&{}\cdots , &{} \varvec{r}_{\varvec{x}}[t-1, \mathfrak {i}],&{} 0,&{} c_{\tau }(\varvec{x}; \varvec{r}_{\varvec{x}})&{} ~ \Vert ~&{} 0, &{} \varvec{0}&{}),\\ \widetilde{\varvec{u}}_{k, T+1, \mathfrak {i}} &{} = (&{} \cdots , &{} \varvec{r}_{\varvec{x}}[T, \mathfrak {i}],&{} \varvec{z}[k]&{} &{}~ \Vert ~&{} \boxed {\varvec{s}_{\varvec{x}}[T+1, \mathfrak {i}]}, &{}\varvec{0}&{}) \end{array} \end{aligned}\)

where \(\ell _{k, \textsf{init}} \leftarrow \textsf {RevSamp}(M_k, \varvec{x}, M_k[\varvec{x}]\varvec{z}[k] + \beta _k, \{\ell _{k, t, \mathfrak {i},q}\})\) and the \(\ell _{k, t, \mathfrak {i},q}\)’s are computed honestly. Note that the secret values \(\{\beta _k\}\) are sampled depending on whether the queried key is eligible for decryption. More specifically, if \(\mathcal {I}_{\varvec{M}}\subseteq [n]\), then the \(\beta _k\)’s are sampled as in the original key generation algorithm, i.e., \(\sum _k \beta _k = 0\). On the other hand, if \(\max \mathcal {I}_{\varvec{M}}> n\), then the \(\beta _k\)’s are sampled uniformly at random, i.e., they need not be secret shares of zero. This change is justified by the function hiding property of \(\textsf{IPFE}\), which ensures that the distributions \(\{ \{\textsf{IPFE}.\textsf{SK}_{\varvec{v}_k^{(\mathfrak {b})}}\}_{k \in [n+1, |\mathcal {I}_{\varvec{M}}|]} , \{\textsf{IPFE}.\textsf{CT}_{\varvec{u}_{k'}}\}_{ k' \in [n]} \}\) for \( \mathfrak {b} \in \{0, 1\}\) are indistinguishable, where

\(\begin{array}{r l c c c c c c l} \varvec{v}_k^{(\mathfrak {b} )} &{}= (&{} \pi _k,&{} k\cdot \pi _k,&{} \varvec{0},&{} \beta _k + \mathfrak {b}\cdot r_k,&{} \varvec{0}&{}) &{}\text {~ for }k \in [n+1, |\mathcal {I}_{\varvec{M}}|], r_k \leftarrow \mathbb {Z}_p \\ \varvec{u}_{k'} &{} = (&{} -k' \cdot \rho _{k'},&{} \rho _{k'},&{} \varvec{0},&{} 1,&{} \varvec{0}&{})&{}\text {~ for }k' \in [n]\\ \end{array}\)

Thus, the indistinguishability between the hybrids in this group is guaranteed by the piecewise security of \(\textsf{AKGS}\) and the function hiding security of \(\textsf{IPFE}\).

Second group of hybrids The second step is a loop whose purpose is to change all the honest label values \(\ell _{k, t, \mathfrak {i}, q}\) into simulated ones of the form \(\ell _{k, t, \mathfrak {i}, q} = \varvec{s}_{\varvec{x}}[t, \mathfrak {i}]\varvec{s}_{k, f}[q]\), where \(\varvec{s}_{\varvec{x}}[t, \mathfrak {i}]\) is hardwired in \(\varvec{u}_{k, t, \mathfrak {i}}\) or \(\widetilde{\varvec{u}}_{k, T+1, \mathfrak {i}}\) and \(\varvec{s}_{k, f}[q]\) is hardwired in \(\varvec{v}_{k,q}\) or \(\widetilde{\varvec{v}}_{k, q}\).

The whole procedure is executed via a two-level loop, with the outer loop running over t and the inner loop running over q (both in increasing order). In each iteration of the loop, we move all occurrences of \(\varvec{r}_{k, f}[q]\) into the \(\varvec{u}\)’s in one shot and hardwire the honest labels \(\ell _{k, t, \mathfrak {i}, q}\) into \(\varvec{u}_{k, t, \mathfrak {i}}\) for all \(\mathfrak {i}\). Below we present two crucial intermediate hybrids of the loop when \(t \le T\).

where the marked entries indicate the presence of \(\varvec{r}_{k, f}[q]\) in their respective positions. The indistinguishability can be argued using the function hiding security of \(\textsf{IPFE}\). Next, by invoking DDH in \(\mathbb {G}_1\), we first make \(\varvec{r}_{\varvec{x}}[t-1, \mathfrak {i}]\varvec{r}_{k, f}[q]\) truly random for all \(\mathfrak {i}\), and then transform the label values into their simulated form \(\ell _{k, t, \mathfrak {i}, q} = \varvec{s}_{\varvec{x}}[t, \mathfrak {i}]\varvec{s}_{k, f}[q]\), again using DDH in \(\mathbb {G}_1\) for all \(\mathfrak {i}\). We emphasize that the labels \(\ell _{k,T+1, \mathfrak {i}, q }\) are kept honest and hardwired while the loop runs for \(t \le T\). Finally, the terms \(\varvec{s}_{k, f}[q]\) are shifted back to \(\varvec{v}_{k, q}\) or \(\widetilde{\varvec{v}}_{k, q}\).


After the two-level loop finishes, the reduction runs an additional loop over q with t fixed at \(T+1\) to make the last label values \(\ell _{k, T+1, \mathfrak {i}, q}\) simulated. The indistinguishability between these hybrids follows from an argument similar to that for the two-level loop.
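The order in which the label groups are switched to their simulated form can be summarized by a small schedule. The following sketch, with the hypothetical helper `hybrid_schedule`, assumes that time steps range over \([T]\) in the two-level loop and that the additional loop fixes \(t = T+1\); each pair \((t, q)\) stands for all labels \(\ell _{k, t, \mathfrak {i}, q}\) over \(k\) and \(\mathfrak {i}\).

```python
def hybrid_schedule(T: int, Q: int) -> list:
    """(t, q) label groups in the order in which they become simulated."""
    # Two-level loop: outer over t in [T], inner over q in [Q], both increasing
    order = [(t, q) for t in range(1, T + 1) for q in range(1, Q + 1)]
    # Additional loop over q with t fixed at T + 1
    order += [(T + 1, q) for q in range(1, Q + 1)]
    return order
```

For \(T = 3\) and \(Q = 2\), the schedule has \((T+1)Q = 8\) entries in lexicographic order, starting at \((1, 1)\) and ending at \((4, 2)\).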

Third group of hybrids After all the label values \(\ell _{k, t, \mathfrak {i}, q}\) are simulated, the third step uses a few more hybrids to reversely sample \(\ell _{1, \textsf{init}}\) and \(\ell _{k, \textsf{init}}|_{k>1}\) with the hardcoded values \(\varvec{M}(\varvec{x})^{\top } \varvec{z} + \beta _1\) and \(\beta _k|_{k>1}\) respectively. This can be achieved through a statistical transformation on \(\{\beta _k|~\sum _{k}\beta _k = 0\}\). Finally, we are all set to insert the dummy vector \(\varvec{d}\) in place of \(\varvec{z}\) keeping \(\mathcal {A}\)’s view identical.
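The statistical transformation on \(\{\beta _k\}\) can be made explicit. The sketch below is one way to realize it, assuming index 0 in the Python lists corresponds to \(k = 1\), a stand-in prime modulus, and hypothetical names `zero_sum_shares` and `shift_shares`. The map \(\varvec{\beta } \mapsto \varvec{\beta }'\) is a translation by a fixed zero-sum vector, so it is a bijection on zero-sum vectors and a uniform \(\varvec{\beta }\) yields a uniform \(\varvec{\beta }'\); the values \(M_k(\varvec{x})\varvec{z}[k] + \beta _k\) are exactly \(\varvec{M}(\varvec{x})^{\top } \varvec{z} + \beta '_1\) for \(k = 1\) and \(\beta '_k\) for \(k > 1\).

```python
import secrets

P = 2**61 - 1  # illustrative prime standing in for p

def zero_sum_shares(n: int) -> list:
    """Uniform (beta_1, ..., beta_n) in Z_P^n subject to sum_k beta_k = 0."""
    b = [secrets.randbelow(P) for _ in range(n - 1)]
    return b + [(-sum(b)) % P]

def shift_shares(beta: list, m: list, z: list) -> list:
    """Return beta' (summing to 0) with
    m[0] z[0] + beta[0] = M(x)^T z + beta'[0]  and  m[k] z[k] + beta[k] = beta'[k] for k > 0.
    The shift vector (m[0] z[0] - total, m[1] z[1], ...) sums to 0."""
    total = sum(a * b for a, b in zip(m, z)) % P
    out = [(mk * zk + bk) % P for mk, zk, bk in zip(m, z, beta)]
    out[0] = (out[0] - total) % P
    return out
```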

where all the label values \(\{\ell _{k, t, \mathfrak {i}, q}\}\) are simulated and the initial label values are computed as follows

\(\begin{aligned} \ell _{1, \textsf{init}}&\leftarrow \textsf {RevSamp}(M_1, \varvec{x}, \varvec{M}(\varvec{x})^{\top } \varvec{d} + \beta _1, \{\ell _{k, t, \mathfrak {i}, q}\}),\\ \ell _{k, \textsf{init}}&\leftarrow \textsf {RevSamp}(M_k, \varvec{x}, \beta _k, \{\ell _{k, t, \mathfrak {i}, q}\}), ~\text { for all }k>1\\ \end{aligned}\)

From this hybrid, we can traverse in the reverse direction all the way back to the very first hybrid while keeping the private attribute as \(\varvec{d}\). We also rearrange the elements using the security of \(\textsf{IPFE}\) so that the distribution of the ciphertext does not depend on whether the secret key is queried before or after the ciphertext. This is important for the public-key \(\textsf{UAWS}^{\textsf {L}}\). The formal security statement is given in Theorem 3.

From single key to full-fledged \(\textsf{UAWS}^{\textsf {L}}\) The next and final goal is to bootstrap the single-key, single-ciphertext secure \(\textsf{UAWS}^{\textsf {L}}\) to a public-key \(\textsf{UAWS}^{\textsf {L}}\) scheme that supports releasing many secret keys and ciphertexts. Observe that our secret-key \(\textsf{UAWS}^{\textsf {L}}\) already supports multiple keys and a single ciphertext. However, it fails to remain secure if two ciphertexts are published, because the piecewise security of \(\textsf{AKGS}\) cannot be guaranteed if the label functions are reused. Our bootstrapping procedure takes inspiration from prior works [38, 62], namely, to sample a random multiplier \(s \leftarrow \mathbb {Z}_p\) at the time of encryption, which randomizes the label values in the exponent of \(\mathbb {G}_2\). In particular, using \(\textsf{IPFE}\) security, the random multiplier s is moved to the secret key vectors, where the DDH assumption ensures that the \(s\varvec{\ell }_{k, t, \mathfrak {i}, q}\)’s are pseudorandom in the exponent of \(\mathbb {G}_2\). To upgrade the scheme to the public-key setting, we employ slotted \(\textsf{IPFE}\), which enables encrypting into the public slots using the public key while the function hiding security still holds in the private slots. We describe below our public-key \(\textsf{UAWS}^{\textsf {L}}\) scheme.

The slots to the left/right of “\(~ \Vert ~\)” are public/private. The ciphertexts are computed using only the public slots, and the private slots are utilized only in the security analysis. At a very high level, we utilize the triple-slot encryption technique devised in [38] to simulate the pre-challenge secret keys with a dummy vector encoded into the ciphertext and to hardwire the functional value into the post-challenge secret keys. As mentioned earlier, the triple-slot encryption technique [38] was devised for the non-uniform model and crucially uses the fact that the garbling randomness can be (fully) sampled in the key generation process. This does not hold in our setting. Thus, we design a more advanced three-slot encryption technique that is compatible with the distributed randomness of the \(\textsf{AKGS}\) garbling procedure. More specifically, we add one additional hidden subspace in order to realize such a sophisticated mechanism for \(\text {Logspace Turing machines}\). This additional subspace enables us to simulate the post-ciphertext secret keys with distributed randomness. However, subtle technical challenges remain due to the structure of \(\textsf{AKGS}\) for \(\text {Logspace Turing machines}\). We prove the security of the scheme in Theorem 4.

3 Preliminaries

In this section, we provide the necessary definitions and background that will be used in the sequel.

Notations We denote by \(\lambda \) the security parameter that belongs to the set of natural numbers \(\mathbb {N}\), and \(1^\lambda \) denotes its unary representation. We use the notation \(s \leftarrow S\) to indicate that s is sampled uniformly at random from the finite set S. For a distribution \(\mathcal {X}\), we write \(x \leftarrow \mathcal {X}\) to denote that x is sampled at random according to the distribution \(\mathcal {X}\). A function \(\textsf{negl}: \mathbb {N} \rightarrow \mathbb {R}\) is said to be a negligible function of \(\lambda \) if for every \(c \in \mathbb {N}\) there exists a \(\lambda _c \in \mathbb {N}\) such that for all \(\lambda > \lambda _c\), \(|\textsf{negl}(\lambda )|< \lambda ^{-c}\).

Let Expt be an interactive security experiment played between a challenger and an adversary, which always outputs a single bit. We assume that \(\textsf {Expt}_{\mathcal {A}}^{\textsf {C}}\) is a function of \(\lambda \) and is parametrized by an adversary \(\mathcal {A}\) and a cryptographic protocol \(\textsf {C}\). Let \(\textsf {Expt}_{\mathcal {A}}^{\textsf {C}, 0}\) and \(\textsf {Expt}_{\mathcal {A}}^{\textsf {C}, 1}\) be two such experiments. The experiments are computationally/statistically indistinguishable if, for any PPT/computationally unbounded adversary \(\mathcal {A}\), there exists a negligible function \(\textsf{negl}\) such that for all \(\lambda \in \mathbb {N}\),

\( \textsf {Adv}_{\mathcal {A}}^{\textsf {C}}(\lambda ) = \big |\textrm{Pr}\big [1 \leftarrow \textsf {Expt}_{\mathcal {A}}^{\textsf {C}, 0}(1^\lambda )\big ] - \textrm{Pr}\big [1 \leftarrow \textsf {Expt}_{\mathcal {A}}^{\textsf {C}, 1}(1^\lambda )\big ]\big | < \textsf{negl}(\lambda )\)

We write \(\textsf {Expt}_{\mathcal {A}}^{\textsf {C}, 0} {\mathop {\approx }\limits ^{c}} \textsf {Expt}_{\mathcal {A}}^{\textsf {C}, 1}\) if they are computationally indistinguishable (or simply indistinguishable). Similarly, \(\textsf {Expt}_{\mathcal {A}}^{\textsf {C}, 0} {\mathop {\approx }\limits ^{s}} \textsf {Expt}_{\mathcal {A}}^{\textsf {C}, 1}\) means statistically indistinguishable and \(\textsf {Expt}_{\mathcal {A}}^{\textsf {C}, 0} \equiv \textsf {Expt}_{\mathcal {A}}^{\textsf {C}, 1}\) means they are identically distributed.

Sets and indexing For \(n \in \mathbb {N}\), we denote by [n] the set \(\{1, 2, \dots , n\}\), and for \(n, m \in \mathbb {N}\) with \(n < m\), we denote by [n, m] the set \(\{n, n+1, \dots , m\}\). We use lowercase boldface, e.g., \(\varvec{v}\), to denote column vectors in \(\mathbb {Z}_p^n\) and uppercase boldface, e.g., \({{\textbf {M}}}\), to denote matrices in \(\mathbb {Z}_p^{n \times m}\) for \(p,n,m \in \mathbb {N}\). The i-th component of a vector \(\varvec{v} \in \mathbb {Z}_p^n\) is written as \(\varvec{v}[i]\) and the (i, j)-th element of a matrix \({{\textbf {M}}} \in \mathbb {Z}_p^{n \times m}\) is denoted by \({{\textbf {M}}}[i, j]\). The transpose of a matrix \({{\textbf {M}}}\) is denoted by \({{\textbf {M}}}^{\top }\) such that \({{\textbf {M}}}^{\top }[i, j] = {{\textbf {M}}}[j, i]\). We denote the all-zero vector of length n by \(\varvec{0}_n\), or simply \(\varvec{0}\) when the length is clear from the context. For \(\varvec{u}, \varvec{v} \in \mathbb {Z}_p^n\), the inner product between the vectors is denoted as \({\varvec{u}} \cdot {\varvec{v}} = \varvec{u}^{\top } \varvec{v} = \sum _{i \in [n]} \varvec{u}[i]\varvec{v}[i] \in \mathbb {Z}_p\). We define the generalized inner product between two vectors \(\varvec{u} \in \mathbb {Z}_p^{\mathcal {I}_1}, \varvec{v} \in \mathbb {Z}_p^{\mathcal {I}_2}\) by \({\varvec{u}} \cdot {\varvec{v}} = \sum _{i \in \mathcal {I}_1 \cap \mathcal {I}_2} \varvec{u}[i]\varvec{v}[i]\).
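The generalized inner product simply ignores indices outside \(\mathcal {I}_1 \cap \mathcal {I}_2\). A minimal Python sketch, representing a vector in \(\mathbb {Z}_p^{\mathcal {I}}\) as a dictionary keyed by the index set (a representation chosen here for illustration; `gen_inner` is a hypothetical name):

```python
def gen_inner(u: dict, v: dict, p: int) -> int:
    """Generalized inner product of u in Z_p^{I1} and v in Z_p^{I2}.

    Vectors are dicts keyed by their index sets; only indices in I1 ∩ I2 contribute.
    """
    return sum(u[i] * v[i] for i in u.keys() & v.keys()) % p
```

For instance, `gen_inner({"a": 2, "b": 3}, {"b": 4, "c": 5}, 7)` returns \(3 \cdot 4 \bmod 7 = 5\); when both vectors share the index set \([n]\), it coincides with the ordinary inner product.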

Tensor products Let \(\varvec{u} \in \mathbb {Z}_p^{\mathcal {I}_1}\) and \(\varvec{v} \in \mathbb {Z}_p^{\mathcal {I}_2}\) be two vectors; their Kronecker product \(\varvec{w} = \varvec{u} \otimes \varvec{v}\) is a vector in \(\mathbb {Z}_p^{\mathcal {I}_1 \times \mathcal {I}_2}\) with entries defined by \(\varvec{w}[(i, j)] = \varvec{u}[i]\varvec{v}[j]\). For two matrices \({{\textbf {M}}}_1 \in \mathbb {Z}_p^{\mathcal {I}_1 \times \mathcal {I}_2}\) and \({{\textbf {M}}}_2 \in \mathbb {Z}_p^{\mathcal {I}_1' \times \mathcal {I}_2'}\), their Kronecker product \({{\textbf {M}}} = {{\textbf {M}}}_1 \otimes {{\textbf {M}}}_2\) is a matrix in \(\mathbb {Z}_p^{(\mathcal {I}_1\times \mathcal {I}_1')\times (\mathcal {I}_2\times \mathcal {I}_2')}\) with entries defined by \({{\textbf {M}}}[(i_1, i_1'), (i_2, i_2')] ={{\textbf {M}}}_1[i_1, i_2]{{\textbf {M}}}_2[i_1', i_2'] \).
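Using a dictionary representation indexed by the index sets (an illustrative assumption, with hypothetical names `kron_vec` and `kron_mat`), the Kronecker products follow the entry-wise definitions directly; matrices are dictionaries keyed by (row, column) pairs.

```python
def kron_vec(u: dict, v: dict, p: int) -> dict:
    """w = u ⊗ v indexed by I1 x I2, with w[(i, j)] = u[i] v[j] mod p."""
    return {(i, j): u[i] * v[j] % p for i in u for j in v}

def kron_mat(M1: dict, M2: dict, p: int) -> dict:
    """M = M1 ⊗ M2 with M[(i1, i1'), (i2, i2')] = M1[i1, i2] M2[i1', i2'] mod p."""
    return {((i1, i1p), (i2, i2p)): M1[i1, i2] * M2[i1p, i2p] % p
            for (i1, i2) in M1 for (i1p, i2p) in M2}
```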

Currying Currying is the process of partially applying a function or specifying part of the indices of a vector/matrix, which yields another function with fewer arguments or another vector/matrix with fewer indices. We use the usual syntax for evaluating a function or indexing into a vector/matrix, except that unspecified variables are represented by “\(\textvisiblespace \)”. For example, let \({{\textbf {M}}} \in \mathbb {Z}_p^{(\mathcal {I}_1 \times \mathcal {I}_2)\times (\mathcal {J}_1 \times \mathcal {J}_2)}\) and \(i_1 \in \mathcal {I}_1, j_2 \in \mathcal {J}_2\); then \({{\textbf {M}}}[(i_1, \textvisiblespace ), (\textvisiblespace , j_2)]\) is a matrix \({{\textbf {N}}} \in \mathbb {Z}_p^{\mathcal {I}_2 \times \mathcal {J}_1}\) such that \({{\textbf {N}}}[i_2, j_1] = {{\textbf {M}}}[(i_1, i_2), (j_1, j_2)]\) for all \(i_2 \in \mathcal {I}_2, j_1\in \mathcal {J}_1\).
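Currying can likewise be sketched on dictionary-indexed matrices. In this hypothetical helper, `None` plays the role of the blank \(\textvisiblespace \); the example reproduces \({{\textbf {N}}} = {{\textbf {M}}}[(i_1, \textvisiblespace ), (\textvisiblespace , j_2)]\) with \(i_1 = 1\), \(j_2 = 2\) and small index sets chosen for illustration.

```python
BLANK = None  # plays the role of the blank symbol for unspecified coordinates

def _matches(idx: tuple, pattern: tuple) -> bool:
    """Does a composite index agree with the pattern on every specified coordinate?"""
    return all(p is BLANK or p == c for c, p in zip(idx, pattern))

def _free(idx: tuple, pattern: tuple):
    """Keep only the coordinates left blank in the pattern."""
    free = tuple(c for c, p in zip(idx, pattern) if p is BLANK)
    return free[0] if len(free) == 1 else free

def curry(M: dict, row_pattern: tuple, col_pattern: tuple) -> dict:
    """Fix the non-blank coordinates of the composite row/column indices of M."""
    return {(_free(r, row_pattern), _free(c, col_pattern)): val
            for (r, c), val in M.items()
            if _matches(r, row_pattern) and _matches(c, col_pattern)}

I1, I2, J1, J2 = range(1, 3), range(1, 4), range(1, 5), range(1, 3)
M = {((i1, i2), (j1, j2)): 1000 * i1 + 100 * i2 + 10 * j1 + j2
     for i1 in I1 for i2 in I2 for j1 in J1 for j2 in J2}
N = curry(M, (1, BLANK), (BLANK, 2))   # N = M[(1, _), (_, 2)], indexed by (i2, j1)
```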


Coefficient vector Let \(f: \mathbb {Z}_p^{\mathcal {I}} \rightarrow \mathbb {Z}_p\) be an affine function with coefficient vector \({{\textbf {f}}} \in \mathbb {Z}_p^{\mathcal {S}}\) for \(\mathcal {S} = \{ \textsf {const} \} \cup \{\textsf {coef}_i | ~i\in \mathcal {I}\}\). Then for any \(\varvec{x} \in \mathbb {Z}_p^{\mathcal {I}}\), we have \(f(\varvec{x}) = {{\textbf {f}}}[\textsf {const}] + \sum _{i \in \mathcal {I}} {{\textbf {f}}}[\textsf {coef}_i] \varvec{x}[i]\).
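Evaluating an affine function from its coefficient vector is an inner product of \({{\textbf {f}}}\) with the vector that has 1 at \(\textsf {const}\) and \(\varvec{x}[i]\) at \(\textsf {coef}_i\). The sketch below checks this on a small example; the dictionary keys `"const"` and `("coef", i)` are an illustrative encoding of \(\mathcal {S}\), and both function names are hypothetical.

```python
def eval_affine(f: dict, x: dict, p: int) -> int:
    """f(x) = f[const] + sum_i f[(coef, i)] * x[i] (mod p)."""
    return (f["const"] + sum(f[("coef", i)] * xi for i, xi in x.items())) % p

def as_inner_product(f: dict, x: dict, p: int) -> int:
    """The same value as an inner product of f with (1, x) re-indexed by S."""
    ext = {"const": 1, **{("coef", i): xi for i, xi in x.items()}}
    return sum(f[s] * ext[s] for s in f) % p

f = {"const": 3, ("coef", 1): 2, ("coef", 2): 5}   # f(x) = 3 + 2 x[1] + 5 x[2]
x = {1: 4, 2: 1}
```

With \(p = 11\), both functions return \((3 + 8 + 5) \bmod 11 = 5\).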

3.1 Bilinear groups and hardness assumptions

We use a pairing group generator \(\mathcal {G}\) that takes as input \(1^\lambda \) and outputs a tuple \(\textsf{G} = (\mathbb {G}_1, \mathbb {G}_2, \mathbb {G}_{T }, g_1, g_2, e)\) where \(\mathbb {G}_1, \mathbb {G}_2, \mathbb {G}_{T }\) are groups of prime order \(p = p(\lambda )\) and \(g_i\) is a generator of the group \(\mathbb {G}_i\) for \(i \in \{1, 2\}\). The map \(e : \mathbb {G}_1 \times \mathbb {G}_2 \rightarrow \mathbb {G}_{T }\) satisfies the following properties:

-

bilinear: \(e(g_1^a, g_2^b) = e(g_1, g_2)^{ab}\) for all \(a, b \in \mathbb {Z}_p\).

-

non-degenerate: \(e(g_1, g_2)\) generates \(\mathbb {G}_{T }\).

The group operations in \(\mathbb {G}_i\) for \(i \in \{1, 2, T \}\) and the map e are efficiently computable in deterministic polynomial time in the security parameter \(\lambda \). For a matrix \({{\textbf {A}}}\) and each \(i \in \{1, 2, T \}\), we use the notation \([\![{{\textbf {A}}}]\!]_i\) to denote \(g_i^{{{\textbf {A}}}}\) where the exponentiation is element-wise. The group operation is written additively while using the bracket notation, i.e. \([\![{{\textbf {A}}} + {{\textbf {B}}}]\!]_i = [\![{{\textbf {A}}}]\!]_i + [\![{{\textbf {B}}}]\!]_i\) for matrices \({{\textbf {A}}}\) and \({{\textbf {B}}}\). Observe that, given \({{\textbf {A}}}\) and \([\![{{\textbf {B}}}]\!]_i\), we can efficiently compute \([\![{{\textbf {A}}}{{\textbf {B}}}]\!]_i = {{\textbf {A}}}\cdot [\![{{\textbf {B}}}]\!]_i\). We write the pairing operation multiplicatively, i.e. \(e([\![{{\textbf {A}}}]\!]_1, [\![{{\textbf {B}}}]\!]_2) = [\![{{\textbf {A}}}]\!]_1[\![{{\textbf {B}}}]\!]_2 = [\![{{\textbf {A}}}{{\textbf {B}}}]\!]_{T }\).
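To illustrate the bracket notation only, here is a deliberately insecure toy model in Python. It assumes that the order-11 subgroup of \(\mathbb {Z}_{23}^*\) generated by 2 plays the role of all of \(\mathbb {G}_1, \mathbb {G}_2, \mathbb {G}_{T }\), and it computes the "pairing" by brute-force discrete logarithms, which is feasible only because the group is tiny; it is not a real pairing, and all names are hypothetical. It shows that \([\![a]\!] + [\![b]\!] = [\![a+b]\!]\), \(c \cdot [\![a]\!] = [\![ca]\!]\) and \([\![\varvec{u}]\!]_1[\![\varvec{v}]\!]_2 = [\![\varvec{u}^{\top }\varvec{v}]\!]_{T }\) all reduce to arithmetic in the exponent.

```python
# Deliberately insecure toy: 2 generates the subgroup of order P = 11 in Z_23^*.
MOD, P, G = 23, 11, 2

def enc(a: int) -> int:
    """[[a]] = g^a (one toy group serves as G_1, G_2 and G_T)."""
    return pow(G, a % P, MOD)

def add(A: int, B: int) -> int:
    """[[a]] + [[b]] = [[a + b]]: the group operation, written additively."""
    return A * B % MOD

def smul(c: int, A: int) -> int:
    """c * [[a]] = [[c a]], computable from c and [[a]] alone."""
    return pow(A, c % P, MOD)

def dlog(A: int) -> int:
    """Brute-force discrete log; feasible only because P is tiny."""
    for k in range(P):
        if pow(G, k, MOD) == A:
            return k
    raise ValueError("element not in the subgroup")

def pair(A: int, B: int) -> int:
    """Toy stand-in for e([[a]]_1, [[b]]_2) = [[a b]]_T (not a real pairing)."""
    return enc(dlog(A) * dlog(B))

def pair_ip(U: list, V: list) -> int:
    """[[u]]_1 [[v]]_2 = [[u^T v]]_T as a product of coordinate-wise pairings."""
    out = enc(0)
    for A, B in zip(U, V):
        out = add(out, pair(A, B))
    return out
```

Recovering the small value 5 from `pair_ip([enc(1), enc(2)], [enc(3), enc(1)])` by brute force mirrors the usual final step of pairing-based FE decryption, where a polynomially bounded result is recovered from the exponent.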

Assumption 1

(Symmetric external Diffie–Hellman assumption) We say that the \(\textsf{SXDH}\) assumption holds in a pairing group \(\textsf{G} = (\mathbb {G}_1, \mathbb {G}_2, \mathbb {G}_{T }, g_1, g_2, e)\) of order p if the \(\textsf{DDH}\) assumption holds in both \(\mathbb {G}_1\) and \(\mathbb {G}_2\), i.e., \(\{[\![a]\!]_i, [\![b]\!]_i, [\![ab]\!]_i\} \approx \{[\![a]\!]_i, [\![b]\!]_i, [\![c]\!]_i\}\) for \(i \in \{1, 2\}\) and \(a, b, c \leftarrow \mathbb {Z}_{p}\).

3.2 Turing machine formulation

In this subsection, we describe the main computational model of this work, namely \(\text {Turing machines}\) with a read-only input tape and a read-write work tape. This type of \(\text {Turing machine}\) is used to handle decision problems belonging to space-bounded complexity classes such as Logspace. We define below \(\text {Turing machines}\) with time complexity \(T\) and space complexity \(S\). The \(\text {Turing machine}\) either accepts or rejects an input string within this time/space bound. We also stick to the binary alphabet for the sake of simplicity.

Definition 1

(\(\text {Turing machine}\) with time/space bound computation) [62] A (deterministic) \(\text {Turing machine}\) over \(\{0, 1\}\) is a tuple \(M = (Q, \varvec{y}_{\textsf{acc}}, \delta )\), where \(Q \ge 1\) is the number of states (we use [Q] as the set of states and 1 as the initial state), \(\varvec{y}_{\textsf{acc}} \in \{0, 1\}^Q\) indicates whether each state is accepting, and

is the state transition function which, given the current state q, the symbol x on the input tape under scan, and the symbol w on the work tape under scan, specifies the new state \(q'\), the symbol \(w'\) overwriting w, the direction \(\mathrm {\varDelta } i\) in which the input tape pointer moves, and the direction \(\mathrm {\varDelta } j\) in which the work tape pointer moves. The machine is required to hang (instead of halting) once it reaches an accepting state, i.e., for all \(q \in [Q]\) such that \(\varvec{y}_{\textsf{acc}}[q] = 1\) and all \(x, w \in \{0, 1\}\), it holds that \(\delta (q, x, w) = (q, w, 0, 0)\).

For input length \(N\ge 1\) and space complexity bound \(S\ge 1\), the set of internal configurations of M is

where \((i, j, \varvec{W}, q)\in \mathcal {C}_{M,N,S}\) specifies the input tape pointer \(i \in [N]\), the work tape pointer \(j \in [S]\), the content of the work tape \(\varvec{W} \in \{0, 1\}^{S}\) and the machine state \(q \in [Q]\).

For any bit-string \(\varvec{x} \in \{0, 1\}^N\) for \(N \ge 1\) and time/space complexity bounds \(T, S\ge 1\), the machine M accepts \(\varvec{x}\) within time \(T\) and space \(S\) if there exists a sequence of internal configurations (computation path of \(T\) steps) \(c_0, \ldots , c_{T} \in \mathcal {C}_{M,N,S}\) with \(c_t = (i_t, j_t, \varvec{W}_t, q_t)\) such that

Denote by \(M|_{N, T, S}\) the function \(\{0, 1\}^N \rightarrow \{0, 1\}\) mapping \(\varvec{x}\) to whether M accepts \(\varvec{x}\) in time \(T\) and space \(S\). Define \(\textsf {TM} = \{M |~ M\text { is a Turing machine}\}\) to be the set of all \(\text {Turing machines}\).

Note that the above definition does not allow the \(\text {Turing machines}\) to move off the input/work tape. For instance, if \(\delta \) specifies moving the input pointer to the left/right when it is already at the leftmost/rightmost position, there is no valid next internal configuration. This type of situation can be handled by encoding the input string as described in [62]. Moving off the work tape to the left can be handled similarly; however, moving off the work tape to the right is undetectable by the machine, and this is intended due to the space bound. That is, when the space bound is violated, the input is silently rejected.
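Definition 1 and the remark above can be turned into a direct simulator. The following minimal Python sketch assumes the initial configuration \(c_0 = (1, 1, \varvec{0}_S, 1)\) (both pointers on the first cell, blank work tape, state 1), treats any move off a tape as the absence of a valid next configuration, and accepts iff the state after exactly \(T\) steps is accepting. The toy machine `delta_find_one` (scan right until a 1 is read) and all names are hypothetical.

```python
from typing import Callable, Dict, Tuple

# delta(q, x, w) -> (q', w', di, dj), with di, dj in {-1, 0, +1}
Delta = Callable[[int, int, int], Tuple[int, int, int, int]]

def accepts(Q: int, y_acc: Dict[int, int], delta: Delta,
            x: str, T: int, S: int) -> bool:
    """Compute M|_{N,T,S}(x) by running exactly T steps from c_0 = (1, 1, 0^S, 1)."""
    N = len(x)
    i, j, W, q = 1, 1, [0] * S, 1          # 1-indexed pointers, blank work tape, state 1
    for _ in range(T):
        q_new, w_new, di, dj = delta(q, int(x[i - 1]), W[j - 1])
        assert 1 <= q_new <= Q, "delta must return a state in [Q]"
        W[j - 1] = w_new                    # overwrite the scanned work-tape cell
        i, j, q = i + di, j + dj, q_new
        if not (1 <= i <= N and 1 <= j <= S):
            return False                    # no valid next configuration: reject
    return y_acc[q] == 1                    # accept iff the state after T steps accepts

def delta_find_one(q: int, x: int, w: int) -> Tuple[int, int, int, int]:
    """Hypothetical toy machine: scan right until a 1 is read, then hang in state 2."""
    if q == 2 or x == 1:
        return (2, w, 0, 0)                 # accepting state 2 hangs
    return (1, w, +1, 0)

Y_ACC = {1: 0, 2: 1}
```

With this machine, input `"001"` is accepted for \(T = 3\) but not for \(T = 2\), and `"000"` is rejected because the input pointer eventually moves off the right end of the tape.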

3.3 Functional encryption for unbounded attribute-weighted sum for Turing machines

We formally present the syntax of FE for unbounded attribute-weighted sum (\(\textsf{AWS}\)) and define adaptive simulation security of the primitive. We consider the set of all \(\text {Turing machines}\) \(\textsf {TM} = \{M |~ M\text { is a Turing machine}\}\) with time bound \(T\) and space bound \(S\).

Definition 2

(The AWS functionality for Turing machines) For any \(n, N \in \mathbb {N}\), the class of attribute-weighted sum functionalities is defined as

\( \begin{aligned} \left\{ \begin{array}{l}((\varvec{x} \in \{0, 1\}^N, 1^T, 1^{2^S}), \varvec{z} \in \mathbb {Z}_p^n) \mapsto \varvec{M}(\varvec{x})^{\top } \varvec{z} \text { where }\\ \varvec{M}(\varvec{x})^{\top } \varvec{z} = \sum _{k \in \mathcal {I}_{\varvec{M}}} \varvec{z}[k] \cdot M_k(\varvec{x}) \Bigg |~~ \begin{matrix} N, T, S\ge 1, \\ M_k\in \textsf{TM}\ \forall k\in [n], \\ \mathcal {I}_{\varvec{M}} \subseteq [n] \text { with } |\mathcal {I}_{\varvec{M}}| \ge 1 \end{matrix}\end{array}\right\} \end{aligned}\)
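For concreteness, the functionality itself can be written in a few lines of Python. This sketch assumes each \(M_k\) is given as a predicate computing \(M_k|_{N, T, S}(\varvec{x})\) and that \(\mathcal {I}_{\varvec{M}}\) is the key set of a dictionary; the names and the two example predicates are hypothetical stand-ins for actual Turing machines.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in: each machine is a predicate (x, T, S) -> bool
Machine = Callable[[str, int, int], bool]

def aws(machines: Dict[int, Machine], x: str, T: int, S: int,
        z: List[int], p: int) -> int:
    """Return M(x)^T z = sum_{k in I_M} z[k] * M_k(x) mod p, with I_M = machines.keys()."""
    n = len(z)
    if not machines or not all(1 <= k <= n for k in machines):
        raise ValueError("I_M must be a non-empty subset of [n]")
    return sum(z[k - 1] * int(M(x, T, S)) for k, M in machines.items()) % p

# Example weights: M_1 accepts inputs starting with 1, M_3 accepts everything
example = {1: lambda x, T, S: x.startswith("1"), 3: lambda x, T, S: True}
```

Here \(\mathcal {I}_{\varvec{M}} = \{1, 3\}\) and \(\varvec{z} = (5, 7, 9)\), so for \(\varvec{x} = 10\) both predicates hold and the result is \(5 + 9 = 14\).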

Definition 3

(Functional encryption for attribute-weighted sum) An unbounded-slot FE for unbounded attribute-weighted sum associated with the set of \(\text {Turing machines}\) \(\textsf {TM}\) and the message space \(\mathbb {M}\) consists of four PPT algorithms defined as follows:

\(\textsf {Setup}(1^\lambda )\) The setup algorithm takes as input a security parameter and outputs the master secret-key \(\textsf{MSK}\) and the master public-key \({\textsf{MPK}}\).

\(\textsf {KeyGen}(\textsf{MSK}, (\varvec{M}, \mathcal {I}_{\varvec{M}}))\) The key generation algorithm takes as input \(\textsf{MSK}\), a tuple of \(\text {Turing machines}\) \(\varvec{M} = (M_k)_{k \in \mathcal {I}_{\varvec{M}}}\) and its index set \(\mathcal {I}_{\varvec{M}}\). It outputs a secret-key \(\textsf{SK}_{(\varvec{M},\mathcal {I}_{\varvec{M}})}\) and makes \((\varvec{M}, \mathcal {I}_{\varvec{M}})\) publicly available.

\(\textsf {Enc}({\textsf{MPK}}, ((\varvec{x}_i, 1^{T_i}, 1^{2^{S_i}}), \varvec{z}_i)_{i \in [\mathcal {N}]})\) The encryption algorithm takes as input \({\textsf{MPK}}\) and a message consisting of \(\mathcal {N}\) public–private attribute pairs \((\varvec{x}_i, \varvec{z}_i) \in \mathbb {M}\), where each public attribute \(\varvec{x}_i \in \{0, 1\}^{N_i}\) for some \(N_i \ge 1\) comes with time and space bounds \(T_i, S_i \ge 1\), and each private attribute satisfies \(\varvec{z}_i \in \mathbb {Z}_p^{n_i}\). It outputs a ciphertext \(\textsf{CT}_{(\varvec{x}_i, T_i, S_i)}\) and makes \((\varvec{x}_i, T_i, S_i)_{i \in [\mathcal {N}]}\) publicly available.

\(\textsf {Dec}((\textsf{SK}_{(\varvec{M},\mathcal {I}_{\varvec{M}})}, (\varvec{M}, \mathcal {I}_{\varvec{M}})), (\textsf{CT}_{(\varvec{x}_i, T_i, S_i)}, (\varvec{x}_i, T_i, S_i)_{i \in [\mathcal {N}]}))\) The decryption algorithm takes as input \(\textsf{SK}_{(\varvec{M},\mathcal {I}_{\varvec{M}})}\) along with the tuple of \(\text {Turing machines}\) and its index set \((\varvec{M}, \mathcal {I}_{\varvec{M}})\), and a ciphertext \(\textsf{CT}_{(\varvec{x}_i, T_i, S_i)}\) along with the associated public attributes \((\varvec{x}_i, T_i, S_i)_{i \in [\mathcal {N}]}\). It outputs a value in \(\mathbb {Z}_p\) or \(\perp \).

Correctness The unbounded-slot FE for unbounded attribute-weighted sum is said to be correct if for all \(((\varvec{x}_i \in \{0, 1\}^{N_i}, 1^{T_i}, 1^{2^{S_i}}), \varvec{z}_i \in \mathbb {Z}_p^{n_i})_{i \in [\mathcal {N}]}\) and for all \((\varvec{M} = (M_k)_{k \in \mathcal {I}_{\varvec{M}}}, \mathcal {I}_{\varvec{M}})\), we get

We now define the adaptively simulation-based security of FE for unbounded attribute-weighted sum for \(\text {Turing machines}\).

Definition 4

(Adaptive simulation security) Let \((\textsf {Setup}, \textsf {KeyGen}, \textsf {Enc}, \textsf {Dec})\) be an unbounded-slot FE for unbounded attribute-weighted sum for \(\textsf {TM}\) and message space \(\mathbb {M}\). The scheme is said to be \((\mathrm {\Phi }_{\textsf {pre}}, \mathrm {\Phi }_{\textsf{CT}}, \mathrm {\Phi }_{\text {\textsf {post}}})\)-adaptively simulation secure if for any PPT adversary \(\mathcal {A}\) making at most \(\mathrm {\Phi }_\textsf{CT}\) ciphertext queries, and at most \(\mathrm {\Phi }_{\textsf {pre}}\) and \(\mathrm {\Phi }_{\text {\textsf {post}}}\) secret key queries before and after the ciphertext queries, respectively, we have \(\text {\textsf {Expt}}_{\mathcal {A}, \text {\textsf {real}}}^{\text {\textsf {UAWS}}}(1^\lambda ) {\mathop {\approx }\limits ^{c}} \text {\textsf {Expt}}_{\mathcal {A}, \text {\textsf {ideal}}}^{\text {\textsf {UAWS}}}(1^\lambda )\), where the experiments are defined as follows. Moreover, an unbounded-slot FE for attribute-weighted sums is said to be \((\textsf{poly}, \mathrm {\Phi }_{\textsf{CT}}, \textsf{poly})\)-adaptively simulation secure if it is \((\mathrm {\Phi }_{\textsf {pre}}, \mathrm {\Phi }_{\textsf{CT}}, \mathrm {\Phi }_{\text {\textsf {post}}})\)-adaptively simulation secure for arbitrary polynomials \(\mathrm {\Phi }_{\textsf {pre}}\) and \(\mathrm {\Phi }_{\text {\textsf {post}}}\) in the security parameter \(\lambda \).

3.4 Function-hiding slotted inner product functional encryption

Definition 5

(Slotted inner product functional encryption) [62] Let \(\textsf{G} = (\mathbb {G}_1, \mathbb {G}_2, \mathbb {G}_{T }, g_1, g_2, e)\) be a tuple of pairing groups of prime order p. A slotted inner product functional encryption (\(\textsf{IPFE}\)) scheme based on \(\textsf{G}\) consists of 5 efficient algorithms:

\(\textsf{IPFE}.\textsf {Setup}(1^\lambda , S_{\textsf{pub}}, S_{\textsf{priv}})\) The setup algorithm takes as input a security parameter \(\lambda \) and two disjoint index sets, the public slots \(S_{\textsf{pub}}\) and the private slots \(S_{\textsf{priv}}\). It outputs the master secret-key \(\textsf{IPFE}.\textsf{MSK}\) and the master public-key \(\textsf{IPFE}.{\textsf{MPK}}\). Let \(S = S_{\textsf{pub}} \cup S_{\textsf{priv}}\) be the whole index set and \(|S|, |S_{\textsf{pub}}|, |S_{\textsf{priv}}|\) denote the number of indices in S, \(S_{\textsf{pub}}\) and \(S_{\textsf{priv}}\) respectively.

\(\textsf{IPFE}.\textsf {KeyGen}(\textsf{IPFE}.\textsf{MSK}, [\![\varvec{v}]\!]_2)\) The key generation algorithm takes as input \(\textsf{IPFE}.\textsf{MSK}\) and a vector \([\![\varvec{v}]\!]_2 \in \mathbb {G}_2^{|S|}\). It outputs a secret-key \(\textsf{IPFE}.\textsf{SK}\) for \(\varvec{v} \in \mathbb {Z}_p^{|S|}\).

\(\textsf{IPFE}.\textsf {Enc}(\textsf{IPFE}.\textsf{MSK}, [\![\varvec{u}]\!]_1)\) The encryption algorithm takes as input \(\textsf{IPFE}.\textsf{MSK}\) and a vector \([\![\varvec{u}]\!]_1 \in \mathbb {G}_1^{|S|}\). It outputs a ciphertext \(\textsf{IPFE}.\textsf{CT}\) for \(\varvec{u} \in \mathbb {Z}_p^{|S|}\).

\(\textsf{IPFE}.\textsf {Dec}(\textsf{IPFE}.\textsf{SK}, \textsf{IPFE}.\textsf{CT})\) The decryption algorithm takes as input a secret-key \(\textsf{IPFE}.\textsf{SK}\) and a ciphertext \(\textsf{IPFE}.\textsf{CT}\). It outputs an element from \(\mathbb {G}_{T }\).

\(\textsf{IPFE}.\textsf {SlotEnc}(\textsf{IPFE}.{\textsf{MPK}}, [\![\varvec{u}]\!]_1)\) The slot encryption algorithm takes as input \(\textsf{IPFE}.{\textsf{MPK}}\) and a vector \([\![\varvec{u}]\!]_1 \in \mathbb {G}_1^{|S_{\textsf{pub}}|}\). It outputs a ciphertext \(\textsf{IPFE}.\textsf{CT}\) for \((\varvec{u}|| \varvec{0}_{|S_{\textsf{priv}}|}) \in \mathbb {Z}_p^{|S|}\).

Correctness The correctness of a slotted \(\textsf{IPFE}\) scheme requires the following two properties.

-

Decryption Correctness: The slotted \(\textsf{IPFE}\) is said to satisfy decryption correctness if for all \(\varvec{u}, \varvec{v} \in \mathbb {Z}_p^{|S|}\), we have

$$\begin{aligned} \begin{array}{l} \textrm{Pr} \left[ \begin{array}{l} \textsf {Dec}(\textsf{IPFE}.\textsf{SK}, \textsf{IPFE}.\textsf{CT}) = [\![{\varvec{v}} \cdot {\varvec{u}}]\!]_{T }: \\ (\textsf{IPFE}.\textsf{MSK}, \textsf{IPFE}.{\textsf{MPK}}) \leftarrow \textsf {Setup}(1^\lambda , S_{\textsf{pub}}, S_{\textsf{priv}}), \\ \textsf{IPFE}.\textsf{SK}\leftarrow \textsf {KeyGen}(\textsf{IPFE}.\textsf{MSK}, [\![\varvec{v}]\!]_2), \\ \textsf{IPFE}.\textsf{CT}\leftarrow \textsf {Enc}(\textsf{IPFE}.\textsf{MSK}, [\![\varvec{u}]\!]_1) \end{array} \right] = 1 \end{array} \end{aligned}$$

-

Slot-Mode Correctness: The slotted \(\textsf{IPFE}\) is said to satisfy the slot-mode correctness if for all vectors \(\varvec{u} \in \mathbb {Z}_p^{|S_{\textsf{pub}}|}\), we have

$$\begin{aligned} \begin{array}{l} \Bigg \{ \begin{array}{l} (\textsf{IPFE}.\textsf{MSK}, \textsf{IPFE}.{\textsf{MPK}}, \textsf{IPFE}.\textsf{CT}) :\\ (\textsf{IPFE}.\textsf{MSK}, \textsf{IPFE}.{\textsf{MPK}}) \leftarrow \textsf {Setup}(1^\lambda , S_{\textsf{pub}}, S_{\textsf{priv}}), \\ \textsf{IPFE}.\textsf{CT}\leftarrow \textsf {Enc}(\textsf{IPFE}.\textsf{MSK}, [\![\varvec{u}|| \varvec{0}_{|S_{\textsf{priv}}|}]\!]_1) \end{array} \Bigg \}\\ \\ \equiv \Bigg \{ \begin{array}{l} (\textsf{IPFE}.\textsf{MSK}, \textsf{IPFE}.{\textsf{MPK}}, \textsf{IPFE}.\textsf{CT}) : \\ (\textsf{IPFE}.\textsf{MSK}, \textsf{IPFE}.{\textsf{MPK}}) \leftarrow \textsf {Setup}(1^\lambda , S_{\textsf{pub}}, S_{\textsf{priv}}), \\ \textsf{IPFE}.\textsf{CT}\leftarrow \textsf {SlotEnc}(\textsf{IPFE}.{\textsf{MPK}}, [\![\varvec{u}]\!]_1) \end{array} \Bigg \} \end{array} \end{aligned}$$
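The IPFE algorithms operate on vectors given in the implicit notation \([\![a]\!]_i = g_i^a\), and decryption returns \([\![\varvec{v} \cdot \varvec{u}]\!]_{T }\) rather than \(\varvec{v} \cdot \varvec{u}\) itself. The toy Python sketch below illustrates this notation in a single subgroup of \(\mathbb {Z}_{23}^*\) of prime order 11 generated by 2. It is not an IPFE scheme: it has no pairing and no security, and it computes the inner product in the exponent from \([\![\varvec{u}]\!]\) and a vector \(\varvec{v}\) given in the clear. It also shows that padding \(\varvec{u}\) with zeros in the private slots, as in slot-mode correctness, leaves only the public-slot contribution, and that recovering \(\varvec{v} \cdot \varvec{u}\) requires a discrete logarithm, which is feasible only when the value lies in a small known range. All names and parameters are hypothetical.

```python
from typing import List, Optional, Sequence

# Toy parameters, far too small for security: Q_MOD = 23 is a safe prime and
# G = 2 generates the subgroup of Z_23^* of prime order P_ORDER = 11.
Q_MOD, P_ORDER, G = 23, 11, 2


def bracket(a: int) -> int:
    """Implicit representation [[a]] = G^a of a in Z_{P_ORDER}."""
    return pow(G, a % P_ORDER, Q_MOD)


def inner_product_in_exponent(u_enc: Sequence[int], v: Sequence[int]) -> int:
    """From [[u]] (componentwise) and v in the clear, compute [[v . u]] = prod [[u_i]]^{v_i}."""
    acc = 1
    for gu, vi in zip(u_enc, v):
        acc = acc * pow(gu, vi % P_ORDER, Q_MOD) % Q_MOD
    return acc


def small_dlog(h: int, bound: int) -> Optional[int]:
    """Recover a from h = [[a]] by exhaustive search, assuming 0 <= a < bound."""
    for a in range(bound):
        if bracket(a) == h:
            return a
    return None


u, v = [3, 1, 4], [2, 0, 1, 5]       # v has one extra (private) slot
u_padded: List[int] = u + [0]        # u || 0, as in slot-mode correctness
h = inner_product_in_exponent([bracket(a) for a in u_padded], v)
assert h == bracket(2 * 3 + 0 * 1 + 1 * 4)  # the private slot contributes nothing
assert small_dlog(h, P_ORDER) == 10
```

In the pairing setting, \(e([\![a]\!]_1, [\![b]\!]_2) = [\![ab]\!]_{T }\) takes over the role played here by exponentiation with a clear \(v_i\).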

Security Let \((\textsf{IPFE}.\textsf {Setup}, \textsf{IPFE}.\textsf {KeyGen}, \textsf{IPFE}.\textsf {Enc}, \textsf{IPFE}.\textsf {Dec}, \textsf{IPFE}.\textsf {SlotEnc})\) be a slotted \(\textsf{IPFE}\). The scheme is said to be adaptively function-hiding secure if for all PPT adversaries \(\mathcal {A}\), we have \(\text {\textsf {Expt}}_{\mathcal {A}}^{\text {\textsf {FH-IPFE}}}(1^\lambda , 0) {\mathop {\approx }\limits ^{c}} \text {\textsf {Expt}}_{\mathcal {A}}^{\text {\textsf {FH-IPFE}}}(1^\lambda , 1)\), where the experiment \(\text {\textsf {Expt}}_{\mathcal {A}}^{\text {\textsf {FH-IPFE}}}(1^\lambda , b)\) for \(b \in \{0,1\}\) is defined as follows:

where \(\varvec{v}_j|_{S_{\textsf{pub}}}\) represents the elements of \(\varvec{v}_j\) sitting at the indices in \(S_{\textsf{pub}}\).

Lemma 1

[61, 62] Let \(\textsf{G} = (\mathbb {G}_1, \mathbb {G}_2, \mathbb {G}_{T }, g_1, g_2, e)\) be a tuple of pairing groups of prime order p and \(k \ge 1\) an integer constant. If \(\text {\textsf {MDDH}}_k\) holds in both groups \(\mathbb {G}_1, \mathbb {G}_2\), then there is an adaptively function-hiding secure \(\textsf{IPFE}\) scheme based on \(\textsf{G}\).

3.5 Arithmetic key garbling scheme for \(\text {Turing machines}\)

Lin and Luo [62] introduced the arithmetic key garbling scheme (\(\textsf{AKGS}\)), an information-theoretic primitive inspired by randomized encodings [18] and partial garbling schemes [51]. It garbles a function \(f: \mathbb {Z}_p^n \rightarrow \mathbb {Z}_p\) (possibly of size \((m+1)\)) along with two secrets \(z, \beta \in \mathbb {Z}_p\) and produces affine label functions \(L_1, \dots , L_{m+1} : \mathbb {Z}_p^n \rightarrow \mathbb {Z}_p\). Given f, an input \(\varvec{x} \in \mathbb {Z}_p^n\) and the values \(L_1(\varvec{x}), \dots , L_{m+1}(\varvec{x})\), there is an efficient algorithm that computes \(z f(\varvec{x}) + \beta \) without revealing any other information about z and \(\beta \). Lin and Luo [62] also design \(\textsf{AKGS}\) for \(\text {Turing machines}\) with time and space bounds. Much of this section is taken from Sections 5 and 7.1 of [62]; readers familiar with \(\textsf{AKGS}\) for \(\text {Turing machines}\) can skip it. We define \(\textsf{AKGS}\) for the function class

for the set of all time- and space-bounded \(\text {Turing machine}\) computations. We refer to [62] for a detailed discussion of how a \(\text {Turing machine}\) computation is expressed as a sequence of matrix multiplications, and of the construction of \(\textsf{AKGS}\) for matrix multiplication.
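The view of a bounded computation as a sequence of matrix multiplications can be sketched in Python as follows, for a fixed input \(\varvec{x}\): index a 0/1 transition matrix by internal configurations \((i, j, W, q)\) (input head, work head, work-tape contents, state), start from the indicator vector of the initial configuration, multiply by the matrix \(T\) times, and sum the entries belonging to accepting states. The encoding of \(\delta \), the initial configuration \((1, 1, \varvec{0}_{S}, 1)\), the absorbing accepting state of the example machine, and all names are our assumptions; the sketch does not track how the matrix depends on \(\varvec{x}\), and [62] should be consulted for the actual matrices.

```python
from itertools import product
from typing import Dict, List, Set, Tuple

# Hypothetical encoding: (state, input_bit, work_bit) -> (next_state, write, di, dj).
Delta = Dict[Tuple[int, int, int], Tuple[int, int, int, int]]
Config = Tuple[int, int, Tuple[int, ...], int]  # (input head i, work head j, tape W, state q)


def configurations(N: int, S: int, Q: int) -> List[Config]:
    """All internal configurations, in lexicographic order."""
    return list(product(range(1, N + 1), range(1, S + 1),
                        product((0, 1), repeat=S), range(1, Q + 1)))


def transition_matrix(delta: Delta, x: List[int], S: int, Q: int):
    """0/1 matrix with M[a][b] = 1 iff configuration a moves to configuration b on x."""
    confs = configurations(len(x), S, Q)
    index = {c: k for k, c in enumerate(confs)}
    M = [[0] * len(confs) for _ in confs]
    for (i, j, W, q) in confs:
        q2, w, di, dj = delta[(q, x[i - 1], W[j - 1])]
        i2, j2, W2 = i + di, j + dj, W[:j - 1] + (w,) + W[j:]
        if 1 <= i2 <= len(x) and 1 <= j2 <= S:  # off-tape moves leave a zero row
            M[index[(i, j, W, q)]][index[(i2, j2, W2, q2)]] = 1
    return confs, M


def accepts_via_matrix(delta: Delta, accepting: Set[int], x: List[int],
                       T: int, S: int, Q: int) -> int:
    """Evaluate e_init^T * M**T * y_acc via T row-vector-times-matrix products."""
    confs, M = transition_matrix(delta, x, S, Q)
    start = (1, 1, (0,) * S, 1)
    u = [1 if c == start else 0 for c in confs]
    for _ in range(T):
        u = [sum(u[a] * M[a][b] for a in range(len(confs))) for b in range(len(confs))]
    return sum(val for val, c in zip(u, confs) if c[3] in accepting)


# Example machine: accept iff x contains a 1 (state 2 is accepting and absorbing).
OR_DELTA: Delta = {}
for w in (0, 1):
    OR_DELTA[(1, 0, w)] = (1, w, +1, 0)  # read 0: move right
    OR_DELTA[(1, 1, w)] = (2, w, 0, 0)   # read 1: enter state 2
    for b in (0, 1):
        OR_DELTA[(2, b, w)] = (2, w, 0, 0)  # state 2 loops in place
```

Since each configuration has at most one successor, the vector stays 0/1, and a move off a tape produces a zero row, so the computation rejects. For the example machine, `accepts_via_matrix(OR_DELTA, {2}, [0, 0, 1], 3, 1, 2)` returns 1 and `accepts_via_matrix(OR_DELTA, {2}, [0, 0, 0], 5, 1, 2)` returns 0.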

Definition 6

(Arithmetic key garbling scheme (\(\textsf{AKGS}\))) [62] An arithmetic key garbling scheme (\(\textsf{AKGS}\)) for the function class \(\mathcal {F}\) consists of two efficient algorithms:

\(\textsf{Garble}((M, 1^{N}, 1^{T}, 1^{2^{S}}, p), z, \beta )\) The garbling algorithm is randomized. It takes as input a tuple consisting of a function \(M|_{N, T, S}\) over \(\mathbb {Z}_p\) from \(\mathcal {F}\), an input length N, a time bound \(T\), a space bound \(S\) with \(N, T, S\ge 1\), and a prime p, together with two secret integers \(z, \beta \in \mathbb {Z}_p\). It outputs a set of affine functions \(L_{\textsf{init}}, (L_{t, \theta })_{t \in [T+1], \theta \in \mathcal {C}_{M,N,S}}: \mathbb {Z}_p^{N} \rightarrow \mathbb {Z}_p\), called label functions, which specify how an input of length N is encoded as labels. Concretely, it outputs the coefficient vectors \(\varvec{\ell }_{\textsf{init}}, (\varvec{\ell }_{t, \theta })_{t \in [T+1], \theta \in \mathcal {C}_{M,N,S}}\).

\(\textsf{Eval}((M, 1^{N}, 1^{T}, 1^{2^{S}}, p), \varvec{x}, \ell _{\textsf{init}}, (\ell _{t, \theta })_{t \in [T+1],\theta \in \mathcal {C}_{M,N,S}})\) The evaluation algorithm is deterministic. It takes as input a function \(M|_{N, T, S}\) over \(\mathbb {Z}_p\) from \(\mathcal {F}\), an input vector \(\varvec{x} \in \mathbb {Z}_p^{N}\) and the integers \(\ell _{\textsf{init}}, (\ell _{t, \theta })_{t \in [T+1], \theta \in \mathcal {C}_{M,N,S}} \in \mathbb {Z}_p\), which are meant to be the values of the label functions at \(\varvec{x} \in \mathbb {Z}_p^N\). It outputs a value in \(\mathbb {Z}_p\).

Correctness The \(\textsf{AKGS}\) is said to be correct if for all tuples \((M, 1^{N}, 1^{T}, 1^{2^{S}}, p)\), integers \(z, \beta \in \mathbb {Z}_p\) and \(\varvec{x} \in \mathbb {Z}_p^N\), we have

The scheme has a deterministic shape, meaning that the number of label functions, \(m= 1+ (T+1)NS2^{S} Q\), is determined solely by the tuple \((M, 1^{N}, 1^{T}, 1^{2^{S}}, p)\), independent of \(z, \beta \) and the randomness in \(\textsf{Garble}\). The number m of label functions is called the garbling size of \(M|_{N, T, S}\) under this scheme. For simplicity of notation, we number the label values (or functions) as \(1, \ldots , m\) in lexicographic order, where the first two label values are \(\ell _{\textsf{init}}, \ell _{(1, 1, 1, \varvec{0}_{S}, 1)}\) and the last label value is \(\ell _{(T+1, N, S, \varvec{1}_{S}, Q)}\).
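The garbling size and the lexicographic numbering can be checked mechanically. The Python sketch below assumes, as the first and last label indices above suggest, that an internal configuration \(\theta \) is a tuple \((i, j, W, q)\) with \(i \in [N]\), \(j \in [S]\), \(W \in \{0, 1\}^S\) and \(q \in [Q]\), and that work-tape contents W are ordered lexicographically as bit strings. The helper names are hypothetical.

```python
from itertools import product


def garbling_size(N: int, T: int, S: int, Q: int) -> int:
    """m = 1 + (T + 1) * N * S * 2**S * Q: one initial label plus one per (t, theta)."""
    return 1 + (T + 1) * N * S * 2 ** S * Q


def label_indices(N: int, T: int, S: int, Q: int) -> list:
    """'init' followed by every (t, i, j, W, q) in lexicographic order."""
    rest = product(range(1, T + 2), range(1, N + 1), range(1, S + 1),
                   product((0, 1), repeat=S), range(1, Q + 1))
    return ["init"] + list(rest)
```

For \(N = 2\), \(T = 1\), \(S = 2\), \(Q = 3\), `garbling_size` gives 97, and `label_indices(2, 1, 2, 3)` starts with `"init"`, `(1, 1, 1, (0, 0), 1)` and ends with `(2, 2, 2, (1, 1), 3)`, matching the numbering described above. `itertools.product` is used because it enumerates tuples in lexicographic order of its inputs.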

Linearity The \(\textsf{AKGS}\) is said to be linear if the following conditions hold:

-

\(\textsf {Garble}((M, 1^{N}, 1^{T}, 1^{2^{S}}, p), z, \beta )\) uses a uniformly random vector \(\varvec{r} \leftarrow \mathbb {Z}_p^{m}\) as its randomness, where m is determined solely by \((M, 1^{N}, 1^{T}, 1^{2^{S}}, p)\), independent of \(z, \beta \).

-

The coefficient vectors \(\varvec{\ell }_1, \dots , \varvec{\ell }_{m}\) produced by \(\textsf {Garble}((M, 1^{N}, 1^{T}, 1^{2^{S}}, p), z, \beta )\) are linear in \((z, \beta , \varvec{r})\).

-

\(\textsf {Eval}((M, 1^{N}, 1^{T}, 1^{2^{S}}, p), \varvec{x}, \ell _1, \ldots , \ell _{m})\) is linear in \(\ell _1, \dots , \ell _{m}\).
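To see correctness and linearity at work, the Python sketch below implements a toy \(\textsf{AKGS}\) for iterated matrix products \(f(\varvec{x}) = \varvec{e}^{\top } \mathbf {M}(\varvec{x})^{T} \varvec{a}\), the form a bounded \(\text {Turing machine}\) computation takes. It uses a telescoping garbling: with randomness \(\varvec{r}_1, \ldots , \varvec{r}_{T+1}\), set \(\ell _{\textsf{init}} = \beta + \varvec{e}^{\top } \varvec{r}_1\), \(\varvec{\ell }_t = -\varvec{r}_t + \mathbf {M}(\varvec{x}) \varvec{r}_{t+1}\) for \(t \in [T]\), and \(\varvec{\ell }_{T+1} = -\varvec{r}_{T+1} + z \varvec{a}\); evaluation returns \(\ell _{\textsf{init}} + \sum _{t} \varvec{e}^{\top } \mathbf {M}(\varvec{x})^{t-1} \varvec{\ell }_t\), which telescopes to \(z f(\varvec{x}) + \beta \). This is our illustration in the spirit of [62], not a transcription of its construction. The affine matrix function \(\mathbf {M}(\varvec{x}) = \mathbf {A}_0 + \sum _k x_k \mathbf {A}_k\), the toy modulus, and all names are hypothetical, and for brevity the sketch returns label values at \(\varvec{x}\) rather than coefficient vectors.

```python
import random
from typing import List, Sequence, Tuple

P = 101  # toy prime modulus, for illustration only
Matrix = List[List[int]]


def dot(u: Sequence[int], v: Sequence[int]) -> int:
    return sum(a * b for a, b in zip(u, v)) % P


def transition(x: Sequence[int], A0: Matrix, As: List[Matrix]) -> Matrix:
    """Hypothetical affine matrix function M(x) = A0 + sum_k x[k] * As[k] over Z_P."""
    C = len(A0)
    return [[(A0[r][c] + sum(xk * Ak[r][c] for xk, Ak in zip(x, As))) % P
             for c in range(C)] for r in range(C)]


def row_times(u: Sequence[int], M: Matrix) -> List[int]:
    """Row vector u^T M."""
    return [dot(u, [row[c] for row in M]) for c in range(len(M[0]))]


def f(x, A0, As, e, a, T) -> int:
    """Function being garbled: e^T M(x)^T a, with M(x) raised to the power T."""
    u, M = list(e), transition(x, A0, As)
    for _ in range(T):
        u = row_times(u, M)
    return dot(u, a)


def garble_at(x, A0, As, e, a, T, z, beta, r) -> Tuple[int, List[List[int]]]:
    """Label values at x for randomness r = [r_1, ..., r_{T+1}], each r_t in Z_P^C."""
    M, C = transition(x, A0, As), len(e)
    ell_init = (beta + dot(e, r[0])) % P
    ells = [[(-r[t][c] + dot(M[c], r[t + 1])) % P for c in range(C)]
            for t in range(T)]                                   # t = 1, ..., T
    ells.append([(-r[T][c] + z * a[c]) % P for c in range(C)])   # t = T + 1
    return ell_init, ells


def evaluate(x, A0, As, e, ell_init, ells) -> int:
    """Eval: ell_init + sum_t e^T M(x)^(t-1) ell_t, which is linear in the labels."""
    M, u, total = transition(x, A0, As), list(e), ell_init
    for ell in ells:
        total += dot(u, ell)
        u = row_times(u, M)
    return total % P


def random_instance(seed: int, C: int = 3, N: int = 2, T: int = 4):
    """Random toy instance (A0, As, e, a, T) plus fresh garbling randomness r."""
    rng = random.Random(seed)

    def rand_vec() -> List[int]:
        return [rng.randrange(P) for _ in range(C)]

    A0 = [rand_vec() for _ in range(C)]
    As = [[rand_vec() for _ in range(C)] for _ in range(N)]
    r = [rand_vec() for _ in range(T + 1)]
    return A0, As, rand_vec(), rand_vec(), T, r


# Usage: correctness, i.e. Eval(labels) == z * f(x) + beta (mod P).
A0, As, e, a, T, r = random_instance(seed=1)
x, z, beta = [1, 0], 7, 13
ell_init, ells = garble_at(x, A0, As, e, a, T, z, beta, r)
assert evaluate(x, A0, As, e, ell_init, ells) == (z * f(x, A0, As, e, a, T) + beta) % P
```

The label values are linear in \((z, \beta , \varvec{r})\) because \(\varvec{x}\) enters only through the public matrix \(\mathbf {M}(\varvec{x})\), and the evaluation is a fixed linear combination of the labels whose coefficients \(\varvec{e}^{\top } \mathbf {M}(\varvec{x})^{t-1}\) depend only on the function and \(\varvec{x}\).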

For our \(\textsf{UAWS}\), we consider the piecewise security notion of \(\textsf{AKGS}\) defined by Lin and Luo [62]Footnote 1.

Definition 7

(Piecewise security of \(\textsf{AKGS}\)) [62] An \(\textsf{AKGS}= (\textsf{Garble}, \textsf{Eval})\) for the function class \(\mathcal {F}\) is piecewise secure if the following conditions hold:

-

The first label value is reversely sampleable from the other labels together with \((M, 1^{N}, 1^{T}, 1^{2^{S}}, p)\) and \(\varvec{x}\). This reconstruction is perfect even given all the other label functions. Formally, there exists an efficient algorithm \(\textsf{RevSamp}\) such that for all \(M|_{N, T, S} \in \mathcal {F}, z, \beta \in \mathbb {Z}_p\) and \(\varvec{x} \in \mathbb {Z}_p^N\), the following distributions are identical:

-