Abstract

This paper proposes a new Bayesian network learning algorithm, termed PCHC, that is designed to work with either continuous or categorical data. PCHC is a hybrid algorithm that consists of a skeleton identification phase (learning the relationships among the variables) followed by a scoring phase that assigns the causal directions. Monte Carlo simulations show that PCHC is substantially faster, scales well with the sample size, and produces Bayesian networks of similar or higher accuracy than a competing state-of-the-art hybrid algorithm. Finally, PCHC is applied to real data to illustrate its performance and advantages.

Notes

For a general definition of causality related to economics and econometrics see Hoover (2017).

Also known as Bayes networks, belief networks, decision networks, Bayes(ian) models or probabilistic directed acyclic graphical models.

For a systematic review of BN applications to water resources in general, the reader is referred to Phan et al. (2016).

For a review of BNs with applications to financial econometrics see Ahelegbey (2016).

The focus of the current paper is on static (cross-sectional) data. However, one could apply the technique proposed to panel data for each time period separately and model the evolution of the network.

For a literature review on graphical models and causal inference in econometrics the reader is referred to Kwon and Bessler (2011).

They concentrated on undirected exponential random graph models that are in fact close relatives of the skeleton of a BN.

PC stands for Peter and Clark, after Peter Spirtes and Clark Glymour, the two researchers who invented the algorithm (Spirtes and Glymour 1991). It is one of the oldest BN learning algorithms.

The scoring phase is also employed by the MMHC algorithm.

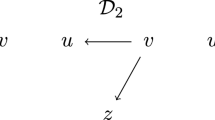

Two DAGs are called Markov equivalent if and only if they have the same skeleton and the same V-structures (Verma and Pearl 1991).
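The equivalence criterion above can be checked mechanically. The following is a minimal sketch, assuming each DAG is given as a list of (parent, child) pairs over the same set of variables; the function names are illustrative and do not come from the paper or from any package.

```python
from itertools import combinations

def skeleton(edges):
    """Undirected version of the graph: each edge as an unordered pair."""
    return {frozenset(e) for e in edges}

def v_structures(edges):
    """Triples (a, c, b) with a -> c <- b where a and b are not adjacent."""
    skel = skeleton(edges)
    parents = {}
    for a, b in edges:
        parents.setdefault(b, set()).add(a)
    found = set()
    for child, pa in parents.items():
        for a, b in combinations(sorted(pa), 2):
            if frozenset((a, b)) not in skel:
                found.add((a, child, b))
    return found

def markov_equivalent(edges1, edges2):
    """Same skeleton and same V-structures (Verma and Pearl 1991)."""
    return (skeleton(edges1) == skeleton(edges2)
            and v_structures(edges1) == v_structures(edges2))

chain = [("X", "Y"), ("Y", "Z")]      # X -> Y -> Z
fork = [("Y", "X"), ("Y", "Z")]       # X <- Y -> Z
collider = [("X", "Y"), ("Z", "Y")]   # X -> Y <- Z
```

Here the chain and the fork share a skeleton and contain no V-structure, so they are equivalent, whereas the collider has the same skeleton but one V-structure and is therefore not equivalent to either.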

Assuming that the relationships of the predictors and the response variables can be represented via a BN.

As opposed to fully score-based methods, hybrid methods are considerably faster as the scoring takes place in a constrained space.

This is the Max–Min heuristic.

A simple way to compute this is via the Pearson correlation of the residuals of the two linear regression models of X on \(\mathbf{Z}\) and of Y on \(\mathbf{Z}\). Note that a faster approach is via the correlation matrix.
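Both routes to the partial correlation can be compared numerically. The sketch below assumes, for simplicity, a single conditioning variable z instead of a set \(\mathbf{Z}\); in that case the correlation-based route reduces to the standard first-order partial correlation formula. All names are illustrative and the data are simulated.

```python
import math
import random

def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    sab = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    saa = sum((u - ma) ** 2 for u in a)
    sbb = sum((v - mb) ** 2 for v in b)
    return sab / math.sqrt(saa * sbb)

def residuals(y, z):
    """Residuals of the simple linear regression of y on z (with intercept)."""
    n = len(y)
    my, mz = sum(y) / n, sum(z) / n
    slope = (sum((v - mz) * (u - my) for u, v in zip(y, z))
             / sum((v - mz) ** 2 for v in z))
    return [u - my - slope * (v - mz) for u, v in zip(y, z)]

def pcor_residuals(x, y, z):
    """Partial correlation as the correlation of the two residual vectors."""
    return pearson(residuals(x, z), residuals(y, z))

def pcor_correlations(x, y, z):
    """Partial correlation computed from the pairwise correlations only."""
    rxy, rxz, ryz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (rxy - rxz * ryz) / math.sqrt((1 - rxz ** 2) * (1 - ryz ** 2))

random.seed(1)
z = [random.gauss(0, 1) for _ in range(200)]
x = [0.8 * v + random.gauss(0, 1) for v in z]
y = [-0.5 * v + random.gauss(0, 1) for v in z]
r_res = pcor_residuals(x, y, z)
r_cor = pcor_correlations(x, y, z)
```

The two values agree up to floating-point error. The second route avoids fitting any regression, which is why it is cheaper when many conditional independence tests are performed on the same data.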

The implementation of the PC algorithm’s skeleton identification phase in the R package pchc carries out this task via sorting of the p-values.

The term equivalent refers to their attractive property of giving the same score to equivalent structures (Markov equivalent BNs) i.e., structures that are statistically indistinguishable (Tsamardinos et al. 2006).

This implies that every time an edge removal or a change of arrow direction is attempted, a check for cycles is performed. If the operation creates a cycle, it is cancelled, even if it would increase the score.
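A cycle check of this kind can be sketched as follows. This is an illustration only, not the implementation used in the paper: it assumes the graph is a list of (parent, child) pairs, uses Kahn's topological-sort algorithm as the acyclicity test, and shows the check for an arrow reversal; the function names are hypothetical.

```python
def is_acyclic(edges):
    """Kahn's algorithm: the graph is acyclic iff every node can be
    removed in topological order."""
    nodes = {n for e in edges for n in e}
    indegree = {n: 0 for n in nodes}
    children = {n: [] for n in nodes}
    for a, b in edges:
        indegree[b] += 1
        children[a].append(b)
    queue = [n for n in nodes if indegree[n] == 0]
    removed = 0
    while queue:
        n = queue.pop()
        removed += 1
        for c in children[n]:
            indegree[c] -= 1
            if indegree[c] == 0:
                queue.append(c)
    return removed == len(nodes)

def reverse_if_acyclic(edges, edge):
    """Reverse `edge`; keep the change only if no cycle is created."""
    a, b = edge
    candidate = [e for e in edges if e != edge] + [(b, a)]
    return candidate if is_acyclic(candidate) else edges

dag = [("A", "B"), ("B", "C"), ("A", "C")]
kept = reverse_if_acyclic(dag, ("B", "C"))       # A->B, A->C, C->B: acyclic
cancelled = reverse_if_acyclic(dag, ("A", "C"))  # A->B, B->C, C->A: cycle
```

In a hill-climbing search the same test would be run before the score of the candidate graph is even considered, so that a cyclic candidate is discarded regardless of its score.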

For more information see Tsamardinos et al. (2006).

All scores for continuous data are based on the rather unrealistic multivariate normality assumption, yet they have proved successful.

For large sample sizes BIC converges to \(\log (\text{BDe})\).

The normality assumption is known to have a limited effect in linear regression, for instance, where the consistency of the estimated regression parameters is not affected when the assumption is not met.

See Tsamardinos and Borboudakis (2010) for more details.

This is defined as the number of operations required to make the estimated graph equal to the true graph. Instead of the true DAG, the Markov equivalence graph of the true BN is used; that is, some edges are left un-oriented because their direction cannot be statistically decided. The transformation from the DAG to the Markov equivalence graph was carried out using Chickering's algorithm (Chickering 1995).
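A distance of this kind can be sketched for partially directed graphs as follows. The counting convention is an assumption of this sketch: every pair of variables whose edge status differs between the two graphs (missing, extra, differently oriented, or oriented versus un-oriented) contributes one operation. Other conventions exist, and the paper's exact counting may differ; the function names are illustrative.

```python
def edge_status(directed, undirected):
    """Map each connected pair to its status: an ordered (tail, head)
    tuple for a directed edge, or the string 'undirected'."""
    status = {}
    for a, b in directed:
        status[frozenset((a, b))] = (a, b)
    for a, b in undirected:
        status[frozenset((a, b))] = "undirected"
    return status

def shd(estimated, true):
    """Number of variable pairs whose edge status differs."""
    pairs = set(estimated) | set(true)
    return sum(estimated.get(p) != true.get(p) for p in pairs)

# True equivalence graph: X -> Z <- Y and Z - W (un-oriented).
true_g = edge_status(directed=[("X", "Z"), ("Y", "Z")],
                     undirected=[("Z", "W")])
# Estimated graph: X -> Z, Z -> Y (reversed), Z - W missing, W - V extra.
est_g = edge_status(directed=[("X", "Z"), ("Z", "Y")],
                    undirected=[("W", "V")])
distance = shd(est_g, true_g)
```

In this toy example the estimate contains one reversed arrow, one missing edge and one extra edge, so the distance is three under the stated convention.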

The speed-up factor of MMHC to PCHC is defined as the ratio of the duration of MMHC to that of PCHC. Both the PCHC and the MMHC algorithms have been implemented in C++, hence the comparison of the execution times is fair at the programming language level.

The average number of connecting edges, neighbours or adjacent variables.

The same conclusions were drawn for the BNs constructed with categorical data.

In practice this means that some directions are difficult to orient correctly, i.e. even with infinite sample sizes it is impossible to identify the correct orientation unless prior knowledge is available.

Greene (2003) used the number of major derogatory credit reports as the response variable.

These purposes apply also in the continuous data case.

It is also true that more tuning is needed and more prior knowledge must be added to make the graph as realistic as possible, but this task is outside the scope of this paper.

References

Agresti, A. (2002). Categorical data analysis. In Wiley series in probability and statistics (2nd ed.). Wiley.

Ahelegbey, D. F. (2016). The econometrics of Bayesian graphical models: A review with financial application. Journal of Network Theory in Finance, 2(2), 1–33.

Aliferis, C. F., Statnikov, A. R., Tsamardinos, I., Mani, S., & Koutsoukos, X. D. (2010). Local causal and Markov blanket induction for causal discovery and feature selection for classification part I: Algorithms and empirical evaluation. Journal of Machine Learning Research, 11, 171–234.

Baba, K., Shibata, R., & Sibuya, M. (2004). Partial correlation and conditional correlation as measures of conditional independence. Australian and New Zealand Journal of Statistics, 46(4), 657–664.

Barton, D., Saloranta, T., Moe, S., Eggestad, H., & Kuikka, S. (2008). Bayesian belief networks as a meta-modelling tool in integrated river basin management—Pros and cons in evaluating nutrient abatement decisions under uncertainty in a Norwegian river basin. Ecological Economics, 66(1), 91–104.

Beinlich, I. A., Suermondt, H. J., Chavez, R. M., & Cooper, G. F. (1989). The ALARM monitoring system: A case study with two probabilistic inference techniques for belief networks. In AIME 89 (pp. 247–256). Springer.

Berger, T., & Troost, C. (2014). Agent-based modelling of climate adaptation and mitigation options in agriculture. Journal of Agricultural Economics, 65(2), 323–348.

Bhat, N., Farias, V. F., Moallemi, C. C., & Sinha, D. (2020). Near optimal AB testing. Management Science (To appear).

Blodgett, J. G., & Anderson, R. D. (2000). A Bayesian network model of the consumer complaint process. Journal of Service Research, 2(4), 321–338.

Boucher, V., & Mourifié, I. (2017). My friend far, far away: A random field approach to exponential random graph models. The Econometrics Journal, 20(3), S14–S46.

Bouckaert, R. R. (1995). Bayesian belief networks: From construction to inference. Ph.D. Thesis, University of Utrecht.

Buntine, W. (1991). Theory refinement on Bayesian networks. In Uncertainty Proceedings (pp. 52–60). Elsevier.

Caraiani, P. (2013). Using complex networks to characterize international business cycles. PLoS ONE, 8(3), e58109.

Cerchiello, P., & Giudici, P. (2016). Big data analysis for financial risk management. Journal of Big Data, 3(1), 18.

Chen, P., & Chihying, H. (2007). Learning causal relations in multivariate time series data. Economics: The Open-Access, Open-Assessment E-Journal, 1, 11.

Chen, P., & Hsiao, C. Y. (2010). Causal inference for structural equations: With an application to wage-price spiral. Computational Economics, 36(1), 17–36.

Chickering, D. M. (1995). A transformational characterization of equivalent Bayesian network structures. In Proceedings of the eleventh conference on uncertainty in artificial intelligence (pp. 87–98). Morgan Kaufmann Publishers Inc.

Chickering, D. M. (2002). Optimal structure identification with greedy search. Journal of Machine Learning Research, 3(Nov), 507–554.

Chong, C., & Klüppelberg, C. (2018). Contagion in financial systems: A Bayesian network approach. SIAM Journal on Financial Mathematics, 9(1), 28–53.

Cooper, G. F., & Herskovits, E. (1992). A Bayesian method for the induction of probabilistic networks from data. Machine Learning, 9(4), 309–347.

Cowell, R. G., Verrall, R. J., & Yoon, Y. (2007). Modeling operational risk with Bayesian networks. Journal of Risk and Insurance, 74(4), 795–827.

Cugnata, F., Kenett, R., & Salini, S. (2014). Bayesian network applications to customer surveys and InfoQ. Procedia Economics and Finance, 17, 3–9.

Fennell, P. G., O’Sullivan, D. J., Godin, A., & Kinsella, S. (2016). Is it possible to visualise any stock flow consistent model as a directed acyclic graph? Computational Economics, 48(2), 307–316.

Friedman, J., Hastie, T., & Tibshirani, R. (2001). The elements of statistical learning. New York: Springer.

Geiger, D., & Heckerman, D. (1994). Learning Gaussian networks. In Proceedings of the 10th international conference on uncertainty in artificial intelligence (pp. 235–243). Morgan Kaufmann Publishers Inc.

Glymour, C. N. (2001). The mind’s arrows: Bayes nets and graphical causal models in psychology. Cambridge: MIT Press.

Greene, W. H. (2003). Econometric analysis. Bengaluru: Pearson Education India.

Gupta, S., & Kim, H. W. (2008). Linking structural equation modeling to Bayesian networks: Decision support for customer retention in virtual communities. European Journal of Operational Research, 190(3), 818–833.

Häger, D., & Andersen, L. B. (2010). A knowledge based approach to loss severity assessment in financial institutions using Bayesian networks and loss determinants. European Journal of Operational Research, 207(3), 1635–1644.

Hahsler, M., Chelluboina, S., Hornik, K., & Buchta, C. (2011). The arules R-package ecosystem: Analyzing interesting patterns from large transaction datasets. Journal of Machine Learning Research, 12, 1977–1981.

Heckerman, D., Geiger, D., & Chickering, D. M. (1995). Learning Bayesian networks: The combination of knowledge and statistical data. Machine Learning, 20(3), 197–243.

Hoover, K. D. (2017). Causality in economics and econometrics (pp. 1–13). London: Palgrave Macmillan.

Hosseini, S., & Barker, K. (2016). A Bayesian network model for resilience-based supplier selection. International Journal of Production Economics, 180, 68–87.

Kalisch, M., & Bühlmann, P. (2007). Estimating high-dimensional directed acyclic graphs with the PC-algorithm. Journal of Machine Learning Research, 8(Mar), 613–636.

Kwok, S. K. P. (2010). Power-saving algorithms in electricity usage-comparison between the power saving algorithms and machine learning techniques. In 2010 IEEE conference on innovative technologies for an efficient and reliable electricity supply (pp. 246–251). IEEE.

Kwon, D. H., & Bessler, D. A. (2011). Graphical methods, inductive causal inference, and econometrics: A literature review. Computational Economics, 38(1), 85–106.

Lam, W., & Bacchus, F. (1994). Learning Bayesian belief networks: An approach based on the MDL principle. Computational Intelligence, 10(3), 269–293.

Langarizadeh, M., & Moghbeli, F. (2016). Applying Naive Bayesian networks to disease prediction: A systematic review. Acta Informatica Medica, 24(5), 364.

Leong, C. K. (2016). Credit risk scoring with Bayesian network models. Computational Economics, 47(3), 423–446.

Mele, A. (2017). A structural model of dense network formation. Econometrica, 85(3), 825–850.

Neapolitan, R. E. (2003). Learning Bayesian networks. Upper Saddle River: Pearson Prentice Hall.

Papadakis, M., Tsagris, M., Dimitriadis, M., Fafalios, S., Tsamardinos, I., Fasiolo, M., et al. (2020). Rfast: A collection of efficient and extremely fast R functions. https://CRAN.R-project.org/package=Rfast, R package version 1.9.9.

Papadimitriou, T., Gogas, P., & Sarantitis, G. A. (2016). Convergence of European business cycles: A complex networks approach. Computational Economics, 47(2), 97–119.

Pearl, J. (1988). Probabilistic reasoning in intelligent systems: Networks of plausible reasoning. Los Altos: Morgan Kaufmann Publishers.

Phan, T. D., Smart, J. C., Capon, S. J., Hadwen, W. L., & Sahin, O. (2016). Applications of Bayesian belief networks in water resource management: A systematic review. Environmental Modelling and Software, 85, 98–111.

R Core Team. (2020). R: A language and environment for statistical computing. R foundation for statistical computing, Vienna, Austria, https://www.R-project.org/.

Sanford, A., & Moosa, I. (2015). Operational risk modelling and organizational learning in structured finance operations: A Bayesian network approach. Journal of the Operational Research Society, 66(1), 86–115.

Sarantitis, G. A., Papadimitriou, T., & Gogas, P. (2018). A network analysis of the United Kingdom’s consumer price index. Computational Economics, 51(2), 173–193.

Scutari, M. (2010). Learning Bayesian networks with the bnlearn R package. Journal of Statistical Software, 35(3), 1–22.

Sheehan, B., Murphy, F., Ryan, C., Mullins, M., & Liu, H. Y. (2017). Semi-autonomous vehicle motor insurance: A Bayesian network risk transfer approach. Transportation Research Part C: Emerging Technologies, 82, 124–137.

Sherif, F. F., Zayed, N., & Fakhr, M. (2015). Discovering Alzheimer genetic biomarkers using Bayesian networks. Advances in Bioinformatics, 2015.

Sickles, R. C., & Zelenyuk, V. (2019). Measurement of productivity and efficiency. Cambridge: Cambridge University Press.

Spiegler, R. (2016). Bayesian networks and boundedly rational expectations. The Quarterly Journal of Economics, 131(3), 1243–1290.

Spirtes, P., & Glymour, C. (1991). An algorithm for fast recovery of sparse causal graphs. Social Science Computer Review, 9(1), 62–72.

Spirtes, P., Glymour, C. N., & Scheines, R. (2000). Causation, prediction, and search. Cambridge: MIT Press.

Sriboonchitta, S., Liu, J., Kreinovich, V., & Nguyen, H. T. (2014). Vine copulas as a way to describe and analyze multi-variate dependence in econometrics: Computational motivation and comparison with Bayesian networks and fuzzy approaches. In Modeling dependence in econometrics (pp. 169–184). Springer.

Sun, L., & Erath, A. (2015). A Bayesian network approach for population synthesis. Transportation Research Part C: Emerging Technologies, 61, 49–62.

Tavana, M., Abtahi, A. R., Di Caprio, D., & Poortarigh, M. (2018). An artificial neural network and Bayesian network model for liquidity risk assessment in banking. Neurocomputing, 275, 2525–2554.

Tsagris, M. (2019). Bayesian network learning with the PC algorithm: An improved and correct variation. Applied Artificial Intelligence, 33, 101–123.

Tsagris, M. (2020). pchc: Bayesian network learning with the PCHC algorithm. https://CRAN.R-project.org/package=pchc, R package version 0.2.

Tsagris, M., & Tsamardinos, I. (2019). Feature selection with the R package MXM. F1000Research, 7.

Tsamardinos, I., & Aliferis, C. F. (2003). Towards principled feature selection: Relevancy, filters and wrappers. In Proceedings of the ninth international workshop on artificial intelligence and statistics. Key West, FL: Morgan Kaufmann Publishers.

Tsamardinos, I., & Borboudakis, G. (2010). Permutation testing improves Bayesian network learning. In Joint European conference on machine learning and knowledge discovery in databases (pp. 322–337). Springer.

Tsamardinos, I., Brown, L. E., & Aliferis, C. F. (2006). The max-min hill-climbing Bayesian network structure learning algorithm. Machine Learning, 65(1), 31–78.

Verma, T., & Pearl, J. (1991). Equivalence and synthesis of causal models. In Proceedings of the sixth conference on uncertainty in artificial intelligence (pp. 220–227).

Wu, T. T., & Lange, K. (2008). Coordinate descent algorithms for lasso penalized regression. The Annals of Applied Statistics, 2(1), 224–244.

Xue, J., Gui, D., Zhao, Y., Lei, J., Zeng, F., Feng, X., et al. (2016). A decision-making framework to model environmental flow requirements in oasis areas using Bayesian networks. Journal of Hydrology, 540, 1209–1222.

Xue, J., Gui, D., Lei, J., Sun, H., Zeng, F., & Feng, X. (2017). A hybrid Bayesian network approach for trade-offs between environmental flows and agricultural water using dynamic discretization. Advances in Water Resources, 110, 445–458.

Acknowledgements

The author would like to acknowledge Stefanos Fafalios, Nikolaos Pandis and Zacharias Papadovasilakis for reading an earlier draft of this manuscript and the reviewers whose comments improved the paper.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Tsagris, M. A New Scalable Bayesian Network Learning Algorithm with Applications to Economics. Comput Econ 57, 341–367 (2021). https://doi.org/10.1007/s10614-020-10065-7

DOI: https://doi.org/10.1007/s10614-020-10065-7