Abstract

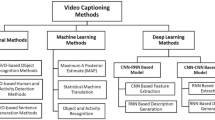

Automatic generation of video descriptions in natural language, also called video captioning, aims to understand the visual content of the video and produce a natural language sentence depicting the objects and actions in the scene. This challenging integrated vision and language problem, however, has been predominantly addressed for English. The lack of data and the linguistic properties of other languages limit the success of existing approaches for such languages. In this paper, we target Turkish, a morphologically rich and agglutinative language that has very different properties compared to English. To do so, we create the first large-scale video captioning dataset for this language by carefully translating the English descriptions of the videos in the MSVD (Microsoft Research Video Description Corpus) dataset into Turkish. In addition to enabling research in video captioning in Turkish, the parallel English–Turkish descriptions also enable the study of the role of video context in (multimodal) machine translation. In our experiments, we build models for both video captioning and multimodal machine translation and investigate the effect of different word segmentation approaches and different neural architectures to better address the properties of Turkish. We hope that the MSVD-Turkish dataset and the results reported in this work will lead to better video captioning and multimodal machine translation models for Turkish and other morphologically rich and agglutinative languages.

Notes

Note that the original MSVD dataset also contains descriptions collected in many other languages. However, the number of these multilingual descriptions is very small compared to the number of original English descriptions, and they were not shared with the community.

We make our dataset publicly available at https://hucvl.github.io/MSVD-Turkish/.

Pre-processing consists of lowercasing, length filtering with a minimum token count of 5, punctuation removal, and deduplication.
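The pre-processing note above lists four steps but does not state their order or the tools used. The Python sketch below shows one plausible realisation. Several details are assumptions, not the authors' documented pipeline: whitespace tokenization, Turkish-aware lowercasing, treating the 5-token threshold as inclusive, and stripping punctuation before counting tokens.

```python
import unicodedata

MIN_TOKENS = 5  # from the note; treating it as inclusive is an assumption


def turkish_lower(text: str) -> str:
    """Lowercase using Turkish dotted/dotless i rules (assumed; not stated in the note)."""
    # Plain str.lower() maps "I" to "i" and "İ" to "i" + U+0307, both wrong for Turkish.
    return text.replace("I", "ı").replace("İ", "i").lower()


def strip_punctuation(text: str) -> str:
    # Drop every character whose Unicode general category is punctuation (P*).
    return "".join(ch for ch in text if not unicodedata.category(ch).startswith("P"))


def preprocess(sentences: list[str], min_tokens: int = MIN_TOKENS) -> list[str]:
    """Hypothetical helper: lowercase, remove punctuation, length-filter, deduplicate."""
    seen: set[str] = set()
    kept: list[str] = []
    for sentence in sentences:
        # Lowercase and remove punctuation, then tokenize on whitespace.
        tokens = strip_punctuation(turkish_lower(sentence)).split()
        if len(tokens) < min_tokens:  # length filtering
            continue
        cleaned = " ".join(tokens)
        if cleaned in seen:  # deduplication, keeping the first occurrence
            continue
        seen.add(cleaned)
        kept.append(cleaned)
    return kept


captions = [
    "Bir adam sahnede gitar çalıyor.",
    "Bir adam sahnede gitar çalıyor!",           # duplicate once punctuation is removed
    "İki kadın Işıklı bir odada dans ediyor.",
    "Adam koşuyor.",                             # too short, filtered out
]
print(preprocess(captions))
# ['bir adam sahnede gitar çalıyor', 'iki kadın ışıklı bir odada dans ediyor']
```

The Turkish-specific casing step matters because Python's default Unicode lowercasing turns "Işıklı" into "işıklı" rather than "ışıklı". The note does not say whether the original pipeline handled this.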

It should be noted that we reused the hyper-parameters from the NMT experiments and did not conduct a hyper-parameter search for our MMT models.

Acknowledgements

This work was supported in part by GEBIP 2018 Award of the Turkish Academy of Sciences to E. Erdem, BAGEP 2021 Award of the Science Academy to A. Erdem, and the MMVC project funded by TUBITAK and the British Council via the Newton Fund Institutional Links grant programme (Grant ID 219E054 and 352343575). Lucia Specia, Pranava Madhyastha and Ozan Caglayan also received support from MultiMT (H2020 ERC Starting Grant No. 678017).

About this article

Cite this article

Citamak, B., Caglayan, O., Kuyu, M. et al. MSVD-Turkish: a comprehensive multimodal video dataset for integrated vision and language research in Turkish. Machine Translation 35, 265–288 (2021). https://doi.org/10.1007/s10590-021-09276-y

DOI: https://doi.org/10.1007/s10590-021-09276-y