Abstract

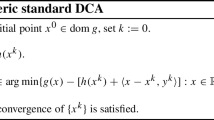

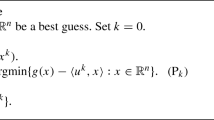

We introduce a new approach to applying the boosted difference of convex functions algorithm (BDCA) to non-convex and non-differentiable problems whose objective is a difference of two convex functions (a DC function). When the first DC component is differentiable and the second one is possibly non-differentiable, the main idea of BDCA is to use the point computed by the subproblem of the DC algorithm (DCA) to define a descent direction of the objective at that point. A monotone line search starting from that point is then performed to find a new point with a lower objective value than the point generated by the DCA subproblem. This procedure improves the performance of the DCA. However, if the first DC component is non-differentiable, the direction computed by BDCA can be an ascent direction, and a monotone line search cannot be performed. Our approach uses a non-monotone line search in the BDCA (nmBDCA), which allows a possible growth in the objective function values controlled by a parameter. Under suitable assumptions, we show that any cluster point of the sequence generated by the nmBDCA is a critical point of the problem under consideration, and we provide some iteration-complexity bounds. Furthermore, if the first DC component is differentiable, we present different iteration-complexity bounds and prove the full convergence of the sequence under the Kurdyka–Łojasiewicz property of the objective function. Some numerical experiments show that the nmBDCA outperforms the DCA, such as its monotone version.
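The scheme described in the abstract (a DCA step, a search direction from the current point to the DCA point, and a line search that tolerates a controlled increase of the objective) can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration and is not taken from the paper: the test problem φ(x) = ½‖x − b‖² + μ‖x‖₁ − μ‖x‖₂, the acceptance test φ(y + λd) ≤ φ(y) − ρλ²‖d‖² + ν_k, the geometric tolerance sequence ν_k = ν₀θᵏ, and all function and parameter names.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal map of t*||.||_1: shrink each entry of v toward zero by t."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def objective(x, b, mu):
    """phi = g - h with g = 0.5||x-b||^2 + mu||x||_1 and h = mu||x||_2 (assumed test problem)."""
    return 0.5 * np.sum((x - b) ** 2) + mu * (np.sum(np.abs(x)) - np.linalg.norm(x))


def dca_step(x, b, mu):
    """DCA subproblem: argmin_y g(y) - <w, y>, with w a subgradient of h at x."""
    nx = np.linalg.norm(x)
    # At x = 0 the zero vector is a valid subgradient of mu*||.||_2.
    w = mu * x / nx if nx > 0 else np.zeros_like(x)
    # For this g the subproblem has a closed-form solution.
    return soft_threshold(b + w, mu)


def nm_bdca(b, mu, x0, rho=0.1, lam_bar=1.0, beta=0.5, nu0=1.0, theta=0.5,
            tol=1e-6, max_iter=500):
    """Sketch of a boosted DCA with a non-monotone backtracking line search."""
    b = np.asarray(b, dtype=float)
    x = np.asarray(x0, dtype=float)
    for k in range(max_iter):
        y = dca_step(x, b, mu)        # point produced by the DCA subproblem
        d = y - x                     # search direction used for boosting
        dd = float(d @ d)
        if dd <= tol ** 2:            # x is (numerically) a fixed point of the DCA map
            return y, k
        nu = nu0 * theta ** k         # assumed summable tolerance on objective growth
        phi_y = objective(y, b, mu)
        lam = lam_bar
        for _ in range(60):           # backtracking from lam_bar
            if objective(y + lam * d, b, mu) <= phi_y - rho * lam ** 2 * dd + nu:
                break
            lam *= beta
        else:
            lam = 0.0                 # no step accepted: fall back to the plain DCA point
        x = y + lam * d
    return x, max_iter
```

Because g is non-differentiable in this example, d need not be a descent direction at y, which is the situation the tolerance ν_k is meant to handle. Setting `nu0=0` turns the acceptance test into a monotone one, and `lam_bar=0` reduces the loop to plain DCA.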

Data availability

The data that supports the findings of this study is available from the corresponding author upon request.

Notes

(H1) is not restrictive in the mathematical sense, but we will show in our numerical experiments that the parameter \(\sigma \) has an influence on the performance of the method.

Acknowledgements

O.P. Ferreira was supported in part by CNPq - Brazil Grant 304666/2021-1, and J.C.O. Souza was supported in part by CNPq Grant 313901/2020-1. The project leading to this publication has received funding from the French government under the “France 2030” investment plan managed by the French National Research Agency (reference: ANR-17-EURE-0020) and from the Excellence Initiative of Aix-Marseille University - A*MIDEX.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Ferreira, O.P., Santos, E.M. & Souza, J.C.O. A boosted DC algorithm for non-differentiable DC components with non-monotone line search. Comput Optim Appl (2024). https://doi.org/10.1007/s10589-024-00578-4

DOI: https://doi.org/10.1007/s10589-024-00578-4

Keywords

- DC function

- Boosted difference of convex functions algorithm

- DC algorithm

- Non-monotone line search

- Kurdyka–Łojasiewicz property