Abstract

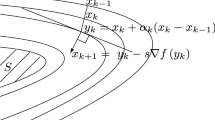

In a Hilbert space setting, we consider new first-order optimization algorithms which are obtained by temporal discretization of a damped inertial autonomous dynamic involving dry friction. The function f to be minimized is assumed to be differentiable (not necessarily convex). The dry friction potential function \( \varphi \), which has a sharp minimum at the origin, enters the algorithm via its proximal mapping, which acts as a soft thresholding operator on the sum of the velocity and the gradient terms. After a finite number of steps, the structure of the algorithm changes: it loses its inertial character and becomes the steepest descent method. The geometric damping driven by the Hessian of f makes it possible to control and attenuate the oscillations. The algorithm generates convergent sequences when f is convex, and in the nonconvex case when f satisfies the Kurdyka–Lojasiewicz property. The convergence results are robust with respect to numerical errors and perturbations. The study is then extended to the case of a nonsmooth convex function f, in which case the algorithm involves the proximal operators of f and \( \varphi \) separately. Applications are given to the Lasso problem and nonsmooth d.c. programming.
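To make the threshold effect mentioned in the abstract concrete, here is a minimal sketch of a soft thresholding operator. It assumes the simplest dry friction potential, \( \varphi(x) = r\,|x| \) applied componentwise with a threshold \( r \ge 0 \); this choice of potential, the function name `soft_threshold`, and the parameter name `r` are illustrative assumptions, not taken from the paper. The sketch shows only the proximal mapping, not the full algorithm.

```python
def soft_threshold(v, r):
    """Proximal mapping of phi(x) = r * |x|, applied to each entry of v.

    Illustrative assumption: phi is the componentwise absolute value
    scaled by r >= 0. Entries with magnitude at most r are set to zero;
    the others are shrunk toward zero by r.
    """
    if r < 0:
        raise ValueError("threshold r must be nonnegative")
    out = []
    for vi in v:
        if vi > r:
            out.append(vi - r)   # large positive entry: shrink by r
        elif vi < -r:
            out.append(vi + r)   # large negative entry: shrink by r
        else:
            out.append(0.0)      # entry inside [-r, r]: thresholded to zero
    return out


# Entries with magnitude below the threshold vanish exactly.
result = soft_threshold([3.0, -0.5, 0.2, -2.0], 1.0)
print(result)
```

Under this assumption, any input whose magnitude falls below `r` is mapped exactly to zero. This is the kind of behavior that lets a thresholded quantity vanish after finitely many steps rather than only in the limit.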

Data Availability

We do not have any associated data in a data repository, so our manuscript does not need a Data Deposition Information item.

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest. All authors have reviewed and approved the final manuscript and its submission to the journal.

Additional information

This paper is dedicated to the memory of Prof. Asen L. Dontchev.

About this article

Cite this article

Adly, S., Attouch, H. & Le, M.H. First order inertial optimization algorithms with threshold effects associated with dry friction. Comput Optim Appl 86, 801–843 (2023). https://doi.org/10.1007/s10589-023-00509-9

DOI: https://doi.org/10.1007/s10589-023-00509-9

Keywords

- Proximal-gradient algorithms

- Inertial methods

- Dry friction

- Hessian-driven damping

- Soft thresholding

- Kurdyka–Lojasiewicz property

- Lasso problem

- D.c. optimization

- Errors