Abstract

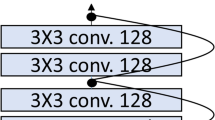

Residual Networks of Residual Networks (RoR) exhibit excellent performance in image classification. However, sharply increasing the number of feature-map channels makes the transmission of feature information incoherent. Some of the information relevant to classification is lost as a result, which limits classification performance. In this paper, we propose a Pyramidal RoR network model by analysing the characteristics of RoR and combining it with PyramidNet. First, building on RoR, we design a Pyramidal RoR network in which the number of channels increases gradually. Second, we analyse the effect of different residual block structures on performance and choose the structure that gives the best classification performance. Finally, we add an important principle to further optimize Pyramidal RoR networks: drop-path is used to avoid over-fitting and save training time. Image classification experiments were performed on the CIFAR-10/100, SVHN and Adience datasets. We achieved the lowest classification error rates to date: 2.96%, 16.40% and 1.59% on CIFAR-10, CIFAR-100 and SVHN, respectively. The experiments show that the Pyramidal RoR optimization method can improve network performance for image classification and effectively suppress the vanishing gradient problem in DCNN training.
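
The abstract contrasts RoR's sharp channel increases with a network whose channels grow gradually. The sketch below compares the two schedules. It assumes PyramidNet-style additive widening with a start width of 16 and a widening factor `alpha` spread evenly over `num_units` residual units. The function names and parameters are illustrative and are not taken from the paper.

```python
import math
from typing import List


def stagewise_widths(units_per_stage: int, stages: int = 3, base_width: int = 16) -> List[int]:
    """Per-unit channel counts when the width doubles only at stage boundaries
    (the ResNet/RoR-style schedule on CIFAR: 16, 32, 64)."""
    return [base_width * 2**s for s in range(stages) for _ in range(units_per_stage)]


def pyramidal_widths(num_units: int, alpha: float, base_width: int = 16) -> List[int]:
    """Per-unit channel counts under additive (linear) widening, as a sketch.

    Assumption: every residual unit adds alpha / num_units channels, starting
    from base_width, so the last unit has floor(base_width + alpha) channels.
    """
    if num_units <= 0:
        raise ValueError("num_units must be positive")
    step = alpha / num_units
    return [math.floor(base_width + step * k) for k in range(1, num_units + 1)]


if __name__ == "__main__":
    print(stagewise_widths(units_per_stage=2))      # [16, 16, 32, 32, 64, 64]
    print(pyramidal_widths(num_units=6, alpha=48))  # [24, 32, 40, 48, 56, 64]
```

Both schedules end at 64 channels. The additive schedule grows by 8 channels per unit instead of doubling twice at stage boundaries. That smoother increase is the property the abstract argues for.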
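
The abstract uses drop-path to curb over-fitting and shorten training, but it does not give the exact drop-path rule. The sketch below assumes the stochastic-depth formulation of Huang et al. (2016). Under that formulation, a residual branch is skipped with probability 1 - p during training and scaled by p at inference, with p decaying linearly across units. The sketch works on plain lists of floats and omits the convolutions, normalization and nonlinearity inside the branch. The function names and the default final survival probability of 0.5 are illustrative assumptions.

```python
import random
from typing import Callable, List, Sequence

Branch = Callable[[Sequence[float]], List[float]]


def linear_decay_survival(num_units: int, final_prob: float = 0.5) -> List[float]:
    """Survival probability p_l = 1 - (l / L) * (1 - final_prob) for units l = 1..L."""
    if num_units <= 0:
        raise ValueError("num_units must be positive")
    return [1.0 - (l / num_units) * (1.0 - final_prob) for l in range(1, num_units + 1)]


def drop_path_residual(
    x: Sequence[float],
    branch: Branch,
    survival_prob: float,
    training: bool,
    rng: random.Random,
) -> List[float]:
    """One residual unit y = x + b * F(x) with stochastic-depth-style drop-path.

    Training: b ~ Bernoulli(survival_prob). When b == 0 the branch F is not
    evaluated at all, which is where the training-time saving comes from.
    Inference: F is always evaluated and scaled by survival_prob, matching its
    expected contribution during training.
    """
    if not 0.0 < survival_prob <= 1.0:
        raise ValueError("survival_prob must be in (0, 1]")
    if training:
        if rng.random() >= survival_prob:
            return list(x)  # branch dropped: only the identity shortcut remains
        scale = 1.0
    else:
        scale = survival_prob
    fx = branch(x)
    return [xi + scale * fi for xi, fi in zip(x, fx)]


if __name__ == "__main__":
    rng = random.Random(0)
    probs = linear_decay_survival(num_units=4)  # [0.875, 0.75, 0.625, 0.5]
    x = [1.0, 2.0]
    for p in probs:
        x = drop_path_residual(x, lambda v: [0.1 * a for a in v], p, training=True, rng=rng)
    print(x)
```

The identity shortcut is never dropped, so a dropped unit still passes its input forward unchanged. This is why drop-path shortens the effective depth during training without breaking the signal path.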

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Grant Nos. 61302163, 61302105 and 61501185), the Hebei Province Natural Science Foundation (Grant Nos. F2015502062 and F2016502062) and the Fundamental Research Funds for the Central Universities (Grant No. 2018MS).

About this article

Cite this article

Zhang, K., Guo, L., Gao, C. et al. Pyramidal RoR for image classification. Cluster Comput 22 (Suppl 2), 5115–5125 (2019). https://doi.org/10.1007/s10586-017-1443-x

DOI: https://doi.org/10.1007/s10586-017-1443-x