Abstract

In its Sixth Assessment Report Cycle (AR6), the Intergovernmental Panel on Climate Change (IPCC) aims to strengthen the communication of its products. As the only mandatory part of IPCC reports specifically targeting a lay audience, the Frequently Asked Questions (FAQs) provide an opportunity for broader communication of key IPCC topics. AR6 has released three Special Reports that include FAQs, which vary in number and structure as well as in the approach taken to develop them. Using these Special Report FAQs, in this essay we take stock of current efforts to co-develop IPCC FAQs and provide recommendations to strengthen the impact of these highly useful yet currently under-utilised resources. Building on evidence from a user survey, text analysis and social media statistics, we find that bringing together IPCC authors and communication specialists to jointly develop the text and graphics increases the accessibility and usefulness of the FAQs. Efforts to produce informative visuals further increase their impact on social media. To maximise the potential and impact of the IPCC FAQs, we recommend involving communication experts from the beginning of the drafting process to share responsibility, which requires sufficient resources to be allocated to the FAQs. We also suggest developing common FAQ guidelines across Working Groups so that future assessment reports can ensure all FAQs are an effective and useful tool for IPCC communication.

We also hope that other scientific institutions and projects that wish to summarise scientific content for diverse audiences can benefit from our lessons learned.


1 Introduction

The Intergovernmental Panel on Climate Change (IPCC) reports and derivative products contain a wealth of material that can be used for communication and outreach to multiple audiences. As the only mandatory part of the IPCC reports specifically focusing on a lay audience, the frequently asked questions (FAQs) provide an opportunity for broader communication beyond the science-policy interface usually targeted by IPCC reports. Unlike outreach material produced by third parties, the FAQs follow the formal IPCC drafting process. The combination of the scientific rigour characteristic of IPCC reports and the requirements of a broader readership makes them a unique climate communication tool.

IPCC FAQs reach out to the public, teachers and students, the media, as well as decision makers and practitioners. The IPCC Expert Meeting on Communication held in 2016 (IPCC, 2016, p. 47) concluded that FAQs ‘should be written clearly and elegantly’, ‘addressing key questions in the public domain’ and that ‘science writers should be involved closely in the production of FAQs’. These recommendations prompted adjustments to the FAQ development process. Also following advice from the meeting, communication experts were employed at each IPCC Working Group Technical Support Unit (TSU).

FAQs were introduced by Working Group I (WGI) in the Fourth Assessment Report (published 2007). In the Fifth Assessment Report (published 2013–2014), the three Working Groups adopted different approaches to developing FAQs for their respective reports. Differences remain in the Sixth Assessment Cycle (AR6), in which each of the three Working Groups facilitated one of the Special Reports (Footnote 1): the Working Group I TSU facilitated the Special Report on Global Warming of 1.5 °C (SR1.5), the Working Group II TSU the Special Report on the Ocean and Cryosphere in a Changing Climate (SROCC), and the Working Group III TSU the Special Report on Climate Change and Land (SRCCL).

SR1.5, SROCC and SRCCL all contain FAQs, as mandated by the IPCC Plenary (IPCC, 2009), although they differ in number and structure, as well as in the approach taken to develop them. At the time of writing, the main Working Group assessment reports are still in development and are due to be released in 2021 and 2022.

2 The FAQ drafting process

As mandatory parts of IPCC reports, the FAQs must adhere to the formal drafting process (Footnote 2), which includes two rounds of review before the publication of the report.

The production of the three AR6 Special Reports was coordinated by the three Working Group Technical Support Units (TSUs). As in the previous IPCC cycle, different approaches were taken to FAQ development: some Special Reports left FAQ development mainly to the authors of the report chapters, whereas others co-developed the FAQs between the authors and communication specialists.

For the SR1.5, Working Group I conducted a user survey at the beginning of the drafting process to collect information to aid the selection of FAQs (see Section 3). Editorial support was provided throughout the production, including edits for communication scheduled before each draft submission. All of the SR1.5 FAQs were co-developed by communication experts and the report authors, with FAQs sometimes being initially drafted by TSU communication experts after scoping with the authors. External communication experts were encouraged to participate in the reviews. FAQs were around one page (about 950 words) and started with a summary paragraph.

In addition, each of the ten SR1.5 FAQs included a figure that visually answered the FAQ or a key concept central to the FAQ. The graphics incorporated principles from the IPCC Visual Style Guide (Gomis and Pidcock, 2020) that draws from cognitive science (e.g., Harold et al. 2016) to help create more effective visualisations for broader audiences. For example, each figure contained an explanatory title (usually the FAQ title) and a one-sentence subtitle with the take-home information from the graphic. All figures were co-developed by a contracted (visual) information designer and the SR1.5 authors, facilitated by TSU staff.

To inform the SROCC FAQ development, an online survey was shared with Focal Points (i.e. government representatives) and Observer Organisations (https://www.ipcc.ch/list-observer-organisations/) in parallel with the first review, so the results mainly reflect the perspective of internal, science-policy users. The FAQs received editorial support throughout the process, including edits suggested prior to the submission of the report drafts. Some of the FAQs were drafted jointly by the report authors and the communications officer. External communication experts were encouraged to participate in the reviews. The target length of the FAQs was one page, accompanied by a short summary paragraph. Three of the seven SROCC FAQs contained figures, co-developed by report authors and TSU graphics experts, who also took the visual style guide into account.

For SRCCL, no specific FAQ user survey was run, although responses from stakeholder consultations (Footnote 3) held to help scope the report as a whole were considered in the FAQ development. The TSU communications officer supported authors in the selection of the FAQs. Suggested edits for readability were provided by the TSU for most FAQs before the submission of the report drafts. The SRCCL aimed for 18 shorter FAQs of fewer than 350 words each, without summaries or graphics.

Table 1 compares the approaches of the three AR6 Special Reports, showing that the SR1.5 FAQs were more closely supported by science communicators than those of SROCC, which in turn received more support than those of SRCCL.

3 User survey for the Special Report on 1.5℃ FAQs

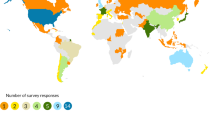

To guide FAQ selection, an online user survey (Online resource 1) was run early in the SR1.5 process. The survey was shared with IPCC Focal Points and Observer Organisations, as well as through IPCC social media. It gathered views from both internal and external potential users of the SR1.5 FAQs on which topics would interest them and which format they would prefer. This section focuses only on the SR1.5 user survey results, as these are the only responses that contain views from both internal and external users of the IPCC FAQs (Table 1).

The survey received 313 responses from 44 countries. The majority of the participants were located in Europe and North America (83%). Of the responses, 14% were from national governmental organisations or were IPCC Focal Points, 32% were from academia and research, 9% were from international organisations, 12% were from international or national non-governmental organisations, 11% were from other educational institutions including museums or zoos, 9% were from the private sector, 3% were from media institutions and 10% were from other types of organisations.
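Shares like those above are simple tallies of categorical answers. The sketch below assumes each response is recorded as a single organisation-type label; the labels and the ten example responses are hypothetical, not the survey data. It also checks that the shares reported above add up to 100%.

```python
from collections import Counter

def percentage_shares(responses: list[str]) -> dict[str, int]:
    """Share of each response category, rounded to whole percentages."""
    counts = Counter(responses)
    total = len(responses)
    return {label: round(100 * n / total) for label, n in counts.items()}

# Shares reported in the text for the 313 SR1.5 survey responses.
REPORTED_SHARES = {
    "national government / IPCC Focal Point": 14,
    "academia and research": 32,
    "international organisation": 9,
    "international or national NGO": 12,
    "other educational institution": 11,
    "private sector": 9,
    "media": 3,
    "other": 10,
}

if __name__ == "__main__":
    # Hypothetical responses, for illustration only.
    example = ["academia"] * 4 + ["government"] * 3 + ["media"] + ["other"] * 2
    print(percentage_shares(example))
    print(sum(REPORTED_SHARES.values()))  # the reported shares should total 100
```

Because each share is rounded independently, rounded percentages from a real tally need not always sum to exactly 100.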

A majority of the respondents (63%) reported having previously used the FAQs. Of those who had not, almost all (96%) said this was because they were unaware that the FAQs existed. The most commonly stated uses were: communication, for example in presentations to policymakers, colleagues or the general public; education, including as university teaching material; respondents' own learning, or as recommended reading; and as a basis for policy or NGO briefing notes or fact sheets.

The SR1.5 user survey also asked participants to list questions and topics for the FAQs to address. The most frequently requested topics were why the focus is on 1.5 °C of global warming, the impacts and changes at 1.5 °C of global warming, the feasibility of limiting global warming to 1.5 °C, and how future scenarios could evolve, including the options and actions required for change. Given format options ranging from a maximum length of half a page to two pages, with or without figures, the most popular FAQ format was one page including a figure (42% of responses). Survey responses clearly favoured FAQs featuring visual elements over text only, with a marked preference for infographics or interactive graphics (Fig. 1). Users also preferred online access to the FAQs over a printed booklet.

Although the sample is small, these indicative results informed decisions on the selection and format of the SR1.5 FAQs, namely that all FAQs should be around one page in length and feature a single figure. The selected SR1.5 FAQs also aimed to cover the topics most commonly requested in the user survey (see the list of FAQs in Online resource 2).

4 Analysis and results

This section evaluates the final texts of the FAQs (Section 4.1) as well as their reception on social media (Section 4.2).

4.1 Text analysis

For this essay, we use two statistical methods to assess the sentence structure of the FAQs and the number of potentially technical terms in the texts. Such methods are useful for analysing large amounts of text and provide initial, but limited, insights into readability.

The Flesch Reading Ease index (FRE) assumes that readability is determined by the number of syllables per word and the number of words per sentence (Flesch 1948; Schriver 1990; DuBay 2004; Marnell 2008; see Footnote 4). In use for more than 70 years, the FRE has previously been applied in the IPCC context (e.g. Barkemeyer et al. 2016; Stocker and Plattner, 2016). However, the FRE does not assess the actual words used or their technicality, the grammar or style of a given text, or the context and general reading ability of its readers.
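The formula given in Footnote 4 is easy to compute once sentences, words and syllables have been counted. The sketch below is a minimal illustration, assuming a crude vowel-group syllable counter and punctuation-based sentence splitting (both are simplifications introduced here). Dedicated tools, such as the online tool used for this essay, treat abbreviations, numbers and exceptions differently, so scores from this sketch will not match theirs exactly.

```python
import re

def count_syllables(word: str) -> int:
    """Approximate syllables by counting groups of consecutive vowels."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Heuristic: a final silent 'e' ("climate") usually adds no syllable,
    # but a final "-le" ("table") usually does.
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)

def flesch_reading_ease(text: str) -> float:
    """RE = 206.835 - 1.015 * ASL - 84.6 * ASW (Flesch 1948)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    asl = len(words) / len(sentences)  # average sentence length (words)
    asw = sum(count_syllables(w) for w in words) / len(words)  # syllables per word
    return 206.835 - 1.015 * asl - 84.6 * asw

print(round(flesch_reading_ease("The cat sat on the mat."), 1))
```

A very short sentence of one-syllable words scores above 100, which shows that the 0–100 bands describe typical prose rather than hard limits of the formula.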

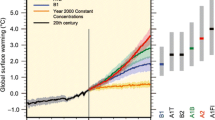

All FAQs of the three Special Reports score between fairly difficult and very confusing, below the ‘standard’ band that corresponds to plain English (Footnote 5). However, similar scores have been observed even for some Wikipedia entries and New York Times articles (Stewart 2017). Co-drafting between the authors and communication experts, as was the case for SR1.5 and SROCC (Section 2), led to narrower ranges and higher median FRE scores. The FRE scores of the AR6 Special Report FAQs span a similar range to those of previous cycles, with median scores for SR1.5 and SROCC slightly higher, and for SRCCL slightly lower, than those of previous reports (Footnote 6; Fig. 2a, Online resource 2).
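The scale in Footnote 4 can be applied to the values in Footnote 5 to see where each report's scores fall. This is a minimal sketch. It assumes that a score falling between the integer bands (e.g. 29.2) belongs to the lower band, which the scale itself does not specify.

```python
# Lower bound of each band, from the scale listed in Footnote 4.
FRE_BANDS = [
    (90, "very easy"),
    (80, "easy"),
    (70, "fairly easy"),
    (60, "standard"),
    (50, "fairly difficult"),
    (30, "difficult"),
]

def fre_band(score: float) -> str:
    """Map a Flesch Reading Ease score to its descriptive band."""
    for lower_bound, label in FRE_BANDS:
        if score >= lower_bound:
            return label
    return "very confusing"  # anything below 30 (assumed to include 29-30)

# (lowest, median, highest) FAQ scores per Special Report, from Footnote 5.
SPECIAL_REPORT_FRE = {
    "SR1.5": (21.1, 45.2, 52.2),
    "SROCC": (32.2, 41.8, 49.7),
    "SRCCL": (6.6, 29.2, 52.8),
}

for report, (lowest, median, highest) in SPECIAL_REPORT_FRE.items():
    spread = highest - lowest
    print(f"{report}: median {median} ({fre_band(median)}), "
          f"range {spread:.1f} points, best FAQ {fre_band(highest)}")
```

Run on these values, the sketch agrees with the text: every report's highest-scoring FAQ stays below the ‘standard’ band, and SRCCL has both the widest range and the lowest median.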

Box-and-whisker plots showing the highest, lowest, median and average (a) Flesch Reading Ease scores and (b) De-Jargonizer scores for the SR1.5, SROCC and SRCCL FAQ texts. The upper whisker marks the highest score and the lower whisker the lowest score. The boxes span the lower to upper quartiles. The line within each box marks the median and the cross indicates the average for each report.
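The statistics drawn in each box-and-whisker plot can be reproduced with Python's standard library. This minimal sketch assumes the whiskers mark the minimum and maximum, as the caption states, and uses the ‘inclusive’ quartile method. The caption does not say which quartile convention was used, and plotting tools differ. The five scores in the example are hypothetical.

```python
import statistics

def box_summary(scores: list[float]) -> dict[str, float]:
    """Statistics shown in a box-and-whisker plot whose whiskers mark the extremes."""
    if len(scores) < 2:
        raise ValueError("need at least two scores to compute quartiles")
    q1, median, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    return {
        "lowest": min(scores),              # end of the lower whisker
        "q1": q1,                           # bottom of the box
        "median": median,                   # line inside the box
        "mean": statistics.fmean(scores),   # cross
        "q3": q3,                           # top of the box
        "highest": max(scores),             # end of the upper whisker
    }

# Hypothetical FRE scores for five FAQs, for illustration only.
print(box_summary([20.0, 30.0, 40.0, 50.0, 60.0]))
```

With the default ‘exclusive’ method, `statistics.quantiles` returns different quartiles for the same five scores. This is one reason box plots of the same data drawn by different tools can differ slightly.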

De-Jargonizer assesses the technicality of the language used in texts. The tool automatically determines how common the words in a given text are by comparing them with a database of words used on the BBC website (Rakedzon et al., 2017; Footnote 7). Words are assigned frequency levels (common, mid and rare), resulting in a ‘suitability score’, with higher scores indicating less technical texts. As with the FRE results, De-Jargonizer scores show that the FAQs from SR1.5 and SROCC perform better than those from SRCCL, which relies more heavily on mid-frequency and rare words (Fig. 2b, Online resource 2).
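The frequency-based approach can be illustrated with a toy word-count table. This is not the De-Jargonizer implementation. The count thresholds, the weighting of mid-frequency versus rare words and the miniature corpus below are all hypothetical, chosen only to show how a higher share of common words yields a higher suitability score.

```python
import re

# Hypothetical cut-offs on a word's count in a reference corpus.
COMMON_MIN_COUNT = 1000
MID_MIN_COUNT = 50

def frequency_level(word: str, corpus_counts: dict[str, int]) -> str:
    """Classify a word as 'common', 'mid' or 'rare' by its corpus count."""
    n = corpus_counts.get(word.lower(), 0)
    if n >= COMMON_MIN_COUNT:
        return "common"
    if n >= MID_MIN_COUNT:
        return "mid"
    return "rare"  # includes words absent from the corpus

def suitability_score(text: str, corpus_counts: dict[str, int]) -> float:
    """100 for all-common text; lower as mid and rare words accumulate."""
    words = re.findall(r"[A-Za-z']+", text)
    levels = [frequency_level(w, corpus_counts) for w in words]
    # Assumed weighting: a rare word counts fully, a mid-frequency word half.
    penalty = levels.count("rare") + 0.5 * levels.count("mid")
    return 100 - 100 * penalty / len(words)

# Invented counts echoing the example words discussed in the text.
TOY_COUNTS = {"the": 90000, "effect": 5000, "greenhouse": 300, "overshoot": 12}
print(suitability_score("The greenhouse effect", TOY_COUNTS))
print(suitability_score("The overshoot effect", TOY_COUNTS))
```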

Our analysis shows that words such as ‘livelihood’, ‘greenhouse’ or ‘adaptation’ fall into the mid-frequency category, while examples of rare words include ‘overfishing’ and ‘overshoot’. As part of IPCC reports, FAQs will inevitably include terms such as these. Explaining them in common words aids readability for broader audiences, including non-native English speakers, although this can be challenging within the tight length of shorter FAQs.

Automatic text analyses offer simple and fast indications of sentence complexity and language technicality across large amounts of text. Efforts that build on these initial insights and draw further on the expertise of communication specialists will likely make the FAQs more comprehensible. It is also worth considering that the increasing complexity of science-related texts might reflect progress in the underlying science and a potentially growing capability of interested readers (Barkemeyer et al. 2016). A more detailed evaluation of the IPCC FAQs, taking into account users' growing contextual knowledge and the rising popularity of climate-related topics, would help to better tailor these texts to their audiences.

4.2 Social media statistics of FAQ-related posts

To assess the impact of the FAQs on social media, we looked at their performance after being shared on the official IPCC Twitter and Facebook accounts. The main metric considered is the engagement rate—i.e., the number of interactions with a post (e.g. clicks, likes, shares/retweets) divided by the total number of impressions (the number of times a tweet appears to users in either their timeline or search results). We look at the effect of sharing content on social media depending on the type of content (Section 4.2.1) and at the content from each Special Report (Section 4.2.2).
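The engagement rate is a simple ratio. In the minimal sketch below, the three interaction types summed (clicks, likes and shares) follow the examples given above. This is a simplifying assumption: Twitter and Facebook analytics each count a wider, platform-specific set of interactions. The numbers in the example are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class PostStats:
    clicks: int
    likes: int
    shares: int       # shares on Facebook, retweets on Twitter
    impressions: int  # times the post was shown to users

    @property
    def interactions(self) -> int:
        return self.clicks + self.likes + self.shares

    @property
    def engagement_rate(self) -> float:
        """Interactions divided by impressions (0.0 if the post was never shown)."""
        if self.impressions == 0:
            return 0.0
        return self.interactions / self.impressions

post = PostStats(clicks=30, likes=50, shares=20, impressions=4000)
print(f"{post.engagement_rate:.1%}")
```

Normalising by impressions makes posts that reached very different numbers of users comparable.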

4.2.1 Statistics on the ‘type’ of content

Social media posts about FAQs typically include the title of the question, a link to the answer on the report website and a visual in one of three forms (Footnote 8): (i) a figure, taken from the FAQ itself; (ii) a card, i.e. a background photo with the name of the FAQ and the Special Report; or (iii) a gif, the dynamic version of a card, with a video in the background instead of a photo. Posts with figures from the FAQ are the most popular type of content: they generate higher engagement rates on both Twitter and Facebook (Fig. 3a, c). On Twitter, posts with figures attract 1.5 and 2.1 times more clicks to the actual FAQ on the IPCC website than cards and gifs, respectively. Figures, especially those that include a succinct answer as in SR1.5, are the only type of post that presents actual content from the FAQ, which could be another reason for their relative success on social media. The differences between cards and gifs are more complex: gifs generally seem to trigger higher engagement rates, but they also tend to be viewed slightly less often than cards and generate fewer clicks on their links to the actual FAQ.
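Comparisons like those in Fig. 3 amount to grouping posts and summarising each group. This minimal sketch uses hypothetical posts, not the IPCC data. It computes the median engagement rate per group and the ratio of average link clicks between two post types, the kind of ratio behind ‘1.5 and 2.1 times more clicks’. The record fields are assumptions.

```python
import statistics
from collections import defaultdict

def median_rate_by(posts: list[dict], field: str) -> dict[str, float]:
    """Median engagement rate of posts grouped by `field`, e.g. 'type' or 'report'."""
    groups: dict[str, list[float]] = defaultdict(list)
    for post in posts:
        groups[post[field]].append(post["engagement_rate"])
    return {name: statistics.median(rates) for name, rates in groups.items()}

def click_ratio(posts: list[dict], type_a: str, type_b: str) -> float:
    """How many times more link clicks, on average, posts of type_a get than type_b."""
    def mean_clicks(post_type: str) -> float:
        return statistics.fmean(p["clicks"] for p in posts if p["type"] == post_type)
    return mean_clicks(type_a) / mean_clicks(type_b)

# Hypothetical posts, for illustration only.
POSTS = [
    {"type": "figure", "report": "SR1.5", "engagement_rate": 0.030, "clicks": 60},
    {"type": "figure", "report": "SROCC", "engagement_rate": 0.020, "clicks": 40},
    {"type": "card", "report": "SRCCL", "engagement_rate": 0.012, "clicks": 30},
    {"type": "card", "report": "SR1.5", "engagement_rate": 0.018, "clicks": 20},
    {"type": "gif", "report": "SROCC", "engagement_rate": 0.016, "clicks": 25},
]

print(median_rate_by(POSTS, "type"))
print(click_ratio(POSTS, "figure", "card"), click_ratio(POSTS, "figure", "gif"))
```

The same grouping function serves both panels of the comparison: passing `"type"` sorts posts by visual format, and passing `"report"` sorts them by Special Report.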

Box-and-whisker plots showing the highest, lowest, median and average engagement rates on Twitter (a, b) and Facebook (c, d), with all FAQ posts sorted by type (a, c) and by Special Report (b, d). The upper whisker marks the highest value and the lower whisker the lowest value, excluding outliers. The boxes span the lower to upper quartiles. Dots are outliers and numbers in brackets indicate the number of posts.

4.2.2 Statistical comparison of the different Special Reports

SR1.5 posts show higher engagement rates than those of SROCC and SRCCL (Fig. 3b, d). SR1.5 figure posts led to engagement rates 25% and 17% higher on Twitter and Facebook, respectively, than card and gif posts from the same report (not shown in Fig. 3). However, even non-figure SR1.5 posts show higher engagement rates than those of the two other reports on Twitter (though not on Facebook). This implies that while the ‘figure effect’ may be part of the reason for the higher performance, it cannot explain all of the differences between the reports. The remaining difference could be explained by broader public interest in the topics covered in SR1.5 compared with SRCCL and SROCC, and/or by the fact that the user survey actively influenced the choice of FAQs answered in the report (Section 3).
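Statements such as ‘25% higher’ describe a relative difference, not a difference in percentage points. Here is a minimal sketch of the calculation, using hypothetical engagement rates:

```python
def percent_higher(value: float, baseline: float) -> float:
    """Relative difference of `value` over `baseline`, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100 * (value - baseline) / baseline

# Hypothetical median engagement rates for two groups of posts in one report.
figure_posts, other_posts = 0.025, 0.020
print(f"{percent_higher(figure_posts, other_posts):.0f}% higher")           # relative
print(f"{(figure_posts - other_posts) * 100:.1f} percentage points higher")  # absolute
```

A relative difference on its own does not convey the absolute size of the gap, which also depends on the baseline rate.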

Therefore, social media statistics strengthen the case for the inclusion of figures in the FAQs, which was already highlighted by the user survey.

5 Recommendations based on reported evidence and experiences

Our analysis suggests that co-developing the FAQs between IPCC authors and communication experts, with a focus on simpler, clearer texts and graphics and based on an informed understanding of audience needs, increases their readability, usability and impact. The following recommendations for future IPCC FAQs, and for other institutions that wish to summarise scientific content for diverse audiences, draw on our experience as FAQ coordinators and communication specialists and on the analysis in this essay.

Overall, we suggest allocating sufficient resources, in both time and personnel, to maximise FAQ impact, and developing common FAQ guidelines across Working Groups, so that future IPCC cycles can ensure all FAQs are an effective and useful tool for communication. In future IPCC reports, science communication expertise would be easier to include through an alternative, co-developed drafting process that allows for shared responsibilities among authors, TSU staff (including in-house communication specialists) and external support.

5.1 User surveys

User surveys held at the beginning of the report-drafting process can help to identify topics of interest to readers of the report. This is particularly beneficial for Special Reports, which are one-off reports that do not follow the structure of the traditional Working Group reports. User surveys complement the specialist expertise of climate science communicators, who can offer guidance on relevant topics and on framings that resonate with intended FAQ users. Surveys could also be used to evaluate past IPCC FAQs and learn from them.

5.2 Text development

FAQs should strive for a well-developed structure, using simple sentences and comprehensible language. Any unavoidable complex terminology, identified for example with text analysis tools, should be explained within the FAQ. Involving communication specialists more actively and throughout the process produces clearer texts for broader and non-specialist audiences.

5.3 Use of visuals

Where resources allow, efforts should be made to include a figure in each FAQ, as this allows its content (and answer) to be shared more easily. Figures should have a consistent visual identity that adheres to visual style guidelines, and content that summarises the FAQ answer (or the key concept on which the FAQ is based).

5.4 Reviews and user testing of FAQ drafts

Because the drafts are confidential, external feedback on the FAQs is obtained only during the formal reviews. To make the most of this opportunity, climate science communicators should be more actively encouraged to contribute to the reviews, and the FAQs should be made more easily available to them. However, review comments cannot replace structured user testing that engages a diverse target audience, including non-native English readers and translators (Footnote 9). Such testing could be facilitated by IPCC staff during review periods.

5.5 Publication and promotion

To increase their readership, FAQs should be promoted in ways that appeal to their target audiences, for example through highly visual social media posts, videos, podcasts or other resources presented consistently on the IPCC website. Such products and activities can be included in IPCC communication strategies for launching reports. From a communication perspective, giving FAQs short summaries, whether visual or textual, favours content-sharing on social media, potentially increasing the visibility and reach of the FAQs.

5.6 Facilitation of a co-developed process

When drafting IPCC FAQs, a balance is needed between scientific expertise, communication expertise and knowledge of the IPCC. Incorporating an understanding of the IPCC ensures a smoother drafting process. For example, user survey participants familiar with the IPCC process may suggest FAQ topics more in line with the report outlines and the IPCC mandate, thereby focusing the FAQs early in the process. This balance benefits from facilitation by TSU staff, who bring knowledge of the IPCC. We therefore recommend that TSU budgets include staff resources to coordinate and support the process, particularly if external contractors, such as editors or information designers, are involved.

Availability of data and materials

Data from the text analysis and the blank surveys are included as online material. The remaining data are not available for confidentiality reasons.

Notes

1. Special Reports are IPCC reports on specific topics that are more interdisciplinary than the three individual Working Group reports.

2. IPCC Procedures are available at https://www.ipcc.ch/documentation/procedures/.

3. A summary report of the user group discussions is available at https://www.ipcc.ch/site/assets/uploads/2018/07/sr2_stakeholder_consultation-final.pdf.

4. The formula reads: RE = 206.835 − (1.015 × ASL) − (84.6 × ASW), where RE is the reading ease score, ASL the average sentence length (the number of words divided by the number of sentences) and ASW the average number of syllables per word (the number of syllables divided by the number of words). Scores: 90–100: very easy; 80–89: easy; 70–79: fairly easy; 60–69: standard; 50–59: fairly difficult; 30–49: difficult; 0–29: very confusing. A score of 65 represents plain English. We used the online tool provided at https://readabilityformulas.com, which applies the original Flesch formula.

5. The FRE scores for the SR1.5 FAQs range from 21.1 to 52.2 (median 45.2), for the SROCC FAQs from 32.2 to 49.7 (median 41.8) and for the SRCCL FAQs from 6.6 to 52.8 (median 29.2).

6. In the Fifth Assessment Report, FAQ scores range from 16.6 to 52.1 in WGI (median 39.0), 26.3 to 51 in WGII (median 37.3) and 9.4 to 67.9 in WGIII (median 34.3). The scores for the FAQs of the Working Group I contribution to the Fourth Assessment Report, where FAQs were first introduced, ranged from 29.2 to 55.1 (median 42.2).

7. De-Jargonizer assigns frequency levels (common, mid and rare) to the words in a given text based on word counts on BBC sites (including science-related channels), resulting in a ‘suitability score’, with higher scores indicating less technical texts. http://scienceandpublic.com.

8. Examples of the three forms: a figure from SR1.5 (https://twitter.com/IPCC_CH/status/1136298375408685057), a card from SRCCL (https://twitter.com/IPCC_CH/status/1243467675063455744) and a gif from SROCC (https://twitter.com/IPCC_CH/status/1320667406327578626).

9. IPCC FAQs are translated into the six UN languages after approval of the Summary for Policymakers and acceptance of the report.

References

Barkemeyer R, Dessai S, Monge-Sanz B et al (2016) Linguistic analysis of IPCC summaries for policymakers and associated coverage. Nature Clim Change 6:311–316. https://doi.org/10.1038/nclimate2824

DuBay WH (2004) The principles of readability. http://www.impact-information.com/impactinfo/readability02.pdf. Accessed 10 November 2020

Flesch R (1948) A new readability yardstick. J Appl Psychol 32(3):221–233. https://doi.org/10.1037/h0057532

Gomis M, Pidcock R (2020) IPCC visual principles for authors. IPCC WGI Technical Support Unit. https://www.ipcc.ch/site/assets/uploads/2019/04/IPCC-visual-style-guide.pdf. Accessed 10 November 2020

Harold J, Lorenzoni I, Shipley TF, Coventry KR (2016) Cognitive and psychological science insights to improve climate change data visualization. Nat Clim Chang 6:1080–1089

IPCC (2009) Report of the 30th Session of the IPCC, Antalya, Turkey, 21–23 April 2009. https://www.ipcc.ch/site/assets/uploads/2018/05/final_report_30.pdf. Accessed 20 December 2020

IPCC (2016) Meeting report of the intergovernmental panel on climate change expert meeting on communication [Lynn, J., M. Araya, Ø. Christophersen, I. El Gizouli, S.J. Hassol, E.M. Konstantinidis, K.J. Mach, L.A. Meyer, K. Tanabe, M. Tignor, R. Tshikalanke, J.-P. van Ypersele (eds.)]. World Meteorological Organization, Geneva, Switzerland, 229 pp.

Marnell G (2008) Measuring readability. Part 1: the spirit is willing but the Flesch is weak. Southern Communicator 14:12–16. Part 2: Validation and its pitfalls. Southern Communicator 15:17–21. https://www.abelard.com.au/readability%20statistics.pdf. Accessed 10 November 2020

Rakedzon T, Segev E, Chapnik N, Yosef R, Baram-Tsabari A (2017) Automatic jargon identifier for scientists engaging with the public and science communication educators. PLoS ONE 12(8):e0181742. https://doi.org/10.1371/journal

Schriver K (1990) Evaluating text quality: the continuum from text-focused to reader-focused methods. IEEE Trans Prof Commun 32(4):238–255. https://doi.org/10.1109/47.44536

Stewart JEJ (2017) Reinterpreting the Flesch Readability Scale for the information age. International Teacher Education Conference, Harvard University. https://www.academia.edu/41460041/Reinterpreting_the_Flesch_Readability_Scale_for_the_Information_Age. Accessed 29 October 2020

Stocker T, Plattner GK (2016) Making use of the IPCC’s powerful communication tool. Nature Clim Change 6:637–638. https://doi.org/10.1038/nclimate3010

Acknowledgements

The authors would like to thank Renée Van Diemen (Senior Scientist WGIII TSU), Valérie Masson-Delmotte (Co-Chair IPCC WGI), Sina Löschke (Communications Manager WGII TSU), Melinda Tignor (Head of WGII TSU), Anna Pirani (Head of WGI TSU) and Jonathan Lynn (Head of Communications and Media Relations at the IPCC Secretariat) for their help drafting this manuscript. The authors are also very grateful to the three anonymous reviewers for their positive feedback and constructive comments on this manuscript, as well as to Saffron O’Neill for her editing work on this special issue.

Funding

The research leading to these results was funded by the IPCC WGI and IPCC WGII TSUs.

Author information


Contributions

S.C. led the essay and analysed the SR1.5 user survey, M.N. conducted the text analysis of the FAQs and S.B. analysed the social media statistics collected by M.W. All authors contributed to the writing of the manuscript. R.P. contributed to the scoping and drafting of the manuscript. S.B., S.C. and N.H. created the figures for the manuscript.


Ethics declarations

Conflict of interest

The authors declare no conflict of interest but would like to disclose their current and previous roles in the IPCC: Sarah Connors is currently employed as Head of Science Team at the WGI TSU. Maike Nicolai was Communications Officer at the WGII TSU for SROCC. Sophie Berger is Science Officer at the WGI TSU. Melissa Walsh is Communications Officer at the IPCC Secretariat. Nigel Hawtin was Information Designer for the SR1.5 FAQs. Roz Pidcock, currently at Climate Outreach and an independent consultant, was previously employed as Head of Communications for IPCC WGI TSU. She is also serving as guest editor to this Special Issue in her independent consultant capacity.

Ethics approval

Retrospective approval was requested from the Université Paris-Saclay Research Ethics Committee (CER-PS), but the application could not be processed because it ‘does not fall within the scope of the CER-PS mission, the data used are anonymised, no information sheet is necessary’.

Consent to participate

All participants were provided with full information regarding the survey and required to indicate their consent to participate on this basis prior to submitting their responses. All results have been anonymised and synthesised.

Code availability

Not applicable.

Additional information


This article is part of the topical collection “Climate Change Communication and the IPCC”, edited by Saffron O'Neill and Roz Pidcock.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Connors, S.L., Nicolai, M., Berger, S. et al. Co-developing the IPCC frequently asked questions as an effective science communication tool. Climatic Change 171, 10 (2022). https://doi.org/10.1007/s10584-021-03248-0


DOI: https://doi.org/10.1007/s10584-021-03248-0