Abstract

Decision-making in the area of coastal adaptation is facing major challenges due to ambiguity (i.e., deep uncertainty) pertaining to the selection of a probability model for sea level rise (SLR) projections. Possibility distributions are mathematical tools that address this type of uncertainty since they bound all the plausible probability models that are consistent with the available data. In the present study, SLR uncertainties are represented by a possibility distribution constrained by likely ranges provided in the IPCC Fifth Assessment Report and by a review of high-end scenarios. On this basis, we propose a framework combining probabilities and possibilities to evaluate how SLR uncertainties accumulate with other sources of uncertainty, such as future greenhouse gas emissions, upper bounds of future sea level changes, the regional variability of sea level changes, vertical ground motion, and the contributions of extremes and wave effects. We apply the framework to evaluate the probability of coastal flooding by the year 2100 in a low-lying urban area on the French Mediterranean coast. We show that when adaptation is limited to maintaining current defenses, the level of ambiguity is too large to precisely assign a probability model to future flooding. Raising the coastal walls by 85 cm creates a safety margin that may not be considered sufficient by local stakeholders. A sensitivity analysis highlights the key role of deep uncertainties pertaining to global SLR and of the statistical uncertainty related to extremes. The ranking of uncertainties strongly depends on the decision-maker’s attitude to risk (e.g., neutral, averse), which highlights the need for research combining advanced mathematical theories of uncertainty with decision analytics and social science.


1 Introduction

Adaptation to future coastal flooding requires testing different adaptation pathways against different potential futures (Ranger et al. 2013; Haasnoot et al. 2013). Sea level rise (SLR) over the twenty-first century remains uncertain (Church et al. 2013; Kopp et al. 2014, 2017). Any impact assessment should therefore include a proper uncertainty quantification.

Two facets of uncertainty that are relevant to assessments of coastal impacts can be identified (e.g., Merz and Thieken 2009):

-

The first facet corresponds to aleatory uncertainty. It is generally related to randomness (variability) owing either to heterogeneity or to the random character of natural processes (i.e., stochasticity), such as the occurrence of extreme storm surges. This type of uncertainty can be described in statistical terms, and the range of possible futures can be captured with probabilities using a single cumulative distribution function (CDF);

-

The second facet corresponds to epistemic uncertainty. Contrary to the first type, epistemic uncertainty is not intrinsic to the system under study and stems from the incomplete/imprecise nature of the available information, i.e., the limited knowledge of the physical environment or the engineered system under study. Epistemic uncertainty encompasses a broad range of situations, from the scarcity of observations to a lack of knowledge or consensus pertaining to the appropriate conceptual models or CDFs used to represent uncertainty. The latter situation corresponds to “deep uncertainty” (Lempert et al. 2003).

In the area of SLR, deep uncertainties are mostly (but not exclusively) due to a lack of knowledge regarding ice melting, particularly in Antarctica (Ritz et al. 2015; DeConto and Pollard 2016; Kopp et al. 2017), which prevents the selection of a unique CDF for future sea level rise. A proper assessment of this type of uncertainty has important implications because it gives rise to situations of “ambiguity,” which most decision-makers prefer to avoid, an effect called “ambiguity aversion” that was originally evidenced by Ellsberg (1961) (see also discussions by Stephens et al. (2017) and Wong and Keller (2017)).

To date, the different facets of uncertainty have mainly been addressed using the tools provided by probability theory (Hunter et al. 2013; Kopp et al. 2014, 2017; Buchanan et al. 2016; Le Cozannet et al. 2015; Horton et al. 2018) combined with expert knowledge (Church et al. 2013; Bamber and Aspinall 2013; Horton et al. 2014; Oppenheimer et al. 2016; Fuller et al. 2017). However, probabilistic approaches have limitations for properly handling deep uncertainty. Several authors (Dubois and Guyonnet 2011; Baudrit et al. 2006, 2007 and references therein, and more specifically in the climate change context in Bakker et al. 2017; Le Cozannet et al. 2017) have noted that the use of probabilities mixes the different uncertainty types into a single format, which provides information that is too precise given the available knowledge.

To overcome the shortcomings of the classic probabilistic setting, several alternative mathematical representation methods have been developed (see a comprehensive overview by Dubois and Guyonnet 2011). These methods are termed extraprobabilistic because they avoid the selection of a single CDF by bounding all the possible ones that are consistent with the available data/information. The feasibility of these approaches has been discussed for climate change impact assessments (e.g., Kriegler and Held 2005) and more recently for global SLR projections (Ben Abdallah et al. 2014; Le Cozannet et al. 2017). The latter study illustrates the concept using possibility distributions (Dubois and Prade 1988) for global projections based on the likely ranges from the IPCC Fifth Assessment Report (AR5) (Church et al. 2013) and on assumptions on high-end (HE) scenarios.

On this basis, the present study goes a step further by presenting a framework for local flooding impact assessments, which combines both possibilities and probabilities to address the whole spectrum of uncertainties in addition to deep uncertainties in SLR prediction (from global to local scales). We demonstrate the applicability of these concepts to a Mediterranean coastal area in France (representative of a local low-lying urban area exposed to storm surge and waves) with a top-down approach to support decision-making. The proposed procedure can easily be adapted to various decision contexts and applied to other coastal areas.

We describe the case study in Sect. 2 and provide the principles underlying the construction of possibility distributions in Sect. 3. In Sect. 4, we jointly propagate the different sources of uncertainty, represented by probabilities or possibilities depending on the quality and the quantity of information available. We evaluate the impact on flooding probabilities and address the following two challenges in Sect. 5: (1) the integration of the risk attitude of decision-makers and (2) the analysis of the contribution of each source of uncertainty to the flooding probability.

2 Case study

The case study is located on the Mediterranean coast in southern France (Fig. 1). The urban area is a vulnerable zone located on a low-lying sand barrier between the sea and a lagoon. This site has experienced a series of severe storms in the past. In particular, the 1982 storm was one of the most damaging because it involved the overflow of low seawalls (Vinchon et al. 2011). Because overflow can lead to a rapid rise of the water level behind coastal defenses, causing extensive damage and threatening lives, we focus on this process and exclude wave overtopping events, during which the instantaneous water level only occasionally exceeds the heights of embankments. Hence, we only consider overflow due to the highest water levels on the coast (hCOAST) exceeding a critical threshold of t = 2.15 m, corresponding to the wall height (Idier et al. 2013).

Location of the study site (from Google Earth©): a regional setting; b local setting; c photo of the low seawall (Idier et al. 2013)

During the twenty-first century, hCOAST is estimated following the approach of Nicholls et al. (2014), which consists of summing global SLR projections with other random variables that account for regional and local effects. This approach is justified in the Mediterranean region because (1) the complexity of processes taking place in the Mediterranean Sea prevents us from using published regional sea level projections in this area (Calafat et al. 2012; Adloff et al. 2018); (2) the published likely ranges for different regions (e.g., regional simulations of Church et al. 2013) differ only slightly (by ≈ 10 cm) from the likely ranges of global 2100 SLR projections; and (3) the HE scenarios that are considered in the possibility approach (Sect. 3) rely on estimates of Antarctic melting, which remain highly imprecise today.

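Following these assumptions, hCOAST is calculated as follows:

$$h_{\mathrm{COAST}} = h_{\mathrm{GSLR}}(\mathrm{RCP}, \mathrm{HE}) + h_{\mathrm{RSLR}} + h_{\mathrm{VGM}} + h_{\mathrm{extreme}} + h_{\mathrm{wave}}$$

where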

-

hGSLR(RCP, HE) is the global sea level rise (GSLR). This component is affected by epistemic uncertainties, which can be categorized as deep uncertainties due to difficulties in selecting the appropriate CDF. In the following analysis, we rely on two main sources of information (see Sect. 3.2): (1) the AR5 report (Church et al. 2013) and (2) assumptions regarding high-end (HE) scenarios.

-

hRSLR is the regional deviation (RSLR) from the global mean, which partially results from the current variability in the mean sea level due to the northern Mediterranean currents and future regional variability in sea level changes (Calafat et al. 2012; Adloff et al. 2018). Apart from the central and range values of these phenomena (estimated to be 0.0 ± 0.25 m by 2070 and beyond, see Le Cozannet et al. 2015: Appendix B), the scarcity of available data prevents an unambiguous selection of a single CDF, hence introducing deep uncertainties;

-

hVGM is the contribution of vertical ground motions, whose possible values are estimated based on an analysis of existing GNSS stations¹ and the geology². From this analysis, only incomplete interval data can be derived. The best-estimate range is [−2, 0] mm/year, but more extreme values within [−10, +0.4] mm/year cannot be excluded. Given the available data, the uncertainty is categorized as epistemic;

-

hextreme is the sum of the tidal level, the inverse barometric effect, and the wind setup; it is assumed to be stationary and is defined based on centennial events ranging from 1.3 to 2.0 m. The uncertainty on this component is mainly statistical in nature (aleatory) and is mathematically represented using a two-parameter Pareto distribution (Supplementary Material S5);

-

hwave is the wave setup, which is assumed to be stationary and is defined based on the combination of observations and modeling results that lead to a range of possible values of [0.4, 0.8] m (Gervais 2012). This component is mainly statistical in nature (aleatory) and is mathematically represented using a uniform probability distribution (Le Cozannet et al. 2015).

In the current study, we focus on a top-down approach, where the decision-makers are informed of the flooding probability Pf = Prob(hCOAST ≥ t) resulting from the accumulation of the aforementioned uncertain factors. The chosen time horizon is the year 2100, with a reference period of 1986–2005 to ensure consistency with the current framework of coastal risk prevention at the study site. We consider two adaptation cases:

-

Case 1 is “adaptation as usual,” where the heights of the walls are held constant (t = 2.15 m) through maintenance works whenever appropriate;

-

Case 2 uses a “structural adaptation” measure, for which the height of coastal defenses is assumed to have increased to t = 3.00 m by 2100, which is currently considered a maximum height to preserve the recreational value of the seafront (related to the tourism and attractiveness of the site).
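To make the definition Pf = Prob(hCOAST ≥ t) concrete before any imprecision is introduced, the sketch below estimates Pf by Monte Carlo when the sea level terms (GSLR + RSLR + VGM) are frozen to a single value. It is only an illustration under stated assumptions: the generalized Pareto parameters for hextreme are hypothetical (the fitted values are in Supplementary Material S5), the helper names are ours, and only the wave setup range [0.4, 0.8] m and the thresholds 2.15 m and 3.00 m come from the text.

```python
import numpy as np

def sample_extreme(rng, n, loc=1.0, scale=0.1, shape=0.1):
    """Hypothetical generalized Pareto sampler for h_extreme (inverse-CDF method).
    loc, scale and shape are illustrative, not the fitted values of S5."""
    u = rng.uniform(size=n)
    return loc + scale / shape * ((1.0 - u) ** (-shape) - 1.0)

def flooding_probability(sea_level_offset, t, n=100_000, seed=0):
    """Monte Carlo estimate of Pf = Prob(h_COAST >= t) when the sea level terms
    (GSLR + RSLR + VGM) are fixed to a single value in metres."""
    rng = np.random.default_rng(seed)
    h_extreme = sample_extreme(rng, n)
    h_wave = rng.uniform(0.4, 0.8, size=n)  # wave setup, uniform on [0.4, 0.8] m
    h_coast = sea_level_offset + h_extreme + h_wave
    return float(np.mean(h_coast >= t))

for t in (2.15, 3.00):  # wall height in case 1 and case 2
    print(t, flooding_probability(0.5, t))
```

Because the same seed is used, raising the wall height can only lower the estimate; the point of the following sections is that the single offset used here cannot be justified for deeply uncertain terms.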

3 Construction of possibility distributions

Table 1 summarizes the assumptions for the uncertainty representation of the different factors described in Sect. 2. While the available data justify the selection of a unique CDF for extremes and wave effects, this is not the case for the GSLR, the RSLR, and the VGM, which are represented by possibility distributions.

3.1 Method

We rely on quantitative possibility theory (Dubois and Prade 1988), which is dedicated to handling incomplete information. We restrict the study to numerical possibility distributions π : ℝ → [0, 1] that express our state of knowledge and distinguish “what is plausible from what is less plausible” (Dubois and Prade 2016: Sect. 2.2): if π(x) = 1, the value x is completely possible (i.e., plausible), and if π(x) = 0, the value x is impossible. The α-cuts πα = {x ∈ ℝ : π(x) ≥ α} contain all the values that have a degree of possibility of at least α. The core is the α-cut for α = 1, i.e., the set of fully possible values, and the support is the set of values with a nonzero degree of possibility, {x : π(x) > 0}.
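As an illustration of α-cuts, core, and support, the sketch below samples a trapezoidal possibility distribution on a grid and extracts its α-cuts. The VGM values (core [−2, 0] mm/year, support [−10, +0.4] mm/year) come from Sect. 2; the grid resolution and the function names are our own assumptions, and the grid only approximates the exact interval endpoints.

```python
import numpy as np

def trapezoid(x, a, b, c, d):
    """Trapezoidal possibility distribution with support (a, d) and core [b, c];
    assumes a < b <= c < d."""
    return np.clip(np.minimum((x - a) / (b - a), (d - x) / (d - c)), 0.0, 1.0)

def alpha_cut(x, pi, alpha):
    """Smallest and largest grid values with pi(x) >= alpha (alpha > 0).
    For a unimodal pi this interval approximates the alpha-cut pi_alpha."""
    inside = x[pi >= alpha]
    if inside.size == 0:
        raise ValueError("alpha is above the maximum of pi")
    return float(inside.min()), float(inside.max())

# VGM example from Sect. 2 (mm/year): core [-2, 0], support (-10, +0.4)
x = np.linspace(-10.0, 0.4, 1041)  # grid step of 0.01 mm/year
pi = trapezoid(x, -10.0, -2.0, 0.0, 0.4)
print(alpha_cut(x, pi, 1.0))  # core, approximately (-2.0, 0.0)
print(alpha_cut(x, pi, 0.5))  # approximately (-6.0, 0.2)
```

Note that the support is obtained from the strict inequality π(x) > 0, which is why `alpha_cut` is only meant for α > 0.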

Two different measures can be derived from such a distribution to analyze the question “does event A occur?”:

-

the possibility of A, Π(A) = sup{π(x) : x ∈ A}, measures to what extent A is logically consistent with π;

-

the necessity of A, N(A) = 1 − Π(Aᶜ), where Aᶜ is the complement of A, measures to what extent A is certainly implied by π.

Both measures link possibilities and probabilities by expressing that “what is probable should be possible and what is certainly the case should be probable as well” (Dubois and Prade 2016: Sect. 2.5), meaning that N(A) ≤ P(A) ≤ Π(A), where P(A) is the probability of the considered event. This inequality explains why possibility theory is well suited to representing incomplete probabilistic information, whether it stems from statistical data or from human-originated estimates (see the different situations in Supplementary Material S1).
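The two measures can be computed directly on a discretized distribution. The sketch below uses a hypothetical triangular π (core {1}, support (0.5, 1.5)) and two illustrative events; any probability distribution consistent with π must assign each event a probability between the printed necessity and possibility.

```python
import numpy as np

def possibility(pi, in_event):
    """Pi(A) = sup of pi over A (0 if A contains no grid point)."""
    return float(pi[in_event].max()) if in_event.any() else 0.0

def necessity(pi, in_event):
    """N(A) = 1 - Pi(A^c)."""
    return 1.0 - possibility(pi, ~in_event)

x = np.linspace(0.0, 2.0, 2001)
pi = np.clip(1.0 - np.abs(x - 1.0) / 0.5, 0.0, 1.0)  # hypothetical triangle

exceed = x >= 1.2                 # event "the variable exceeds 1.2"
central = (x > 0.7) & (x < 1.3)   # event "the variable lies in (0.7, 1.3)"
print(necessity(pi, exceed), possibility(pi, exceed))    # about 0.0 and 0.6
print(necessity(pi, central), possibility(pi, central))  # about 0.6 and 1.0
```

The exceedance event is possible (Π ≈ 0.6) but not at all certain (N ≈ 0), whereas the central event is fully possible and implied with necessity ≈ 0.6.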

3.2 Application

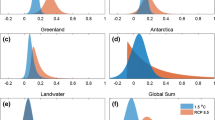

We first apply the method to the GSLR by relying on the IPCC sea level rise projections, which are derived from model-based ensemble simulations of future sea level rise (Church et al. 2013). More precisely, the IPCC experts derived a “likely range” for the GSLR, defined as having a probability of at least 66% and conditioned on the choice of the Representative Concentration Pathway (RCP) scenario (namely, RCP 2.6, 4.5, 6.0, and 8.5). We interpret this piece of information as an interval I associated with a level of confidence α = 66%. This interval can be converted into a possibility distribution (Dubois and Prade 2016: Sect. 4.3.1) using a hat-shaped possibility distribution such that π(xGSLR) = 1 if the value xGSLR belongs to I, and π(xGSLR) = 1 − α = 34% otherwise. This possibility distribution satisfies the constraints N(I) = 1 − Π(Iᶜ) = 66% and Π(I) = 100%, i.e., 66% ≤ P(I) ≤ 100%, which is consistent with the IPCC information. This procedure yields the core of the GSLR possibility distribution (Fig. 2a).

a Possibility distribution (thick line) derived from the likely ranges from the AR5 IPCC report for RCP 8.5 (by 2100 with reference period of 1986–2005) and from a weighted averaging of three distinct possibility distributions (dashed lines) related to three HE scenarios, namely, 1.5, 2, and 5 m with ranking values of 50, 40, and 10%, respectively (defined based on the literature review in Le Cozannet et al. 2017: Tables 1 and 2). b Translation of the possibility distribution from a into a probability box bounded by an upper (red) and lower (black) CDF. c Possibility distributions with 5 different ranking values (dashed lines) randomly drawn from a Dirichlet distribution with shape parameters of 50, 40, and 10%. d Translation of the possibility distributions from c into probability boxes
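The conversion of a likely range into a hat-shaped possibility distribution (described in the paragraph preceding the Fig. 2 caption) can be checked numerically. In the sketch below, the interval I = [0.52, 0.98] m is an assumed illustrative RCP 8.5 range for 2100; the constant names are ours. Computing Π(I) and N(I) on a grid recovers the bounds 66% ≤ P(I) ≤ 100%.

```python
import numpy as np

LIKELY_RANGE = (0.52, 0.98)  # assumed illustrative "likely" interval I (m)
CONFIDENCE = 0.66            # "likely" = probability of at least 66%

def hat_possibility(x, interval=LIKELY_RANGE, confidence=CONFIDENCE):
    """pi(x) = 1 inside I and 1 - confidence (here 0.34) outside I."""
    lo, hi = interval
    return np.where((x >= lo) & (x <= hi), 1.0, 1.0 - confidence)

x = np.linspace(0.0, 2.0, 2001)
pi = hat_possibility(x)
in_I = (x >= LIKELY_RANGE[0]) & (x <= LIKELY_RANGE[1])
poss_I = float(pi[in_I].max())        # Pi(I)
nec_I = 1.0 - float(pi[~in_I].max())  # N(I) = 1 - Pi(I^c)
print(nec_I, poss_I)                  # bounds on P(I): about 0.66 and 1.0
```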

In our previous work (Le Cozannet et al. 2017), we further constrained the distribution support with data derived from an extensive literature review. Specifically, the right tail of the possibility distributions was constrained using recent studies on Antarctic ice sheet melting (e.g., Bamber and Aspinall 2013; Horton et al. 2014; Ritz et al. 2015; DeConto and Pollard 2016). Three HE scenarios (Fig. 2a), namely, a SLR of 1.5, 2, and 5 m by 2100 (see Le Cozannet et al. 2017: Tables 1 and 2), are used to define three distinct possibility distributions (the dashed lines in Fig. 2a), which are combined using a weighted average (the thick line in Fig. 2a). Le Cozannet et al. (2017) proposed ranking values of 50, 40, and 10%, respectively, based on the representativeness of the different sea level projections available in the scientific literature (Le Cozannet et al. 2017: Tables 1 and 2). These ranking values subjectively reflect a lack of consensus regarding the upper bound of sea level rise by 2100, and we acknowledge that they are themselves uncertain. This issue is further discussed in Sect. 3.3.
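The weighted averaging of the three HE distributions can be sketched as follows. The exact shape of the dashed lines in Fig. 2a is not given in the text, so the scenario-specific shape below is an assumption made only for illustration: π = 1 on the core, 1 − 0.66 = 0.34 elsewhere between 0 m and the HE bound, and 0 beyond that bound. The core [0.28, 0.98] m is also an assumed illustrative value; the HE bounds and ranking values are those of the text.

```python
import numpy as np

CORE = (0.28, 0.98)           # assumed core of the GSLR distribution (m)
CONFIDENCE = 0.66             # "likely" level; 1 - CONFIDENCE outside the core
HE_BOUNDS = (1.5, 2.0, 5.0)   # high-end upper bounds of SLR by 2100 (m)
RANKING = (0.50, 0.40, 0.10)  # ranking values of the three HE scenarios

def he_possibility(x, he_bound, core=CORE, confidence=CONFIDENCE):
    """Hypothetical shape for one HE scenario: 1 on the core, 1 - confidence
    between 0 m and he_bound, and 0 beyond he_bound."""
    pi = np.where((x >= 0.0) & (x <= he_bound), 1.0 - confidence, 0.0)
    return np.where((x >= core[0]) & (x <= core[1]), 1.0, pi)

def combined_possibility(x, ranking=RANKING):
    """Weighted average of the scenario-specific possibility distributions."""
    return sum(w * he_possibility(x, b) for w, b in zip(ranking, HE_BOUNDS))

x = np.array([0.5, 1.2, 1.8, 3.0, 6.0])
print(combined_possibility(x))  # about [1.0, 0.34, 0.17, 0.034, 0.0]
```

Under this assumed shape, the right tail of the combined distribution steps down at each HE bound in proportion to the ranking values, so changing the ranking (Sect. 3.3) only reshapes the tail and leaves the core untouched.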

The derived possibility distribution formally represents a set of CDFs (called a probability box), bounded by the upper and lower CDFs shown in red and black, respectively, in Fig. 2b.
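The translation of a possibility distribution into a probability box follows from the definitions of Sect. 3.1: the upper CDF is Π((−∞, x]) = sup{π(y) : y ≤ x}, and the lower CDF is N((−∞, x]) = 1 − sup{π(y) : y > x}. A minimal grid-based sketch, using a hypothetical trapezoidal π and a grid assumed to extend beyond the support:

```python
import numpy as np

def pbox_from_possibility(pi):
    """Lower and upper CDFs bounding every probability distribution consistent
    with a possibility distribution sampled on an increasing grid.

    upper(x) = Pi((-inf, x]) = max of pi over y <= x
    lower(x) = N((-inf, x])  = 1 - max of pi over y > x
    """
    upper = np.maximum.accumulate(pi)
    suffix_max = np.maximum.accumulate(pi[::-1])[::-1]  # max of pi over y >= x
    sup_right = np.append(suffix_max[1:], 0.0)          # max of pi over y > x
    lower = 1.0 - sup_right
    return lower, upper

# Hypothetical trapezoid: support (0.2, 1.5), core [0.5, 1.0]
x = np.linspace(0.0, 2.0, 201)
pi = np.clip(np.minimum((x - 0.2) / 0.3, (1.5 - x) / 0.5), 0.0, 1.0)
lower, upper = pbox_from_possibility(pi)
```

The upper CDF rises along the left flank of π and the lower CDF along its right flank; the gap between them is the imprecision carried by π.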

For the RSLR and the VGM, the available information is more limited than for the GSLR, and simpler possibility distributions are constructed (Table 1). For the RSLR, only a best estimate and the spread are known; a triangular possibility distribution is therefore constructed, with a core corresponding to no deviation from the global mean and a support constrained by ±0.25 m (by 2100) in the Mediterranean Sea (Sect. 2). Similarly, only interval-valued data are available for the VGM, and a trapezoidal possibility distribution is defined with a core of [−2, 0] mm/year and a support of [−10, +0.4] mm/year.

3.3 Uncertainty of the GSLR possibility distribution

The GSLR possibility distribution, though it avoids selecting a unique CDF, remains conditional on the sources of input information and is therefore itself defined by some uncertain input parameters, namely, its core and its right tail.

The first assumption is the use of the likely ranges from the AR5, which constrains the distribution core. Although the proposed framework is flexible and could include any other projection models (e.g., Kopp et al. 2014 or the recent update of 2017; Jackson and Jevrejeva 2016, among others), we chose to focus the study on the AR5 projections following the current practices of most coastal managers, who usually treat the IPCC Report as a baseline document. This choice guarantees that coastal adaptation practitioners are guided by a set of studies that have been critically assessed by the most prominent scientists in sea level science. We note, however, that a word of caution is needed with this approach, given that differences in timescales for updating sea level projections in the realms of research and operations may ultimately lead to systematic underestimations of impact and adaptation needs (Wong et al. 2017).

The likely ranges from the AR5 are conditioned on the RCP scenario. To account for the different scenarios, the core is bracketed by the minimum value of the lower bounds and by the maximum value of the upper bounds of the likely ranges from the AR5 (Supplementary Material S2). Note that from a local adaptation perspective, no preference can be given to any future climate forcing because they depend on greenhouse gas emissions that involve other levels of decision-making (Rockström et al. 2017).
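The bracketing of the core across RCP scenarios amounts to taking the minimum of the lower bounds and the maximum of the upper bounds. The likely ranges below are values of the kind reported in AR5 for 2100 and are assumed here for illustration only; the values actually used are listed in Supplementary Material S2.

```python
# Likely ranges (m) by RCP scenario, assumed for illustration; see S2 for the
# values actually used in the study.
likely_ranges = {
    "RCP2.6": (0.28, 0.61),
    "RCP4.5": (0.36, 0.71),
    "RCP6.0": (0.38, 0.73),
    "RCP8.5": (0.52, 0.98),
}

# Core = [min of lower bounds, max of upper bounds]: no scenario is preferred.
core = (min(lo for lo, _ in likely_ranges.values()),
        max(hi for _, hi in likely_ranges.values()))
print(core)  # (0.28, 0.98)
```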

The second assumption is related to the HE scenarios. The advantage of a possibility distribution is that it accounts for interval-valued scenarios, i.e., [0.98, 1.5 m], [1.5, 2 m], and [2.0, 5.0 m], rather than for specific scalar values, hence covering a wide range of possibilities in agreement with recent studies (e.g., the 99th percentile used by Kopp et al. 2017; the 95th percentile used by Wong et al. 2017). The subjectivity stems, however, from the ranking values (50, 40, and 10%, as proposed by Le Cozannet et al. 2017), which constrain the distribution’s right tail. For instance, other weighting schemes may assume a stronger consensus in favor of smaller upper bounds. This subjectivity can be alleviated by following the best practices of statistical studies dealing with proportion estimates (Haas and Formery 2002) through a Dirichlet probabilistic model (with shape parameters corresponding to the best estimates of 50, 40, and 10%). This procedure enables the random generation of different ranking values to reflect the uncertainty in the assumptions of Le Cozannet et al. (2017). Figure 2 (bottom) depicts some instances of the distributions derived using random ranking values. By construction, this additional uncertainty is treated as “statistical.”
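Random ranking values can be drawn with NumPy's `Generator.dirichlet`. The text gives the shape parameters as 50, 40, and 10%; how these are scaled is not stated, so the concentration factor of 100 below (giving shape parameters 50, 40, and 10) is an assumption. Larger concentrations keep the draws closer to the best estimates.

```python
import numpy as np

BEST_ESTIMATES = np.array([0.50, 0.40, 0.10])  # rankings of the 1.5, 2 and 5 m HE scenarios
CONCENTRATION = 100.0  # hypothetical scaling, giving shape parameters (50, 40, 10)

def draw_rankings(n, seed=None):
    """Draw n random ranking vectors; each row is positive and sums to 1."""
    rng = np.random.default_rng(seed)
    return rng.dirichlet(CONCENTRATION * BEST_ESTIMATES, size=n)

weights = draw_rankings(5, seed=1)
print(weights.round(3))  # five alternative rankings, as in Fig. 2c
```

The mean of the Dirichlet draws equals the best estimates, so the random rankings scatter around the 50/40/10% values of Le Cozannet et al. (2017).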

4 Combining probability and possibility distributions

4.1 Method

We jointly propagate probability and possibility distributions using the procedure developed by Baudrit et al. (2007) (Supplementary Material S3), which combines Monte Carlo–based random sampling of inverse CDFs for random variables (hwave and hextreme) and of the α-cuts (see Sect. 3) for the possibility distributions (hGSLR, hRSLR, and hVGM). We slightly modify the original procedure to account for the uncertainty in the construction of the GSLR possibility distribution (Sect. 3.3) so that its right tail is newly generated at each iteration using the ranking values randomly generated from a Dirichlet distribution. At each iteration, the minimum and maximum bounds for the coastal sea level hCOAST are evaluated, and the result takes the form of a set of intervals (Baudrit et al. 2006), which is postprocessed in the form of a probability box.
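The joint propagation can be sketched as a Monte Carlo loop in which random variables are sampled through their inverse CDFs and each possibility distribution is replaced by a randomly drawn α-cut. The sketch below is a simplified illustration, not the authors' code: α is drawn independently for each possibility distribution (one variant of the random-set approach), the GSLR is replaced by a hypothetical trapezoid, VGM is omitted, and the Pareto parameters are hypothetical. Since hCOAST is a sum and therefore increasing in every input, the bounds of each interval are the sums of the input bounds.

```python
import numpy as np

def trapezoid_cut(a, b, c, d, alpha):
    """alpha-cut [lo, hi] of a trapezoidal possibility distribution with
    support (a, d) and core [b, c]; works element-wise on arrays of alpha."""
    return a + alpha * (b - a), d - alpha * (d - c)

def propagate(n, seed=0):
    """Return lower and upper bounds of h = extreme + wave + GSLR + RSLR."""
    rng = np.random.default_rng(seed)
    # Probabilistic inputs, sampled through their inverse CDFs.
    loc, scale, shape = 1.0, 0.1, 0.1  # hypothetical generalized Pareto
    u = rng.uniform(size=n)
    h_extreme = loc + scale / shape * ((1.0 - u) ** (-shape) - 1.0)
    h_wave = rng.uniform(0.4, 0.8, size=n)  # uniform on [0.4, 0.8] m
    lo = h_extreme + h_wave
    hi = lo.copy()
    # Possibilistic inputs: one alpha level drawn independently per distribution.
    for a, b, c, d in [(0.0, 0.28, 0.98, 2.0),    # GSLR (m), simplified stand-in
                       (-0.25, 0.0, 0.0, 0.25)]:  # RSLR triangle (m)
        cut_lo, cut_hi = trapezoid_cut(a, b, c, d, rng.uniform(size=n))
        lo = lo + cut_lo
        hi = hi + cut_hi
    return lo, hi

def flooding_bounds(lo, hi, t):
    """Bounds of Pf = Prob(h >= t): intervals entirely above t give the lower
    bound; intervals reaching t give the upper bound."""
    return float(np.mean(lo >= t)), float(np.mean(hi >= t))

lo, hi = propagate(5000)
for t in (2.15, 3.00):
    print(t, flooding_bounds(lo, hi, t))
```

The empirical CDF of `lo` gives the upper (optimistic) CDF of the probability box and that of `hi` the lower (pessimistic) CDF.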

4.2 Application

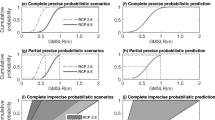

Figure 3a provides the lower and upper probability bounds for hCOAST derived from the propagation scheme using 5000 random draws, taking into account the whole spectrum of uncertainties (Table 1).

a Upper (red) and lower (black) probability bounds resulting from the propagation of statistical, scenario, and deep uncertainties. The distributions derived from a fully probabilistic analysis using the GSLR distributions of Kopp et al. (2014), denoted K14, are shown in light blue (RCP 2.6), dark blue (RCP 4.5), and purple (RCP 8.5). b Two examples of CDFs derived from the weighting procedure applied to both bounds, using a risk aversion weight w = 0.50 (risk neutral) and w = 0.75 (moderately risk averse)

The gap between the two probability bounds directly translates to “what is unknown.” This gap represents the imperfect state of knowledge in the study, which prevents the selection of a unique CDF for supporting decision-making on local flooding without ambiguity. Therefore, Pf is not a precise and unique number but an interval whose width reflects the degree of ambiguity. For adaptation case 1, Pf ranges from 0.0 to 92.9%, whereas for adaptation case 2, Pf ranges from 0.0 to 27.0%.

For comparison purposes (Table 2), we complement the analysis with the results of a fully probabilistic analysis using the GSLR probability distribution from Kopp et al. (2014), denoted K14, conditional on RCP scenarios 2.6, 4.5, and 8.5 and assuming a triangular probability distribution for RSLR and VGM. In case 1, the K14 probabilistic approach shows that Pf ranges from ≈ 10 to 22% depending on the RCP scenario choice. Though this range is relatively wide, it still underestimates the gap of imperfect knowledge as highlighted by the proposed approach (i.e., with a wider range of > 90%). Clearly, the lack of knowledge related to all uncertain factors results in a situation with a very high degree of uncertainty, which makes the selection of a unique CDF barely achievable. Had the overall uncertainty been summarized using a single probability value, this problem would have been masked.

With structural adaptation (case 2), Pf ranges from ≈ 1.4 to 3.4% across the three K14 distributions, which suggests a low flooding probability with limited ambiguity. However, this result may convey a greater degree of certainty than warranted. The nonnegligible upper bound of 27% provided by the proposed approach qualifies this result and suggests that the ambiguity resulting from uncertain factors should be incorporated into the decision-making process (see Sect. 5.1).

5 Impact on the flooding probability

5.1 Integrating risk aversion

The advantage of the proposed approach is that it measures the degree of ambiguity in Pf (i.e., the width of the probability interval). In our case, the degree of ambiguity is so large (Sect. 4.2) that it can hinder the decision-making process. In this situation, the decision-maker may adopt different attitudes. Highly risk averse decision-makers may prefer to rely on the pessimistic bound. However, this criterion may be too conservative because it neglects the information that leads to less pessimistic outcomes. This limitation justifies using decision-making criteria that balance plausible and worst-case scenarios to derive an “effective” probability, similar to the limited degree of confidence criterion adapted for allowance estimates by Buchanan et al. (2016).

Following a similar idea, we rely on the criterion proposed by Dubois and Guyonnet (2011) to provide a single “effective” CDF. This CDF is derived by calculating the weighted average of the upper and lower bounds of the probability box at quantile levels from 0 to 100% (i.e., along “horizontal” cuts). The weight w reflects the risk attitude of the decision-maker (i.e., the degree of risk aversion): the larger w, the more weight is given to the pessimistic bound (the black CDF in Fig. 3b). If w = 1.0, only the pessimistic bound is used, and the level of risk aversion of the decision-maker is the highest. If w = 0.0, the decision-maker is risk-prone and uses only the optimistic bound. A weight of w = 0.5 corresponds to a neutral attitude, i.e., the decision is based on both the optimistic and pessimistic bounds without a preference between them (the green CDF in Fig. 3b). The postprocessing phase results in a single probability value Pw, which reflects both the imprecise nature of Pf and the risk attitude (aversion) of the decision-maker.
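The horizontal averaging can be sketched as a weighted average of the quantile functions of the two bounds. In the sketch below, the intervals are hypothetical stand-ins for the output of the propagation step (Sect. 4); the function name and the number of probability levels are our own choices. Because each upper bound is at least its lower bound, the pessimistic quantiles dominate the optimistic ones, and Pw can only increase with w.

```python
import numpy as np

def effective_exceedance(lo, hi, t, w, n_levels=1001):
    """Pw = Prob(h >= t) under an 'effective' CDF obtained by averaging the two
    p-box bounds along horizontal cuts (probability levels p).

    lo: lower interval bounds (their ECDF is the upper, optimistic CDF)
    hi: upper interval bounds (their ECDF is the lower, pessimistic CDF)
    w = 1 uses the pessimistic bound only; w = 0 the optimistic bound only."""
    p = (np.arange(n_levels) + 0.5) / n_levels
    q_w = w * np.quantile(hi, p) + (1.0 - w) * np.quantile(lo, p)
    return float(np.mean(q_w >= t))

# Hypothetical intervals standing in for the propagation output.
rng = np.random.default_rng(0)
lo = rng.normal(2.0, 0.2, size=5000)
hi = lo + rng.uniform(0.0, 1.0, size=5000)
for w in (0.0, 0.5, 0.75, 0.95, 1.0):
    print(w, effective_exceedance(lo, hi, 2.3, w))
```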

The benefit of our approach is to introduce “subjectivity” into the CDF selection only at the end of the uncertainty treatment, i.e., once the whole spectrum of uncertainty has been propagated and summarized in the form of a probability box. If the selection had been performed at the beginning of the uncertainty analysis, this initial assumption would have ultimately been lost in the propagation process, and the final result would not have been able to keep track of it (Dubois and Guyonnet 2011). For case 2, Pw reaches 3.6% (risk neutral, w = 0.50), which is the same order of magnitude as the K14 probabilistic results, hence suggesting a low flooding hazard zone. However, an increase in the risk aversion (Pw = 6.5% and Pw = 22.3% for w = 0.75 and 0.95, respectively) further suggests a nonnegligible impact of ambiguity and a need for a critical reflection on the input information (following the recommendation by Dessai and Hulme 2004).

5.2 Influence of the different uncertainty sources

A broad Pf interval calls for an exploration of the role of each uncertainty source in the decision-making process, to assess to what extent future research may reduce these uncertainties. We aim to identify which uncertain factors affect Pw the most while considering different risk attitudes. We conduct a sensitivity analysis based on the pinching approach of Ferson and Tucker (2006), which consists of evaluating how Pw evolves when each factor’s distribution is perturbed in turn (by fixing the considered CDF to a given quantile value or by fixing the considered possibility distribution to a given α-cut) (see the details in Supplementary Material S4).
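A toy version of the pinching analysis is sketched below. Everything in it is hypothetical: the model h = X + Y, with X a uniform random variable on [1.8, 2.2] and Y a trapezoidal possibility distribution (0.0, 0.3, 0.5, 1.0), the pinching levels, and the function names. Each factor is pinched in turn (X to a fixed quantile, Y to a fixed α-cut), Pw is recomputed, and the results are averaged over the pinching levels and compared with the baseline Pw, as in Fig. 4.

```python
import numpy as np

def effective_exceedance(lo, hi, t, w, n_levels=1001):
    """Pw from horizontal averaging of the p-box bounds (Sect. 5.1)."""
    p = (np.arange(n_levels) + 0.5) / n_levels
    q_w = w * np.quantile(hi, p) + (1.0 - w) * np.quantile(lo, p)
    return float(np.mean(q_w >= t))

def simulate(n, pinch=None, seed=0):
    """Toy model h = X + Y (hypothetical). pinch = ("X", quantile level) or
    ("Y", alpha level) fixes that factor; returns interval bounds of h."""
    rng = np.random.default_rng(seed)
    u = rng.uniform(size=n)
    alpha = rng.uniform(size=n)
    if pinch is not None:
        factor, level = pinch
        if factor == "X":
            u = np.full(n, level)      # CDF replaced by a single quantile
        else:
            alpha = np.full(n, level)  # possibility replaced by one alpha-cut
    x = 1.8 + 0.4 * u                  # inverse CDF of Uniform(1.8, 2.2)
    y_lo, y_hi = 0.3 * alpha, 1.0 - 0.5 * alpha  # alpha-cut of the trapezoid
    return x + y_lo, x + y_hi

def pinching(t, w, n=5000):
    """Baseline Pw and, for each factor, Pw averaged over its pinching levels."""
    base = effective_exceedance(*simulate(n), t, w)
    scenarios = {"X": (0.05, 0.5, 0.95), "Y": (0.0, 0.5, 1.0)}
    averaged = {f: float(np.mean([effective_exceedance(*simulate(n, (f, lvl)), t, w)
                                  for lvl in levels]))
                for f, levels in scenarios.items()}
    return base, averaged

print(pinching(2.6, 0.5))
```

The factor whose averaged Pw departs most from the baseline is the most influential one for the chosen risk attitude w.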

Figure 4 provides the value of Pw averaged over all considered perturbation scenarios for each uncertainty factor. The larger the deviation from the vertical red line, the larger the importance of the considered factor. We note that for both adaptation cases, the GSLR and the extremes appear to be the most influential factors; this result is consistent with other studies (Buchanan et al. 2017; Garner et al. 2017). This result has practical implications for risk management because appropriate actions differ depending on the uncertainty type (Dubois 2010). Extreme water levels are subject to statistical uncertainty, which is characterized by randomness and therefore, by nature, cannot be reduced. Typically, only preventive or protective measures can be implemented to handle this type of uncertainty. Deep uncertainty, however, is related to the imperfect state of knowledge, particularly in the area of ice sheet modeling (Kopp et al. 2017; Horton et al. 2018). By definition, this source of uncertainty may be reduced as more knowledge becomes available. Figure 4, and particularly the “w = 0.95 and case 2” diagram, suggests that decision-making would primarily be eased by improving research on the GSLR. Interestingly, the HE ranking has little effect on Pf in our case.

Flooding probability by 2100 (vertical red line) in the adaptation case 1 (top) and in the adaptation case 2 (bottom) considering different attitudes (risk neutral w = 0.50, moderately averse w = 0.75, and highly averse w = 0.95). The vertical red line provides the flooding probability when all uncertainties are propagated. The bars provide the flooding probability values averaged over all perturbation scenarios of the different uncertain factors using the pinching method of Ferson and Tucker (2006). The larger the deviation from the vertical line, the larger the importance of the considered factor

Figure 4 also highlights the key role of the decision-maker’s risk attitude (i.e., the weight w). In case 1, Fig. 4 (top) shows that Pw is more affected by a change in w than by any perturbation of the input factors. Changes in the GSLR uncertainty representation would lead to a decrease in Pw of only ≈ 30% (Fig. 4b, c), whereas an increase in w from 0.75 to 0.95 results in a large increase (of ≈ 50%) in Pw. This result means that, even if the input uncertainty were reduced, an expected improvement of scientific knowledge would not help the decision-making process much, since the decision depends more strongly on the decision-maker’s attitude toward risk. However, in case 2, the impact of w is less pronounced, and Pw is driven by the scientific information received by the decision-maker. Better knowledge of key factors (in particular, the GSLR) would, in that case, reduce the influence of risk aversion and therefore ease the decision-making process.

6 Discussion, concluding remarks, and ways forward

The current case study discusses the benefits of using distinct tools for uncertainty representation depending on the available data. A handful of methods exist to address this problem, but many lack practical recommendations to bring them to an operational state (as outlined by Flage et al. (2014) in their concluding remarks). Our work aims to address this gap by providing practical recommendations on how to (1) use the possibility distribution derived from the IPCC information to avoid making prior assumptions regarding the nature of the CDF; (2) implement uncertainty propagation from sea level rise to the coastal impact; and (3) provide users with different and complementary information from the probabilistic and possibilistic viewpoints.

Specifically, our results highlight where the current knowledge prevents the assignment of a unique CDF to future flooding risks (Fig. 3). Within a top-down approach, we propose to inform the decision-maker with a single “effective” CDF, which reflects not only the impact of ambiguity but also his/her attitude toward risk. If a large ambiguity exists, the procedure can ultimately rely on a sensitivity analysis to identify research areas where reducible uncertainty remains. This approach also provides a basis for extending the framework to decision contexts that start from the impact (e.g., flood damages) within a bottom-up approach, without necessarily relying on probabilities (see the discussion by Dessai and Hulme 2004).

Our application shows that the benefit of the scientific information provided to decision-makers differs depending on the adaptation case. In the “adaptation as usual” case, the decision regarding coastal flooding management mostly depends on the attitude to risk; in the “structural adaptation” case, the flooding probability is more sensitive to variations in the uncertain factors, and in particular to the state of knowledge on GSLR. These results call for strong interactions among the different actors, as underlined by other studies (e.g., Buchanan et al. 2016). Such interactions between scientific information, decision-makers, and users are now recognized as essential to support adaptation (Monfray and Bley 2016). However, in the area of coastal adaptation, further research involving climate, coastal, and social sciences, as well as behavioral science and decision analytics, is needed to evaluate to what extent the proposed uncertainty treatment framework can help resolve the problem of coastal adaptation (Bisaro and Hinkel 2016). In this respect, the proposed possibility-based approach could provide a good basis for triggering and structuring discussions on sea level rise uncertainties among experts from different backgrounds, by offering viewpoints that complement those of probabilities. It could also become a key ingredient of future expert elicitation exercises, such as the probability-based one conducted by Bamber and Aspinall (2013). As discussed by Cooke (2004), this direction will require further research to strengthen the links between possibility theory and decision theory. We argue that the rigorous setting proposed by Dubois et al. (2001), for instance, could be a way forward toward an operative state in this area.

References

Adloff F, Jordà G, Somot S, Sevault F et al (2018) Improving sea level simulation in Mediterranean regional climate models. Clim Dyn 51(3):1167–1178

Bakker AM, Louchard D, Keller K (2017) Sources and implications of deep uncertainties surrounding sea-level projections. Clim Chang 140(3–4):339–347

Bamber JL, Aspinall WP (2013) An expert judgement assessment of future sea level rise from the ice sheets. Nat Clim Chang 3(4):424–427

Baudrit C, Guyonnet D, Dubois D (2006) Post-processing the hybrid method for addressing uncertainty in risk assessments. J Environ Eng 131:1750–1754

Baudrit C, Guyonnet D, Dubois D (2007) Joint propagation of variability and imprecision in assessing the risk of groundwater contamination. J Contam Hydrol 93(1):72–84

Ben Abdallah N, Mouhous-Voyneau N, Denoeux T (2014) Combining statistical and expert evidence using belief functions: application to centennial sea level estimation taking into account climate change. Int J Approx Reason 55(1):341–354

Bisaro A, Hinkel J (2016) Governance of social dilemmas in climate change adaptation. Nat Clim Chang 6(4):354

Buchanan MK, Kopp RE, Oppenheimer M, Tebaldi C (2016) Allowances for evolving coastal flood risk under uncertain local sea-level rise. Clim Chang 137(3–4):347–362

Buchanan MK, Oppenheimer M, Kopp RE (2017) Amplification of flood frequencies with local sea level rise and emerging flood regimes. Environ Res Lett 12(6):064009

Calafat FM, Chambers DP, Tsimplis MN (2012) Mechanisms of decadal sea level variability in the eastern North Atlantic and the Mediterranean Sea. J Geophys Res 117:C09022

Church JA, Clark PU, Cazenave A, Gregory JM, Jevrejeva S et al (2013) Sea level change. In: Stocker TF, Qin D, Plattner GK, Tignor M, Allen SK, Boschung J, Nauels A, Xia Y, Bex V, Midgley PM (eds) Climate change 2013: the physical science basis. Contribution of working group I to the fifth assessment report of the intergovernmental panel on climate change. Cambridge University Press, Cambridge, United Kingdom and New York, NY, USA

Cooke R (2004) The anatomy of the squizzel: the role of operational definitions in representing uncertainty. Reliab Eng Syst Saf 85(1–3):313–319

DeConto RM, Pollard D (2016) Contribution of Antarctica to past and future sea-level rise. Nature 531(7596):591

Dessai S, Hulme M (2004) Does climate adaptation policy need probabilities? Clim Pol 4(2):107–128

Dubois D (2010) The role of epistemic uncertainty in risk analysis. In: Deshpande A, Hunter A (eds) Scalable uncertainty management. Lecture Notes in Computer Science, vol 6379. Springer, Berlin, Heidelberg, pp 11–15

Dubois D, Guyonnet D (2011) Risk-informed decision-making in the presence of epistemic uncertainty. Int J Gen Syst 40(2):145–167

Dubois D, Prade H (1988) Possibility theory: an approach to computerized processing of uncertainty. Plenum, New York

Dubois D, Prade H (2016) Practical methods for constructing possibility distributions. Int J Intell Syst 31(3):215–239

Dubois D, Prade H, Sabbadin R (2001) Decision-theoretic foundations of qualitative possibility theory. Eur J Oper Res 128(3):459–478

Ellsberg D (1961) Risk, ambiguity, and the Savage axioms. Q J Econ 75(4):643–669

Ferson S, Tucker WT (2006) Sensitivity analysis using probability bounding. Reliab Eng Syst Saf 91(10–11):1435–1442

Flage R, Aven T, Zio E, Baraldi P (2014) Concerns, challenges, and directions of development for the issue of representing uncertainty in risk assessment. Risk Anal 34(7):1196–1207

Fuller RW, Wong TE, Keller K (2017) Probabilistic inversion of expert assessments to inform projections about Antarctic ice sheet responses. PLoS One 12(12)

Garner AJ, Mann ME, Emanuel KA, Kopp RE et al (2017) Impact of climate change on New York City’s coastal flood hazard: increasing flood heights from the preindustrial to 2300 CE. Proc Natl Acad Sci 114:11861–11866

Gervais M (2012) Impacts morphologiques des surcotes et vagues de tempêtes sur le littoral méditerranéen. PhD thesis, Perpignan, 370 p and annex

Haas A, Formery P (2002) Uncertainties in facies proportion estimation I. Theoretical framework: the Dirichlet distribution. Math Geol 34(6):679–702

Haasnoot M, Kwakkel JH, Walker WE, ter Maat J (2013) Dynamic adaptive policy pathways: a method for crafting robust decisions for a deeply uncertain world. Glob Environ Chang 23(2):485–498

Horton BP, Rahmstorf S, Engelhart SE, Kemp AC (2014) Expert assessment of sea-level rise by AD 2100 and AD 2300. Quat Sci Rev 84:1–6

Horton BP, Kopp RE, Garner AJ, Hay CC, Khan NS, Roy K, Shaw TA (2018) Mapping sea-level change in time, space and probability. Annu Rev Environ Resour 43:481–521

Hunter JR, Church JA, White NJ, Zhang X (2013) Towards a global regionally varying allowance for sea-level rise. Ocean Eng 71:17–27

Idier D, Rohmer J, Bulteau T, Delvallée E (2013) Development of an inverse method for coastal risk management. Nat Hazards Earth Syst Sci 13(4):999–1013

Jackson LP, Jevrejeva S (2016) A probabilistic approach to 21st century regional sea-level projections using RCP and high-end scenarios. Glob Planet Chang 146:179–189

Kopp RE, Horton RM, Little CM, Mitrovica JX et al (2014) Probabilistic 21st and 22nd century sea-level projections at a global network of tide-gauge sites. Earth’s Future 2(8):383–406

Kopp RE, DeConto RM, Bader DA, Hay CC, et al (2017) Evolving understanding of Antarctic ice-sheet physics and ambiguity in probabilistic sea-level projections. Earth’s Future 5:1217–1233

Kriegler E, Held H (2005) Utilizing belief functions for the estimation of future climate change. Int J Approx Reason 39(2):185–209

Le Cozannet G, Rohmer J, Cazenave A, Idier D et al (2015) Evaluating uncertainties of future marine flooding occurrence as sea-level rises. Environ Model Softw 73:44–56

Le Cozannet G, Manceau JC, Rohmer J (2017) Bounding probabilistic sea-level projections within the framework of the possibility theory. Environ Res Lett 12(1):014012

Lempert RJ, Popper SW, Bankes SC (2003) Shaping the next one hundred years: new methods for quantitative, long-term policy analysis. Rand Corporation, Santa Monica

Merz B, Thieken AH (2009) Flood risk curves and uncertainty bounds. Nat Hazards 51(3):437–458

Monfray P, Bley D (2016) JPI Climate: a key player in advancing Climate Services in Europe. Clim Serv 4:61–64

Nicholls RJ, Hanson SE, Lowe JA, Warrick RA et al (2014) Sea-level scenarios for evaluating coastal impacts. Wiley Interdiscip Rev Clim Chang 5(1):129–150

Oppenheimer M, Little CM, Cooke RM (2016) Expert judgement and uncertainty quantification for climate change. Nat Clim Chang 6:445–451

Ranger N, Reeder T, Lowe J (2013) Addressing ‘deep’ uncertainty over long-term climate in major infrastructure projects: four innovations of the Thames Estuary 2100 Project. EURO J Decis Process 1(3–4):233–262

Ritz C, Edwards TL, Durand G, Payne AJ et al (2015) Potential sea-level rise from Antarctic ice-sheet instability constrained by observations. Nature 528(7580):115

Rockström J, Gaffney O, Rogelj J, Meinshausen M et al (2017) A roadmap for rapid decarbonization. Science 355(6331):1269–1271

Slangen ABA, Carson M, Katsman CA, Van de Wal RSW et al (2014) Projecting twenty-first century regional sea-level changes. Clim Chang 124(1–2):317–332

Stephens SA, Bell RG, Lawrence J (2017) Applying principles of uncertainty within coastal hazard assessments to better support coastal adaptation. J Mar Sci Eng 5(3):40

Vinchon C, Baron-Yelles N, Berthelier E, Herivaux C et al (2011) Miseeva: set up of a transdisciplinary approach to assess vulnerability of the coastal zone to marine inundation at regional and local scale, within a global change context. Littoral 2010: Adapting to Global Change at the Coast: Leadership, Innovation, and Investment, London, 21–23 September 2010, 10 p

Wong TE, Keller K (2017) Deep uncertainty surrounding coastal flood risk projections: a case study for New Orleans. Earth’s Future 5(10):1015–1026

Wong TE, Bakker AM, Keller K (2017) Impacts of Antarctic fast dynamics on sea-level projections and coastal flood defense. Clim Chang 144(2):347–364

Acknowledgments

We thank Alexander Bisaro, Patrick Bazin, Dominique Guyonnet, Jochen Hinkel, Déborah Idier, Carlos Oliveros, Rodrigo Pedreros, Robert Nicholls, and the WCRP Grand Challenge “Sea-Level Rise and Coastal Impacts” for useful discussions on coastal risks, decision-making, and uncertainties. Implementation of the methods was performed using the R package HYRISK.

Funding

This study received financial support from BRGM-funded project DEV-EXTRAPOLATE (in-kind contribution to the Convention Services Climatiques of the MTES) and ERA4CS/ECLISEA (Grant 690462).



Electronic supplementary material

ESM 1 (DOCX, 335 kB)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Rohmer, J., Le Cozannet, G. & Manceau, JC. Addressing ambiguity in probabilistic assessments of future coastal flooding using possibility distributions. Climatic Change 155, 95–109 (2019). https://doi.org/10.1007/s10584-019-02443-4


DOI: https://doi.org/10.1007/s10584-019-02443-4