Abstract

Long-term flood risk management often relies on future sea-level projections. Projected uncertainty ranges, however, diverge widely as a result of different methodological choices. The IPCC has condensed this deep uncertainty into a single uncertainty range covering 66% probability or more. Alternatively, structured expert judgment summarizes divergent expert opinions in a single distribution. Recently published uncertainty ranges that are derived from these “consensus” assessments appear to differ by up to a factor of four. This might result in overconfidence or overinvestment in strategies to cope with sea-level change. Here we explore possible reasons for these different interpretations. This is important for (i) the design of robust strategies and (ii) the exploration of pathways that may eventually lead to some kind of consensus distributions that are relatively straightforward to interpret.

1 Introduction

Sea-level rise (SLR) poses substantial risks for many low-lying coastal areas around the world (Nicholls and Cazenave 2010; Katsman et al. 2011). Even a minor increase may considerably increase the frequency of harmful floods (Tebaldi et al. 2012). The management of the associated risks requires a sound understanding of potential local SLR, including low-probability, high-impact events (Kopp et al. 2014; De Vries et al. 2014; Grinsted et al. 2015).

Local SLR can significantly deviate from the global signal due to non-oceanic effects such as subsidence and post-glacial rebound, and due to oceanic effects (Slangen et al. 2012). Changes in ocean circulation, heterogeneous density changes, and mass-loss of large ice bodies (e.g., affecting the gravity field, Earth’s rotation, and lithospheric flexure) may cause distinct spatial patterns. Local SLR projections thus require a separate treatment of these major components, including thermosteric expansion and mass loss of ice sheets, ice caps, and glaciers.

Sea-level projections are deeply uncertain (Hulme et al. 2009; Ranger et al. 2013; Applegate et al. 2015; Oppenheimer et al. 2016). Deep uncertainty occurs when experts and/or decision makers “do not know or cannot agree upon the system model relating actions to consequences or the prior probabilities on key parameters of the system model” (Lempert and Collins 2007). Experts disagree on the best methods to assess the uncertainties (Church et al. 2013; Moore et al. 2013), and their subjective probability estimates of SLR are widely divergent (Horton et al. 2014).

When confronted with deep uncertainties, analysts have to make a choice (see, for example, Budescu et al. 2014). One option is to ignore the deep uncertainties, i.e., to present a single pdf, perhaps accompanied by a disclaimer that severe changes outside the presented range are possible. From a decision-making perspective, this alternative seems less favorable because decision makers tend to act differently when confronted with deep uncertainties or imprecise probabilities than with well-defined probabilities (e.g., Ellsberg 1961; Budescu et al. 2014). A second option is to try to achieve consensus and condense the deep uncertainties into a single “consensus” distribution. A third option, for example if a consensus appears not (yet) possible, is to present decision makers with the key aspects of this deep uncertainty.

Structured expert judgments can be useful in case of ambiguity, disagreeing models, and lack of empirical evidence (Oppenheimer et al. 2007; Aspinall 2010; IAC 2010; Mastrandrea et al. 2010; Bamber and Aspinall 2013; Horton et al. 2014). According to Cooke and Goossens (2008), “structured expert judgment refers to an attempt to subject the decision process to transparent methodological rules, with the goal of treating expert judgments as scientific data in a formal decision process.” Expert agreement is not the objective of structured expert judgment. Rather, it aims to explore the range of views and to help build a political or rational consensus (Cooke and Goossens 2008). Rational consensus can be achieved by means of a method that is defined and agreed on before eliciting the experts (Cooke and Goossens 2008; Aspinall 2010). It is, however, non-trivial whether and how to combine expert opinions (Morgan and Keith 1995). As a consequence, the reliability of structured expert elicitations is often questioned (e.g., Keith 1996; Church et al. 2013; Gregory et al. 2014; Clark et al. 2015).

As an alternative to structured expert judgment, the IPCC’s Fifth Assessment Report (hereafter, AR5) presents a likely range. According to the Guidance Note for Lead Authors of the IPCC Fifth Assessment Report on Consistent Treatment of Uncertainties, a likely outcome means that “the probability of this outcome can range from ≥66% (fuzzy boundaries implied) to 100% probability” (Mastrandrea et al. 2010). The likely range explicitly builds on the agreed-on, current state of knowledge. Aiming for scientific rigor and consistency with the literature, the IPCC authors have chosen not to account for poorly understood mechanisms, like the collapse of the marine-based sectors of the Antarctic ice sheet, in the likely range (Church et al. 2013; Gregory et al. 2014). This range has been criticized for being overconfident and for ignoring semi-empirical model studies (Kerr 2013; Rahmstorf 2013; Grinsted 2014). However, two recent studies (Mengel et al. 2016; Kopp et al. 2016) appear fairly consistent with AR5, and many local projections (partly) rely on AR5 and its model ranges (e.g., Kopp et al. 2014; De Vries et al. 2014; Grinsted et al. 2015).

Yet, a likely range (i.e., spanning 66% probability or more) leaves users considerable room for interpretation, resulting in large differences between the various assessments (Fig. 1). For example, the climate change scenarios for the Netherlands (KNMI14) (Van den Hurk et al. 2014; De Vries et al. 2014) project a relatively small 90% probability range (red bars). In contrast, Kopp et al. (2014) project a 90% probability range that is twice as large (dark green bars). Grinsted et al. (2015) project an even larger 90% probability range (purple bars). These studies present clear justifications of their methodological choices, but the consequences for projected ranges and derived decisions receive relatively little attention. The discrepancies between the uncertainty ranges of different studies are a sign of deep uncertainty. Understanding the sources of this deep uncertainty can be a key step to better support risk and decision analysis because this understanding may (i) help to build consensus and (ii) inform the design of robust strategies (e.g., Hall et al. 2012; Singh et al. 2015; Hadka et al. 2015).

Here, we explore the main reasons for the different interpretations of exactly the same information on SLR. This insight may be useful for designing strategies to cope with the deep uncertainties surrounding sea-level projections. In the longer run, eliciting the reasons for these divergent projections may help reduce ambiguities or help build rational consensus. To demonstrate and quantify these effects, we first explore how assigning different probabilities to the same uncertainty range affects probabilistic projections. Next, we discuss the potential role of structured expert judgment. Finally, we explore how structured elicitation might be utilized to reduce the current deep uncertainties and help to better inform risk and decision analyses.

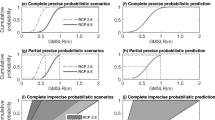

2 The interpretation of the IPCC’s likely range

One potential problem with the IPCC’s likely range is that users can interpret it as spanning any probability from 66% up to 100%, all of which is consistent with AR5. Probabilistic sea-level projections can depend critically on this probabilistic interpretation. This is illustrated in Fig. 2, which shows how interpreting a given uncertainty range (spanned by the horizontal yellow lines) as representing different likelihoods (x-axis) translates into different extrapolated 66, 90, and 99% probability ranges (y-axis), assuming normal and lognormal distributions for the different contributions (see the Appendix for the derivation of this figure). As seen from Fig. 2, the extrapolated uncertainty ranges widen more than linearly as the probability assigned to the likely range decreases. This dependence on interpretation can largely explain the factor-of-two difference in the projected 90% probability range between KNMI14 (red vertical bar) and Kopp et al. (2014) (green vertical bar).
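
To make the effect of the interpretation concrete, the sketch below treats a single contribution as normally distributed and asks how wide its 90 and 99% ranges become when the same likely range is read as a central 66, 80, 90, or 99% interval. It is a simplified, single-normal illustration under our own assumptions (the helper names are hypothetical); it omits the lognormal ice-sheet terms and the Monte Carlo summation described in the Appendix.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, standard deviation 1


def z_central(prob: float) -> float:
    """z-score bounding a central interval that contains `prob` probability."""
    return STD_NORMAL.inv_cdf(0.5 + prob / 2.0)


def extrapolated_width(likely_width: float, likely_prob: float,
                       target_prob: float) -> float:
    """Width of the central `target_prob` range of a normal distribution
    whose central `likely_prob` range has width `likely_width`."""
    sigma = likely_width / (2.0 * z_central(likely_prob))
    return 2.0 * z_central(target_prob) * sigma


if __name__ == "__main__":
    # Likely-range width normalized to 1, so results are multiples of it.
    for p_likely in (0.66, 0.80, 0.90, 0.99):
        w90 = extrapolated_width(1.0, p_likely, 0.90)
        w99 = extrapolated_width(1.0, p_likely, 0.99)
        print(f"likely range read as {p_likely:.0%}: "
              f"90% range = {w90:.2f}x, 99% range = {w99:.2f}x")
```

Under this normal assumption, reading the likely range as a 66% interval gives a 90% range about 1.7 times wider than reading it as a 90% interval (the ratio of the z-scores 1.645 and 0.954), already close to the factor of two between KNMI14 and Kopp et al. (2014).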

Fig. 2 Projected probability range of global sea-level change between 1995 and 2090 following RCP8.5 (y-axis) as a function of the probabilistic interpretation of the IPCC’s likely range (x-axis). The IPCC communicates the uncertainty of future sea level as a likely range (yellow arrow, lines). Future sea-level rise is expected to fall within this likely range with 66% probability or more. Any probability between 66 and 100% is consistent with this IPCC assessment (black arrow). The projected probabilistic uncertainty ranges (blue shades and dashed lines) depend strongly on the probabilistic interpretation of the likely range (x-axis). For example, the projected 90% probability range of KNMI14 (red vertical bar) is about half of the 90% probability range projected by Kopp et al. (dark green vertical bar), mainly because of a different probabilistic interpretation of the IPCC’s likely range.

KNMI14 implicitly interprets the likely range as the 90% probability range, i.e., that sea-level rise will be within this range with 90% probability. Many other studies (e.g., Kopp et al. 2014) interpret the likely range as the 66% probability range. The rationale given by KNMI14 is to be methodologically consistent with AR5 and internally consistent within KNMI14, and in this way to provide a widely accepted and actionable common framework for climate change adaptation in the Netherlands. The 66% probability interpretation is typically neither explicitly motivated nor referenced. The 66% probability interpretation can make sense, for example if the objective is to produce wide (conservative) uncertainty ranges. From a robust decision-making perspective, conservative projections may be preferable to overconfident projections (see for example the discussions in Herman et al. 2015 and Bakker et al. 2016).

The likely range (i.e., spanning 66% probability or more) gives no clear guidance on how to estimate higher quantiles, like 1:100, that are decision relevant (Kopp et al. 2014). The probability assigned to the likely range and the choice of distribution function largely determine the extrapolation. Yet, the scientific foundation for these methodological choices is largely unclear.
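
How strongly the distribution choice alone drives the tail can be sketched as follows. The example fits a normal and a two-parameter lognormal distribution to the same hypothetical likely range (0.2 to 0.6 m, read as a central 66% interval) and compares their 1:100 and 1:1000 upper quantiles. The numbers and function names are illustrative assumptions, not values from AR5 or from the studies shown in Fig. 1.

```python
import math
from statistics import NormalDist

STD = NormalDist()  # standard normal


def fit_normal(lower, upper, prob):
    """Normal distribution whose central `prob` interval is [lower, upper]."""
    z = STD.inv_cdf(0.5 + prob / 2.0)
    return NormalDist(mu=0.5 * (lower + upper), sigma=(upper - lower) / (2.0 * z))


def fit_lognormal(lower, upper, prob):
    """Quantile function of a lognormal (log of the quantity is normal) whose
    central `prob` interval is [lower, upper]; requires 0 < lower < upper."""
    z = STD.inv_cdf(0.5 + prob / 2.0)
    log_lo, log_hi = math.log(lower), math.log(upper)
    log_dist = NormalDist(mu=0.5 * (log_lo + log_hi),
                          sigma=(log_hi - log_lo) / (2.0 * z))
    return lambda q: math.exp(log_dist.inv_cdf(q))


if __name__ == "__main__":
    lower, upper, prob = 0.2, 0.6, 0.66  # hypothetical likely range in meters
    normal = fit_normal(lower, upper, prob)
    lognormal_quantile = fit_lognormal(lower, upper, prob)
    for q in (0.99, 0.999):
        print(f"{q} quantile: normal {normal.inv_cdf(q):.2f} m, "
              f"lognormal {lognormal_quantile(q):.2f} m")
```

Both fits reproduce the likely range exactly, yet with these inputs the lognormal 1:100 quantile is roughly 1.3 m against roughly 0.9 m for the normal fit.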

3 Expert elicitation and the role of ice sheets

Some have attributed the deep uncertainties surrounding sea-level projections to the response of the large ice sheets (Church et al. 2013; Bamber and Aspinall 2013). For instance, simulated and elicited projections of the Antarctic ice sheet contribution for the twenty-first century range from a few centimeters of global sea-level drop (Church et al. 2013) to an implied drastic rise of several meters resulting from an almost complete disintegration of the West Antarctic ice sheet (WAIS) (Pollard et al. 2015).

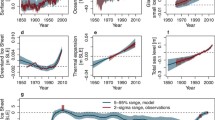

Probabilistic statements on high ice-sheet contributions are, however, controversial (Gregory et al. 2014; Clark et al. 2015). Different approaches result in widely diverging projections, as shown in Fig. 1. For example, KNMI14 (red bars) applies, in line with AR5, physically reasoned/modeled upper limits as proposed by Katsman et al. (2011), whereas Perrette et al. (2013) (cyan bars) provide much larger uncertainty ranges based on a semi-empirical approach. Alternatively, some studies apply expert judgments (e.g., Grinsted et al. 2015; Kopp et al. 2014), notably those elicited by Bamber and Aspinall (2013, hereafter BA13). Yet, even for the same elicited judgments, different rules for combining them result in large differences too.

BA13 applies a structured expert elicitation to inform low-probability SLR projections. For this, they elicited from 13 experts subjective estimates of, among other quantities, the 5, 50, and 95% quantiles of the rates of ice-sheet mass loss in 2100 under RCP8.5. Assuming a constant acceleration in time, they subsequently estimate the 5, 50, and 95% quantiles of the cumulative sea-level contributions in the year 2100 (Fig. 3, black crosses). For comparison and extrapolation (needed to estimate lower-probability events such as 1:100 and 1:1000), we fitted beta distributions with a 3.3-m (i.e., total WAIS collapse) upper bound (black lines). In order to provide a rational consensus, BA13 applies performance-based weights (i.e., weights based on how well each expert's quantile estimates perform on seed questions) to combine the expert opinions (purple dots) (Cooke 1991). This method has been shown to be superior to several other methods such as equal weighting and quantile weighting (Cooke and Goossens 2008; Bamber et al. 2016).
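
Cooke's classical model, which BA13 applies, combines the experts' distributions as a weighted linear pool (a mixture). The sketch below shows only that pooling step, producing survival functions like those in Fig. 3; it does not compute the performance weights themselves, which the classical model derives from calibration and information scores on seed questions. For illustration we assume a piecewise-linear CDF through each expert's 5, 50, and 95% quantiles on an assumed 0 to 3.3 m support; the expert quantiles, weights, and function names are hypothetical.

```python
from bisect import bisect_right

PROBS = (0.0, 0.05, 0.50, 0.95, 1.0)  # cumulative probabilities at the knots


def expert_cdf(quantiles, lower=0.0, upper=3.3):
    """Piecewise-linear CDF through (q05, q50, q95) on an assumed support."""
    xs = (lower, *quantiles, upper)
    if any(a >= b for a, b in zip(xs, xs[1:])):
        raise ValueError("knots must be strictly increasing")

    def cdf(x):
        if x <= xs[0]:
            return 0.0
        if x >= xs[-1]:
            return 1.0
        i = bisect_right(xs, x) - 1  # so that xs[i] <= x < xs[i + 1]
        frac = (x - xs[i]) / (xs[i + 1] - xs[i])
        return PROBS[i] + frac * (PROBS[i + 1] - PROBS[i])

    return cdf


def pooled_survival(x, cdfs, weights):
    """Survival function (1 - CDF) of the weighted linear pool at x."""
    total = sum(weights)
    return sum(w * (1.0 - cdf(x)) for cdf, w in zip(cdfs, weights)) / total


if __name__ == "__main__":
    # Hypothetical (q05, q50, q95) estimates in meters for three experts.
    experts = [(0.02, 0.10, 0.40), (0.05, 0.20, 0.90), (0.01, 0.05, 0.15)]
    cdfs = [expert_cdf(q) for q in experts]
    for label, weights in (("equal", (1, 1, 1)), ("weighted", (0.6, 0.3, 0.1))):
        exceed = pooled_survival(0.5, cdfs, weights)
        print(f"{label}: P(contribution > 0.5 m) = {exceed:.3f}")
```

Because the pool is a mixture, shifting weight toward an expert with a heavy upper tail directly raises the pooled exceedance probabilities; this is one reason the weighting scheme matters so much for the 1:100 and 1:1000 estimates.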

Fig. 3 Survival functions (1 minus the cumulative distribution function) of the cumulative West Antarctic ice sheet contribution to sea-level rise for the twenty-first century assuming RCP8.5, based on expert-elicited quantile estimates and different strategies for combining them. Black crosses are individual quantile estimates (5, 50, and 95%) of 13 experts elicited by Bamber and Aspinall (2013). The colored dots represent different strategies to aggregate the individual estimates. PerfWts (purple) is the aggregated estimate of Bamber and Aspinall (2013) applying expert performance weighting. EqualWts (green) represents their equal-weight aggregation (Bamber and Aspinall 2013), and Median (red) the median aggregation. Orange (MaxRange) represents enveloping, i.e., the use of the minimum estimate for the lower bounds and the maximum estimate for the upper bounds (Park and Budescu 2015). The continuous lines are fitted beta distributions assuming a 3.3-m (i.e., total disintegration) upper limit. The dashed blue line is the uniform distribution.

Grinsted et al. (2015) explicitly use the BA13 expert consensus to estimate the uncertain contribution of the “ignored” processes and add the BA13 estimates to the AR5 likely range. Kopp et al. (2014), on the other hand, acknowledge the scientific consensus of AR5 and use BA13 only to estimate the higher quantiles. Aiming for a smooth extrapolation, they fit a lognormal distribution to BA13’s expert consensus and scale this distribution down to match the AR5 likely range. As a consequence, the 90% probability range of Kopp et al. (2014) is less than half of the range of Grinsted et al. (2015) (Fig. 1).

In a critical assessment of BA13’s elicitation, De Vries and Van de Wal (2015) propose a third approach. Concerned about the weighting of experts and a too large influence of outlier opinions, they suggest using a median estimate (the red dots), a method previously applied by Horton et al. (2014). Median pooling has been shown to be especially powerful for large groups of experts, when experts are relatively little overconfident (Park and Budescu 2015; Gaba et al. 2016), and when the intended decision is not driven by the tail behavior of the uncertainty (Hora et al. 2013). Otherwise, the median approach may result in overconfident projections (Park and Budescu 2015).
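
The two pooling rules in Fig. 3 that operate directly on the elicited quantiles can be sketched as follows, using hypothetical (q05, q50, q95) triplets. The quantile-wise median below is one simple way to implement median pooling and is our assumption, not necessarily the exact procedure of De Vries and Van de Wal (2015); the enveloping rule follows the MaxRange description (lowest lower bound, highest upper bound). The function names are hypothetical.

```python
from statistics import median


def median_pool(experts):
    """Quantile-wise median across experts; each expert is (q05, q50, q95)."""
    return tuple(median(column) for column in zip(*experts))


def envelope_pool(experts):
    """Enveloping ('MaxRange'): lowest 5% and highest 95% estimate."""
    return (min(q[0] for q in experts), max(q[-1] for q in experts))


if __name__ == "__main__":
    # Hypothetical (q05, q50, q95) estimates in meters.
    experts = [(0.02, 0.10, 0.40), (0.05, 0.20, 0.90), (0.01, 0.05, 0.15)]
    outlier = (0.10, 0.80, 3.00)  # a single high-end opinion
    for group in (experts, experts + [outlier]):
        print("median pool:", median_pool(group),
              "envelope:", envelope_pool(group))
```

Adding the single outlier moves the pooled median 95% quantile only from 0.40 to 0.65 m, while the envelope jumps to the outlier's 3.0 m. This illustrates the robustness to outliers that motivates median pooling and, conversely, why it can understate the tails.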

4 Conclusions and discussion

We have shown how different, all arguably reasonable, interpretations of the imprecise information of the IPCC can result in widely divergent and deeply uncertain sea-level projections. Approaches to address this problem by eliciting and combining (subjective) information from experts have provided useful insights, but still result in deeply uncertain projections.

The examples illustrate that the construction of a consensus estimate from divergent expert assessments can be subject to considerable structural (and deep) uncertainty. This is consistent with the previous assessment that there is no “objective basis for combining expert opinion” (Keith 1996). Given this deep uncertainty, many (e.g., Keith 1996; Keller et al. 2008; Lempert et al. 2012) have argued that a robust strategy, i.e., one that performs well over a wide range of plausible futures/views (Lempert et al. 1996), may be preferable to an optimal strategy. Yet, depending on the applied decision criterion, the assessed robustness of a strategy can critically hinge on the range of views considered. Thus, robust strategies can also be very sensitive to outlier opinions and to the way divergent expert assessments are aggregated (or not).

Many studies are silent on the aspect of deep uncertainty, for example by providing a single probability density function. Ignoring deep uncertainty in this way may lead to inconsistent decisions, because decision makers’ preferences often change when confronted with deep uncertainty (Ellsberg 1961; Budescu et al. 2014). Improving its communication, e.g., by providing multiple plausible pdfs, can help to inform the design of more robust risk management strategies. Effective communication of deep uncertainties, however, depends strongly on the decision context. Therefore, an efficient representation requires close interaction between decision analysts, scientists, and decision makers.

References

Applegate PJ, Parizek BR, Nicholas RE, Alley RB, Keller K (2015) Increasing temperature forcing reduces the Greenland Ice Sheet’s response time scale. Clim Dyn 45(7):2001–2011. doi:10.1007/s00382-014-2451-7

Aspinall W (2010) A route to more tractable expert advice. Nature 463(7279):294–295. doi:10.1038/463294a

Bakker AMR, Wong TE, Ruckert KL, Keller K (2016) Sea-level projections accounting for deeply uncertain ice-sheet contributions. Nat Sci Rep (in review; http://arxiv.org/abs/1609.07119)

Bamber JL, Aspinall WP (2013) An expert judgement assessment of future sea level rise from the ice sheets. Nat Clim Chang 3:424–427. doi:10.1038/nclimate1778

Bamber JL, Aspinall WP, Cooke RM (2016) A commentary on “How to interpret expert judgment assessments of twenty-first century sea-level rise” by Hylke de Vries and Roderik SW van der Wal. Clim Chang 137(3):321–328. doi:10.1007/s10584-016-1672-7

Budescu DV, Broomell SB, Lempert RJ, Keller K (2014) Aided and unaided decisions with imprecise probabilities in the domain of losses. EURO J Decis Process 2(1):31–62. doi:10.1007/s40070-013-0023-4

Church JA, Clark PU, Cazenave A, Gregory JM, Jevrejeva S, Levermann A, Merrifield MA, Milne GA, Nerem RS, Nunn PD, Payne AJ, Pfeffer WT, Stammer D, Unnikrishnan AS (2013) Sea level change. In: Stocker TF et al (eds) Climate change 2013: the physical science basis. Contribution of working group I to the fifth assessment report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 1137–1216

Clark PU, Church JA, Gregory JM, Payne AJ (2015) Recent progress in understanding and projecting regional and global mean sea level change. Curr Clim Chang Rep 1(4):224–246. doi:10.1007/s40641-015-0024-4

Cooke RM (1991) Experts in uncertainty; opinion and subjective probability in science. Environmental ethics and science policy series. Oxford University Press, New York

Cooke RM, Goossens LLHJ (2008) TU Delft expert judgment data base. Reliab Eng Syst Saf 93(5):657–674. doi:10.1016/j.ress.2007.03.005

De Vries H, Van de Wal RSW (2015) How to interpret expert judgment assessments of 21st century sea-level rise. Clim Chang 130(2):87–100. doi:10.1007/s10584-015-1346-x

De Vries H, Van de Wal RSW (2016) Response to commentary by J. L. Bamber, W. P. Aspinall and R. M. Cooke (2016). Clim Chang 137(3):329–332. doi:10.1007/s10584-016-1712-3

De Vries H, Katsman C, Drijfhout S (2014) Constructing scenarios of regional sea level change using global temperature pathways. Environ Res Lett 9(11):115007. doi:10.1088/1748-9326/9/11/115007

Ellsberg D (1961) Risk, ambiguity, and the Savage axioms. Q J Econ 75(4):643–669, http://www.jstor.org/stable/1884324, visited on 2016-05-08

Gaba A, Tsetlin I, Winkler RL (2016) Combining interval forecasts. Working paper 2014/58/DSC, INSEAD. doi:10.2139/ssrn.2519007

Gregory JM, Church JA, Clark PU, Payne AJ, Merrifield MA, Nerem RS, Nunn PD, Pfeffer WT, Stammer D (2014) Comment on “Expert assessment of sea-level rise by AD 2100 and AD 2300”, by Horton et al. (2014). Quat Sci Rev 97:193–194. doi:10.1016/j.quascirev.2014.05.024

Grinsted A (2014) AR5 sea level rise uncertainty communication failure. http://www.glaciology.net/Home/MiscellaneousDebris/ar5sealevelriseuncertaintycommunicationfailure/, visited on 2015-12-15

Grinsted A, Jevrejeva S, Riva REM, Dahl-Jensen D (2015) Sea level rise projections for northern Europe under RCP8.5. Clim Res 64(1):15–23. doi:10.3354/cr01309

Hadka D, Herman J, Reed P, Keller K (2015) OpenMORDM: an open source framework for many-objective robust decision making. Environ Model Softw 74:114–120. doi:10.1016/j.envsoft.2015.07.014

Hall J, Lempert RJ, Keller K, Hackbarth A, Mijere C, McInerney DJ (2012) Robust climate policies under uncertainty: a comparison of robust decision-making and info-gap methods. Risk Anal 32(10):1657–1672. doi:10.1111/j.1539-6924.2012.01802.x

Herman J, Reed P, Zeff H, Characklis G (2015) How should robustness be defined for water systems planning under change? J Water Res Plan Manag 141(10):04015012. doi:10.1061/(ASCE)WR.1943-5452.0000509

Hora SC, Fransen BR, Hawkins N, Susel I (2013) Median aggregation of distribution functions. Decis Anal 10(4):279–291. doi:10.1287/deca.2013.0282

Horton BP, Rahmstorf S, Engelhart SE, Kemp AC (2014) Expert assessment of sea-level rise by AD 2100 and AD 2300. Quat Sci Rev 84:1–6. doi:10.1016/j.quascirev.2013.11.002

Hulme M, Pielke R Jr, Dessai S (2009) Keeping prediction in perspective. Nat Rep Clim Chang 11:126–127. doi:10.1038/climate.2009.110

Inter Academy Council (IAC) (2010) Climate change assessments: review of the processes and procedures of the IPCC. Royal Netherlands Academy of Arts and Sciences, Amsterdam

Katsman CA, Sterl A, Beersma JJ, van den Brink HW, Church JA, Hazeleger W, Kopp RE, Kroon D, Kwadijk J, Lammersen R, Lowe J, Oppenheimer M, Plag HP, Ridley J, von Storch H, Vaughan DG, Vellinga P, Vermeersen LLA, van de Wal RSW, Weisse R (2011) Exploring high-end scenarios for local sea level rise to develop flood protection strategies for a low-lying delta—the Netherlands as an example. Clim Chang 109(3–4):617–645. doi:10.1007/s10584-011-0037-5

Keith DW (1996) When is it appropriate to combine expert judgments? Clim Chang 33(2):139–143. doi:10.1007/BF00140244

Keller K, Yohe G, Schlesinger M (2008) Managing the risks of climate thresholds: uncertainties and needed information. Clim Chang 91(1):5–10. doi:10.1007/s10584-006-9114-6

Kerr RA (2013) A stronger IPCC report. Science 342(6154):23. doi:10.1126/science.342.6154.23-b

Kopp RE, Horton RM, Little CM, Mitrovica JX, Oppenheimer M, Rasmussen DJ, Strauss BH, Tebaldi C (2014) Probabilistic 21st and 22nd century sea-level projections at a global network of tide-gauge sites. Earth’s Future 2(8):383–406. doi:10.1002/2014EF000239

Kopp RE, Kemp AC, Bittermann K, Horton BP, Donnelly JP, Gehrels WR, Hay CC, Mitrovica JX, Morrow ED, Rahmstorf S (2016) Temperature-driven global sea-level variability in the Common Era. Proc Natl Acad Sci U S A 113(11):E1434–E1441. doi:10.1073/pnas.1517056113

Lempert RJ, Collins MT (2007) Managing the risk of uncertain threshold responses: comparison of robust, optimum, and precautionary approaches. Risk Anal 27(4):1009–1026. doi:10.1111/j.1539-6924.2007.00940.x

Lempert RJ, Schlesinger ME, Bankes SC (1996) When we don’t know the costs or the benefits: adaptive strategies for abating climate change. Clim Chang 33(2):235–274. doi:10.1007/BF00140248

Lempert R, Sriver RL, Keller K (2012) Characterizing uncertain sea level rise projections to support investment decisions. Tech. Rep. Publication Number: CEC-500-2012-056, California Energy Commission

Mastrandrea MD, Field CB, Stocker TF, Edenhofer O, Ebi KL, Frame DJ, Held H, Kriegler E, Mach KJ, Matschoss PR, Plattner GK, Yohe GW, Zwiers FW (2010) The guidance notes for lead authors of the IPCC fifth assessment report on consistent treatment of uncertainties. https://www.ipcc.ch/pdf/supporting-material/uncertainty-guidance-note.pdf, visited on 2016-05-08

Mengel M, Levermann A, Frieler K, Robinson A, Marzeion B, Winkelmann R (2016) Future sea level rise constrained by observations and long-term commitment. Proc Natl Acad Sci U S A 113(10):2597–2602. doi:10.1073/pnas.1500515113

Moore JC, Grinsted A, Zwinger T, Jevrejeva S (2013) Semiempirical and process-based global sea level projections. Rev Geophys 51(3):484–522. doi:10.1002/rog.20015

Morgan MG, Keith DW (1995) Subjective judgments by climate experts. Environ Sci Technol 29(10):468A–476A. doi:10.1021/es00010a753

Nicholls RJ, Cazenave A (2010) Sea-level rise and its impact on coastal zones. Science 328(5985):1517–1520. doi:10.1126/science.1185782

Oppenheimer M, O’Neill BC, Webster M, Agrawala S (2007) The limits of consensus. Science 317(5844):1505–1506. doi:10.1126/science.1144831

Oppenheimer M, Little CM, Cooke RM (2016) Expert judgement and uncertainty quantification for climate change. Nat Clim Chang 6:445–451. doi:10.1038/nclimate2959

Park S, Budescu DV (2015) Aggregating multiple probability intervals to improve calibration. Judgment Decis Mak 10(2):130–143

Perrette M, Landerer F, Riva R, Frieler K, Meinshausen M (2013) A scaling approach to project regional sea level rise and its uncertainties. Earth Syst Dyn 4(1):11–29. doi:10.5194/esd-4-11-2013

Pollard D, DeConto RM, Alley RB (2015) Potential Antarctic ice sheet retreat driven by hydrofracturing and ice cliff failure. Earth Planet Sci Lett 412:112–121. doi:10.1016/j.epsl.2014.12.035

Rahmstorf S (2013) Sea level in the 5th IPCC report. http://www.realclimate.org/index.php/archives/2013/10/sea-level-in-the-5th-ipcc-report/, visited on 2015-12-15

Ranger N, Reeder T, Lowe J (2013) Addressing ‘deep’ uncertainty over long-term climate in major infrastructure projects: four innovations of the Thames Estuary 2100 Project. EURO J Decis Process 1(3–4):233–262. doi:10.1007/s40070-013-0014-5

Singh R, Reed PM, Keller K (2015) Many-objective robust decision making for managing an ecosystem with a deeply uncertain threshold response. Ecol Soc 20(3):12. doi:10.5751/ES-07687-200312

Slangen ABA, Katsman CA, van de Wal RSW, Vermeersen LLA, Riva REM (2012) Towards regional projections of twenty-first century sea-level change based on IPCC SRES scenarios. Clim Dyn 38(5):1191–1209. doi:10.1007/s00382-011-1057-6

Tebaldi C, Strauss BH, Zervas CE (2012) Modelling sea level rise impacts on storm surges along US coasts. Environ Res Lett 7(1):014032. doi:10.1088/1748-9326/7/1/014032

Van den Hurk B, Siegmund P, Klein Tank A (2014) KNMI’14: climate change scenarios for the 21st century—a Netherlands perspective. Sci Rep WR2014-01, KNMI, De Bilt, the Netherlands, http://www.knmi.nl/bibliotheek/knmipubWR/WR2014-01.pdf, visited on 2016-05-08

Acknowledgements

We thank Hylke de Vries, Bob Kopp, Roger Cooke, and Michael Oppenheimer for their valuable insights and comments. This work was partially supported by the National Science Foundation through the Network for Sustainable Climate Risk Management (SCRiM) under NSF cooperative agreement GEO-1240507, and the Penn State Center for Climate Risk Management. We thank Kelsey Ruckert for coding assistance. Any conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the funding agencies.

Additional information

Code and data availability: The code and data to reproduce the results are available from the corresponding author upon request. A repository is available at: https://github.com/scrim-network/Deep-uncertainties-about-SL-projections.

Appendix

The projections presented in Figs. 1 and 2 are scaled from the original published values (Perrette et al. 2013; Church et al. 2013; De Vries et al. 2014; Kopp et al. 2014; Grinsted et al. 2015) to represent the sea-level rise (contributions) for the period 1995–2090. This period is chosen to minimize the need for scaling. All cumulative projections assume a constant acceleration in time (second-order polynomial) for all components.
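
Under the constant-acceleration assumption the rate grows linearly in time, so the cumulative rise is a second-order polynomial. The sketch below shows the two operations this implies: converting an end-of-period rate into a cumulative rise (as BA13 do for their elicited 2100 rates) and rescaling a cumulative projection to a different period length. The fixed initial rate r0 is an assumption we introduce for illustration, since the text above does not state which initial rate was used; the function names and numbers are hypothetical.

```python
def cumulative_from_rates(r0, r1, years):
    """Cumulative rise when the rate grows linearly from r0 to r1 over `years`
    (constant acceleration): the integral equals mean rate times duration."""
    return 0.5 * (r0 + r1) * years


def rescale_cumulative(total, years_from, years_to, r0):
    """Rescale a cumulative projection to a different period length, assuming
    S(t) = r0 * t + 0.5 * a * t**2 with a fixed, assumed initial rate r0."""
    accel = 2.0 * (total - r0 * years_from) / years_from ** 2
    return r0 * years_to + 0.5 * accel * years_to ** 2


if __name__ == "__main__":
    # Hypothetical: 0.74 m over 105 years, assumed initial rate 0.003 m/yr.
    print(f"{rescale_cumulative(0.74, 105, 95, 0.003):.3f} m over 95 years")
    # Quantiles of the end-of-period rate map to quantiles of the cumulative
    # rise, because the mapping increases with r1 (hypothetical rates, m/yr).
    for r1 in (0.002, 0.005, 0.015):
        print(r1, cumulative_from_rates(0.0, r1, 100))
```

Because the mapping from the end-of-period rate to the cumulative rise is monotonically increasing, elicited 5, 50, and 95% rate quantiles carry over directly to 5, 50, and 95% quantiles of the cumulative contribution.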

We estimate the probabilistic uncertainty ranges of global sea-level rise by summing the individual contributions via Monte Carlo sampling, assuming independence between the different sources of uncertainty. For thermosteric expansion, glaciers and small ice caps, and land water contributions, we fit a normal distribution to the different interpretations of the AR5 likely range (i.e., the probability of the lower and upper bound). For the large ice-sheet contributions, we fit a three-parameter lognormal distribution with offset (De Vries and Van de Wal 2015) to the median and to different interpretations of the AR5 likely range (i.e., spanning 66% probability or more).
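
The procedure above can be sketched under our own assumptions. We parameterize the offset lognormal as X = c + exp(mu + s Z), with Z standard normal. The two ends of a central interval then sit symmetrically around the median in log space, which gives a closed-form fit: exp(z s) = (upper - median) / (median - lower). This parameterization may differ in detail from the one used by De Vries and Van de Wal (2015), and the helper names, component values, and the 66% reading of the likely range are hypothetical.

```python
import math
import random
from statistics import NormalDist


def fit_shifted_lognormal(median, lower, upper, prob):
    """Fit X = c + exp(mu + s * Z), Z standard normal, so that X has the given
    median and central `prob` interval [lower, upper]. Needs a right skew,
    i.e. upper - median > median - lower."""
    if not lower < median < upper:
        raise ValueError("need lower < median < upper")
    z = NormalDist().inv_cdf(0.5 + prob / 2.0)
    ratio = (upper - median) / (median - lower)  # equals exp(z * s)
    if ratio <= 1.0:
        raise ValueError("range must be right-skewed for a lognormal fit")
    s = math.log(ratio) / z
    scale = (upper - median) / (ratio - 1.0)  # equals exp(mu)
    return median - scale, math.log(scale), s  # (offset c, mu, s)


def sample_total(components, n, seed=1):
    """Sorted Monte Carlo samples of a sum of independent components; each
    component is a function drawing one value from a random.Random."""
    rng = random.Random(seed)
    return sorted(sum(draw(rng) for draw in components) for _ in range(n))


if __name__ == "__main__":
    prob = 0.66  # one possible reading of the likely range
    z = NormalDist().inv_cdf(0.5 + prob / 2.0)
    # Hypothetical normal component with likely range 0.20-0.40 m.
    mu_n, sd_n = 0.30, 0.10 / z
    # Hypothetical ice-sheet component: median 0.05 m, likely range 0.00-0.15 m.
    c, mu, s = fit_shifted_lognormal(0.05, 0.00, 0.15, prob)
    components = [
        lambda rng: rng.gauss(mu_n, sd_n),
        lambda rng: c + rng.lognormvariate(mu, s),
    ]
    n = 100_000
    totals = sample_total(components, n)
    lo, hi = totals[int(0.05 * n)], totals[int(0.95 * n)]
    print(f"5-95% range of the total: {lo:.2f} to {hi:.2f} m")
```

Repeating the sketch with prob set to other values between 0.66 and 1 traces out, for these hypothetical inputs, the kind of interpretation dependence shown in Fig. 2.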

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bakker, A.M.R., Louchard, D. & Keller, K. Sources and implications of deep uncertainties surrounding sea-level projections. Climatic Change 140, 339–347 (2017). https://doi.org/10.1007/s10584-016-1864-1

DOI: https://doi.org/10.1007/s10584-016-1864-1