Abstract

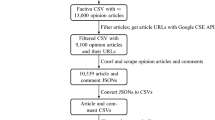

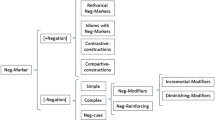

Although peer review is central to scientific communication, research shows that the process is marked by a power imbalance. The reviewer's position allows them to be harsh, even intentionally offensive, without being held accountable. Such behavior casts doubt on the integrity of peer review and turns it into an unpleasant, even traumatic, experience for authors. Reviewers should therefore put more effort into providing critical yet constructive feedback. It is also necessary to counter the growing rudeness and lack of professionalism in the review system by analyzing the tone of review comments and classifying each comment by its level of politeness. To this end, we develop PolitePEER, the first annotated dataset of peer-review sentences labeled with five levels of politeness: (1) highly impolite, (2) impolite, (3) neutral, (4) polite, and (5) highly polite. The review sentences were collected from multiple sources, namely ICLR, NeurIPS, Publons, and ShitMyReviewersSay. We formulated annotation guidelines and conducted a thorough analysis of PolitePEER, ensuring dataset quality with an inter-annotator agreement of 93%. We also benchmark PolitePEER on multiclass classification and provide an extensive analysis of the proposed baseline. PolitePEER can thus aid in developing a politeness indicator that prompts reviewers and editors to amend a review and make its tone more professional. Our dataset and code are available at https://github.com/PrabhatkrBharti/PolitePEER.git for the community to explore further.
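The 93% inter-annotator agreement reported above is not tied to a named statistic in this section. As an illustration only, the following sketch shows two common ways to measure agreement between two annotators on five-level politeness labels: raw percent agreement, and Cohen's (1960) kappa, which discounts the agreement expected by chance. The integer codes 1 to 5, the function names, and the toy annotations are assumptions made for this example; they are not the authors' code or data.

```python
from collections import Counter
from typing import Sequence

# The five PolitePEER politeness levels named in the abstract.
# Using the integers 1-5 as label codes is an assumption for this sketch.
LEVELS = {
    1: "highly impolite",
    2: "impolite",
    3: "neutral",
    4: "polite",
    5: "highly polite",
}


def _check(a: Sequence[int], b: Sequence[int]) -> int:
    """Validate two parallel annotation lists and return their length."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("annotations must be non-empty and of equal length")
    unknown = (set(a) | set(b)) - set(LEVELS)
    if unknown:
        raise ValueError(f"unknown politeness labels: {sorted(unknown)}")
    return len(a)


def percent_agreement(a: Sequence[int], b: Sequence[int]) -> float:
    """Fraction of items that both annotators gave the same label."""
    n = _check(a, b)
    return sum(x == y for x, y in zip(a, b)) / n


def cohen_kappa(a: Sequence[int], b: Sequence[int]) -> float:
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = _check(a, b)
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement from each annotator's marginal label frequencies.
    count_a, count_b = Counter(a), Counter(b)
    p_e = sum(count_a[k] * count_b[k] for k in LEVELS) / (n * n)
    if p_e == 1.0:
        # Both annotators used one identical label for every item, so kappa
        # is 0/0; report perfect agreement by convention.
        return 1.0
    return (p_o - p_e) / (1.0 - p_e)


if __name__ == "__main__":
    # Hypothetical labels from two annotators for eight review sentences.
    ann1 = [1, 2, 3, 3, 4, 5, 4, 3]
    ann2 = [1, 2, 3, 4, 4, 5, 4, 3]
    print(f"percent agreement: {percent_agreement(ann1, ann2):.3f}")  # 0.875
    print(f"Cohen's kappa:     {cohen_kappa(ann1, ann2):.3f}")       # 0.837
```

On the toy labels the annotators agree on 7 of 8 sentences (0.875), while kappa is 41/49 ≈ 0.837 because part of that agreement would be expected by chance alone. If more than two annotators labeled each sentence, a multi-rater statistic such as Fleiss' kappa or Krippendorff's alpha would be the more natural choice.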



Acknowledgements

The fourth author, Asif Ekbal, is a recipient of the Visvesvaraya Young Faculty Award and gratefully acknowledges the Government of India and the Ministry of Electronics and Information Technology for their support.

Author information


Contributions

PKB: Conceptualization, data curation, investigation, methodology, experiments, writing (original draft, review and editing). MN: Data curation, data cleaning. MA: Supervision, review and editing. AE: Supervision, review and editing.


Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest concerning the publication of this article.

Ethical approval

We do not intend to attack specific individuals. Our purpose is to draw attention to the negative cultural zeitgeist in peer review, in the hope that the ensuing discussion will stimulate improvement.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Bharti, P.K., Navlakha, M., Agarwal, M. et al. PolitePEER: does peer review hurt? A dataset to gauge politeness intensity in the peer reviews. Lang Resources & Evaluation (2023). https://doi.org/10.1007/s10579-023-09662-3

Accepted:

Published:

DOI: https://doi.org/10.1007/s10579-023-09662-3