Abstract

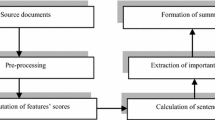

The massive amount of information accessible on the internet makes it challenging for users to find what they need. Automatic document summarization has emerged as a technology to address this problem by giving users the relevant information in a shortened form. A good summary, however, must combine high content coverage with low redundancy. This paper therefore presents an enriched Dragonfly-Fuzzy Logic (FL) single document summarization technique. First, the web document is preprocessed through steps such as segmentation, stop word removal, URL removal, and stemming. After preprocessing, important features such as sentence location, proper nouns, numeric data, and cue phrases are extracted from the web document. The significance of each extracted feature is set by assigning it a weight using the Enriched Dragonfly Optimization Algorithm (EDOA). Once the feature weights are assigned, the FL system determines the importance of each sentence in order to form a summary. Finally, the similarity between sentences in the generated summary is calculated, and similar sentences are eliminated to avoid redundancy. The proposed Dragonfly-FL summarization is tested on the CNN/Daily Mail dataset, and the results are compared with existing techniques, namely MAMHOA, ExDoS, Karcı summarization, a regression-based technique, DSN, a semantic approach, and BERTSUMEXT, in terms of the ROUGE-1, ROUGE-2, and ROUGE-L measures. The results show that the proposed technique performs better than the existing techniques, with precision, recall, and F-score values of 0.11, 0.05, and 0.01, respectively.
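The pipeline described above (feature extraction, weighted sentence scoring, and redundancy elimination) can be illustrated with a minimal Python sketch. This is not the authors' implementation: the feature weights are hand-picked hypothetical values rather than EDOA-optimized ones, a plain weighted sum stands in for the fuzzy inference step, and bag-of-words cosine similarity with an assumed threshold of 0.7 stands in for the paper's sentence similarity measure. All function names are illustrative.

```python
import math
import re
from collections import Counter

# Hypothetical feature weights; in the paper these are tuned by EDOA.
WEIGHTS = {"position": 0.5, "numeric": 0.2, "proper_noun": 0.3}


def split_sentences(text):
    """Naive segmentation on '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def features(sentence, index, total):
    """Return three illustrative features, each scaled to [0, 1]."""
    words = sentence.split()
    position = 1.0 - index / total  # earlier sentences score higher
    numeric = 1.0 if any(ch.isdigit() for ch in sentence) else 0.0
    # Crude proper-noun proxy: capitalized words other than the first word.
    caps = sum(1 for w in words[1:] if w[:1].isupper())
    proper_noun = min(1.0, caps / max(1, len(words) - 1))
    return {"position": position, "numeric": numeric, "proper_noun": proper_noun}


def score(feats):
    """Weighted sum, used here as a stand-in for the fuzzy inference step."""
    return sum(WEIGHTS[name] * value for name, value in feats.items())


def cosine(a, b):
    """Cosine similarity between bag-of-words vectors of two sentences."""
    va = Counter(re.findall(r"\w+", a.lower()))
    vb = Counter(re.findall(r"\w+", b.lower()))
    dot = sum(va[t] * vb[t] for t in va)
    norm_a = math.sqrt(sum(c * c for c in va.values()))
    norm_b = math.sqrt(sum(c * c for c in vb.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def summarize(text, max_sentences=2, sim_threshold=0.7):
    """Pick top-scoring sentences, skipping near-duplicates of chosen ones."""
    sentences = split_sentences(text)
    ranked = sorted(
        range(len(sentences)),
        key=lambda i: score(features(sentences[i], i, len(sentences))),
        reverse=True,
    )
    chosen = []
    for i in ranked:
        if len(chosen) >= max_sentences:
            break
        if all(cosine(sentences[i], sentences[j]) < sim_threshold for j in chosen):
            chosen.append(i)
    # Restore document order for readability.
    return [sentences[i] for i in sorted(chosen)]


if __name__ == "__main__":
    doc = (
        "The storm hit Florida on Monday. "
        "The storm hit Florida on Monday. "
        "Officials reported 12 injuries."
    )
    for line in summarize(doc):
        print(line)
```

In this sketch the duplicated second sentence is ranked above the third but is skipped because it is too similar to an already selected sentence, which is the coverage-versus-redundancy trade-off the abstract describes.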

References

Abualigah, L., Abd Elaziz, M., Sumari, P., Geem, Z. W., & Gandomi, A. H. (2022). Reptile Search Algorithm (RSA): A nature-inspired meta-heuristic optimizer. Expert Systems with Applications, 191, 116158.

Abualigah, L., Bashabsheh, M. Q., Alabool, H., & Shehab, M. (2020). Text summarization: A brief review. Recent Advances in NLP: The Case of Arabic Language (pp. 1–15).

Abualigah, L., Yousri, D., Abd Elaziz, M., Ewees, A. A., Al-Qaness, M. A., & Gandomi, A. H. (2021). Aquila optimizer: A novel meta-heuristic optimization algorithm. Computers & Industrial Engineering, 157, 107250.

Abujar, S., Hasan, M., & Hossain, S. (2019). A sentence similarity estimation for text summarization using deep learning. In Proceedings of the 2nd International Conference on Data Engineering and Communication Technology (pp. 155–164).

Adelia, R., Suyanto, S., & Wisesty, U. N. (2019). Indonesian abstractive text summarization using bidirectional gated recurrent unit. Procedia Computer Science, 157, 581–588.

Alami, N., Meknassi, M., & En-nahnahi, N. (2019). Enhancing unsupervised neural networks based text summarization with word embedding and ensemble learning. Expert Systems with Applications, 123, 195–211.

Bidoki, M., Moosavi, M. R., & Fakhrahmad, M. (2020). A semantic approach to extractive multi-document summarization: Applying sentence expansion for tuning of conceptual densities. Information Processing & Management, 57(6), 102341.

Cagliero, L., & La Quatra, M. (2020). Extracting highlights of scientific articles: A supervised summarization approach. Expert Systems with Applications, 160, 113659.

Chouigui, A., Ben Khiroun, O., & Elayeb, B. (2021). An Arabic multi-source news corpus: Experimenting on single-document extractive summarization. Arabian Journal for Science and Engineering, 46(4), 3925–3938.

Diao, Y., Lin, H., Yang, L., Fan, X., Chu, Y., Wu, D., & Xu, K. (2020). CRHASum: Extractive text summarization with contextualized-representation hierarchical-attention summarization network. Neural Computing and Applications, 32(15), 11491–11503.

Elayeb, B., Chouigui, A., Bounhas, M., & Khiroun, O. B. (2020). Automatic Arabic text summarization using analogical proportions. Cognitive Computation, 12(5), 1043–1069.

Furner, C. P., & Zinko, R. A. (2017). The influence of information overload on the development of trust and purchase intention based on online product reviews in a mobile vs. web environment: An empirical investigation. Electronic Markets, 27(3), 211–224.

Gambhir, M., & Gupta, V. (2017). Recent automatic text summarization techniques: A survey. Artificial Intelligence Review, 47(1), 1–66.

Ghadimi, A., & Beigy, H. (2020). Deep submodular network: An application to multi-document summarization. Expert Systems with Applications, 152, 113392.

Ghodratnama, S., Beheshti, A., Zakershahrak, M., & Sobhanmanesh, F. (2020). Extractive document summarization based on dynamic feature space mapping. IEEE Access, 8, 139084–139095.

Hark, C., & Karcı, A. (2020). Karcı summarization: A simple and effective approach for automatic text summarization using Karcı entropy. Information Processing & Management, 57(3), 102187.

Ishigaki, T., Kamigaito, H., Takamura, H., & Okumura, M. (2019). Discourse-aware hierarchical attention network for extractive single-document summarization. In Proceedings of the International Conference on Recent Advances in Natural Language Processing (RANLP 2019) (pp. 497–506).

Jin, H., & Wan, X. (2020). Abstractive multi-document summarization via joint learning with single-document summarization. In Findings of the Association for Computational Linguistics: EMNLP (pp. 2545–2554).

Joshi, A., Fidalgo, E., Alegre, E., & Fernández-Robles, L. (2019). SummCoder: An unsupervised framework for extractive text summarization based on deep auto-encoders. Expert Systems with Applications, 129, 200–215.

Kanapala, A., Pal, S., & Pamula, R. (2019). Text summarization from legal documents: A survey. Artificial Intelligence Review, 51(3), 371–402.

Krishnaveni, P., & Balasundaram, S. R. (2017). Automatic text summarization by local scoring and ranking for improving coherence. In 2017 International Conference on Computing Methodologies and Communication (ICCMC) (pp. 59–64).

Lin, C. Y., & Hovy, E. (2003). Automatic evaluation of summaries using n-gram co-occurrence statistics. In Proceedings of the 2003 Human Language Technology Conference of the North American Chapter of the Association for Computational Linguistics (pp. 150–157).

Liu, Y., Titov, I., & Lapata, M. (2019). Single document summarization as tree induction. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers) (pp. 1745–1755).

Mallick, C., Das, A. K., Dutta, M., Das, A. K., & Sarkar, A. (2019). Graph-based text summarization using modified TextRank. In Soft computing in data analytics (pp. 137–146).

Mao, X., Yang, H., Huang, S., Liu, Y., & Li, R. (2019). Extractive summarization using supervised and unsupervised learning. Expert Systems with Applications, 133, 173–181.

Meraihi, Y., Gabis, A. B., Mirjalili, S., & Ramdane-Cherif, A. (2021). Grasshopper optimization algorithm: Theory, variants, and applications. IEEE Access, 9, 50001–50024.

Mirshojaee, S. H., Masoumi, B., & Zeinali, E. (2020). MAMHOA: A multi-agent meta-heuristic optimization algorithm with an approach for document summarization issues. Journal of Ambient Intelligence and Humanized Computing, 1, 1–16.

Mohd, M., Jan, R., & Shah, M. (2020). Text document summarization using word embedding. Expert Systems with Applications, 143, 112958.

Moratanch, N., & Chitrakala, S. (2017). A survey on extractive text summarization. In 2017 international conference on computer, communication and signal processing (ICCCSP) (pp. 1–6).

Nasar, Z., Jaffry, S. W., & Malik, M. K. (2019). Textual keyword extraction and summarization: State-of-the-art. Information Processing & Management, 56(6), 102088.

Nguyen, M. T., & Nguyen, M. L. (2017). Intra-relation or inter-relation exploiting social information for web document summarization. Expert Systems with Applications, 76, 71–84.

Rinaldi, A. M., & Russo, C. (2021). Using a multimedia semantic graph for web document visualization and summarization. Multimedia Tools and Applications, 80(3), 3885–3925.

Sanchez-Gomez, J. M., Vega-Rodríguez, M. A., & Pérez, C. J. (2020). A decomposition-based multi-objective optimization approach for extractive multi-document text summarization. Applied Soft Computing, 91, 106231.

Song, S., Huang, H., & Ruan, T. (2019). Abstractive text summarization using LSTM-CNN based deep learning. Multimedia Tools and Applications, 78(1), 857–875.

Sun, S., & Nenkova, A. (2019). The feasibility of embedding based automatic evaluation for single document summarization. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP) (pp. 1216–1221).

Van Lierde, H., & Chow, T. W. (2019). Query-oriented text summarization based on hypergraph transversals. Information Processing & Management, 56(4), 1317–1338.

Verma, N. K., Singh, V., Rajurkar, S., & Aqib, M. (2019). Fuzzy inference network with Mamdani fuzzy inference system. In Computational Intelligence: Theories, Applications and Future Directions, Volume I (pp. 375–388). Springer, Singapore.

Vinod, P., Safar, S., Mathew, D., Venugopal, P., Joly, L. M., & George, J. (2020). Fine-tuning the BERTSUMEXT model for clinical report summarization. In 2020 International Conference for Emerging Technology (INCET) (pp. 1–7). IEEE.

Acknowledgements

Not applicable.

Funding

There is no funding for this study.

Author information

Contributions

All the authors have participated in writing the manuscript and have revised the final version. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants and/or animals performed by any of the authors.

Consent to participate

There is no informed consent for this study.

Consent for publication

Not applicable.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Srivastava, A.K., Pandey, D. & Agarwal, A. Redundancy and coverage aware enriched dragonfly-FL single document summarization. Lang Resources & Evaluation 56, 1195–1227 (2022). https://doi.org/10.1007/s10579-022-09608-1