Abstract

Background

To support student mental health, school staff must have knowledge of evidence-based practices and the capacity to implement them. One approach used to address this challenge is a group-based telementoring model called Extension for Community Healthcare Outcomes (ECHO). In other applications (e.g., healthcare settings), ECHO has been shown to increase healthcare professionals’ self-efficacy and knowledge of evidence-based practices, leading to improved patient outcomes.

Objectives

This study examined the potential for ECHO to be used as a method for increasing school staff engagement and knowledge of evidence-based school mental health practices.

Methods

Using a quasi-experimental design, this study compared outcomes across two professional development experiences aimed at promoting school staff ability to provide evidence-based mental health services. School staff from four school districts participated in a school mental health training initiative. All participants (N = 57) had access to asynchronous, online mental health modules. A sub-sample (n = 33) was also offered monthly ECHO sessions.

Results

Tests of group difference in outcomes revealed significant increases in engagement with online learning (d = 0.58) and satisfaction (d = 0.82) for those who participated in ECHO as compared to those who did not. Knowledge about evidence-based practices was not significantly different between groups.

Conclusions

Results suggest that group-based telementoring may be a promising approach for improving engagement and satisfaction with training initiatives aimed at promoting evidence-based school mental health practices. However, further study of Project ECHO using experimental designs is needed to make causal inferences about its effect on provider outcomes.

Educators, policy makers, and researchers are increasingly interested in developing the capacity to support and promote the mental health of K-12 students. Despite this interest, structural barriers between researchers and school staff make it difficult for practitioners to access and use research-supported tools developed to promote student mental health. This research-to-practice gap has led to an increased emphasis on developing strategies to facilitate the translation of scientific findings from researchers to practitioners. This work has led to various strategies, including professional development (Bottiani et al., 2018) as well as coaching and mentorship programs (Becker et al., 2013; Bradshaw et al., 2018; Hershfeldt et al., 2012) developed to increase school staff knowledge and skills for supporting student mental health using research-supported practices. Some of these approaches have been shown to improve school staff knowledge and behavior (Kraft et al., 2018) and improve student academic and behavioral outcomes (Bradshaw et al., 2010). However, pragmatic and scientific challenges remain. For example, new research has found that providing a mentor or a coach can be prohibitively costly (Pas et al., 2020), and traditional professional development experiences are unlikely to lead to long-term learning (Hill et al., 2013; Taylor & Hamdy, 2013). This study examined one potential novel solution to these historic challenges by testing how a brief telementoring experience paired with asynchronous online learning might improve the effectiveness of a program designed to increase evidence-based school mental health (SMH) practices.

School Mental Health: Opportunities and Challenges

The growing interest in supporting student mental health stems, in part, from research showing that students tend to perform better academically, demonstrate fewer behavioral difficulties, and have improved long-term health outcomes when they are mentally healthy (Durlak et al., 2011). Addressing the mental health needs of students typically requires SMH professionals and school leaders to engage in a coordinated, multistep process to achieve these outcomes. This multistep process is commonly described in four stages: (a) defining goals, (b) assessing needs, (c) implementing strategies, and (d) evaluating and refining outcomes (CASEL, 2020). Defining goals at the outset ensures there is a shared vision among team members and increases buy-in among students, families, and staff by ensuring the specific needs of the school are met. Assessing needs and mapping available resources clarifies what is already available, and what areas need additional support. Action plans for implementation (with clearly established timelines, benchmarks, and defined roles for team members) help to ensure the plan moves forward. Finally, using data to monitor progress toward desired outcomes helps inform any adjustments or changes that should be made (Fagan et al., 2019; Markle et al., 2014; Sugai & Horner, 2009). To support this process, researchers have developed a variety of evidence-based tools that school staff can use to assess and promote student mental health (National Center for School Mental Health, 2019; Von der Embse et al., 2017). When these tools are used with fidelity, moderate to large improvements in student mental health are observed (Pas et al., 2020; Sugai & Horner, 2009). However, SMH professionals often report they lack expertise in using these research-supported practices (Mullen & Lambie, 2016).

The disconnect between available research-based strategies and practitioners’ use of evidence has led researchers to consider how to facilitate the transfer and uptake of research knowledge among school practitioners (Weist et al., 2003). One of the most popular approaches for facilitating this transfer of knowledge is providing professional development experiences designed to increase awareness about new approaches for supporting student mental health (Ball et al., 2010). Professional development experiences in schools typically consist of occasional short-term workshops or online learning experiences, which tend to focus on discrete topics (Darling-Hammond et al., 2009). Online learning is popular because it is a relatively low-cost, scalable approach for communicating research evidence to school practitioners. Unfortunately, these experiences tend to be insufficient for producing meaningful changes in professionals’ ability or confidence to apply research evidence because they provide limited opportunities to distribute practice over time or are disconnected from the professional challenges school staff are facing (Hill et al., 2013; Taylor & Hamdy, 2013). Due to these limitations, other researchers have been exploring ways to engage participants in online learning using blended learning approaches (e.g., enhancing asynchronous instruction with coaching or professional learning teams). In some cases, this has been successful (Akoglu et al., 2019; Downer et al., 2009); in other instances, this blended approach has not demonstrated changes in school staff engagement and satisfaction (LoCasale-Crouch et al., 2016). Several conditions have been identified as critical features of effective online learning, including content focus, active learning, coherence, sustained duration, and collective participation (Desimone, 2009). In other words, effective online learning can be characterized as learning that provides participants timely information about issues directly related to their professional practice. Challenges remain in the deployment of this type of learning when needs of staff are diverse and providers have limited time to devote to professional development experiences.

In response to known challenges associated with online learning, novel strategies may be useful for expanding access to information about evidence-based SMH care. One approach, which is the focus of the current study, is a telementoring model called Extension for Community Healthcare Outcomes (ECHO). Telementoring refers to a form of training and support practice that occurs virtually and emphasizes collaborative relationships established among individuals. The ECHO model is one type of telementoring that was developed to increase physicians’ knowledge about best practice care through group-based virtual mentoring. The structure of the ECHO model involves regularly scheduled, live, virtual meetings with university faculty experts (typically four or five, referred to as the hub team) and healthcare providers (typically 20–30, referred to as the spokes). Each meeting consists of short introductions (approximately 10 min); a brief presentation about a relevant topic related to evidence-based care, typically given by one of the hub team members (approximately 10 min); and a case presentation from one of the spokes. During the case presentation, a facilitator invites the hub team and spokes to ask clarifying questions followed by specific recommendations (approximately 40 min). Empirical studies of the ECHO model tend to show moderate positive changes in clinicians’ self-efficacy and knowledge about evidence-based practices as well as patient outcomes (Arora et al., 2010; Arora et al., 2011).

However, the ECHO model has largely been tested within medical settings (e.g., community health clinics) and, to our knowledge, no study to date has applied it to the provision of school-based mental health services. In addition, some studies have noted challenges with sufficiently teaching spokes evidence-based practices over a relatively brief period (Hostutler et al., 2020). In other words, although the brief nature of an ECHO session (60 minutes) is often considered a strength, it may not have sufficient duration for promoting change in learning. To address this, researchers have suggested that pairing ECHO telementoring experiences with other online learning (e.g., asynchronous learning) may be one approach for expanding capacity to teach evidence-based practices (Kearly et al., 2020).

Development and Background of Professional Learning for School Mental Health

This study was part of a larger, ongoing, statewide research-practice partnership in a southeastern state. School practitioners and university researchers collaboratively created the professional development experiences to increase equitable access to high-quality SMH services. Partnership activities were designed to remediate the barriers that high-need school districts often face when addressing student mental health needs (e.g., accessibility, availability, and acceptability issues; Bershad & Blaber, 2011; Blackstock et al., 2018). Partnership-provided supports included asynchronous online learning (referred to as modules) and the ECHO focused on aspects of SMH service provision.

Development of module content began in December 2019. First, through a series of meetings with senior district leaders (e.g., superintendents and directors of student affairs) and university faculty, professional development priorities related to SMH services were established. These topics included the use of evidence-based mental health interventions and supervisory practices of graduate student trainees. To develop content for these topics, researchers with expertise in the content areas worked with an online instruction designer who developed content according to best practices in online design and adult learning (e.g., modules were designed to be completed within an hour and included interactive components as well as multimedia content to increase relevance and engagement). Supplemental resources and tools relevant to module content were also provided. Furthermore, to scaffold learning, modules were structured such that participants had to complete them sequentially (i.e., participants were required to complete the module as well as the knowledge and satisfaction measures before accessing the next module). Beginning in August 2020, the partnership launched a staggered rollout of the modules focused on two topics: (a) evidence-based practices to support student mental health, which covered topics that included implementing tiered systems of support as well as behavioral and cognitive behavioral strategies for supporting student mental health (n = 5; launched August 2020); and (b) culturally responsive practices for supervising graduate student trainees, which included topics on establishing a supervisory relationship with attention to potential cultural differences and giving feedback (n = 3, launched December 2020). Participants completed modules at their own pace and were eligible for financial compensation upon successful completion of the training (given at the end of the school year).

Between September 2020 and December 2020, ECHO sessions occurred once monthly for 60 minutes (N = 4) via a secure video conferencing platform. The topic presentations covered content such as behavioral activation, motivational interviewing, and racial trauma in schools, while the case presentations focused on issues related to supporting transgender youth, interprofessional collaboration, and the mental health needs of high-achieving students. A single cohort of SMH staff as well as four hub team members participated in the ECHO. The hub team consisted of a school psychologist, school counselor, school social worker, and school nurse who were all currently employed as university trainers and who had research and applied experiences in SMH. Each ECHO session was supported by a facilitator (a licensed psychologist and school psychologist) who kept time and ensured ECHO sessions were implemented with fidelity (e.g., led introductions, initiated the topic and case presentations, ensured the environment remained productive, directed conversation between spokes and hubs, and made sure sessions progressed per the agenda). A technology support specialist was also available throughout each session to provide technical assistance to participants.

Current Study

We tested the hypothesis that pairing online learning modules with ECHO would be associated with increases in SMH staff engagement with the modules. Specifically, we hypothesized that ECHO participation would increase school practitioners’ engagement and satisfaction with program activities as well as their knowledge of evidence-based practices in SMH. The current study aims to answer three research questions. First, how does ECHO participation change SMH professionals’ engagement in asynchronous online learning modules designed to promote knowledge about evidence-based practices? Second, among professionals who completed the online modules, is ECHO participation associated with greater satisfaction with the modules? Third, among professionals who completed the online modules, is ECHO participation associated with greater knowledge about evidence-based practices upon completion of the modules?

We hypothesized that ECHO participation would be associated with positive changes across the three outcomes targeted. Specifically, based on prior work on professional learning communities (Annenberg Institute, 2004; Knapp, 2003), we hypothesized that the presence of an ECHO learning group would be associated with higher satisfaction with the asynchronous, online professional development modules. In addition, we hypothesized that the professionals taking part in the ECHO sessions would demonstrate increased engagement with the modules, and, subsequently, show greater knowledge of evidence-based SMH practices (Arora et al., 2010; Arora et al., 2011; Mazurek et al., 2019).

Method

This study occurred in the context of a larger U.S. Department of Education-funded practice grant to train SMH professionals. Consent to participate in the research aspects of the study was voluntary and was not required for participation in training activities. Analyses were conducted on preliminary outcomes collected during the first semester (i.e., August–December 2020) of training activities. A non-randomized, wait-list control trial design was used to adhere to requirements of the practice grant (e.g., preference for enhanced training activities was given to practitioners supervising current trainees and those who were prioritized by participating districts). It is important to consider that the present study occurred within the context of the COVID-19 pandemic. Although this may have impacted participants’ engagement with and response to study activities, we have no indication that participants in each study condition were impacted differently. Study methods are reported according to the Transparent Reporting of Evaluations with Nonrandomized Designs (TREND) guidelines (Des Jarlais et al., 2004).

Participants

Four high-need school districts within a southeastern state were invited to participate in partnership activities. Small- (those serving <10,000 students) and medium-sized (those serving 10,000–40,000 students) districts with higher than the state average of students living in poverty and/or with higher ratios of SMH providers to students than the state average were identified as high-need. The four participating districts were chosen through consultation with state department of education representatives and with consideration of proximity to the university for internship placement feasibility (see Table 1 for district demographics). During the summer of 2020 (June through August), researchers worked with state department of education representatives and district leaders to identify and recruit SMH professionals (i.e., school counselors, school nurses, school psychologists, and school social workers) for participation in partnership activities. Participation was also open to other school-based professionals, identified by district leaders, who may benefit from training in SMH (e.g., administrators and behavior analysts). Identified participants (n = 77) were formally recruited through emails and virtual informational sessions (e.g., “coffee chats”).

This study included a sample of 57 educational professionals (see Table 2) from participating school districts who provided informed consent in accordance with the research protocol approved by the University’s Institutional Review Board. The sample consisted primarily of SMH providers (88% of participants), defined as: school counselors (n = 31), school nurses (n = 8), school social workers (n = 7), and school psychologists (n = 4). There were also a small number of non-SMH professionals (i.e., school district leaders, behavior analysts, and health clinic assistants: n = 7). Participants were predominantly female (83%), not of Hispanic/Latinx origin (88%), White (85%), and held a master’s degree or higher (84%).

Procedures

The research activities described were approved by the University’s Institutional Review Board. School mental health professionals who participated were eligible for financial compensation upon successful completion of training activities. Participants from other professions (e.g., administrators) were not eligible for financial compensation.

Interventions

Online Learning Modules. All participants were invited to engage in asynchronous online learning modules via Canvas. Two sets of online learning modules (8 modules total) were released during the first semester of training activities. The first set (5 modules) was released in August of 2020 and focused on evidence-based practices to support student mental health (e.g., multi-tiered systems of support, data-based decision-making, and cognitive-behavioral therapy in school-based counseling). The second set (3 modules) was released in December of 2020 and focused on culturally responsive practices for supervising graduate student trainees (e.g., supervising across differences and giving difficult feedback). Modules included presentations of concepts (e.g., lectures/didactics) and active learning opportunities (e.g., examples, practice, and reflection activities). They were designed to be completed independently in 60–90 min at a rate of 1–2 modules per month. Each module was supplemented with additional resources and tools. Participants completed the modules sequentially. After watching a module, participants gained access to its rating scale and knowledge quiz, which they had to complete before accessing the next module.

ECHO Sessions. A subset of participants was also invited to take part in monthly ECHO sessions beginning at the start of the school year (August 2020; ECHO + modules condition). Four sessions were conducted during the first semester of study activities. ECHO sessions were group-based and occurred virtually via Zoom. Each session lasted for 60 min and followed the same protocol: 10 min for introductions, 15 min for the didactic presentation by university faculty with expertise in the topic (e.g., motivational interviewing, understanding racial trauma in schools), and 35 min for the case presentation by a school-based participant (10 min for the presentation, 10 min for clarifying questions, and 15 min for recommendations). After each session, recommendations provided during the case presentation were summarized and sent to the case presenter.

Assignment Method

Due to restrictions in the number of participants who could be involved in ECHO sessions (approximately 30), only a sub-sample of participants was invited to take part in the ECHO sessions beginning in September of 2020 (ECHO + modules condition). The remaining participants were invited to begin attending ECHO mid-year (January 2021; modules only condition). To adhere to restrictions outlined in the practice grant that funded this research, a non-randomized assignment method was used. First, SMH professionals who were currently supervising an intern or practicum student (n = 10) were assigned to the ECHO + modules condition (school counselors = 8, school psychologists = 1, and school social workers = 1). The remaining ECHO + modules participants were identified with consideration of four criteria: (a) representation from each district, (b) diversity of occupational backgrounds, (c) eligibility to supervise student trainees (i.e., two or more years of experience), and (d) district priorities. District leaders were asked to provide a preferred list of ECHO participants based on eligibility to supervise student trainees and district priorities. A total of 22 participants were identified by district leaders (school counselors = 14, school nurses = 4, school psychologists = 2, school social workers = 2). However, 11 were rejected for participation in the ECHO + modules condition due to overrepresentation of certain occupations. This left a total of 21 participants in the ECHO + modules condition and 11 in the modules only condition. The remaining participants (n = 25) were stratified by district and occupation and then assigned to conditions in consultation with district leaders with the goal of creating equivalent samples with respect to district representation and participant occupation. In total, 33 participants were assigned to the ECHO + modules condition, and 24 participants were assigned to the modules only condition (see Table 2).
Due to the nature of the present study, participants and intervention facilitators were not blind to study conditions.

Data Collection Procedures

All questionnaires were completed virtually via the online learning platform. Group equivalency measures were completed prior to the initiation of the online modules. Satisfaction and knowledge measures were completed after participants finished each corresponding module. Participants could not access the next module until they completed the satisfaction scale for the previous module and earned at least an 80% on the knowledge quiz. Knowledge scores used in the present study reflect participants’ first attempt on knowledge quizzes. Engagement data were also collected via the online learning platform (i.e., module completion data). Demographic and employment data were collected at two time points. At the start of the intervention, district leaders provided information regarding participants’ occupational role and the district in which they were employed. At the end of the intervention, additional demographic data (e.g., gender, race/ethnicity, and years of experience) were collected via Qualtrics. ECHO participation data were collected via intervention records (i.e., attendance sheets completed by a member of the research team).

Measures

Two types of data were collected: group equivalency and outcome data. Group equivalency data were utilized to determine the presence of bias across conditions due to non-randomized grouping procedures. Outcome data were collected to test the hypotheses that participation in the ECHO sessions would be associated with increased engagement, satisfaction, and knowledge in the online modules.

Group Equivalency Measures

To determine group equivalence with respect to SMH competency, three measures (two self-efficacy scales and one knowledge quiz) were administered at the start of the intervention.

Self-Efficacy. Clinical self-efficacy was measured using the self-efficacy as a therapist scale (Wilkerson & Ramirez Basco, 2014), which assessed self-reported competencies in therapeutic skills. Participants indicated on a scale from 1 (“Very Limited”) to 5 (“Very High”) their capacity for skills such as “developing a positive therapeutic alliance” and “implementing effective therapeutic interventions.” The six-item clinical self-efficacy scale demonstrated high internal consistency (α = 0.85). Supervision self-efficacy was measured by combining the competence/effectiveness subscale of the Psychotherapy Supervisor Development Scale (Watkins et al., 1995) and the multicultural competence subscale of the Counselor Supervisor Self-Efficacy Scale (Barnes, 2002; Murphy, 2017). Participants indicated on a scale from 1 (“Never”) to 7 (“Always”) how often they demonstrate each competency. Sample items include, “As a supervisor, I structure the supervision experience effectively,” and “I am able to facilitate a supervisee’s cultural awareness.” The eight-item supervision self-efficacy scale demonstrated high internal consistency (α = 0.94).

Clinical Knowledge. Clinical knowledge was assessed using a case study quiz developed by the researchers and evaluated for content validity by licensed researchers/practitioners with expertise in SMH. Participants answered questions based on information presented in a case scenario (i.e., case history and presenting concerns of a student referred for additional support). Sample questions included, “Given the information in this case study, which ACEs (i.e., Adverse Childhood Experiences) are explicitly described in the case study?” and “Based on the team’s hypothesis, which type of cognitive-behavioral intervention strategy would be most effective to address the concerns observed at school?” Case study items were screened and reduced based on initial results to ensure adequate reliability. The final, four-item quiz demonstrated adequate discrimination and difficulty (average point-biserial correlation = 0.37 and average p-value = 0.68; Bashkov & Clauser, 2019).
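The two item statistics reported above can be illustrated with a minimal sketch. It assumes that item difficulty is the proportion of respondents answering an item correctly and that discrimination is the uncorrected point-biserial correlation between an item and the total quiz score; the article does not state whether a corrected (item-rest) correlation was used. The function names and the response matrix are hypothetical and are not study data.

```python
from statistics import mean, pstdev


def item_difficulty(item_scores):
    """Proportion of respondents answering the item correctly (the item p-value)."""
    return mean(item_scores)


def point_biserial(item_scores, total_scores):
    """Pearson correlation between a 0/1 item and the total quiz score.

    Assumption: the total score includes the item itself (uncorrected form).
    """
    mx, my = mean(item_scores), mean(total_scores)
    sx, sy = pstdev(item_scores), pstdev(total_scores)
    if sx == 0 or sy == 0:
        return float("nan")  # undefined when an item or the totals do not vary
    cov = mean([(x - mx) * (y - my) for x, y in zip(item_scores, total_scores)])
    return cov / (sx * sy)


# Hypothetical responses: rows are respondents, columns are four quiz items.
responses = [
    [1, 1, 1, 1],
    [1, 1, 0, 1],
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [1, 1, 1, 0],
]
totals = [sum(row) for row in responses]

for j in range(4):
    item = [row[j] for row in responses]
    print(j + 1, round(item_difficulty(item), 2), round(point_biserial(item, totals), 2))
```

Averaging the per-item values across a quiz would give summary figures of the kind reported in the text.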

Study Outcomes

Engagement. Engagement with the asynchronous online learning modules was defined by the number of modules completed. Thus, engagement here refers to a type of behavioral engagement measured by participation in the modules. Module completion was determined using intervention records from the online learning platform, which can be considered an objective measure of participant engagement (Walton et al., 2017). For each module, participant engagement was coded as 0 (incomplete) or 1 (complete). At the time data analyses were completed, participants had access to eight learning modules, so total engagement scores for each participant ranged from 0 (no modules completed) to 8 (all available modules completed).

Satisfaction. Satisfaction with the online learning modules was measured using a single-item measure, developed by the researchers to gauge the appropriateness of module content. Single-item satisfaction measures have been shown to be reliable and valid (see, e.g., Cheung & Lucas, 2014). Further, single-item scales perform well in the conditions of the present study (e.g., small sample size and high homogeneity of multi-item satisfaction measures; Diamantopoulos et al., 2012). Participants responded to the statement, “The content of this module was relevant and applicable to my role as a school mental health professional,” using a five-point Likert scale (1 = strongly disagree, 2 = disagree, 3 = neither agree nor disagree, 4 = agree, and 5 = strongly agree). Total satisfaction scores for each participant were calculated as the average satisfaction rating of completed modules.

Knowledge. Knowledge of evidence-based SMH practices was measured using researcher-developed quizzes, administered at the conclusion of each module to assess participants’ understanding of the information presented. The quizzes were evaluated for content validity by an interdisciplinary team of experts in SMH. Each quiz originally consisted of 10 multiple-choice items (e.g., “Which of the following is an example of how to collect impact data?” and “What specific concerns have cognitive-behavioral approaches been found to be effective for addressing?”). Quizzes were screened and reduced based on initial results to ensure adequate reliability. The final knowledge measures ranged from five to eight items per module and demonstrated adequate discrimination (average point-biserial correlation = 0.38) and difficulty (average p-value = 0.85; Bashkov & Clauser, 2019). Cronbach’s alpha of the combined, 49-item knowledge measure was 0.89. Scores for each module were calculated as the percent of items answered correctly on the participants’ first attempt taking the knowledge quiz. Total knowledge scores for each participant were calculated as the average knowledge score of completed modules.
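The scoring rules for the three outcomes can be summarized in a short sketch. It assumes one record per completed module; the record layout and the key names `satisfaction` and `first_attempt` are hypothetical conveniences, not the structure of the study's data export.

```python
from statistics import mean


def score_participant(completed_modules):
    """Compute the three outcome scores for one participant.

    completed_modules: list of dicts, one per completed module, with
      'satisfaction'  -> rating on the 1-5 scale
      'first_attempt' -> list of 0/1 item scores from the first quiz attempt
    """
    engagement = len(completed_modules)  # number of modules completed
    if engagement == 0:
        # No completed modules: satisfaction and knowledge are treated as missing.
        return {"engagement": 0, "satisfaction": None, "knowledge": None}
    satisfaction = mean([m["satisfaction"] for m in completed_modules])
    knowledge = mean(
        [100 * sum(m["first_attempt"]) / len(m["first_attempt"]) for m in completed_modules]
    )
    return {"engagement": engagement, "satisfaction": satisfaction, "knowledge": knowledge}


# Hypothetical participant who completed two modules.
example = score_participant([
    {"satisfaction": 4, "first_attempt": [1, 1, 1, 1, 0]},
    {"satisfaction": 5, "first_attempt": [1, 1, 1, 1, 1, 1, 0, 0]},
])
print(example)
```

Returning `None` for participants with no completed modules mirrors treating their satisfaction and knowledge scores as missing, so they drop out of those two comparisons but still count toward engagement.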

Data Analytic Plan

All analyses were conducted using participant-level data and assessed mean differences across groups. Chi-squared tests (for categorical variables) and t-tests (for continuous variables) were conducted to determine the equivalence of the groups at the start of the intervention (i.e., whether the groups differed with respect to participants’ school division, position, or SMH competencies) using SPSS Version 21 (IBM Corporation, 2020). Post-intervention group difference analyses were conducted using overall engagement, satisfaction, and knowledge scores in R v. 4.0.2 (R Core Team, 2017). Overall engagement was calculated as the total number of modules completed for each participant. Overall satisfaction and knowledge scores were calculated as the average score for each participant across completed modules. If participants did not engage in any online modules, their total satisfaction and knowledge scores were considered missing and deleted in a listwise fashion. To examine group differences in outcomes, we used a Wilcoxon rank-sum test because it is robust to violations of normality and outcome data were either counts or not normally distributed. We also calculated Cohen’s d to provide a standardized measure of effect size: values between 0.2 and 0.5 were classified as “small” effects, values from 0.5 to 0.8 were classified as “medium”, and values above 0.8 were classified as “large” (Cohen, 1988).
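As an illustration of the two group-comparison statistics, the following sketch computes the rank-sum statistic W and Cohen's d from raw scores. It makes two assumptions: W follows the convention of R's `wilcox.test` (rank sum of the first group, using midranks for ties, minus n(n + 1)/2), and d uses the pooled standard deviation, since the article does not state which variant was computed. The data are hypothetical and p-values are not computed here.

```python
from math import sqrt
from statistics import mean, variance


def rank_sum_w(x, y):
    """W statistic: rank sum of x in the pooled sample minus n_x (n_x + 1) / 2."""
    pooled = sorted(list(x) + list(y))
    midrank = {}
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j] == pooled[i]:
            j += 1
        # Tied values occupy ranks i+1 .. j; assign their average.
        midrank[pooled[i]] = (i + 1 + j) / 2
        i = j
    rank_sum_x = sum(midrank[v] for v in x)
    return rank_sum_x - len(x) * (len(x) + 1) / 2


def cohens_d(x, y):
    """Standardized mean difference using the pooled sample standard deviation."""
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    return (mean(x) - mean(y)) / sqrt(pooled_var)


# Hypothetical module-completion counts for two small groups.
echo_plus_modules = [5, 6, 3, 8, 4]
modules_only = [2, 3, 0, 5, 1]
print(rank_sum_w(echo_plus_modules, modules_only))
print(round(cohens_d(echo_plus_modules, modules_only), 2))
```

Because W depends only on ranks, it is unaffected by the skew typical of count outcomes, whereas d is computed from means and standard deviations and is reported alongside it purely as a descriptive effect size.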

Then, we used a random intercept model to evaluate the change in module completion over the course of the four-month period. To estimate this model, we used the lme4 package (Bates et al., 2015) in R v. 4.0.2 and modeled the main effects and interaction for time and treatment condition (level 1) nested within participants (level 2). The engagement outcome, module completion, was measured as the cumulative number of modules completed each day throughout the four-month period. Treatment condition was modeled as a binary indicator with one representing those who participated in the ECHO treatment condition. The interaction between these terms (i.e., time and treatment status) assessed potential group differences in change over time in module engagement over the course of the four-month period.
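One way to write the random intercept model described above is given below. The notation is an assumption introduced for illustration and is not taken from the article: Y_{ti} is the cumulative number of modules completed by participant i on day t, Time_{ti} is the day index, and ECHO_i equals 1 for participants in the ECHO + modules condition and 0 otherwise.

$$
\begin{aligned}
Y_{ti} &= \beta_{0} + \beta_{1}\,\mathrm{Time}_{ti} + \beta_{2}\,\mathrm{ECHO}_{i} + \beta_{3}\,(\mathrm{Time}_{ti} \times \mathrm{ECHO}_{i}) + u_{0i} + e_{ti}, \\
u_{0i} &\sim N(0, \tau^{2}), \qquad e_{ti} \sim N(0, \sigma^{2}).
\end{aligned}
$$

Under this specification, u_{0i} is the participant-specific random intercept and β₃ captures the difference between conditions in the rate of module completion over time. In the formula syntax used by R mixed-model packages, this would correspond to something like `completed ~ time * echo + (1 | participant)`, where the variable names are hypothetical.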

Results

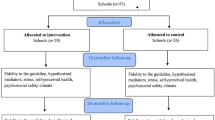

See Fig. 1 for the flow of participants through each stage of the study. Results provided support for the comparability of the modules only condition (n = 24) and the ECHO + modules condition (n = 33). There were no significant differences between the groups on personal (i.e., gender, ethnicity, race, and experience) or professional demographic characteristics, nor on the SMH competency measures (i.e., clinical skills, clinical self-efficacy, and supervision self-efficacy) at the beginning of training (see Table 2).

Descriptive statistics, reported in Table 3, show that participants reported high levels of satisfaction (on average, between 4.2 and 4.7) and knowledge (76–97% accuracy on their first attempt of the knowledge assessment) across each of the eight modules. Thus, after completing modules, most participants demonstrated an understanding of the evidence-based practices presented and agreed or strongly agreed that the content was relevant and applicable to their role in schools. Module engagement appeared to steadily decline over time, with a greater percentage of participants completing modules released earlier (e.g., between 62% and 71% completed modules 1–3) versus later (e.g., between 5% and 14% completed modules 6–8). Participants in the ECHO + modules group attended the ECHO sessions regularly, with attendance rates ranging from 85 to 97% each month.

Tests of group differences in outcomes revealed significant differences in module engagement and satisfaction (see Table 4 for descriptive statistics). Participants in the ECHO + modules group were observed to complete more modules on average (M = 4.03), as compared to the modules only group (M = 2.58). The Wilcoxon rank sum test indicated that these group differences were statistically significant (W = 517.5, p = .04); the standardized effect size fell in the medium range (d = 0.58). Likewise, the ECHO + modules group reported greater satisfaction with the modules (M = 4.51) as compared to the modules only group (M = 4.12; W = 274, p = .02); the standardized effect size fell in the large range (d = 0.82). Finally, we observed null effects for participants’ knowledge when comparing those in the ECHO + modules group (M = 85.16) to those in the modules only group (M = 88.77; W = 155.0, p = .36).

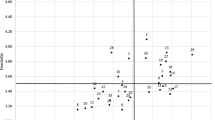

Results from the random intercept model revealed differences between groups in their module engagement over time (see Table 5). Specifically, results showed a significant interaction between time and treatment condition (b = 0.013, SE = 0.00, p < .001). Figure 2 presents the model-implied simple slopes by treatment condition, showing change in module completion over time by treatment condition (i.e., ECHO + modules or modules only). Light grey lines show loess lines for participants across both treatment conditions, illustrating individual variability in engagement. Taken together, there was a general, positive trend across both groups in terms of the number of modules completed over the course of the four-month period; however, those in the ECHO + modules condition were estimated to participate in the online learning modules at a faster rate than those in the modules only condition.
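
As a rough reading of the interaction coefficient, assume that time was coded in days and that the four-month window spans about 120 days (neither is stated explicitly). Ignoring any baseline difference captured by the treatment main effect, the model-implied gap in cumulative modules that opens between the conditions by the end of the period is then approximately:

```latex
\Delta_{\text{modules}} \approx b_{\text{time} \times \text{ECHO}} \times T = 0.013 \times 120 \approx 1.56
```

Under these assumptions, the figure is of the same order as the observed difference in mean modules completed between the groups (4.03 vs. 2.58).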

Discussion

Students in public school who are mentally healthy and develop positive social-emotional skills tend to demonstrate better academic, behavioral, and social outcomes (Durlak et al., 2011). This relationship, coupled with the rising mental health needs of students (Lo et al., 2020), has increased the focus on supporting student mental health within schools (Atkins et al., 2010; Hoover & Bostic, 2021). One challenge that schools face in supporting student mental health, and the focus of this study, is training and supporting school staff in the delivery of evidence-based mental health care. One-time professional development experiences are popular because they are easily scalable, but they tend to have limited or null impact on staff behavior (Hill et al., 2013; Lyons et al., 2016; Taylor & Hamdy, 2013). In this study, we examined the impact of a brief telementoring model, called ECHO, as a method for engaging school staff in SMH professional development activities. We paired traditional asynchronous online learning modules with ECHO and hypothesized that ECHO participation would increase module engagement and satisfaction as well as knowledge about evidence-based SMH practices as compared to participation in online learning modules alone. The results of this study provided partial support for the original hypotheses. Namely, we found that supplementing online learning modules with ECHO increased staff engagement and satisfaction with the modules. However, differences were not observed in knowledge gained (measured by content quizzes embedded at the end of each training module) by participants in each condition.

These results suggest that ECHO may be a promising approach for improving SMH training initiatives. ECHO participation increased participant engagement in, and satisfaction with, more traditional training opportunities (i.e., online learning). The effect of ECHO participation on engagement in online learning was positive and medium in size (d = 0.58). This significant increase in engagement is promising given the potential for ECHO to serve as a relatively low-cost and scalable implementation support. In addition, the effect size of ECHO participation on participant satisfaction (d = 0.82) in this study was larger than that reported in previous research (Hedges’ g = 0.12; Ebner & Gegenfurtner, 2019). Thus, ECHO participation may promote a greater breadth and depth of knowledge as participants accessed more learning opportunities.

These results suggest that the ECHO model may be a scalable approach for supporting evidence-based SMH practices. In this study, participants in the ECHO + modules condition participated in only four ECHO sessions (once monthly) for a total of four hours. The minimal additional time required for participants in the ECHO + modules condition is notable because of the medium-to-large, positive changes observed in their engagement and satisfaction with the online learning modules. One explanation for these differences is that ECHO supports school staff engagement and satisfaction by providing opportunities to reinforce topics covered in the online professional development experiences while connecting with peers in similar professional roles. Although this study did not test the mechanisms by which ECHO produced these positive effects, this explanation is consistent with prior research on ECHO indicating that participation was associated with increased self-efficacy and professional satisfaction (Arora et al., 2010, 2011; Mazurek et al., 2020) and reduced feelings of professional isolation (Arora et al., 2010).

The results of this study also suggest that pairing ECHO with asynchronous learning opportunities may be a novel strategy for engaging SMH staff in ways that are both acceptable and relevant to their professional duties. Participants in this study were SMH professionals employed in high-need school districts (i.e., those with high levels of poverty and/or low numbers of mental health staff). In these districts, access to professional development experiences and research-supported practices can be particularly difficult because districts may have fewer financial resources to support staff development, and greater demands on professionals’ time may limit their ability to engage in professional development experiences (Mullen et al., 2017). Thus, ECHO participation might provide a new way for professionals in under-resourced schools to share information and connect with each other.

Inconsistent with our original hypotheses, we found null differences between conditions on knowledge about evidence-based practices assessed at the end of each professional development module. This finding suggests that ECHO participation was not associated with knowledge about content covered in the online modules. Although the reasons for these null effects were not tested in the current study, it is possible that engaging in online modules alone (when completed by participants) has a similar effect on knowledge as combining online modules with ECHO. Conversely, the items on the knowledge quizzes may have been so specific to the module content that scores did not capture additional knowledge gained through ECHO participation. More research is needed to understand how (or if) ECHO uniquely contributes to knowledge gains.

Limitations and Future Directions

There are at least four limitations with the current study. First, participants were not randomly assigned to the treatment conditions. Instead, participants were assigned to conditions with consideration of priorities outlined in the training grant and identified by partner districts. Although efforts were taken to establish the pre-treatment equivalence of the groups across demographic and competency variables, other unmeasured variables may account for the group differences observed in this study and, as such, causality should not be inferred based on these results alone. The strongest approach to testing these causal mechanisms would involve random assignment of providers to various treatment conditions (Shadish et al., 2002).

Second, data were collected only on provider engagement, satisfaction, and knowledge resulting from the associated training activities. Although these outcomes reflect proximal outcome variables for training, they are not indicators of either behavioral change (i.e., changes in staff practices related to supporting student mental health) or improvements in student mental health outcomes. Future studies should explore how the set of training and support activities shift school professionals’ practices related to evidence-based provision of SMH services as well as measure the impact on student mental, behavioral, and academic outcomes.

Third, this study was primarily limited to SMH staff and a small number of other school leaders (e.g., district administrators). Yet, programmatic shifts in SMH provision require schools to engage in a coordinated effort across multidisciplinary teams of professionals to assess, service, and evaluate the mental health needs of students (Hoover & Bostic, 2021). Although the inferences presented in this study are applicable to the individual school staff participants, future studies should consider how to measure shifts in school-wide attitudes and practices related to the provision of SMH services.

Fourth, the generalizability of the study results is limited by the setting, sample, and context in which the study occurred. Because the sample was restricted to high-need school districts, results may not generalize to other districts with greater financial resources or more SMH staff. It may be the case, for example, that districts with greater financial resources offer more routine in-house professional development experiences and that school staff in these settings would find the district-provided experiences more acceptable and relevant to their professional practices as compared to those described in this study. In addition, the participants in our sample were overwhelmingly White, not of Hispanic/Latinx origin, and female. Although these demographics are comparable with national averages (see, e.g., American School Counselor Association, 2021), our results do not reflect the diversity of how individuals may respond to intervention activities based on socio-demographic factors. For example, individuals from underrepresented groups may experience group-based telementoring differently, and their experience may vary based on the demographic context of both their work environment and the ECHO groups. Finally, the present study occurred during the COVID-19 pandemic. Although we have no reason to believe the groups were impacted differently by the pandemic, the presence of pandemic-related procedures and stressors (e.g., distance learning, social distancing, trauma, grief, and economic insecurity) during the current study may impact the generalizability of these findings to future implementations of ECHO.

Conclusions

Our findings highlight the importance of using a prolonged, multidisciplinary, and responsive approach to address problems of practice and increase engagement with professional development. Although short-term professional development is a popular and often preferred mode of transmitting information to practitioners, it is largely ineffective because it does not allow participants to distribute learning over time, nor does it necessarily connect to problems of practice facing school staff (i.e., it may lack relevance; Darling-Hammond et al., 2009). To address this limitation, we found that, when asynchronous professional development experiences (online modules) are paired with group-based telementoring (ECHO), engagement and satisfaction with online training increase. This result may have been observed because participation in ECHO allows for ongoing multidisciplinary support from experts and practitioners while addressing specific problems of practice. Future research should evaluate levels of effectiveness related to the dosage and other methods of deployment (e.g., in-person, online modules, direct supervision, self-reflection).

References

Akoglu, K., Lee, H., & Kellogg, S. (2019). Participating in a MOOC and professional learning team: How a blended approach to professional development makes a difference. Journal of Technology and Teacher Education, 27(2), 129–163

American School Counselor Association (2021). ASCA research report: State of the profession 2020. https://www.schoolcounselor.org/getmedia/bb23299b-678d-4bce-8863-cfcb55f7df87/2020-State-of-the-Profession.pdf

Annenberg Institute (2004). Professional development strategies: Professional learning communities/instructional coaching. Brown University. https://www.annenberginstitute.org/publications/professional-development-strategies-professional-learning-communitiesinstructional

Arora, S., Kalishman, S., Thornton, K., Dion, D., Murata, G., Deming, P. … Pak, W. (2010). Expanding access to hepatitis C virus treatment--Extension for Community Healthcare Outcomes (ECHO) project: Disruptive innovation in specialty care. Hepatology, 52(3), 1124–1133. https://doi.org/10.1002/hep.23802

Arora, S., Thornton, K., Murata, G., Deming, P., Kalishman, S., Dion, D. … Qualls, C. (2011). Outcomes of treatment for hepatitis C virus infection by primary care providers. New England Journal of Medicine, 364(23), 2199–2207. https://doi.org/10.1056/NEJMoa1009370

Atkins, M. S., Hoagwood, K. E., Kutash, K., & Seidman, E. (2010). Toward the integration of education and mental health in schools. Administration and Policy in Mental Health and Mental Health Services Research, 37(1–2), 40–47. https://doi.org/10.1007/s10488-010-0299-7

Ball, A., Anderson-Butcher, D., Mellin, E. A., & Green, J. H. (2010). A cross-walk of professional competencies involved in expanded school mental health: An exploratory study. School Mental Health, 2, 114–124. https://doi.org/10.1007/s12310-010-9039-0

Barnes, K. L. (2002). Development and initial validation of a measure of counselor supervisor self-efficacy (Publication No. 3045772) [Doctoral dissertation, Syracuse University]. ProQuest Dissertations & Theses Global

Bashkov, B. M., & Clauser, J. C. (2019). Determining item screening criteria using cost-benefit analysis. Practical Assessment, Research, and Evaluation, 24(2), 1–9. https://doi.org/10.7275/xsqm-8839

Bates, D., Mächler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1–48. https://doi.org/10.18637/jss.v067.i01

Becker, K. D., Bradshaw, C. P., Domitrovich, C., & Ialongo, N. S. (2013). Coaching teachers to improve implementation of the good behavior game. Administration and Policy in Mental Health and Mental Health Services Research, 40(6), 482–493. https://doi.org/10.1007/s10488-013-0482-8

Bershad, C., & Blaber, C. (2011). Realizing the promise of the whole-school approach to children’s mental health: A practical guide for schools. Education Development Center, Inc. http://www.promoteprevent.org/realizing-promise-whole-school-approach-childrens-mental-health-practical-guide-schools

Blackstock, J., Chae, K. B., Mauk, G. W., & McDonald, A. (2018). Achieving access to mental health care for school-aged children in rural communities. The Rural Educator, 39(1), 12–25. https://doi.org/10.35608/ruraled.v39i1.212

Bottiani, J. H., Larson, K. E., Debnam, K. J., Bischoff, C. M., & Bradshaw, C. P. (2018). Promoting educators’ use of culturally responsive practices: A systematic review of inservice interventions. Journal of Teacher Education, 69(4), 367–385. https://doi.org/10.1177/0022487117722553

Bradshaw, C. P., Mitchell, M. M., & Leaf, P. J. (2010). Examining the effects of schoolwide positive behavioral interventions and supports on student outcomes: Results from a randomized controlled effectiveness trial in elementary schools. Journal of Positive Behavior Interventions, 12(3), 133–148. https://doi.org/10.1177/1098300709334798

Bradshaw, C. P., Pas, E. T., Bottiani, J. H., Debnam, K. J., Reinke, W. M., Herman, K. C., & Rosenberg, M. S. (2018). Promoting cultural responsivity and student engagement through double check coaching of classroom teachers: An efficacy study. School Psychology Review, 47(2), 118–134. https://doi.org/10.17105/SPR-2017-0119.V47-2

CASEL (2020). Evidence-based social and emotional learning programs: CASEL criteria updates and rationale. https://casel.org/11_casel-program-criteria-rationale/

Cheung, F., & Lucas, R. E. (2014). Assessing the validity of single-item life satisfaction measures: results from three large samples. Quality of Life Research, 23(10), 2809–2818. https://doi.org/10.1007/s11136-014-0726-4

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Routledge. https://doi.org/10.4324/9780203771587

Darling-Hammond, L., Wei, R. C., Andree, A., Richardson, N., & Orphanos, S. (2009). Professional learning in the learning profession: A status report on teacher development in the United States and abroad. Stanford University: National Staff Development Council and The School Redesign Network. https://edpolicy.stanford.edu/sites/default/files/publications/professional-learning-learning-profession-status-report-teacher-development-us-and-abroad.pdf

Desimone, L. M. (2009). Improving impact studies of teachers’ professional development: Toward better conceptualizations and measures. Educational Researcher, 38(3), 181–199. https://doi.org/10.3102/0013189X08331140

Des Jarlais, D. C., Lyles, C., Crepaz, N., & Trend Group. (2004). Improving the reporting quality of nonrandomized evaluations of behavioral and public health interventions: The TREND statement. American Journal of Public Health, 94(3), 361–366

Diamantopoulos, A., Sarstedt, M., Fuchs, C., Wilczynski, P., & Kaiser, S. (2012). Guidelines for choosing between multi-item and single-item scales for construct measurement: A predictive validity perspective. Journal of the Academy of Marketing Science, 40(3), 434–449. https://doi.org/10.1007/s11747-011-0300-3

Downer, J. T., Kraft-Sayre, M. E., & Pianta, R. C. (2009). Ongoing, web-mediated professional development focused on teacher–child interactions: Early childhood educators’ usage rates and self-reported satisfaction. Early Education and Development, 20(2), 321–345. https://doi.org/10.1080/10409280802595425

Durlak, J. A., Weissberg, R. P., Dymnicki, A. B., Taylor, R. D., & Schellinger, K. (2011). The impact of enhancing students’ social and emotional learning: A meta-analysis of school-based universal interventions. Child Development, 82, 405–432. https://doi.org/10.1111/j.1467-8624.2010.01564.x

Ebner, C., & Gegenfurtner, A. (2019). Learning and satisfaction in webinar, online, and face-to-face instruction: A meta-analysis. Frontiers in Education, 4(92), 1–11. https://doi.org/10.3389/feduc.2019.00092

Fagan, A. A., Bumbarger, B. K., Barth, R. P., Bradshaw, C. P., Cooper, B. R., Supplee, L. H., & Walker, D. K. (2019). Scaling up evidence-based interventions in US public systems to prevent behavioral health problems: Challenges and opportunities. Prevention Science, 20, 1147–1168. https://doi.org/10.1007/s11121-019-01048-8

Hershfeldt, P. A., Pell, K., Sechrest, R., Pas, E. T., & Bradshaw, C. P. (2012). Lessons learned coaching teachers in behavior management: The PBIS plus coaching model. Journal of Educational and Psychological Consultation, 22(4), 280–299. https://doi.org/10.1080/10474412.2012.731293

Hill, H. C., Beisiegel, M., & Jacob, R. (2013). Professional development research: Consensus, crossroads, and challenges. Educational Researcher, 42(9), 476–487. https://doi.org/10.3102/0013189X13512674

Hoover, S., & Bostic, J. (2021). Schools as a vital component of the child and adolescent mental health system. Psychiatric Services, 72, 37–48. https://doi.org/10.1176/appi.ps.201900575

Hostutler, C. A., Valleru, J., Maciejewski, H. M., Hess, A., Gleeson, S. P., & Ramtekkar, U. P. (2020). Improving pediatrician’s behavioral health competencies through the Project ECHO teleconsultation model. Clinical Pediatrics, 59(12), 1049–1057. https://doi.org/10.1177/0009922820927018

IBM Corporation. (2020). IBM SPSS Statistics for Macintosh, version 27.0. IBM Corp

Kearly, A., Oputa, J., & Harper-Hardy, P. (2020). Telehealth: An opportunity for state and territorial health agencies to improve access to needed health services. Journal of Public Health Management and Practice, 26(1), 86–90. https://doi.org/10.1097/phh.0000000000001115

Knapp, M. S. (2003). Professional development as a policy pathway. Review of Research in Education, 27, 109–157. https://doi.org/10.3102/0091732X027001109

Kraft, M. A., Blazar, D., & Hogan, D. (2018). The effect of teacher coaching on instruction and achievement: A meta-analysis of the causal evidence. Review of Educational Research, 88(4), 547–588. https://doi.org/10.3102/0034654318759268

Lo, C. B., Bridge, J. A., Shi, J., Ludwig, L., & Stanley, R. M. (2020). Children’s mental health emergency department visits: 2007–2016. Pediatrics, 145(6), https://doi.org/10.1542/peds.2019-1536

LoCasale-Crouch, J., Hamre, B., Roberts, A., & Neesen, K. (2016). If you build it, will they come? Predictors of teachers’ participation in and satisfaction with the Effective Classroom Interactions online courses. International Review of Research in Open and Distributed Learning, 17(1), 100–122. https://doi.org/10.19173/irrodl.v17i1.2182

Lyons, K., Young, T., Hanley, J., & Stolk, P. (2016). Professional development barriers and benefits in a tourism knowledge economy. International Journal of Tourism Research, 18(4), 319–326. https://doi.org/10.1002/jtr.2051

Markle, R. S., Splett, J. W., Maras, M. A., & Weston, K. J. (2014). Effective school teams: Benefits, barriers, and best practices. In M. D. Weist, N. A. Lever, C. P. Bradshaw, & J. Sarno Owens (Eds.), Handbook of school mental health: Research, training, practice, and policy (2nd ed., pp. 59–73). Springer. https://doi.org/10.1007/978-1-4614-7624-5_5

Mazurek, M. O., Curran, A., Burnette, C., & Sohl, K. (2019). ECHO Autism STAT: Accelerating early access to autism diagnosis. Journal of Autism and Developmental Disorders, 49(1), 127–137. https://doi.org/10.1007/s10803-018-3696-5

Mazurek, M. O., Parker, R. A., Chan, J., Kuhlthau, K., & Sohl, K. (2020). Effectiveness of the extension for community health outcomes model as applied to primary care for autism: A partial stepped-wedge randomized clinical trial. JAMA Pediatrics, 174(5), https://doi.org/10.1001/jamapediatrics.2019.6306

Mullen, P. R., Blount, A. J., Lambie, G. W., & Chae, N. (2017). School counselors’ perceived stress, burnout, and job satisfaction. Professional School Counseling, 21(1), https://doi.org/10.1177/2156759X18782468

Mullen, P. R., & Lambie, G. W. (2016). The contribution of school counselors’ self-efficacy to their programmatic service delivery. Psychology in the Schools, 53(3), 306–320. https://doi.org/10.1002/pits.21899

Murphy, B. (2017). Psychometric evaluation of the Counselor Supervisor Self-Efficacy Scale (Publication No. 1032303823) [Doctoral dissertation, University of Missouri-St. Louis]. Institutional Repository Library. https://irl.umsl.edu/dissertation/717

National Center for School Mental Health (2019). SHAPE. https://www.theshapesystem.com/

Pas, E. T., Lindstrom Johnson, S., Alfonso, Y. N., & Bradshaw, C. P. (2020). Tracking time and resources associated with systems change and the adoption of evidence-based programs: The “hidden costs” of school-based coaching. Administration and Policy in Mental Health and Mental Health Services Research, 47(5), 720–734. https://doi.org/10.1007/s10488-020-01039-w

R Core Team (2017). R: A language and environment for statistical computing. R Foundation for Statistical Computing. Vienna, Austria. https://www.R-project.org/

Shadish, W. R., Cook, T. D., & Campbell, D. T. (2002). Experimental and quasi-experimental designs for generalized causal inference. Houghton, Mifflin, and Company

Sugai, G., & Horner, R. H. (2009). Responsiveness-to-intervention and school-wide positive behavior supports: Integration of multi-tiered system approaches. Exceptionality, 17, 223–237. https://doi.org/10.1080/09362830903235375

Taylor, D. C., & Hamdy, H. (2013). Adult learning theories: Implications for learning and teaching in medical education: AMEE guide no. 83. Medical Teacher, 35(11), e1561–e1572. https://doi.org/10.3109/0142159X.2013.828153

Walton, H., Spector, A., Tombor, I., & Michie, S. (2017). Measures of fidelity of delivery of, and engagement with, complex, face-to-face health behaviour change interventions: A systematic review of measure quality. British Journal of Health Psychology, 22(4), 872–903. https://doi.org/10.1111/bjhp.12260

Watkins, C. E., Schneider, L. J., Hayes, J., & Nieberding, R. (1995). Measuring psychotherapy supervisor development: An initial effort at scale development and validation. The Clinical Supervisor, 13(1), 77–90. https://doi.org/10.1300/j001v13n01_06

Weist, M. D., Evans, S. W., & Lever, N. A. (Eds.). (2003). Handbook of school mental health: Advancing practice and research. Springer. https://doi.org/10.1007/978-0-387-73313-5

Wilkerson, A., & Ramirez Basco, M. (2014). Therapists’ self-efficacy for CBT dissemination: Is supervision the key? Journal of Psychology & Psychotherapy, 4(3), https://doi.org/10.4172/2161-0487.1000146

Virginia Department of Education (2021). Virginia School Quality Profiles. https://schoolquality.virginia.gov/

Von der Embse, N., Kilgus, S., Iaccarino, S., & Levi-Nielsen, S. (2017). Screening for student mental health risk: Diagnostic accuracy, measurement invariance, and predictive validity of the social, academic, and emotional behavior risk screener-student rating scale (SAEBRS-SRS). School Mental Health, 9, 273–283. https://doi.org/10.1007/s12310-017-9214-7

Funding

United States Department of Education, Office of Elementary & Special Education Grant #S184X190023.

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Cite this article

Lyons, M.D., Taylor, J.V., Zeanah, K.L. et al. Supporting School Mental Health Providers: Evidence from a Short-Term Telementoring Model. Child Youth Care Forum 52, 65–84 (2023). https://doi.org/10.1007/s10566-022-09673-1

DOI: https://doi.org/10.1007/s10566-022-09673-1