Abstract

Paraphrase generation is a fundamental problem in natural language processing. Owing to the considerable success of transfer learning, the "pre-training → fine-tuning" approach has become the standard. However, popular general-purpose pre-training methods typically require extensive datasets and substantial computational resources, and the available pre-trained models are constrained by fixed architectures and sizes. The authors propose a simple and efficient pre-training approach designed specifically for paraphrase generation. It noticeably improves paraphrase quality and substantially enhances general-purpose models. The approach uses existing public data together with new data generated by large language models. The authors investigate how this pre-training procedure affects neural networks of various architectures and demonstrate its effectiveness across all of them.

References

X. Han, Z. Zhang, N. Ding, et al., “Pre-trained models: Past, present and future,” AI Open, Vol. 2, 225–250 (2021). https://doi.org/10.1016/j.aiopen.2021.08.002.

W. Zhao, L. Wang, K. Shen, R. Jia, and J. Liu, “Improving grammatical error correction via pre-training a copy-augmented architecture with unlabeled data,” arXiv:1903.00138v3 [cs.CL], 11 Jun (2019). https://doi.org/10.48550/arXiv.1903.00138.

K. Omelianchuk, V. Atrasevych, A. Chernodub, and O. Skurzhanskyi, “GECToR — Grammatical error correction: Tag, not rewrite,” arXiv:2005.12592v2 [cs.CL], 29 May (2020). https://doi.org/10.48550/arXiv.2005.12592.

J. Kasai, N. Pappas, H. Peng, J. Cross, and N. A. Smith, “Deep encoder, shallow decoder: Reevaluating non-autoregressive machine translation,” arXiv:2006.10369v4 [cs.CL], 24 Jun (2021). https://doi.org/10.48550/arXiv.2006.10369.

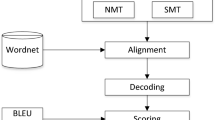

J. Wieting and K. Gimpel, “ParaNMT-50M: Pushing the limits of paraphrastic sentence embeddings with millions of machine translations,” arXiv:1711.05732v2 [cs.CL], 20 Apr (2018). https://doi.org/10.48550/arXiv.1711.05732.

L. Ouyang, J. Wu, X. Jiang, et al., “Training language models to follow instructions with human feedback,” arXiv:2203.02155v1 [cs.CL], 4 Mar (2022). https://doi.org/10.48550/arXiv.2203.02155.

T.-Y. Lin, M. Maire, S. Belongie, L. Bourdev, R. Girshick, J. Hays, P. Perona, D. Ramanan, C. L. Zitnick, and P. Doll_r, “Microsoft COCO: Common objects in context,” arXiv:1405.0312v3 [cs.CV], 21 Feb (2015). https://doi.org/10.48550/arXiv.1405.0312.

Z. Wang, W. Hamza, and R. Florian, “Bilateral multi-perspective matching for natural language sentences,” arXiv:1702.03814v3 [cs.AI], 14 Jul (2017). https://doi.org/10.48550/arXiv.1702.03814.

K. Papineni, S. Roukos, T. Ward, and W.-J. Zhu, “BLEU: A method for automatic evaluation of machine translation,” in: Proc. 40th Annual Meeting on Association for Computational Linguistics (Philadelphia, Pennsylvania, USA, July 7–12, 2002), Association for Computational Linguistics (2002), pp. 311–318. https://doi.org/10.3115/1073083.1073135.

M. Snover, B. Dorr, R. Schwartz, L. Micciulla, and J. Makhoul, “A study of translation edit rate with targeted human annotation,” in: Proc. 7th Conf. of the Association for Machine Translation in the Americas: Technical Papers (Cambridge, Massachusetts, USA, August 8–12, 2006), Association for Machine Translation in the Americas (2006), pp. 223–231. URL: https://aclanthology.org/2006.amta-papers.25.

A. Lavie and A. Agarwal, “METEOR: An automatic metric for MT evaluation with high levels of correlation with human judgments,” in: Proc. Second Workshop on Statistical Machine Translation, (Prague, Czech Republic, June 2007), Association for Computational Linguistics (2007), pp. 228–231. URL: https://aclanthology.org/W07-0734.pdf.

S. Wubben, A. van den Bosch, and E. Krahmer, “Paraphrase generation as monolingual translation: Data and evaluation,” in: Proc. Sixth Intern. Natural Language Generation Conf. (Trim, Co. Meath, Ireland, July 7–9, 2010), Association for Computational Linguistics (2010). URL: https://aclanthology.org/W10-4223.pdf.

M. Post, “A call for clarity in reporting BLEU scores,” in: Proc. the Third Conf. on Machine Translation: Research Paper (Brussels, Belgium, 31 October – 1 November, 2018), Association for Computational Linguistics, (2018), pp. 186–191. https://doi.org/10.18653/v1/W18-64019.

J. Gehring, M. Auli, D. Grangier, D. Yarats, and Y. N. Dauphin, “Convolutional sequence to sequence learning,” in: Proc. 34th Intern. Conf. on Machine Learning (Sydney, Australia, August 6–11, 2017), PMLR, Vol. 70 (2017), pp. 1243–1252.

S. Hochreiter and J. Schmidhuber, “Long short-term memory,” Neural Comput., Vol. 9, Iss. 8, 1735–1780 (1997). https://doi.org/10.1162/neco.1997.9.8.1735.

A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł.. Kaiser, and I. Polosukhin, “Attention is all you need,” in: Proc. 31st Conf. on Neural Information Processing Systems (NIPS 2017) (Long Beach, CA, USA, December 4–9, 2017), Advances in Neural Information Processing Systems, Vol. 30 (2017), pp. 5998–6008. https://doi.org/10.48550/arXiv.1706.03762.

B. Fabre, T. Urvoy, J. Chevelu, and D. Lolive, “Neural-driven search-based paraphrase generation,” in: Proc. 16th Conf. of the European Chapter of the Association for Computational Linguistics: Main Volume, Virtual Event, April 19–23 (2021), pp. 2100–2111. https://doi.org/10.18653/v1/2021.eacl-main.180.

A. Prakash, S. A. Hasan, K. Lee, V. Datla, A. Qadir, J. Liu, and O. Farri, “Neural paraphrase generation with stacked residual LSTM networks,” arXiv:1610.03098v3 [cs.CL], 13 Oct (2016) https://doi.org/10.48550/arXiv.1610.03098.

N. Miao, H. Zhou, L. Mou, R. Yan, and L. Li, “CGMH: Constrained sentence generation by Metropolis–Hastings sampling,” in: Proc. 33rd AAAI Conf. on Artificial Intelligence (AAAI-19) (Honolulu, Hawaii, USA, 27 January – 1 February 2019), Association for the Advancement of Artificial Intelligence, Vol. 33, No. 01 (2019), pp. 6834–6842. https://doi.org/10.1609/aaai.v33i01.33016834.

E. Pavlick, P. Rastogi, J. Ganitkevitch, B. Van Durme, and C. Callison-Burch, “PPDB 2.0: Better paraphrase ranking, fine-grained entailment relations, word embeddings, and style classification,” in: Proc. 53rd Annual Meeting of the Association for Computational Linguistics and the 7th Intern. Joint Conf. on Natural Language Processing (Beijing, China, July 26–31, 2015), Vol. 2: Short Papers, Association for Computational Linguistics (2015), pp. 425–430. https://doi.org/10.3115/v1/P15-2070.

M. Lewis, Y. Liu, N. Goyal, M. Ghazvininejad, A. Mohamed, O. Levy, V. Stoyanov, and L. Zettlemoyer, “BART: Denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension,” in: Proc. 58th Annual Meeting of the Association for Computational Linguistics (Online, July, 2020), Association for Computational Linguistics (2020), pp. 7871–7880. https://doi.org/10.18653/v1/2020.acl-main.703.

Y. Tay, M. Dehghani, J. Gupta, D. Bahri, V. Aribandi, Z. Qin, and D. Metzler, “Are pre-trained convolutions better than pre-trained transformers?” in: Proc. 59th Annual Meeting of the Association for Computational Linguistics and the 11th Intern. Joint Conf. on Natural Language Processing (Online, August, 2021), Vol. 1: Long Papers, Association for Computational Linguistics (2021), pp. 4349–4359. URL: https://aclanthology.org/2021.acl-long.335.pdf.

Y. Fu, Y. Feng, and J. P. Cunningham, “Paraphrase generation with latent bag of words,” arXiv:2001.01941v1 [cs.CL], 7 Jan (2020). https://doi.org/10.48550/arXiv.2001.01941.

K. Krishna, J. Wieting, and M. Iyyer, “Reformulating unsupervised style transfer as paraphrase generation,” in: Proc. 2020 Conf. on Empirical Methods in Natural Language Processing (EMNLP) (Online, November 16–20, 2020), Association for Computational Linguistics (2020), pp. 737–762. https://doi.org/10.18653/v1/2020.emnlp-main.55.

T. Goyal and G. Durrett, “Neural syntactic preordering for controlled paraphrase generation,” in: Proc. 58th Annual Meeting of the Association for Computational Linguistics (Online, July 5–10, 2020), Association for Computational Linguistics (2020), pp. 238–252. https://doi.org/10.18653/v1/2020.acl-main.22.

T. Hosking and M. Lapata, “Factorising meaning and form for intent-preserving paraphrasing,” in: Proc. 59th Annual Meeting of the Association for Computational Linguistics and the 11th Intern. Joint Conf. on Natural Language Processing (Online, August 1–6, 2021), Vol. 1: Long Papers, Association for Computational Linguistics (2021), pp. 1405–1418. https://doi.org/10.18653/v1/2021.acl-long.112.

Y. Fu, C. Tan, B. Bi, M. Chen, Y. Feng, and A. M. Rush, “Latent template induction with Gumbel-CRFs,” in: Advances in Neural Information Processing Systems 33: Proc. Thirty-fourth Conference on Neural Information Processing Systems (NeurIPS2020) (Online, December 6–12, 2020), Vol. 25, Neural Information Processing Systems Foundation, Inc. (NeurIPS) (2020). https://doi.org/10.48550/arXiv.2011.14244.

Additional information

Translated from Kibernetyka ta Systemnyi Analiz, No. 2, March–April, 2024, pp. 3–12; DOI https://doi.org/10.34229/KCA2522-9664.24.2.1.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Skurzhanskyi, O.H., Marchenko, O.O. & Anisimov, A.V. Specialized Pre-Training of Neural Networks on Synthetic Data for Improving Paraphrase Generation. Cybern Syst Anal 60, 167–174 (2024). https://doi.org/10.1007/s10559-024-00658-7

DOI: https://doi.org/10.1007/s10559-024-00658-7