Abstract

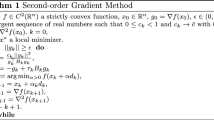

In this article we address the problem of minimizing a strictly convex quadratic function using a novel iterative method. The new algorithm is based on Nesterov’s well-known accelerated gradient method. At each iteration of our scheme, the new point is computed by a line search along a direction given by a linear combination of three terms, whose parameters are chosen so that the residual norm is minimized at each step of the process. We establish the linear convergence of the proposed method and show that its convergence rate factor is analogous to the one available for other gradient methods. Finally, we present preliminary numerical results on several sets of synthetic and real strictly convex quadratic problems, showing that the proposed method is more efficient than a wide collection of state-of-the-art gradient methods and that it is competitive with the conjugate gradient method in terms of CPU time and number of iterations.
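To make the idea of choosing the combination parameters by residual-norm minimization concrete, the following is a minimal sketch and not the algorithm of this article. It assumes the quadratic f(x) = ½ xᵀAx − bᵀx with A symmetric positive definite, so that the gradient (residual) is g = Ax − b. For illustration only, the search direction combines two terms, the current gradient and the momentum term x_k − x_{k−1}, rather than the three terms used by the proposed method, which the abstract does not specify. The function names `min_residual_coeffs` and `min_residual_momentum` are hypothetical.

```python
import numpy as np


def min_residual_coeffs(A, g, D):
    """Return c minimizing ||g + A @ D @ c||_2, where g = A x - b.

    The columns of D are the candidate direction terms (an illustrative
    choice, not the terms used in the article).
    """
    AD = A @ D
    # Least-squares solve of AD @ c ~ -g; lstsq also copes with
    # nearly parallel columns by returning a minimum-norm solution.
    c, *_ = np.linalg.lstsq(AD, -g, rcond=None)
    return c


def min_residual_momentum(A, b, x0, tol=1e-8, max_iter=500):
    """Illustrative gradient-plus-momentum iteration for SPD A.

    Each step moves along a linear combination of the gradient and the
    previous displacement, with coefficients chosen so that the norm of
    the new residual is as small as possible.
    """
    x = np.asarray(x0, dtype=float).copy()
    x_prev = x.copy()
    g = A @ x - b
    iters = 0
    while np.linalg.norm(g) > tol and iters < max_iter:
        # No previous displacement exists at the first iteration.
        cols = [g] if iters == 0 else [g, x - x_prev]
        D = np.column_stack(cols)
        c = min_residual_coeffs(A, g, D)
        x_prev, x = x, x + D @ c
        g = A @ x - b
        iters += 1
    return x, iters
```

Calling `min_residual_momentum(A, b, np.zeros(n))` returns the approximate minimizer together with the number of iterations performed. Because the gradient is always one of the columns of `D`, each step reduces the residual norm at least as much as a single minimal gradient step would.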

Notes

The SuiteSparse Matrix Collection toolbox is available at https://sparse.tamu.edu/.

Acknowledgements

This work was supported in part by CONACYT (Mexico), Grants 258033 and 256126. The authors would like to thank two anonymous referees for their useful suggestions.

Additional information

Communicated by Lothar Reichel.

About this article

Cite this article

Oviedo, H., Dalmau, O. & Herrera, R. An accelerated minimal gradient method with momentum for strictly convex quadratic optimization. Bit Numer Math 62, 591–606 (2022). https://doi.org/10.1007/s10543-021-00886-9

DOI: https://doi.org/10.1007/s10543-021-00886-9