Abstract

A remarkable number of different numerical algorithms can be understood and analyzed using the concepts of symmetric spaces and Lie triple systems, which are well known in differential geometry from the study of spaces of constant curvature and their tangent spaces. This theory can be used to unify a range of different topics, such as polar-type matrix decompositions, splitting methods for the computation of the matrix exponential, composition of self-adjoint numerical integrators and dynamical systems with symmetries and reversing symmetries. The thread of this paper is the following: involutive automorphisms on groups induce a factorization at the group level and a splitting at the algebra level. In this paper we give an introduction to the mathematical theory behind these constructions and review recent results. Furthermore, we present a new Yoshida-like technique for self-adjoint numerical schemes that allows one to increase the order of preservation of symmetries by two units. The proposed technique has the property that all the time steps are positive.


1 Introduction

In numerical analysis there exist numerous examples of objects forming a group, i.e. objects that compose in an associative manner and have inverses and an identity element. Typical examples are the group of orthogonal matrices and the group of Runge–Kutta methods. Semigroups, i.e. sets of objects closed under composition but not inversion, such as the set of all square matrices or the set of explicit Runge–Kutta methods, are also well studied in the literature.

However, there are important examples of objects that form neither a group nor a semigroup. One important case is the class of objects closed under a ‘sandwich-type’ product, \((a,b) \mapsto aba\). Examples are the collection of all symmetric positive definite matrices and the collection of all self-adjoint Runge–Kutta methods. The sandwich-type composition \(aba\) for numerical integrators was studied at length in [19] and references therein. However, if inverses are well defined, we may wish to replace the sandwich product with the algebraically nicer symmetric product \((a,b) \mapsto ab^{-1}a\). Spaces closed under such products are called symmetric spaces and are the objects of study in this paper. There is a parallel between the theory of Lie groups and that of symmetric spaces. For Lie groups, the fundamental tools are the Lie algebra (the tangent space at the identity, closed under commutation) and the exponential map from the Lie algebra to the Lie group. In the theory of symmetric spaces there is a similar notion of tangent space. The resulting object is called a Lie triple system (LTS) and is closed under double commutators, \([X,[Y,Z]]\). In this case too, the exponential maps the LTS into the symmetric space.

An important decomposition theorem is associated with symmetric spaces and Lie triple systems: Lie algebras can be decomposed into a direct sum of an LTS and a subalgebra. The well-known splitting of a matrix as the sum of a symmetric and a skew-symmetric matrix is an example of such a decomposition: the skew-symmetric matrices are closed under commutators, while the symmetric matrices are closed under double commutators. Similarly, at the group level, there are decompositions of Lie groups into a product of a symmetric space and a Lie subgroup. The matrix polar decomposition, where a matrix is written as the product of a symmetric positive definite matrix and an orthogonal matrix, is one example.

In this paper, we are concerned with the application of such structures to the numerical analysis of differential equations of evolution. The paper is organised as follows. In Sect. 2 we review some general theory of symmetric spaces and Lie triple systems. Applications of this theory to the numerical analysis of differential equations are discussed in Sect. 3, which in turn can be divided into two parts. In the first part (Sects. 3.1–3.3), we review and discuss the case of differential equations on matrix groups. The properties of these decompositions, and of numerical algorithms based on them, have been studied in a series of papers. Polar-type decompositions are studied in detail in [23], with special emphasis on optimal approximation results. The paper [36] is concerned with important recurrence relations for polar-type decompositions, similar to the Baker–Campbell–Hausdorff formula for Lie groups, while [12, 14] employ this theory to reduce the implementation costs of numerical methods on Lie groups [11, 22]. We mention that polar-type decompositions are also closely related to the more special root-space decomposition employed in numerical integrators for differential equations on Lie groups in [25]. In [15] it is shown that the generalized polar decompositions can be employed in cases where the theory of [25] cannot be used.

In the second part of Sect. 3 (Sect. 3.4 and beyond), we consider the application of this theory to numerical methods for the solution of differential equations with symmetries and reversing symmetries. By backward error analysis, numerical methods can be thought of as exact flows of nearby vector fields. The main goal is then to remove from the numerical method the undesired part of the error, namely the part that destroys either the symmetry or the reversing symmetry. These error terms in the modified vector field generally consist of complicated derivatives of the vector field itself and are not calculated explicitly; they are used only formally in the analysis of the method. In this context, the main tools are compositions at the group level, using the flow of the numerical method and the symmetries/reversing symmetries, together with their inverses. There is a substantial difference between preserving reversing symmetries and preserving symmetries in a numerical method. The former can always be attained by a finite (two-step) composition (Scovel projection [29]), while the latter in general requires an infinite composition. Thus symmetries are generally more difficult to retain than reversing symmetries. For the retention of symmetry, we review the Thue–Morse symmetrization technique for arbitrary methods and present a new Yoshida-like symmetry retention technique for self-adjoint methods. The latter always has positive intermediate step sizes and can be of interest for problems that require step size restrictions. We illustrate the use of these symmetrization methods by some numerical experiments.

Finally, Sect. 4 is devoted to some concluding remarks.

2 General theory of symmetric spaces and Lie triple systems

In this section we present some background theory for symmetric spaces and Lie triple systems. We assume that the reader is familiar with basic concepts of differential geometry, such as manifolds and vector fields. For a more detailed treatment of symmetric spaces we refer the reader to [6] and [16], which are also the main references for the material presented in this section.

We shall also follow (unless otherwise mentioned) the notational convention of [6]. In particular, \(M\) is a set (manifold), the letter \(G\) is reserved for groups and Lie groups, Gothic letters denote Lie algebras and Lie triple systems, Latin lowercase letters denote Lie-group elements and Latin uppercase letters denote Lie-algebra elements. The identity element of a group will usually be denoted by \(e\) and the identity mapping by \(\hbox {id}\).

2.1 Symmetric spaces

Definition 2.1

(See [16]) A symmetric space is a manifold \(M\) with a differentiable symmetric product \(\cdot \) obeying the following conditions:

(i) \(x \cdot x = x,\)

(ii) \(x \cdot (x \cdot y) = y,\)

(iii) \(x \cdot (y \cdot z) = (x \cdot y) \cdot (x \cdot z),\)

and moreover

(iv) every \(x\) has a neighbourhood \(U\) such that for all \(y\) in \(U\), \(x \cdot y = y\) implies \(y=x\).

The latter condition is relevant for sets \(M\) with a manifold topology (as in the case of Lie groups) and can be disregarded for sets \(M\) with the discrete topology: a discrete set \(M\) endowed with a multiplication obeying (i)–(iii) will also be called a symmetric space.

A pointed symmetric space is a pair \((M,o)\) consisting of a symmetric space \(M\) and a point \(o\) called base point. Note that when \(M\) is a Lie group, it is usual to set \(o=e\). Moreover, if the group is a matrix group with the usual matrix multiplication, it is usual to set \(e=I\) (identity matrix).

The left multiplication with an element \(x \in M\) is denoted by \(S_x\),

$$\begin{aligned} S_x y = x\cdot y, \end{aligned}$$

and is called the symmetry around \(x\). Note that \(S_x x = x\) because of (i), so \(x\) is a fixed point of \(S_x\), and this fixed point is isolated because of (iv). Furthermore, (ii) implies that \(S_x\) is involutive, i.e. \(S_x^2 = \hbox {id}\), while (iii) states that \(S_x\) is an automorphism of the product, \(S_x(y\cdot z) = (S_x y) \cdot (S_x z)\).
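Definition 2.1 can be tested on a very small discrete example. The sketch below is plain Python. The helper names `sym_product`, `S` and `check_axioms` are hypothetical and introduced only for illustration. It uses the additive group \(\mathbb{Z}\), where the group product \(x y^{-1} x\) becomes \(2x-y\), and checks (i)–(iii) on a finite window of integers. Here \(S_x\) is the reflection of \(\mathbb{Z}\) about \(x\), whose only fixed point is \(x\).

```python
def sym_product(x: int, y: int) -> int:
    """Symmetric product on the additive group Z: x . y = x - y + x."""
    return 2 * x - y


def S(x: int):
    """Symmetry around x: the map y -> x . y (reflection of Z about x)."""
    return lambda y: sym_product(x, y)


def check_axioms(points) -> bool:
    """Check conditions (i)-(iii) of Definition 2.1 on all triples from `points`."""
    pts = list(points)
    for x in pts:
        if sym_product(x, x) != x:                                  # (i)
            return False
        for y in pts:
            if sym_product(x, sym_product(x, y)) != y:              # (ii)
                return False
            for z in pts:                                           # (iii)
                if sym_product(x, sym_product(y, z)) != sym_product(
                        sym_product(x, y), sym_product(x, z)):
                    return False
    return True


print(check_axioms(range(-5, 6)))       # True
print([S(2)(y) for y in [0, 1, 2, 3]])  # [4, 3, 2, 1]: reflection about 2
```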

Symmetric spaces can be constructed in several different ways, the following are important examples:

1. Manifolds with an intrinsically defined symmetric product. As an example, consider the \(n\)-sphere as the set of unit vectors in \(\mathbb {R}^{n+1}\). The product

$$\begin{aligned} x\cdot y = S_x y = (2xx^T -I)y \end{aligned}$$turns this into a symmetric space. The above operation is the reflection of points of the sphere through the axis spanned by \(x\). This can be generalized to \(m\)-dimensional subspaces of \(\mathbb {R}^n\) (\(m\le n\)) in the following manner. Assume that \(x=[x_1, \ldots , x_m]\) is a full-rank matrix, and let \(P_x = x(x^Tx)^{-1} x^T\) be the orthogonal projection operator onto the range of \(x\). Consider the reflection \(R_x= 2P_x - I\) and define \(x\cdot y = R_x y\). This operation obeys conditions (i)–(iii) whenever \(x,y,z\) are \(n\times m\) full-rank matrices. In particular, note that (ii) is equivalent to \(R_x^2=I\), i.e. the reflection is an involutive matrix.

2. Subsets of a continuous (or discrete) group \(G\) that are closed under the composition \(x\cdot y = x y^{-1} x\), where \(xy\) is the usual multiplication in \(G\). Groups themselves, whether continuous (as in the case of Lie groups) or discrete, are thus particular instances of symmetric spaces. As another example, consider the set of all symmetric positive definite matrices as a subset of all nonsingular matrices. It forms a symmetric space with the product

$$\begin{aligned} a\cdot b = ab^{-1}a. \end{aligned}$$

3. Symmetric elements of automorphisms on a group. An automorphism on a group \(G\) is a bijective map \(\sigma :{G} \rightarrow {G}\) satisfying \(\sigma (ab)=\sigma (a)\sigma (b)\). The symmetric elements are defined as

$$\begin{aligned} \fancyscript{A} = \{ g\in G \; : \; \sigma (g)=g^{-1} \}. \end{aligned}$$It is easily verified that \(\fancyscript{A}\) obeys (i)–(iv) when endowed with the multiplication \(x\cdot y = x y^{-1} x\), hence it is a symmetric space. As an example, invertible symmetric matrices are symmetric elements under the matrix automorphism \(\sigma (a) = a^{-T}\).

4. Homogeneous manifolds. Given a Lie group \(G\) and a subgroup \(H\), a homogeneous manifold \(M=G/H\) is the set of all left cosets of \(H\) in \(G\). Not every homogeneous manifold possesses a product turning it into a symmetric space. However, we will see in Theorem 2.1 that any connected symmetric space arises in a natural manner as a homogeneous manifold.

5. Jordan algebras. Let \({\mathfrak {a}}\) be a finite-dimensional vector space with a bilinear multiplication \(x*y\) (see Footnote 1) such that

$$\begin{aligned} x*y = y*x, \qquad x*(x^2 *y) = x^2 *(x*y) \end{aligned}$$(powers defined in the usual way, \(x^m = x*x^{m-1}\)), with unit element \(e\). Define \(L_x(y)= x*y\) and set \(P_x = 2L_x^2 - L_{x^2}\). Then the set of invertible elements of \({\mathfrak {a}}\) is a symmetric space with the product

$$\begin{aligned} x\cdot y = P_x (y^{-1}). \end{aligned}$$In the context of symmetric matrices, take \(x*y = \frac{1}{2}(xy+yx)\), where \(xy\) denotes the usual matrix multiplication. After some algebraic manipulation, one can verify that the product \(x\cdot y =P_x(y^{-1})= 2 x*(x*y^{-1}) - (x*x)*y^{-1}= xy^{-1}x\), as in Example 2.
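Examples 1, 2 and 5 above can be checked numerically. The following is a minimal NumPy sketch and is not part of the original text. The matrix sizes, the random seed and the helper names (`reflection`, `refl_product`, `random_spd`, `jordan`, `quad_rep`) are illustrative assumptions. It verifies (i)–(iii) for the reflection product on \(n\times m\) matrices, checks that \(ab^{-1}a\) is again symmetric positive definite, and confirms the Jordan-algebra identity \(P_a(b^{-1}) = ab^{-1}a\).

```python
import numpy as np

rng = np.random.default_rng(0)


# Example 1: x . y = R_x y, with R_x = 2 P_x - I the reflection through range(x)
def reflection(x):
    P = x @ np.linalg.solve(x.T @ x, x.T)        # P_x = x (x^T x)^{-1} x^T
    return 2.0 * P - np.eye(x.shape[0])


def refl_product(x, y):
    return reflection(x) @ y


n, m = 5, 2
x, y, z = (rng.standard_normal((n, m)) for _ in range(3))
ok_i = np.allclose(refl_product(x, x), x)
ok_ii = np.allclose(refl_product(x, refl_product(x, y)), y)
ok_iii = np.allclose(refl_product(x, refl_product(y, z)),
                     refl_product(refl_product(x, y), refl_product(x, z)))


# Example 2: symmetric positive definite matrices with a . b = a b^{-1} a
def random_spd(k):
    g = rng.standard_normal((k, k))
    return g @ g.T + k * np.eye(k)


a, b = random_spd(4), random_spd(4)
c = a @ np.linalg.solve(b, a)
ok_spd = np.allclose(c, c.T) and bool(np.all(np.linalg.eigvalsh(c) > 0))


# Example 5: Jordan product u*v = (uv + vu)/2 and P_u(w) = 2 u*(u*w) - (u*u)*w
def jordan(u, v):
    return 0.5 * (u @ v + v @ u)


def quad_rep(u, w):
    return 2.0 * jordan(u, jordan(u, w)) - jordan(jordan(u, u), w)


b_inv = np.linalg.inv(b)
ok_jordan = np.allclose(quad_rep(a, b_inv), a @ b_inv @ a)   # P_a(b^{-1}) = a b^{-1} a
print(ok_i, ok_ii, ok_iii, ok_spd, ok_jordan)   # True True True True True
```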

Let \(G\) be a connected Lie group and let \(\sigma \) be an analytic involutive automorphism, i.e. \(\sigma \not = \hbox {id}\) and \(\sigma ^2 = \hbox {id}\). Let \(G^\sigma \) denote \(\hbox {fix}\sigma =\{ g\in G \; : \; \sigma (g)=g\}\), let \(G^\sigma _e\) be its connected component containing the base point (in this case the identity element \(e\)), and finally let \(K\) be a closed subgroup such that \(G^\sigma _e \subset K \subset G^\sigma \). Set \(G_\sigma = \{ x \in G: \sigma (x) = x^{-1} \}\).

Theorem 2.1

[16] The homogeneous space \(M = G/K\) is a symmetric space with the product \(xK \cdot yK = x \sigma (x)^{-1} \sigma (y)K\) and \(G_\sigma \) is a symmetric space with the product \(x \cdot y = x y^{-1} x\). The space of symmetric elements \(G_\sigma \) is isomorphic to the homogeneous space \(G/G^\sigma \). Moreover, every connected symmetric space is of the type \(G/K\) and also of the type \(G_\sigma \).

An interesting consequence of the above theorem is that every connected symmetric space is also a homogeneous space, which implies a factorization. As coset representatives for \(G/G^\sigma \) one may choose elements of \(G_\sigma \); thus any \(x\in G\) can be decomposed as a product \( x = pk\), where \( p\in G_\sigma \text{ and } k\in G^\sigma \). In other words,

$$\begin{aligned} x = pk, \qquad \sigma (p) = p^{-1}, \quad \sigma (k) = k. \end{aligned}\tag{2.2}$$

The matrix polar decomposition is a particular example, discussed in Sect. 3.1.

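The automorphism \(\sigma \) on \(G\) induces an automorphism on the Lie algebra \({\mathfrak {g}}\) and also a canonical decomposition of \({\mathfrak {g}}\). Let \({\mathfrak {g}}\) and \({\mathfrak {k}}\) denote the Lie algebras of \(G\) and \(K\), respectively, and denote by \(\,\mathrm{d}\sigma \) the differential of \(\sigma \) at \(e\),

$$\begin{aligned} \,\mathrm{d}\sigma (X) = \frac{\mathrm{d}}{\mathrm{d}t}\Big |_{t=0} \sigma (\exp (tX)), \qquad X\in {\mathfrak {g}}. \end{aligned}\tag{2.3}$$

Note that \(\,\mathrm{d}\sigma \) is an involutive automorphism of \({\mathfrak {g}}\) and has eigenvalues \(\pm 1\). Moreover, \(X \in {\mathfrak {k}}\) implies \(\,\mathrm{d}\sigma (X) =X\). Set \({\mathfrak {p}} = \{ X \in {\mathfrak {g}}: \,\mathrm{d}\sigma (X) =-X\}\). Then [6],

$$\begin{aligned} {\mathfrak {g}} = {\mathfrak {p}} \oplus {\mathfrak {k}}. \end{aligned}\tag{2.4}$$

It is easily verified that

$$\begin{aligned} [{\mathfrak {k}},{\mathfrak {k}}] \subseteq {\mathfrak {k}}, \qquad [{\mathfrak {k}},{\mathfrak {p}}] \subseteq {\mathfrak {p}}, \qquad [{\mathfrak {p}},{\mathfrak {p}}] \subseteq {\mathfrak {k}}, \end{aligned}\tag{2.5}$$

that is, \({\mathfrak {k}}\) is a subalgebra of \({\mathfrak {g}}\), while \({\mathfrak {p}}\) is invariant under the adjoint action of \({\mathfrak {k}}\). In general \({\mathfrak {p}}\) is not a subalgebra, but it is closed under double commutators. Given \(X \in {\mathfrak {g}}\), its canonical decomposition in \({\mathfrak {p}}\oplus {\mathfrak {k}}\) is \(X=P+K\), with \(P \in {\mathfrak {p}}\) and \(K \in {\mathfrak {k}}\),

$$\begin{aligned} P = \tfrac{1}{2}\big (X - \,\mathrm{d}\sigma (X)\big ), \qquad K = \tfrac{1}{2}\big (X + \,\mathrm{d}\sigma (X)\big ). \end{aligned}\tag{2.6}$$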
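As a numerical illustration of the relations \([{\mathfrak k},{\mathfrak k}]\subseteq {\mathfrak k}\), \([{\mathfrak k},{\mathfrak p}]\subseteq {\mathfrak p}\) and \([{\mathfrak p},{\mathfrak p}]\subseteq {\mathfrak k}\), the following NumPy sketch assumes, for concreteness, the automorphism \(\sigma(x)=x^{-T}\) of Sect. 3.1, whose differential is \(\,\mathrm{d}\sigma(X)=-X^T\). The helper names `dsigma`, `split`, `in_k` and `in_p` are hypothetical. Membership in \({\mathfrak k}\) or \({\mathfrak p}\) is tested through the eigenvalue \(\pm 1\) of \(\,\mathrm{d}\sigma\).

```python
import numpy as np

rng = np.random.default_rng(1)


def dsigma(X):
    # Differential at e of sigma(x) = x^{-T}: dsigma(X) = -X^T (assumed example)
    return -X.T


def split(X):
    """Canonical splitting X = P + K with dsigma(P) = -P and dsigma(K) = K."""
    return 0.5 * (X - dsigma(X)), 0.5 * (X + dsigma(X))


def comm(A, B):
    return A @ B - B @ A


def in_k(Z):
    return np.allclose(dsigma(Z), Z)


def in_p(Z):
    return np.allclose(dsigma(Z), -Z)


X1, X2 = rng.standard_normal((2, 4, 4))
P1, K1 = split(X1)
P2, K2 = split(X2)
checks = {
    "[k,k] in k": in_k(comm(K1, K2)),
    "[k,p] in p": in_p(comm(K1, P2)),
    "[p,p] in k": in_k(comm(P1, P2)),
    "[p,[p,p]] in p": in_p(comm(P1, comm(P2, P1))),
}
print(all(checks.values()))   # True
```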

We have already observed that there is a close connection between projection matrices, reflections (involutive matrices) and hence symmetric spaces. In a linear algebra context, this statement can be formalized as follows. Recall that a matrix \(\Pi \) is a projection if \(\Pi ^2=\Pi \).

Lemma 2.1

To any projection matrix \(\Pi \) there corresponds an involutive matrix \(S= I-2\Pi \). Conversely, to any involutive matrix \(S\) there correspond two projection matrices \(\Pi _S^-= \frac{1}{2} (I-S)\) and \(\Pi _S^+=\frac{1}{2}(I+S)\). These projections satisfy \(\Pi _S^- + \Pi _S^+ = I\) and \(\Pi _S^- \Pi _S^+ = \Pi _S^+ \Pi _S^{-} =0 \); moreover, \(S\Pi _S^{\pm } = \pm \Pi _S^{\pm }\), i.e. the projection \(\Pi _S^\pm \) projects onto the \(\pm 1\) eigenspace of \(S\).

Note that if \(S\) is involutive, so is \(-S\), which corresponds to interchanging the \(\pm 1\) eigenspaces. A matrix \(K\) that commutes with \(S\), \(SKS = K\), is said to be block-diagonal with respect to the automorphism \(X\mapsto SXS\); equivalently, \(K\) maps each eigenspace of \(S\) into itself. A matrix \(P\) that anticommutes with \(S\), \(SPS=-P\), is said to be 2-cyclic; such a \(P\) maps each eigenspace of \(S\) into the other.

In the context of Lemma 2.1, with \(\,\mathrm{d}\sigma (X) = SXS\), we recognize that \(P \in {\mathfrak {p}}\) is the 2-cyclic part and \(K \in {\mathfrak {k}} \) is the block-diagonal part. Namely, if \(X\) is represented by a matrix in a basis adapted to the eigenspaces of \(S\), then

$$\begin{aligned} X = \begin{pmatrix} X^{--} & X^{-+} \\ X^{+-} & X^{++} \end{pmatrix}, \end{aligned}$$

where \(X^{ij} = \Pi _S^{i} X \Pi _S^j\), restricted to the appropriate subspaces. Then \(X^{--}\) and \(X^{++}\) correspond to the block-diagonal part \(K\), while \(X^{-+}\) and \(X^{+-}\) correspond to the 2-cyclic part \(P\).
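The statements of Lemma 2.1 and the block structure above can be checked directly. Here is a minimal NumPy sketch, assuming a randomly generated and not necessarily orthogonal involution \(S = VDV^{-1}\) with \(D=\mathrm{diag}(-1,-1,1,1,1)\); the variable names are our own. It forms \(K = X^{--}+X^{++}\) and \(P = X^{-+}+X^{+-}\) from the projections and confirms that these coincide with \(\tfrac12(X\pm SXS)\).

```python
import numpy as np

rng = np.random.default_rng(2)
n = 5
# An involution S = V D V^{-1} (not necessarily orthogonal)
V = rng.standard_normal((n, n)) + n * np.eye(n)
D = np.diag([-1.0, -1.0, 1.0, 1.0, 1.0])
S = V @ D @ np.linalg.inv(V)
I = np.eye(n)

Pm = 0.5 * (I - S)   # projects onto the -1 eigenspace of S
Pp = 0.5 * (I + S)   # projects onto the +1 eigenspace of S

X = rng.standard_normal((n, n))
K = Pm @ X @ Pm + Pp @ X @ Pp    # block-diagonal part: X^{--} + X^{++}
P = Pm @ X @ Pp + Pp @ X @ Pm    # 2-cyclic part:      X^{-+} + X^{+-}

assert np.allclose(S @ S, I)
assert np.allclose(Pm @ Pm, Pm) and np.allclose(Pp @ Pp, Pp)
assert np.allclose(Pm + Pp, I) and np.allclose(Pm @ Pp, 0)
assert np.allclose(S @ Pm, -Pm) and np.allclose(S @ Pp, Pp)
assert np.allclose(S @ K @ S, K)      # block-diagonal: commutes with S
assert np.allclose(S @ P @ S, -P)     # 2-cyclic: anticommutes with S
# Same splitting as (X +/- S X S)/2, i.e. (2.6) with dsigma(X) = S X S
assert np.allclose(K, 0.5 * (X + S @ X @ S))
assert np.allclose(P, 0.5 * (X - S @ X @ S))
print("Lemma 2.1 checks passed")
```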

In passing, we mention that the decomposition (2.4)–(2.5) is called a Cartan decomposition when two conditions hold. First, \({\mathfrak {g}}\) must be semisimple, so that the Cartan–Killing form \(B(X,Y) = \hbox {tr}(\hbox {ad}_{X}\hbox {ad}_{Y}\!)\) is nondegenerate. Second, the bilinear form \(B_{\,\mathrm{d}\sigma }(X,Y)=-B(X, \,\mathrm{d}\sigma (Y))\) must be positive definite. If this is the case, the linear subspaces \({\mathfrak {k}}, {\mathfrak {p}}\) are orthogonal with respect to \(B_{\,\mathrm{d}\sigma }\).

The involutive automorphism \(\sigma \) need not be defined at the group level \(G\) and thereafter lifted to the algebra by (2.3). It is possible to proceed the other way around: an involutive algebra automorphism \(\,\mathrm{d}\sigma \) on \({\mathfrak {g}}\), which automatically produces a decomposition (2.4)–(2.5), can be used to induce a group automorphism \(\sigma \) by the relation

$$\begin{aligned} \sigma (\exp (X)) = \exp (\,\mathrm{d}\sigma (X)), \qquad X\in {\mathfrak {g}}, \end{aligned}$$

and a corresponding group factorization (2.2). Thus, we have an “upstairs-downstairs” viewpoint: the group involutive automorphisms generate corresponding algebra automorphisms and vice versa. This “upstairs-downstairs” view is useful: in some cases, the group factorization (2.2) is difficult to compute starting from \(x\) and \(\sigma \), while the splitting at the algebra level might be easy to compute from \(X\) and \(\,\mathrm{d}\sigma \). In other cases, it might be the other way around.
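Both directions of the "upstairs-downstairs" correspondence can be illustrated for the automorphism \(\sigma(x)=x^{-T}\). The sketch below is a hedged illustration and makes two assumptions. First, `expm` is a small hand-written scaling-and-squaring helper, not a library routine. Second, the step `h` of the central difference is an arbitrary choice. The sketch approximates \(\,\mathrm{d}\sigma(X) = \frac{\mathrm{d}}{\mathrm{d}t}\sigma(\exp(tX))|_{t=0}\) as in (2.3), recovers \(-X^T\), and checks \(\sigma(\exp X) = \exp(\,\mathrm{d}\sigma(X))\).

```python
import numpy as np


def expm(A, terms=20):
    """Matrix exponential by scaling and squaring of a truncated Taylor series
    (a small test helper, not a production routine)."""
    norm = np.linalg.norm(A, 1)
    s = max(0, int(np.ceil(np.log2(norm))) + 1) if norm > 0 else 0
    B = A / 2.0**s
    E = term = np.eye(A.shape[0])
    for k in range(1, terms):
        term = term @ B / k
        E = E + term
    for _ in range(s):
        E = E @ E
    return E


def sigma(x):
    # Group automorphism of GL(N): x -> x^{-T}
    return np.linalg.inv(x).T


def dsigma_fd(X, h=1e-6):
    # (2.3) by central differences: d/dt sigma(exp(tX)) at t = 0
    return (sigma(expm(h * X)) - sigma(expm(-h * X))) / (2.0 * h)


rng = np.random.default_rng(3)
X = rng.standard_normal((4, 4))
dX = dsigma_fd(X)

print(np.allclose(dX, -X.T, atol=1e-6))          # True: dsigma(X) = -X^T
print(np.allclose(sigma(expm(X)), expm(dX)))     # True: sigma(exp X) = exp(dsigma X)
```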

2.2 Lie triple systems

In Lie group theory Lie algebras are important since they describe infinitesimally the structure of the tangent space at the identity. Similarly, Lie triple systems give the structure of the tangent space of a symmetric space.

Definition 2.2

[16] A vector space with a trilinear composition \([X,Y,Z]\) is called a Lie triple system (LTS) if the following identities are satisfied:

(i) \([X,X,Y]=0\),

(ii) \([X,Y,Z]+[Y,Z,X] + [Z,X,Y] =0\),

(iii) \([X,Y,[U,V,W]] = [[X,Y,U], V,W] + [U, [X,Y,V],W] +[U,V,[X,Y,W]]\).

A typical way to construct an LTS is by means of an involutive automorphism of a Lie algebra \({\mathfrak {g}}\). With the same notation as above, the set \({\mathfrak {p}}\) is an LTS with the composition

$$\begin{aligned} [X,Y,Z] = [[X,Y],Z]. \end{aligned}$$

Vice versa, for every finite-dimensional LTS there exist a Lie algebra \({\mathfrak {G}}\) and an involutive automorphism \(\sigma \) such that the given LTS corresponds to \({\mathfrak {p}}\). The algebra \({\mathfrak {G}}\) is called the standard embedding of the LTS. In general, any linear subspace of \({\mathfrak {g}}\) that is closed under the operator

$$\begin{aligned} \mathrm {T}_{X} = \hbox {ad}_X^2, \qquad \mathrm {T}_{X}(Y) = [X,[X,Y]], \end{aligned}$$

for all \(X\) in the subspace is a Lie triple system. It can be shown, by polarization, that being closed under \(\mathrm {T}_{X}\) guarantees being closed under the triple commutator.
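A quick numerical check of Definition 2.2 for the LTS of symmetric matrices, with the triple product \([X,Y,Z]=[[X,Y],Z]\), is sketched below with NumPy. The helper names and the random seed are illustrative assumptions. The commutator of two symmetric matrices is skew-symmetric, so the symmetric matrices are not a subalgebra. They are, however, closed under the triple product, and identities (i)–(iii) hold: (ii) is the Jacobi identity, and (iii) holds because \(\hbox{ad}_{[X,Y]}\) is a derivation.

```python
import numpy as np

rng = np.random.default_rng(4)


def comm(A, B):
    return A @ B - B @ A


def triple(X, Y, Z):
    # Triple product on symmetric matrices: [X, Y, Z] = [[X, Y], Z]
    return comm(comm(X, Y), Z)


def random_sym(n):
    A = rng.standard_normal((n, n))
    return 0.5 * (A + A.T)


def is_sym(M):
    return np.allclose(M, M.T)


X, Y, Z, U, V, W = (random_sym(4) for _ in range(6))

print(is_sym(comm(X, Y)))        # False: not closed under commutators
print(is_sym(triple(X, Y, Z)))   # True: closed under the triple product
ok_i = np.allclose(triple(X, X, Y), 0)
ok_ii = np.allclose(triple(X, Y, Z) + triple(Y, Z, X) + triple(Z, X, Y), 0)
ok_iii = np.allclose(
    triple(X, Y, triple(U, V, W)),
    triple(triple(X, Y, U), V, W) + triple(U, triple(X, Y, V), W)
    + triple(U, V, triple(X, Y, W)))
print(ok_i, ok_ii, ok_iii)       # True True True
```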

3 Application of symmetric spaces in numerical analysis

3.1 The classical polar decomposition of matrices

Let \(\mathrm{GL}(N)\) be the group of \(N\times N\) invertible matrices. Consider the map

$$\begin{aligned} \sigma (x) = x^{-T}. \end{aligned}$$

It is clear that \(\sigma \) is an involutive automorphism of \(\mathrm{GL}(N)\). Then, according to Theorem 2.1, the set of symmetric elements \(G_\sigma = \{ x \in \mathrm{GL}(N): \sigma (x) =x^{-1}\}\) is a symmetric space. We observe that \(G_\sigma \) is the set of invertible symmetric matrices. The symmetric space \(G_\sigma \) is disconnected. Its connected component containing the identity matrix \(I\) deserves particular mention, since it is the set of symmetric positive definite matrices. The subgroup \(G^\sigma \) consists of all orthogonal matrices. The decomposition (2.2) is the classical polar decomposition: any nonsingular matrix can be written as the product of a symmetric matrix and an orthogonal matrix. If we restrict the symmetric factor to the symmetric positive definite (spd) matrices, then the decomposition is unique. In standard notation, \(p\) is denoted by \(s\) (spd matrix), while \(k\) is denoted by \(q\) (orthogonal matrix) (see Footnote 2). At the algebra level, \(\,\mathrm{d}\sigma (X) = -X^{T}\) and the corresponding splitting is \({\mathfrak {g}}= {\mathfrak {p}}\oplus {\mathfrak {k}}\). Here \({\mathfrak {k}} = \{ X \in {\mathfrak {gl}}(N) : \,\mathrm{d}\sigma (X) = X \} = {\mathfrak {so}}(N)\) is the classical algebra of skew-symmetric matrices, while \( {\mathfrak {p}} = \{ X \in {\mathfrak {gl}}(N) : \,\mathrm{d}\sigma (X) = -X \} \) is the classical set of symmetric matrices. The latter is not a subalgebra of \({\mathfrak {gl}}(N)\), but it is closed under \(\mathrm {T}_{X}\) and hence is a Lie triple system. The decomposition (2.6) is nothing else than the canonical decomposition of a matrix into its symmetric and skew-symmetric parts, \( X = P+K = \frac{1}{2}(X-\,\mathrm{d}\sigma (X)) + \frac{1}{2}(X+\,\mathrm{d}\sigma (X)) = \frac{1}{2}(X+X^{T}) + \frac{1}{2}(X-X^{T}). \) It is well known that the polar decomposition \(x = sq\) can be characterized in terms of best approximation properties.
The orthogonal part \(q\) is the best orthogonal approximation of \(x\) in any orthogonally invariant norm (e.g. the 2-norm and the Frobenius norm). Other classical polar decompositions \(x=sq\) can also be fitted in this framework [3]. One has \(s\) Hermitian and \(q\) unitary, obtained with the automorphism \(\sigma (x) = x^{-*}= \bar{x}^{-\mathrm {T}}\) (Hermitian adjoint). Another has \(s\) real and \(q\) coninvolutory (i.e. \(q\bar{q}=I\)), obtained with \(\sigma (x) = \bar{x}\), where \(\bar{x}\) denotes the complex conjugate of \(x\).
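The classical polar decomposition and its best-approximation property can be sketched in a few lines of NumPy. This is one possible computation and makes several assumptions. The spd factor is taken as \(s=(xx^T)^{1/2}\) via a symmetric eigendecomposition; the SVD, or dedicated routines such as SciPy's `scipy.linalg.polar`, are common alternatives. The comparison set of random orthogonal matrices is only an illustration, not a proof of optimality.

```python
import numpy as np

rng = np.random.default_rng(5)


def polar_sq(x):
    """x = s q with s symmetric positive definite and q orthogonal,
    using s = (x x^T)^{1/2} from an eigendecomposition (illustrative sketch)."""
    w, U = np.linalg.eigh(x @ x.T)
    s = U @ np.diag(np.sqrt(w)) @ U.T
    q = np.linalg.solve(s, x)
    return s, q


x = rng.standard_normal((4, 4))
s, q = polar_sq(x)
assert np.allclose(s @ q, x)
assert np.allclose(q.T @ q, np.eye(4))
assert np.allclose(s, s.T) and np.all(np.linalg.eigvalsh(s) > 0)

# Best approximation in the Frobenius norm: no orthogonal matrix is closer to x.
# Here we only compare against a sample of random orthogonal matrices.
d_q = np.linalg.norm(x - q)
others = [np.linalg.qr(rng.standard_normal((4, 4)))[0] for _ in range(200)]
assert all(np.linalg.norm(x - o) >= d_q for o in others)
print("polar decomposition checks passed")
```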

The group decomposition \(x=sq\) can also be studied via the algebra decomposition.

3.2 Generalized polar decompositions

In [23] such decompositions are generalized to arbitrary involutive automorphisms, and best approximation properties are established for the general case.

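In [36] an explicit recurrence is given: if \(\exp (X) = \exp (P+K)= x\), \(\exp (S)=s\) and \(\exp (Q)=q\), then \(S\) and \(Q\) can be expressed in terms of commutators of \(P\) and \(K\). The first terms in the expansions of \(S\) and \(Q\) are

$$\begin{aligned} S&= P - \tfrac{1}{2}[P,K] - \tfrac{1}{6}[K,[P,K]] + \cdots , \\ Q&= K - \tfrac{1}{12}[P,[P,K]] + \cdots . \end{aligned}$$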
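Matching terms in the Baker–Campbell–Hausdorff formula for \(\exp(S)\exp(Q)=\exp(P+K)\), with \(S\in{\mathfrak p}\) and \(Q\in{\mathfrak k}\), gives the leading terms \(S = P - \tfrac12[P,K] - \tfrac16[K,[P,K]] + \cdots\) and \(Q = K - \tfrac1{12}[P,[P,K]] + \cdots\). The sketch below checks these terms numerically for the classical case \(\,\mathrm{d}\sigma(X)=-X^T\) by measuring how the factorization error scales with \(\varepsilon\) for \(X=\varepsilon X_0\). This is a hedged sanity check, not the recurrence of [36]. The helper `expm`, the seed and the step sizes are our own assumptions. Halving \(\varepsilon\) should reduce the error by roughly \(2^2\) when \(S=P, Q=K\), and by roughly \(2^4\) with the third-order terms.

```python
import numpy as np


def expm(A, terms=20):
    """Matrix exponential by scaling and squaring of a truncated Taylor series
    (a small test helper, not a production routine)."""
    norm = np.linalg.norm(A, 1)
    s = max(0, int(np.ceil(np.log2(norm))) + 1) if norm > 0 else 0
    B = A / 2.0**s
    E = term = np.eye(A.shape[0])
    for k in range(1, terms):
        term = term @ B / k
        E = E + term
    for _ in range(s):
        E = E @ E
    return E


def comm(A, B):
    return A @ B - B @ A


rng = np.random.default_rng(6)
X0 = rng.standard_normal((4, 4))


def factor_error(eps, third_order):
    """|| exp(S) exp(Q) - exp(X) || for X = eps * X0, with S, Q truncated."""
    X = eps * X0
    P, K = 0.5 * (X + X.T), 0.5 * (X - X.T)   # p: symmetric part, k: skew part
    S, Q = P, K                               # first-order approximation
    if third_order:
        C = comm(P, K)
        S = P - 0.5 * C - comm(K, C) / 6.0
        Q = K - comm(P, C) / 12.0
    return np.linalg.norm(expm(S) @ expm(Q) - expm(X))


ratios = {order: factor_error(1e-2, order == 3) / factor_error(5e-3, order == 3)
          for order in (1, 3)}
print(ratios)   # expected: ratio close to 4 for order 1, close to 16 for order 3
```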

Clearly, other types of automorphisms can also be considered, generalizing the group factorization (2.2) and the algebra splitting (2.6). For instance, there is a large source of involutive automorphisms in the set of involutive inner automorphisms

$$\begin{aligned} \sigma (x) = rxr, \qquad \,\mathrm{d}\sigma (X) = rXr, \qquad r^2 = I, \end{aligned}$$

that can be applied to subgroups of \(G=GL(n)\) to obtain a number of interesting factorizations. The matrix \(r\) has to be involutive, \(r^2=I\), but it need not be in the group: the factorization makes sense as long as \(\sigma (x)\) is in the group (resp. \(\,\mathrm{d}\sigma (X)\) is in the algebra). As an example, let \(G=SO(n)\) be the group of orthogonal matrices with unit determinant, and let \(r=[-e_1, e_2, \ldots , e_n] = I - 2 e_1 e_1^T\), where \(e_i\) denotes the \(i\)th canonical unit vector in \(\mathbb {R}^n\). Obviously, \(r \not \in SO(n)\), as \(\det r = -1\). Nevertheless, for \(x \in SO(n)\) we have \((rxr)^T(rxr) = r^T x^T r^T r x r = I\), since \(r^Tr=I\), and \(\det (rxr) = \det x = 1\); thus \(\sigma (x) = rxr \in SO(n)\). It is straightforward to verify that the identity component \(G^\sigma _e\) of the subgroup \(G^\sigma \) of Theorem 2.1 consists of all orthogonal \(n\times n\) matrices of the form

$$\begin{aligned} \begin{pmatrix} 1 & 0 \\ 0 & q_{n-1} \end{pmatrix}, \end{aligned}$$

where \(q_{n-1}\in SO(n-1)\). Thus, choosing \(K = G^\sigma _e\), the corresponding symmetric space is \(G/K = SO(n)/SO(n-1)\). Two matrices belong to the same coset if their first columns coincide, so the symmetric space can be identified with the \((n-1)\)-sphere \(S^{n-1}\).

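The corresponding splitting of a skew-symmetric matrix \(V\in {\mathfrak {g}}= {\mathfrak {so}}(n)\) is

$$\begin{aligned} V = \begin{pmatrix} 0 & -v^T \\ v & V_{n-1} \end{pmatrix} = \begin{pmatrix} 0 & -v^T \\ v & 0 \end{pmatrix} + \begin{pmatrix} 0 & 0 \\ 0 & V_{n-1} \end{pmatrix} \in {\mathfrak {p}}\oplus {\mathfrak {k}}, \qquad v\in \mathbb {R}^{n-1},\ V_{n-1}\in {\mathfrak {so}}(n-1). \end{aligned}$$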

Thus any matrix in \(SO(n)\) can be expressed as the product of the exponential of a matrix in \({\mathfrak {p}}\) and the exponential of a matrix in \({\mathfrak {k}}\). The space \({\mathfrak {p}}\) can be identified with the tangent space to the sphere at the point \((1,0,\ldots ,0)^T\). Different choices of \(r\) give different interesting algebra splittings and corresponding group factorizations. For instance, choosing \(r\) to be the anti-identity, \(r = [e_n, e_{n-1}, \ldots , e_1]\), gives an algebra splitting into persymmetric and perskew-symmetric matrices. For symplectic matrices, the choice \(r=[-e_1, e_2, \ldots , e_n, -e_{n+1}, e_{n+2}, \ldots , e_{2n}]\) gives a splitting into a subalgebra of lower-dimensional symplectic matrices and a Lie triple system, and so on. In [12, 38], such splittings are used for the efficient approximation of the exponential of skew-symmetric, symplectic and zero-trace matrices. In [14] similar ideas are used to construct computationally effective numerical integrators for differential equations on Stiefel and Grassmann manifolds.
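The splitting of \({\mathfrak{so}}(n)\) induced by \(r = I - 2e_1e_1^T\) can be reproduced with the generic formulas \(P = \tfrac12(V - rVr)\) and \(K = \tfrac12(V + rVr)\). The NumPy sketch below is illustrative; the size \(n=5\), the seed and the mask construction are our own choices. It confirms that \(P\) lives only in the first row and column, with the pattern \(\begin{pmatrix}0 & -v^T\\ v & 0\end{pmatrix}\), while \(K\) lives only in the trailing \({\mathfrak{so}}(n-1)\) block.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 5
e1 = np.eye(n)[:, [0]]
r = np.eye(n) - 2.0 * e1 @ e1.T          # r = [-e_1, e_2, ..., e_n], r^2 = I

A = rng.standard_normal((n, n))
V = A - A.T                              # V in so(n)


def dsigma(M):
    # Inner automorphism M -> r M r (r^{-1} = r)
    return r @ M @ r


P = 0.5 * (V - dsigma(V))                # part in p
K = 0.5 * (V + dsigma(V))                # part in k

mask_p = np.zeros((n, n), dtype=bool)    # first row and column, without (0, 0)
mask_p[0, 1:] = mask_p[1:, 0] = True
assert np.allclose(P[~mask_p], 0) and np.allclose(K[mask_p], 0)
assert np.allclose(P + K, V)
v = V[1:, 0]                             # P = [[0, -v^T], [v, 0]]
assert np.allclose(P[1:, 0], v) and np.allclose(P[0, 1:], -v)
print("so(n) splitting checks passed")
```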

3.3 Generalized polar coordinates on Lie groups

A similar framework can be used to obtain coordinates on Lie groups [15], which are of interest when solving differential equations of the type

$$\begin{aligned} \dot{x} = X(t,x)\, x, \qquad x(0) = e, \qquad X(t,x)\in {\mathfrak {g}}, \end{aligned}$$

by reducing the problem recursively to spaces of smaller dimension. Recall that \(x(t) = \exp (\Omega (t))\), where \(\Omega \) obeys the differential equation \(\dot{\Omega } = \hbox {dexp}^{-1}_\Omega X\), with \(\hbox {dexp}^{-1}_A= \sum _{j=0}^\infty \frac{B_j}{j!} \hbox {ad}_A^j\) and \(B_j\) the \(j\)th Bernoulli number (with the convention \(B_1 = -\frac{1}{2}\)), see [11].

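Decomposing \( X = \Pi _\sigma ^{-}X + \Pi _\sigma ^{+}X \in \mathfrak {p}\oplus \mathfrak {k}\), where \(\Pi _\sigma ^{\pm }X = \frac{1}{2} (X \pm \,\mathrm{d}\sigma (X))\), the solution can be factorized as

$$\begin{aligned} x(t) = \exp (P(t))\exp (K(t)), \qquad P(t)\in \mathfrak {p},\quad K(t)\in \mathfrak {k}, \end{aligned}$$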

where

Note that (3.5) depends on \(P\). It is possible to formulate it solely in terms of \(K\), \(\Pi ^{-}X\) and \(\Pi ^{+}X\), but the expression becomes less neat. In block form:

where \(v = \mathrm {ad}_P\). The above formula paves the way for a recursive decomposition, since \(\mathrm {dexp}^{-1}_K \) is the \(\mathrm {dexp}^{-1}\) function on the restricted subalgebra \(\mathfrak {k}\). By introducing a sequence of involutive automorphisms \(\sigma _i\), one induces a sequence of subalgebras \(\mathfrak {g} =\mathfrak {g}_0 \supset \mathfrak {g}_1 \supset \mathfrak {g}_2 \supset \ldots \) of decreasing dimension, with \(\mathfrak {g}_{i+1} = \mathrm {Range} (\Pi _{\sigma _i}^+)\). Note also that the functions appearing in the above formulation are all analytic functions of the \(\mathrm {ad}\)-operator and are either odd or even; therefore they can be expressed as functions of \(\mathrm {ad}^2\) on \(\mathfrak {p}\). In particular, as long as we can compute analytic functions of the \(\mathrm {ad}^2\) operator, the above decomposition is computable.

Thus, the problem is reduced to the computation of analytic functions of the 2-cyclic part \(P\) as well as analytic functions of \(\hbox {ad}_P\) (trivialized tangent maps and their inverse). The following theorem addresses the computation of such functions using the same framework of Lemma 2.1.

Theorem 3.1

[15] Let \(P\) be the 2-cyclic part of \(X=P+(X-P)\) with respect to the involution \(S\), i.e. \(SPS=-P\). Let \(\Theta = P^2\Pi ^{-}_S\). For any analytic function \(\psi (s)\), we have

where \(\psi _1(s) = \frac{1}{2\sqrt{s}}(\psi (\sqrt{s})-\psi (-\sqrt{s}))\) and \(\psi _2(s) = \frac{1}{2s} (\psi (\sqrt{s}) + \psi (-\sqrt{s}) - 2\psi (0))\).
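The functions \(\psi_1\) and \(\psi_2\) are the odd and even parts of \(\psi\), rewritten as functions of \(s^2\). The short derivation below is our own sketch of why this reduces \(\psi(P)\) to functions of \(P^2\); it is not the exact statement of Theorem 3.1, which is given in [15]. Since \(SP^2S=(SPS)(SPS)=P^2\), the matrix \(P^2\) is block-diagonal, so \(\psi_1\) and \(\psi_2\) are only ever evaluated on block-diagonal matrices. Writing \(\psi(s)=\sum_{k\ge0}a_ks^k\) (with a large enough radius of convergence):

$$\begin{aligned} \psi _1(s)&=\frac{\psi (\sqrt{s})-\psi (-\sqrt{s})}{2\sqrt{s}}=\sum _{k\ge 0}a_{2k+1}s^{k}, \qquad \psi _2(s)=\frac{\psi (\sqrt{s})+\psi (-\sqrt{s})-2\psi (0)}{2s}=\sum _{k\ge 1}a_{2k}s^{k-1},\\ \psi (P)&=a_0 I+\sum _{k\ge 0}a_{2k+1}P^{2k+1}+\sum _{k\ge 1}a_{2k}P^{2k} =\psi (0)\,I+P\,\psi _1(P^2)+P^2\,\psi _2(P^2). \end{aligned}$$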

A similar result holds for \(\hbox {ad}_P\), see [15]. It is interesting to remark that if

then

where \(\psi _1, \psi _2\) are as above. In particular, the problem is reduced to computing analytic functions of the principal square root of a matrix [9]: numerical methods that compute these quantities accurately and efficiently are very important for competitive numerical algorithms. Typically, \(\Theta \) is a low-rank matrix, hence computations can be done using the eigenvalues and eigenvectors of the \(\mathrm {ad}\) operator restricted to the appropriate space, see [14, 15]. In particular, if \(A,B\) are vectors, then \(B^T A\) is a scalar and the formulas become particularly simple.
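To illustrate how low rank keeps the computation scalar, consider our own example, which is not necessarily the block form used in [15]. Let \(P=\begin{pmatrix}0 & b\\ a & 0\end{pmatrix}\), with \(a\) a column \(n\)-vector and \(b\) a row \(n\)-vector. Splitting \(\psi\) into odd and even parts gives \(\psi(P)=\psi(0)I+P\psi_1(P^2)+P^2\psi_2(P^2)\), with \(P^2=\mathrm{diag}(ba,\,ab)\). Using the push-through identity \(b\,f(ab)=f(ba)\,b\), every function is evaluated only at the scalar \(\theta=ba\). For \(\psi=\exp\) this gives \(\psi_1(s)=\sinh\sqrt{s}/\sqrt{s}\) and \(\psi_2(s)=(\cosh\sqrt{s}-1)/s\), so

\(\exp(P)=\begin{pmatrix}1+\theta\psi_2(\theta) & \psi_1(\theta)\,b\\ \psi_1(\theta)\,a & I+\psi_2(\theta)\,ab\end{pmatrix}\).

The NumPy sketch below compares this formula with a reference exponential and with the skew-symmetric case \(b=-a^T\). The names `psi1`, `psi2`, `exp_two_cyclic` and `expm` are hypothetical helpers.

```python
import numpy as np


def expm(A, terms=20):
    """Reference exponential: scaling and squaring of a truncated Taylor series."""
    norm = np.linalg.norm(A, 1)
    s = max(0, int(np.ceil(np.log2(norm))) + 1) if norm > 0 else 0
    B = A / 2.0**s
    E = term = np.eye(A.shape[0])
    for k in range(1, terms):
        term = term @ B / k
        E = E + term
    for _ in range(s):
        E = E @ E
    return E


def psi1(s):
    # psi_1(s) = sinh(sqrt s)/sqrt s for psi = exp (complex sqrt covers s < 0)
    if s == 0:
        return 1.0
    r = np.sqrt(complex(s))
    return ((np.exp(r) - np.exp(-r)) / (2 * r)).real


def psi2(s):
    # psi_2(s) = (cosh(sqrt s) - 1)/s for psi = exp
    if s == 0:
        return 0.5
    r = np.sqrt(complex(s))
    return ((np.exp(r) + np.exp(-r) - 2) / (2 * s)).real


def exp_two_cyclic(a, b):
    """exp(P) for P = [[0, b], [a, 0]], a a column and b a row n-vector."""
    n = a.size
    theta = float(b @ a)                  # the only nonlinear input: a scalar
    E = np.empty((n + 1, n + 1))
    E[0, 0] = 1.0 + theta * psi2(theta)
    E[0, 1:] = psi1(theta) * b
    E[1:, 0] = psi1(theta) * a
    E[1:, 1:] = np.eye(n) + psi2(theta) * np.outer(a, b)
    return E


rng = np.random.default_rng(11)
a, b = rng.standard_normal(4), rng.standard_normal(4)
P = np.zeros((5, 5))
P[0, 1:], P[1:, 0] = b, a

ok_general = np.allclose(exp_two_cyclic(a, b), expm(P))
Q = exp_two_cyclic(a, -a)                 # skew case: b = -a^T, theta = -|a|^2
ok_orthogonal = np.allclose(Q.T @ Q, np.eye(5))
print(ok_general, ok_orthogonal)          # True True
```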

These coordinates have interesting applications in control theory. Some early use of these generalized Cartan decompositions (2.4)–(2.5) (which the author calls \(\mathbb {Z}_2\)-grading) to problems with nonholonomic constraints can be found in [1]. In [13], the authors embrace the formalism of symmetric spaces and use orthogonal (Cartan) decompositions with applications to NMR spectroscopy and quantum computing, using adjoint orbits as main tool. Generally, these decompositions can be found in the literature, but have been applied mostly to the cases when \([\mathfrak {k}, \mathfrak {k} ]=\{0\} \) or \([\mathfrak {p}, \mathfrak {p} ]=\{0\} \), or both, as (3.4)–(3.5) become very simple and the infinite sums reduce to one or two terms. The main contribution of [15] is the derivation of such differential equations and the evidence that such equations can be solved efficiently using linear algebra tools. See also [37] for some applications to control theory.

3.4 Symmetries and reversing symmetries of differential equations

Let \(\mathrm {Diff}(M)\) be the group of diffeomorphisms of a manifold \(M\) onto itself. We say that a map \(\varphi \in \mathrm {Diff}(M)\) has a symmetry \({\fancyscript{S}}:M \rightarrow M\) if

$$\begin{aligned} {\fancyscript{S}} \varphi {\fancyscript{S}}^{-1} = \varphi \end{aligned}$$

(the multiplication indicating the usual composition of maps, i.e. \(\varphi _{1} \varphi _{2} = \varphi _{1} \circ \varphi _{2}\)), while if

$$\begin{aligned} {\fancyscript{R}} \varphi {\fancyscript{R}}^{-1} = \varphi ^{-1}, \end{aligned}$$

we say that \({\fancyscript{R}}\) is a reversing symmetry of \(\varphi \) [19]. Without further ado, we restrict ourselves to involutory symmetries, the main subject of this paper. Symmetries and reversing symmetries are very important in the context of dynamical systems and their numerical integration. For instance, nongeneric bifurcations can become generic in the presence of symmetries, and vice versa. Thus, when using the integration time step as a bifurcation parameter, it is vitally important to remain within the smallest possible class of systems. Reversing symmetries, on the other hand, give rise to the existence of invariant tori and invariant cylinders [21, 27, 30, 31].
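The difference between a symmetry and a reversing symmetry is visible for linear maps of the plane. The exact time-\(h\) flow of the harmonic oscillator \(\dot q = p,\ \dot p = -q\) is a rotation (by angle \(-h\)). The point reflection \(x\mapsto -x\) commutes with it, so it is a symmetry. The reflection \((q,p)\mapsto(q,-p)\) conjugates it to its inverse, so it is a reversing symmetry. The NumPy sketch below checks \({\fancyscript S}\varphi{\fancyscript S}^{-1}=\varphi\) and \({\fancyscript R}\varphi{\fancyscript R}^{-1}=\varphi^{-1}\). The angle 0.7 and the helper `rot` are arbitrary illustrative choices.

```python
import numpy as np

inv = np.linalg.inv


def rot(theta):
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])


phi = rot(0.7)                     # phi(x) = rot(0.7) x on R^2
S = -np.eye(2)                     # point reflection x -> -x (involution)
R = np.diag([1.0, -1.0])           # reflection (q, p) -> (q, -p) (involution)

is_symmetry = np.allclose(S @ phi @ inv(S), phi)           # S phi S^{-1} = phi
is_reversing = np.allclose(R @ phi @ inv(R), inv(phi))     # R phi R^{-1} = phi^{-1}
R_is_symmetry = np.allclose(R @ phi @ inv(R), phi)
print(is_symmetry, is_reversing, R_is_symmetry)   # True True False
```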

It is a classical result that the set of symmetries possesses the structure of a group: symmetries behave like automorphisms and fixed sets of automorphisms. The group structure, however, does not extend to reversing symmetries and fixed points of anti-automorphisms, and in the last few years the set of reversing symmetries has received the attention of numerous numerical analysts. In [19] it was observed that the set of fixed points of an involutive anti-automorphism \({\fancyscript{A}}_{-}\) is closed under the operation

$$\begin{aligned} (\varphi _1, \varphi _2) \mapsto \varphi _1 \varphi _2 \varphi _1, \end{aligned}$$

which McLachlan et al. called the “sandwich product” (see Footnote 3). Indeed, our initial goal was to understand such structures and investigate how they could be used to devise new numerical integrators for differential equations with special geometric properties. We recognise, cf. Sect. 2.1, that the set of fixed points of an anti-automorphism is a symmetric space. Conversely, any connected space of invertible elements closed under the “sandwich product” is the set of symmetric elements (anti-fixed points) of an involutive automorphism (cf. Theorem 2.1), and it has an LTS associated with it. To show this, consider the well-known symmetric BCH formula [28]

$$\begin{aligned} \exp (X)\exp (Y)\exp (X) = \exp (Z), \qquad Z = 2X + Y - \tfrac{1}{6}[X,[X,Y]] + \tfrac{1}{6}[Y,[Y,X]] + \cdots , \end{aligned}$$

which is used extensively in the context of splitting methods [18]. Because of the sandwich-type composition (symmetric space structure), the corresponding \(Z\) must lie in the LTS. This explains why it can be written in terms of powers of the double commutator operators \(T_X=\hbox {ad}_X^2, T_Y=\hbox {ad}_Y^2\) applied to \(X\) and \(Y\). A natural question to ask is: what is the automorphism \(\sigma \) having such sandwich-type compositions as anti-fixed points? As \(\hbox {fix}\fancyscript{A}_{-}=\{ z | \sigma (z)= z^{-1}\}\), writing \(z = \exp (Z)\) gives \(\sigma (\exp (Z)) = (\exp (Z))^{-1} = \exp (-Z)\), i.e. \(\,\mathrm{d}\sigma (Z) = -Z\). In the context of numerical integrators, the automorphism \(\sigma \) consists of changing the time \(t\) to \(-t\). This will be proved in Sect. 3.5.
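The leading terms of the symmetric BCH formula, \(Z = 2X + Y - \tfrac16 T_X Y + \tfrac16 T_Y X + \cdots\) (equivalently \(-\tfrac16[X,[X,Y]]+\tfrac16[Y,[Y,X]]\)), can be checked by an order test. Because \(Z\) contains only odd-degree terms, the first neglected terms are of degree five. The error of the truncation should therefore drop by about \(2^5=32\) when \(X\) and \(Y\) are halved. This NumPy sketch uses a hand-written `expm` helper and random test matrices; both are our own assumptions.

```python
import numpy as np


def expm(A, terms=20):
    """Matrix exponential by scaling and squaring of a truncated Taylor series
    (a small test helper, not a production routine)."""
    norm = np.linalg.norm(A, 1)
    s = max(0, int(np.ceil(np.log2(norm))) + 1) if norm > 0 else 0
    B = A / 2.0**s
    E = term = np.eye(A.shape[0])
    for k in range(1, terms):
        term = term @ B / k
        E = E + term
    for _ in range(s):
        E = E @ E
    return E


def comm(A, B):
    return A @ B - B @ A


def Z_truncated(X, Y):
    # Symmetric BCH through degree three: 2X + Y - 1/6 T_X Y + 1/6 T_Y X
    return 2 * X + Y - comm(X, comm(X, Y)) / 6.0 + comm(Y, comm(Y, X)) / 6.0


rng = np.random.default_rng(12)
X0, Y0 = rng.standard_normal((2, 4, 4))


def err(eps):
    X, Y = eps * X0, eps * Y0
    return np.linalg.norm(expm(X) @ expm(Y) @ expm(X) - expm(Z_truncated(X, Y)))


ratio = err(2e-2) / err(1e-2)
print(ratio)    # expected to be close to 2**5 = 32
```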

If \(M\) is a finite-dimensional smooth compact manifold, the infinite-dimensional group \(\mathrm {Diff}(M)\) of smooth diffeomorphisms \(M \rightarrow M\) does not have, strictly speaking, the structure of a Lie group, but the more complex structure of a Fréchet Lie group [24]. In Fréchet spaces, basic theorems of differential calculus, like the inverse function theorem, do not hold. In the specific case of \(\mathrm {Diff}(M)\), the exponential map from the set of vector fields on \(M\), \(\mathrm {Vect}(M)\), to \(\mathrm {Diff}(M)\) is not locally one-to-one and onto, not even in a neighbourhood of the identity element. Hence there exist diffeomorphisms arbitrarily close to the identity which are not on any one-parameter subgroup, and others which are on many. However, these regions become smaller and smaller the closer we approach the identity [24, 26]. For our purpose, we can disregard these regions and assume that \(\mathrm {Diff}(M)\) is a Lie group with Lie algebra \(\mathrm {Vect}(M)\).

There are two different settings that we can consider in this context. The first is to analyze the set of differentiable maps that possess a certain symmetry (or a discrete set of symmetries). The second is to consider the structure of the set of symmetries of a fixed diffeomorphism. The first has a continuous structure, while the second is more often a discrete symmetric space.

Proposition 3.1

The set of diffeomorphisms \(\varphi \) that possess \({\fancyscript{R}}\) as an (involutive) reversing symmetry is a symmetric space of the type \(G_\sigma \).

Proof

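Denote

\[ \sigma (\varphi ) = {\fancyscript{R}} \varphi {\fancyscript{R}}^{-1}. \]

It is clear that \(\sigma \) acts as an automorphism,

\[ \sigma (\varphi _1 \varphi _2) = {\fancyscript{R}} \varphi _1 {\fancyscript{R}}^{-1} {\fancyscript{R}} \varphi _2 {\fancyscript{R}}^{-1} = \sigma (\varphi _1)\, \sigma (\varphi _2); \]
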
moreover, if \({\fancyscript{R}}\) is an involution then so is \(\sigma \). Note that the set of diffeomorphisms \(\varphi \) that possess \({\fancyscript{R}}\) as a reversing symmetry, i.e. that satisfy \(\sigma (\varphi ) = \varphi ^{-1}\), is the space of symmetric elements \(G_{\sigma }\) defined by the automorphism \(\sigma \) (cf. Sect. 2). Hence the result follows from Theorem 2.1. \(\square \)
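A finite-dimensional sketch of Proposition 3.1 (our own illustration: invertible matrices stand in for diffeomorphisms, and the involution \({\fancyscript{R}} = \mathrm{diag}(1,-1,1,-1)\) is an arbitrary choice). Matrices \(\exp (X)\) with \({\fancyscript{R}} X {\fancyscript{R}}^{-1} = -X\) have \({\fancyscript{R}}\) as a reversing symmetry; the symmetric product \(\varphi _1 \varphi _2^{-1} \varphi _1\) keeps this property, while the plain product \(\varphi _1\varphi _2\) generally does not, reflecting that \(G_\sigma \) is a symmetric space but not a group.

```python
import numpy as np

def expm(A, terms=20):
    """exp(A) by scaling and squaring around a truncated Taylor series."""
    norm = np.linalg.norm(A, 1)
    s = int(np.ceil(np.log2(norm))) + 1 if norm > 1 else 0
    B = A / 2.0**s
    E, term = np.eye(len(A)), np.eye(len(A))
    for k in range(1, terms):
        term = term @ B / k
        E = E + term
    for _ in range(s):        # undo the scaling: exp(A) = exp(A / 2^s)^(2^s)
        E = E @ E
    return E

R = np.diag([1.0, -1.0, 1.0, -1.0])   # hypothetical involutive reversing symmetry
R_inv = np.linalg.inv(R)

def sigma(M):
    """Conjugation by R: sigma(phi) = R phi R^{-1} (same formula on the Lie algebra)."""
    return R @ M @ R_inv

def in_G_sigma(M):
    """True if sigma(M) = M^{-1}, i.e. R is a reversing symmetry of M."""
    return np.allclose(sigma(M), np.linalg.inv(M))

rng = np.random.default_rng(1)

def random_element_of_G_sigma():
    X0 = rng.standard_normal((4, 4))
    X = 0.5 * (X0 - sigma(X0))        # sigma(X) = -X, hence sigma(exp X) = exp(-X)
    return expm(X)

phi1, phi2 = random_element_of_G_sigma(), random_element_of_G_sigma()
print(in_G_sigma(phi1), in_G_sigma(phi2))              # True True
print(in_G_sigma(phi1 @ np.linalg.inv(phi2) @ phi1))   # True: symmetric product
print(in_G_sigma(phi1 @ phi2))                         # generically False
```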

Proposition 3.2

The set of reversing symmetries acting on a diffeomorphism \(\varphi \) is a symmetric space with the composition \({\fancyscript{R}}_1 \cdot {\fancyscript{R}}_2 = {\fancyscript{R}}_1 {\fancyscript{R}}_2^{-1} {\fancyscript{R}}_1\).

Proof

If \(\fancyscript{R}\) is a reversing symmetry of \(\varphi \), i.e. \(\fancyscript{R} \varphi \fancyscript{R}^{-1} = \varphi ^{-1}\), then it is easily verified that \(\fancyscript{R}^{-1} \varphi ^{-1} \fancyscript{R} = \varphi \), hence \(\fancyscript{R}^{-1}\) is a reversing symmetry of \(\varphi ^{-1}\). Next, we observe that if \(\fancyscript{R}_1\) and \(\fancyscript{R}_2\) are two reversing symmetries of \(\varphi \) then so is also \(\fancyscript{R}_1 {\fancyscript{R}}_2^{-1} \fancyscript{R}_1\), since
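
\[ ({\fancyscript{R}}_1 {\fancyscript{R}}_2^{-1} {\fancyscript{R}}_1)\, \varphi \, ({\fancyscript{R}}_1 {\fancyscript{R}}_2^{-1} {\fancyscript{R}}_1)^{-1} = {\fancyscript{R}}_1 {\fancyscript{R}}_2^{-1} ({\fancyscript{R}}_1 \varphi {\fancyscript{R}}_1^{-1}) {\fancyscript{R}}_2 {\fancyscript{R}}_1^{-1} = {\fancyscript{R}}_1 ({\fancyscript{R}}_2^{-1} \varphi ^{-1} {\fancyscript{R}}_2) {\fancyscript{R}}_1^{-1} = {\fancyscript{R}}_1 \varphi {\fancyscript{R}}_1^{-1} = \varphi ^{-1}. \]
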
It follows that the composition \(\fancyscript{R}_1 \cdot \fancyscript{R}_2 = \fancyscript{R}_1 \fancyscript{R}_2^{-1} \fancyscript{R}_1\) is an internal operation on the set of reversing symmetries of a diffeomorphism \(\varphi \).

With the above multiplication, conditions (i)–(iii) of Definition 2.1 are easily verified. This proves the assertion in the case when \(\varphi \) has a discrete set of reversing symmetries. If such a set is continuous and \({\fancyscript{R}}\) is differentiable, condition (iv) follows from the properties of \(\mathrm {Diff}(M)\) and we again have a symmetric space of the type \(G_\sigma \), choosing \(\sigma ({\fancyscript{R}}) = \varphi ^{-1} {\fancyscript{R}}^{-1} \varphi ^{-1}\) (note that \(\varphi \) need not be an involution). \(\square \)
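A minimal planar illustration of Proposition 3.2 (our own choice of example, with matrices standing in for diffeomorphisms): every reflection reverses a rotation, and so does any nonzero multiple of a reflection, which is no longer an involution. The symmetric product \({\fancyscript{R}}_1 {\fancyscript{R}}_2^{-1} {\fancyscript{R}}_1\) of two reversing symmetries is again a reversing symmetry.

```python
import numpy as np

def rot(theta):
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def refl(alpha):
    """Reflection across the line at angle alpha (an involution)."""
    c, s = np.cos(2 * alpha), np.sin(2 * alpha)
    return np.array([[c, s], [s, -c]])

def is_reversing_symmetry(R, phi):
    """Check R phi R^{-1} = phi^{-1}."""
    return np.allclose(R @ phi @ np.linalg.inv(R), np.linalg.inv(phi))

phi = rot(0.7)                          # plays the role of the fixed diffeomorphism
R1 = refl(0.3)                          # involutive reversing symmetry
R2 = 2.0 * refl(1.1)                    # non-involutive reversing symmetry (R2 @ R2 = 4 I)
R_new = R1 @ np.linalg.inv(R2) @ R1     # symmetric-space product R1 . R2

print(is_reversing_symmetry(R1, phi), is_reversing_symmetry(R2, phi))  # True True
print(is_reversing_symmetry(R_new, phi))                              # True
```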

In what follows, we assume that \(\fancyscript{T}\in \mathrm {Diff}(M)\) is differentiable and involutory (\(\fancyscript{T}^{-1}=\fancyscript{T}\)), thus \(\sigma (\varphi ) = \fancyscript{T} \varphi \fancyscript{T}\).

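Acting on \(\varphi = \exp (tX)\), we have

\[ \sigma (\exp (tX)) = \fancyscript{T} \exp (tX) \fancyscript{T} = \exp \bigl(t\, \fancyscript{T}_{*} X\bigr), \qquad \text{i.e.} \quad \,\mathrm{d}\sigma (X) = \fancyscript{T}_{*} X, \]

where \(\fancyscript{T}_{*}\) is the tangent map of \(\fancyscript{T}\). The pullback is natural with respect to the Jacobi bracket,

\[ \fancyscript{T}_{*} [X,Y] = [\fancyscript{T}_{*} X, \fancyscript{T}_{*} Y] \]

for all vector fields \(X,Y\). Hence the map \(\,\mathrm{d}\sigma \) is an involutory algebra automorphism. Let \({\mathfrak {k}}_\sigma \) and \({\mathfrak {p}}_\sigma \) be the eigenspaces of \(\,\mathrm{d}\sigma \) in \({\mathfrak {g}}= \mathrm {diff}(M)\) corresponding to the eigenvalues \(+1\) and \(-1\), respectively. Then

\[ {\mathfrak {g}} = {\mathfrak {k}}_\sigma \oplus {\mathfrak {p}}_\sigma , \]

where

\[ {\mathfrak {k}}_\sigma = \{ X \in {\mathfrak {g}} : \fancyscript{T}_{*} X = X \} \]

is the Lie algebra of vector fields that have \(\fancyscript{T}\) as a symmetry and

\[ {\mathfrak {p}}_\sigma = \{ X \in {\mathfrak {g}} : \fancyscript{T}_{*} X = -X \} \]

is the Lie triple system of vector fields corresponding to maps that have \(\fancyscript{T}\) as a reversing symmetry. Thus, as is the case for matrices, every vector field \(X\) can be split into two parts,

\[ X = \tfrac{1}{2}\bigl(X + \fancyscript{T}_{*} X\bigr) + \tfrac{1}{2}\bigl(X - \fancyscript{T}_{*} X\bigr), \]

having \(\fancyscript{T}\) as a symmetry and reversing symmetry respectively.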
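For linear vector fields \(X(y) = Ay\) and a linear involution \(\fancyscript{T}\), the pushforward \(\fancyscript{T}_{*}X\) is the linear field with matrix \(\fancyscript{T} A \fancyscript{T}^{-1}\), so the splitting reduces to a matrix identity. The NumPy sketch below (with an arbitrarily chosen permutation as \(\fancyscript{T}\)) splits a random \(A\) into a part \(K\) having \(\fancyscript{T}\) as a symmetry and a part \(P\) having it as a reversing symmetry, and checks the closure properties: the symmetric parts are closed under commutators, commutators of two reversing parts are symmetric, and reversing parts are closed under double commutators (the Lie triple system property).

```python
import numpy as np

n = 4
T = np.eye(n)[[1, 0, 3, 2]]       # hypothetical involution: swaps coordinates 0<->1 and 2<->3
assert np.allclose(T @ T, np.eye(n))

def dsigma(A):
    """Matrix of the pushforward T_* X for the linear field X(y) = A y (T^{-1} = T)."""
    return T @ A @ T

def split(A):
    """Canonical splitting A = K + P with dsigma(K) = K and dsigma(P) = -P."""
    return 0.5 * (A + dsigma(A)), 0.5 * (A - dsigma(A))

def comm(X, Y):
    return X @ Y - Y @ X

def in_k(A):
    return np.allclose(dsigma(A), A)

def in_p(A):
    return np.allclose(dsigma(A), -A)

rng = np.random.default_rng(2)
A = rng.standard_normal((n, n))
K, P = split(A)
(K2, P2), (K3, P3) = split(rng.standard_normal((n, n))), split(rng.standard_normal((n, n)))

print(np.allclose(K + P, A), in_k(K), in_p(P))   # True True True
print(in_k(comm(K, K2)))                         # True: k is a Lie subalgebra
print(in_k(comm(P, P2)), in_p(comm(P, P2)))      # True False: [p, p] lands in k
print(in_p(comm(P, comm(P2, P3))))               # True: p is a Lie triple system
```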

In the context of ordinary differential equations, let us consider

\[ \dot{y} = F(y), \qquad y(0) = y_0 \in M. \tag{3.8} \]

Given an arbitrary involutive map \(\fancyscript{T}\), the vector field \(F\) can always be canonically split into two components, having \({\fancyscript{T}}\) as a symmetry and reversing symmetry respectively. In particular, if the second (respectively, the first) component vanishes, then the system (3.8) has \(\fancyscript{T}\) as a symmetry (respectively, a reversing symmetry).

3.5 Selfadjoint numerical schemes as a symmetric space

Let us consider the ODE (3.8), whose exact flow will be denoted as \(\varphi = \exp (tF)\). Backward error analysis for ODEs implies that a (consistent) numerical method for the integration of (3.8) can be interpreted as the sampling at \(t=h\) of the flow \(\varphi _h(t)\) of a vector field \(F_h\) (the so-called modified vector field) which is close to \(F\),

\[ \varphi _h(t) = \exp (tF_h), \qquad F_h = F + h^p E_p + h^{p+1} E_{p+1} + \cdots , \]

where \(p\) is the order of the method (note that, setting \(t=h\), the local truncation error is of order \(h^{p+1}\)). The series equalities for modified flows and vector fields must be understood in a formal sense, i.e. the infinite series may not converge, see [4]. Typically, a truncated \(\bar{k}\)-term series approximation has smaller and smaller error as \(\bar{k}\) increases but, after a certain optimal index \(\bar{k}_0\), the error increases. However, such a \(\bar{k}_0\) is larger than the range of terms that is of interest in our analysis.

Consider next the map \(\sigma \) on the set of flows depending on the parameter \(h\) defined as

\[ \sigma \bigl(\varphi _h(t)\bigr) = \varphi _{-h}(-t), \tag{3.9} \]

where \(\varphi _{-h}(t) = \exp (t F_{-h})\), with \(F_{-h} = F +(- h)^p E_p + (-h)^{p+1} E_{p+1} + \cdots \).

The map \(\sigma \) is involutive, since \(\sigma ^2 = \hbox {id}\), and it is easily verified by means of the BCH formula that \(\sigma (\varphi _{1,h} \varphi _{2,h}) = \sigma (\varphi _{1,h}) \sigma (\varphi _{2,h})\), hence \(\sigma \) is an automorphism. Consider next

\[ G_\sigma = \{ \varphi _h : \sigma (\varphi _h) = \varphi _h^{-1} \}. \]

Then \(\varphi _h \in G_\sigma \) if and only if \(\varphi _{-h}(-t) = \varphi _h^{-1}(t)\), namely the method \(\varphi _h\) is selfadjoint.

Proposition 3.3

The set of one-parameter, consistent, selfadjoint numerical schemes is a symmetric space in the sense of Theorem 2.1, generated by \(\sigma \) as in (3.9).

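Next, we perform the decomposition (2.4). We deduce from (3.9) that

\[ \,\mathrm{d}\sigma (F_h) = -F_{-h}, \]

hence,

\[ {\mathfrak {k}} = \{ F_h : F_{-h} = -F_h \} \]

is the subalgebra of vector fields that are odd in \(h\), and

\[ {\mathfrak {p}} = \{ F_h : F_{-h} = F_h \} \]

is the LTS of vector fields that possess only even powers of \(h\). Thus, if \(F_h\) is the modified vector field of a numerical integrator \(\varphi _h\), its canonical decomposition in \({\mathfrak {k}} \oplus {\mathfrak {p}}\) is

\[ F_h = \tfrac{1}{2}\bigl(F_h - F_{-h}\bigr) + \tfrac{1}{2}\bigl(F_h + F_{-h}\bigr), \]

the first term containing only odd powers of \(h\) and the second only even powers. Then, if the numerical method \(\varphi _h(h)\) is selfadjoint, \(F_h \in {\mathfrak {p}}\) contains only even powers of \(h\), so that the exponent \(hF_h\) of \(\varphi _h(h) = \exp (hF_h)\) contains only odd powers of \(h\) (in perfect agreement with classical results on selfadjoint methods [5]).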
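The even/odd characterization can be checked on the scalar test equation \(\dot{y} = \lambda y\) (a sketch of ours; the value of \(\lambda \) is arbitrary). A one-step method with stability function \(R(z)\) maps \(y\) to \(R(h\lambda )y = \exp (hF_h)y\), so its modified vector field is \(F_h = \log R(h\lambda )/h\). For the selfadjoint trapezoidal rule, \(F_h = F_{-h}\) up to rounding, while for explicit Euler \(F_h - F_{-h}\) is of order \(h\).

```python
import numpy as np

lam = -1.3 + 0.4j        # arbitrary eigenvalue of the test equation y' = lam * y

def modified_rate(R, h):
    """F_h = log(R(h*lam)) / h: the numerical map is y -> exp(h F_h) y (principal log, small h)."""
    return np.log(R(h * lam)) / h

def trapezoidal(z):      # selfadjoint: R(z) R(-z) = 1
    return (1 + z / 2) / (1 - z / 2)

def explicit_euler(z):   # not selfadjoint
    return 1 + z

h = 0.05
defect_trap = abs(modified_rate(trapezoidal, h) - modified_rate(trapezoidal, -h))
defect_euler = abs(modified_rate(explicit_euler, h) - modified_rate(explicit_euler, -h))
print(defect_trap)       # rounding level: F_h is even in h
print(defect_euler)      # about h*|lam|^2, since F_h = lam - h*lam^2/2 + O(h^2)
```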

3.6 Connections with the generalized Scovel projection for differential equations with reversing symmetries

In [23] it has been shown that it is possible to generalize the polar decomposition of matrices to Lie groups endowed with an involutive automorphism: every Lie group element \(z\) sufficiently close to the identity can be decomposed as \(z=xy\), where \(x \in G_\sigma \), the space of symmetric elements of \(\sigma \), and \(y\in G^\sigma \), the subgroup of \(G\) of elements fixed under \(\sigma \). Furthermore, setting \(z = \exp (tZ)\) and \(y = \exp (Y(t))\), one has that \(Y(t)\) is an odd function of \(t\) and that \(y\) is a best approximant to \(z\) in \(G^\sigma \) with respect to \(G^\sigma \)-right-invariant norms constructed by means of the Cartan–Killing form, provided that \(G\) is semisimple and that the decomposition \({\mathfrak {g}}= {\mathfrak {p}} \oplus {\mathfrak {k}}\) is a Cartan decomposition.
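In the simplest matrix instance (a standard example chosen for illustration), \(\sigma (A) = A^{-T}\) on \(\mathrm{GL}(n)\): \(G_\sigma \) consists of invertible symmetric matrices (symmetric positive definite near the identity), \(G^\sigma \) is the orthogonal group, and \(z = xy\) is the classical polar decomposition. The sketch computes it from the SVD \(z = U S V^T\) as \(x = U S U^T\), \(y = U V^T\).

```python
import numpy as np

def sigma(A):
    """Involutive automorphism of GL(n): sigma(A) = A^{-T}."""
    return np.linalg.inv(A).T

def polar(z):
    """z = x @ y with x symmetric positive definite (sigma(x) = x^{-1})
    and y orthogonal (sigma(y) = y), obtained from the SVD z = U diag(s) V^T."""
    U, s, Vt = np.linalg.svd(z)
    x = U @ np.diag(s) @ U.T
    y = U @ Vt
    return x, y

rng = np.random.default_rng(3)
z = np.eye(4) + 0.1 * rng.standard_normal((4, 4))   # an element near the identity
x, y = polar(z)
print(np.allclose(x @ y, z))                         # True
print(np.allclose(sigma(x), np.linalg.inv(x)))       # True: x lies in G_sigma
print(np.allclose(sigma(y), y))                      # True: y lies in G^sigma
```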

Assume that \(\varphi \), the exact flow of the differential equation (3.8), has \({\fancyscript{R}}\) as a reversing symmetry (i.e. \(F \in {\mathfrak {p}}_\sigma \), where \(\sigma (\varphi ) = {\fancyscript{R}} \varphi {\fancyscript{R}}^{-1}\)), while its approximation \(\varphi _{h}\) does not. We perform the polar decomposition

\[ \varphi _{h} = \psi _{h}\, \chi _{h}, \qquad \psi _{h} \in G_\sigma , \quad \chi _{h} \in G^\sigma , \]
i.e. \(\psi _h\) has \(\fancyscript{R}\) as a reversing symmetry, while \(\chi _{h}\) has \({\fancyscript{R}}\) as a symmetry. Since the original flow has \(\fancyscript{R}\) as a reversing symmetry (and not as a symmetry), \(\chi _h\) is the factor that we wish to eliminate. We have \(\psi _{h}^{2} = \varphi _{h} \sigma (\varphi _{h})^{-1}\). Hence the method obtained by composing \(\varphi _{h}\) with \(\sigma (\varphi _{h})^{-1}\) has the reversing symmetry \({\fancyscript{R}}\) every other step. To obtain \(\psi _h\) we need to extract the square root of the flow \(\varphi _{h} \sigma (\varphi _{h})^{-1}\). Now, if \(\varphi (t)\) is a flow, then its square root is simply \(\varphi (t/2)\). However, if \(\varphi _h(t)\) is the flow of a consistent numerical method (\(p \ge 1\)), namely the numerical integrator corresponds to \(\varphi _h(h)\), it is not possible to evaluate the square root \(\varphi _h(h/2)\) by simple means, as it is not the same as the numerical method with half the stepsize, \(\varphi _{h/2}(h/2)\). The latter, however, offers an approximation to the square root: note that

\[ \varphi _{h/2}(h/2) = \exp \Bigl( \tfrac{h}{2} F + \bigl(\tfrac{h}{2}\bigr)^{p+1} E_p + \cdots \Bigr), \]

an expansion which, compared with \(\varphi _h(h)\), reveals that the error in approximating the square root with the numerical method with half the stepsize is of the order of

\[ \tfrac{1}{2}\bigl(2^{-p}-1\bigr) h^{p+1} E_p = {\fancyscript{O}}\!\left( h^{p+1}\right) , \]

a term that is subsumed in the local truncation error. The choice

\[ \tilde{\psi }_{h} = \varphi _{h/2}(h/2)\, \sigma \bigl(\varphi _{h/2}(h/2)\bigr)^{-1} \]

as an approximation to \(\psi _h\) (we stress that each flow is now evaluated at \(t=h/2\)) yields a map that has the reversing symmetry \({\fancyscript{R}}\) at each step by design, since

\[ \sigma (\tilde{\psi }_{h}) = \sigma \bigl(\varphi _{h/2}(h/2)\bigr)\, \varphi _{h/2}(h/2)^{-1} = \tilde{\psi }_{h}^{-1}. \]

Note that \(\tilde{\psi }_{h} = \varphi _{h/2} \sigma (\varphi _{h/2}^{-1})\), where \(\varphi _{h/2}^{-1}(t) = \varphi _{-h/2}^{*}(-t)\) is the inverse (or adjoint) method of \(\varphi _{h/2}\). If \(\sigma \) is given by (3.9), then \(\sigma (\varphi _{h/2}^{-1}) = \varphi _{h/2}^*(h/2)\) and this algorithm is precisely the Scovel projection [29] originally proposed to generate selfadjoint numerical schemes from an arbitrary integrator, and then generalized to the context of reversing symmetries [19].

Proposition 3.4

The generalized Scovel projection is equivalent to choosing the \(G_{\sigma }\)-factor in the polar decomposition of a flow \(\varphi _h\) under the involutive automorphism \(\sigma (\varphi ) = {\fancyscript{R}} \varphi {\fancyscript{R}}^{-1}\), whereby square roots of flows are approximated by numerical methods with half the stepsize.
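A linear sketch of the generalized Scovel projection (our own example): for the harmonic oscillator \(\dot{y} = Jy\), the reflection \({\fancyscript{R}} = \mathrm{diag}(1,-1)\) satisfies \({\fancyscript{R}} J {\fancyscript{R}}^{-1} = -J\) and is therefore a reversing symmetry of the exact flow. Explicit Euler does not respect it, but \(\tilde{\psi }_h = \varphi _{h/2}\, \sigma (\varphi _{h/2})^{-1}\), built from explicit Euler half steps, does.

```python
import numpy as np

h = 0.1
I = np.eye(2)
J = np.array([[0.0, 1.0], [-1.0, 0.0]])   # harmonic oscillator y' = J y
R = np.diag([1.0, -1.0])                   # reversing symmetry: R J R^{-1} = -J

def sigma(M):
    return R @ M @ np.linalg.inv(R)

def has_reversing_symmetry(M):
    """True if R M R^{-1} = M^{-1}."""
    return np.allclose(sigma(M), np.linalg.inv(M))

phi_half = I + (h / 2) * J                        # explicit Euler with step h/2
psi = phi_half @ np.linalg.inv(sigma(phi_half))   # generalized Scovel projection

print(has_reversing_symmetry(I + h * J))   # False: explicit Euler breaks the reversing symmetry
print(has_reversing_symmetry(psi))         # True
print(np.allclose(psi, (I + h / 2 * J) @ np.linalg.inv(I - h / 2 * J)))   # True: Cayley map
```

In this example, because \({\fancyscript{R}} J {\fancyscript{R}}^{-1} = -J\), the factor \(\sigma (\varphi _{h/2})^{-1} = (I - \tfrac{h}{2}J)^{-1}\) is exactly a half step of implicit Euler, the adjoint of explicit Euler, so \(\tilde{\psi }_h\) reduces to the Cayley map \((I + \tfrac{h}{2}J)(I - \tfrac{h}{2}J)^{-1}\), in line with the original selfadjoint Scovel construction.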

3.7 Connection with the Thue–Morse sequence and differential equations with symmetries

Another algorithm that can be related to the generalized polar decomposition of flows is the application of the Thue–Morse sequence to improve the preservation of symmetries by means of a numerical integrator [10]. Given an involutive automorphism \(\sigma \) and a numerical method \(\varphi _h\) in a group \(G\) of numerical integrators, Iserles et al. [10] construct the sequence of methods
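
\[ \varphi ^{[0]} = \varphi _h, \qquad \varphi ^{[k+1]} = \varphi ^{[k]}\, \sigma \bigl(\varphi ^{[k]}\bigr), \quad k = 0, 1, 2, \ldots \tag{3.12} \]
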
Since \(\varphi _h = \sigma ^0 (\varphi _h)\), it is easily observed that the \(k\)th method corresponds to composing \(\sigma ^0 (\varphi _h)\) and \(\sigma ^1 (\varphi _h)\) according to the \(k\)th Thue–Morse sequence \(01101001\ldots \), as displayed below in Table 1 (see [20, 32]). Iserles et al. [10] showed, by a recursive use of the BCH formula, that each iteration improves by one order the preservation of the symmetry \({\fancyscript{S}}\) by a consistent numerical method, where \({\fancyscript{S}}\) is the involution such that \(\sigma (\varphi ) = {\fancyscript{S}} \varphi {\fancyscript{S}}^{-1}\). The main argument of the proof is that if the method \(\varphi ^{[k]}\) has a given symmetry error, the symmetry error of \(\sigma (\varphi ^{[k]})\) has the opposite sign. Hence the two leading symmetry errors cancel and \(\varphi ^{[k+1]}\) has a symmetry error one order higher. In other words, if the method \(\varphi _h\) preserves \({\fancyscript{S}}\) to order \(p\), then \(\varphi ^{[k]}\) preserves the symmetry \({\fancyscript{S}}\) to order \(p+k\) every \(2^k\) steps. As \(\sigma \) changes the sign of the symmetry error only, if a method \(\varphi _h\) has a given approximation order \(p\), so does \(\sigma (\varphi _h)\). Thus \(\varphi ^{[0]} = \sigma (\varphi _h)\) can be used as initial condition in (3.12), obtaining the conjugate Thue–Morse sequence \(10010110\ldots \). By a similar argument, also

generate sequences with increasing order of preservation of symmetry.
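The following NumPy sketch (our own toy setting, not the experiment of [10]) applies the construction to the linear system \(\dot{y} = (A+B)y\), where an involutive permutation \({\fancyscript{S}}\) with \({\fancyscript{S}} A {\fancyscript{S}} = B\) makes \({\fancyscript{S}}\) a symmetry. The basic method ‘0’ is the Lie splitting step \(\mathrm{e}^{hB}\mathrm{e}^{hA}\), and ‘1’ is \(\sigma (\varphi ) = {\fancyscript{S}}\varphi {\fancyscript{S}}\), which swaps the roles of \(A\) and \(B\), much like the two directions in an alternating-direction scheme. Ordering the steps by the Thue–Morse word should give a much smaller symmetry error than repeating ‘0’.

```python
import numpy as np

def expm_series(A, terms=20):
    """exp(A) by a truncated Taylor series (fine for the small ||hA|| used here)."""
    E, term = np.eye(len(A)), np.eye(len(A))
    for k in range(1, terms):
        term = term @ A / k
        E = E + term
    return E

def thue_morse(k):
    """k-th Thue-Morse word: start from [0], append the complement k times."""
    word = [0]
    for _ in range(k):
        word = word + [1 - c for c in word]
    return word

rng = np.random.default_rng(4)
A = rng.standard_normal((3, 3))
S = np.eye(3)[[2, 1, 0]]          # involution swapping coordinates 0 and 2
B = S @ A @ S                     # S is a symmetry of y' = (A + B) y

h = 0.01
phi = expm_series(h * B) @ expm_series(h * A)   # step "0": flow of A, then flow of B
sigma_phi = S @ phi @ S                         # step "1": flow of B, then flow of A

def compose(word):
    """Matrix of the map obtained by applying the steps in word order."""
    M = np.eye(3)
    for c in word:
        M = (phi if c == 0 else sigma_phi) @ M
    return M

def symmetry_error(M):
    return np.linalg.norm(S @ M @ S - M)

for k in range(4):
    n_steps = 2 ** k
    print(k, symmetry_error(compose([0] * n_steps)), symmetry_error(compose(thue_morse(k))))
```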

Example 3.1

As an illustration of the technique, consider the PDE \(u_t = u_x+u_y + f(u)\), where \(f\) is analytic in \(u\). Let the PDE be defined over the domain \([-L,L]\times [-L,L]\) with periodic boundary conditions and initial value \(u_0\). If \(u_0\) is symmetric on the domain, i.e. \(u_0(y,x) = u_0(x,y)\), so is the solution for all \(t\). The symmetry is \(\sigma (u(t, x,y)) = u(t, y,x)\). Now, assume that we solve the equation by the method of alternating directions, where the method \(\varphi \) corresponds to solving with respect to the \(x\) variable keeping \(y\) fixed, and \(\sigma (\varphi )\) to solving with respect to the \(y\) variable keeping \(x\) fixed. The symmetry will typically be broken at the first step. Nevertheless, we can obtain a much more symmetric solution if the sequence of directions follows the Thue–Morse sequence (iteration \(\varphi ^{[k]}\)) or the equivalent sequence given by iteration \(\chi ^{[k]}\). This example is illustrated in Figs. 1 and 2 for a basic method using the forward Euler method and Heun’s method, respectively. The time integration interval is \([0, 10]\). The differential operators \(\partial _x, \partial _y\) are implemented using second order central differences. The experiments are performed with constant stepsize \(h=10^{-2}\) and uniform spatial discretization \(\Delta x = \Delta y = 10^{-1}\).

“Modes” of the symmetry error \(e_k=\max _{x_i,y_j} |u_k(x_i,y_j) - u_k(y_j,x_i)|\) versus time \(t_k=kh\) for the method of alternating directions for the PDE \(u_t = u_x+u_y + 2\times 10^{-3} u^2\), on a square (\(L=1\)), with initial condition \(u_0 = e^{-x^2-y^2}\), isotropic mesh and periodic boundary conditions. See Example 3.1 for details. The integration is performed using the forward Euler method as the basic method. The order of the overall approximation is the same as that of the original method (first order); the only difference is the ordering of the directions, chosen according to the Thue–Morse sequence. The different symbols correspond to different sampling rates: every step, every second step, every fourth, every eighth, .... From top left to bottom right: sequences ‘0’, ‘01’, ‘0110’, ‘01101001’, etc.

Global error and symmetry error \(e_k =\max _{x_i,y_j} |u_k(x_i, y_j) - u_k(y_j, x_i)|\) versus step size \(h\) for the method of alternating directions for the PDE \(u_t = u_x+u_y +2\times 10^{-3} u^2\), as in Example 3.1. The basic method \(\varphi \) (method “0”) is now a symmetric composition with the Heun method: half step in the \(x\) direction, full step in the \(y\) direction, half step in the \(x\) direction, all of them performed with the Heun method (improved Euler). It is immediately observed that the symmetry error is improved by choosing the directions according to the Thue–Morse sequence. The order of the method (global error) remains unchanged (order two).

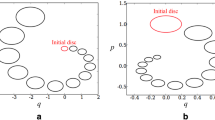

Example 3.2

A generic GR method for finding the eigenvalues of a square matrix \(A\) (for simplicity, assume it has distinct real eigenvalues) consists of the iteration of two simple steps: compute a matrix factorization \(A_k=G_k R_k\) and then construct the update \(A_{k+1}=R_kG_k\). The matrix \(A_{k+1}\) is similar to \(A_k\), as \(A_{k+1} = R_k A_k R_k^{-1}\). The iterations are repeated until the matrix \(A_k\) converges to an upper triangular matrix, so that the eigenvalues can be read off the diagonal. The most famous method in this class is the QR method for eigenvalues, but many other variants exist, see [33] for an extended discussion. These methods can be related to isospectral flows, i.e. matrix differential equations that preserve the eigenvalues, and can be interpreted as discrete-time samplings of the matrix flow. Disregarding stability issues, consider a GR method using a simple LU factorization, \(A_k=L_k U_k\), \(A_{k+1}=U_k L_k\). One soon observes that, given a symmetric initial matrix \(A_0\), the subsequent iterations produce non-symmetric matrices. However, \(A_{k+1}\) can be thought of as a step of a consistent numerical method applied to an isospectral differential equation, therefore we might decrease the symmetry error using the Thue–Morse sequence. The ‘1’ step is the application of the factorization to \(A_k^T\), rather than to \(A_k\), to produce \(A_{k+1}^T\). Typically the ‘01’ sequence has the most significant effect. The other sequences ‘0110’, ‘01101001’, ..., might display a more erratic behaviour, as the Thue–Morse analysis is valid in the asymptotic limit \(h\rightarrow 0\), whereas the step size of the discrete sampling might be too large for the analysis to be valid. Figure 3 shows the symmetry error of the Thue–Morse technique applied to two different symmetric, positive definite, initial conditions \(A_0\) with the same set of eigenvalues.
We have used \(A_0 = Q \Lambda Q^T\), where \(Q\) is obtained as the orthogonal factor in the QR factorization of a random matrix of dimension five and \(\Lambda =\mathrm {diag}([1,2,3,4,5])\). The factorization \(A_k=L_k U_k\) is computed using the Matlab lu command.

Symmetry error \(e_k=\max _{i,j}| (A_k)_{i,j} - (A_k)_{j,i}|\) for a GR method for eigenvalues. The basic method is \(A_k=L_k U_k\), \(A_{k+1}=U_k L_k\) (method “0”). The method “1” consists of applying the same algorithm to \(A_k^T\) rather than \(A_k\). The symmetry error is reduced by the Thue–Morse sequence because the basic method can be interpreted as a discrete-time flow of a matrix differential equation. However, since the discrete time-step might be too large with respect to the asymptotic regime in which the Thue–Morse analysis is valid, the longer sequences might display a more erratic behaviour (right plot)
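The following NumPy sketch mirrors the set-up of Example 3.2, with two deviations we flag as assumptions: it uses an unpivoted Doolittle LU factorization (Matlab's lu uses partial pivoting), and the random orthogonal factor comes from NumPy, so the numbers will not match Fig. 3. Unpivoted LU is safe here because every iterate is diagonally similar to a symmetric positive definite matrix. Step ‘0’ is \(A \mapsto UL\); step ‘1’ applies the same step to \(A^T\) and transposes back; the steps are ordered according to the Thue–Morse words.

```python
import numpy as np

def lu_nopivot(A):
    """Doolittle factorization A = L U without pivoting (L unit lower triangular)."""
    n = len(A)
    L, U = np.eye(n), np.array(A, dtype=float)
    for j in range(n - 1):
        for i in range(j + 1, n):
            L[i, j] = U[i, j] / U[j, j]
            U[i, j:] -= L[i, j] * U[j, j:]
    return L, np.triu(U)

def step0(A):
    """Basic GR step '0': factor A = L U, return U L = L^{-1} A L (similar to A)."""
    L, U = lu_nopivot(A)
    return U @ L

def step1(A):
    """Step '1' = sigma(step0): factor A^T instead of A, then transpose back."""
    return step0(A.T).T

def thue_morse(k):
    word = [0]
    for _ in range(k):
        word = word + [1 - c for c in word]
    return word

def run(word, A):
    for c in word:
        A = step0(A) if c == 0 else step1(A)
    return A

def symmetry_error(A):
    return np.max(np.abs(A - A.T))

rng = np.random.default_rng(5)
Q, _ = np.linalg.qr(rng.standard_normal((5, 5)))
A0 = Q @ np.diag([1.0, 2.0, 3.0, 4.0, 5.0]) @ Q.T    # symmetric positive definite

for k in range(4):
    word = thue_morse(k)
    print(word, symmetry_error(run([0] * len(word), A0)), symmetry_error(run(word, A0)))
```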

3.8 Connections with a Yoshida-type composition and differential equations with symmetries

In a famous paper that appeared in 1990 [34] Yoshida showed how to construct high order time-symmetric integrators starting from lower order time-symmetric symplectic ones. Yoshida showed that, if \(\varphi \) is a selfadjoint numerical integrator of order \(2p\), then
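
\[ \varphi _{\alpha h}\, \varphi _{\beta h}\, \varphi _{\alpha h} \]

is a selfadjoint numerical method of order \(2p+2\) provided that the coefficients \(\alpha \) and \(\beta \) satisfy the condition

\[ 2\alpha + \beta = 1, \qquad 2\alpha ^{2p+1} + \beta ^{2p+1} = 0, \]

whose only real solution is

\[ \alpha = \frac{1}{2-2^{1/(2p+1)}}, \qquad \beta = 1 - 2\alpha = -\frac{2^{1/(2p+1)}}{2-2^{1/(2p+1)}}. \tag{3.14} \]
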
In the formalism of this paper, time-symmetric methods correspond to \(G_\sigma \)-type elements with \(\sigma \) as in (3.9) and it is clearly seen that the Yoshida technique can be used in general to improve the order of approximation of \(G_{\sigma }\)-type elements.
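The Yoshida coefficients are explicit: writing \(c = 2^{1/(2p+1)}\), the real solution is \(\alpha = 1/(2-c)\), \(\beta = -c\alpha \), so \(\beta \) is negative and larger than \(\alpha \) in absolute value for every \(p \ge 1\). A short check of this arithmetic:

```python
def yoshida_coefficients(p):
    """Real solution of 2*alpha + beta = 1 and 2*alpha**(2p+1) + beta**(2p+1) = 0."""
    c = 2.0 ** (1.0 / (2 * p + 1))
    alpha = 1.0 / (2.0 - c)
    beta = 1.0 - 2.0 * alpha          # equals -c * alpha, always negative
    return alpha, beta

for p in range(1, 5):
    alpha, beta = yoshida_coefficients(p)
    residual = 2 * alpha ** (2 * p + 1) + beta ** (2 * p + 1)
    print(p, round(alpha, 4), round(beta, 4), residual)
# p = 1 gives alpha ≈ 1.3512 and beta ≈ -1.7024
```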

A similar procedure can be applied to improve the order of the retention of symmetries and not just reversing symmetries. To be more specific, let \({\fancyscript{S}}\) be a symmetry of the given differential equation, namely \({\fancyscript{S}}_{*} F = F {\fancyscript{S}}\), with \({\fancyscript{S}} \not = \hbox {id}\), \({\fancyscript{S}}^{-1} = {\fancyscript{S}}\), \({\fancyscript{S}}_{*}\) denoting the pullback of \({\fancyscript{S}}\) to \({\mathfrak {g}}= \mathrm {Vect}(M)\) (see Sect. 3.4). Here, the involutive automorphism is given by
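
\[ \sigma (\varphi ) = {\fancyscript{S}} \varphi {\fancyscript{S}}, \]

so that

\[ \,\mathrm{d}\sigma (X) = {\fancyscript{S}}_{*} X \quad \text{and} \quad F \in {\mathfrak {k}}. \]
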
Proposition 3.5

Assume that \(\varphi _{h}(t)\) is the flow of a self-adjoint numerical method of order \(2p\), \( \varphi _{h}(t) = \exp (tF_{h})\), \( F_{h} = F + h^{2p}E_{2p} + h^{2p+2}E_{2p+2} + \cdots \), where \(E_{j} = P_{j} + K_{j}\), \(P_{j} \in {\mathfrak {p}}, K_{j} \in {\mathfrak {k}}\) and \(F\) has \(\fancyscript{S}\) as a symmetry. The composition
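
\[ \varphi ^{[1]}_{h}(t) = \varphi _{ah}(at)\, \sigma \bigl(\varphi _{bh}(bt)\bigr)\, \varphi _{ah}(at), \tag{3.15} \]

with

\[ a = \frac{1}{2+2^{1/(2p+1)}}, \qquad b = 1-2a, \tag{3.16} \]

retains the symmetry \({\fancyscript{S}}\) to order \(2p+2\) at \(t=h\).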

Proof

Write (3.15) as

\[ \varphi ^{[1]}_{h}(t) = \exp \bigl(at F_{ah}\bigr) \exp \bigl(bt\, \,\mathrm{d}\sigma (F_{bh})\bigr) \exp \bigl(at F_{ah}\bigr). \]

Application of the symmetric BCH formula, together with the fact that \(\,\mathrm{d}\sigma \) acts by changing the signs on the \({\mathfrak {p}}\)-components only, allows us to write the relation (3.15) as

\[ \varphi ^{[1]}_{h}(t) = \exp \Bigl( (2a+b)tF + th^{2p}\bigl[(2a^{2p+1}+b^{2p+1})K_{2p} + (2a^{2p+1}-b^{2p+1})P_{2p}\bigr] + {\fancyscript{O}}\!\left( th^{2p+2}\right) + {\fancyscript{O}}\!\left( t^3 h^{2p}\right) \Bigr), \]

where the \({\fancyscript{O}}\!\left( th^{2p+2}\right) \) comes from the \(E_{2p+2}\) term and the \({\fancyscript{O}}\!\left( t^3 h^{2p}\right) \) from the commutation of the \(F\) and \(E_{2p}\) terms (recall that no first order commutator appears in the symmetric BCH formula). The numerical method is obtained by letting \(t=h\). We require \(2a+b=1\) for consistency, and \( 2a^{2p+1}-b^{2p+1}=0\) to annihilate the coefficient of \(P_{2p}\), the lowest order \({\mathfrak {p}}\)-term. The resulting method \(\varphi ^{[1]}_{h}(t)\) retains the symmetry \({\fancyscript{S}}\) to order \(2p+2\), as the leading symmetry error is an \({\fancyscript{O}}\!\left( h^{2p+3}\right) \) term. \(\square \)

The procedure described in Proposition 3.5 allows us to gain two extra degrees in the retention of symmetry per iteration, provided that the underlying method is selfadjoint, compared with the Thue–Morse sequence of [10], which yields one extra degree in symmetry per iteration but does not require selfadjointness. As for the Yoshida technique, the composition (3.15) can be iterated \(k\) times to obtain a time-symmetric method of order \(2p\) that retains symmetry to order \(2(p+k)\). The disadvantage of (3.15) with respect to the classical Yoshida approach is that the order of the method is only retained (it does not increase by two units, as the order of the symmetry error does). The main advantage is that all the steps are positive, in particular the second step, \(b\), whereas \(\beta \) in (3.14) is negative for every \(p\ge 1\) and larger than \(\alpha \) in absolute value, requiring step-size restrictions. In the limit \(p\rightarrow \infty \), \(a,b\rightarrow 1/3\), i.e. the step lengths become equal.
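A small numerical check of Proposition 3.5 under assumptions of ours: the linear system \(\dot{y} = (A+B)y\) with \(B = {\fancyscript{S}} A {\fancyscript{S}}\) for an involutive permutation \({\fancyscript{S}}\), so that \({\fancyscript{S}}\) is a symmetry, and Strang splitting \(\mathrm{e}^{hA/2}\mathrm{e}^{hB}\mathrm{e}^{hA/2}\) as the selfadjoint basic method of order two (\(p=1\)). Halving \(h\) should divide the local symmetry error by about \(2^3\) for the basic method and by about \(2^5\) for the composition \(\varphi _{ah}\,\sigma (\varphi _{bh})\,\varphi _{ah}\) with \(a = 1/(2+2^{1/3})\) and \(b = 1-2a > 0\).

```python
import numpy as np

def expm_series(A, terms=25):
    """exp(A) by a truncated Taylor series (fine for the small ||hA|| used here)."""
    E, term = np.eye(len(A)), np.eye(len(A))
    for k in range(1, terms):
        term = term @ A / k
        E = E + term
    return E

rng = np.random.default_rng(6)
A = 0.5 * rng.standard_normal((3, 3))
S = np.eye(3)[[2, 1, 0]]        # involutive symmetry: S (A + B) S = A + B
B = S @ A @ S

def sigma(M):
    return S @ M @ S

def strang(h):
    """Selfadjoint basic method of order 2p = 2: exp(hA/2) exp(hB) exp(hA/2)."""
    return expm_series(h * A / 2) @ expm_series(h * B) @ expm_series(h * A / 2)

p = 1
c = 2.0 ** (1.0 / (2 * p + 1))
a = 1.0 / (2.0 + c)             # 2a + b = 1 and 2a^(2p+1) = b^(2p+1)
b = 1.0 - 2.0 * a               # positive, unlike Yoshida's beta

def symmetrized(h):
    """Composition phi_{ah} sigma(phi_{bh}) phi_{ah} of Proposition 3.5."""
    return strang(a * h) @ sigma(strang(b * h)) @ strang(a * h)

def symmetry_error(M):
    return np.linalg.norm(sigma(M) - M)

h = 0.05
rate_basic = symmetry_error(strang(h)) / symmetry_error(strang(h / 2))
rate_sym = symmetry_error(symmetrized(h)) / symmetry_error(symmetrized(h / 2))
print(a, b)                     # a ≈ 0.307, b ≈ 0.386
print(rate_basic, rate_sym)     # roughly 8 and 32
```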

Example 3.3

Consider the PDE \(u_t = \nabla ^2 u - u (u-1)^2\), defined on the square \([-1,1]\times [-1,1]\), with a Gaussian initial condition, \(u(0) = \mathrm {e}^{-9x^2-9y^2}\), and periodic boundary conditions. The problem is semi-discretized on a coarse uniform and isotropic mesh with spacing \(\delta =0.1\) and is reduced to the set of ODEs \( \dot{u} = (D_{xx} + D_{yy}) u + f(u)=F(u)\), where \(D_{xx}=I \otimes D_2\) and \(D_{yy}= D_2 \otimes I\), \(D_2\) being the circulant matrix of the standard second order divided differences, with stencil \(\frac{1}{\delta ^2}[1,-2,1]\). For the time integration, we consider the splitting \(F = F_1 + F_2\), where \(F_1 = D_{xx} + \frac{1}{2} f\) and \(F_2 = D_{yy} + \frac{1}{2}f\) and the second order self-adjoint method:Footnote 4

We display the global error and the symmetry error for time-integration step sizes \(h =3 h_0 \times [1, \frac{1}{2}, \ldots ,\frac{ 1}{64}]\), where the parameter \(h_0\) is chosen to be the largest step size for which the basic method \(\varphi \) in (3.17) is stable. The factor \(3\) comes from the fact that both the Yoshida composition and our symmetrization method (3.15) require three sub-steps of the basic method. Hence one step of either composition can be expected to cost the same as three steps of the basic method (3.17).

As we can see in Fig. 4, the Yoshida technique, with \(\alpha = 1/(2-2^{1/3})\) and \(\beta =1-2\alpha \), increases the order of accuracy of the method from two to four, and likewise the order of the symmetry error. However, since \(\alpha >1/2\), the \(\beta \)-step is negative and, as a consequence, the method fails to converge for the two largest values of the step size. Conversely, our symmetrization method (3.15) has \(a = 1/(2+2^{1/3})\) and \(b=1-2a\), with \(b\) positive, and the method converges for all the time-integration steps. As expected, the order is not improved, but the symmetry order is improved by two units. The symmetry \(\sigma \) is applied by transposing the matrix representation \(u_k \approx u(t_k, x_i, y_j)\) of the solution at \((x_i, y_j) = (i\delta , j\delta )\) before and after the intermediate \(b\)-step. Otherwise, the two implementations are identical.

This result holds in the non-stiff regime, i.e. when the Taylor expansion is a good approximation to the exact solution. Such problems, with coarse or even fixed space discretization, arise, for instance, in image processing applications such as image inpainting [2]. For problems requiring finer space discretizations, the PDE becomes stiff and other methods might be more appropriate.

Error versus step size for the Yoshida technique (left) and the symmetrization technique (3.15) (right) for the basic self-adjoint method (3.17) applied to a semidiscretization of \(u_t = \nabla ^2 u - u (u-1)^2\). The order with the Yoshida technique increases by two units, but the method does not converge for the two largest values of the step size. Our symmetrization technique improves by two units the order of retention of symmetry (but not global error). Due to the positive step-sizes, it converges also for the two largest values of the step size. See text for details.
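The semi-discretization of Example 3.3 can be reproduced in a few lines of NumPy (a sketch under our own conventions: row-major flattening of the grid array, so which Kronecker factor is called \(x\) is a labeling choice). It checks that the symmetry \(\sigma \), implemented as transposition of the grid array, is an involution that exchanges \(D_{xx}\) and \(D_{yy}\), hence swaps \(F_1\) and \(F_2\) and leaves \(F\) and the Gaussian initial datum invariant.

```python
import numpy as np

delta = 0.1
n = int(round(2 / delta))          # periodic grid on [-1, 1): 20 points per direction
x = -1 + delta * np.arange(n)

# Circulant second-difference matrix with stencil [1, -2, 1] / delta^2
D2 = (np.roll(np.eye(n), 1, axis=1) + np.roll(np.eye(n), -1, axis=1) - 2 * np.eye(n)) / delta**2
Dxx = np.kron(np.eye(n), D2)       # acts along the second grid index (row-major flattening assumed)
Dyy = np.kron(D2, np.eye(n))       # acts along the first grid index

def f(u):
    return -u * (u - 1) ** 2

def F(u):
    return (Dxx + Dyy) @ u + f(u)

def F1(u):
    return Dxx @ u + 0.5 * f(u)

def F2(u):
    return Dyy @ u + 0.5 * f(u)

def transpose_grid(u):
    """The symmetry sigma: transpose the n-by-n grid array behind the flattened vector u."""
    return u.reshape(n, n).T.ravel()

# Permutation matrix of transpose_grid (the 'commutation matrix'); it is an involution
P = np.eye(n * n)[np.arange(n * n).reshape(n, n).T.ravel()]

X, Y = np.meshgrid(x, x, indexing="ij")
u0 = np.exp(-9 * X**2 - 9 * Y**2).ravel()   # Gaussian initial condition
```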

Example 3.4

We consider a continuous equivalent of the GR eigenvalue problem described in Example 3.2. Let the matrix \(A(t)\) be the solution of the differential equation \(\frac{\mathrm {d}}{\mathrm {d} t} A = [B(A),A]\), with initial condition \(A_0\), where \(B(A) = A_\mathrm {L} -A_\mathrm {U}\), \(A = A_\mathrm {L}+ A_\mathrm {D} +A_\mathrm {U}\) being the decomposition of a matrix into its strictly lower triangular, diagonal and strictly upper triangular parts. Introducing a new matrix \(L\), where \(\frac{\mathrm {d}}{\mathrm {d} t} L = B(L A_0 L^{-1}) L=F(L)\), \(L(0) = I\), it can be easily verified that \(A = L A_0 L^{-1}\) is a solution of the original problem and that the similarity transformation that defines the change of variables is isospectral. Thus isospectrality can be preserved by solving for \(L\) and using similarity transformations. If the matrix \(A\) is symmetric, then \(B(A)\) is skew-symmetric, \(L\) is an orthogonal matrix and \(A\) remains symmetric for all time. When numerically solving for \(L\), orthogonality is lost whenever we use a simple method like forward Euler and, in general, any method that does not preserve orthogonality. The spectrum is still retained as long as we use the similarity transformation to update \(A\) [35]. Consider the following basic method

which is selfadjoint of order two. The symmetry is given by \(\sigma (A) = A^T\). Again we apply both the Yoshida method and the method of Prop. 3.5. The results, shown in Fig. 5, are very similar to those presented in Example 3.3: (3.15) behaves better for larger step sizes, whereas the Yoshida technique fails to provide convergent solutions of the implicit iterations. Again, the symmetry is applied by transposing the matrix \(A\) before and after the \(b\)-step; otherwise, the implementations are identical. The step sizes are \(h = [1, \frac{1}{2}, \ldots ,\frac{ 1}{64}]\) and the initial condition is the same for both experiments and is computed as in Example 3.2.

Global and symmetry error versus step size \(h\) for the Yoshida technique (left) and the symmetrization technique (3.15) (right) for the basic self-adjoint method (3.18) when applied to the isospectral problem described in Example 3.4 solved in the interval \([0,T]\), \(T=10\). See text for details
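The change of variables of Example 3.4 can be sketched as follows, assuming forward Euler for the \(L\)-equation (a deliberately simple stand-in, not the basic method (3.18) used in the experiment): the spectrum of \(A = LA_0L^{-1}\) is retained, while symmetry drifts as \(L\) loses orthogonality. The initial condition is built as in Example 3.2, with a NumPy random matrix of our choosing.

```python
import numpy as np

def B_of(A):
    """B(A) = A_L - A_U: strictly lower minus strictly upper triangular part."""
    return np.tril(A, -1) - np.triu(A, 1)

rng = np.random.default_rng(7)
Q, _ = np.linalg.qr(rng.standard_normal((5, 5)))
A0 = Q @ np.diag([1.0, 2.0, 3.0, 4.0, 5.0]) @ Q.T

h, n_steps = 0.01, 100
L = np.eye(5)
for _ in range(n_steps):
    A = L @ A0 @ np.linalg.inv(L)
    L = L + h * B_of(A) @ L          # forward Euler on dL/dt = B(L A0 L^{-1}) L
A = L @ A0 @ np.linalg.inv(L)

print(np.sort(np.linalg.eigvals(A).real))       # 1, 2, 3, 4, 5 up to rounding
print(np.max(np.abs(A - A.T)))                  # nonzero: symmetry is lost
print(np.linalg.norm(L.T @ L - np.eye(5)))      # nonzero: L is no longer orthogonal
```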

4 Conclusions and remarks

In this paper we have shown that the algebraic structure of Lie triple systems and the factorization properties of symmetric spaces can be used as a tool to: (1) understand and provide a unifying approach to the analysis of a number of different algorithms; and (2) devise new algorithms with special symmetry/reversing symmetry properties in the context of the numerical solution of differential equations. In particular, we have seen that symmetries are more difficult to retain (to date, we are not aware of methods that can retain a generic involutive symmetry in a finite number of steps), while the situation is simpler for reversing symmetries, which can be retained in a finite number of steps using the Scovel composition. So far, we have considered the most generic setting, where the only allowed operations are non-commutative compositions of maps (the map \(\varphi \), its transform \(\sigma (\varphi )\), and their inverses). If the underlying space is linear and so is the symmetry, i.e. \(\sigma (\varphi _1 + \varphi _2) = \sigma (\varphi _1) + \sigma (\varphi _2)\), the map \( \tilde{\varphi }_h = \frac{\varphi _h + \sigma (\varphi _h)}{2} \) obviously satisfies the symmetry \(\sigma \), as \(\sigma (\tilde{\varphi }_h) = \tilde{\varphi }_h\). Because of the linearity and the vector-space property, we can use the same operation as in the tangent space, namely we identify \(\sigma \) and \(\,\mathrm{d}\sigma \). This is in fact the most common symmetrization procedure for linear symmetries in linear spaces. For instance, in the context of the alternating-direction examples, a common way to resolve the symmetry issue is to solve first in the \(x\) and then in the \(y\) direction, then first in the \(y\) and then in the \(x\) direction from the same initial condition, and to average the two results. However, in the context of eigenvalue problems, the underlying space is not linear: the average of two matrices with the same eigenvalues does not, in general, yield a matrix with the same eigenvalues.
Averaging destroys the preservation of the spectrum, a property that is fundamental for GR-type eigenvalue algorithms.
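A two-by-two example makes the last point concrete (our own illustration): \(A\) and \(A^T\) share the eigenvalues 1 and 2, but their average, the linear symmetrization with \(\sigma (A) = A^T\), has eigenvalues \((3\pm \sqrt{2})/2\).

```python
import numpy as np

A = np.array([[1.0, 1.0],
              [0.0, 2.0]])     # eigenvalues 1 and 2; A^T has the same spectrum
A_avg = (A + A.T) / 2          # linear symmetrization with sigma(A) = A^T

print(np.sort(np.linalg.eigvals(A).real))   # [1. 2.]
print(np.linalg.eigvalsh(A_avg))            # (3 -/+ sqrt(2)) / 2, about 0.793 and 2.207
```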

In this paper we did not mention the use and the development of similar concepts in a more strict linear algebra setting.Footnote 5 Some recent works deal with the further understanding of scalar products and structured factorizations, and more general computation of matrix functions preserving group structures, see for instance [7, 8, 17] and references therein. Some of these topics are covered by other contributions in the present BIT issue, which we strongly encourage the reader to read to get a more complete picture of the topic and its applications.

Notes

Typically non-associative.

Usually, matrices are denoted by upper case letters. Here we adhere to the convention described in Sect. 2.

The authors called the set of vector fields closed under the sandwich product \( \varphi _1 \cdot \varphi _2 = \varphi _1 \varphi _2 \varphi _1\) a pseudogroup.

We have simply split the nonlinear term into two equal parts. Surely, it can be treated in many different ways, but that is beside the illustrative scope of the example.

It seems that numerical linear algebra authors prefer to work with Jordan algebras (see Sect. 2), rather than Lie triple systems. We believe that the LTS description is natural in the context of differential equations and vector fields because it fits very well with the Lie algebra structure of vector fields.

References

Brockett, R.: Explicitly solvable control problems with nonholonomic constraints. In: Proceedings of the 38th Conference on Decision and Control, Phoenix (1999)

Calatroni, L., Düring, B., Schönlieb, C.-B.: ADI splitting schemes for a fourth-order nonlinear partial differential equation from image processing. DCDS-A 34(3), 931–957 (2014)

De Bruijn, N.G., Szekeres, G.: On some exponential and polar representations. Nieuw Archief voor Wiskunde (3) III, 20–32 (1955)

Hairer, E., Lubich, C., Wanner, G.: Geometric Numerical Integration. Springer, Berlin (2002)

Hairer, E., Nørsett, S.P., Wanner, G.: Solving Ordinary Differential Equations I. Nonstiff Problems, 2nd revised edn. Springer, Berlin (1993)

Helgason, S.: Differential Geometry, Lie Groups and Symmetric Spaces. Academic Press, London (1978)

Higham, N.J., Mackey, D.S., Mackey, N., Tisseur, F.: Functions preserving matrix groups and iterations for the matrix square root. SIAM J. Matrix Anal. Appl. 26(3), 849–877 (2005)

Higham, N.J., Mehl, C., Tisseur, F.: The canonical generalized polar decomposition. SIAM J. Matrix Anal. Appl. 31(4), 2163–2180 (2010)

Higham, N.J.: Functions of Matrices: Theory and Computation. SIAM (2008)

Iserles, A., McLachlan, R., Zanna, A.: Approximately preserving symmetries in numerical integration. Eur. J. Appl. Math. 10, 419–445 (1999)

Iserles, A., Munthe-Kaas, H., Nørsett, S.P., Zanna, A.: Lie-group methods. Acta Numer. 9, 215–365 (2000)

Iserles, A., Zanna, A.: Efficient computation of the matrix exponential by generalized polar decompositions. SIAM J. Numer. Anal. 42(5), 2218–2256 (2005)

Khaneja, N., Brockett, R., Glaser, S.J.: Time optimal control in spin systems. Phys. Rev. A 63, 032308 (2001)

Krogstad, S.: A low complexity Lie group method on the Stiefel manifold. BIT 43(1), 107–122 (2003)

Krogstad, S., Munthe-Kaas, H.Z., Zanna, A.: Generalized polar coordinates on Lie groups and numerical integrators. Numer. Math. 114, 161–187 (2009)

Loos, O.: Symmetric Spaces I: General Theory. Benjamin Inc, New York (1969)

Mackey, D.S., Mackey, N., Tisseur, F.: Structured factorizations in scalar product spaces. SIAM J. Matrix Anal. Appl. 27(3), 821–850 (2006)

McLachlan, R.I., Quispel, G.R.W.: Splitting methods. Acta Numer. 11, 341–434 (2002)

McLachlan, R.I., Quispel, G.R.W., Turner, G.S.: Numerical integrators that preserve symmetries and reversing symmetries. SIAM J. Numer. Anal. 35(2), 586–599 (1998)

Morse, M.: Recurrent geodesics on a surface of negative curvature. Trans. Am. Math. Soc. 22, 84–100 (1921)

Moser, J.: Stable and Random Motion in Dynamical Systems. Princeton University Press, Princeton (1973)

Munthe-Kaas, H.: High order Runge–Kutta methods on manifolds. Appl. Numer. Math. 29, 115–127 (1999)

Munthe-Kaas, H., Quispel, G.R.W., Zanna, A.: Generalized polar decompositions on Lie groups with involutive automorphisms. Found. Comput. Math. 1(3), 297–324 (2001)

Omori, H.: On the group of diffeomorphisms of a compact manifold. Proc. Symp. Pure Math. 15, 167–183 (1970)

Owren, B., Marthinsen, A.: Integration methods based on canonical coordinates of the second kind. Numer. Math. 87(4), 763–790 (2001)

Pressley, A., Segal, G.: Loop Groups, Oxford Mathematical Monographs. Oxford University Press, Oxford (1988)

Roberts, J.A.G., Quispel, G.R.W.: Chaos and time-reversal symmetry: order and chaos in reversible dynamical systems. Phys. Rep. 216, 63–177 (1992)

Sanz-Serna, J.M., Calvo, M.P.: Numerical Hamiltonian Problems. AMMC 7. Chapman & Hall, London (1994)

Scovel, J.C.: Symplectic numerical integration of Hamiltonian systems, MSRI. In: Ratiu, T. (ed.) The Geometry of Hamiltonian Systems, vol. 22, pp. 463–496. Springer, New York (1991)

Sevryuk, M.B.: Reversible Systems. Lect. Notes Math., vol. 1211. Springer, Berlin (1986)

Stuart, A.M., Humphries, A.R.: Dynamical Systems and Numerical Analysis. Cambridge University Press, Cambridge (1996)

Thue, A.: Über unendliche Zeichenreihen. In: Nagell, T. (ed.) Selected mathematical papers of Axel Thue, pp. 139–158. Universitetsforlaget, Oslo (1977)

Watkins, D.: The Matrix Eigenvalue Problem: GR and Krylov Subspace Methods. SIAM, Philadelphia (2007)

Yoshida, H.: Construction of higher order symplectic integrators. Phys. Lett. A 150, 262–268 (1990)

Zanna, A.: On the Numerical Solution of Isospectral Flows. PhD Thesis, University of Cambridge, United Kingdom (1998)

Zanna, A.: Recurrence relation for the factors in the polar decomposition on Lie groups. Math. Comput. 73, 761–776 (2004)

Zanna, A.: Generalized polar decompositions in control. In: Mathematical papers in honour of Fátima Silva Leite, volume 43 of Textos Mat. Sér. B, pp. 123–134. Univ. Coimbra (2011)

Zanna, A., Munthe-Kaas, H.Z.: Generalized polar decompositions for the approximation of the matrix exponential. SIAM J. Matrix Anal. Appl. 23(3), 840–862 (2002)

Acknowledgments

The authors wish to thank the Norwegian Research Council and the Australian Research Council for financial support. Special thanks to Yuri Nikolayevsky who gave us important pointers to the literature on symmetric spaces.

Additional information

Communicated by Ahmed Salam.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

Cite this article

Munthe-Kaas, H.Z., Quispel, G.R.W., Zanna, A.: Symmetric spaces and Lie triple systems in numerical analysis of differential equations. BIT Numer. Math. 54, 257–282 (2014). https://doi.org/10.1007/s10543-014-0473-5