Abstract

Assistive robot arms enable people with disabilities to conduct everyday tasks on their own. These arms are dexterous and high-dimensional; however, the interfaces people must use to control their robots are low-dimensional. Consider teleoperating a 7-DoF robot arm with a 2-DoF joystick. The robot is helping you eat dinner, and currently you want to cut a piece of tofu. Today’s robots assume a pre-defined mapping between joystick inputs and robot actions: in one mode the joystick controls the robot’s motion in the x–y plane, in another mode the joystick controls the robot’s z–yaw motion, and so on. But this mapping misses out on the task you are trying to perform! Ideally, one joystick axis should control how the robot stabs the tofu, and the other axis should control different cutting motions. Our insight is that we can achieve intuitive, user-friendly control of assistive robots by embedding the robot’s high-dimensional actions into low-dimensional and human-controllable latent actions. We divide this process into three parts. First, we explore models for learning latent actions from offline task demonstrations, and formalize the properties that latent actions should satisfy. Next, we combine learned latent actions with autonomous robot assistance to help the user reach and maintain their high-level goals. Finally, we learn a personalized alignment model between joystick inputs and latent actions. We evaluate our resulting approach in four user studies where non-disabled participants reach marshmallows, cook apple pie, cut tofu, and assemble dessert. We then test our approach with two disabled adults who leverage assistive devices on a daily basis.

1 Introduction

Fig. 1 Our approach makes it easier for users to control assistive robots. (Left) assistive robot arms are dexterous and high-dimensional, but humans must teleoperate these robots with low-dimensional interfaces, such as 2-DoF joysticks. (Right) we focus on assistive eating tasks; for example, trying to get a piece of tofu. (Top) existing work maps joystick inputs to end-effector motion. Here the user must toggle back and forth between multiple modes to control their desired end-effector motion. (Bottom) we learn a task-specific mapping that embeds the robot’s high-dimensional actions into low-dimensional latent actions z. Now pressing up and down controls the robot along a reaching and stabbing motion, while pressing right and left moves the robot arm through precise cutting and scooping motions. The user no longer needs to change modes.

For over one million American adults living with physical disabilities, performing everyday tasks like grabbing a bite of food or pouring a glass of water presents a significant challenge (Taylor 2018). Assistive devices—such as wheelchair-mounted robot arms—have the potential to improve these people’s independence and quality of life (Argall 2018; Jacobsson et al. 2000; Mitzner et al. 2018; Carlson and Millan 2013). A key advantage of these robots is their dexterity: assistive arms move along multiple degrees-of-freedom (DoFs), orchestrating complex motions like stabbing a piece of tofu or pouring a glass of water. Unfortunately, this very dexterity makes assistive arms hard to control.

Imagine that you are leveraging an assistive robot arm to eat dinner (see Fig. 1). You want the robot to reach for some tofu on the table in front of you, cut off a piece, and then pick it up with its fork. Non-disabled persons can use their own body to show the robot how to perform this task: for instance, the human grabs the tofu with their own arm, and the robot mimics the human’s motion (Rakita et al. 2017, 2019). But mimicking is not feasible for people living with physical disabilities—instead, these users are limited to low-dimensional controllers. Today’s assistive robot arms leverage joysticks (Herlant et al. 2016), sip-and-puff devices (Argall 2018), or brain-computer interfaces (Muelling et al. 2017). So to get a bite of tofu, you must carefully coordinate the dexterous robot arm while only pressing up-down-left-right on a joystick. Put another way, users are challenged by an inherent mismatch between low-dimensional interfaces and high-dimensional robots.

Existing work on assistive robots tackles this problem with pre-defined mappings between user inputs and robot actions. These mappings incorporate modes, and the user switches between modes to control different robot DoFs (Herlant et al. 2016; Aronson et al. 2018; Newman et al. 2018). For instance, in one mode the user’s 2-DoF joystick controls the x–y position of the end-effector, in a second mode the joystick controls the z–yaw motion of the end-effector, and so on. Importantly, these pre-defined mappings miss out on the human’s underlying task. Consider teleoperating the robot to cut off a piece of tofu and then stab it with its fork. First you must use the x–y mode to align the fork above the tofu, then roll–pitch to orient the fork for cutting, then z–yaw to move the fork down into the tofu, then back to roll–pitch to return the fork upright, and finally z–yaw to stab the tofu. And this assumes you never undo a motion or make a correction!

Controlling assistive robots becomes easier when the joystick inputs map directly to task-related motions. Within our example, one joystick DoF could produce a spectrum of stabbing motions, while the other DoF teleoperates the robot through different cutting motions. To address the fundamental mismatch between high-DoF robot arms and low-DoF control interfaces, we learn a mapping between these spaces:

We make it easier to control high-dimensional robots by embedding the robot’s actions into low-dimensional and human-controllable latent actions.

Latent actions here refer to a low-DoF representation that captures the most salient aspects of the robot’s motions. Intuitively, we can think of these latent actions as similar to the eigenvectors of a matrix composed of high-dimensional robot motions. Returning to our motivation, imagine that you have eaten dinner with the assistive robot many times. Across all of these meals there are some common motions: reaching for food items, cutting, pouring, scooping, etc. At the heart of our approach we learn an embedding that captures these underlying motion patterns, and enables the human to control via these learned embeddings, which we refer to as latent actions.

Overall, we make the following contributions:

Learning latent actions Given a dataset of task-related robot motions, we develop a framework for learning to map the user’s low-dimensional inputs to high-dimensional robot actions. For instance, imagine using a 2-DoF joystick to teleoperate a 7-DoF assistive robot arm. To reach for a piece of tofu, you need a mapping function: something that translates your joystick inputs into robot actions. Of course, not just any mapping will do; you need something that is intuitive and meaningful, so that you can easily coordinate all the robot’s joints to move towards your tofu. In Sect. 4 we introduce a set of properties that user-friendly latent actions must satisfy, and formulate learning models that capture these properties.

Integrating shared autonomy But what happens once you’ve guided the robot to reach the tofu (i.e., your high-level goal)? Next, you need to precisely manipulate the robot arm in order to cut off a piece and pick it up with your fork. Here relying on latent actions alone is challenging, since small changes in your joystick input may accidentally move the robot away from your goal. To address this problem, in Sect. 7 we incorporate shared autonomy, where both the human and robot arbitrate control over the robot’s motion. Here the robot autonomously helps the user reach and maintain their desired high-level goals, while the user leverages latent actions to perform precise manipulation tasks (e.g., cutting, stabbing, and scooping). We show convergence bounds on the robot’s distance to the most likely goal, and develop a training procedure to ensure the human can still guide the robot to different goals if they change their mind.

Personalizing alignment Throughout the assistive eating task your joystick inputs have produced different robot motions. To guide the robot towards the tofu, you pressed the joystick up; to orient the fork for cutting, you pressed the joystick right; and to stab your piece of tofu, you pressed the joystick down. This alignment between joystick inputs and robot outputs may make sense to you—but different users will inevitably have different preferences! Accordingly, in Sect. 6 we leverage user expectations to personalize the alignment between joystick directions and latent actions. Part of this alignment process involves asking the human what they prefer (e.g., what joystick direction should correspond to scooping?). We minimize the number of queries by formalizing and leveraging the priors that humans expect when controlling robotic systems.

Conducting user studies In order to compare our approach to the state-of-the-art, we performed four user studies inspired by assistive eating tasks. Non-disabled participants teleoperated a 7-DoF robot arm using a 2-DoF joystick to reach marshmallows, make a simplified apple pie, cut tofu, and assemble dessert. We compared our latent action approach to both pre-defined mappings and shared autonomy baselines, including the HARMONIC dataset (Newman et al. 2018). We found that latent actions help users complete high-level reaching and precise manipulations with their preferred alignment, resulting in improved objective and subjective performance.

Evaluating with disabled users We applied our proposed approach with two disabled adults who leverage assistive devices when eating on a daily basis. These adults have a combined five years of experience with assistive robot arms, and typically control their arms with pre-defined mappings. In our case study both participants cut tofu and assembled a marshmallow dessert using either learned latent actions or a pre-defined mapping. Similar to our results with non-disabled users, here latent actions helped these disabled participants more quickly and accurately perform eating tasks.

Unifying previous research This paper combines our earlier work from (Losey et al. 2020; Jeon et al. 2020; Li et al. 2020). We build on these preliminary results by integrating each part into an overarching formalism (Sect. 7), demonstrating how each component relates to the overall approach, and evaluating the resulting approach with disabled members of our target population (Sect. 10).

2 Related work

Our approach learns latent representations of dexterous robot motions, and then combines those representations with shared autonomy to facilitate both coarse reaching and precise manipulation tasks. We apply this approach to assistive robot arms—specifically for assistive eating—so that users intuitively teleoperate their robot through a spectrum of eating-related tasks.

Assistive eating Making and eating dinner without the help of a caretaker is particularly important to people living with physical disabilities (Mitzner et al. 2018; Jacobsson et al. 2000). As a result, a variety of robotic devices and algorithms have been developed for assistive eating (Brose et al. 2010; Naotunna et al. 2015). We emphasize that these devices are high-dimensional in order to reach and manipulate food items in 3D space (Argall 2018). When considering how to control these devices, prior works break the assistive eating task into three parts: (i) reaching for the human’s desired food item, (ii) manipulating the food item to get a bite, and then (iii) returning that bite back to the human’s mouth. Recent research on assistive eating has explored automating this process: here the human indicates what type of food they would like using a visual or audio interface, and then the robot autonomously reaches, manipulates, and returns a bite of the desired food to the user (Feng et al. 2019; Park et al. 2020; Gallenberger et al. 2019; Gordon et al. 2020; Canal et al. 2016). However, designing a fully autonomous system to handle a task as variable and personalized as eating is exceedingly challenging: consider aspects like bite size or motion timing. Indeed, when surveyed in Bhattacharjee et al. (2020), users with physical disabilities indicated that they preferred partial autonomy during eating tasks, since this better enables the user to convey their own preferences. In line with these findings, we develop a partially autonomous algorithm that assists the human while letting them maintain control over the robot’s motion.

Latent representations Carefully orchestrating complex movements of high-dimensional robots is difficult for humans, especially when users are limited to a low-dimensional control interface (Bajcsy et al. 2018). Prior work has tried to prune away unnecessary control axes in a data-driven fashion through Principal Component Analysis (Ciocarlie and Allen 2009; Artemiadis and Kyriakopoulos 2010; Matrone et al. 2012). Here the robot records demonstrated motions, identifies the first few eigenvectors, and leverages these eigenvectors to map the human’s inputs to high-dimensional motions. But PCA produces a linear embedding—and this embedding remains constant, regardless of where the robot is or what the human is trying to accomplish. To capture intricate non-linear embeddings, we turn to recent works that learn latent representations from data (Jonschkowski and Brock 2014). Robots can learn low-dimensional models of states (Pacelli and Majumdar 2020), dynamics (Watter et al. 2015; Xie et al. 2020), movement primitives (Noseworthy et al. 2020), trajectories (Co-Reyes et al. 2018), plans (Lynch et al. 2019), policies (Edwards et al. 2019), skills (Pertsch et al. 2020), and action representations for reinforcement learning (Chandak et al. 2019). One common theme across all of these works is that there are underlying patterns in high-DoF data, and the robot can succinctly capture these patterns with a low-DoF latent space. A second connection is that these works typically leverage autoencoders (Kingma and Welling 2014; Doersch 2016) to learn the latent space. Inspired by these latent representation methods, we similarly adapt an autoencoder model to extract the underlying pattern in high-dimensional robot motions. But unlike prior methods, we give the human control over this embedding—putting a human-in-the-loop for assistive teleoperation.

Shared autonomy Learning latent representations provides a mapping from low-dimensional inputs to high-dimensional actions. But how do we combine this learned mapping with control theory to ensure that the human can accurately complete their desired task? Prior work on assistive arms leverages shared autonomy, where the robot’s action is a combination of the human’s input and autonomous assistance (Dragan and Srinivasa 2013; Javdani et al. 2018; Jain and Argall 2019; Broad et al. 2020). Here the human controls the robot with a low-DoF interface (typically a joystick), and the robot leverages a pre-defined mapping with toggled modes to convert the human’s inputs into end-effector motion (Aronson et al. 2018; Herlant et al. 2016; Newman et al. 2018). To assist the human, the robot maintains a belief over a discrete set of possible goal objects in the environment: the robot continually updates this belief by leveraging the human’s joystick inputs as evidence in a Bayesian framework (Dragan and Srinivasa 2013; Javdani et al. 2018; Jain and Argall 2019; Gopinath et al. 2016; Nikolaidis et al. 2017). As the robot becomes increasingly confident in the human’s goal, it provides assistance to autonomously guide the end-effector towards that target. We emphasize that so far the robot has employed a pre-defined input mapping, but more related to our approach are (Reddy et al. 2018; Broad et al. 2020; Reddy et al. 2018), where the robot proposes or learns suitable dynamics models to translate user inputs to robot actions. For instance, in Reddy et al. (2018) and Broad et al. (2020) the robot leverages a reinforcement learning framework to identify how to interpret and assist human inputs. Importantly, here the input space has the same number of dimensions as the action space, and so no embedding is required. We build upon this previous research in shared autonomy by helping the user reach and maintain their high-level goals, but we do so by leveraging latent representations to learn a mapping from low-DoF human inputs to high-DoF robot outputs.

3 Problem setting

We consider settings where a human user is teleoperating an assistive robot arm. The human interacts with the robot using a low-dimensional interface: this could be a joystick, sip-and-puff device, or brain-computer interface. We specifically focus on interfaces with a continuous control input (or an input that could be treated as continuous). For clarity, we will assume the teleoperation interface is a joystick throughout the rest of the paper, and we will use a joystick input in all our experiments. The assistive robot’s first objective is to map these joystick inputs to meaningful high-dimensional motions. But assistive robots can do more than just interpret the human’s inputs—they can also act autonomously to help the user reach and maintain their goals. Hence, the robot’s second objective is to integrate the learned mapping with shared autonomy. In practice, the mappings that the robot learns for one user may be counter-intuitive for another. Our final objective is to align the human’s joystick inputs with the latent actions, so that users can intuitively convey their desired motions through the control interface.

In this section we formalize our problem setting, and outline our proposed solutions to each objective. We emphasize the main variables in Table 1.

Task The human operator has a task in mind that they want the robot to accomplish. We formulate this task as a Markov decision process: \(\mathcal {M} = (\mathcal {S}, \mathcal {A}, \mathcal {T}, R, \gamma , \rho _0)\). Here \(s \in \mathcal {S} \subseteq \mathbb {R}^n\) is the state and \(a \in \mathcal {A} \subseteq \mathbb {R}^m\) is the robot’s high-DoF action. Because we are focusing on the high-dimensional robot arm, we refer to s as the robot’s state, but in practice the state s may contain both the robot’s arm position and the location of other objects in the environment (e.g., the position of the tofu).

The robot transitions between states according to \(\mathcal {T}(s, a)\), and receives reward R(s) at each timestep. We let \(\gamma \in [0, 1)\) denote the discount factor, and \(\rho _0\) captures the initial state distribution. During each interaction the robot is not sure what the human wants to accomplish (i.e., the robot does not know R). Returning to our running example, the robot does not know whether the human wants a bite of tofu, a drink of water, or something else entirely. The human communicates their desired task through joystick inputs \(u \in \mathbb {R}^d\). Because the human’s input is of lower dimension than the robot’s action, we know that \(d < m\).

Dataset Importantly, this is not the first time the user has guided their robot through the process of eating dinner. We assume access to a dataset of task demonstrations: these demonstrations can be kinesthetically provided by a caregiver or collected beforehand by the disabled user with a baseline teleoperation scheme. For example, the disabled user leverages their standard, pre-defined teleoperation mapping to guide the robot through the process of reaching for objects on the table (e.g., the plate, a glass of water) and manipulating these objects (e.g., scooping rice, picking up the glass). We collect these demonstrations and employ them to train our latent action approach. Formally, we have a dataset \(\mathcal {D} = \{(c_0, a_0), (c_1, a_1), \ldots \}\) of context-action pairs that demonstrate high-dimensional robot actions. Notice that here we introduce the context \(c \in \mathcal {C}\): this context captures the information available to the robot. For now we can think of the context c as the same as the robot’s state (i.e., \(c = s\)), but later we will explore how the robot can also incorporate its understanding of the human’s goal into this context.

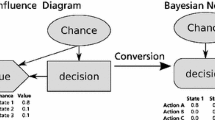

Fig. 2 Model for learning and leveraging latent actions. (Left) given a dataset of context-action pairs, we embed the robot’s high-dimensional behavior (c, a) into a low-dimensional latent space \(z \in \mathcal {Z}\). The encoder and decoder are trained to ensure user-friendly properties while minimizing the error between the commanded human action \(a_h\) and the demonstrated action a. As a result, the decoder \(\phi (z, c)\) provides an intuitive mapping from low-dimensional latent actions to high-dimensional robot actions. (Right) at run time the human controls the robot via these low-dimensional latent actions. For now we simplify the alignment model so that \(z = u\), meaning that the human’s joystick inputs directly map to latent actions.

Fig. 3 Controlling an assistive robot with learned latent actions. The robot has been trained on demonstrations of pouring tasks, and learns a 2-DoF latent space. One axis of the latent space moves the cup across the table, and the other latent dimension pours the cup. This latent space satisfies our conditioning property because the decoded action \(a_h\) depends on the current context c. This latent space also satisfies controllability because the human can leverage the two learned latent dimensions to complete the demonstrated pouring tasks (e.g., pour water into the bowl).

Latent actions Given this dataset, we first learn a latent action space \(\mathcal {Z} \subset \mathbb {R}^d\), as well as a decoder function \(\phi : \mathcal {Z} \times \mathcal {C} \rightarrow \mathcal {A}\). Here \(\mathcal {Z}\) is a low-dimensional embedding of \(\mathcal {D}\); we specify the dimensionality of \(\mathcal {Z}\) to match the number of degrees-of-freedom of the joystick, so that the user can directly input latent actions \(z \in \mathcal {Z}\). Based on the human’s latent action z as well as the current context \(c \in \mathcal {C}\), the robot leverages the decoder \(\phi \) to reconstruct a high-dimensional action (see Fig. 2):

\[a_h = \phi (z, c) \qquad (1)\]

Notice that we use \(a_h\) here: this is because this robot action is commanded by the human’s input. Consider pressing the joystick right to cause the robot arm to cut some tofu. The joystick input is a low-DoF input u; we map this input to a latent action \(z \in \mathcal {Z}\), and then leverage \(\phi \) to decode z into a high-DoF commanded action \(a_h\) that cuts the tofu. We formalize properties of \(\mathcal {Z}\) and models for learning \(\phi \) in Sect. 4.
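The decoding step \(a_h = \phi (z, c)\) can be illustrated with a minimal sketch. It assumes a small two-layer network with random, untrained weights standing in for the learned decoder; the layer sizes and the names `make_decoder` and `decode` are hypothetical and chosen only for illustration.

```python
import numpy as np

def make_decoder(latent_dim, context_dim, action_dim, hidden=16, seed=0):
    """Build a stand-in decoder phi(z, c) with random (untrained) weights."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(scale=0.5, size=(hidden, latent_dim + context_dim))
    b1 = np.zeros(hidden)
    W2 = rng.normal(scale=0.5, size=(action_dim, hidden))
    b2 = np.zeros(action_dim)

    def decode(z, c):
        # Conditioning: the latent action and the context are decoded jointly.
        x = np.concatenate([z, c])
        h = np.tanh(W1 @ x + b1)
        return W2 @ h + b2

    return decode

# 2-DoF joystick, 7-DoF arm; here the context is just the joint state (c = s).
phi = make_decoder(latent_dim=2, context_dim=7, action_dim=7)

z = np.array([1.0, 0.0])      # "press right", with the simplification z = u
c_far = np.zeros(7)           # one arm configuration
c_near = np.full(7, 0.5)      # a different arm configuration

a_far = phi(z, c_far)         # commanded 7-DoF action in the first context
a_near = phi(z, c_near)       # same joystick input, different context
```

Because the decoder takes the context as an input, the same joystick direction yields different high-DoF actions in the two configurations, which is the behavior the conditioned mapping is meant to provide.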

Shared autonomy The human provides joystick inputs u, which we treat as latent actions z, and these latent actions map to high-dimensional robot actions \(a_h\). But how can the robot assist the human through its own autonomous behavior? More formally, how should the robot choose autonomous actions \(a_r\) that help guide the user? Similar to recent work on shared autonomy (Jain and Argall 2019; Dragan and Srinivasa 2013; Newman et al. 2018), we define the robot’s overall action as the linear combination of \(a_h\) (the human’s commanded action) and \(a_r\) (the robot’s autonomous guidance):

\[a = (1 - \alpha ) \cdot a_h + \alpha \cdot a_r \qquad (2)\]

In the above, \(\alpha \in [0, 1]\) parameterizes the trade-off between direct human teleoperation (\(\alpha = 0\)) and complete robot autonomy (\(\alpha = 1\)). We specifically focus on autonomous actions that help the user reach and maintain their high-level goals. Let \(\mathcal {G}\) be a discrete set of goal positions the human might want to reach (e.g., their tofu, the rice, or a glass of water), and let \(g^* \in \mathcal {G}\) be the human’s true goal (e.g., the tofu). The robot assists the user towards goals it thinks are likely:

Here \(a_r\) is a change of state (i.e., a joint velocity) that moves from s towards the mode of the inferred goal position, and b denotes the robot’s belief. This belief is a probability distribution over the candidate goals, where \(b(g) = 1\) indicates that the robot is completely convinced that g is what the human wants. We analyze the dynamics of combining Eqs. (1)–(3) in Sect. 7.
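The arbitration described above can be sketched in a few lines. The blending rule follows the linear combination of \(a_h\) and \(a_r\) with trade-off \(\alpha \). The form of the assistance term is an assumption made for this example (a belief-weighted average of the directions from the state to each candidate goal), and the 2-D state, the goal positions, and the function names are hypothetical.

```python
import numpy as np

def assist_action(s, goals, belief):
    """Assumed assistance term: belief-weighted direction from s to the goals."""
    return np.sum(belief[:, None] * (goals - s), axis=0)

def blend(a_h, a_r, alpha):
    """Linear arbitration: alpha = 0 is pure teleoperation, alpha = 1 is full autonomy."""
    return (1.0 - alpha) * a_h + alpha * a_r

s = np.array([0.0, 0.0])                    # current state (2-D for illustration)
goals = np.array([[1.0, 0.0], [0.0, 1.0]])  # candidate goals, e.g. tofu and rice
belief = np.array([0.9, 0.1])               # robot is fairly sure about the first goal

a_h = np.array([0.0, 0.5])                  # action decoded from the human's input
a_r = assist_action(s, goals, belief)       # points mostly at the first goal
a = blend(a_h, a_r, alpha=0.5)              # shared-autonomy action
```

With these numbers the assistance is `[0.9, 0.1]` and the blended action is `[0.45, 0.3]`: the human's input still shapes the motion, while the robot pulls the arm towards the goal it believes in.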

Alignment Recall that the human’s joystick input is u, and that our approach treats this joystick input as a latent action z. A naive robot will simply set \(z = u\). But this misses out on how different users expect the robot to interpret their commands. For example, let us say the assistive robot is directly above some tofu. One user might expect pressing right to cause a stabbing motion, while a second user expects pressing right to cut the tofu. To personalize our approach to match individual user expectations, we learn an alignment function:

\[z = f(u, c) \qquad (4)\]

Unlike Equation (1), this is not an embedding, since both u and z have d dimensions. But like Equation (1), the alignment model does depend on the robot’s current context. A user might expect pressing right to stab the tofu when they are directly above it—but when they are interacting with a glass of water, that same user expects pressing right to tilt and pour the glass. We learn f across different contexts in Sect. 6.

4 Learning latent actions

In this section we focus on learning latent actions (see Figs. 2, 3). We define latent actions as a low-dimensional embedding of high-dimensional robot actions, and we learn this embedding from the dataset \(\mathcal {D}\) of offline demonstrations. Overall, we will search for two things: i) a latent action space \(\mathcal {Z} \subset \mathbb {R}^d\) that is of lower dimension than the robot’s action space \(\mathcal {A} \subseteq \mathbb {R}^m\), and ii) a decoder function \(\phi \) that maps from latent actions to robot actions. In practice, latent actions provide users a non-linear mapping for robot control: e.g., pressing right on the joystick causes the robot to perform a stabbing motion. We emphasize that these latent actions do not always have semantic meanings—they are not always “stabbing” or “cutting”—but generally embed the robot’s high-dimensional motion into a low-dimensional space.

Recall our motivating example, where the human is trying to teleoperate their assistive robot using a joystick to reach and manipulate food items. When controlling the robot, there are several properties that the human expects: e.g., smooth changes in the joystick input should not cause abrupt changes in robot motion, and when the human holds the joystick in a constant direction, the robot’s motion should not suddenly switch direction. In what follows, we first formalize the properties necessary for latent actions to be intuitive. These properties will guide our approach, and provide a principled way of assessing the usefulness of latent actions with humans-in-the-loop. Next, we will explore different models for learning latent actions that capture our intuitive properties.

4.1 Latent action properties

We identified four properties that user-friendly latent actions should have: conditioning, controllability, consistency, and scalability.

Conditioning Because \(\phi \) maps from latent actions to robot actions, at first glance it may seem intuitive for \(\phi \) to only depend on z, i.e., \(a_h = \phi (z)\). But this quickly breaks down in practice. Imagine that you are controlling the robot arm to get some tofu: at the start of the task, you press right and left on the joystick to move the robot towards your target. But as the robot approaches the tofu, you no longer need to keep moving towards a goal; instead, you need to use those same joystick inputs to carefully align the orientation of the fork, so that you can cut off a piece. Hence, latent actions must convey different meanings in different contexts. This is especially true because the latent action space is smaller than the robot action space, and so there are more actions to convey than we can capture with z alone. We therefore introduce \(c \in \mathcal {C}\), the robot’s current context, and condition the decoder on c, so that \(a_h = \phi (z, c)\). In the rest of this section we will treat the robot’s state s as its context, so that \(c = s\).

Controllability For latent actions to be useful, human operators must be able to leverage these actions to control the robot through their desired task. Recall that the dataset \(\mathcal {D}\) includes relevant task demonstrations, such as picking up a glass, reaching for the kitchen shelf, and scooping rice. We want the user to be able to control the robot through these same tasks when leveraging latent actions. Let \(s_i, s_j \in \mathcal {D}\) be two states from the dataset of demonstrations, and let \(s_{1}, s_{2}, ..., s_{K}\) be the sequence of states that the robot visits when starting in state \(s_0 = s_i\) and taking latent actions \(z_1, ..., z_K\). The robot transitions between the visited states using its transition function \(\mathcal {T}\) and the learned decoder \(\phi \): \(s_{k} = \mathcal {T}(s_{k-1}, \phi (z_{k}, s_{k-1}))\). Formally, we say that a latent action space \(\mathcal {Z}\) is controllable if for every pair of states \((s_i, s_j)\) there exists a sequence of latent actions \(\{z_k\}_{k=1}^K, z_k \in \mathcal {Z}\) such that \(s_j = s_K\). In other words, a latent action space is controllable if it can move the robot between pairs of start and goal states from the demonstrated tasks.

Consistency Let us say you are using a one-DoF joystick to guide the robot arm along a line. When you hold the joystick to the right, you expect the robot to immediately move right; more than that, you expect the robot to move right at every point along the line! For example, the robot should not move right for a while, then suddenly go left, and switch back to going right again. To capture this, we define a latent action space \(\mathcal {Z}\) as consistent if the same latent action \(z \in \mathcal {Z}\) has a similar effect on how the robot behaves in nearby states. We formulate this similarity via a task-dependent metric \(d_M\): e.g., in pouring tasks \(d_M\) could measure the orientation of the robot’s end-effector, and in reaching tasks \(d_M\) could measure the position of the end-effector. Applying this metric, consistent latent actions should satisfy:

\[d_M\big (\mathcal {T}(s_1, \phi (z, s_1)),~ \mathcal {T}(s_2, \phi (z, s_2))\big ) < \epsilon \qquad (5)\]

when \(\Vert s_1 - s_2\Vert < \delta \) for some \(\epsilon , \delta >0\). We emphasize that we do not need to know \(d_M\) for our approach; we only introduce this metric as a way of quantifying similarity.
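One way to probe consistency numerically is to apply the same latent action in two nearby states and compare the resulting next states. The sketch below makes several assumptions for illustration only: a simple integrator stands in for the transition function, plain Euclidean distance stands in for the task-dependent metric \(d_M\), and `phi` is a hypothetical hand-written decoder rather than a learned one.

```python
import numpy as np

def transition(s, a, dt=0.1):
    """Assumed dynamics: the action is a velocity integrated over one timestep."""
    return s + dt * a

def phi(z, s):
    """Hypothetical decoder: a 1-DoF latent action moves a 2-D state along a
    direction that rotates smoothly with the first state coordinate."""
    angle = 0.5 * s[0]
    return z[0] * np.array([np.cos(angle), np.sin(angle)])

def consistency_gap(z, s1, s2):
    """Distance between the next states reached from s1 and s2 under the same z."""
    next1 = transition(s1, phi(z, s1))
    next2 = transition(s2, phi(z, s2))
    return np.linalg.norm(next1 - next2)  # Euclidean stand-in for d_M

z = np.array([1.0])
s1 = np.array([0.0, 0.0])
s2 = np.array([0.01, 0.0])               # a nearby state
gap = consistency_gap(z, s1, s2)
```

For this smooth decoder the gap stays on the order of the distance between the two start states; a decoder that flipped direction between `s1` and `s2` would produce a much larger gap for the same input.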

Scalability Our last property is complementary to consistency. Thinking again about the example of teleoperating a robot along a line, when you press the joystick slightly to the right, you expect the robot to move slowly; and when you hold the joystick all the way to the right, you anticipate that the robot will move quickly. Smaller inputs should cause smaller motions, and larger inputs should cause larger motions. Formally, we say that a latent action space \(\mathcal {Z}\) is scalable if \(\Vert s-s'\Vert \rightarrow \infty \) as \(\Vert z \Vert \rightarrow \infty \), where \(s'=\mathcal {T}(s, \phi (z, s))\). When put together, our consistency and scalability properties ensure that the decoder function \(\phi \) is Lipschitz continuous.
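The scalability property can be checked in the same spirit by measuring how far the robot moves as the latent input grows. This sketch assumes an integrator transition and compares two hypothetical decoders: one that is linear in z and one whose output saturates (as a bounded output nonlinearity would).

```python
import numpy as np

def step_size(phi, s, z, dt=0.1):
    """Distance moved in one timestep, ||s - s'||, under assumed integrator dynamics."""
    s_next = s + dt * phi(z, s)
    return np.linalg.norm(s_next - s)

def phi_linear(z, s):
    """Hypothetical decoder whose output grows with the latent input."""
    return np.array([z[0], 0.0])

def phi_saturating(z, s):
    """Hypothetical decoder with a bounded output."""
    return np.array([np.tanh(z[0]), 0.0])

s = np.zeros(2)
magnitudes = [1.0, 10.0, 100.0]
linear_steps = [step_size(phi_linear, s, np.array([m])) for m in magnitudes]
saturating_steps = [step_size(phi_saturating, s, np.array([m])) for m in magnitudes]
```

The linear decoder moves farther as the input grows, whereas the saturating decoder never moves more than `dt` per step, so it cannot satisfy the limit in the scalability definition.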

Fig. 4 Shared autonomy with learned latent actions. (Left) as the human teleoperates the robot towards their desired goal, the robot’s belief in that goal increases, and the robot selects assistive actions \(a_r\) to help the human autonomously reach and maintain their high-level goal. (Right) the meaning of the latent actions changes as a function of the robot’s belief. At the start of the task, when the robot is not sure about any goal, the latent actions z produce high-level reaching motions (shown in blue). As the robot becomes confident in the human’s goal the meaning of the latent actions becomes more refined, and z increasingly controls fine-grained manipulation (shown in green).

4.2 Models for learning latent actions

Now that we have formally introduced the properties that a user-friendly latent space should satisfy, we will explore low-DoF embeddings that capture these properties; specifically, models which learn \(\phi :\mathcal {Z} \times \mathcal {C} \rightarrow \mathcal {A}\) from offline demonstrations \(\mathcal {D}\). We are interested in models that balance expressiveness with intuition: the embedding must reconstruct high-DoF actions while remaining controllable, consistent, and scalable. We assert that only models which reason over the robot’s context when decoding the human’s inputs can accurately and intuitively interpret the latent action. Our overall model structure is outlined in Fig. 2.

Reconstructing Actions Let us return to our assistive eating example: when the person applies a low-dimensional joystick input, the robot completes a high-dimensional action. We use autoencoders to move between these low- and high-DoF action spaces. Define \(\psi : \mathcal {C} \times \mathcal {A} \rightarrow \mathcal {Z}\) as an encoder that embeds the robot’s behavior into a latent space, and define \(\phi : \mathcal {Z} \rightarrow \mathcal {A}\) as a decoder that reconstructs a high-DoF robot action \(a_h\) from this latent space. Intuitively, the reconstructed robot action \(a_h\) should match the demonstrated action a. To encourage models to learn latent actions that accurately reconstruct high-DoF robot behavior, we incorporate the reconstruction error \(\Vert a - a_h\Vert ^2\) into the model’s loss function. Let \(\mathcal {L}\) denote the loss function our model is trying to minimize; when we only focus on reconstructing actions, our loss function is:

\[ \mathcal {L} = \Vert a - \phi (\psi (c, a))\Vert ^2 \tag{6} \]

Both principal component analysis (PCA) and autoencoder (AE) models minimize this loss function.

Regularizing Latent Actions When the user slightly tilts the joystick, the robot should not suddenly cut the entire block of tofu. To better ensure this consistency and scalability, we incorporate a normalization term into the model’s loss function. Let us define \(\psi : \mathcal {C} \times \mathcal {A} \rightarrow \mathbb {R}^d \times \mathbb {R}_+^d\) as an encoder that outputs the mean \(\mu \) and covariance \(\sigma \) over the latent action space. We penalize the divergence between this latent action space and a normal distribution: \(KL(\mathcal {N}(\mu , \sigma ) ~\Vert ~ \mathcal {N}(0, 1))\). When we incorporate this normalizer with weight \(\lambda \), our loss function becomes:

\[ \mathcal {L} = \Vert a - \phi (z)\Vert ^2 + \lambda \cdot KL(\mathcal {N}(\mu , \sigma ) ~\Vert ~ \mathcal {N}(0, 1)) \tag{7} \]

Variational autoencoder (VAE) models (Kingma and Welling 2014; Doersch 2016) minimize this loss function by trading off between reconstruction error and normalization.

Conditioning on State Importantly, we recognize that the meaning of the human’s joystick input often depends on what part of the task the robot is performing. When the robot is above a block of tofu, pressing down on the joystick indicates that the robot should stab the food; but when the robot is far away from the tofu, it does not make sense for the robot to stab! So that robots can associate meanings with latent actions, we condition the interpretation of the latent action on the robot’s current context. Define \(\phi : \mathcal {Z} \times \mathcal {C} \rightarrow \mathcal {A}\) as a decoder that now makes decisions based on both z and c. Leveraging this conditioned decoder, our final loss function is:

\[ \mathcal {L} = \Vert a - \phi (z, c)\Vert ^2 + \lambda \cdot KL(\mathcal {N}(\mu , \sigma ) ~\Vert ~ \mathcal {N}(0, 1)) \tag{8} \]

We expect that conditional autoencoders (cAE) and conditional variational autoencoders (cVAE) which use \(\phi \) will learn more expressive and controllable actions than their non-context conditioned counterparts. Note that cVAEs minimize Eq. (8), while cAEs do not include the normalization term (i.e., \(\lambda = 0\)).
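The loss terms described above can be illustrated numerically. The following is a minimal NumPy sketch, not the implementation used in the paper: the linear encoder and decoder, the weight matrices `enc_W` and `dec_W`, and the function names are assumptions made for illustration. It treats \(\sigma \) as a per-dimension standard deviation and uses the closed-form KL divergence between a diagonal Gaussian and a standard normal.

```python
import numpy as np

def kl_to_standard_normal(mu, sigma):
    """Closed-form KL( N(mu, diag(sigma^2)) || N(0, I) ), summed over latent dims."""
    return 0.5 * np.sum(sigma**2 + mu**2 - 1.0 - 2.0 * np.log(sigma))

def cvae_loss(a, c, enc_W, dec_W, lam=0.1, rng=None):
    """Reconstruction error plus lam * KL for one (context, action) pair.

    enc_W maps [c, a] to [mu, log_sigma]; dec_W maps [z, c] to an action.
    Both are hypothetical linear stand-ins for the encoder psi and decoder phi.
    Setting lam = 0 gives a cAE-style objective without the normalization term.
    """
    rng = np.random.default_rng(0) if rng is None else rng
    d = enc_W.shape[0] // 2
    h = enc_W @ np.concatenate([c, a])
    mu, sigma = h[:d], np.exp(h[d:])
    z = mu + sigma * rng.standard_normal(d)      # reparameterized latent sample
    a_h = dec_W @ np.concatenate([z, c])         # decoder conditioned on context
    recon = np.sum((a - a_h) ** 2)
    return recon + lam * kl_to_standard_normal(mu, sigma)

# Toy dimensions: 7-DoF action, 7-DoF context (state), 2-DoF latent action.
rng = np.random.default_rng(1)
n, d = 7, 2
enc_W = 0.1 * rng.standard_normal((2 * d, 2 * n))
dec_W = 0.1 * rng.standard_normal((n, d + n))
a, c = rng.standard_normal(n), rng.standard_normal(n)
loss = cvae_loss(a, c, enc_W, dec_W)
```

In this sketch the KL term vanishes exactly when the encoder outputs \(\mu = 0\) and \(\sigma = 1\), which is why a large weight on it can wash out the latent action; the weight `lam` plays the role of \(\lambda \).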

Relation to Properties Models trained to minimize the listed loss functions are encouraged to satisfy our user-friendly properties. For example, in Eq. (8) the decoder is conditioned on the current context, while minimizing the reconstruction loss \(\Vert a - \phi (z,c)\Vert ^2\) ensures that the robot can reproduce the demonstrations, and is therefore controllable. Enforcing consistency and scalability is more challenging, particularly when we do not know the similarity metric \(d_M\), but including the normalization term prevents the latent space from assigning arbitrary and irregular values to z. To better understand how these models enforce our desired properties, we conduct a set of controlled simulations in Sect. 8.1.

5 Combining latent actions with shared autonomy

The latent actions learned in Sect. 4 provide an expressive mapping between low-dimensional user inputs and high-dimensional robot actions. But controlling an assistive robot with latent actions alone still presents a challenge: any imprecision or noise in either the user’s inputs or latent space is reflected in the decoded actions. Recall our eating example: at the start of the task, the human uses latent actions to guide the robot towards their high-level goal (i.e., reaching the tofu). Once the robot is close to the tofu, however, the human no longer needs to control reaching motions—instead, the human leverages latent actions to precisely manipulate the tofu, performing low-level cutting and stabbing tasks. Here the human’s inputs should not unintentionally cause the robot arm to drift away from the tofu or suddenly jerk into the table. Instead, the robot should maintain the human’s high-level goal. In this section we incorporate shared autonomy alongside latent actions: this approach assists the human towards their high-level goals, and then maintains these goals as the human focuses on low-level manipulation. We visualize shared autonomy with learned latent actions in Fig. 4.

5.1 Latent actions with shared autonomy

We first explain how to combine latent actions with shared autonomy. Remember that the human’s joystick input is u and the latent action is z. For now we assume some pre-defined mapping from u to z (i.e., \(z=u\)) so that the human’s joystick inputs are treated as latent actions. In the last section we learned a decoder \(a_h = \phi (z,c)\), where the output of this decoder is a high-dimensional robot action commanded by the human. Here we combine this commanded action with \(a_r\), an autonomous assistive action that helps the user reach and maintain their high-level goals.

Belief over Goals Similar to Dragan and Srinivasa (2013); Javdani et al. (2018); Gopinath et al. (2016), we assume access to a discrete set of high-level goals \(\mathcal {G}\) that the human may want to reach. Within our eating scenario these goals are food items (e.g., the tofu, rice, a plate, marshmallows). Although the robot knows which goals are possible, the robot does not know the human’s current goal \(g^* \in \mathcal {G}\). We let \(b=P(g ~|~ s^{0:t}, u^{0:t})\) denote the robot’s belief over this space of candidate goals, where \(b(g) = 1\) indicates that the robot is convinced that g is the human’s desired goal. Here \(s^{0:t}\) is the history of states and \(u^{0:t}\) is the history of human inputs: we use Bayesian inference to update the robot’s belief b given the human’s past decisions:

This Bayesian inference approach for updating b is explored by prior work on shared autonomy (Jain and Argall 2019).
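A recursive Bayesian update of this kind can be sketched as follows. The likelihood model here is an assumption made for illustration (a Boltzmann-style model in which commanded actions pointing toward a goal are exponentially more likely under that goal); the function name `update_belief`, the rationality constant `beta`, and the goal positions are hypothetical.

```python
import numpy as np

def update_belief(belief, s, a_h, goals, beta=5.0):
    """One Bayesian step: posterior(g) is proportional to likelihood(a_h | s, g) * prior(g).

    The likelihood is an assumed Boltzmann-style model based on the cosine
    similarity between the commanded action and the direction to each goal.
    """
    to_goal = goals - s                                    # shape (num_goals, dim)
    norms = np.linalg.norm(to_goal, axis=1) * np.linalg.norm(a_h) + 1e-9
    alignment = (to_goal @ a_h) / norms                    # cosine similarity per goal
    posterior = belief * np.exp(beta * alignment)
    return posterior / posterior.sum()

goals = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])   # e.g., tofu, rice, plate
belief = np.ones(3) / 3                                    # uniform prior over goals
s = np.zeros(2)
for _ in range(5):                                         # human keeps commanding motion toward goal 0
    belief = update_belief(belief, s, np.array([0.5, 0.0]), goals)
```

After a few consistent inputs the belief concentrates on the goal the human is steering toward, which is the behavior the shared autonomy component relies on.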

Importantly, the meaning of the human’s joystick inputs changes as a function of the robot’s belief. Imagine that you are using a 1-DoF joystick to get the tofu in our eating example. At the start of the task—when the robot is unsure of your goal—you press left and right on the joystick to move towards your high-level goal. Once you’ve reached the tofu—and the robot is confident in your goal—you need to use those same joystick inputs to carefully align the orientation of the fork. In order to learn latent action spaces that can continuously alternate along a spectrum of high-level goals and fine-grained preferences, we now condition \(\phi \) on the robot’s current state as well as its belief. Hence, instead of \(c=s\), we now have \(c = (s, b)\). Conditioning on belief enables the meaning of latent actions to change based on the robot’s confidence. As a result of this proposed structure, latent actions purely indicate the desired goal when the robot is unsure; and once the robot is confident about the human’s goal, latent actions gradually change to convey the precise manipulation. We note that b is available when collecting demonstrations \(\mathcal {D}\), since the robot can compute its belief using the Bayesian update above based on the demonstrated trajectory. Take a demonstration that moves the robot to the tofu: initially the robot has a uniform belief over goals, but as the demonstration moves towards the tofu, the robot applies Eq. (9) to increase its belief b over the tofu goal.

Shared Autonomy Recall that the robot applies assistance via action \(a_r\) in Eq. (2). In order to assist the human, the robot needs to understand the human’s intent, i.e., which goal they want to reach. The robot’s understanding of the human’s intended goal is captured by belief b, and we leverage this belief to select an assistive action \(a_r\). As shown in Eq. (3), the robot selects \(a_r\) to guide the robot towards each discrete goal \(g \in \mathcal {G}\) in proportion to the robot’s confidence in that goal (see Footnote 2). Combining these equations with our learned latent actions, we find the robot’s overall action a:

Recall that \(\alpha \in [0, 1]\) arbitrates between human control (\(\alpha = 0\)) and assistive guidance (\(\alpha = 1\)). In practice, if the robot has a uniform prior over which morsel of food the human wants to eat, \(a_r\) guides the robot to the center of these morsels. And—when the human indicates a desired morsel—\(a_r\) moves the robot towards that target before maintaining the target position.
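The arbitration described above can be made concrete with a short sketch. It assumes that the assistive action is the belief-weighted sum of the directions from the current state to each goal, which is consistent with the description that a uniform prior guides the robot to the center of the morsels; the function names and goal positions are illustrative.

```python
import numpy as np

def assistive_action(s, goals, belief):
    """a_r: belief-weighted sum of the directions from state s to each goal."""
    return belief @ (goals - s)

def blend(a_h, a_r, alpha):
    """Overall action: alpha = 0 is pure human control, alpha = 1 pure assistance."""
    return (1.0 - alpha) * a_h + alpha * a_r

goals = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
s = np.array([0.2, -0.4])
uniform = np.ones(3) / 3
a_r_uniform = assistive_action(s, goals, uniform)          # points at the centroid of the goals
a_r_confident = assistive_action(s, goals, np.array([1.0, 0.0, 0.0]))
a = blend(np.array([0.0, 0.3]), a_r_confident, alpha=0.5)  # equal mix of human and robot
```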

5.2 Reaching and changing goals

In Eq. (10) we incorporated shared autonomy with latent actions to tackle assistive eating tasks that require high-level reaching and precise manipulation. Both latent actions and shared autonomy have an independent role within this method: but how can we be sure that the combination of these tools will remain effective? Returning to our eating example—if the human inputs latent actions, will shared autonomy correctly guide the robot to the desired morsel of food? And what if the human has multiple goals in mind (e.g., getting a chip and then dipping it in salsa)—can the human leverage latent actions to change goals even when shared autonomy is confident in the original goal?

Converging to the Desired Goal We first explore how our approach ensures that the human reaches their desired goal. Consider the Lyapunov function:

where e denotes the error between the robot’s current state s and the human’s goal \(g^*\). We want the robot to choose actions that minimize Eq. (11) across a spectrum of user skill levels and teleoperation strategies. Let us focus on the common setting in which s is the robot’s joint position and a is the joint velocity, so that \(\dot{s}(t) = a(t)\). Taking the derivative of Eq. (11) and substituting in this transition function, we reach (see Footnote 3):

We want Eq. (12) to be negative, so that V (and thus the error e) decreases over time. A sufficient condition for \(\dot{V} < 0\) is:

where \(\mathcal {G}'\) is the set of all goals except \(g^*\). As a final step, we bound the magnitude of the decoded action, such that \(\Vert \phi (\cdot )\Vert < \sigma _h\), and we define \(\sigma _r\) as the distance between s and the furthest goal: \(\sigma _r = \max _{g \in \mathcal {G}'} \Vert g - s\Vert \). Now we have \(\dot{V} < 0\) if:

We define \(\delta := \sigma _h + \big (1 - b(g^*)\big )\cdot \sigma _r\). We therefore conclude that our approach in Eq. (10) yields uniformly ultimately bounded stability about the human’s goal, where \(\delta \) affects the radius of this bound (Spong et al. 2006). As the robot’s confidence in \(g^*\) increases, \(\delta \rightarrow \sigma _h\), and the robot’s error e decreases so long as \(\Vert e(t) \Vert > \sigma _h\). Intuitively, this guarantees that the robot will move to some ball around the human’s goal \(g^*\) (even if we treat the human input as a disturbance), and the radius of that ball decreases as the robot becomes more confident.
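The steps of this argument can be traced in a short derivation. The block below is a reconstruction under stated assumptions rather than the exact equations of the paper: it assumes a quadratic Lyapunov function \(V = \tfrac{1}{2}\Vert e\Vert ^2\) with \(e = g^* - s\) and a fixed goal, an assistive action of the form \(a_r = \sum _{g} b(g)(g - s)\), and an equal blend \(\alpha = 1/2\).

```latex
\begin{aligned}
V &= \tfrac{1}{2}\, e^\top e, \qquad e = g^* - s, \qquad \dot{e} = -\dot{s} = -a \\
\dot{V} &= e^\top \dot{e}
         = -\tfrac{1}{2}\, e^\top \Big[ \phi(z, c) + \sum_{g \in \mathcal{G}} b(g)\,(g - s) \Big] \\
        &= -\tfrac{1}{2} \Big[ b(g^*)\,\Vert e \Vert^2 + e^\top \phi(z, c)
           + \sum_{g \in \mathcal{G}'} b(g)\, e^\top (g - s) \Big] \\
\dot{V} < 0 \;&\Leftarrow\; b(g^*)\,\Vert e \Vert^2 >
           \Vert e \Vert \Big( \sigma_h + \big(1 - b(g^*)\big)\,\sigma_r \Big) \\
        &\Leftrightarrow\; b(g^*)\,\Vert e \Vert > \sigma_h + \big(1 - b(g^*)\big)\,\sigma_r = \delta
\end{aligned}
```

The fourth line uses the Cauchy-Schwarz inequality together with \(\Vert \phi (\cdot )\Vert < \sigma _h\), \(\Vert g - s\Vert \le \sigma _r\) for \(g \in \mathcal {G}'\), and \(\sum _{g \in \mathcal {G}'} b(g) = 1 - b(g^*)\). Under these assumptions, as \(b(g^*) \rightarrow 1\) the condition reduces to \(\Vert e \Vert > \sigma _h\), which matches the limiting behavior described above.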

Overview of alignment model. The human has in mind a preferred mapping between their joystick inputs u and their commanded actions \(a_h\). We break this into a two step process: aligning the joystick inputs with latent actions z, and then decoding z into a high-DoF action \(a_h\). In previous sections we focused on the decoder \(\phi \); now we learn a personalized alignment model f. The robot learns f offline in a semi-supervised manner by combining labeled queries with intuitive priors that capture the human’s underlying expectations of how the control mapping should behave

Changing Goals Our analysis so far suggests that the robot becomes constrained to a region about the most likely goal. This works well when the human correctly conveys their intentions to the robot—but what if the human makes a mistake, or changes their mind? How do we ensure that the robot is not trapped at an undesired goal? Re-examining Eq. (14), it is key that—in every context c—the human can convey sufficiently large actions \(\Vert \phi (z,c)\Vert \) towards their preferred goal, ensuring that \(\sigma _h\) does not decrease to zero. Put another way, the human must be able to increase the radius of the bounding ball, reducing the constraint imposed by shared autonomy.

To encourage the robot to learn latent actions that increase this radius, we introduce an additional term into our model’s loss function \(\mathcal {L}\). We reward the robot for learning latent actions that have high entropy with respect to the goals; i.e., in a given context c there exist latent actions z that cause the robot to move towards each of the goals \(g \in \mathcal {G}\). Define \(p_{c}(g)\) as proportional to the total score \(\eta \) accumulated for goal g:

where the score function \(\eta \) indicates how well action z taken from context c conveys the intent of moving to goal g, and the distribution \(p_{c}\) over \(\mathcal {G}\) captures the proportion of latent actions z at context c that move the robot toward each goal. Intuitively, \(p_{c}\) captures the comparative ease of moving toward each goal: when \(p_{c}(g) \rightarrow 1\), the human can easily move towards goal g since all latent actions at c induce movement towards goal g and consequently, no latent actions guide the robot towards any other goals. We seek to avoid learning latent actions where \(p_{c}(g) \rightarrow 1\), because in these scenarios the teleoperator cannot correct their mistakes or move towards a different goal. Recall from Sect. 4 that the model should minimize the reconstruction error while regularizing the latent space. We now argue that the model should additionally maximize the Shannon entropy of p, so that the loss function becomes:

Here the hyperparameter \(\lambda _2 > 0\) determines how much importance is assigned to maximizing the entropy over goals. When combining shared autonomy with latent actions, we employ this loss function to train the decoder \(\phi \) from dataset \(\mathcal {D}\).
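To illustrate the entropy term, the sketch below computes a goal distribution from a table of scores and evaluates its Shannon entropy. The score values are hypothetical, and the score function itself is not specified here; the sketch only assumes that scores are nonnegative and that \(p_{c}(g)\) is obtained by normalizing the total score per goal.

```python
import numpy as np

def goal_distribution(scores):
    """p_c(g): normalize the total score per goal; scores has shape (num_z, num_goals)."""
    totals = scores.sum(axis=0)
    return totals / totals.sum()

def shannon_entropy(p, eps=1e-12):
    """Entropy in nats; eps guards against log(0)."""
    return -np.sum(p * np.log(p + eps))

# Hypothetical scores eta(g, c, z) for 4 sampled latent actions and 3 goals.
balanced = np.array([[1.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0],
                     [0.0, 0.0, 1.0],
                     [1.0, 1.0, 1.0]])
collapsed = np.array([[1.0, 0.0, 0.0]] * 4)    # every latent action heads to goal 0

h_balanced = shannon_entropy(goal_distribution(balanced))
h_collapsed = shannon_entropy(goal_distribution(collapsed))
```

The balanced case reaches the maximum entropy \(\log 3\), while the collapsed case has entropy near zero; a training loss that maximizes entropy would subtract \(\lambda _2\) times this quantity, so it penalizes latent spaces like the collapsed one.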

6 Aligning latent actions with user preferences

In Sect. 4 we learned latent actions, and in Sect. 5 we combined these latent actions with shared autonomy to handle precise manipulation tasks. Throughout these sections we treated the human’s joystick inputs as the latent actions (i.e., \(z = u\)) since the joystick inputs and the latent actions have the same dimensionality. However, different users have different expectations for how the robot will interpret their inputs! Imagine that the shared autonomy has assisted us to the tofu, and now we want to control the robot through a cutting motion. One user expects \(u = down\) to cause the robot to cut, but another person thinks \(u = down\) should cause a stabbing motion. Accordingly, in this section we learn a personalized alignment \(z = f(u, c)\) that converts the human’s joystick inputs u to their preferred latent action z (see Fig. 5). Our goal is to make the robotic system easier to control: instead of forcing the human to adapt to \(\phi \), we want the robot to adapt to the user’s preferences (without fundamentally changing the latent action space or decoder \(\phi \)).

To learn the human’s preference we will query the user, showing them example robot motions and then asking them for the corresponding joystick input. But training our alignment model f may require a large number of motion-joystick pairs, particularly in complex tasks where the user must leverage the same joystick input to accomplish several things. It is impractical to ask the human to provide all of these labels. Accordingly, to address the challenge of insufficient training data, we employ a semi-supervised learning method (Chapelle et al. 2006). In this section we first outline our approach, and then formulate a set of intuitive priors that facilitate semi-supervised learning from limited human feedback.

6.1 Alignment model

Recall from Eq. (4) that we seek to learn a function approximator \(f : \mathcal {U} \times \mathcal {C} \rightarrow \mathcal {Z}\). Importantly, this alignment model is conditioned on the current context \(c \in \mathcal {C}\). Consider the person in our motivating example, who is using a 2-axis joystick to control a high-DoF assistive robot arm to reach and cut tofu. The user’s preferred way to control the robot is unclear: what does the user mean if they push the joystick right? When the robot is left of the tofu, the user might intend to move the robot towards the tofu—but when the robot is directly above the tofu, pressing right now indicates that the robot should rotate and start a cutting motion! This mapping from the user input to intended action is not only person dependent, but it is also context dependent. In practice, this context dependency prevents us from learning a single transformation to uniformly apply across the robot’s workspace; instead, we need an intelligent strategy for understanding the human’s preferences in different contexts.

Model To capture this interdependence we employ a general Multi-Layer Perceptron (MLP). The MLP f takes in the current user input \(u^t\) and context \(c^t\), and outputs a latent action \(z^t\). Combining f with our latent action model, we now have a two-step mapping between the human’s low-dimensional input and the human’s high-dimensional command:

\[ z^t = f(u^t, c^t), \qquad a_h^t = \phi (z^t, c^t) \tag{17} \]

When using our alignment model online, we get the human’s commanded action using Eq. (17), and then combine this with shared autonomy to provide the overall robot action a. But offline, when we are learning f, we set \(a = a_h\), so that the robot directly executes the human’s commanded action. This disentangles the effects of shared autonomy and latent actions, and lets us focus on learning the preferred mapping from joystick inputs u to robot actions a. Given that the robot takes action \(a^t\) at the current timestep t, the state \(s^{t+1}\) at the next timestep follows our transition model: \(s^{t+1} = \mathcal {T}(s^t, a^t)\). Letting \(a = a_h\), and plugging in Eq. (17), we get the following relationship between joystick inputs and robot motion:

Our objective is to learn f so that \(s^{t+1} = T(s^t, u^t)\) matches the human’s expectations. The overall training process is visualized in Fig. 6.

Loss function We train f to minimize the loss function \(\mathcal {L}_{\text {align}}\). We emphasize that this loss function (used for training the alignment model f) is different from the loss function described in Sects. 4 and 5 (which was used for training the decoder \(\phi \)). Importantly, \(\mathcal {L}_{\text {align}}\) must capture the individual user’s expected joystick mapping, and to understand what the user expects, we start by asking a set of questions. In each separate query, the robot starts in a state \(s^t\) and moves to some state \(s^*\). We then ask the user to label this motion with their preferred joystick input u, resulting in the labeled data tuple \((s^t, u^t, s^*)\). For instance, the robot arm starts above the tofu, and then stabs down to break off a piece: you might label this motion by holding down on the joystick (i.e., \(u = \text {down}\)).

Given a start state \(s^t\) and input \(u^t\) from the user’s labeled data, the robot learns f to minimize the distance between \(T(s^t, u^t)\) and \(s^*\). Letting N denote the number of queries that the human has answered, and letting d be the distance metric, our alignment function should minimize:

If we could ask the human as many questions as necessary, then \(\mathcal {L}_{\text {align}} = \mathcal {L}_{\text {sup}}\), and our alignment function only needs to minimize the supervised loss. But collecting this large dataset is impractical. Accordingly, to minimize the number of questions the human must answer, we introduce additional loss terms in \(\mathcal {L}_{\text {align}}\) that capture underlying priors in human expectations.
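The supervised term can be sketched in a few lines. This is an illustration under assumptions: the distance metric d is taken to be squared Euclidean distance, the decoder is replaced by an identity stand-in, and the alignment functions are fixed linear maps rather than a trained MLP. All names are hypothetical.

```python
import numpy as np

def step(s, u, f, phi, dt=1.0):
    """T(s, u): align u to a latent action, decode it, and integrate one step."""
    z = f(u, s)
    return s + dt * phi(z, s)

def supervised_loss(queries, f, phi):
    """Mean squared distance between predicted and demonstrated next states."""
    errors = [np.sum((step(s, u, f, phi) - s_star) ** 2) for s, u, s_star in queries]
    return float(np.mean(errors))

phi = lambda z, s: z                          # stand-in decoder: latent action is the motion
swap = np.array([[0.0, 1.0], [1.0, 0.0]])     # this user expects the joystick axes swapped
queries = [(np.zeros(2), u, swap @ u) for u in np.eye(2)]   # labeled (s, u, s*) tuples

identity_f = lambda u, s: u                   # the default alignment z = u
aligned_f = lambda u, s: swap @ u             # alignment matching the user's labels
loss_before = supervised_loss(queries, identity_f, phi)
loss_after = supervised_loss(queries, aligned_f, phi)
```

With only two labeled queries the loss already distinguishes the default mapping from the user's preferred one, but it says nothing about unlabeled states, which motivates the additional priors.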

Training our alignment model \(z = f(u, c)\). Here the context c is equal to the robot’s state s. (Left) the example task is to move the robot’s end-effector in a 2D plane, and the current user prefers for the robot’s end-effector motion to align with their joystick axes, so that \(u_1\) moves the robot in the x-axis and \(u_2\) moves the robot in the y-axis. (Right) we take snapshots at three different points during training, and plot how the robot actually moves when the human presses up, down, left, and right. Note that this alignment is context dependent. As training progresses, the robot learns the alignment f, and the robot’s motions are gradually and consistently pushed to match with the human’s individual preferences

6.2 Reducing human data with intuitive priors

Our insight here is that humans share some underlying expectations of how the control mapping should behave (Jonschkowski and Brock 2014). We will formulate these common expectations—i.e., priors—as loss terms that f minimizes within semi-supervised learning.

When introducing these priors, it helps to refine our notation. Recall that s is the system state: here we use s to specifically refer to the robot’s joint position, and we denote the forward kinematics of the robot arm as \(x = \varPsi (s)\). End-effector pose x is particularly important, since humans often focus on the robot’s gripper during eating tasks. When the human applies joystick input u at state s, the corresponding change in end-effector pose x is: \(\varDelta x = \varPsi (T(s, u)) - \varPsi (s)\). With these definitions in mind, we argue an intuitive controller should satisfy the properties listed below. We emphasize that, although these properties share some common themes with the latent action properties from Sect. 4, the purpose of these properties is different. When formalizing the properties for latent actions we focused on enabling the human to complete tasks using these latent actions. By contrast, here we focus on intuitive and task-agnostic expectations for controller mappings.

Proportionality The amount of change in the position and orientation of the robot’s end-effector should be proportional to the scale of the human’s input. In other words, for scalar \(\alpha \), we expect:

We accordingly define the proportionality loss \(\mathcal {L}_{\text {prop}}\) as:

where \(\alpha \) is sampled from our range of joystick inputs.

Reversibility If a joystick input u makes the robot move forward from \(s_1\) to \(s_2\), then the opposite input \((-u)\) should move the robot back from \(s_2\) to its original end-effector position. In other words, we expect:

This property ensures users can recover from their mistakes. We define the reversibility loss \(\mathcal {L}_{\text {reverse}}\) as:

Here \(\varPsi (s)\) is the current position and orientation of the robot’s end-effector, and the right term is the pose of the end-effector after executing human input u followed by the opposite input \((-u)\).

Consistency The same input taken at nearby states should lead to similar changes in robot pose. We previously discussed a similar property in Sect. 4 when formalizing latent actions. Here we specifically focus on the input-output relationship between joystick input u and end-effector position \(x = \varPsi (s)\):

We expect \(\Vert \varDelta x_1 - \varDelta x_2 \Vert \rightarrow 0\) as \(\Vert s_1 - s_2 \Vert \rightarrow 0\). Consistency prevents sudden changes in the alignment mapping. We define the consistency loss \(\mathcal {L}_{\text {con}}\) as:

When the hyperparameter \(\gamma \rightarrow 0\), the robot only enforces consistency at local states, and when \(\gamma \rightarrow \infty \), the robot tries to enforce consistency at all states.

Semi-supervised learning When learning our alignment model f, we first collect a batch of robot motions \((s, s^*)\). The human labels N of these (start-state, end-state) pairs with their preferred joystick input u, so that we have labeled data \((s, u, s^*)\). We then train the alignment model to minimize the supervised loss for the labeled data, as well as the semi-supervised loss for the unlabeled data. Hence, the cumulative loss function is:

Importantly, incorporating these different loss terms—which are inspired by human priors over controllable spaces (Jonschkowski and Brock 2014)—enables the robot to generalize the labeled human data (which it performs supervised learning on) to unlabeled states (which it can now perform semi-supervised learning on).
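The three priors can be written as penalties that need no human labels. The sketch below is one plausible form, not the loss used in the paper: squared Euclidean norms, the kernel \(\exp (-\Vert s_1 - s_2\Vert ^2 / \gamma )\) used to weight the consistency term, and the toy transition functions are all assumptions. The kernel is chosen so that small \(\gamma \) enforces consistency only locally and large \(\gamma \) enforces it everywhere, matching the description above.

```python
import numpy as np

def delta_x(s, u, T, fk):
    """Change in end-effector pose caused by joystick input u at state s."""
    return fk(T(s, u)) - fk(s)

def prior_losses(states, inputs, T, fk, scale=0.5, gamma=1.0):
    """Illustrative proportionality, reversibility, and consistency penalties."""
    prop = rev = con = 0.0
    for s in states:
        for u in inputs:
            dx = delta_x(s, u, T, fk)
            # Proportionality: scaling the input should scale the motion.
            prop += np.sum((delta_x(s, scale * u, T, fk) - scale * dx) ** 2)
            # Reversibility: u followed by -u should return to the same pose.
            rev += np.sum((fk(T(T(s, u), -u)) - fk(s)) ** 2)
            # Consistency: nearby states should respond similarly to u.
            for s2 in states:
                w = np.exp(-np.sum((s - s2) ** 2) / gamma)   # assumed weighting kernel
                con += w * np.sum((dx - delta_x(s2, u, T, fk)) ** 2)
    return prop, rev, con

fk = lambda s: s                                   # stand-in forward kinematics
linear_T = lambda s, u: s + 0.1 * u                # satisfies all three priors
warped_T = lambda s, u: s + 0.1 * np.abs(u)        # ignores input sign, so not reversible

states = [np.array([0.0, 0.0]), np.array([0.5, 0.2])]
inputs = list(np.eye(2))
prop_l, rev_l, con_l = prior_losses(states, inputs, linear_T, fk)
prop_w, rev_w, con_w = prior_losses(states, inputs, warped_T, fk)
```

A cumulative alignment loss would add a weighted sum of these penalties, evaluated on unlabeled states, to the supervised loss on the labeled queries; the weights are hyperparameters not specified here.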

7 Algorithm

Sections 4, 5, and 6 developed parts of our approach. Here we put these pieces together to present our general algorithm for controlling assistive eating robots with learned latent actions. Our approach is summarized in Algorithm 1 and explained below.

Given an assistive eating scenario, we start by identifying the food items and other potential goals that the human may want to reach (Line 1). We then collect high-dimensional kinesthetic demonstrations, where a caretaker backdrives the robot through task-related motions that interact with these potential goals (Line 2). Leveraging the properties and models from Sect. 4, we then train our latent action space and learn decoder \(\phi \) (Line 3). Next, we show the user example robot motions (e.g., by sampling values of z) and ask the user to label these motions with their preferred joystick input (Line 4). Applying the priors developed in Sect. 6, we generalize from a small number of human labels to learn the alignment f between joystick inputs and latent actions (Line 5).

Once we have learned \(\phi \) and f, we are ready for the human-in-the-loop. At each timestep the human presses their low-DoF joystick to provide input u. We find the latent action z that is aligned with the human’s input (Line 6), and then decode that low-DoF latent action to get a high-DoF robot action \(a_h\) (Line 7). In order to help the human reach and maintain their high-level goals, we incorporate the shared autonomy approach from Sect. 5. Shared autonomy selects an assistive action \(a_r\) based on the current belief over the human’s goal (Line 8), and the robot blends \(a_r\) and \(a_h\) to take overall action a (Line 9). Finally, the robot applies Bayesian inference to update its understanding of the human’s desired goal based on their joystick input (Line 10). We repeat this process until the human has finished eating.
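The online portion of this procedure (Lines 6 to 10) can be sketched as a single function. Everything below is a toy stand-in for illustration: the alignment f and decoder \(\phi \) are identity maps rather than learned models, the belief update uses an assumed Boltzmann-style likelihood, and the simulated human simply steers toward one goal.

```python
import numpy as np

def update_belief(belief, s, a_h, goals, beta=5.0):
    """Assumed Bayesian update: actions pointing at a goal raise belief in that goal."""
    to_goal = goals - s
    norms = np.linalg.norm(to_goal, axis=1) * np.linalg.norm(a_h) + 1e-9
    posterior = belief * np.exp(beta * (to_goal @ a_h) / norms)
    return posterior / posterior.sum()

def teleoperation_step(s, u, belief, goals, f, phi, alpha=0.5, dt=0.1):
    """One pass through the online loop: align, decode, assist, blend, update belief."""
    c = (s, belief)                       # context: state and belief
    z = f(u, c)                           # Line 6: align joystick input with a latent action
    a_h = phi(z, c)                       # Line 7: decode into a high-DoF action
    a_r = belief @ (goals - s)            # Line 8: assistance weighted by belief
    a = (1 - alpha) * a_h + alpha * a_r   # Line 9: blend human command and assistance
    belief = update_belief(belief, s, a_h, goals)   # Line 10: Bayesian inference
    return s + dt * a, belief

f = lambda u, c: u                        # stand-in alignment model
phi = lambda z, c: z                      # stand-in decoder
goals = np.array([[1.0, 0.0], [0.0, 1.0]])
s, belief = np.zeros(2), np.ones(2) / 2
for _ in range(100):
    u = np.clip(goals[0] - s, -1.0, 1.0)  # simulated human steering toward goal 0
    s, belief = teleoperation_step(s, u, belief, goals, f, phi)
```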

How Practical is Our Approach? One concern is the amount of data required to learn latent actions. In all of the studies reported below—where the assistive robot makes a mock-up apple pie, assembles dessert, and cuts tofu—the robot was trained with a maximum of twenty minutes of kinesthetic demonstrations, and all training was done on-board the robot computer. We recognize that this short training time is likely due to the structure of the cVAE model used in these tasks and may not hold true in general; however, this easy implementation holds promise for future use. In the following sections, we demonstrate the objective and subjective benefits of Algorithm 1, as well as highlighting some of its shortcomings.

Where Do the Goals Come From? Another question is how the robot detects the discrete set of goals \(\mathcal {G}\) that the human may want to reach. Here we turn to perception, where recent assistive eating work shows how robot arms can estimate the pose of various objects of interest (Feng et al. 2019; Park et al. 2020). Determining which objects are potential goals is simplified in eating settings, since the target items are largely consistent (e.g., food items, cups, plates, and bowls). The location of these goals is included in the state s and the robot uses this information when decoding the human’s joystick inputs. Although not covered in this paper, it is also possible to condition latent actions directly on the robot’s perception, so that s becomes the visual inputs (Karamcheti et al. 2021).

8 Simulations

We performed three separate simulations, one for each key aspect of our proposed method. First we leverage different autoencoder models to learn latent actions, and determine which types of models best capture the user-friendly properties formalized in Sect. 4. Our second simulation then compares learned latent actions alone to latent actions with shared autonomy (Sect. 5), and focuses on how shared autonomy helps users reach, maintain, and change their high-level goals. Finally, we learn the alignment model from Sect. 6 between joystick inputs and latent actions. We compare versions of our semi-supervised approach with intuitive priors, and see how these priors improve the alignment when we only have access to limited and imperfect human feedback. All three simulations were performed in controlled conditions with simulated humans and simulated or real robot arms. These simulated humans chose joystick inputs according to mathematical models of human decision making, as detailed below.

8.1 Do learned latent actions capture our user-friendly properties?

Here we explore how well our proposed models for learning latent actions capture the user-friendly properties formalized in Sect. 4. These properties include controllability, consistency, and scalability.

Setup We simulate one-arm and two-arm planar robots, where each arm has \(n=5\) degrees-of-freedom. The state \(s \in \mathbb {R}^{n}\) is the robot’s joint position, and the action \(a \in \mathbb {R}^{n}\) is the robot’s joint velocity. Hence, the robot transitions according to: \(s^{t+1} = s^t + a^t \cdot dt\), where dt is the step size. Demonstrations consist of trajectories of state-action pairs: in each of the different simulated tasks, the robot trains with a total of 10,000 state-action pairs.
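The transition model above is straightforward to simulate. The following sketch implements it for a single planar 5-DoF arm, together with forward kinematics; the unit link lengths and the step size are assumptions made for illustration.

```python
import numpy as np

def forward_kinematics(s, link_length=1.0):
    """End-effector (x, y) of a planar arm whose relative joint angles are s."""
    angles = np.cumsum(s)                      # absolute orientation of each link
    return link_length * np.array([np.cos(angles).sum(), np.sin(angles).sum()])

def transition(s, a, dt=0.1):
    """Euler step of the joint-velocity model: s^{t+1} = s^t + a^t * dt."""
    return s + a * dt

s = np.zeros(5)                                # arm stretched along the x-axis
a = np.array([1.0, 0.0, 0.0, 0.0, 0.0])        # rotate only the base joint
for _ in range(10):
    s = transition(s, a)
x = forward_kinematics(s)                      # arm rotated by roughly 1 rad about the base
```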

Tasks The simulated robots perform four different tasks.

1. Sine: one 5-DoF robot arm moves its end-effector along a sine wave with a 1-DoF latent action
2. Rotate: two 5-DoF robot arms are holding a box, and rotate that box about a fixed point using a 1-DoF latent action
3. Circle: one 5-DoF robot moves back and forth along circles of different radii with a 2-DoF latent action
4. Reach: one 5-DoF robot arm reaches from a start location to a goal region with a 1-DoF latent action

Model details We test latent action models which minimize the different loss functions described in Sect. 4. Specifically, we test:

- Principal Component Analysis (PCA)
- Autoencoders (AE)
- Variational autoencoders (VAE)
- Conditioned autoencoders (cAE)
- Conditioned variational autoencoders (cVAE)

The encoders and decoders contain between two and four linear layers (depending on the task). The loss function is optimized using Adam with a learning rate of \(10^{-2}\). Within the VAE and cVAE, we set the normalization weight \(\lambda < 1\) to avoid posterior collapse.

Dependent measures To determine accuracy, we measure the mean-squared error between the intended actions a and reconstructed actions \({\hat{a}}\) on a test set of state-action pairs (s, a) drawn from the same distribution as the training set.

To test model controllability, we select pairs of start and goal states \((s_i,s_j)\) from the test set, and solve for the latent actions z that minimize the error between the robot’s current state and \(s_j\). We then report this minimum state error.
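The controllability measure can be illustrated as follows. The text does not say how the minimizing latent actions are found, so this sketch swaps in a greedy grid search over z at every timestep; `min_state_error`, `toy_decoder`, the horizon, and the step size are all hypothetical choices for illustration, not the procedure used in the experiments.

```python
import math

def state_error(s, s_goal):
    """Euclidean distance between two states."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(s, s_goal)))

def min_state_error(s_start, s_goal, decoder, z_grid, horizon=50, dt=0.1):
    """Greedily pick the z that most reduces the state error at each step
    and return the smallest error seen (assumed search strategy)."""
    s = list(s_start)
    best = state_error(s, s_goal)
    for _ in range(horizon):
        candidates = []
        for z in z_grid:
            a = decoder(z, s)  # decoded high-DoF action
            s_next = [q + v * dt for q, v in zip(s, a)]
            candidates.append((state_error(s_next, s_goal), s_next))
        err, s = min(candidates, key=lambda c: c[0])
        best = min(best, err)
    return best

def toy_decoder(z, s):
    """Hypothetical decoder: z moves a 2-D state along the direction (1, 0.5)."""
    return [z, 0.5 * z]

z_grid = [i / 10 for i in range(-10, 11)]
reachable = min_state_error([0.0, 0.0], [1.0, 0.5], toy_decoder, z_grid)
unreachable = min_state_error([0.0, 0.0], [1.0, -0.5], toy_decoder, z_grid)
```

In this toy case the first goal lies on the line the decoder can move along, so its error goes to roughly zero, while the second goal lies off that line and leaves a residual error. A large residual of this kind is what a poorly controllable latent space would produce.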

We jointly measure consistency and scalability: to do this, we select 25 states along the task, and apply a fixed grid of latent actions \(z_i\) from \([-1, +1]\) at each state. For every (s, z) pair we record the distance and direction that the end-effector travels (e.g., the direction is \(+1\) if the end-effector moves right). We then find the best-fit line relating z to distance times direction, and report its \(R^2\) value.
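As a concrete illustration of this measure, the sketch below fits an ordinary least-squares line to pairs of z and signed displacement (distance times direction) and returns the coefficient of determination. The function name `r_squared` and the example data are hypothetical.

```python
def r_squared(zs, ys):
    """R^2 of the least-squares line y = slope * z + intercept."""
    n = len(zs)
    mean_z = sum(zs) / n
    mean_y = sum(ys) / n
    s_zz = sum((z - mean_z) ** 2 for z in zs)
    s_zy = sum((z - mean_z) * (y - mean_y) for z, y in zip(zs, ys))
    slope = s_zy / s_zz
    intercept = mean_y - slope * mean_z
    ss_res = sum((y - (slope * z + intercept)) ** 2 for z, y in zip(zs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot

zs = [-1.0, -0.5, 0.0, 0.5, 1.0]

# Signed displacement that scales linearly with z: consistent and scalable.
linear = r_squared(zs, [0.2 * z for z in zs])

# Displacement that ignores the sign of z: no linear relationship.
symmetric = r_squared(zs, [z ** 2 for z in zs])
```

A value near 1 means that larger inputs produce proportionally larger motions in a direction fixed by the sign of z, which is the behavior the consistency and scalability properties ask for.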

Our results are averaged across 10 trained models of the same type, and are listed in the form \(\text{mean} \pm \text{SD}\).

Hypotheses We have the following two hypotheses:

H1. Only latent action models conditioned on the context will accurately reconstruct actions from low-DoF inputs.

H2. Conditioned autoencoders and conditioned variational autoencoders will learn a latent space that is controllable, consistent, and scalable.

Results for the Sine task. (A) Mean-squared error between intended and reconstructed actions normalized by PCA test loss. (B) Effect of the latent action z at three states along the sine wave for the cVAE model. Darker colors correspond to \(z>0\) and lighter colors signify \(z<0\). Above we plot the distance that the end effector moves along the sine wave as a function of z at each state. (C) Rollout of robot behavior when applying a constant latent input \(z=+1\), where both VAE and cVAE start at the same state. (D) End-effector trajectories for multiple rollouts of VAE and cVAE

Sine task This task and our results are shown in Fig. 7. We find that conditioning the decoder on the current context, i.e., \(\phi (z,c)\), greatly improves accuracy when compared to the PCA baseline, i.e., \(\phi (z)\). Here AE and VAE incur \(98.0\pm 0.6\%\) and \(100\pm 0.8\%\) of the PCA loss, while cAE and cVAE obtain \(1.37\pm 1.2\%\) and \(3.74\pm 0.4\%\) of the PCA loss, respectively.

We likewise observe that cAE and cVAE are more controllable than their alternatives. When using the learned latent actions to move between 1000 randomly selected start and end states along the sine wave, cAE and cVAE have average end-effector errors of \(0.05\pm 0.01\) and \(0.10\pm 0.01\), respectively. Models without state conditioning (PCA, AE, and VAE) have average errors of 0.90, \(0.94\pm 0.01\), and \(0.95\pm 0.01\).

When evaluating consistency and scalability, every tested model has a roughly linear relationship between latent actions and robot behavior: PCA has the highest \(R^2 = 0.99\), while cAE and cVAE have the lowest \(R^2 = 0.94 \pm 0.04\) and \(R^2 = 0.95 \pm 0.01\).

Results for the Rotate task. (A) The robot uses two arms to hold a light blue box, and learns to rotate this box around the fixed point shown in teal. Each state corresponds to a different fixed point, and positive z causes counterclockwise rotation. On right we show how z affects the rotation of the box at each state. (B) rollout of the robot’s trajectory when the user applies \(z=+1\) for VAE and cVAE models, where both models start in the same state. Unlike the VAE, the cVAE model coordinates its two arms

Rotate task We summarize the results for this two-arm task in Fig. 8. As in the Sine task, the models conditioned on the current context are more accurate than their non-conditioned counterparts: AE and VAE have \(28.7\pm 4.8\%\) and \(38.0\pm 5.8\%\) of the PCA baseline loss, while cAE and cVAE reduce this to \(0.65\pm 0.05\%\) and \(0.84\pm 0.07\%\). The context-conditioned models are also more controllable: when using the learned z to rotate the box, AE and VAE have \(56.8\pm 9\%\) and \(71.5\pm 8\%\) as much end-effector error as the PCA baseline, whereas cAE and cVAE achieve \(5.4\pm 0.1\%\) and \(5.9\pm 0.1\%\) error.

When testing for consistency and scalability, we measure the relationship between the latent action z and the change in orientation for the end-effectors of both arms (i.e., ignoring their location). Each model exhibits a linear relationship between z and orientation: \(R^2 = 0.995\pm 0.004\) for cAE and \(R^2 = 0.996\pm 0.002\) for cVAE. In other words, there is an approximately linear mapping between z and the orientation of the box that the two arms are holding.

Circle task Next, consider the one-arm task in Fig. 9 where the robot has a 2-DoF latent action space. Here we focus on the learned latent dimensions \(z = [z_1,z_2]\), and examine how these latent dimensions correspond to the underlying task. Recall that the training data consists of state-action pairs which translate the robot’s end-effector along (and between) circles of different radii. Ideally, the learned latent dimensions correspond to these axes, e.g., \(z_1\) controls tangential motion while \(z_2\) controls orthogonal motion. Interestingly, we found that this intuitive mapping is only captured by the state-conditioned models. The average angle between the directions that the end-effector moves for \(z_1\) and \(z_2\) is \(27\pm 20^{\circ }\) and \(34 \pm 15^{\circ }\) for AE and VAE models, but this angle increases to \(72 \pm 9^{\circ }\) and \(74 \pm 12^{\circ }\) for the cAE and cVAE (ideally \(90^{\circ }\)). The state-conditioned models better disentangle their low-dimensional embeddings, supporting our hypotheses and demonstrating how these models produce user-friendly latent spaces.
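The angle reported above can be computed from the two end-effector displacement directions produced by \(z_1\) and \(z_2\). The sketch below is one way to do so. It assumes the angle is taken between the lines of motion, so that flipping the sign of a latent dimension does not change the result and values fall in \([0^{\circ }, 90^{\circ }]\); the function name `angle_between` is hypothetical.

```python
import math

def angle_between(u, v):
    """Angle in degrees between the lines spanned by 2-D vectors u and v.

    Assumption: the measure is sign-invariant, so the absolute value of the
    dot product is used and the result lies in [0, 90].
    """
    dot = abs(u[0] * v[0] + u[1] * v[1])
    norm = math.hypot(u[0], u[1]) * math.hypot(v[0], v[1])
    cosine = min(1.0, dot / norm)  # guard against rounding above 1
    return math.degrees(math.acos(cosine))

# End-effector displacement caused by each latent dimension (toy values).
tangential = (1.0, 0.0)
radial = (0.0, 1.0)
entangled = (1.0, 1.0)

disentangled_angle = angle_between(tangential, radial)
entangled_angle = angle_between(tangential, entangled)
```

In practice the two displacement vectors would come from applying each latent dimension separately at the same state and differencing the resulting end-effector positions.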

Results for the Circle task. (A) mean-squared error between desired and reconstructed actions normalized by the PCA test loss. (B) 2-DoF latent action space \(z = [z_1, z_2]\) for VAE and cVAE models. The current end-effector position is shown in black, and the colored grippers depict how changing \(z_1\) or \(z_2\) affects the robot’s state. Under the cVAE model, these latent dimensions move the end-effector tangent or orthogonal to the circle

Results for the Reach task. In both plots, we show the end-effector trajectory when applying constant inputs \(z \in [-1,+1]\). The lightest color corresponds to \(z=-1\) and the darkest color is \(z=+1\). The goal region is highlighted, and the initial end-effector position is black. (a) trajectories with the VAE model. (b) trajectories with the cVAE model. The latent action z controls which part of the goal region the trajectory moves towards

Reach task In the final task, a one-arm robot trains on trajectories that move towards a goal region (see Fig. 10). The robot learns a 1-DoF latent space, where z controls the direction that the trajectory moves (i.e., to the left or right of the goal region). We focus on controllability: can robots utilize latent actions to reach their desired goal? In order to test controllability, we sample 100 goals randomly from the goal region, and compare robots that attempt to reach these goals with either VAE or cVAE latent spaces. The cVAE robot more accurately reaches its goal: the \(L_2\) distance between the goal and the robot’s final end-effector position is \(0.57\pm 0.38\) under VAE and \(0.48\pm 0.5\) with cVAE. Importantly, using conditioning also improves the movement quality. The average start-to-goal trajectory length is \(5.1\pm 2.8\) units when using the VAE, and this length drops to \(3.1\pm 0.5\) with the cVAE model.

Summary The results of our Sine, Rotate, Circle, and Reach tasks support hypotheses H1 and H2. Latent action models that are conditioned on the context more accurately reconstruct high-DoF actions from low-DoF embeddings (H1). Moreover, conditioned autoencoders and conditioned variational autoencoders learn latent action spaces which capture our desired properties: controllability, consistency, and scalability (H2).

8.2 Do latent actions with shared autonomy help users reach and change goals?

Now that we have tested our method for learning latent actions, the next step is to combine these latent actions with shared autonomy (see Sect. 7). Here we explore how this approach works with a spectrum of different simulated users. We simulate human teleoperators with various levels of expertise and adaptability, and measure whether these users can interact with our algorithm to reach and change high-level goals.

Incorporating shared autonomy In the previous simulations we used latent actions by themselves to control the robot. Now we compare this approach with and without shared autonomy:

- Latent actions with no assistance (LA)
- Latent actions with shared autonomy (LA+SA)
- Latent actions trained to maximize entropy with shared autonomy (LA+SA+Entropy)

For both LA and LA+SA we learn the latent space with a conditioned autoencoder (i.e., cAE in the previous section). However, here the context includes both state and belief. In other words, \(c = (s,b)\). We also test LA + SA + Entropy, where the model uses Equation (16) to reward entropy in the learned latent space.

Environments We implement these models on both a simulated and a real robot. The simulated robot is a 5-DoF planar arm, and the real robot is a 7-DoF Franka Emika. For both robots, the state s captures the current joint position, and the action a is a change in joint position, so that: \(s^{t+1} = s^t + a^t \cdot dt\).

Task We consider a manipulation task where there are two coffee cups in front of a robot arm (see Fig. 12). The human may want to reach and grasp either cup (i.e., these cups are the potential goals). We embed the robot’s high-DoF actions into a 1-DoF input space: the simulated users had to convey both their goal and preference only by pressing left and right on the joystick.

Simulated humans The users attempting to complete this task are approximately optimal, and make decisions that guide the robot towards their goal \(g^*\). Remember that x is the position of the robot’s end-effector and \(\varPsi \) is the forward kinematics. The humans have reward function \(R = -\Vert g^* - x\Vert ^2\), and choose latent actions z to move the robot towards \(g^*\):

Here \(\beta \ge 0\) is a temperature constant that affects the user’s rationality. When \(\beta \rightarrow 0\), the human selects increasingly random z, and when \(\beta \rightarrow \infty \), the human always chooses the z that moves towards \(g^*\). We simulate different types of users by varying \(\beta (t)\).
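A noisily rational user of this kind can be sketched as a Boltzmann (softmax) choice over a discrete grid of latent actions, with \(p(z) \propto \exp (\beta \cdot R)\) and R evaluated at the end-effector position that each z would produce. This form is an assumption consistent with the description of \(\beta \) above, and the exact expression used in the experiments may differ. The names `boltzmann_probs`, `simulated_human`, and `next_position` are hypothetical. Here `next_position` stands in for applying the decoder, the transition model, and the forward kinematics \(\varPsi \).

```python
import math
import random

def boltzmann_probs(rewards, beta):
    """Softmax over rewards with temperature constant beta >= 0."""
    best = max(rewards)  # subtract the max for numerical stability
    weights = [math.exp(beta * (r - best)) for r in rewards]
    total = sum(weights)
    return [w / total for w in weights]

def simulated_human(z_grid, next_position, goal, beta, rng=random):
    """Sample a latent action z; returns (z, choice probabilities)."""
    rewards = []
    for z in z_grid:
        x = next_position(z)  # end-effector position reached with input z
        rewards.append(-sum((g - p) ** 2 for g, p in zip(goal, x)))
    probs = boltzmann_probs(rewards, beta)
    z = rng.choices(z_grid, weights=probs, k=1)[0]
    return z, probs

# Toy 1-DoF example: z shifts the end-effector along the x-axis.
z_grid = [-1.0, 0.0, 1.0]
goal = (1.0, 0.0)
toy_next_position = lambda z: (z, 0.0)

_, random_user = simulated_human(z_grid, toy_next_position, goal, beta=0.0)
expert_z, expert_user = simulated_human(
    z_grid, toy_next_position, goal, beta=1000.0, rng=random.Random(0)
)
```

With \(\beta = 0\) every z is equally likely, and as \(\beta \) grows the probability mass concentrates on the z that moves closest to \(g^*\), matching the two limits described above.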

Users with fixed expertise We first simulate humans that have fixed levels of expertise. Here expertise is captured by \(\beta \) from Eq. (24): users with high \(\beta \) are proficient, and rarely make mistakes with noisy inputs. We anticipate that all algorithms will perform similarly when humans are always perfect or completely random—but we are particularly interested in the spectrum of users between these extremes, who frequently mis-control the robot.

Our results relating \(\beta \) to performance are shown in Fig. 11. In accordance with our convergence result from Sect. 7, we find that introducing shared autonomy helps humans reach their desired grasp more quickly, and with less final state error. The performance difference between LA and LA+SA decreases as the human’s expertise increases—looking specifically at the real robot simulations, LA takes \(45\%\) more time to complete the task than LA+SA at \(\beta = 75\), but only \(30\%\) more time when \(\beta = 1000\). We conclude that shared autonomy improves performance across all levels of expertise, both when latent actions are trained with and without entropy.

Simulated humans for different levels of rationality. As \({\beta \rightarrow \infty }\), the human’s choices approach optimal inputs. Final State Error (in all plots) is normalized by the distance between goals. Introducing shared autonomy (SA) improves the convergence of latent actions (LA), particularly when the human teleoperator is noisy and imperfect

Simulated humans that change their intended goal part-way through the task. Change is the timestep where this change occurs, and Confidence refers to the robot’s belief in the human’s true goal. Because of the constraints imposed by shared autonomy, users need latent actions that can overcome misguided assistance and move towards a less likely (but correct) goal. Encouraging entropy in the learned latent space (LA + SA + Entropy) enables users to switch goals

Users that change their mind One downside of shared autonomy is over-assistance: the robot may become constrained at likely (but incorrect) goals. To examine this adverse scenario we simulate humans that change which coffee cup they want to grasp after N timesteps. These simulated users intentionally move towards the wrong cup while \(t \le N\), and then try to reach the correct cup for the rest of the task. We model humans as near-optimal immediately after changing their mind about the goal.

We visualize our results in Fig. 12. When the latent action space is trained only to minimize reconstruction loss (LA + SA), users cannot escape the shared autonomy constraint around the wrong goal as N increases. Intuitively, this occurs because the latent space controls the intended goal when the belief b is roughly uniform, and then switches to controlling the preferred trajectory once the robot is confident. So if users change their goal after first convincing the robot, the latent space no longer contains actions that move towards this correct goal! We find that our proposed entropy loss function addresses this shortcoming: LA + SA + Entropy users are able to input actions z that alter the robot’s goal. Our results suggest that encouraging entropy at training time improves the robustness of the latent space.

Simulated humans that learn how to teleoperate the robot. The human’s rationality \(\beta (t)\) is linear in time, and either increases with a high slope (Fast Learner) or low slope (Slow Learner). As the human learns, they get better at choosing inputs that best guide the robot towards their true goal. We find that latent actions learned with the entropy reward (LA+SA+Entropy) are more versatile, so that the human can quickly undo mistakes made while learning