Abstract

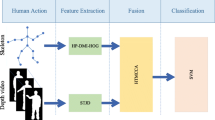

Currently, human action recognition has witnessed remarkable progress, and its achievements have been applied to daily life. However, most methods extract features from only a single view within each modality, which may not comprehensively capture the diversity and complexity of actions. Moreover, the ineffective removal of redundant information can result in an inconspicuous description of key information. These issues cloud affect the final action recognition accuracy. To address these issues, this paper proposes a novel method for single-subject routine action recognition, which combines multi-view key information representation and multi-modal fusion. Firstly, the energy of non-primary motion areas is reduced by motion mean normalization in the depth video sequence, thereby enhancing key information of action. Then, depth motion history map (DMHM) and depth spatio-temporal energy map (DSTEM) are extracted from planes and axes, respectively. The proposed DMHM effectively preserves the spatio-temporal information of actions, DSTEM preserves the motion contour and energy information. In terms of skeleton sequences, statistical features and motion contribution degree of each joint are extracted from the view of motion distribution and weights, respectively. Finally, depth and skeleton features are fused to achieve multi-modal fusion-based action recognition. The proposed method highlights the information of the main motion areas, and achieves recognition accuracies of 96.70\(\%\) on MSR-Action3D, 93.26\(\%\) on UTD-MHAD, and above 97.73\(\%\) on all tests of CZU-MHAD. The experimental results demonstrate that the proposed method effectively preserves action information and has better recognition accuracy than most existing methods.

Similar content being viewed by others

References

Sun Z, Ke Q, Rahmani H, Bennamoun M, Wang G, Liu J (2023) Human action recognition from various data modalities: a review. IEEE Trans Pattern Anal Mach Intell 45(3):3200–3225

Li T, Wang H, Fan D, Wang D, Yin L, Lan Q (2023) Research on virtual skiing system based on harmonious human-computer interaction. In: Proceedings of 2022 international conference on virtual reality, human-computer interaction and artificial intelligence (VRHCIAI), Changsha, China

Ludl D, Gulde T, Curio C (2020) Enhancing data-driven algorithms for human pose estimation and action recognition through simulation. IEEE Trans Intell Transp Syst 21(9):3990–3999

Ma W, Xiong H, Dai X, Zheng X, Zhou Y (2018) An indoor scene recognition-based 3D registration mechanism for real-time AR-GIS visualization in mobile applications. ISPRS Int J Geo Inf 7(3):112

Cong R, Lei J, Fu H, Hou J, Huang Q, Kwong S (2020) Going from RGB to RGBD saliency: a depth-guided transformation model. IEEE Transactions on Cybernetics 50(8):3627–3639

Bobick AF, Davis JW (2001) The recognition of human movement using temporal templates. IEEE Trans Pattern Anal Mach Intell 23(3):257–267

Yang X, Zhang C, Tian Y (2012) Recognizing actions using depth motion maps-based histograms of oriented gradients. In: Proceedings of 20th ACM international Conference multimedia (MM), New York, NY, USA

Cheng K, Zhang Y, He X, Chen W, Cheng J, Lu H (2020) Skeleton-based action recognition with shift graph convolutional network. In: Proceedings of IEEE/CVF conference on computer vision and pattern recognition (CVPR), Seattle, WA, USA

Li C, Xie C, Zhang B, Han J, Zhen X, Chen J (2022) Memory attention networks for skeleton-based action recognition. IEEE Transactions on Neural Networks and Learning Systems 33(9):4800–4814

Hardoon DR, Szedmak S, Shawe-Taylor J (2004) Canonical correlation analysis: An overview with application to learning methods. Neural Comput 16(12):2639–2664

Haghighat M, Abdel-Mottaleb M, Alhalabi W (2016) Discriminant correlation analysis: Real-time feature level fusion for multimodal biometric recognition. IEEE Trans Inf Forensics Secur 11(9):1984–1996

Wang K, He R, Wang L, Wang W, Tan T (2016) Joint feature selection and subspace learning for cross-modal retrieval. IEEE Trans Pattern Anal Mach Intell 38(10):2010–2023

Li C, Huang Q, Li X, Wu Q (2021) Human action recognition based on multi-scale feature maps from depth video sequences. Multimedia Tools and Applications 80:32111–32130

Li X, Hou Z, Liang J, Chen C (2020) Human action recognition based on 3D body mask and depth spatial-temporal maps. Multimedia Tools Application 79:35761–35778

Liu X, Li Y, Wang Q (2018) Multi-view hierarchical bidirectional recurrent neural network for depth video sequence based action recognition. Int J Pattern Recognit Artif Intell 32(10):1850033

Tasnim N, Baek JH (2022) Deep learning-based human action recognition with key-frames sampling using ranking methods. Appl Sci 12(9):4165

Sánchez-Caballero A, Fuentes-Jiménez D, Losada-Gutiérrez C (2023) Real-time human action recognition using raw depth video-based recurrent neural networks. Multimed Tool Appl 82:16213–16235

Ding C, Liu K, Cheng F, Belyaev E (2021) Spatio-temporal attention on manifold space for 3D human action recognition. Appl Intell 51:560–570

Zhang C, Liang J, Li X, Xia Y, Di L, Hou Z, Huan Z (2022) Human action recognition based on enhanced data guidance and key node spatial temporal graph convolution. Multimed Tool Appl 81:8349–8366

Si C, Chen W, Wang W, Wang L, Tan T (2019) An attention enhanced graph convolutional lstm network for skeleton-based action recognition. In: Proceedings of IEEE/CVF conference on computer vision and pattern recognition (CVPR), Los Angeles, California, USA

Liu J, Wang G, Duan L, Abdiyeva K, Kot AC (2018) Skeleton-based human action recognition with global context-aware attention lstm networks. IEEE Trans Image Process 27(4):1586–1599

Plizzari C, Cannici M, Matteucci M (2021) Spatial temporal transformer network for skeleton-based action recognition. In: Proceedings of international conference on pattern recognition, Milan, Italy

Hou Y, Li Z, Wang P, Li W (2018) Skeleton optical spectra based action recognition using convolutional neural networks. IEEE Trans Circuits Syst Video Technol 28(3):807–811

Chao X, Hou Z, Liang J, Yang T (2020) Integrally cooperative spatio-temporal feature representation of motion joints for action recognition. Sensors 20(18):1–22

Guo D, Xu W, Qian Y, Ding W (2023) M-FCCL: memory-based concept-cognitive learning for dynamic fuzzy data classification and knowledge fusion. Inform Fusion 100:101962

Xu W, Guo D, Qian Y, Ding W (2023) Two-way concept-cognitive learning method: a fuzzy-based progressive learning. IEEE Trans Fuzzy Syst 31(6):1885–1899

Guo D, Xu W, Qian Y, Ding W (2023) Fuzzy-granular concept-cognitive learning via three-way decision: performance evaluation on dynamic knowledge discovery. IEEE Trans Fuzzy Syst, Early Access

Guo D, Xu W (2023) Fuzzy-based concept-cognitive learning: an investigation of novel approach to tumor diagnosis analysis. Inform Fusion 639:118998

Wu Z, Wan S, Yan L, Yue L (2018) Autoencoder-based feature learning from a 2D depth map and 3D skeleton for action recognition. J Comput 29(4):82–95

Zhang E, Xue B, Cao F, Duan J, Lin G, Lei Y (2019) Fusion of 2D CNN and 3D densenet for dynamic gesture recognition. Electronics 8(12):1–15

Dawar N, Kehtarnavaz N (2018) Real-time continuous detection and recognition of subject-specific smart tv gestures via fusion of depth and inertial sensing. IEEE Access 6:7019–7028

Liu Z, Pan X, Li Y, Chen Z (2020) A game theory based CTU-level bit allocation scheme for HEVC region of interest coding. IEEE Trans Image Process 30:794–805

He R, Tan T, Wang L, Zheng W (2012) \(l_{21}\) regularized correntropy for robust feature selection. In: Proceedings of 2012 IEEE conference on computer vision and pattern recognition, Providence, RI, USA

Li W, Zhang Z, Liu Z (2010) Action recognition based on a bag of 3D points. In: Proceedings of 2010 IEEE computer society conference on computer vision and pattern recognition-workshops, San Francisco, CA, USA

Chen C, Jafari R, Kehtarnavaz N (2015) UTD-MHAD: a multimodal dataset for human action recognition utilizing a depth camera and a wearable inertial sensor. In: Proceedings of IEEE international conference on image processing (ICIP), Quebec City, QC, Canada

Chao X, Hou Z, Mo Y (2022) CZU-MHAD: a multimodal dataset for human action recognition utilizing a depth camera and 10 wearable inertial sensors. IEEE Sens J 22(7):7034–7042

Chen C, Jafari R, Kehtarnavaz N (2015) Action recognition from depth sequences using depth motion maps-based local binary patterns. In: Proceedings of 2015 IEEE winter conference on applications of computer vision, waikoloa, HI, USA

Min Y, Zhang Y, Chai X, Chen X (2020) An efficient PointLSTM for point clouds based gesture recognition. In: Proceedings of IEEE/CVF conference on computer vision and pattern recognition (CVPR), Seattle, WA, USA

Li X, Huang Q, Wang Z (2023) Spatial and temporal information fusion for human action recognition via Center Boundary Balancing Multimodal Classifier. J Vis Commun Image Represent 90:103716

Tasnim N, Islam MM, Baek JH (2020) Deep learning-based action recognition using 3D skeleton joints information. Inventions 5(3):49

Liu M, Yuan J (2018) Recognizing human actions as the evolution of pose estimation maps. In: Proceedings of the IEEE conference on computer vision and pattern recognition, Salt Lake City, Utah, USA

Memmesheimer R, Theisen N, Paulus D (2020) Gimme signals: discriminative signal encoding for multimodal activity recognition. In: Proceedings of IEEE/RSJ international conference on intelligent robots and systems, Las Vegas, NV, USA

Zhao R, Xu W, Su H, Ji Q (2019) Bayesian hierarchical dynamic model for human action recognition. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, Long Beach, California, USA

Wang P, Li W, Li C, Hou Y (2018) Action recognition based on joint trajectory maps with convolutional neural networks. Knowl-Based Syst 158:43–53

Shi H, Hou Z, Liang J, Lin E, Zhong Z (2023) DSFNet: a distributed sensors fusion network for action recognition. IEEE Sens J 23(1):839–848

Chao X, Hou Z, Mo Y, Shi H, Yao W (2023) Structural feature representation and fusion of human spatial cooperative motion for action recognition. Multimedia Syst 29:1301–1314

Acknowledgements

This work was supported by the National Science Foundation of China under Grant No.41971343, and Jiangsu Graduate Student Research Innovation Program under Grant No.KYCX23_1693.

Author information

Authors and Affiliations

Contributions

Conceptualization, Xin Chao, Genlin Ji, and Xiaosha Qi. Methodology, Xin Chao, Genlin Ji. Validation, Xin Chao. Writing original draft preparation, Xin Chao. Funding acquisition, Xin Chao and Genlin Ji. All authors have read and agreed to the version of this paper.

Corresponding author

Ethics declarations

Competing Interests

The authors declare that they have no conflict of interest to this work.

Ethical and informed consent for data used

The data used in this paper are all published public datasets.

Data availability and access

This paper has no associated data.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chao, X., Ji, G. & Qi, X. Multi-view key information representation and multi-modal fusion for single-subject routine action recognition. Appl Intell 54, 3222–3244 (2024). https://doi.org/10.1007/s10489-024-05319-y

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-024-05319-y