Abstract

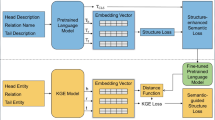

Knowledge graph embedding converts the symbolic representations of knowledge graphs into low-dimensional vectors, and effective embedding methods are key to the performance of downstream tasks. Several studies have reported significant performance differences among knowledge graph embedding models across datasets and attribute them to the insufficient representation ability of the models. However, it is still unknown which representation abilities these models actually possess. This paper therefore first analyzes three representative models: the translation and rotation models among distance-based models, and the BERT model among neural network models. The analysis shows that the translation model focuses on clustering features, the rotation model on hierarchy features, and the BERT model on word co-occurrence features. We categorize clustering and hierarchy as structure features and word co-occurrence as semantic features. A model that focuses on only one of these features lacks accuracy and generality, which makes it hard to apply to modern large-scale knowledge graphs. This paper therefore proposes an ensemble model that combines structure and semantic features for knowledge graph embedding. The ensemble model has a structure part and a semantic part. The structure part consists of three models: translation, rotation, and cross. The translation and rotation models perform basic feature extraction, while the cross model strengthens the interaction between them. The semantic part is built on BERT and is integrated with the structure part after fine-tuning. In addition, a frequency model is introduced to mitigate the training imbalance caused by differences in entity frequency. Finally, we verify the effectiveness of the model through link prediction.
Experiments show that the ensemble model improves results on FB15k-237 and YAGO3-10 and also performs well on WN18RR, confirming its effectiveness.
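The contrast between the translation and rotation models can be made concrete with their standard scoring functions. The Python sketch below is an illustrative assumption, not the paper's implementation. It uses the TransE score -||h + r - t|| (Bordes et al., 2013) and the RotatE score -sum_i |h_i * r_i - t_i| with unit-modulus complex relations r_i = exp(i * theta_i) (Sun et al., 2019, margin term omitted). It combines the two with a hypothetical fixed-weight sum; the paper's cross model, BERT part, and frequency model are not reproduced. It also computes MRR and Hits@k, the usual link prediction metrics, from raw (unfiltered) ranks. All function names, weights, and toy embeddings are hypothetical.

```python
import cmath


def transe_score(h, r, t, p=1):
    """TransE: the relation is a translation; score = -||h + r - t||_p (higher is better)."""
    dist = sum(abs(hi + ri - ti) ** p for hi, ri, ti in zip(h, r, t)) ** (1.0 / p)
    return -dist


def rotate_score(h, phases, t):
    """RotatE: r_i = exp(i * theta_i) rotates h_i in the complex plane.

    Score = -sum_i |h_i * r_i - t_i| (moduli summed over dimensions, margin omitted).
    """
    return -sum(abs(hi * cmath.exp(1j * th) - ti) for hi, th, ti in zip(h, phases, t))


def weighted_ensemble(score_lists, weights):
    """Hypothetical ensemble: per-candidate weighted sum of model scores.

    score_lists[m][c] is model m's score for candidate c.
    """
    n = len(score_lists[0])
    return [sum(w * s[c] for w, s in zip(weights, score_lists)) for c in range(n)]


def rank_of(scores, true_idx):
    """1-based rank of the true candidate (higher score ranks first; ties favour the true one)."""
    return 1 + sum(1 for s in scores if s > scores[true_idx])


def mrr_hits(ranks, k=10):
    """Mean reciprocal rank and Hits@k over a list of ranks."""
    mrr = sum(1.0 / r for r in ranks) / len(ranks)
    hits = sum(1 for r in ranks if r <= k) / len(ranks)
    return mrr, hits


# Toy query (h, r, ?) with three candidate tails; candidate 0 is the true tail.
# Each model has its own embedding table: real vectors for TransE, complex for RotatE.
h_real, r_real = [0.0, 1.0], [1.0, 0.0]
tails_real = [[1.0, 1.0], [0.0, 0.0], [2.0, 1.0]]
h_cplx, phases = [1 + 0j], [cmath.pi / 2]  # rotate by 90 degrees
tails_cplx = [[1j], [1 + 0j], [-1 + 0j]]

trans = [transe_score(h_real, r_real, t) for t in tails_real]
rot = [rotate_score(h_cplx, phases, t) for t in tails_cplx]
combined = weighted_ensemble([trans, rot], weights=[0.5, 0.5])
r = rank_of(combined, true_idx=0)
print("TransE:", trans, "RotatE:", rot, "rank:", r)
print("MRR, Hits@3 over ranks [1, 2, 5]:", mrr_hits([1, 2, 5], k=3))
```

The fixed 0.5/0.5 split is only a placeholder. Published link prediction results are normally reported in the filtered setting, which removes other known true triples from the candidate list before ranking.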

Availability of data and materials

The authors declare that the data and materials are reliable.

Funding

This work was supported in part by the National Natural Science Foundation of China (NSFC) under grants 92267205 and U1911401, and in part by the Science and Technology Innovation Program of Hunan Province, China (2021RC4054).

Ethics declarations

Conflict of Interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, Y., Peng, Y. & Guo, J. Enhancing knowledge graph embedding with structure and semantic features. Appl Intell 54, 2900–2914 (2024). https://doi.org/10.1007/s10489-024-05315-2
