Abstract

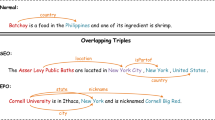

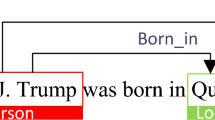

Joint relational triple extraction treats entity recognition and relation extraction as a single task for extracting relational triples, and it is a critical task in information extraction and knowledge graph construction. However, most existing joint models still fall short when extracting overlapping triples. Moreover, these models ignore the trigger words of potential relations during relation detection. To address these two issues, a joint model based on Potential Relation Detection and Conditional Entity Mapping, named PRDCEM, is proposed. Specifically, the proposed model consists of three components, i.e., potential relation detection, candidate entity tagging, and conditional entity mapping, corresponding to three subtasks. First, a non-autoregressive decoder that contains a cross-attention mechanism is applied to detect potential relations. In this way, different potential relations are associated with the corresponding trigger words in the given sentence, and the semantic representations of the trigger words are fully utilized to encode potential relations. Second, two distinct sequence taggers are employed to extract candidate subjects and objects. Third, an entity mapping module incorporating conditional layer normalization is designed to align the candidate subjects and objects. In this module, each candidate subject is combined with each potential relation to form a condition that is incorporated into the sentence, which allows overlapping triples to be extracted effectively. Finally, a negative sampling strategy is employed in the entity mapping module to mitigate error propagation from the previous two components. In a comparison with 15 baselines, the experimental results obtained on two widely used public datasets demonstrate that PRDCEM can effectively extract overlapping triples and achieve improved performance.
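To make the idea of conditional layer normalization more concrete, the following is a minimal pure-Python sketch. It assumes the commonly used formulation in which the gain and bias of layer normalization are shifted by linear projections of a condition vector. The function names, the parameters `w_gamma` and `w_beta`, and the way the condition vector is built are illustrative assumptions, not details taken from PRDCEM itself.

```python
import math

def layer_norm(x, eps=1e-12):
    """Normalize one token vector to zero mean and unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def matvec(w, c):
    """Multiply a (d x k) matrix, given as a list of rows, by a length-k vector."""
    return [sum(w_ij * c_j for w_ij, c_j in zip(row, c)) for row in w]

def conditional_layer_norm(tokens, cond, gamma, beta, w_gamma, w_beta):
    """Layer normalization whose gain and bias depend on a condition vector.

    tokens:  list of token vectors, each of length d
    cond:    condition vector of length k (assumed here to summarize one
             candidate subject together with one potential relation)
    gamma, beta:     base gain and bias, each of length d
    w_gamma, w_beta: hypothetical (d x k) projections of the condition
    """
    delta_gamma = matvec(w_gamma, cond)  # condition-dependent shift of the gain
    delta_beta = matvec(w_beta, cond)    # condition-dependent shift of the bias
    out = []
    for x in tokens:
        normed = layer_norm(x)
        out.append([
            (g + dg) * n + (b + db)
            for n, g, dg, b, db in zip(normed, gamma, delta_gamma, beta, delta_beta)
        ])
    return out
```

With both projections set to zero, the function reduces to ordinary layer normalization, so the condition only changes the output through the two learned shifts. Calling it once per (subject, relation) condition yields a separate conditioned view of the same sentence, which is one plausible way to let several triples share tokens.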

Data availability

The datasets used in the current study are available in the GitHub repository at https://github.com/hy-struggle/PRGC/tree/main/data.

Notes

For example, BertTokenizer segments “unwanted” into [“un”, “##want”, “##ed”]. Thus, n words are split into l tokens, where \(l \ge n\).
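The effect described in this note can be reproduced with a small sketch of greedy longest-match sub-word splitting, the strategy used by WordPiece-style tokenizers. The vocabulary `TOY_VOCAB` and the helper names are hypothetical and chosen only so that the example from the note comes out; a real BERT vocabulary is far larger and may split other words differently.

```python
# Hypothetical toy vocabulary; "##" marks a piece that continues a word.
TOY_VOCAB = {"un", "##want", "##ed", "the", "gift"}

def wordpiece(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first split of one word into sub-word tokens."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            cand = word[start:end]
            if start > 0:
                cand = "##" + cand
            if cand in vocab:
                piece = cand
                break
            end -= 1
        if piece is None:
            return [unk]  # no piece matched, so the whole word is unknown
        pieces.append(piece)
        start = end
    return pieces

def tokenize(sentence, vocab=TOY_VOCAB):
    """Return the whitespace-separated words and their sub-word tokens."""
    words = sentence.lower().split()
    tokens = [p for w in words for p in wordpiece(w, vocab)]
    return words, tokens

words, tokens = tokenize("the unwanted gift")
print(len(words), len(tokens), tokens)
```

Every word produces at least one token, which is why the token count l can never be smaller than the word count n.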

https://github.com/hy-struggle/PRGC

Acknowledgements

This work is supported in part by the National Key Research and Development Program of China (No.2020YFC2003502), the National Natural Science Foundation of China (No.62276038, No.62221005), the Foundation for Innovative Research Groups of Natural Science Foundation of Chongqing (No.cstc2019jcyjcxttX0002), and the Key Cooperation Project of Chongqing Municipal Education Commission (HZ2021008).

Author information

Contributions

All authors contributed to the study’s conception and design. Xiong Zhou contributed to the conception of the study and performed the experiments. Qinghua Zhang and Man Gao contributed to the manuscript preparation. Xiong Zhou and Man Gao performed the experiment analysis and wrote the manuscript. Qinghua Zhang and Guoyin Wang helped perform the analysis with constructive discussions.

Ethics declarations

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: List of abbreviations

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhou, X., Zhang, Q., Gao, M. et al. Joint relational triple extraction based on potential relation detection and conditional entity mapping. Appl Intell 53, 29656–29676 (2023). https://doi.org/10.1007/s10489-023-05111-4

DOI: https://doi.org/10.1007/s10489-023-05111-4