Abstract

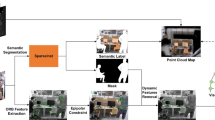

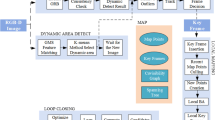

Simultaneous Localization and Mapping (SLAM) is an active research topic in robotics and a prerequisite for autonomous robot navigation. Traditional SLAM assumes a static scene, so its localization accuracy and stability decline when dynamic objects interfere. To address this problem, this paper proposes DO-SLAM, a semantic SLAM system for dynamic environments based on object detection. First, DO-SLAM uses YOLOv5 to identify objects in dynamic environments and obtain semantic information. Then, combined with the outlier detection mechanism proposed in this paper, it determines which objects are dynamic and eliminates the feature points on them. This combination reduces the interference of dynamic objects with SLAM and improves the stability and localization accuracy of the system. In addition, a static dense point cloud map is constructed for high-level tasks. Finally, the effectiveness of DO-SLAM is verified on the TUM RGB-D dataset. The results show that the Absolute Trajectory Error (ATE) and Relative Pose Error (RPE) are reduced, indicating that DO-SLAM can reduce the interference of dynamic objects.
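The feature-elimination step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes that the detector returns axis-aligned bounding boxes and that a separate step (in the paper, the proposed outlier detection mechanism, which is not specified here) has already flagged each box as dynamic or static. The names `point_in_box` and `filter_static_keypoints` are hypothetical and chosen for illustration only.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]              # (x, y) pixel coordinates of a feature point
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) of a detection


def point_in_box(point: Point, box: Box) -> bool:
    """Return True if the point lies inside the box (borders included)."""
    x, y = point
    x_min, y_min, x_max, y_max = box
    return x_min <= x <= x_max and y_min <= y <= y_max


def filter_static_keypoints(
    keypoints: Sequence[Point],
    boxes: Sequence[Box],
    is_dynamic: Sequence[bool],
) -> List[Point]:
    """Keep only the keypoints that fall outside every box flagged as dynamic.

    Assumption: `is_dynamic[i]` is supplied by some earlier decision step;
    this sketch does not decide which detections are dynamic.
    """
    dynamic_boxes = [box for box, flag in zip(boxes, is_dynamic) if flag]
    return [
        point
        for point in keypoints
        if not any(point_in_box(point, box) for box in dynamic_boxes)
    ]


# Illustrative values only: two detections, the first one flagged as dynamic.
keypoints = [(10.0, 10.0), (50.0, 60.0), (200.0, 120.0), (55.0, 65.0)]
boxes = [(40.0, 40.0, 100.0, 100.0), (180.0, 100.0, 260.0, 200.0)]
is_dynamic = [True, False]

static_keypoints = filter_static_keypoints(keypoints, boxes, is_dynamic)
print(static_keypoints)
```

In this sketch, points inside a box flagged as static are kept, so only features on objects judged to be moving are removed before pose estimation.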

Availability of data and materials

The data that support the findings of this study are openly available from the TUM RGB-D dataset at https://vision.in.tum.de/data/datasets/rgbd-dataset/download.

Code Availability

Not applicable

Funding

This work was supported by the National Key Research and Development Program (Grant No. 2022YFD2001704).

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Yunhong Duan and Jincun Liu. The first draft of the manuscript was written by Yaoguang Wei and Bingqian Zhou. Dong An participated in the revision of the paper and provided many pertinent suggestions.

Ethics declarations

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

Ethics approval

Not applicable

Consent to participate

All authors contributed to the manuscript and consented to participate.

Consent for publication

All authors read and approved the final manuscript and consented to its publication.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wei, Y., Zhou, B., Duan, Y. et al. DO-SLAM: research and application of semantic SLAM system towards dynamic environments based on object detection. Appl Intell 53, 30009–30026 (2023). https://doi.org/10.1007/s10489-023-05070-w

DOI: https://doi.org/10.1007/s10489-023-05070-w