Abstract

Determining the source of a weak sound signal can be difficult, particularly in busy or noisy surroundings. Traditional methods for source separation and localization rely on hand-engineered features and algorithms that are not robust to changes in the signal or the noise. In environmental sound classification (ESC), the quality of the representative features extracted from environmental sounds is crucial to classification performance. However, semantically meaningless frames and silent frames frequently degrade ESC performance. To address this problem, we propose a new context-aware, attention-based neural network for weak environmental sound source identification. Our method rests on the idea that an attention mechanism can concentrate on the important elements of the input signal and suppress irrelevant ones, enabling the network to identify the weak sound's source more effectively. To overcome the limited capacity of the attention maps in the attention model, we use context information as an additional input. Identifying weak signals and finding the corresponding context information is itself challenging because of degradation and noise in the signal; to address this, we propose a novel algorithm for context feature generation based on mel-frequency cepstral coefficients (MFCCs). Additionally, we improve the robustness and generalizability of the classification model by combining multiple feature extraction techniques, which reduces reliance on any single technique. We test our methodology in experiments on datasets of simulated weak sound signals with varying amounts of noise and clutter, and we compare the performance of our attention-based neural network with a number of established techniques. Our findings demonstrate that the proposed model outperforms the baselines in source identification accuracy, especially in noisy and congested environments.
Overall, our work demonstrates the effectiveness of an attention-based neural network that uses context information for identifying weak sound sources, and suggests that this strategy is a promising direction for further research in this area.
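The abstract does not give the network's equations, so the following is only a minimal NumPy sketch of the general idea under stated assumptions: frame-level features are scored by additive (Bahdanau-style) attention conditioned on a context vector, and, as a hypothetical stand-in for the paper's MFCC-based context algorithm, the context vector is taken to be the mean MFCC over frames whose energy exceeds a fraction of the clip's peak energy. The function names `energy_gated_context` and `context_attention_pool`, the threshold `rel_threshold`, and the parameters `W`, `U`, `v` are all illustrative; in a trained model `W`, `U`, and `v` would be learned, whereas here they are random.

```python
import numpy as np


def energy_gated_context(mfcc, frame_energy, rel_threshold=0.1):
    """Hypothetical context vector: mean MFCC over non-silent frames.

    mfcc:         (T, D) framewise MFCC matrix
    frame_energy: (T,) non-negative per-frame energy
    Frames with energy below rel_threshold * max(frame_energy) count as silent.
    The loudest frame always passes, so the mean is never taken over an empty set.
    """
    active = frame_energy >= rel_threshold * frame_energy.max()
    return mfcc[active].mean(axis=0)


def context_attention_pool(h, c, W, U, v):
    """Additive attention over frames, conditioned on a context vector.

    h: (T, D) frame features    c: (C,) context vector
    W: (A, D), U: (A, C), v: (A,) attention parameters (learned in practice)
    Returns the attention-weighted summary (D,) and the frame weights (T,).
    """
    scores = np.tanh(h @ W.T + U @ c) @ v   # (T,): one score per frame
    scores = scores - scores.max()          # stabilise the softmax
    alpha = np.exp(scores)
    alpha /= alpha.sum()                    # weights are >= 0 and sum to 1
    return alpha @ h, alpha


# Usage with synthetic data (no real audio involved).
rng = np.random.default_rng(0)
T, D, A = 50, 13, 8
mfcc = rng.normal(size=(T, D))                        # stand-in for real MFCCs
energy = np.concatenate([np.full(20, 1e-4),           # 20 near-silent frames
                         rng.uniform(0.5, 1.0, 30)])  # 30 active frames
c = energy_gated_context(mfcc, energy)                # uses frames 20..49 only
W = rng.normal(size=(A, D))
U = rng.normal(size=(A, D))                           # context size C equals D here
v = rng.normal(size=A)
pooled, alpha = context_attention_pool(mfcc, c, W, U, v)
print(pooled.shape, round(float(alpha.sum()), 6))
```

Because the context vector here is computed only from the louder frames, it summarises the clip without the silent frames that the abstract identifies as harmful. Whether the attention weights then down-weight those frames depends entirely on the learned parameters, which this sketch does not train.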

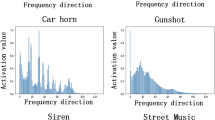

Graphical abstract

Availability of data and materials

1. UrbanSound8K: https://urbansounddataset.weebly.com/urbansound8k.html

Funding

The authors did not receive support from any organization for the submitted work.

Ethics declarations

Conflicts of interest

The authors declare that they have no conflicts of interest.

Additional information

Anuj Mohamed contributed equally to this work.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Presannakumar, K., Mohamed, A. Source identification of weak audio signals using attention based convolutional neural network. Appl Intell 53, 27044–27059 (2023). https://doi.org/10.1007/s10489-023-04973-y

DOI: https://doi.org/10.1007/s10489-023-04973-y