Abstract

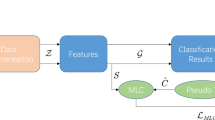

Consistency regularization (CR) is a representative semi-supervised learning (SSL) technique that enforces consistent predictions across multiple views of the same unlabeled data during training. In recent SSL studies, approaches that combine self-supervised learning with CR, performing unsupervised pre-training followed by supervised fine-tuning, have achieved excellent classification accuracy. However, the data augmentation used to generate multiple views in CR is limited in its ability to expand the training data distribution. In addition, existing self-supervised learning with CR cannot form high-density clusters for each class of the labeled data in the representation space, so it is vulnerable to outlier samples among strongly augmented unlabeled data. Consequently, augmented unlabeled data may degrade rather than improve classification performance in SSL. To address these issues, we propose a new training methodology called adversarial representation teaching (ART), which consists of labeled sample-guided representation teaching and adversarial noise-based CR. In our method, a teacher model that is robust to adversarial attacks guides the student model to form a high-density distribution in the representation space. This maximizes the benefit of strong embedding augmentation in the student model for SSL. For embedding augmentation, we propose an adversarial noise attack on the representation that successfully expands each class-wise subspace, which existing adversarial attacks and embedding expansion cannot achieve. Experimental results show that the proposed method improves classification accuracy by up to 1.57% over existing state-of-the-art methods under SSL conditions.
Moreover, ART significantly outperforms our baseline method, improving classification accuracy by up to 1.57%, 0.53%, and 0.3% on the CIFAR-10, SVHN, and ImageNet datasets, respectively.
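The abstract combines two ideas: CR on unlabeled data and an adversarial noise attack applied to the representation rather than to the input image. As a rough illustration only, the sketch below applies a generic fast-gradient (FGM-style, L2-normalized) perturbation to an embedding fed to a linear softmax head. It then computes a thresholded pseudo-label consistency loss on the perturbed embedding. This is not the ART algorithm. The single shared head `W`, the step size `eps`, the 0.95 confidence `threshold` (borrowed from FixMatch-style training), and all function names are hypothetical. For brevity, the teacher and student are collapsed into one model.

```python
import math


def softmax(logits):
    # Numerically stable softmax over a list of K logits.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]


def linear_logits(W, z):
    # Hypothetical linear head: W is K rows of D weights, z is a D-dim embedding.
    return [sum(w * zj for w, zj in zip(row, z)) for row in W]


def cross_entropy(p, q):
    # H(p, q) = sum_k -q_k log p_k, with p the prediction and q the target.
    return sum(-qk * math.log(pk) for pk, qk in zip(p, q) if qk > 0)


def adversarial_embedding(W, z, target, eps):
    """Shift z by an L2 step of size eps along the gradient of H(p, target)."""
    p = softmax(linear_logits(W, z))
    # For logits W z and softmax output p, dH/dz = W^T (p - target).
    grad = [sum((p[k] - target[k]) * W[k][j] for k in range(len(W)))
            for j in range(len(z))]
    norm = math.sqrt(sum(g * g for g in grad)) or 1.0  # avoid division by zero
    return [zj + eps * gj / norm for zj, gj in zip(z, grad)]


def consistency_loss(W, z, eps, threshold=0.95):
    """Pseudo-label from the clean embedding, loss on the perturbed one."""
    p_clean = softmax(linear_logits(W, z))
    conf = max(p_clean)
    if conf < threshold:  # assumed confidence mask: skip uncertain samples
        return 0.0
    k_hat = p_clean.index(conf)
    target = [1.0 if k == k_hat else 0.0 for k in range(len(p_clean))]
    z_adv = adversarial_embedding(W, z, target, eps)
    return cross_entropy(softmax(linear_logits(W, z_adv)), target)


W = [[2.0, 0.0], [0.0, 2.0]]  # toy 2-class head on 2-D embeddings
print(consistency_loss(W, [3.0, 0.0], eps=0.5))
print(consistency_loss(W, [0.1, 0.0], eps=0.5))  # 0.0: below the threshold
```

In this toy setting, the perturbation moves the embedding `[3.0, 0.0]` a distance of exactly `eps` toward the decision boundary. As a result, the loss on the perturbed embedding is larger than the loss on the clean embedding. A low-confidence embedding such as `[0.1, 0.0]` is masked out and contributes nothing.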


Code Availability

Our code is publicly available at https://github.com/qorrma/pytorch-art.

Notes

The manifold hypothesis assumes that data in a high-dimensional feature space (which may not be well described in Euclidean space) can be mapped into a low-dimensional space. Thus, minimizing distances in the low-dimensional space can also minimize distances in the high-dimensional space.

C, H, and W are the number of color channels, the height, and the width of an image, respectively.

\(H(\mathbf{p},\mathbf{q}) := \sum_{k=1}^{K} -q_k \log p_k\), where \(K\), \(\mathbf{q}\), and \(\mathbf{p}\) are the number of classes, the ground-truth vector, and the prediction vector, respectively.
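A direct transcription of this cross-entropy definition is sketched below in Python. The function name `cross_entropy` is our own. Skipping zero-valued targets follows the usual convention 0·log p := 0 rather than anything stated in the note. The argument order mirrors the note: prediction first, ground truth second.

```python
import math


def cross_entropy(p, q):
    """H(p, q) = sum_k -q_k * log(p_k); p = prediction, q = ground truth."""
    if len(p) != len(q):
        raise ValueError("p and q must have K entries each")
    # Terms with q_k == 0 contribute nothing (0 * log p_k := 0), so skip them.
    # This also avoids log(0) when a prediction entry is exactly zero.
    return sum(-qk * math.log(pk) for pk, qk in zip(p, q) if qk > 0)


p = [0.7, 0.2, 0.1]   # prediction vector, K = 3
q = [1.0, 0.0, 0.0]   # one-hot ground truth
print(round(cross_entropy(p, q), 4))  # 0.3567, i.e. -log(0.7)
```

With a one-hot ground truth, H(p, q) reduces to the negative log-probability that the model assigns to the true class.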

\(\bar{H}(\mathbf{p}) := \sum_{k=1}^{K} -p_k \log p_k\), where \(K\) and \(\mathbf{p}\) are the number of classes and the prediction vector, respectively.
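The prediction entropy can be sketched in the same way. Note that it equals the cross-entropy of a prediction with itself, \(\bar{H}(\mathbf{p}) = H(\mathbf{p},\mathbf{p})\). The name `entropy` is hypothetical, and the convention 0·log 0 := 0 is assumed. Low values indicate a confident, peaked prediction. The maximum value, log K, is reached by the uniform prediction.

```python
import math


def entropy(p):
    """Entropy of a prediction vector: sum_k -p_k * log(p_k) over K classes."""
    # By convention 0 * log 0 = 0, so zero-probability classes are skipped.
    return sum(-pk * math.log(pk) for pk in p if pk > 0)


print(entropy([1.0, 0.0, 0.0]))             # 0.0: fully confident prediction
print(round(entropy([1/3, 1/3, 1/3]), 4))   # 1.0986: uniform over K = 3, i.e. log 3
```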


Funding

This research was supported by a National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) under Grant RS2023-00208763; by the Institute of Information & communications Technology Planning & Evaluation (IITP) under the Artificial Intelligence Convergence Innovation Human Resources Development grant (IITP-2023-RS-2023-00254592) funded by the Korea government (MSIT); and by the project Development of Neural Network Architecture and Circuits for Few-Shot Learning (NRF-2022M3F3A2A01085463).


Ethics declarations

Conflict of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.


Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Park, J.H., Kim, J.H., Ngo, B.H. et al. Adversarial representation teaching with perturbation-agnostic student-teacher structure for semi-supervised learning. Appl Intell 53, 26797–26809 (2023). https://doi.org/10.1007/s10489-023-04950-5


DOI: https://doi.org/10.1007/s10489-023-04950-5