Abstract

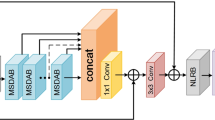

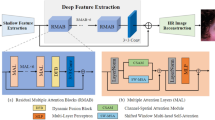

Image super-resolution (SR) has been extensively investigated in recent years. However, because trustworthy and precise perceptual quality standards are lacking, it is difficult to measure the performance of different SR approaches objectively. In this paper, we propose a novel triple-attention dual-scale residual network, called TADSRNet, for no-reference super-resolution image quality assessment (NR-SRIQA). First, to simulate the human visual system (HVS), we construct a triple-attention mechanism that works across dimensions to emphasize the more significant parts of SR images, making visually sensitive regions easier to identify. Then, a dual-scale convolution module (DSCM) is constructed to capture quality-aware features at different scales. Furthermore, to obtain a more informative feature representation, a residual connection is added to the network to supplement the perceptual features. Extensive experimental results demonstrate that the proposed TADSRNet predicts visual quality more accurately, and more consistently with human perception, than existing IQA methods. The code will be available at https://github.com/kbzhang0505/TADSRNet.
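
To make the dual-scale and residual ideas concrete, the following is a toy sketch only, not the TADSRNet implementation. It assumes a single-channel image stored as nested Python lists and replaces learned convolution kernels with fixed mean filters. The function names `box_filter` and `dual_scale_residual`, the window sizes 3 and 5, and the averaging used to fuse the two scales are all illustrative assumptions, not details taken from the paper.

```python
# Toy illustration: two filtering branches at different scales, fused and
# added back to the input through a residual (skip) connection.
# Fixed mean filters stand in for learned convolutions (assumption).


def box_filter(image, k):
    """Apply a k x k mean filter; border pixels use edge replication."""
    h, w = len(image), len(image[0])
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            total = 0.0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    # Clamp indices so the window never leaves the image.
                    ii = min(max(i + di, 0), h - 1)
                    jj = min(max(j + dj, 0), w - 1)
                    total += image[ii][jj]
            out[i][j] = total / (k * k)
    return out


def dual_scale_residual(image, small=3, large=5):
    """Fuse a fine-scale and a coarse-scale branch, then add the input."""
    fine = box_filter(image, small)    # small receptive field
    coarse = box_filter(image, large)  # larger receptive field
    h, w = len(image), len(image[0])
    return [
        [image[i][j] + 0.5 * (fine[i][j] + coarse[i][j]) for j in range(w)]
        for i in range(h)
    ]


if __name__ == "__main__":
    flat = [[1.0] * 6 for _ in range(6)]
    result = dual_scale_residual(flat)
    print(result[0][0])
```

In a trained network the two branches would be learned convolution layers over multi-channel feature maps, and the fusion step would typically be learned as well. The skip connection is the only part of this sketch that carries over unchanged.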

Data Availability

The code for this paper is provided by the authors on GitHub at https://github.com/kbzhang0505/TADSRNet.

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 61971339, Grant 62061047, and Grant 61471161, in part by the Textile Intelligent Equipment Information and Control Innovation Team of Shaanxi Innovation Ability Support Program under Grant 2021TD-29, in part by the Textile Intelligent Equipment Information and Control Innovation Team of Shaanxi Innovation Team of Universities, in part by the Natural Science Foundation of Xinjiang Uygur Autonomous Region under Grant 2020D01C157, and in part by the Key Project of the Natural Science Foundation of Shaanxi Province under Grant 2018JZ6002.

Ethics declarations

Conflicts of interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

Ethical Statement

This paper does not contain any studies with human participants or animals performed by any of the authors.

About this article

Cite this article

Quan, X., Zhang, K., Li, H. et al. TADSRNet: A triple-attention dual-scale residual network for super-resolution image quality assessment. Appl Intell 53, 26708–26724 (2023). https://doi.org/10.1007/s10489-023-04932-7

DOI: https://doi.org/10.1007/s10489-023-04932-7