Abstract

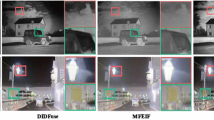

In this paper, a robust infrared and visible image fusion scheme that combines a dual-branch multi-receptive-field neural network with a color vision transfer algorithm is designed to fuse infrared and visible video sequences. The proposed method makes thermal objects readily identifiable in the fused image while preserving rich texture information and visual perception quality. The fusion network is an integrated encoder-decoder model with a multi-receptive-field attention mechanism, implemented via hybrid dilated convolution (HDC) and a series of convolution layers, and it is trained as an unsupervised framework. Specifically, the multi-receptive-field attention mechanism extracts comprehensive spatial information so that the encoder can focus separately on the salient thermal radiation in the infrared modality and the environmental characteristics in the visible modality. In addition, to ensure that the fused image has rich color, high fidelity and steady brightness, a color vision transfer method is proposed that recolors the fused grayscale result using a color map derived from the visible image, which serves as the reference. Extensive subjective and objective experiments verify the importance and robustness of each step and demonstrate that our work achieves a trade-off among color fidelity, fusion performance and computational efficiency. Moreover, our research content, data and code will be made publicly available at https://github.com/DZSYUNNAN/RGB-TIR-image-fusion.
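The recoloring idea described above can be illustrated with a minimal sketch. It is not the color vision transfer method proposed in the paper, whose details the abstract does not give. It assumes a much simpler stand-in: the visible image is converted to a luminance/chroma representation (BT.601 full-range YCbCr, chosen here only for illustration), its luminance is replaced by the fused grayscale result, and the image is converted back to RGB. The function name `recolor_fused` and the value range [0, 1] are hypothetical choices.

```python
import numpy as np

# BT.601 full-range RGB -> YCbCr matrix, without the usual chroma offset.
# Assumption: the color space is chosen for illustration only; the abstract
# does not state which one the authors use.
_RGB2YCBCR = np.array([
    [0.299, 0.587, 0.114],
    [-0.168736, -0.331264, 0.5],
    [0.5, -0.418688, -0.081312],
])
_YCBCR2RGB = np.linalg.inv(_RGB2YCBCR)


def recolor_fused(fused_gray, visible_rgb):
    """Hypothetical helper: keep the chroma of the visible reference image
    and take the luminance from the fused grayscale image.

    fused_gray  : array of shape (H, W), values in [0, 1]
    visible_rgb : array of shape (H, W, 3), values in [0, 1]
    """
    fused = np.asarray(fused_gray, dtype=np.float64)
    vis = np.asarray(visible_rgb, dtype=np.float64)
    if vis.shape != fused.shape + (3,):
        raise ValueError("visible_rgb must have shape fused_gray.shape + (3,)")

    # Per-pixel linear transform into luminance (Y) and chroma (Cb, Cr).
    ycbcr = vis @ _RGB2YCBCR.T
    # Swap in the fused result as the new luminance channel.
    ycbcr[..., 0] = fused
    # Back to RGB; clip because the new luminance can push values out of range.
    rgb = ycbcr @ _YCBCR2RGB.T
    return np.clip(rgb, 0.0, 1.0)
```

In this sketch a gray visible reference carries no chroma, so the output is simply the fused image replicated across the three channels. A luminance swap of this kind keeps the reference colors but does nothing to stabilize brightness, which the method in the paper is described as addressing.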

Acknowledgments

The authors thank the editors and the anonymous reviewers for their careful work and valuable suggestions, and declare that there are no conflicts of interest regarding the publication of this paper. This work was supported by the National Natural Science Foundation of China (Nos. 62066047, 61966037, 61861045); the “Famous teacher of teaching” of the Yunnan 10000 Talents Program; the Key Project of the Basic Research Program of Yunnan Province (No. 202101AS070031); the General Project of the National Natural Science Foundation of China (No. 81771928); the Yunnan University Research Innovation Fund for Graduate Students (No. 2020298); and the Scientific Research Fund Project of the Education Department of Yunnan Province for Graduate Students (No. 2021Y022).

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Ding, Z., Li, H., Zhou, D. et al. A robust infrared and visible image fusion framework via multi-receptive-field attention and color visual perception. Appl Intell 53, 8114–8132 (2023). https://doi.org/10.1007/s10489-022-03952-z

DOI: https://doi.org/10.1007/s10489-022-03952-z